Understanding Azure Data Factory’s Role in Data Integration

Azure Data Factory (ADF) is a cloud-based, fully managed ETL/ELT service that simplifies data integration across diverse sources and destinations. It moves and transforms data at scale, which makes it a common backbone for modern data warehousing and business intelligence. A key component of ADF is the copy activity, which transfers data between supported systems. Understanding the copy activity is fundamental to mastering data movement in ADF: most integration processes on the platform start with it, and it is built to move large volumes of data reliably.

Data integration is critical for organizations that want to use the full potential of their data. ADF addresses this need with a central place to build, schedule, and orchestrate data pipelines, and its connectors to numerous on-premises and cloud data stores make it a versatile tool. Within those pipelines, the copy activity handles the extract and load steps of ETL (extract, transform, load) or ELT (extract, load, transform) workloads, moving data from a source to a sink. Efficient data movement is a prerequisite for timely insights and informed decision-making.

Many organizations rely on ADF’s scalability and reliability. Its capacity to handle large datasets and complex transformations suits large-scale data warehousing and analytics initiatives, and its managed service model removes much of the operational overhead of running on-premises ETL infrastructure. The copy activity contributes directly to this: when it runs on the Azure integration runtime, Azure provisions the compute behind it, so developers can focus on integration logic rather than servers. The result is shorter development cycles and faster time to insight.

Exploring the Versatility of Azure Data Factory Copy Activities

The ADF copy activity is versatile in the data it can move. It supports a broad catalog of sources and sinks, including Azure services such as Azure Blob Storage, Azure SQL Database, Azure Synapse Analytics, and Azure Cosmos DB, as well as on-premises sources like SQL Server, Oracle, and flat files on file shares. This gives you one approach to data integration regardless of where the data lives. It also reads and writes common file formats, including CSV, JSON, Parquet, Avro, and ORC, and it can read XML (XML is supported as a source only), so it works with the data structures you already have.
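In ADF’s JSON definitions, switching file format is mostly a matter of changing the dataset `type`. The Python sketch below builds dataset definitions as plain dictionaries to show that pattern; the dataset, linked service, container, and folder names are hypothetical, and format-specific options (delimiters, compression) are left out.

```python
import json

# Dataset "type" values ADF uses for common file formats.
FORMAT_TO_DATASET_TYPE = {
    "csv": "DelimitedText",
    "json": "Json",
    "parquet": "Parquet",
    "avro": "Avro",
    "orc": "Orc",
    "xml": "Xml",  # XML is supported as a copy source only
}


def file_dataset(name, fmt, linked_service, container, folder):
    """Build a Blob Storage file dataset definition for the given format."""
    return {
        "name": name,
        "properties": {
            "type": FORMAT_TO_DATASET_TYPE[fmt.lower()],
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": container,
                    "folderPath": folder,
                }
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    ds = file_dataset("SalesParquet", "parquet", "BlobStorageLS", "raw", "sales/2024")
    print(json.dumps(ds, indent=2))
```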

A key advantage of the copy activity is its schema handling. For common formats, ADF can infer the schema automatically. For more complex scenarios, you can define an explicit column mapping that controls how source columns land in the sink. This is particularly valuable when schemas evolve or when data structures must be adapted to a target system. The copy activity also handles compressed files, with codecs such as Gzip, Deflate, and Snappy. Compression reduces storage and the volume of data sent over the network, at the cost of CPU time to compress and decompress, so the right codec depends on whether the network or the compute is your bottleneck. Choosing it deliberately improves both the performance and the cost of data movement.
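An explicit mapping is expressed on the copy activity as a `TabularTranslator`, while compression is a dataset property. The sketch below, assuming hypothetical column names and a hypothetical gzip-compressed CSV file, builds both as Python dictionaries that mirror ADF’s JSON.

```python
import json


def tabular_translator(column_map):
    """Turn {source_column: sink_column} into a TabularTranslator mapping."""
    return {
        "type": "TabularTranslator",
        "mappings": [
            {"source": {"name": src}, "sink": {"name": dst}}
            for src, dst in column_map.items()
        ],
    }


# Dataset typeProperties for a gzip-compressed CSV file (hypothetical location).
gzip_csv_type_properties = {
    "location": {
        "type": "AzureBlobStorageLocation",
        "container": "raw",
        "fileName": "customers.csv.gz",
    },
    "columnDelimiter": ",",
    "firstRowAsHeader": True,
    "compressionCodec": "gzip",  # the copy activity decompresses this on read
}

# Hypothetical rename of legacy column names to the target table's names.
translator = tabular_translator({"cust_id": "CustomerId", "cust_nm": "CustomerName"})

if __name__ == "__main__":
    print(json.dumps(translator, indent=2))
```

The translator belongs under the copy activity’s `typeProperties.translator`, and the dataset fragment under the source dataset’s `typeProperties`.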

The copy activity also handles a range of data shapes. Whether the data is structured (relational tables), semi-structured (JSON files), or unstructured (binary files in Blob Storage), it provides a consistent mechanism for moving it. That consistency simplifies integration across enterprise systems and platforms, and it makes the copy activity a key building block for modern data warehouse and data lake implementations. For on-premises or hybrid environments, a self-hosted integration runtime installed inside your network lets the copy activity reach private data stores securely, without exposing them to the internet.
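A linked service is routed through a self-hosted integration runtime with its `connectVia` property. The sketch below builds an on-premises SQL Server linked service that way, with the password pulled from Azure Key Vault; the runtime, server, database, user, Key Vault linked service, and secret names are all hypothetical.

```python
import json


def on_prem_sql_linked_service(name, runtime, server, database, user,
                               key_vault_ls, secret_name):
    """SQL Server linked service that connects through a self-hosted IR."""
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {
                "connectionString": (
                    f"Data Source={server};Initial Catalog={database};"
                    f"Integrated Security=False;User ID={user};"
                ),
                # Keep the password in Key Vault rather than in the definition.
                "password": {
                    "type": "AzureKeyVaultSecret",
                    "store": {"referenceName": key_vault_ls,
                              "type": "LinkedServiceReference"},
                    "secretName": secret_name,
                },
            },
            # Route connections through the self-hosted integration runtime.
            "connectVia": {"referenceName": runtime,
                           "type": "IntegrationRuntimeReference"},
        },
    }


if __name__ == "__main__":
    ls = on_prem_sql_linked_service("OnPremSqlLS", "SelfHostedIR", "sql01",
                                    "Sales", "adf_reader", "KeyVaultLS",
                                    "onprem-sql-password")
    print(json.dumps(ls, indent=2))
```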

Setting Up a Basic Copy Activity in Azure Data Factory

This section walks through configuring a basic copy activity. The process has three parts: creating linked services to connect to the source and sink, defining datasets that describe the data to move, and configuring the copy activity itself. Start with the linked services. A linked service is a connection definition for a data store, much like a connection string. For example, to move data from an Azure Blob Storage container to an Azure SQL Database, you need one linked service for each. Creating them usually involves supplying connection strings or credentials; rather than typing secrets directly into the linked service, store them in Azure Key Vault or use a managed identity.

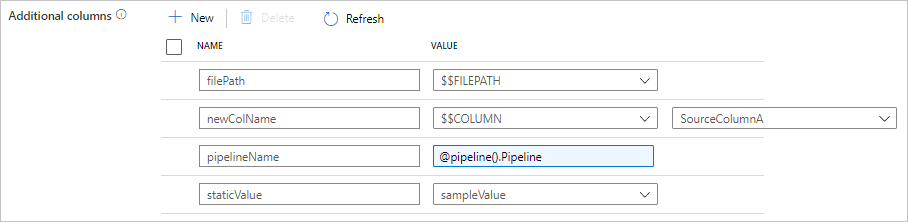
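As a rough sketch of what the two linked services look like in ADF’s JSON, the Python below builds them as dictionaries, with the storage account key and SQL password held in Azure Key Vault. It assumes a Key Vault linked service named `KeyVaultLS` already exists; that name and the account, server, database, user, and secret names are hypothetical placeholders.

```python
import json

KEY_VAULT_LS = "KeyVaultLS"  # hypothetical Azure Key Vault linked service


def kv_secret(secret_name):
    """Reference a secret in Azure Key Vault instead of embedding it."""
    return {
        "type": "AzureKeyVaultSecret",
        "store": {"referenceName": KEY_VAULT_LS, "type": "LinkedServiceReference"},
        "secretName": secret_name,
    }


blob_ls = {
    "name": "BlobStorageLS",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
            "connectionString": "DefaultEndpointsProtocol=https;AccountName=mystorageacct;",
            "accountKey": kv_secret("blob-account-key"),
        },
    },
}

sql_ls = {
    "name": "AzureSqlLS",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            "connectionString": (
                "Data Source=tcp:myserver.database.windows.net,1433;"
                "Initial Catalog=salesdb;User ID=adf_loader;"
                "Encrypt=True;Connection Timeout=30"
            ),
            "password": kv_secret("sql-password"),
        },
    },
}

if __name__ == "__main__":
    print(json.dumps([blob_ls, sql_ls], indent=2))
```

Neither definition contains a secret in plain text, so both can be kept in source control alongside the rest of the factory.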

Next, define datasets. A dataset is a named reference to the data you want to use within a data store. For the source, point to the Blob Storage container and the folder or file; for the destination, point to the Azure SQL Database table. File-based datasets also need a format (for example CSV, JSON, or Parquet). A dataset can carry a schema, either imported from the store or left empty so that ADF reads it at run time. The column mapping between source and sink is configured on the copy activity, not on the dataset. By default, ADF maps columns by name (case-sensitive), which is enough for simple cases; complex or mismatched schemas need custom mappings so that data lands in the correct columns.

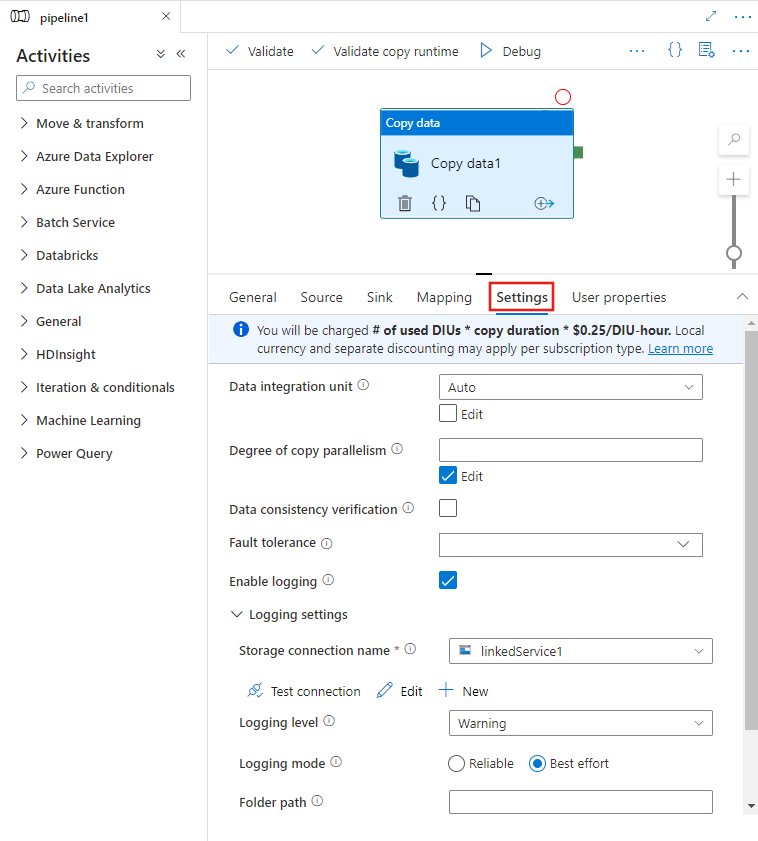
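The two datasets in this example can be sketched the same way. The dataset names (`SalesCsv`, `SalesTable`), the `raw` container, and the `dbo.Sales` table are hypothetical, and the linked service names `BlobStorageLS` and `AzureSqlLS` are assumed to match linked services in your factory.

```python
import json


def ls_ref(name):
    """Reference to a linked service by name."""
    return {"referenceName": name, "type": "LinkedServiceReference"}


# Source: CSV files with a header row in the "sales" folder of the "raw" container.
source_dataset = {
    "name": "SalesCsv",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": ls_ref("BlobStorageLS"),
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "raw",
                "folderPath": "sales",
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
        "schema": [],  # no imported schema; ADF reads the header at run time
    },
}

# Sink: an existing table in Azure SQL Database.
sink_dataset = {
    "name": "SalesTable",
    "properties": {
        "type": "AzureSqlTable",
        "linkedServiceName": ls_ref("AzureSqlLS"),
        "typeProperties": {"schema": "dbo", "table": "Sales"},
    },
}

if __name__ == "__main__":
    print(json.dumps([source_dataset, sink_dataset], indent=2))
```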

Finally, create the copy activity in a pipeline. The activity references the source and destination datasets, which in turn reference their linked services. Give the activity a name, select the source and sink datasets, and configure other properties as needed: fault tolerance, write behavior (for an Azure SQL sink, insert or upsert; a pre-copy script can truncate the table first if you want overwrite semantics), and performance settings. These settings largely determine how efficient the transfer is. After each run, ADF’s monitoring view shows progress and surfaces any errors. Test thoroughly, for example with debug runs in a development factory, before deploying to production.
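Here is a sketch of a pipeline containing such a copy activity, again built as a Python dictionary mirroring ADF’s JSON. It assumes hypothetical datasets `SalesCsv` (CSV files in Blob Storage) and `SalesTable` (an Azure SQL table); the wildcard, the truncate script, and the timeout and retry values are illustrative choices, not recommendations.

```python
import json


def ds_ref(name):
    return {"referenceName": name, "type": "DatasetReference"}


copy_activity = {
    "name": "CopySalesToSql",
    "type": "Copy",
    "inputs": [ds_ref("SalesCsv")],
    "outputs": [ds_ref("SalesTable")],
    "typeProperties": {
        "source": {
            "type": "DelimitedTextSource",
            "storeSettings": {
                "type": "AzureBlobStorageReadSettings",
                "recursive": True,
                "wildcardFileName": "*.csv",
            },
            "formatSettings": {"type": "DelimitedTextReadSettings"},
        },
        "sink": {
            "type": "AzureSqlSink",
            "writeBehavior": "insert",
            # Empty the table first so each run behaves like an overwrite.
            "preCopyScript": "TRUNCATE TABLE dbo.Sales",
        },
    },
    # Time out after 2 hours; retry twice, 60 seconds apart.
    "policy": {"timeout": "0.02:00:00", "retry": 2, "retryIntervalInSeconds": 60},
}

pipeline = {"name": "LoadSalesPipeline", "properties": {"activities": [copy_activity]}}

if __name__ == "__main__":
    print(json.dumps(pipeline, indent=2))
```

Truncate-then-insert is not atomic: if the copy fails partway, the table is left partially loaded. Loading into a staging table and swapping afterward avoids that, at the cost of an extra step.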

Optimizing Copy Activity Performance: Strategies and Best Practices

Optimizing the copy activity’s performance is crucial for efficient data integration, and a few settings have a large effect on speed and throughput. The parallel copies setting controls the maximum number of threads the activity uses to read from the source and write to the sink in parallel; for file stores, the work is split by file, and for database sources, by data partition. Partitioning the source, for example by a numeric or date column, gives those threads independent slices to work on, which matters most for large tables. On the Azure integration runtime, Data Integration Units (DIUs) set how much compute the copy receives. The data format matters too: columnar formats like Parquet usually compress better and read faster for analytical workloads than row-oriented CSV. Finally, monitor runs regularly to spot bottlenecks and adjust these settings in time.
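Parallel copies, source partitioning, and compute (DIUs) are all set in the copy activity’s `typeProperties`. The sketch below, assuming a hypothetical Azure SQL source table with a numeric `OrderId` column copied to Parquet files in Blob Storage, sets dynamic-range partitioning, `parallelCopies`, and `dataIntegrationUnits`. The numbers are starting points to measure against, not tuned values; if you omit the last two, ADF chooses them automatically.

```python
import json


def tuned_copy_type_properties(partition_column, lower, upper,
                               parallel_copies=8, dius=16):
    """Copy settings that read an Azure SQL table in parallel key ranges."""
    return {
        "source": {
            "type": "AzureSqlSource",
            "partitionOption": "DynamicRange",
            "partitionSettings": {
                "partitionColumnName": partition_column,
                # Bounds set the partition stride only; rows outside them
                # are still copied.
                "partitionLowerBound": str(lower),
                "partitionUpperBound": str(upper),
            },
        },
        "sink": {
            "type": "ParquetSink",
            "storeSettings": {"type": "AzureBlobStorageWriteSettings"},
            "formatSettings": {"type": "ParquetWriteSettings"},
        },
        "parallelCopies": parallel_copies,
        # DIUs apply only when the copy runs on the Azure integration runtime.
        "dataIntegrationUnits": dius,
    }


if __name__ == "__main__":
    print(json.dumps(tuned_copy_type_properties("OrderId", 1, 50_000_000), indent=2))
```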

Where you transform data also affects copy performance. Filtering before the copy, for example with a source query that selects only the needed columns and only new or changed rows, reduces the volume to move and shortens the transfer. Heavier transformations are better done after the copy, in a separate activity, so they do not lengthen the copy itself and can be rerun independently. Compute sizing matters as well: on the Azure integration runtime, increase DIUs for larger datasets; on a self-hosted integration runtime, throughput is bounded by the machines you provide, so scale them up or add nodes. Insufficient compute leads to bottlenecks and longer processing times.
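Filtering at the source is usually done with a query. As a sketch, assuming a hypothetical `dbo.Orders` table with a `ModifiedDate` column and a hypothetical pipeline parameter named `lastWatermark` holding the time of the previous load, the function below builds an Azure SQL source that selects only the needed columns and only rows changed since then. The `@{...}` syntax is ADF string interpolation, evaluated when the pipeline runs.

```python
import json


def incremental_sql_source(columns, table, watermark_column):
    """Azure SQL source that copies only needed columns and changed rows."""
    column_list = ", ".join(columns)
    query = (
        f"SELECT {column_list} FROM {table} "
        # '@{{' renders as '@{', ADF's string interpolation syntax.
        f"WHERE {watermark_column} > '@{{pipeline().parameters.lastWatermark}}'"
    )
    return {
        "type": "AzureSqlSource",
        "sqlReaderQuery": {"value": query, "type": "Expression"},
    }


source = incremental_sql_source(["OrderId", "Amount"], "dbo.Orders", "ModifiedDate")

if __name__ == "__main__":
    print(json.dumps(source, indent=2))
```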

To troubleshoot slow copy operations, start with the monitoring data. ADF reports detailed metrics for each copy activity run, including data read and written, throughput, duration, and errors, and the copy monitoring details break the duration into stages such as queuing and transfer so you can see where time is spent. Slowdowns typically stem from network limits, insufficient compute, throttling at the source or sink, or poorly tuned settings. Robust error handling matters just as much. Activity retry policies handle transient failures such as brief connectivity loss, and fault tolerance settings can skip incompatible rows and redirect them to a storage location for later review instead of failing the whole run. Review and adjust these settings as data volumes grow.
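Retries and fault tolerance live in two different places: retries in the activity’s `policy`, row-level fault tolerance in its `typeProperties`. The sketch below builds both, assuming a hypothetical `BlobStorageLS` linked service and a hypothetical `copy-errors/sales` path for the redirected rows; the retry count and interval are illustrative.

```python
import json


def with_fault_tolerance(type_properties, log_linked_service, log_path):
    """Return copy typeProperties that skip and redirect incompatible rows."""
    return {
        **type_properties,
        "enableSkipIncompatibleRow": True,
        "redirectIncompatibleRowSettings": {
            "linkedServiceName": {"referenceName": log_linked_service,
                                  "type": "LinkedServiceReference"},
            "path": log_path,
        },
    }


# Retry transient failures up to 3 times, waiting 2 minutes between attempts.
retry_policy = {"timeout": "0.04:00:00", "retry": 3, "retryIntervalInSeconds": 120}

base = {"source": {"type": "DelimitedTextSource"}, "sink": {"type": "AzureSqlSink"}}
tolerant = with_fault_tolerance(base, "BlobStorageLS", "copy-errors/sales")

if __name__ == "__main__":
    print(json.dumps({"typeProperties": tolerant, "policy": retry_policy}, indent=2))
```

Skipping incompatible rows trades completeness for availability: the run succeeds, but the redirected rows still need someone to look at them.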

Handling Data Transformations During Copy Activity in Azure Data Factory

The copy activity can take on lightweight transformation work during data movement, which can save separate steps. It does not run data flow transformations itself, but several of its features change data in transit. A source query against a database can filter rows, select and rename columns, cast types, and trim values, with the source engine doing the work. The column mapping can rename columns and convert data types between source and sink. Additional columns can stamp each row with values such as the source file path, a static value, or a pipeline expression. For simple cleanup, this removes the need for separate pre- or post-copy steps, which keeps the pipeline simpler and reduces latency.
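As a sketch of lightweight in-transit transformation, assuming a hypothetical `dbo.Orders` table and CSV files in Blob Storage, the first source below pushes filtering, casting, and trimming into a SQL query; the second adds each row’s source file path (`$$FILEPATH`) and the pipeline run ID as extra columns. Table and column names are illustrative.

```python
import json

# Filter, cast, and trim in the source database before rows are copied.
sql_source = {
    "type": "AzureSqlSource",
    "sqlReaderQuery": (
        "SELECT CAST(OrderId AS INT) AS OrderId, "
        "LTRIM(RTRIM(CustomerName)) AS CustomerName, Amount "
        "FROM dbo.Orders WHERE Status <> 'Cancelled'"
    ),
}

# Stamp each row read from the CSV files with lineage columns.
csv_source = {
    "type": "DelimitedTextSource",
    "storeSettings": {"type": "AzureBlobStorageReadSettings", "recursive": True},
    "formatSettings": {"type": "DelimitedTextReadSettings"},
    "additionalColumns": [
        {"name": "SourceFile", "value": "$$FILEPATH"},
        {"name": "LoadRunId",
         "value": {"value": "@pipeline().RunId", "type": "Expression"}},
    ],
}

if __name__ == "__main__":
    print(json.dumps([sql_source, csv_source], indent=2))
```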

For transformations beyond what the copy activity supports, run a Mapping Data Flow (or another transformation activity, such as a stored procedure) before or after the copy in the same pipeline. Data flows provide a full transformation environment for joins, aggregations, and complex derivations. This staged approach lets you prepare data before the main copy or refine it after the transfer completes. Keeping each stage in its own activity makes the pipeline easier to maintain, debug, and rerun, while still letting you tailor transformations to specific needs.
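A staged pipeline of this kind can be sketched as a copy into staging followed by a data flow that runs only if the copy succeeds. The sketch assumes hypothetical datasets (`SalesCsv`, `StagingSales`) and a data flow named `CleanseSalesFlow` already defined in the factory; the copy’s source and sink settings are abbreviated and the compute size is illustrative.

```python
import json

copy_to_staging = {
    "name": "CopyToStaging",
    "type": "Copy",
    "inputs": [{"referenceName": "SalesCsv", "type": "DatasetReference"}],
    "outputs": [{"referenceName": "StagingSales", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "AzureSqlSink"},
    },
}

# Runs only after the copy succeeds; joins and business rules live in the flow.
transform = {
    "name": "CleanseAndJoin",
    "type": "ExecuteDataFlow",
    "dependsOn": [{"activity": "CopyToStaging", "dependencyConditions": ["Succeeded"]}],
    "typeProperties": {
        "dataflow": {"referenceName": "CleanseSalesFlow", "type": "DataFlowReference"},
        "compute": {"coreCount": 8, "computeType": "General"},
    },
}

pipeline = {"name": "StagedSalesLoad",
            "properties": {"activities": [copy_to_staging, transform]}}

if __name__ == "__main__":
    print(json.dumps(pipeline, indent=2))
```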

The choice between transforming inside the copy activity and using a separate data flow depends on how complex the transformation is. Simple filtering, column selection, and type conversion fit well inside the copy activity. Joins across multiple sources, complex business logic, and advanced data cleansing routines benefit from the more powerful and flexible capabilities of data flows. Either way, pairing the right transformation approach with the copy activity keeps the pipeline streamlined and improves data quality.

Advanced Copy Activity Features: Dealing with Complex Scenarios

Very large datasets require planning. Partitioning the source into smaller slices allows parallel reads and writes, which can greatly reduce copy times. The data format matters as well: for large-scale movement, Parquet often compresses better and loads faster than CSV. With these optimizations, the copy activity can move terabytes of data or more efficiently.
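To make the partitioning idea concrete, the pure-Python sketch below splits an inclusive numeric key range into contiguous slices and renders a filter for each, the kind of split a range-partitioned copy works from. It illustrates the concept only and is not ADF’s internal algorithm; the `OrderId` column is hypothetical.

```python
from typing import List, Tuple


def split_range(lower: int, upper: int, partitions: int) -> List[Tuple[int, int]]:
    """Split [lower, upper] into at most `partitions` contiguous slices."""
    if partitions < 1 or upper < lower:
        raise ValueError("need partitions >= 1 and upper >= lower")
    total = upper - lower + 1
    partitions = min(partitions, total)  # never more slices than keys
    size, extra = divmod(total, partitions)
    slices, start = [], lower
    for i in range(partitions):
        # The first `extra` slices take one more key so every key is covered.
        end = start + size + (1 if i < extra else 0) - 1
        slices.append((start, end))
        start = end + 1
    return slices


def partition_filters(column: str, slices: List[Tuple[int, int]]) -> List[str]:
    """Render one range predicate per slice."""
    return [f"{column} BETWEEN {lo} AND {hi}" for lo, hi in slices]


if __name__ == "__main__":
    for clause in partition_filters("OrderId", split_range(1, 10, 3)):
        print(clause)
```

Running it prints `OrderId BETWEEN 1 AND 4`, `OrderId BETWEEN 5 AND 7`, and `OrderId BETWEEN 8 AND 10`. Equal-width slices assume keys are spread evenly; if they are skewed, some partitions carry far more rows than others and the parallel speedup shrinks.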

Complex schemas are another challenge. Custom mappings handle mismatches between source and destination schemas, such as renamed columns or different column orders, and help avoid errors during the copy. For intricate schemas, test and refine the mapping iteratively, and validate the source schema against the sink before running the copy. This careful approach reduces the risk of failed loads or silently mismapped data and helps protect data quality throughout the integration process.
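One way to validate schemas before a run is to compare column lists in code. The sketch below assumes you have already fetched source and sink columns as `{name: type}` dictionaries (how you fetch them is out of scope); it applies an optional rename map, builds a `TabularTranslator` for matching columns, and lists problems to review. Type names are compared as plain strings, so a reported mismatch may still be a conversion ADF can perform; treat it as a flag, not an error.

```python
from typing import Dict, List, Optional, Tuple


def validate_and_map(
    source: Dict[str, str],
    sink: Dict[str, str],
    rename: Optional[Dict[str, str]] = None,
) -> Tuple[dict, List[str]]:
    """Build a TabularTranslator and list schema problems to review."""
    rename = rename or {}
    mappings, problems = [], []
    for column, column_type in source.items():
        target = rename.get(column, column)
        if target not in sink:
            problems.append(f"{column}: no sink column '{target}'")
            continue
        if sink[target].lower() != column_type.lower():
            problems.append(f"{column}: type {column_type} -> {sink[target]}")
        mappings.append({"source": {"name": column}, "sink": {"name": target}})
    return {"type": "TabularTranslator", "mappings": mappings}, problems


if __name__ == "__main__":
    translator, problems = validate_and_map(
        {"id": "int", "name": "nvarchar", "legacy_flag": "bit"},
        {"CustomerId": "int", "name": "NVARCHAR", "created": "datetime2"},
        rename={"id": "CustomerId"},
    )
    print(problems)  # only legacy_flag has no target column
```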

Security is paramount with sensitive data. ADF integrates with Microsoft Entra ID (formerly Azure Active Directory) for authentication and authorization. Managed identities let ADF access data stores without storing credentials in the pipeline or linked service; instead, you grant the factory’s identity a role on the target resource. This reduces the number of secrets to manage and helps meet data governance requirements. Error handling and logging are equally important. The copy activity can capture skipped or incompatible rows and write session logs to storage, recording which files or rows were copied or skipped. These logs help you identify and resolve issues quickly, give insight into the health of data movement, and support auditing and compliance efforts.
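With a managed identity, linked service definitions simply omit credentials. The sketch below shows the system-assigned identity form for Blob Storage (a service endpoint only) and Azure SQL Database (a connection string without user or password), plus copy activity session-log settings. It assumes the factory’s identity has already been granted access, for example the Storage Blob Data Reader role on a source storage account and a database user created `FROM EXTERNAL PROVIDER`. Account, server, database, and path names are hypothetical, and the logging property names should be checked against the current ADF documentation.

```python
import json

blob_mi_ls = {
    "name": "BlobStorageMI",
    "properties": {
        "type": "AzureBlobStorage",
        # Endpoint only: ADF authenticates with its managed identity.
        "typeProperties": {"serviceEndpoint": "https://mystorageacct.blob.core.windows.net/"},
    },
}

sql_mi_ls = {
    "name": "AzureSqlMI",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            # No User ID or password in the connection string.
            "connectionString": (
                "Data Source=tcp:myserver.database.windows.net,1433;"
                "Initial Catalog=salesdb;Connection Timeout=30"
            )
        },
    },
}

# Session log for a copy activity; Warning level records skipped items.
copy_log_settings = {
    "enableCopyActivityLog": True,
    "copyActivityLogSettings": {"logLevel": "Warning", "enableReliableLogging": False},
    "logLocationSettings": {
        "linkedServiceName": {"referenceName": "BlobStorageMI",
                              "type": "LinkedServiceReference"},
        "path": "adf-logs/copy",
    },
}

if __name__ == "__main__":
    print(json.dumps([blob_mi_ls, sql_mi_ls], indent=2))
    print(json.dumps({"logSettings": copy_log_settings}, indent=2))
```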

Monitoring and Troubleshooting Your Copy Activities in Azure Data Factory

Effective monitoring is crucial for managing copy activities. The Monitor hub in ADF Studio, reachable from the Azure portal, shows the status of each run, including duration, data read and written, rows copied, and any errors. Data engineers can use this information to find performance bottlenecks and address issues proactively. Because run status updates while the pipeline executes, a failed copy can be spotted and rerun quickly, minimizing downtime and protecting data integrity. The key performance indicators it surfaces, such as throughput and the DIUs and parallel copies actually used, show how efficiently data is moving and point to the settings worth tuning.
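The run metrics shown in the monitoring view are also available programmatically in the copy activity’s run output, for example through the REST API or an SDK. The helper below summarizes such an output dictionary into a few KPIs. It assumes the output field names ADF reports for copy runs (`rowsCopied`, `rowsSkipped`, `dataRead` in bytes, `copyDuration` in seconds, `throughput`, `usedDataIntegrationUnits`, `usedParallelCopies`, `errors`); verify them against a real run’s output. The sample values are made up for illustration.

```python
def copy_kpis(output: dict) -> dict:
    """Summarize a copy activity run output into a few KPIs."""
    rows = output.get("rowsCopied", 0)
    seconds = output.get("copyDuration", 0)
    return {
        "rows_copied": rows,
        "rows_skipped": output.get("rowsSkipped", 0),
        "mb_read": round(output.get("dataRead", 0) / 1_000_000, 2),  # decimal MB
        "rows_per_second": round(rows / seconds, 1) if seconds else None,
        "throughput": output.get("throughput"),  # as reported by ADF
        "dius_used": output.get("usedDataIntegrationUnits"),
        "parallel_copies_used": output.get("usedParallelCopies"),
        "error_count": len(output.get("errors", [])),
    }


if __name__ == "__main__":
    # Made-up sample output, for illustration only.
    sample = {"rowsCopied": 1_200_000, "copyDuration": 60, "dataRead": 250_000_000,
              "usedDataIntegrationUnits": 4, "usedParallelCopies": 2, "errors": []}
    print(copy_kpis(sample))
```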

Troubleshooting usually starts with the activity run details. These include error codes and messages, timestamps, and, for copy activities, the run’s output. Analyzing them helps pinpoint the root cause of failures such as connection problems, insufficient permissions, or schema mismatches. The copy activity’s error handling options, redirecting incompatible rows and retrying failed runs, make pipelines more resilient, and configured carefully they prevent data loss or corruption. Read error messages closely; they often name the failing column, file, or connection directly.
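Beyond retries and row redirection, a pipeline can react to a failed copy with a failure path: an activity that depends on the copy with the `Failed` condition. In the sketch below, assuming a copy activity named `CopySalesToSql` and a hypothetical alert endpoint, a Web activity posts the run ID and the copy’s error message. `activity('...').error.message`, `pipeline().RunId`, and `concat` are ADF expression features; the URL is a placeholder.

```python
import json

notify_on_failure = {
    "name": "NotifyCopyFailure",
    "type": "WebActivity",
    # Runs only when CopySalesToSql fails.
    "dependsOn": [{"activity": "CopySalesToSql", "dependencyConditions": ["Failed"]}],
    "typeProperties": {
        "url": "https://alerts.example.com/adf",  # hypothetical endpoint
        "method": "POST",
        "headers": {"Content-Type": "text/plain"},
        "body": {
            "value": "@concat('Copy failed in run ', pipeline().RunId, ': ', "
                     "activity('CopySalesToSql').error.message)",
            "type": "Expression",
        },
    },
}

if __name__ == "__main__":
    print(json.dumps(notify_on_failure, indent=2))
```

Be aware that when a failure is handled only by such a path, the pipeline run can end up reported as succeeded; adding a Fail activity after the alert keeps the run marked as failed if that is what you want.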

Beyond individual activities, ADF monitors entire pipeline runs. This gives a holistic view of data movement: how long each pipeline took, which activities ran in parallel, and where a failure stopped downstream steps. Visualizing a run also exposes the dependencies between copy activities and other pipeline components, which supports optimization such as running independent copies in parallel or refining partitioning strategies. Together, pipeline-level and activity-level monitoring are the main tools for keeping copy activities reliable and efficient.
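As a pipeline grows, it can help to compute its dependency structure from the JSON definition. The sketch below reads an `activities` list and groups activity names into levels: activities in the same level do not depend on each other, so ADF can run them in parallel. It looks only at the `dependsOn` activity names and ignores dependency conditions (`Succeeded`, `Failed`, and so on) and activities nested inside ForEach or If Condition, so treat it as a reading aid rather than a model of ADF’s scheduler.

```python
from typing import Dict, List, Set


def execution_levels(activities: List[dict]) -> List[List[str]]:
    """Group activity names into levels based on their dependsOn lists."""
    deps: Dict[str, Set[str]] = {
        a["name"]: {d["activity"] for d in a.get("dependsOn", [])}
        for a in activities
    }
    levels: List[List[str]] = []
    done: Set[str] = set()
    while len(done) < len(deps):
        # An activity is ready once everything it depends on is placed.
        ready = sorted(n for n, d in deps.items() if n not in done and d <= done)
        if not ready:
            raise ValueError("cycle or dependency on an unknown activity")
        levels.append(ready)
        done.update(ready)
    return levels


if __name__ == "__main__":
    def dep(name):
        return {"activity": name, "dependencyConditions": ["Succeeded"]}

    activities = [
        {"name": "CopyOrders"},
        {"name": "CopyCustomers"},
        {"name": "LoadWarehouse", "dependsOn": [dep("CopyOrders"), dep("CopyCustomers")]},
    ]
    print(execution_levels(activities))  # [['CopyCustomers', 'CopyOrders'], ['LoadWarehouse']]
```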

Integrating Copy Activities into Larger Data Pipelines

ADF excels at orchestrating complex data integration processes, and copy activities, the workhorses of data movement, fit naturally into larger pipelines. Pipelines use control flow to manage the order in which copy activities and other processing tasks run, enabling workflows that no single copy activity could achieve. Consider data from three sources: a relational database, cloud storage, and a REST API. A well-designed pipeline ingests each source with its own copy activity, then transforms the combined data and loads it into a data warehouse with further copy activities and transformation steps. Each copy activity contributes to the pipeline’s success, and ADF’s orchestration ensures they run in the right order.
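The three-source scenario might be wired up as follows: three copy activities with no dependencies between them, so they run in parallel, and a load step that waits for all three. The sketch assumes hypothetical datasets for a SQL table, a Blob folder, a REST endpoint, and staging tables; each copy’s source and sink `typeProperties` are omitted to keep the focus on control flow, so the definitions are incomplete as written.

```python
import json


def copy_step(name, source_ds, sink_ds, depends_on=()):
    """Copy activity skeleton; source/sink typeProperties omitted."""
    return {
        "name": name,
        "type": "Copy",
        "dependsOn": [{"activity": d, "dependencyConditions": ["Succeeded"]}
                      for d in depends_on],
        "inputs": [{"referenceName": source_ds, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_ds, "type": "DatasetReference"}],
    }


ingest = [
    copy_step("IngestOrdersDb", "OrdersSqlTable", "StagingOrders"),
    copy_step("IngestClickFiles", "ClicksBlobFolder", "StagingClicks"),
    copy_step("IngestCustomersApi", "CustomersRestApi", "StagingCustomers"),
]
# Waits for all three ingestion copies to succeed.
load = copy_step("LoadWarehouse", "StagingJoinedView", "WarehouseFactSales",
                 depends_on=[a["name"] for a in ingest])

pipeline = {"name": "MultiSourceLoad", "properties": {"activities": ingest + [load]}}

if __name__ == "__main__":
    print(json.dumps(pipeline, indent=2))
```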

Control flow defines the order of, and dependencies between, tasks. For instance, one copy activity might run only after another succeeds. Conditional execution (the If Condition activity) adds branching logic, so a pipeline can follow different paths based on run-time values and adapt to changing data or error conditions. Looping (the ForEach activity) runs a copy activity repeatedly, for example once per file or per table, which is useful for large volumes or for data that arrives incrementally. Managing these dependencies carefully, and building in error handling and logging, is essential for reliable data integration. Planning both the pipeline structure and each copy activity’s configuration minimizes risk and optimizes performance.
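Looping and branching can be sketched together. Below, a ForEach activity runs a copy once per name in a hypothetical `fileNames` pipeline parameter, up to four at a time, passing each name to a parameterized dataset (assumed to be `SalesCsvByName` with a `fileName` parameter). Inside the loop, an If Condition runs a hypothetical `ProcessSalesFile` pipeline only if that iteration’s copy wrote any rows. All names are illustrative, and the copy’s source and sink settings are abbreviated.

```python
import json

copy_one_file = {
    "name": "CopyOneFile",
    "type": "Copy",
    "inputs": [{
        "referenceName": "SalesCsvByName",
        "type": "DatasetReference",
        # Pass the current ForEach item to the dataset's fileName parameter.
        "parameters": {"fileName": {"value": "@item()", "type": "Expression"}},
    }],
    "outputs": [{"referenceName": "SalesTable", "type": "DatasetReference"}],
    "typeProperties": {"source": {"type": "DelimitedTextSource"},
                       "sink": {"type": "AzureSqlSink"}},
}

only_if_rows = {
    "name": "IfRowsCopied",
    "type": "IfCondition",
    "dependsOn": [{"activity": "CopyOneFile", "dependencyConditions": ["Succeeded"]}],
    "typeProperties": {
        "expression": {
            "value": "@greater(activity('CopyOneFile').output.rowsCopied, 0)",
            "type": "Expression",
        },
        "ifTrueActivities": [{
            "name": "ProcessFile",
            "type": "ExecutePipeline",
            "typeProperties": {
                "pipeline": {"referenceName": "ProcessSalesFile",
                             "type": "PipelineReference"},
                "parameters": {"fileName": {"value": "@item()", "type": "Expression"}},
                "waitOnCompletion": True,
            },
        }],
    },
}

for_each_file = {
    "name": "ForEachFile",
    "type": "ForEach",
    "typeProperties": {
        "items": {"value": "@pipeline().parameters.fileNames", "type": "Expression"},
        "isSequential": False,
        "batchCount": 4,  # at most four iterations in parallel
        "activities": [copy_one_file, only_if_rows],
    },
}

pipeline = {
    "name": "LoadSalesFiles",
    "properties": {
        "parameters": {"fileNames": {"type": "array"}},
        "activities": [for_each_file],
    },
}

if __name__ == "__main__":
    print(json.dumps(pipeline, indent=2))
```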

Pipeline features extend what individual copy activities can do. Triggers run pipelines automatically on a schedule, on a tumbling window, or in response to events such as a new file arriving in Blob Storage. Monitoring then gives a holistic view of the whole pipeline’s performance and status, enabling the proactive problem identification and resolution that data quality and reliability depend on. Integrating copy activities into larger pipelines turns ADF from a simple data movement tool into a full data integration platform, letting data engineers build dependable solutions for complex data warehousing and analytics needs. Success hinges on carefully planned task dependencies, deliberate control flow design, and the proper configuration of each copy activity.
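A schedule trigger is a separate definition that points at a pipeline. The sketch below runs a hypothetical `LoadSalesPipeline` daily at 02:00 UTC; the trigger name and start time are illustrative. Event-based triggers, such as storage event triggers for new blobs, follow the same pattern with a different `type` and `typeProperties`.

```python
import json

daily_trigger = {
    "name": "DailySalesLoad",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2024-01-01T02:00:00Z",  # first run; then every day
                "timeZone": "UTC",
            }
        },
        "pipelines": [{
            "pipelineReference": {"referenceName": "LoadSalesPipeline",
                                  "type": "PipelineReference"},
            "parameters": {},
        }],
    },
}

if __name__ == "__main__":
    print(json.dumps(daily_trigger, indent=2))
```

A trigger does nothing until it is published and started, so include that step in your deployment process.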