Understanding Containerization: A Modern Approach to Virtualization

Containerization is a modern, efficient approach to virtualization that packages applications into lightweight, portable, self-contained environments. Among containerization platforms, Docker has gained significant popularity due to its simplicity, flexibility, and wide range of features. This article examines Docker in depth, covering its benefits, components, and applications in the software development industry.

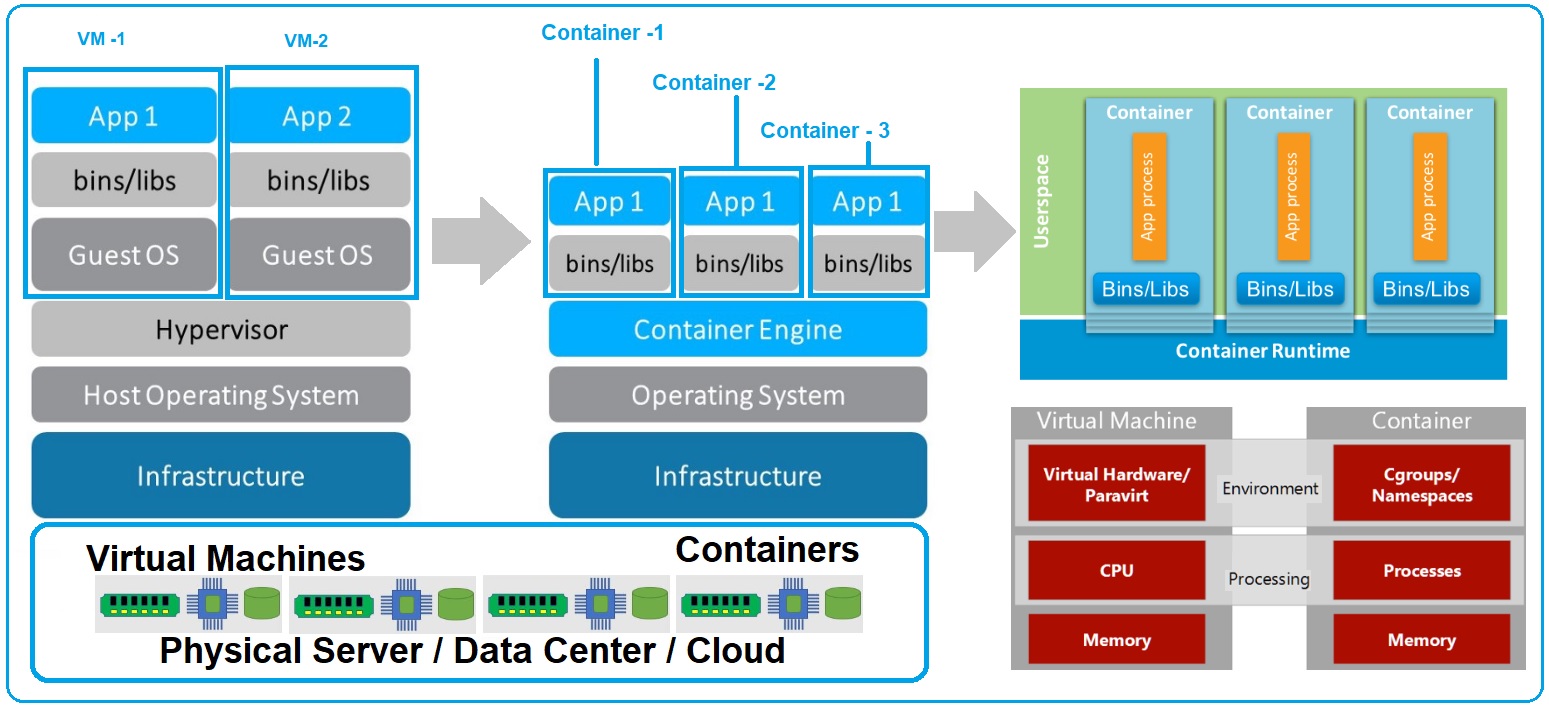

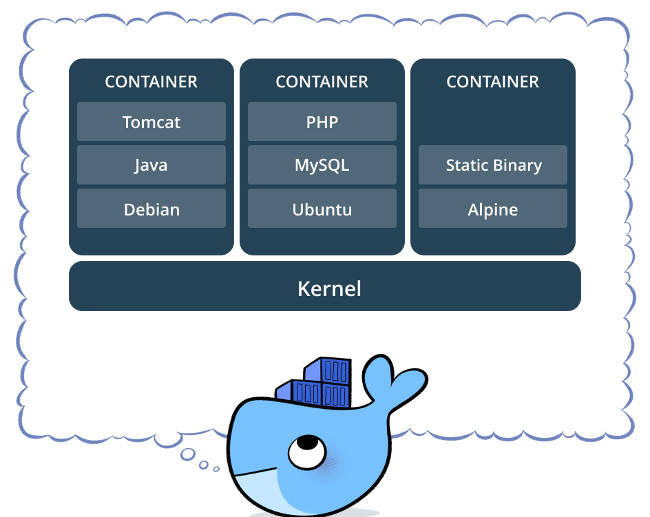

Docker relies on operating-system-level virtualization: multiple isolated applications run on a single host and share its kernel. Compared with traditional hypervisor-based virtualization, this approach generally yields better resource utilization, faster deployment, and greater system stability.

Docker: A Leading Containerization Platform

Docker is a widely used open-source containerization platform that has changed how software is built and shipped. It provides an efficient and flexible way to create, deploy, and manage applications inside portable, self-contained containers. Its main features include process isolation, resource control, and a strong focus on automation, which make it a good fit for modern DevOps practices.

Docker offers several advantages. First, it significantly reduces the overhead associated with traditional virtualization methods, leading to better resource utilization and faster deployment. Second, Docker keeps applications consistent across environments, simplifying the development, testing, and production stages. Third, Docker containers are highly portable and can run on various platforms and operating systems, which makes it easier for development teams to collaborate and integrate their work.

Docker has a wide range of applications in the software development industry, such as web development, DevOps, big data, and machine learning. For instance, web developers can use Docker to create isolated development environments, streamline the deployment process, and ensure consistent behavior across various servers. DevOps teams can leverage Docker to manage microservices architectures, automate build, test, and deployment pipelines, and enforce security and compliance policies.

Key Components of Docker Containerization Technology

Docker is built on several core components that work together to provide a robust and efficient containerization platform. These components include Docker Engine, Docker Hub, Docker Compose, and Docker Swarm, each playing a crucial role in the containerization process.

Docker Engine

Docker Engine is the core runtime component responsible for managing and executing Docker containers. It consists of the Docker daemon, REST API, and command-line interface (CLI). The Docker daemon handles container lifecycle events, such as creating, starting, stopping, and removing containers. The REST API and CLI allow developers to interact with the Docker daemon, executing commands and automating various tasks.
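To make the relationship between the CLI and the REST API more concrete, here is a minimal sketch that queries the daemon's API directly. It assumes a Linux host where the daemon listens on the default Unix socket path /var/run/docker.sock and that curl is installed. The helper name engine_get and the DOCKER_SOCK override are made up for this illustration.

```shell
# Hypothetical helper: send a GET request to the Docker Engine API
# over the daemon's Unix socket. The default socket path is assumed;
# set DOCKER_SOCK to override it.
engine_get() {
  curl --silent --unix-socket "${DOCKER_SOCK:-/var/run/docker.sock}" "http://localhost$1"
}

# Example calls (they need a running daemon and permission to read the socket):
#   engine_get /version           # roughly the data behind `docker version`
#   engine_get /containers/json   # roughly the data behind `docker ps`
```

The CLI issues requests of this kind on your behalf, which is why anything the CLI does can in principle also be scripted against the API.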

Docker Hub

Docker Hub is a cloud-based registry service that allows users to store, distribute, and manage Docker images. It provides a centralized repository for sharing Docker images, enabling collaboration among development teams and simplifying the distribution of applications. Docker Hub also supports automated builds, allowing users to automatically build Docker images from source code repositories such as GitHub.

Docker Compose

Docker Compose is a tool for defining and managing multi-container Docker applications. It uses a YAML file, called docker-compose.yml, to configure the application’s services, networks, and volumes. Docker Compose simplifies the process of managing multi-container applications, allowing developers to start, stop, and rebuild the entire application stack with a single command.
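As an illustration, the following sketch writes a small Compose file describing two services, a network, and a named volume. The service names, image tags, port mapping, and password are assumptions chosen for the example, not values taken from any particular project.

```shell
# Write a minimal Compose file: a web server plus a database with a named volume.
# Service names, image tags, ports, and the password are illustrative assumptions.
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
    depends_on:
      - db
    networks:
      - backend
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example
    volumes:
      - db-data:/var/lib/postgresql/data
    networks:
      - backend

networks:
  backend:

volumes:
  db-data:
EOF

# With Docker and the Compose plugin installed, the whole stack can be managed with:
#   docker compose up -d    # start all services in the background
#   docker compose down     # stop and remove them
```

Keeping the whole stack in one file means the same definition can be checked into version control and reused by every developer on the team.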

Docker Swarm

Docker Swarm is a native clustering and scheduling tool for Docker that transforms a group of Docker hosts into a single virtual host. It allows users to create and manage a swarm of Docker nodes, distributing services across the cluster and ensuring high availability and load balancing. Docker Swarm integrates seamlessly with other Docker components, providing a cohesive and easy-to-use container orchestration solution.
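A rough sketch of what this looks like in practice: the function below initializes a single-node swarm on the current host and starts a replicated service. The function name, service name, image, replica count, and ports are all assumptions made for the example.

```shell
# Hypothetical helper: turn the current host into a single-node swarm and
# run a replicated service on it. Names, image, and ports are assumptions.
start_swarm_demo() {
  docker swarm init &&
  docker service create --name web --replicas 3 --publish 8080:80 nginx:alpine &&
  docker service ls
}

# usage (requires a running Docker daemon):
#   start_swarm_demo
```

In a real cluster, additional hosts would join with the token printed by docker swarm init, and the scheduler would spread the replicas across them.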

How to Get Started with Docker: A Step-by-Step Guide

Getting started with Docker is a straightforward process, and this guide will walk you through installing and setting up Docker on various operating systems. Additionally, we will provide basic commands and examples to help you familiarize yourself with the platform.

Installing Docker on Linux

To install Docker on a Linux system, you can use the package manager specific to your distribution. For example, on Ubuntu, you can run the following commands:

    sudo apt-get update
    sudo apt-get install docker.io

After installation, start and enable the Docker service:

    sudo systemctl start docker
    sudo systemctl enable docker

Installing Docker on macOS

To install Docker on macOS, download the Docker Desktop for Mac package from the official Docker website. After downloading, open the package and follow the installation prompts. Once installed, open Docker Desktop to start the Docker service.

Installing Docker on Windows

To install Docker on Windows, download the Docker Desktop for Windows package from the official Docker website. Ensure your system meets the minimum requirements: a 64-bit edition of Windows 10 or 11 with either the WSL 2 or the Hyper-V backend enabled (the Hyper-V backend requires a Pro, Enterprise, or Education edition). After downloading, open the package and follow the installation prompts. Once installed, open Docker Desktop to start the Docker service.

Basic Docker Commands

Here are some basic Docker commands to help you get started:

- docker run: Run a new Docker container from an image.

- docker ps: List running Docker containers.

- docker stop: Stop a running Docker container.

- docker rm: Remove a stopped Docker container.

- docker images: List Docker images available on your system.

- docker rmi: Remove a Docker image.
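The commands above can be strung together into a short walkthrough of a container's lifecycle. This is only a sketch: the function name, the container name web, the port mapping, and the nginx:alpine image are illustrative choices.

```shell
# Hypothetical walkthrough of a container's lifecycle using the basic commands.
# The container name "web" and the nginx:alpine image are illustrative choices.
container_lifecycle_demo() {
  docker run -d --name web -p 8080:80 nginx:alpine  # start a container in the background
  docker ps                                         # the new container appears in the list
  docker stop web                                   # stop it
  docker rm web                                     # remove the stopped container
  docker images                                     # the image is still stored locally
  docker rmi nginx:alpine                           # remove the image as well
}

# usage (requires a running Docker daemon):
#   container_lifecycle_demo
```

Note the order: a container has to be stopped before docker rm removes it, and an image can only be removed once no container still uses it.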

For more information on Docker commands, refer to the official Docker documentation.

Docker Use Cases: Real-World Applications of Containerization Technology

Docker has become increasingly popular across industries, offering benefits such as resource efficiency, faster deployment, and improved system stability. This section describes real-world use cases of Docker in web development, DevOps, big data, and machine learning, and closes with the challenges of adopting containers in these scenarios.

Web Development

Docker simplifies web development by providing consistent and isolated development environments. Developers can easily set up a local development environment using Docker Compose, which defines services, networks, and volumes for the application. This approach ensures that the development environment matches the production environment, reducing potential compatibility issues and bugs.

DevOps

Docker has become a crucial tool in DevOps, enabling seamless collaboration between development and operations teams. Docker Swarm and Kubernetes allow for container orchestration, simplifying the management of large-scale applications and ensuring high availability, load balancing, and automatic scaling. Additionally, Docker simplifies the continuous integration and continuous delivery (CI/CD) pipeline, allowing for faster and more reliable deployments.

Big Data

Docker can help streamline big data processing by containerizing individual big data components, such as Hadoop, Spark, and Kafka. Containerization enables easier management, scaling, and updating of these components, reducing the operational overhead and improving overall system performance. Moreover, Docker simplifies the deployment of big data clusters in various environments, such as on-premises, cloud, or hybrid environments.

Machine Learning

Docker can be used to containerize machine learning models and their dependencies, ensuring consistent behavior across different environments. This approach simplifies the deployment of machine learning models in production, allowing data scientists to focus on model development rather than operational concerns. Additionally, Docker can be used to create containerized development environments for machine learning projects, ensuring that all team members have access to the same tools and libraries.

Challenges and Considerations

While Docker offers numerous benefits, there are also challenges and considerations when implementing containerization technology. These include managing container security, optimizing resource utilization, and maintaining compatibility across different container versions and host operating systems. To address these challenges, it is essential to follow best practices and guidelines for building, testing, and deploying Docker containers, as well as staying up-to-date with the latest developments and innovations in the containerization industry.

https://www.youtube.com/watch?v=rcYswUg0J5k

Docker vs. Virtual Machines: A Comparative Analysis

Docker containers and traditional virtual machines (VMs) are two popular virtualization approaches, each with its own advantages and disadvantages. Understanding the similarities, differences, and trade-offs between Docker and VMs can help organizations decide which approach best suits their needs in terms of performance, scalability, security, and cost.

Similarities

Both Docker and VMs provide a layer of abstraction that allows applications to run in isolated environments, reducing compatibility issues and conflicts between applications. Additionally, both approaches enable the creation of portable and consistent environments that can be easily deployed across different systems and platforms.

Differences

The primary difference between Docker and VMs lies in their underlying architecture and resource utilization. VMs rely on hypervisors to create and manage virtualized environments, each with its own operating system and kernel. In contrast, Docker containers share the host operating system kernel, resulting in reduced overhead and improved resource utilization.

Performance

Docker containers generally offer better performance than VMs due to their lighter weight and reduced overhead. Containers can start up and shut down more quickly than VMs, and they consume fewer resources, such as memory and CPU. This makes Docker an ideal choice for applications that require rapid scaling and high availability.

Scalability

Docker containers are more scalable than VMs due to their lighter weight and easier management. Docker Swarm and Kubernetes enable seamless container orchestration, allowing organizations to manage large-scale applications and ensure high availability, load balancing, and automatic scaling. In contrast, managing large-scale VM environments can be more complex and resource-intensive.

Security

Both Docker and VMs provide security features such as isolation, network segmentation, and access control. However, Docker containers share the host operating system kernel, so a kernel vulnerability or a misconfigured container can affect the host and other containers. To mitigate these risks, follow container security best practices, such as running processes as a non-root user, dropping unneeded Linux capabilities, using user namespaces, and applying security policies.
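As one possible starting point, the sketch below wraps docker run with a few commonly used hardening flags. The function name run_hardened and the user ID 1000:1000 are assumptions. Whether a given application tolerates a read-only filesystem or a complete capability drop has to be checked case by case.

```shell
# Hypothetical wrapper: start a container with a reduced attack surface.
# The UID:GID 1000:1000 is an assumption; pick one that suits the image.
run_hardened() {
  docker run --rm \
    --read-only \
    --cap-drop ALL \
    --security-opt no-new-privileges:true \
    --user 1000:1000 \
    "$@"
}

# usage (requires a running Docker daemon):
#   run_hardened alpine:3.20 id
```

Each flag removes something an attacker could otherwise use: write access to the container filesystem, Linux capabilities, privilege escalation through setuid binaries, and root inside the container.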

Cost

Docker containers are generally more cost-effective than VMs due to their reduced overhead and resource utilization. Containers enable organizations to run more applications on the same hardware, reducing the need for additional infrastructure and maintenance costs. However, organizations should also consider the costs associated with managing and maintaining a containerized environment, such as container orchestration tools and training for staff.

Conclusion

Choosing between Docker and traditional virtual machines depends on an organization’s specific needs and requirements. Docker generally offers better performance, scalability, and cost-effectiveness, while VMs provide stronger isolation. By understanding the similarities, differences, and trade-offs between these two virtualization approaches, organizations can make informed decisions about which approach best suits their needs.

Best Practices for Building and Deploying Docker Containers

Docker has become increasingly popular in the software development industry because of benefits such as resource efficiency, faster deployment, and improved system stability. To get the most out of it, follow established practices for building, testing, and deploying Docker containers. This section covers three of them: using multi-stage builds, minimizing container size, and integrating with CI/CD pipelines.

Using Multi-Stage Builds

Multi-stage builds let developers use multiple build stages in a single Dockerfile, each with its own set of instructions and dependencies. This separates the build environment from the runtime environment, which reduces the final image size and improves security by leaving out unnecessary tools and libraries. To use multi-stage builds, define multiple FROM instructions in the Dockerfile, each starting a new stage from its own base image, and copy only the required artifacts into the final stage with COPY --from.
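A minimal sketch of a two-stage build follows. It assumes a Go project with a go.mod file in the build context. The stage name build, the image tags, and the output path are illustrative, and the same pattern applies to other compiled languages.

```shell
# Write a two-stage Dockerfile. Assumes a Go module in the build context;
# image tags, stage name, and paths are illustrative choices.
cat > Dockerfile <<'EOF'
# Stage 1: build environment with the full Go toolchain
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app .

# Stage 2: minimal runtime image containing only the compiled binary
FROM alpine:3.20
COPY --from=build /out/app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]
EOF

# Build with (requires Docker):
#   docker build -t myapp .
```

Only the last stage ends up in the final image, so the compiler and source code from the first stage are not shipped.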

Minimizing Container Size

Smaller container sizes offer several benefits, such as faster deployment times, reduced resource utilization, and improved security. To minimize container size, consider the following tips:

- Use multi-stage builds to separate build and runtime environments.

- Remove unnecessary files and dependencies, using rm in the same RUN instruction that created them so they never persist in an image layer.

- Use .dockerignore files to exclude unnecessary files from the build context.

- Minimize the number of image layers by combining related commands into a single RUN instruction.
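Two of these tips can be sketched as follows. The .dockerignore entries, the file name Dockerfile.slim, the base image, and the installed packages are assumptions chosen for the example.

```shell
# Exclude files the image does not need from the build context (example entries).
cat > .dockerignore <<'EOF'
.git
node_modules
*.log
EOF

# Install and clean up in ONE RUN instruction: files deleted in a later
# instruction would still take up space in the earlier layer.
cat > Dockerfile.slim <<'EOF'
FROM debian:bookworm-slim
RUN apt-get update \
 && apt-get install -y --no-install-recommends curl ca-certificates \
 && rm -rf /var/lib/apt/lists/*
EOF

# Build with (requires Docker):
#   docker build -f Dockerfile.slim -t slim-demo .
```

Chaining the commands with && keeps the package index out of the image, because each RUN instruction produces exactly one layer.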

Integrating with CI/CD Pipelines

Integrating Docker with continuous integration and continuous delivery (CI/CD) pipelines can help automate the build, test, and deployment processes. To integrate Docker with CI/CD pipelines, consider the following tips:

- Use a dedicated build server, such as Jenkins or GitLab Runner, to automate the build process.

- Use container registry services, such as Docker Hub or Google Container Registry, to store and manage Docker images.

- Implement automated testing and validation processes to ensure container integrity and security.

- Use deployment tools, such as Kubernetes or Docker Swarm, to automate the deployment process and ensure high availability and scalability.
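The build, test, and publish steps above could be sketched as a single pipeline step like the one below. The function name, the registry host registry.example.com, and the test script ./run-tests.sh are placeholders. A real pipeline would also log in to the registry first and would usually be expressed in the CI system's own configuration format.

```shell
# Hypothetical CI step: build an image, run its tests inside a container,
# and push the image only if the tests pass.
# Arguments: image name, tag, then the test command to run in the container.
ci_build_test_push() {
  local image="$1" tag="$2"
  shift 2
  docker build -t "$image:$tag" . &&
  docker run --rm "$image:$tag" "$@" &&
  docker push "$image:$tag"
}

# usage (placeholders; requires Docker and registry credentials):
#   ci_build_test_push registry.example.com/team/app "$(git rev-parse --short HEAD)" ./run-tests.sh
```

Chaining the steps with && means a failed build or a failed test stops the function before anything is pushed.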

Conclusion

Following best practices for building, testing, and deploying Docker containers helps organizations get the most out of Docker. By using multi-stage builds, minimizing container size, and integrating with CI/CD pipelines, organizations can improve resource utilization, deployment speed, and system stability while reducing costs and security risks.

The Future of Docker and Containerization Technology

Docker has changed how the software industry builds and ships applications, offering benefits such as resource efficiency, faster deployment, and improved system stability. As containerization continues to evolve, it helps to understand the trends and developments that may shape the industry in the coming years. This section discusses potential challenges, opportunities, and innovations that may affect the future of Docker and containerization technology.

Challenges

While containerization technology offers numerous benefits, it also presents several challenges that must be addressed. These challenges include:

- Security: Containerization technology introduces new security risks, such as container escapes and image vulnerabilities. To address these risks, it is essential to implement robust security measures, such as image scanning, network segmentation, and access control.

- Complexity: Containerization technology can be complex to manage and maintain, especially in large-scale environments. To address this challenge, organizations can use container orchestration tools, such as Kubernetes or Docker Swarm, to automate container management and deployment processes.

- Integration: Integrating containerization technology with existing infrastructure and tools can be challenging. To address this challenge, organizations can rely on common standards, such as the Open Container Initiative (OCI) specifications and the Kubernetes Container Runtime Interface (CRI), which runtimes such as containerd and CRI-O implement, to ensure compatibility and interoperability across container runtimes and orchestration tools.

Opportunities

Containerization technology also presents several opportunities for innovation and growth. These opportunities include:

- Edge Computing: Containerization technology can be used to deploy applications and services on edge devices, such as IoT devices and mobile devices. This approach can improve performance, reduce latency, and enable new use cases and applications.

- Serverless Computing: Containerization technology can be used to implement serverless computing architectures, enabling organizations to build and deploy applications without managing infrastructure. This approach can improve scalability, reduce costs, and enable faster development and deployment cycles.

- Multi-Cloud Deployments: Containerization technology can be used to deploy applications and services across multiple clouds and platforms, enabling organizations to improve flexibility, reduce vendor lock-in, and optimize resource utilization.

Innovations

Containerization technology is also driving several innovations in the software development industry. These innovations include:

- Serverless Containers: Serverless containers enable organizations to combine the benefits of containerization technology and serverless computing, enabling faster development and deployment cycles, improved scalability, and reduced costs.

- GitOps: GitOps is a DevOps practice that uses Git repositories to manage and deploy containerized applications. This approach enables organizations to improve version control, collaboration, and automation, reducing errors and improving deployment speed and reliability.

- Container Image as Code: Container image as code is a practice that treats container images as code, enabling organizations to use the same tools and processes for building, testing, and deploying container images as they do for code. This approach can improve consistency, reproducibility, and security, reducing errors and improving deployment speed and reliability.

Conclusion

The future of Docker and containerization technology is promising, with numerous opportunities and innovations on the horizon. However, organizations must also address several challenges, such as security, complexity, and integration, to fully realize the benefits of containerization technology. By implementing best practices, using container orchestration tools, and embracing new innovations, organizations can stay ahead of the curve and leverage containerization technology to improve efficiency, scalability, and agility in the software development industry.