What is Containerization and Why Should You Care?

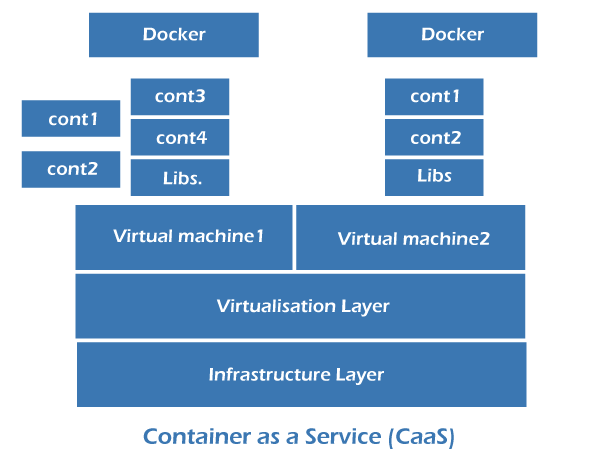

Imagine shipping goods across the globe. Each product needs its own protective packaging for safe transport. Containerization in computing works similarly: a container packages software code together with all of its dependencies into a single unit, so it runs consistently across different environments. Unlike virtual machines (VMs), which virtualize hardware and each run a complete guest operating system, containers share the host OS kernel. This makes them significantly lighter and faster to start. The efficiency translates to faster deployments, lower resource consumption, and often lower infrastructure costs. Because a container carries its dependencies with it, developers can move applications between servers, clouds, or personal laptops with minimal changes, a flexibility that has reshaped modern software development. Container as a Service (CaaS) takes this a step further by providing managed platforms that automate the deployment, scaling, and management of containerized applications. The CaaS provider handles the underlying infrastructure, allowing developers to focus on building and deploying applications.

The benefits of containerization are numerous. Portability lets applications run consistently wherever a compatible container runtime is available, which speeds up deployments and reduces downtime caused by environment mismatches. Scalability lets applications handle fluctuating workloads by adding or removing containers as demand changes. Containers use fewer resources than full VMs, which tends to lower infrastructure costs. This combination of portability, scalability, and efficiency makes container as a service an attractive option for businesses of all sizes, from startups to large enterprises. CaaS platforms also commonly include automated rollouts, health checks, and load balancing, which streamline operations and reduce operational overhead. In short, CaaS offers a modern, efficient way to manage applications while preserving flexibility and control.

Container as a service is changing how software is built, deployed, and managed. CaaS abstracts away the complexities of infrastructure management, so developers can focus on what truly matters: building applications. The advantages go beyond efficiency gains. Many CaaS platforms bundle monitoring tools, built-in security features, and integration with other cloud services, and together these support a streamlined and secure development workflow. For many teams, these benefits outweigh the effort of deploying and maintaining software the traditional way. This makes container as a service a powerful tool for modernizing software infrastructure.

Exploring the Landscape of Container as a Service Platforms

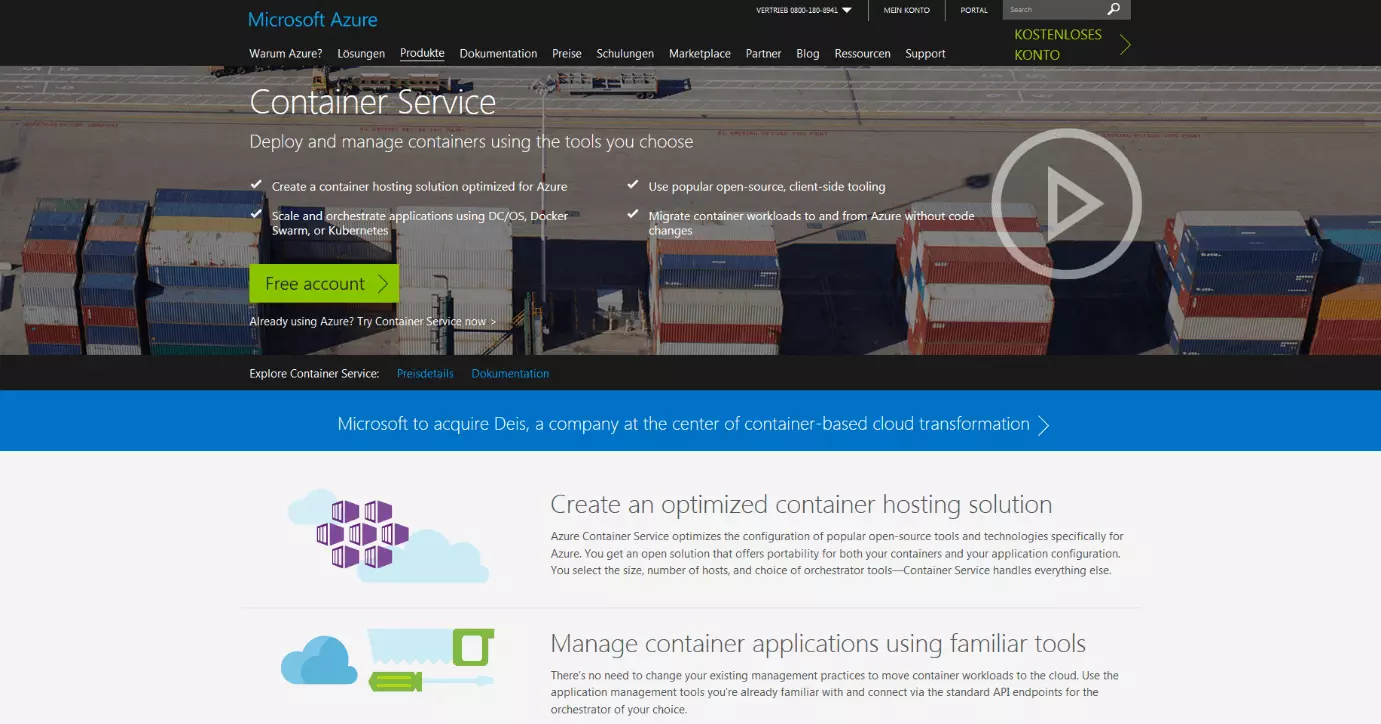

The container as a service (CaaS) market offers a variety of platforms, each with its own strengths and weaknesses. Docker, a foundational technology in containerization, provides the building blocks for creating and running containers. Kubernetes, on the other hand, excels at orchestrating clusters of containers at scale, automating deployments and scaling resources efficiently. This makes Kubernetes a popular choice for complex applications that require high availability and scalability. The major cloud providers offer managed container services that simplify deployment and management further. Amazon Elastic Container Service (ECS) is AWS’s own container orchestrator. The managed Kubernetes offerings include Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS), and Google Kubernetes Engine (GKE). These managed services handle the underlying infrastructure, allowing developers to focus on their applications. Their core functionality is similar, but they differ in pricing, integration with other cloud services, and specific features, so each suits different needs and preferences. Choosing the right platform often depends on existing infrastructure, team expertise, and specific application requirements.

Amazon ECS is a managed container orchestration service that integrates tightly with other AWS services. It is often considered simpler to operate than Kubernetes, which can suit smaller-scale deployments or teams already heavily invested in the AWS ecosystem. Azure Kubernetes Service (AKS) offers managed Kubernetes within the Azure cloud and integrates with other Azure services. Google Kubernetes Engine (GKE) is Google Cloud’s managed Kubernetes service. It is known for its performance and scalability and is well suited to large-scale, demanding applications. Because these offerings overlap so much, choosing among them requires a careful evaluation that matches each platform’s capabilities to the demands of a specific application and organizational context. The diversity of the CaaS landscape gives businesses of all sizes room to find that fit.

Consider the scalability requirements of your application. Do you anticipate significant growth? Kubernetes-based solutions generally offer more fine-grained scaling controls than simpler container services. Security is paramount: each platform has its own security features and integrations, so evaluate them against your specific requirements. Budget plays a critical role, because managed container as a service platforms use varying pricing models. Factor in both upfront costs and ongoing expenses. Your team’s expertise matters too, since choosing a platform that matches your team’s skill set can significantly affect productivity. Finally, weigh vendor support and overall ease of use. The best container as a service solution is the one that integrates cleanly with your infrastructure and supports your organizational goals.

How to Choose the Right Container as a Service Platform for Your Needs

Selecting the optimal container as a service platform requires careful consideration of several key factors. Application requirements form the foundation of this decision. Does your application demand high scalability to handle fluctuating user loads? What are your security needs? Do you require specific compliance certifications? Answering these questions will significantly narrow your options. Budget constraints also play a crucial role. Different container as a service providers offer varying pricing models, from pay-as-you-go to tiered subscriptions. Carefully evaluate these models to align with your financial plan. The existing infrastructure must also be factored in. Does your organization already utilize a specific cloud provider? Choosing a container as a service offering from that provider can streamline integration and reduce complexity. Team expertise is equally important. A platform requiring specialized skills may not be suitable if your team lacks that proficiency. Finally, vendor support is critical. Look for providers offering robust documentation, responsive customer support, and a proven track record of reliability. A provider with strong community support can be a valuable asset.

To simplify the decision-making process, consider using a decision matrix. Create a table listing candidate platforms along with the key factors outlined above. Candidates might include a self-managed Kubernetes or Docker Swarm cluster, AWS ECS, AKS, and GKE. Assign each factor a weight based on its importance to your project. Then score each platform on how well it meets your requirements for that factor. The platform with the highest weighted score is a strong initial candidate. A flowchart can guide the same process step by step. Start by assessing your application’s needs, then evaluate your budget, and then examine infrastructure, team expertise, and vendor support in turn. Each decision point should pose a question that narrows the field. This systematic approach leads to a well-informed decision that matches your needs. Choosing a container as a service provider is a significant long-term commitment, so a thorough evaluation is essential. The right platform will significantly affect the efficiency, scalability, and cost-effectiveness of your deployments.
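The weighted scoring step of the decision matrix is simple arithmetic: multiply each factor’s score by its weight and sum the results. The sketch below assumes a 1 to 5 score per factor and weights that sum to 1. The platform names ("Platform A" and so on), the factor list, and every number are hypothetical placeholders, not an assessment of any real product.

```python
import math

# Hypothetical weights (summing to 1) and 1-5 scores; substitute your own assessment.
WEIGHTS = {
    "scalability": 0.30,
    "security": 0.25,
    "cost": 0.20,
    "team_expertise": 0.15,
    "vendor_support": 0.10,
}

SCORES = {
    "Platform A": {"scalability": 5, "security": 4, "cost": 3, "team_expertise": 2, "vendor_support": 4},
    "Platform B": {"scalability": 3, "security": 4, "cost": 4, "team_expertise": 5, "vendor_support": 4},
    "Platform C": {"scalability": 4, "security": 3, "cost": 5, "team_expertise": 3, "vendor_support": 3},
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Sum of score * weight over every factor (a missing score raises KeyError)."""
    return sum(scores[factor] * weight for factor, weight in weights.items())


def rank_platforms(table: dict[str, dict[str, float]],
                   weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return (platform, weighted score) pairs, best first."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights should sum to 1")
    ranked = [(name, weighted_score(scores, weights)) for name, scores in table.items()]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


for name, score in rank_platforms(SCORES, WEIGHTS):
    print(f"{name}: {score:.2f}")
```

With these placeholder numbers, Platform B comes first at 3.85, followed by Platform A at 3.80 and Platform C at 3.70. Scores this close suggest revisiting the weights or running a proof of concept. They do not settle the choice on their own.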

Beyond the technical aspects, assess the overall value proposition. Some container as a service platforms offer extensive managed services, which simplify operations and reduce the burden on your team. Others prioritize flexibility and customization, giving you greater control but potentially requiring more hands-on management. The right balance depends on your team’s capabilities and preferences. For long-term cost management, examine each pricing model carefully, including charges for resource consumption, data transfer, and storage. Where possible, run trials or proof-of-concept projects on shortlisted platforms to gain practical experience before committing fully. This hands-on step validates your initial assessment and gives you confidence in the final choice. The ultimate goal is a solution that fits your existing workflow and lets your team focus on application development rather than infrastructure management. A structured decision-making process like this one significantly improves your chances of a successful container as a service implementation.
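To compare pricing models on equal terms, it can help to translate each provider’s rate card into an estimated monthly bill for the same workload. The sketch below assumes a simple model that charges per vCPU-hour, per GB-hour of memory, per GB of outbound data transfer, and per GB-month of storage. Real pricing pages add tiers, free allowances, and regional differences. The rates in the example are invented placeholders, not any provider’s prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Unit prices for one provider. The example values below are made up."""
    vcpu_hour: float          # per vCPU per hour
    gb_memory_hour: float     # per GB of memory per hour
    egress_gb: float          # per GB of outbound data transfer
    storage_gb_month: float   # per GB of persistent storage per month


@dataclass(frozen=True)
class Usage:
    """Average resources reserved by the workload over one month."""
    vcpus: float
    memory_gb: float
    hours: float
    egress_gb: float
    storage_gb: float


def monthly_cost(usage: Usage, rates: Rates) -> dict[str, float]:
    """Break an estimated monthly bill into its main pricing dimensions."""
    costs = {
        "compute": usage.vcpus * usage.hours * rates.vcpu_hour,
        "memory": usage.memory_gb * usage.hours * rates.gb_memory_hour,
        "data_transfer": usage.egress_gb * rates.egress_gb,
        "storage": usage.storage_gb * rates.storage_gb_month,
    }
    costs["total"] = sum(costs.values())
    return costs


placeholder_rates = Rates(vcpu_hour=0.05, gb_memory_hour=0.01, egress_gb=0.10, storage_gb_month=0.20)
workload = Usage(vcpus=4, memory_gb=8, hours=730, egress_gb=500, storage_gb=200)
print(f"{monthly_cost(workload, placeholder_rates)['total']:.2f}")  # 294.40
```

Keep the same `Usage` and plug in each shortlisted provider’s published rates. This gives a like-for-like comparison, and the per-dimension breakdown shows whether compute, data transfer, or storage dominates the bill.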

Docker: A Deep Dive into the Industry Standard

Docker, a cornerstone of the container as a service ecosystem, simplifies the creation, deployment, and running of applications using containers. It packages an application and its dependencies into a standardized unit, ensuring consistent execution across different environments. This portability is a key advantage. Developers can build once and deploy to any host with a compatible container runtime, from laptops to cloud servers, provided the host’s OS kernel and CPU architecture match what the image was built for. Docker streamlines the development workflow, enabling faster deployments and easier collaboration. Containers are lighter than virtual machines, and this contributes to significant resource efficiency and cost savings in container as a service deployments.

A Docker container uses the host operating system’s kernel. A virtual machine, by contrast, runs an entire guest operating system on virtualized hardware, so the container is significantly more efficient. This efficiency translates to reduced infrastructure costs and improved performance. Docker images serve as blueprints for containers and are stored in registries (central repositories), which makes them easy to share and manage. Docker Hub, Docker’s default public registry, offers a vast library of pre-built images that simplify integrating various software components into your applications. Docker Compose extends Docker’s functionality by letting you define and run multi-container applications from a single configuration file. This simplifies deploying and managing complex applications, particularly during local development and testing.
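Compose starts services in dependency order, based on each service’s `depends_on` entries. The sketch below is not Compose’s own code. It models the `services` section of a hypothetical four-service Compose file as a Python dictionary and uses the standard library’s `graphlib.TopologicalSorter` to derive a valid start order, which is the same ordering problem Compose has to solve. The `example/` image names are made up.

```python
from graphlib import TopologicalSorter

# Hypothetical Compose "services" section expressed as a Python dict.
services = {
    "web": {"image": "example/web:1.0", "depends_on": ["api"]},
    "api": {
        "image": "example/api:1.0",
        # Long form of depends_on: a mapping of service name -> options.
        "depends_on": {"db": {"condition": "service_healthy"},
                       "cache": {"condition": "service_started"}},
    },
    "db": {"image": "postgres:16"},
    "cache": {"image": "redis:7"},
}


def start_order(services: dict) -> list[str]:
    """Return service names so that every service comes after its dependencies."""
    graph: dict[str, set[str]] = {}
    for name, spec in services.items():
        # depends_on may be a list of names or a mapping of name -> options;
        # iterating either form yields the dependency names.
        deps = set(spec.get("depends_on", []))
        unknown = deps - set(services)
        if unknown:
            raise ValueError(f"{name} depends on undefined services: {sorted(unknown)}")
        graph[name] = deps
    # Raises graphlib.CycleError (a ValueError subclass) on circular dependencies.
    return list(TopologicalSorter(graph).static_order())


print(start_order(services))
```

The resulting order always places `db` and `cache` before `api`, and `api` before `web`. The relative order of `db` and `cache` is not significant. By default, Compose waits only for a dependency’s container to start, not for the application inside it to be ready. The long form with `condition: service_healthy` waits for a passing health check instead.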

Docker’s ecosystem extends beyond the core engine, encompassing tools and services that support the entire container lifecycle. Docker Swarm, Docker’s native container orchestration tool, manages clusters of Docker hosts. Kubernetes has become more widely adopted for large-scale orchestration, but Docker Swarm remains a viable solution for simpler deployments. Images built with Docker run on the major container as a service platforms, such as AWS ECS, Azure AKS, and Google GKE, because those platforms accept standard, OCI-compatible container images. This compatibility underscores Docker’s significance in modern application deployment. Its ease of use and powerful features make it an essential tool for developers and operations teams alike. Understanding Docker is therefore a practical prerequisite for getting the most out of container as a service, and its wide adoption has made it an industry standard.

Kubernetes: Orchestrating Containers for Scalability and Efficiency

Kubernetes, often shortened to K8s, is a widely used open-source system for automating the deployment, scaling, and management of containerized applications. Think of it as the conductor of an orchestra in which each container is a musician. Kubernetes keeps these musicians playing in harmony, scaling resources up or down based on demand. It serves as the orchestration layer beneath many container as a service offerings, handling complex tasks so that developers can focus on building applications rather than managing infrastructure. A key benefit is its ability to deploy and manage containerized applications across many machines, which improves efficiency and reduces operational overhead.

Key concepts within Kubernetes include pods, deployments, and services. Pods are the smallest deployable units and contain one or more containers that share networking and storage. Deployments manage the lifecycle of pods, ensuring the desired number of replicas is always running. Services provide a stable IP address and DNS name for reaching a set of pods, even as individual pods are scaled up, scaled down, or replaced. Together, these components form a robust and scalable container environment, and understanding them is vital for using Kubernetes effectively. A simple diagram helps here. Picture a service as a stable entry point in front of a changing set of pods, which illustrates the flexibility and scalability that Kubernetes provides.
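To make the relationship between these objects concrete, here is a toy model in plain Python. It is not the Kubernetes API. It assumes equality-based label selectors and a single controller pass. `reconcile` plays the role of a Deployment controller: it creates or deletes pods until the number matching a selector equals the desired replica count. `service_endpoints` plays the role of a Service: it resolves its selector to whichever pod IPs currently exist. The pod names and IP addresses are invented.

```python
from dataclasses import dataclass, field
from itertools import count

Labels = dict[str, str]


@dataclass
class Pod:
    name: str
    labels: Labels
    ip: str


@dataclass
class Cluster:
    pods: list[Pod] = field(default_factory=list)
    next_id: count = field(default_factory=lambda: count(1))  # source of unique pod numbers


def matches(selector: Labels, labels: Labels) -> bool:
    """Equality-based selector: every key/value pair in the selector must be on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def reconcile(cluster: Cluster, selector: Labels, replicas: int, prefix: str) -> None:
    """One pass of a toy Deployment controller: converge on `replicas` matching pods."""
    owned = [pod for pod in cluster.pods if matches(selector, pod.labels)]
    while len(owned) < replicas:  # too few: create pods from the "template"
        n = next(cluster.next_id)
        pod = Pod(name=f"{prefix}-{n}", labels=dict(selector), ip=f"10.0.0.{n}")
        cluster.pods.append(pod)
        owned.append(pod)
    for pod in owned[replicas:]:  # too many: delete the surplus
        cluster.pods.remove(pod)


def service_endpoints(cluster: Cluster, selector: Labels) -> list[str]:
    """A toy Service: resolve the selector to the IPs of pods that exist right now."""
    return sorted(pod.ip for pod in cluster.pods if matches(selector, pod.labels))


demo, web = Cluster(), {"app": "web"}
reconcile(demo, web, replicas=3, prefix="web")
demo.pods.pop(0)                          # simulate a failed pod (web-1)
reconcile(demo, web, replicas=3, prefix="web")
print(service_endpoints(demo, web))       # ['10.0.0.2', '10.0.0.3', '10.0.0.4']
```

After a pod is lost, the next reconcile pass replaces it with a new pod that has a new IP address. Clients keep addressing the same selector throughout, which is why a Service gives callers a stable entry point.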

Kubernetes’ advantages extend beyond simple deployment. It offers automated rollouts and rollbacks, self-healing (restarting or replacing failed containers), and automatic scaling. These features reduce downtime and improve the overall reliability of applications. Scaling applications up or down based on real-time demand lets organizations optimize resource utilization and costs. Furthermore, a large community and a vast ecosystem of tools and extensions make Kubernetes a versatile foundation for diverse container as a service needs. Choosing Kubernetes often brings greater scalability, resilience, and control over containerized applications.
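Automatic scaling in Kubernetes is typically handled by the Horizontal Pod Autoscaler (HPA). Its documented core rule is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The function below is a simplified sketch of that rule. It assumes a single metric, clamps the result to a min/max range, and skips scaling when the ratio is within a tolerance (Kubernetes defaults to 0.1). The `min_replicas` and `max_replicas` defaults are arbitrary values chosen for this sketch. The real controller also handles missing metrics, pods that are not yet ready, and stabilization windows, all of which this sketch ignores.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style rule: ceil(current * currentMetric / targetMetric), clamped."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target scale to 6. The same 4 replicas at 63% stay where they are, because the ratio of 1.05 falls inside the tolerance.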

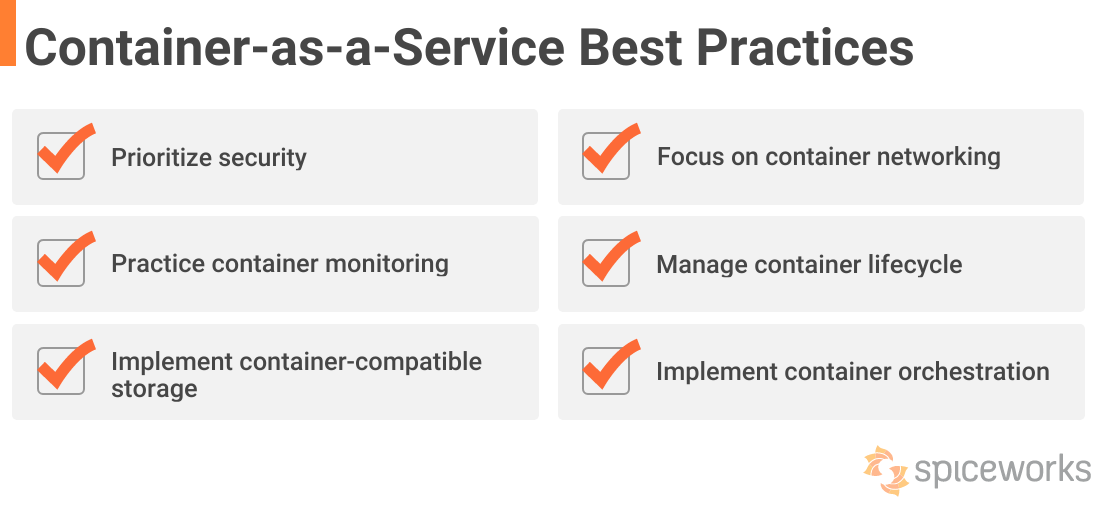

Security Best Practices in Containerized Environments

Containerization brings significant scalability and efficiency advantages to container as a service, but it also introduces new security considerations. Protecting containerized applications requires a multi-layered approach that addresses vulnerabilities at each stage of the lifecycle. Image security is paramount. Developers should follow secure coding practices and use automated vulnerability scanning tools to find and fix weaknesses before deploying container images. Regularly updating base images and using minimal images reduces the attack surface. Secure configuration is also critical. Harden container configurations to minimize potential exploits, for example by disabling unnecessary services, running processes as a non-root user, and restricting access to sensitive resources.
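Some configuration checks like these are easy to automate. The sketch below is a deliberately small, hypothetical Dockerfile linter and no substitute for a dedicated tool. It flags two things. The first is `FROM` images that are untagged or use `latest`; pin a version or digest instead. The second is a final build stage with no non-root `USER`, since containers run as root unless the image sets another user. It assumes one instruction per line and ignores line continuations. It also cannot see a `USER` set inside the base image, so treat its findings as prompts for review.

```python
def lint_dockerfile(text: str) -> list[str]:
    """Return human-readable findings for a few basic image-hardening rules."""
    findings: list[str] = []
    stage_names: set[str] = set()
    final_user = None  # USER in effect for the current (eventually final) stage

    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        instruction, *args = line.split()
        instruction = instruction.upper()

        if instruction == "FROM":
            args = [a for a in args if not a.startswith("--")]  # drop flags like --platform
            if not args:
                continue
            image = args[0]
            # Images other than scratch or an earlier build stage should be pinned.
            if image != "scratch" and image.lower() not in stage_names and "@" not in image:
                tag = image.rsplit("/", 1)[-1].partition(":")[2]
                if tag in ("", "latest"):
                    findings.append(f"line {lineno}: pin a specific tag or digest for {image!r}")
            if len(args) >= 3 and args[1].upper() == "AS":
                stage_names.add(args[2].lower())
            final_user = None  # a new stage starts with its base image's user (unknown here)
        elif instruction == "USER" and args:
            final_user = args[0]

    if final_user is None or final_user.split(":")[0] in ("root", "0"):
        findings.append("final stage may run as root: add a USER instruction with a non-root user")
    return findings


print(lint_dockerfile("FROM python:latest\nRUN pip install flask\n"))
```

The example Dockerfile produces two findings: one asks to pin `python:latest`, and the other points out the missing non-root user. Checks like this fit naturally into the same CI stage as image scanning.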

Network security plays a vital role in securing a container as a service deployment. Strong network segmentation isolates containers from each other and from the broader network, which limits the impact of a potential breach. Network policies, such as Kubernetes NetworkPolicy resources, control traffic flow between pods and enforce least-privilege access. They take effect only when the cluster’s network plugin supports them. Microsegmentation, which breaks the network into smaller isolated zones, adds another layer of protection. Robust access control is also essential. Use role-based access control (RBAC) to grant users only the permissions they need to manage containers and related resources, and audit access logs regularly to detect unauthorized activity. Strong authentication and authorization should also protect access to container images and other sensitive components.
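The ingress semantics of Kubernetes network policies can surprise newcomers. A pod accepts all traffic until some policy selects it. After that, only traffic allowed by at least one policy that selects the pod gets through. The toy evaluator below illustrates those rules in plain Python and is not the Kubernetes API. It assumes ingress only, a single namespace, and `matchLabels`-style selectors; ports, namespaces, egress, and IP blocks are left out. The `app` labels are hypothetical.

```python
from dataclasses import dataclass, field

Labels = dict[str, str]


@dataclass(frozen=True)
class IngressPolicy:
    pod_selector: Labels  # pods this policy applies to ({} selects every pod)
    allow_from: list[Labels] = field(default_factory=list)  # allowed peers; empty = deny all


def selects(selector: Labels, labels: Labels) -> bool:
    """Equality-based selector; an empty selector matches everything."""
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(src: Labels, dst: Labels, policies: list[IngressPolicy]) -> bool:
    applicable = [p for p in policies if selects(p.pod_selector, dst)]
    if not applicable:
        return True  # not selected by any policy: the pod is non-isolated
    # Policies are additive: any allow rule in any selecting policy admits the traffic.
    return any(selects(peer, src) for p in applicable for peer in p.allow_from)


DEFAULT_DENY = IngressPolicy(pod_selector={})
ALLOW_API_TO_DB = IngressPolicy(pod_selector={"app": "db"}, allow_from=[{"app": "api"}])
```

A common least-privilege pattern pairs a default-deny policy with narrow allow rules. The default-deny policy uses an empty selector to isolate every pod and lists no allowed peers. With `DEFAULT_DENY` and `ALLOW_API_TO_DB` in place, `api` can reach `db`, while `web` can reach neither `db` nor `api` until another rule allows it.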

Vulnerability management is an ongoing process. Regularly scan container images and the underlying infrastructure for vulnerabilities. Automated scanners integrated into the CI/CD pipeline catch problems early in the development cycle. Back this up with a patch management process that addresses discovered vulnerabilities promptly. Continuous monitoring is equally important and relies on tools that provide real-time visibility into container activity, performance, and security. Choosing a container as a service platform with built-in security features and regular security updates further strengthens your overall security posture. Together, these strategies form the basis of a secure and reliable container as a service environment.
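A CI/CD gate for image scanning can be as simple as failing the pipeline when any finding meets a severity threshold. The sketch below assumes the scanner’s report has already been parsed into a list of dictionaries with `id`, `package`, and `severity` keys. That shape is hypothetical, so adapt it to whatever your scanner actually emits. Unknown severities are treated as critical, so an unexpected report format fails safe.

```python
import sys

# Ordered severity levels; a finding blocks the build if it is at or above the threshold.
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(findings: list[dict], threshold: str = "HIGH") -> list[dict]:
    """Return the findings whose severity is at or above `threshold`."""
    limit = SEVERITY_RANK[threshold.upper()]
    return [
        f for f in findings
        # Unknown or missing severities fall back to CRITICAL so the gate fails safe.
        if SEVERITY_RANK.get(str(f.get("severity", "")).upper(), SEVERITY_RANK["CRITICAL"]) >= limit
    ]


def gate(findings: list[dict], threshold: str = "HIGH") -> int:
    """Return a process exit code: 0 to let the pipeline continue, 1 to fail it."""
    blocking = blocking_findings(findings, threshold)
    for f in blocking:
        print(f"{f.get('severity')}: {f.get('id')} in {f.get('package')}", file=sys.stderr)
    return 1 if blocking else 0
```

A pipeline step would pass the value returned by `gate` to the CI runner, for example through `sys.exit`. A non-zero code then stops the image from being pushed or deployed until the findings are fixed or explicitly accepted.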

Real-World Case Studies: Container as a Service Success Stories

Many organizations have used container platforms to significantly improve their operations. Netflix, a pioneer in cloud adoption, built its own container management platform, Titus, to run containers at massive scale on AWS. This lets it deploy and scale services rapidly to meet fluctuating demand, supporting a seamless viewing experience for millions of users worldwide. The benefits include increased agility, lower infrastructure costs, and improved reliability. Netflix’s experience highlights the power of containers and managed container platforms for handling exceptionally high traffic loads and complex deployments.

Another compelling example comes from the financial services sector. A major global bank implemented a container as a service strategy to modernize its legacy systems. By migrating applications to containers, the bank achieved faster deployment cycles, improved resource utilization, and enhanced security. This resulted in significant cost savings and improved compliance with regulatory requirements. This case demonstrates how container as a service can benefit even highly regulated industries by increasing efficiency and lowering operational costs while simultaneously bolstering security.

Beyond these large-scale deployments, many smaller companies have also benefited from container as a service. E-commerce platforms, for example, use containerization to scale during peak seasons such as holiday shopping periods. The flexibility and scalability of container as a service let these businesses absorb traffic surges without performance degradation. This translates into a better customer experience and higher sales. These examples show the broad applicability of container as a service across industries and company sizes. The ease of scaling and deployment it offers can be a significant competitive advantage.

The Future of Container as a Service: Trends and Predictions

The container as a service landscape continues to evolve rapidly. Serverless containers, offered by services such as AWS Fargate, Google Cloud Run, and Azure Container Instances, represent a significant advancement. They automate infrastructure management further and optimize resource utilization, letting developers focus on code and reducing operational overhead. The rise of edge computing also affects container as a service. Deploying containers closer to users reduces latency and improves application performance, which is especially important for real-time applications and IoT devices. Expect increased integration between container as a service platforms and edge computing infrastructure.

Security remains a paramount concern. Future developments in container as a service will prioritize enhanced security features. This includes advanced vulnerability scanning, automated patching, and improved access control mechanisms. Artificial intelligence and machine learning will play an increasingly important role, improving threat detection and response within containerized environments. Expect to see more sophisticated security tools integrated directly into container as a service platforms, simplifying security management for developers and operations teams. The move toward immutable infrastructure, where containers are replaced rather than updated, will also strengthen security postures.

Further innovation in container orchestration tools like Kubernetes will drive efficiency and scalability. Improved automation and self-healing capabilities will simplify the management of complex container deployments. Expect tighter integration between container as a service platforms and other cloud services, creating seamless workflows for developers. The adoption of container as a service will continue to accelerate, transforming how applications are built, deployed, and managed. This will lead to increased agility, improved scalability, and reduced operational costs for organizations of all sizes. The ongoing evolution of container as a service promises a future where deploying and managing applications is more efficient and streamlined than ever before.