Understanding BigQuery’s Core Components

BigQuery is Google Cloud’s serverless, scalable data warehouse. Its resource model is a simple hierarchy: a project contains datasets, and a dataset contains tables. Tables store structured data according to a schema, and datasets group related tables so that large volumes of data can be organized and managed together. Compute is separate from storage. BigQuery does not expose clusters for users to manage; queries run on units of compute capacity called slots, which the service allocates as needed. Users work with these resources through the web-based console or programmatically through the APIs.

Datasets act as containers for related tables, and tables are the fundamental unit of storage: each table holds rows that conform to its schema. A well-defined schema, with appropriate column types, keeps the data accurately represented and the analysis efficient. Understanding how tables, datasets, and compute slots relate to one another is the starting point for using BigQuery well.
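The containment described above shows up directly in how a table is named in a query. The sketch below is a minimal illustration, assuming that a project sits above the dataset in the path; the class `TableRef` and the names `my-project`, `sales`, and `orders` are hypothetical and exist only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TableRef:
    """Fully qualified reference to a table: project -> dataset -> table."""
    project: str
    dataset: str
    table: str

    def sql_name(self) -> str:
        # Backtick-quote the whole path so that names containing
        # hyphens (common in project IDs) are accepted in a query.
        return f"`{self.project}.{self.dataset}.{self.table}`"


# Hypothetical names, for illustration only.
orders = TableRef("my-project", "sales", "orders")
query = f"SELECT COUNT(*) AS n FROM {orders.sql_name()}"
print(query)
```

Keeping the three parts separate, rather than passing one string around, makes it harder to point a query at the wrong dataset by accident.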

This hierarchy keeps data management straightforward. Because every table is addressed through its dataset, users can find, query, and manage related data as a unit, and the same organization continues to work as the number of tables grows.

Data Ingestion and Storage Mechanisms in BigQuery Architecture

BigQuery offers several ways to load data, and the right one depends on how quickly the data must become queryable. Batch loading suits large datasets that can arrive on a schedule, while streaming ingestion delivers rows continuously for near-real-time analysis. Load jobs accept common file formats, including CSV, newline-delimited JSON, Avro, Parquet, and ORC. For example, a real-time dashboard would typically rely on streaming, while historical analysis would typically use batch loads.

Batch loading works well for large amounts of data that don’t require immediate processing. It typically involves uploading files to Cloud Storage, Google Cloud’s object storage service, and then running a load job that copies them into a BigQuery table. Once loaded, the data is held in BigQuery’s compressed columnar storage, which reduces storage space and lets a query read only the columns it references. Streaming ingestion, by contrast, accepts rows continuously, which enables real-time analytics and monitoring. The choice between the two depends on the nature of the data and how fresh the analysis needs to be, and it can affect both the latency and the cost of the pipeline.
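The batch path described above (files in Cloud Storage, then a load into a table) can be expressed as a `LOAD DATA` statement. The sketch below only builds the statement text; it does not contact BigQuery. The helper `build_load_statement`, the table `sales.orders`, and the bucket `example-bucket` are hypothetical, and the helper does no escaping, so it is suitable only for trusted names.

```python
def build_load_statement(table: str, uris: list[str], file_format: str = "PARQUET") -> str:
    """Return a LOAD DATA statement that appends files to a table.

    Sketch only: inputs are interpolated without escaping.
    """
    uri_list = ", ".join(f"'{u}'" for u in uris)
    return (
        f"LOAD DATA INTO `{table}`\n"
        f"FROM FILES (\n"
        f"  format = '{file_format}',\n"
        f"  uris = [{uri_list}]\n"
        f")"
    )


# Hypothetical table and bucket names.
statement = build_load_statement(
    "sales.orders",
    ["gs://example-bucket/orders/2024-01-*.parquet"],
)
print(statement)
```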

Storage plays a central role in query performance. Once data is loaded, BigQuery keeps it in its own compressed columnar format regardless of the format it arrived in, so the source format mainly affects how fast a load job runs and how efficiently files can be queried in place as external data. Formats such as Parquet and Avro compress well and carry their own schema, which generally makes them quicker and less error-prone to load than CSV. Users should choose a format based on the data’s characteristics and on how it will be processed.
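Why a columnar layout helps analytical queries can be seen in a toy comparison. This is a conceptual sketch in plain Python, not a description of BigQuery’s or Parquet’s actual on-disk format; the sample rows and function names are made up for illustration.

```python
# Three sample rows in a row-oriented layout (one record per row).
rows = [
    {"user_id": 1, "country": "DE", "amount": 10.0},
    {"user_id": 2, "country": "US", "amount": 25.5},
    {"user_id": 3, "country": "US", "amount": 4.5},
]

# The same data in a column-oriented layout (one list per column).
columns = {name: [r[name] for r in rows] for name in rows[0]}


def values_read_row_layout(rows):
    # A row store reads every field of every row, even to sum one column.
    return sum(len(r) for r in rows)


def values_read_columnar(columns, wanted):
    # A column store reads only the columns the query references.
    return sum(len(columns[c]) for c in wanted)


total = sum(columns["amount"])
print(total, values_read_row_layout(rows), values_read_columnar(columns, ["amount"]))
```

Summing `amount` touches nine values in the row layout but only three in the columnar one, and the gap widens as tables gain columns.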

Querying and Processing Data in BigQuery

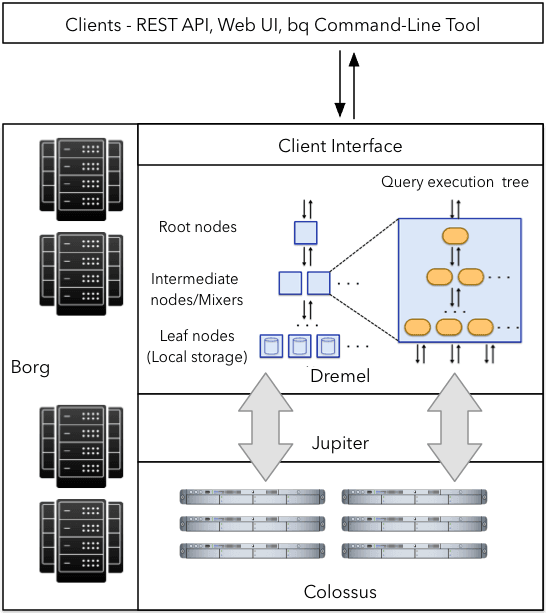

BigQuery is queried with SQL. Its dialect, GoogleSQL, follows the ANSI standard, so users can apply familiar syntax to tasks ranging from simple summarization to advanced statistical modeling. The engine is built to run these queries quickly over very large tables.

BigQuery’s query engine builds an execution plan for each query automatically, taking into account factors such as data distribution and storage layout, and spreads the work across many workers. This is what allows quick responses to complex analytical requests. Users also have DDL (Data Definition Language) statements to create and alter objects such as tables and views, and DML (Data Manipulation Language) statements to insert, update, and delete rows. Two further features speed up repeated work. Query results are cached, so re-running an identical query against unchanged tables can return the stored result instead of recomputing it. Materialized views go a step further: they precompute and store the result of a query, and BigQuery keeps them up to date as the underlying tables change.
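The idea of reusing a stored result for a repeated query can be sketched in a few lines. This is a conceptual model only and makes no claim about how BigQuery implements it: the class `ResultCache`, the integer table versions used to detect changes, and the stand-in `fake_execute` function are all assumptions for illustration.

```python
import hashlib


class ResultCache:
    """Toy cache: reuse a result while the query text and tables are unchanged."""

    def __init__(self):
        self._store = {}
        self.hits = 0

    def _key(self, sql, table_versions):
        # Key on the query text plus a version number per referenced table,
        # so any change to a table produces a different key.
        payload = sql + "|" + repr(sorted(table_versions.items()))
        return hashlib.sha256(payload.encode()).hexdigest()

    def run(self, sql, table_versions, execute):
        key = self._key(sql, table_versions)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        result = execute(sql)
        self._store[key] = result
        return result


calls = []


def fake_execute(sql):
    # Stand-in for real query execution; records that work was done.
    calls.append(sql)
    return [("total", 42)]


cache = ResultCache()
q = "SELECT SUM(amount) AS total FROM sales.orders"
cache.run(q, {"sales.orders": 1}, fake_execute)  # computed
cache.run(q, {"sales.orders": 1}, fake_execute)  # served from the cache
cache.run(q, {"sales.orders": 2}, fake_execute)  # table changed: recomputed
print(len(calls), cache.hits)
```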

These capabilities support a wide range of business intelligence and analysis work. Data analysts, business users, and data scientists can use them for trend analysis, forecasting, and pattern detection in large datasets, which makes BigQuery a practical foundation for business decision-making.

BigQuery’s Scalability and Performance

BigQuery is designed to scale to very large datasets and high query volumes, and its serverless model is the main reason. Users don’t provision or manage servers; BigQuery allocates compute to each query based on demand and adapts as the workload fluctuates, which contributes to both performance and cost-effectiveness. There are no cluster types to choose. Instead, compute capacity is measured in slots, which can be consumed on demand or reserved in advance, and the number of slots available to a query influences how quickly it completes.

Several factors influence query performance. The first is the amount of data scanned: the more a query must read, the more processing it needs. The second is query complexity, since queries with many joins, aggregations, or subqueries take longer than simple ones. BigQuery’s optimizer and distributed execution reduce the impact of both, and table design helps further. Partitioning in particular lets a query that filters on the partitioning column read only the matching partitions rather than the whole table.
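The effect of partitioning on how much data a query touches can be modeled with a dictionary keyed by date. This is a simplified in-memory sketch, not BigQuery’s storage engine; the sample events and the `scan` helper are invented for illustration.

```python
from collections import defaultdict
from datetime import date

# Sample page-view events: (event date, page).
events = [
    (date(2024, 1, 1), "home"),
    (date(2024, 1, 1), "cart"),
    (date(2024, 1, 2), "home"),
    (date(2024, 1, 3), "checkout"),
]

# Group rows into one partition per day.
partitions = defaultdict(list)
for day, page in events:
    partitions[day].append(page)


def scan(partitions, start, end):
    """Return the matching rows and the number of partitions that were read."""
    touched = [d for d in sorted(partitions) if start <= d <= end]
    rows = [(d, p) for d in touched for p in partitions[d]]
    return rows, len(touched)


rows, touched = scan(partitions, date(2024, 1, 2), date(2024, 1, 3))
print(f"read {touched} of {len(partitions)} partitions, {len(rows)} rows")
```

A filter on the date range reads two of the three partitions here; the January 1 rows are never touched.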

This combination of scalability and performance matters for many business needs, from analyzing website traffic to understanding customer behavior. Teams can process data at scale with confidence and reach insights and decisions sooner, which is a competitive advantage in data-driven environments.

Security and Access Control in BigQuery Architecture

Robust security is paramount in any data platform, and access control is its foundation. In BigQuery, access is managed through Identity and Access Management (IAM): administrators grant roles, each a bundle of permissions, to users, groups, or service accounts. Roles can be granted at different levels of the resource hierarchy, such as a project, a dataset, or an individual table, so each principal receives only the access it needs. This granular control supports data governance and prevents unauthorized access.
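Granting a role on a specific resource can also be written as a SQL `GRANT` statement. The sketch below only assembles the statement text, under the assumption that dataset-level grants use the `SCHEMA` keyword; the helper `build_grant`, the table `sales.orders`, and the email address are hypothetical, while `roles/bigquery.dataViewer` is a predefined read-only role.

```python
def build_grant(role: str, resource_type: str, resource: str, members: list[str]) -> str:
    """Return a GRANT statement giving `role` on one resource to some principals.

    Sketch only: inputs are interpolated without escaping.
    """
    if resource_type not in {"SCHEMA", "TABLE", "VIEW"}:
        raise ValueError(f"unsupported resource type: {resource_type}")
    member_list = ", ".join(f'"{m}"' for m in members)
    return f"GRANT `{role}` ON {resource_type} {resource} TO {member_list}"


# Hypothetical table and principal.
grant = build_grant(
    "roles/bigquery.dataViewer",
    "TABLE",
    "sales.orders",
    ["user:analyst@example.com"],
)
print(grant)
```

Granting at the table level rather than the project level follows the least-privilege idea: the analyst can read one table and nothing else.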

Encryption is the second layer. BigQuery encrypts data at rest and in transit by default, which protects confidentiality during both storage and transmission. Encryption also helps organizations meet the requirements of regulations such as GDPR and HIPAA, although compliance depends on more than encryption alone. Where stricter control is required, encryption policies and data loss prevention policies should be defined as part of the design.

Other practices round out a secure design. Data masking hides sensitive values from users who don’t need them, and access logs record who read or changed data. Monitoring those logs and running regular security audits helps identify and address vulnerabilities proactively. Security measures should apply across the whole data lifecycle to prevent and mitigate risks.

BigQuery Integration with Other Google Cloud Services

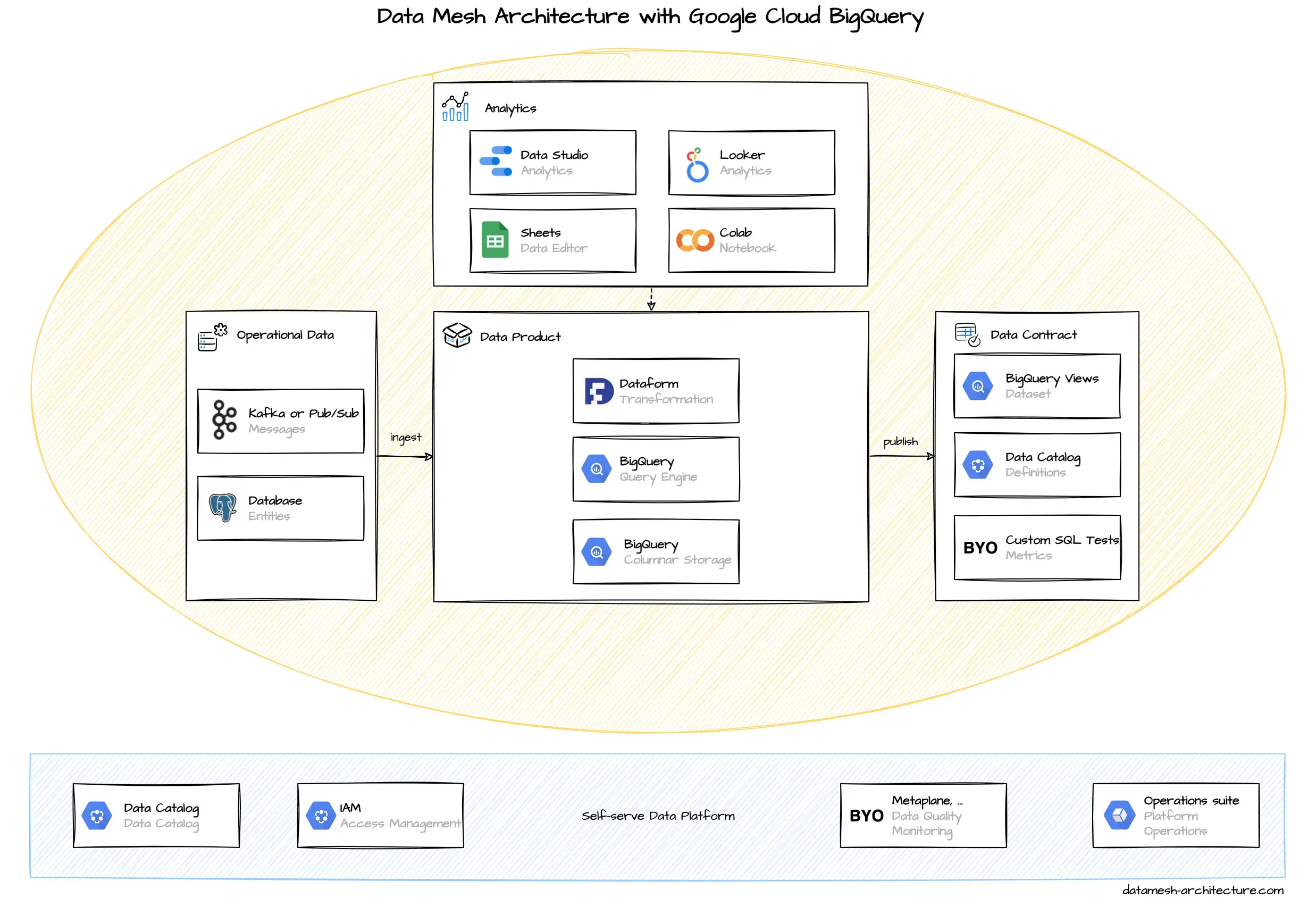

BigQuery integrates with many other Google Cloud Platform (GCP) services, which simplifies data pipelines: data can move between BigQuery and those services with little custom integration work, and each service can handle the stage it is best suited to. This flexibility is especially useful in enterprise settings.

One key integration is with Cloud Storage. Through external tables, BigQuery can query files that reside in Cloud Storage without loading them first, which removes a manual transfer step. Another is Dataproc, Google Cloud’s managed service for Apache Spark and Hadoop. Spark jobs running on Dataproc can read from and write to BigQuery, so users can apply complex transformations that are awkward to express in SQL.

Furthermore, Cloud Functions can automate processing in response to events. For example, when a new file arrives in Cloud Storage, a function can start a job that loads it into BigQuery. This automation streamlines the pipeline and reduces manual intervention.
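The event-driven step can be sketched as a pure function that turns a storage event into a description of the load to run. It assumes the event carries `bucket` and `name` fields, as Cloud Storage object events do; the function `load_request_from_event`, the convention that the first path segment names the target table, and the returned dictionary shape are all hypothetical. Starting the actual load job is left out.

```python
from typing import Optional


def load_request_from_event(event: dict, dataset: str) -> Optional[dict]:
    """Map a 'new object' storage event to a load request, or None to skip it."""
    bucket, name = event["bucket"], event["name"]
    # Only handle Parquet files stored under a folder.
    if not name.endswith(".parquet") or "/" not in name:
        return None
    # Hypothetical convention: the first path segment is the target table.
    table = name.split("/", 1)[0]
    return {
        "source_uri": f"gs://{bucket}/{name}",
        "destination": f"{dataset}.{table}",
        "source_format": "PARQUET",
    }


# Hypothetical event payload.
request = load_request_from_event(
    {"bucket": "example-bucket", "name": "orders/2024-01-01.parquet"},
    dataset="sales",
)
print(request)
```

Keeping this mapping free of side effects makes it easy to test without any cloud resources; the function that actually submits the job can stay thin.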

Best Practices for BigQuery Architecture Design

Effective BigQuery architecture design is crucial for building scalable and maintainable systems. The key levers are table design, partitioning strategy, and data modeling, all of which affect query performance.

Partitioning divides a large table into smaller segments based on a column, most commonly a date or timestamp, or on an integer range. A criterion such as geographic region has to be mapped to one of these, for example as an integer region code. Queries that filter on the partitioning column read only the relevant partitions, which improves performance. When designing tables, consider expected data growth and likely query patterns up front to minimize future bottlenecks. Choose appropriate column data types for storage efficiency and data integrity. Data modeling matters as well: normalization reduces redundancy, while denormalization trades some redundancy for fewer joins, which often suits analytical queries better.
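Partitioning is declared when a table is created, and it is often combined with clustering, which sorts the data within the table by a few chosen columns. The sketch below assembles such a `CREATE TABLE` statement as text; the helper `build_create_table` and the table and column names are hypothetical, and the four-column limit reflects BigQuery’s cap on clustering columns.

```python
def build_create_table(table: str, columns: dict, partition_by: str, cluster_by=()) -> str:
    """Return DDL for a partitioned, optionally clustered, table (sketch only)."""
    if len(cluster_by) > 4:
        raise ValueError("at most four clustering columns are allowed")
    cols = ",\n".join(f"  {name} {type_}" for name, type_ in columns.items())
    ddl = f"CREATE TABLE `{table}` (\n{cols}\n)\nPARTITION BY {partition_by}"
    if cluster_by:
        ddl += "\nCLUSTER BY " + ", ".join(cluster_by)
    return ddl


# Hypothetical schema for a page-view table.
ddl = build_create_table(
    "web.page_views",
    {"event_date": "DATE", "customer_id": "STRING", "page": "STRING"},
    partition_by="event_date",
    cluster_by=["customer_id", "page"],
)
print(ddl)
```

Partitioning by `event_date` serves date-range filters, while clustering by `customer_id` helps queries that look up a single customer within those dates.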

BigQuery does not rely on the traditional indexes found in transactional databases, so composite indexes are not the tool for speeding up joins and filters. The closest equivalent is clustering, which sorts a table’s data by up to four chosen columns so that queries filtering or joining on those columns scan less data. Monitor and analyze query performance regularly, and address bottlenecks promptly. Robust error handling and logging in the pipelines that feed BigQuery help maintain data integrity and make debugging easier. Finally, test the architecture and its components thoroughly before deployment to prevent unexpected issues.

A Practical Example of a BigQuery Architecture

Consider a retail company that needs to analyze website traffic and customer behavior and that runs its infrastructure on Google Cloud Platform (GCP). The first step is ingesting website traffic data from sources such as log files and web analytics platforms into BigQuery. The raw data is then cleaned and transformed, and a dedicated team of data engineers ensures it is accurate and suitable for analysis.

Next, the company creates datasets within BigQuery that group related tables: one table for website traffic, another for customer demographics, and one for sales records. The design follows the business questions. For example, the website traffic table is partitioned by date so that daily or weekly trends can be retrieved quickly. Source files are delivered in formats such as Parquet or Avro, which compress well and load efficiently. Queries then identify trends such as top-performing product categories or common user journeys. Materialized views precompute frequently used aggregates so that complex reports can read them quickly.
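A materialized view for the product-category report in this scenario might look like the following. This is a sketch that only builds the DDL text; the helper `build_materialized_view`, the dataset `retail`, and the column names `order_date`, `category`, and `amount` are assumptions, since the scenario does not specify a schema.

```python
def build_materialized_view(view: str, source: str, group_by: list[str], aggregate: str) -> str:
    """Return DDL for a materialized view that pre-aggregates a table (sketch only)."""
    keys = ", ".join(group_by)
    return (
        f"CREATE MATERIALIZED VIEW `{view}` AS\n"
        f"SELECT {keys}, {aggregate}\n"
        f"FROM `{source}`\n"
        f"GROUP BY {keys}"
    )


# Hypothetical names for the retail scenario.
mv_ddl = build_materialized_view(
    view="retail.daily_category_revenue",
    source="retail.sales",
    group_by=["order_date", "category"],
    aggregate="SUM(amount) AS revenue",
)
print(mv_ddl)
```

Dashboards can then read the small pre-aggregated view instead of scanning the full sales table on every refresh.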

Finally, the company uses BigQuery’s integration with other GCP services. Business analysts view the processed data through dashboards built with tools like Looker. Because the pipeline is automated, insights into customer behavior and website traffic reach decision-makers in near real time, helping them understand trends, identify opportunities, and make informed decisions. This illustrates how a well-designed BigQuery architecture supports data-driven decision-making in a real-world retail scenario.