Understanding Azure ETL Tools

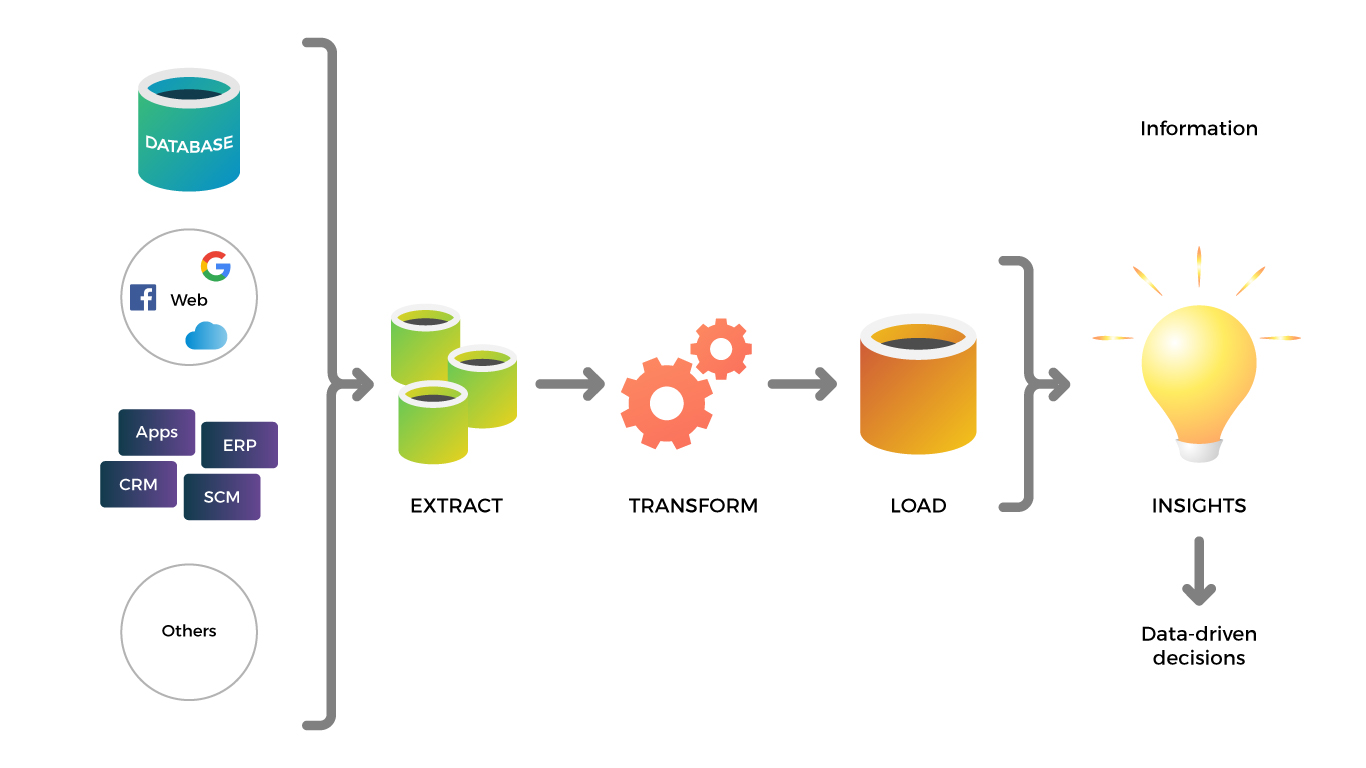

ETL (extract, transform, load) is the process of pulling data from source systems, cleaning and reshaping it, and writing it into a target store such as a data warehouse, where it can be analyzed to support decision-making. Azure ETL tools are cloud-based services that run these processes within the Microsoft Azure ecosystem. They typically offer cost-effectiveness, elastic scalability, and seamless integration with other Azure services.
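To make the three stages concrete, here is a minimal sketch in plain Python rather than an Azure service. It extracts rows from a small CSV string, transforms them by dropping incomplete records and normalizing values, and loads them into an in-memory SQLite table. The sample data, table name, and function names are hypothetical, and SQLite stands in for a cloud target such as a data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (the extract input).
RAW_CSV = """order_id,customer,amount,currency
1,alice,19.99,usd
2,bob,5.00,USD
3,carol,,usd
"""

def extract(text: str) -> list[dict]:
    """Extract: parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop rows with no amount, then normalize types and currency codes."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((
            int(row["order_id"]),
            row["customer"].title(),
            round(float(row["amount"]), 2),
            row["currency"].upper(),
        ))
    return cleaned

def load(rows: list[tuple], conn: sqlite3.Connection) -> int:
    """Load: write cleaned rows into the target table and return its row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse
    print(load(transform(extract(RAW_CSV)), conn))  # 2
```

Keeping each stage in its own function mirrors how ETL tools separate sources, transformations, and sinks, so each stage can be tested on its own.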

Choosing the right Azure ETL tool has a direct effect on performance, workflow efficiency, and cost. By evaluating factors such as ease of use, scalability, security, and compatibility with existing systems, organizations can select a tool that fits their ETL process and get the most out of their data assets.

Key Features to Consider in Azure ETL Tools

When evaluating Azure ETL tools, consider the following features, each of which affects how well a tool supports data integration and transformation:

- Ease of use: An intuitive user interface and drag-and-drop functionality can significantly reduce the learning curve and enable faster deployment.

- Scalability: The ability to handle increasing data volumes and complex transformations without compromising performance is essential for future-proofing your data infrastructure.

- Security: Robust security measures, such as data encryption, access controls, and auditing, help protect sensitive information and ensure regulatory compliance.

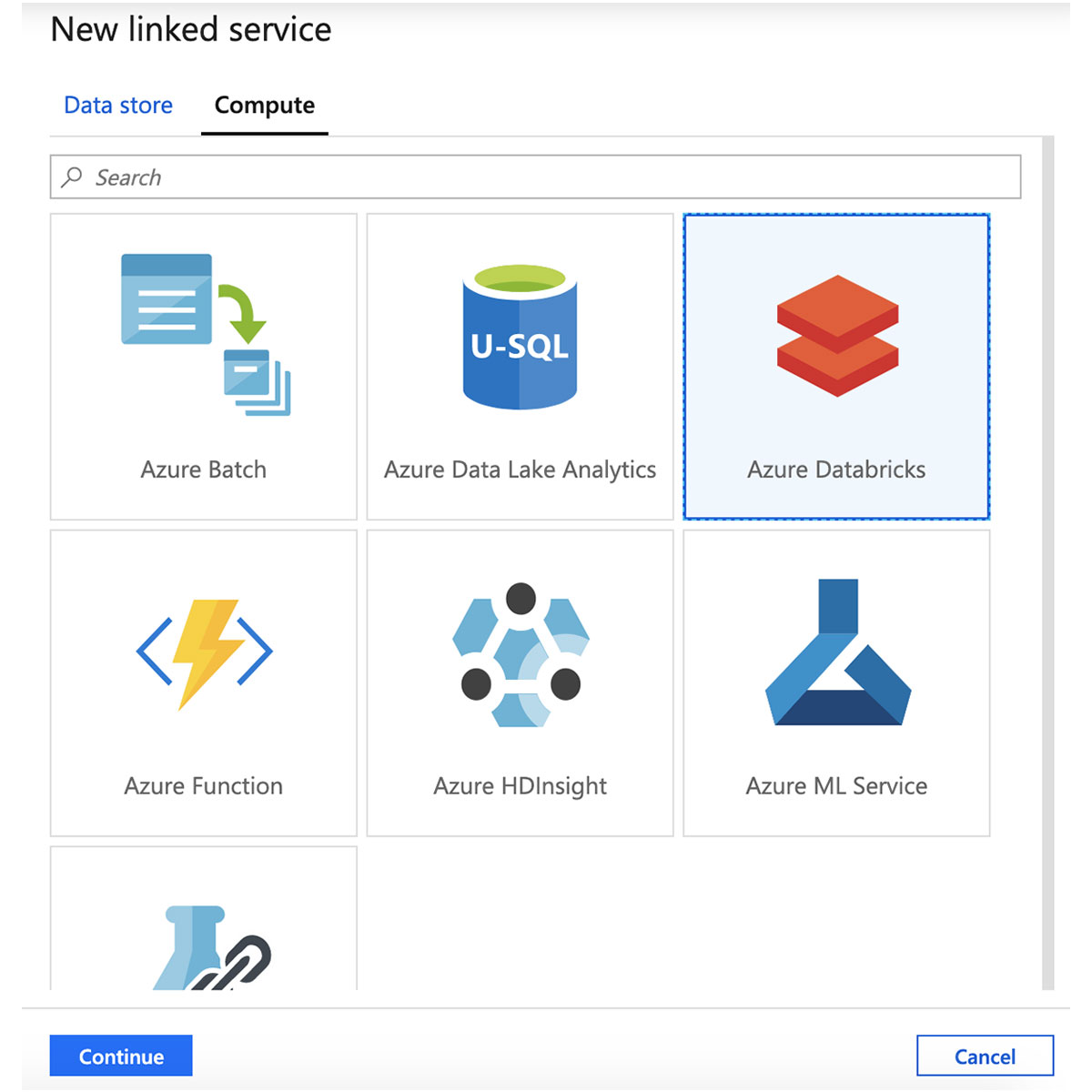

- Integration with other Azure services: Seamless integration with complementary Azure tools, such as Azure Data Lake Storage, Azure Synapse Analytics, and Azure Databricks, can streamline workflows and enhance functionality.

By prioritizing these features, organizations can choose an Azure ETL tool that meets their current needs and can grow with them, keeping data integration efficient and costs under control.

Top Azure ETL Tools in the Market

Several popular Azure ETL solutions cater to different business needs and data requirements. Here is a brief overview of three of them, covering their features, strengths, and use cases:

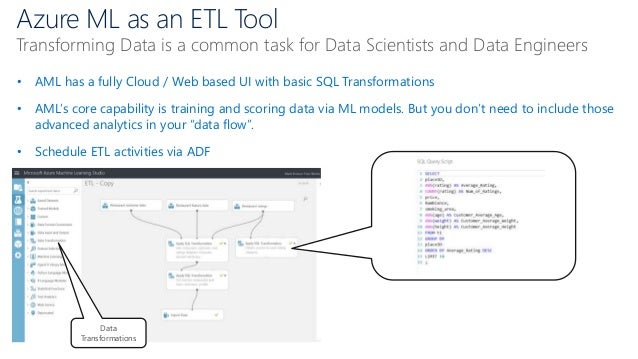

Azure Data Factory

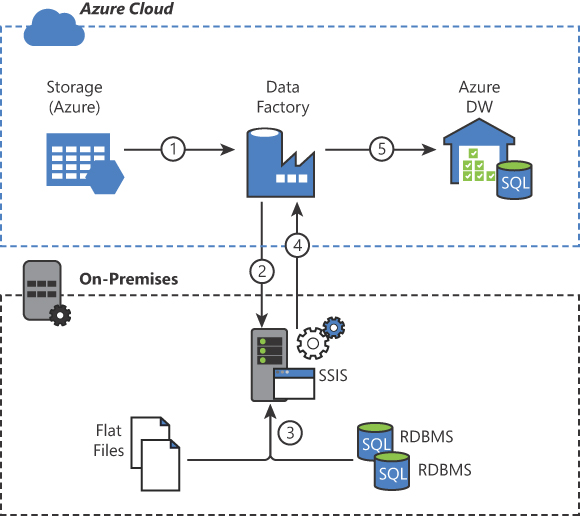

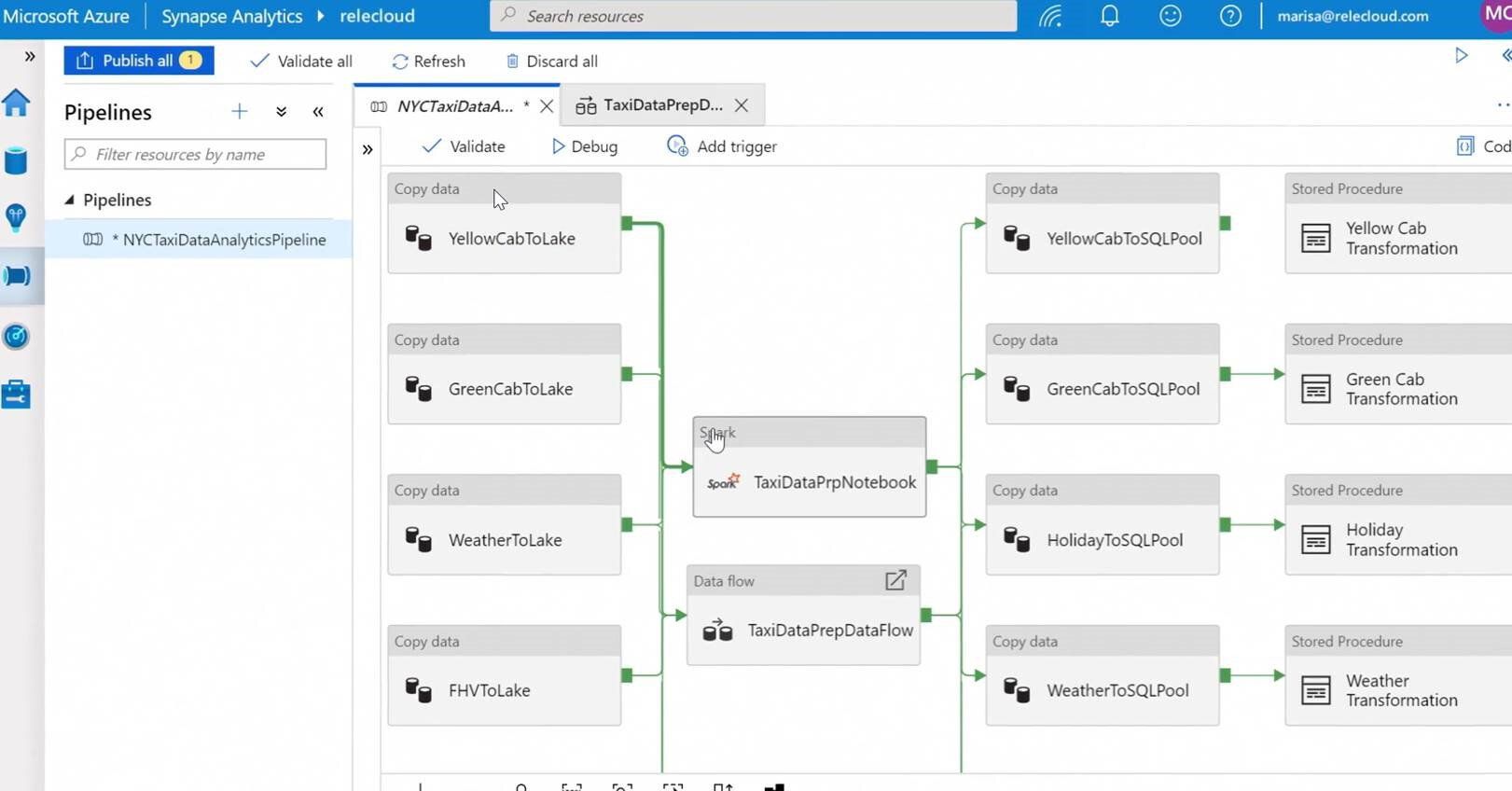

Azure Data Factory (ADF) is a fully managed, serverless data integration service that allows you to create, schedule, and manage your ETL workflows. ADF supports various data stores, including on-premises, cloud, and SaaS applications. Its visual interface, pre-built connectors, and support for custom activities make it a versatile choice for data integration and transformation tasks.
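ADF stores pipelines and triggers as JSON documents. The sketch below builds a simplified pipeline with one Copy activity (including a retry policy) and a daily schedule trigger as Python dictionaries, then serializes them. All names (`CopySalesToSql`, `CopyCsvToSql`, `SalesCsvDataset`, `SalesSqlDataset`, `DailyAt2am`) and the start date are hypothetical, and the property set is deliberately minimal; treat it as an outline to check against the ADF JSON reference, not a complete definition.

```python
import json

# Hypothetical names: replace with datasets and pipelines from your own factory.
PIPELINE_NAME = "CopySalesToSql"

# Pipeline with a single Copy activity: read delimited text, write to Azure SQL.
pipeline = {
    "name": PIPELINE_NAME,
    "properties": {
        "activities": [
            {
                "name": "CopyCsvToSql",
                "type": "Copy",
                "inputs": [{"referenceName": "SalesCsvDataset", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "SalesSqlDataset", "type": "DatasetReference"}],
                # Retry the activity up to 2 times, 60 seconds apart, before failing.
                "policy": {"retry": 2, "retryIntervalInSeconds": 60},
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                },
            }
        ]
    },
}

# Schedule trigger that starts the pipeline once a day at 02:00 UTC.
trigger = {
    "name": "DailyAt2am",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2024-01-01T02:00:00Z",
                "timeZone": "UTC",
            }
        },
        "pipelines": [
            {"pipelineReference": {"type": "PipelineReference", "referenceName": PIPELINE_NAME}}
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(pipeline, indent=2))
    print(json.dumps(trigger, indent=2))
```

The trigger refers to the pipeline by name, so scheduling is kept separate from the pipeline logic and one pipeline can be attached to several triggers.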

Talend

Talend, which began as an open-source data integration platform, supports ETL on Azure through its cloud-based offering, Talend Cloud. It provides a unified, graphical environment for building, testing, and deploying data pipelines. Talend Cloud's key features include data mapping, data quality, and data governance, making it a strong choice for organizations seeking a comprehensive data integration solution.

Informatica Intelligent Cloud Services

Informatica Intelligent Cloud Services (IICS) is a cloud-native, multi-tenant data management platform designed for hybrid data integration and big data management. IICS offers a wide range of data integration and transformation capabilities, including data quality, master data management, and data governance. Its AI-driven features, such as automated data mapping and recommendation-based transformations, make it a powerful choice for complex ETL processes.

These are only a few of the Azure ETL tools on the market. Because each has different features and strengths, base your choice on your organization's specific needs and requirements.

How to Choose the Right Azure ETL Tool

Selecting the most suitable Azure ETL tool involves weighing several factors so that the tool aligns with your business needs, technical capabilities, and budget:

- Data volume, variety, and velocity: Assess your data requirements, including the volume of data, the variety of data sources, and the speed at which data is generated and needs to be processed. Different Azure ETL tools excel in different scenarios, so choose one that can handle your specific data needs.

- Technical capabilities: Evaluate your team’s expertise and familiarity with data integration and transformation tools. Some Azure ETL tools may require more advanced skills, while others offer user-friendly interfaces and drag-and-drop functionality. Select a tool that matches your team’s capabilities and is easy for them to learn and use.

- Budget: Consider the cost of each Azure ETL tool, including licensing fees, maintenance costs, and any additional resources required for implementation and management. Choose a tool that offers the best balance between features and affordability for your organization.

- Integration with existing systems: Ensure that the chosen Azure ETL tool integrates seamlessly with your existing data infrastructure and other Azure services. A well-integrated tool can streamline workflows, improve efficiency, and reduce costs.
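One way to apply these factors consistently is a weighted scoring matrix: rate each candidate tool on every factor, weight the factors by importance, and rank by the weighted sum. The sketch below assumes a 1 to 5 rating scale plus hypothetical weights and ratings for two unnamed tools; the numbers are placeholders, not assessments of real products.

```python
from dataclasses import dataclass

# Hypothetical weights reflecting how much each factor matters (they sum to 1.0).
WEIGHTS = {
    "data_requirements": 0.35,  # volume, variety, velocity
    "team_skills": 0.25,
    "budget": 0.20,
    "integration": 0.20,
}

@dataclass
class Candidate:
    name: str
    ratings: dict[str, float]  # each factor rated from 1 (poor) to 5 (excellent)

def weighted_score(candidate: Candidate, weights: dict[str, float]) -> float:
    """Combine per-factor ratings into a single weighted score."""
    missing = weights.keys() - candidate.ratings.keys()
    if missing:
        raise ValueError(f"{candidate.name} has no rating for {sorted(missing)}")
    return sum(weight * candidate.ratings[factor] for factor, weight in weights.items())

def rank(candidates: list[Candidate], weights: dict[str, float]) -> list[Candidate]:
    """Order candidates from highest to lowest weighted score."""
    return sorted(candidates, key=lambda c: weighted_score(c, weights), reverse=True)

# Placeholder ratings for two unnamed tools; substitute your own evaluation.
candidates = [
    Candidate("Tool B", {"data_requirements": 5, "team_skills": 3, "budget": 4, "integration": 3}),
    Candidate("Tool A", {"data_requirements": 4, "team_skills": 5, "budget": 3, "integration": 5}),
]

if __name__ == "__main__":
    for c in rank(candidates, WEIGHTS):
        print(f"{c.name}: {weighted_score(c, WEIGHTS):.2f}")
```

With these placeholder numbers, Tool A ranks first because its higher team-skills and integration ratings outweigh its slightly lower data-requirements rating. Raising a missing rating as an error, instead of silently treating it as zero, keeps an incomplete evaluation from skewing the ranking.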

By carefully considering these factors, you can choose the right Azure ETL tool for your organization and optimize your data integration and transformation processes.

Implementing Azure ETL Tools in Your Data Pipeline

Implementing an Azure ETL tool in your data pipeline involves several steps, from data source identification to data transformation and loading. Here is a general outline of the process, along with best practices and potential challenges:

- Identify data sources: Determine the various data sources you need to integrate and transform, such as databases, files, or SaaS applications. Ensure that you have the necessary permissions and credentials to access these data sources.

- Connect to data sources: Use the Azure ETL tool’s connectors or APIs to establish connections with your data sources. Ensure that the connections are secure and stable, and test them regularly to avoid disruptions.

- Design data transformations: Define the data transformation rules and logic using the Azure ETL tool’s visual interface or scripting language. Test the transformations on a small sample of data to ensure accuracy and performance.

- Schedule and automate data pipelines: Set up schedules for data extraction, transformation, and loading, and automate the process using the Azure ETL tool’s built-in features or third-party tools. Monitor the data pipelines regularly to ensure they run smoothly and on time.

- Monitor and maintain the Azure ETL tool: Implement monitoring techniques such as logging, alerting, and reporting to track the tool’s performance and identify potential issues. Perform regular maintenance tasks, such as updating the tool, optimizing performance, and addressing any issues that arise.
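The advice to test transformations on a small sample can be automated. This sketch runs a transformation on a reproducible random sample, counts rows that fail an accuracy check, and measures throughput. The transformation (`normalize_email`), the check (`is_normalized`), the source rows, and the sample size are hypothetical; in practice you would plug in your own transformation logic and checks.

```python
import random
import time

def normalize_email(row: dict) -> dict:
    """Hypothetical transformation under test: trim and lowercase the email field."""
    return {**row, "email": row["email"].strip().lower()}

def is_normalized(row: dict) -> bool:
    """Accuracy check: email has no surrounding spaces, is lowercase, and contains '@'."""
    email = row["email"]
    return email == email.strip().lower() and "@" in email

def evaluate_on_sample(rows, transform, check, sample_size=100, seed=42):
    """Run `transform` on a reproducible random sample and report failures and throughput."""
    rng = random.Random(seed)  # fixed seed so the same sample is drawn on every run
    sample = rng.sample(rows, min(sample_size, len(rows)))
    start = time.perf_counter()
    output = [transform(row) for row in sample]
    elapsed = time.perf_counter() - start
    failures = sum(1 for row in output if not check(row))
    return {
        "rows": len(output),
        "failures": failures,
        "rows_per_second": len(output) / elapsed if elapsed > 0 else float("inf"),
    }

# Hypothetical source rows with messy email values.
source_rows = [{"id": i, "email": f"  User{i}@Example.COM "} for i in range(1000)]

if __name__ == "__main__":
    print(evaluate_on_sample(source_rows, normalize_email, is_normalized))
```

A fixed seed makes failures reproducible: if a check fails, the same rows are drawn again when you rerun the test after fixing the transformation.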

Potential challenges in implementing Azure ETL tools include data quality issues, complex transformation rules, and integration with legacy systems. Addressing these challenges early on and involving cross-functional teams in the implementation process can help ensure a successful deployment.
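Data quality issues are often handled with a validation gate that separates clean rows from rows needing review, instead of failing the whole load. The sketch below checks hypothetical order records for missing fields, negative or non-numeric amounts, and invalid dates, and quarantines failing rows with the reasons attached. The field names and rules are assumptions for illustration.

```python
from datetime import date

# Hypothetical required fields for an order record.
REQUIRED_FIELDS = ("customer_id", "amount", "order_date")

def validate(row: dict) -> list[str]:
    """Return the data-quality problems found in one row (an empty list means clean)."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS if row.get(field) in (None, "")]
    amount = row.get("amount")
    if amount not in (None, ""):
        try:
            if float(amount) < 0:
                problems.append("negative amount")
        except ValueError:
            problems.append("non-numeric amount")
    order_date = row.get("order_date")
    if order_date:
        try:
            date.fromisoformat(order_date)
        except ValueError:
            problems.append("invalid order_date")
    return problems

def split_valid(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Separate clean rows from rows to quarantine, attaching the reasons to each rejected row."""
    valid, quarantined = [], []
    for row in rows:
        problems = validate(row)
        if problems:
            quarantined.append({**row, "_problems": problems})
        else:
            valid.append(row)
    return valid, quarantined

orders = [
    {"customer_id": "C1", "amount": "10.50", "order_date": "2024-03-01"},
    {"customer_id": "", "amount": "-3", "order_date": "2024-03-01"},
    {"customer_id": "C3", "amount": "abc", "order_date": "2024-02-30"},
]

if __name__ == "__main__":
    valid, quarantined = split_valid(orders)
    print(len(valid), "valid;", len(quarantined), "quarantined")
    for row in quarantined:
        print(row["_problems"])
```

Keeping the reasons with each quarantined row lets the team that owns the source system fix the root cause instead of re-diagnosing every rejected record.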

Optimizing Azure ETL Tool Performance

Optimizing the performance of your Azure ETL tool keeps data integration and transformation efficient. Strategies such as data partitioning, parallel processing, and caching can shorten run times and reduce compute costs. Here's how:

- Data partitioning: Splitting large datasets into smaller, more manageable chunks can improve performance and reduce processing time. Horizontal partitioning splits the rows into subsets (for example, by date or region) that can be processed or stored on separate nodes, while vertical partitioning splits the columns into separate groups so that queries read only the columns they need.

- Parallel processing: Dividing the workload into smaller tasks that run concurrently can speed up data transformation. Processing multiple partitions or data streams simultaneously can significantly reduce processing time, provided the tasks do not depend on one another and the target system can absorb the concurrent load.

- Caching: Implementing caching mechanisms can help improve performance by storing frequently accessed data in memory, reducing the need for repeated database queries. Caching can also help reduce costs by minimizing the amount of data transferred between storage and processing units.
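The three techniques can be combined in a short sketch: rows are horizontally partitioned by region, the partitions are processed concurrently, and a reference-data lookup is cached with `functools.lru_cache`. The field names, the `fx_rate` lookup, and its exchange rates are hypothetical placeholders. Threads are used because the lookup stands in for an I/O-bound call to a remote service; CPU-heavy transformations in Python would usually use `ProcessPoolExecutor` instead.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache

def partition_by(rows: list[dict], key: str) -> dict[str, list[dict]]:
    """Horizontal partitioning: split rows into subsets that share the same key value."""
    parts = defaultdict(list)
    for row in rows:
        parts[row[key]].append(row)
    return dict(parts)

@lru_cache(maxsize=1024)
def fx_rate(currency: str) -> float:
    """Hypothetical reference-data lookup, cached so each currency is resolved once."""
    placeholder_rates = {"USD": 1.0, "EUR": 1.1, "GBP": 1.25}  # illustrative, not real rates
    return placeholder_rates[currency]

def total_in_usd(rows: list[dict]) -> float:
    """Transform one partition: convert amounts to USD and sum them."""
    return sum(row["amount"] * fx_rate(row["currency"]) for row in rows)

def process_in_parallel(rows: list[dict], key: str = "region", workers: int = 4) -> dict[str, float]:
    """Partition the rows, then transform all partitions concurrently."""
    parts = partition_by(rows, key)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(total_in_usd, parts.values())
        return dict(zip(parts.keys(), results))

sales = [
    {"region": "east", "amount": 10.0, "currency": "USD"},
    {"region": "east", "amount": 10.0, "currency": "EUR"},
    {"region": "west", "amount": 4.0, "currency": "GBP"},
]

if __name__ == "__main__":
    print(process_in_parallel(sales))
    print(fx_rate.cache_info())  # hits and misses show how often the cache avoided a lookup
```

Because partitions are independent, each can be transformed without coordinating with the others, which is what makes the parallel step safe here.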

By implementing these strategies, you can optimize the performance of your Azure ETL tool and ensure that your data integration and transformation processes run smoothly and efficiently. Regularly monitoring and maintaining your tool can also help identify potential performance issues and ensure long-term effectiveness.

Monitoring and Maintaining Azure ETL Tools

Monitoring and maintaining Azure ETL tools is crucial for ensuring their long-term effectiveness and efficiency. By implementing various monitoring techniques and following best practices for regular maintenance and updates, you can identify potential issues early on and keep your tool running smoothly. Here’s how:

- Logging: Implementing logging mechanisms can help track the performance and behavior of your Azure ETL tool. By logging events, errors, and warnings, you can identify potential issues and take corrective action before they become critical.

- Alerting: Alerts notify you of potential issues or performance degradation in real time. By configuring alerts based on specific thresholds or conditions, you can address issues proactively and minimize downtime.

- Reporting: Generating reports on tool performance, usage, and other key metrics can help you identify trends, patterns, and areas for improvement. By regularly reviewing these reports, you can make data-driven decisions and optimize your tool’s performance over time.
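A minimal sketch of logging combined with threshold-based alerting, using Python's standard `logging` module. The run metrics, metric names, and thresholds are hypothetical. In Azure Data Factory, comparable alerts are usually configured on pipeline metrics through Azure Monitor rather than in custom code; the warning log line below marks where a real notifier would be called.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(name)s: %(message)s")
log = logging.getLogger("etl.monitor")

# Hypothetical alert thresholds; tune them to your own pipelines.
THRESHOLDS = {"duration_seconds": 900, "failed_rows": 0}

def check_run(run: dict, thresholds: dict = THRESHOLDS) -> list[str]:
    """Log one pipeline run's metrics and return an alert message for each breached threshold."""
    log.info("run %s finished: %s", run["run_id"], run)
    alerts = [
        f"{metric}={run[metric]} exceeds {limit}"
        for metric, limit in thresholds.items()
        if run.get(metric, 0) > limit
    ]
    for message in alerts:
        # A real deployment would forward this to email, chat, or an incident tool.
        log.warning("ALERT for run %s: %s", run["run_id"], message)
    return alerts

if __name__ == "__main__":
    check_run({"run_id": "r1", "duration_seconds": 120, "failed_rows": 0})
    check_run({"run_id": "r2", "duration_seconds": 1200, "failed_rows": 3})
```

Logging every run, not just failing ones, gives the reporting step the history it needs to spot trends such as steadily increasing run times.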

Best practices for regular maintenance and updates include:

- Regularly reviewing and updating your tool’s configurations and settings

- Applying patches and updates promptly, especially security fixes

- Testing and validating updates in a staging environment before deploying to production

- Implementing a backup and recovery plan to protect against data loss or corruption

- Monitoring for security vulnerabilities and addressing them promptly
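For the backup and recovery item, the sketch below uses SQLite's online backup API (`sqlite3.Connection.backup`) as a stand-in for the target data store. It takes a backup, simulates a faulty load that deletes committed data, and restores the last good state. The table and file names are hypothetical; managed Azure stores such as Azure SQL Database provide their own automated backup and point-in-time restore, which you would use instead.

```python
import os
import sqlite3
import tempfile

def backup_to(source: sqlite3.Connection, path: str) -> None:
    """Copy the full contents of `source` into a database file at `path`."""
    target = sqlite3.connect(path)
    try:
        source.backup(target)
    finally:
        target.close()

def restore_from(path: str, target: sqlite3.Connection) -> None:
    """Overwrite `target` with the contents of the backup file at `path`."""
    source = sqlite3.connect(path)
    try:
        source.backup(target)
    finally:
        source.close()

def demo() -> tuple[int, int]:
    """Back up, simulate a faulty load that deletes data, then restore; return row counts."""
    conn = sqlite3.connect(":memory:")  # stand-in for the ETL target store
    conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 19.99), (2, 5.0)])
    conn.commit()

    def count() -> int:
        return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "orders-backup.db")
        backup_to(conn, path)               # take a backup before the risky change
        conn.execute("DELETE FROM orders")  # simulate a faulty load that removes data
        conn.commit()
        after_failure = count()
        restore_from(path, conn)            # recover the last good state
        after_restore = count()
    conn.close()
    return after_failure, after_restore

if __name__ == "__main__":
    print(demo())  # (0, 2)
```

A transaction rollback only protects against a load that fails midway; a backup also covers changes that were committed and only later found to be wrong, as the simulated deletion shows.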

By following these best practices and implementing monitoring techniques, you can ensure the long-term effectiveness and efficiency of your Azure ETL tool, reducing costs and minimizing downtime.

Real-World Success Stories: Azure ETL Tools in Action

Azure ETL tools have been successfully implemented across various industries, enabling organizations to streamline their data integration and transformation processes. Here are some examples of real-world success stories:

Healthcare

A healthcare provider implemented an Azure ETL tool to integrate and transform data from various sources, such as electronic health records, medical devices, and claims data. By automating the data integration and transformation process, the provider was able to reduce manual errors, improve data accuracy, and enhance patient care.

Retail

A retail company used an Azure ETL tool to consolidate and transform data from multiple sources, such as point-of-sale systems, e-commerce platforms, and inventory management systems. By optimizing their data integration and transformation process, the retailer was able to gain real-time insights into their inventory levels, sales trends, and customer behavior, improving their decision-making and driving revenue growth.

Finance

A financial institution implemented an Azure ETL tool to automate the data integration and transformation process from various sources, such as trading platforms, risk management systems, and regulatory databases. By optimizing their data integration and transformation process, the financial institution was able to reduce costs, minimize downtime, and improve compliance with regulatory requirements.

These success stories demonstrate the value of using Azure ETL tools for data integration and transformation in cloud-based environments. By choosing the right tool, implementing best practices, and regularly monitoring and maintaining the tool, organizations can optimize their data integration and transformation processes, reduce costs, and improve decision-making.