Introducing Databricks on Azure: Your Unified Analytics Platform

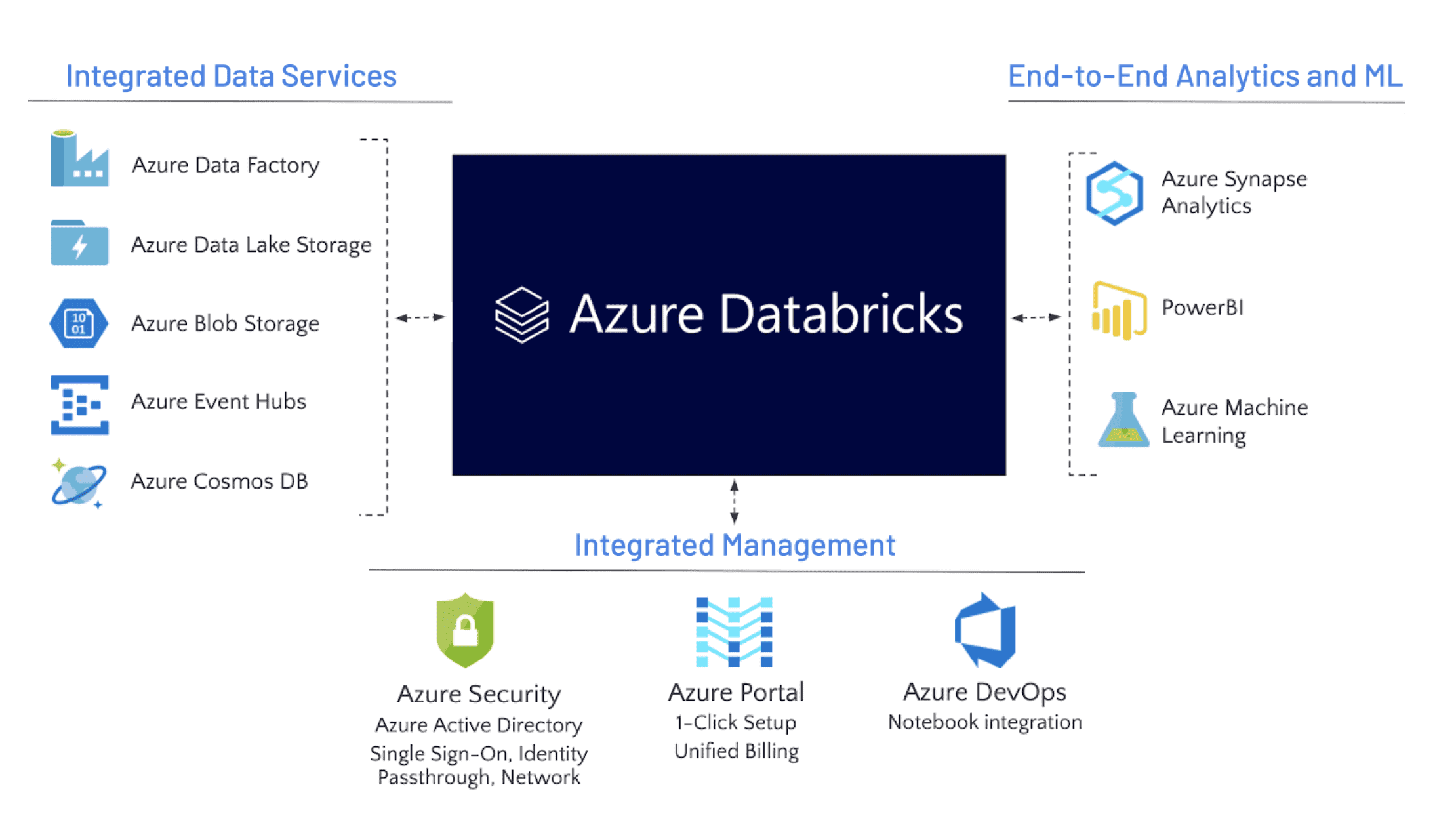

Databricks on Azure is a unified analytics platform that handles diverse data types and workloads. It scales to process massive datasets efficiently, protects sensitive information with built-in security features, and simplifies complex tasks through user-friendly interfaces. By combining Azure’s cloud infrastructure with Databricks’ analytics engine, Azure Databricks gives data-driven businesses a single solution that spans the entire data lifecycle, from ingestion to analysis and deployment of machine learning models. The platform integrates with existing data pipelines and tools, which limits disruption during the transition, and its collaborative environment supports teamwork and knowledge sharing across the data science process.

Organizations of all sizes benefit from the comprehensive features of Azure Databricks. Data scientists can focus on insights, not infrastructure. The platform’s auto-scaling capabilities adjust resources dynamically, optimizing cost-effectiveness. Its support for popular languages like Python, Scala, R, and SQL ensures broad accessibility. Advanced analytics, including machine learning and real-time streaming, are readily available. Azure Databricks fosters a data-driven culture, equipping teams with the tools to make informed decisions based on robust analytics. The platform is designed for both beginners and experienced users, offering a range of functionalities to cater to various skill levels. Azure Databricks delivers a comprehensive and versatile solution for data analysis and machine learning.

Data integration is simplified through seamless connectivity with various data sources. Data scientists can leverage powerful tools for data cleaning, transformation, and visualization. Azure Databricks simplifies the deployment and management of machine learning models, reducing time to market for new applications. The platform provides strong governance and security features, ensuring compliance with regulatory requirements. Azure Databricks promotes agility and efficiency, enabling organizations to respond quickly to changing business needs. This platform empowers organizations to gain a competitive edge by effectively utilizing their data assets. By using Azure Databricks, businesses can transform their data into actionable insights, driving growth and innovation.

How to Migrate Your Data to Azure Databricks Seamlessly

Migrating your data to Azure Databricks offers a streamlined path to unified analytics. This process involves several key steps, ensuring a smooth and efficient transition. First, assess your current data landscape. Identify all data sources, including on-premises databases, cloud storage services like Azure Blob Storage or Azure Data Lake Storage, and other relevant repositories. Understanding your data’s structure and volume is crucial for planning an effective migration strategy. Azure Databricks provides robust tools to handle diverse data formats and sources. The platform’s scalability ensures seamless handling of large datasets.

Next, choose the appropriate data migration method. For smaller datasets, direct import using Azure Databricks’ built-in utilities might suffice. Larger datasets often benefit from tools like Azure Data Factory or Azure Synapse Analytics, which offer parallel processing and data transformation capabilities for optimized data movement. Prioritize data security during the migration: encrypt data both in transit and at rest. Azure Databricks offers strong security features, including integration with Microsoft Entra ID (formerly Azure Active Directory) for granular access control. Monitor the migration regularly to identify and address issues as they arise. Azure Databricks provides detailed monitoring tools for tracking data ingestion and transformation progress.

Finally, validate the migrated data. Verify data integrity and consistency after the migration is complete, for example by comparing row counts and checksums between source and target, so that subsequent analytics and machine learning tasks rest on accurate data. Azure Databricks offers data quality tools to help assess data accuracy and completeness. The platform supports various data validation techniques and provides mechanisms for data cleansing and transformation, further enhancing data quality. By following these steps, you can complete a secure migration to Azure Databricks and make your data available for advanced analytics and machine learning.
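
The validation step can be sketched in plain Python. This is a minimal illustration, not a Databricks utility: it assumes rows are available as dictionaries, and the function names `table_fingerprint` and `validate_migration` are hypothetical. On a real migration the same comparison would more likely run as aggregations over the source and target tables.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, digest) for an iterable of row dicts.

    The digest does not depend on row order or on key order within a row.
    """
    row_hashes = sorted(
        hashlib.sha256(repr(sorted(row.items())).encode("utf-8")).hexdigest()
        for row in rows
    )
    digest = hashlib.sha256("".join(row_hashes).encode("utf-8")).hexdigest()
    return len(row_hashes), digest


def validate_migration(source_rows, target_rows):
    """Compare row counts and content fingerprints of source and target."""
    src_count, src_digest = table_fingerprint(source_rows)
    tgt_count, tgt_digest = table_fingerprint(target_rows)
    return {
        "row_count_match": src_count == tgt_count,
        "content_match": src_digest == tgt_digest,
    }
```

A count mismatch points to dropped or duplicated rows, while a content mismatch with equal counts points to values that changed in transit, such as truncated strings or altered types.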

Mastering Databricks Workflows: From Ingestion to Insights

Data ingestion forms the foundation of any successful analytics project on Azure Databricks. This involves efficiently moving data from various sources into your Databricks workspace. Common sources include cloud storage like Azure Blob Storage and Azure Data Lake Storage, as well as on-premises databases and other cloud-based data warehouses. Azure Databricks offers robust connectors and utilities to simplify this process, ensuring data is readily available for subsequent analysis. Efficient data ingestion minimizes processing time and maximizes resource utilization. Understanding the various ingestion methods, such as using Delta Live Tables for automated data pipelines, is crucial for optimizing your Azure Databricks environment.
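
As a rough sketch of batch ingestion from Azure Data Lake Storage, the helpers below build a storage URI and append the loaded files to a Delta table. Several things here are assumptions rather than details from this article: the helper names, the choice of Parquet as the source format, the Delta target, and a `spark` session that is already available (as it is in a Databricks notebook) with access to the storage account configured.

```python
def abfss_uri(container, storage_account, path=""):
    """Build an ADLS Gen2 URI in the abfss:// form that Spark readers accept."""
    return (
        f"abfss://{container}@{storage_account}.dfs.core.windows.net/"
        f"{path.lstrip('/')}"
    )


def ingest_to_delta(spark, source_uri, target_table, source_format="parquet"):
    """Batch-load files from cloud storage and append them to a Delta table."""
    df = spark.read.format(source_format).load(source_uri)
    # Append keeps existing rows; the target schema is assumed to exist already.
    df.write.format("delta").mode("append").saveAsTable(target_table)
    return df
```

In a notebook this might be called as `ingest_to_delta(spark, abfss_uri("raw", "mystorageacct", "orders/2024"), "bronze.orders")`, where the container, account, and table names are placeholders.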

Once data resides in Azure Databricks, the transformation phase begins. This stage involves cleaning, structuring, and preparing the data for analysis. Apache Spark, the core engine powering Azure Databricks, provides a powerful and scalable framework for data transformation. Users can leverage Spark’s DataFrames and SQL capabilities to perform complex data manipulation tasks. Python and Scala are commonly used programming languages for interacting with Spark within Azure Databricks. Consider this simple Python example using PySpark: `data = spark.read.csv("path/to/data.csv")`. This line reads a CSV file into a Spark DataFrame. By default the reader treats every row as data, names the columns `_c0`, `_c1`, and so on, and loads each column as a string; pass `header=True` and `inferSchema=True` to use the first row as column names and infer column types. Subsequent transformations, like filtering, aggregation, and joining, can be performed efficiently using Spark’s built-in functions. Optimizing data transformation processes involves employing techniques like data partitioning and caching to accelerate query execution.
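
To make the filtering and aggregation steps concrete, here is a small sketch built on the same `spark.read.csv` call. The column names `amount` and `region` and both function names are hypothetical, and `spark` is assumed to be the session a Databricks notebook provides.

```python
def load_orders(spark, path):
    """Read a CSV file, using the first row as column names and inferring types."""
    return spark.read.csv(path, header=True, inferSchema=True)


def revenue_by_region(orders):
    """Drop non-positive amounts, then total the remaining amounts per region."""
    valid = orders.filter("amount > 0")
    totals = valid.groupBy("region").agg({"amount": "sum"})
    # The dict form of agg() names its output column "sum(amount)";
    # rename it to something easier to reference downstream.
    return totals.withColumnRenamed("sum(amount)", "total_amount")
```

Spark evaluates these steps lazily, so nothing is read or computed until an action such as `show()` or a write is called on the result. If the same intermediate DataFrame feeds several downstream queries, calling `cache()` on it avoids recomputing it each time.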

The final stage focuses on extracting valuable insights from the transformed data. Azure Databricks enables interactive data exploration using tools like Databricks SQL and notebooks. Users can leverage visualization libraries to create charts and dashboards, providing an intuitive way to interpret results. Advanced analytical techniques, such as machine learning, can be incorporated seamlessly into the workflow. Azure Databricks provides integrated support for popular machine learning libraries, facilitating the development and deployment of predictive models. By mastering these workflows, users can effectively unlock the power of their data within the Azure Databricks ecosystem, driving data-driven decision-making and achieving significant business value. Remember that careful planning and efficient execution are critical for maximizing the effectiveness of your Azure Databricks environment.

Building and Deploying Machine Learning Models with Azure Databricks

Azure Databricks provides a powerful platform for the entire machine learning lifecycle. Data scientists can leverage its integrated environment to build, train, and deploy models efficiently. Popular libraries like scikit-learn, TensorFlow, and PyTorch are readily available within the Azure Databricks workspace. This simplifies the process and allows for seamless collaboration among team members. The platform’s scalability ensures that even large-scale machine learning tasks can be handled effectively. Azure Databricks’ distributed computing capabilities accelerate model training, reducing the time required to achieve optimal results. Using Azure Databricks for machine learning projects offers significant advantages in terms of speed, scalability, and ease of use.

Building a predictive model using Azure Databricks often involves importing data, preprocessing it using Spark, training the chosen algorithm, and then evaluating its performance. For instance, you might use a Spark DataFrame to load and clean your data, then use scikit-learn within a Databricks notebook to train a model. After training, Azure Databricks’ model management capabilities, which include a managed version of MLflow, help to monitor the model’s performance and retrain it when necessary. This ensures that the model continues to provide accurate predictions over time. The model can be deployed in various ways, such as through Databricks’ APIs or by integrating it into other applications. Azure Databricks simplifies the process, allowing for rapid model deployment and integration into existing workflows.
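
The evaluate-and-retrain loop described above can be reduced to a simple check. The sketch below is plain Python rather than a Databricks or MLflow API, and it makes some loud assumptions: accuracy is the metric that matters, a fixed tolerance is an acceptable trigger, and the `max_drop` default of 0.05 is an arbitrary illustration.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot score an empty batch")
    correct = sum(1 for truth, pred in zip(y_true, y_pred) if truth == pred)
    return correct / len(y_true)


def needs_retraining(baseline_accuracy, recent_accuracy, max_drop=0.05):
    """Flag the model when recent accuracy falls more than max_drop below baseline."""
    return (baseline_accuracy - recent_accuracy) > max_drop
```

In practice the baseline would come from the evaluation run recorded at training time, and the recent score from newly labeled production data.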

Anomaly detection and recommendation systems are further examples of machine learning applications readily implemented with Azure Databricks. Its distributed computing framework excels at processing large datasets crucial for identifying unusual patterns in data streams or recommending products based on user preferences. The platform’s collaborative features allow multiple users to work on the same project simultaneously, accelerating the development process. Azure Databricks’ extensive integration with other Azure services further enhances its capabilities, enabling seamless data pipelines and streamlined workflows. Utilizing Azure Databricks’ machine learning features facilitates rapid model development, deployment, and continuous monitoring, empowering data scientists to create impactful solutions quickly and efficiently.

Optimizing Performance and Scaling Your Databricks Workloads

Efficiently managing resources is crucial for optimal performance in Azure Databricks. Cluster configuration significantly impacts processing speed. Choose appropriate instance types based on workload demands. For example, memory-optimized instances excel with large datasets, while compute-optimized instances are better suited for complex computations. Azure Databricks offers autoscaling, automatically adjusting cluster resources based on workload fluctuations. This dynamic scaling minimizes costs while ensuring consistent performance. Proper configuration prevents bottlenecks and maximizes the value of your Azure Databricks investment.
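
A cluster definition with autoscaling might look like the sketch below, which builds the kind of JSON payload the Databricks Clusters API accepts. The helper name is hypothetical, and the node type and runtime version strings are placeholders: check which values are available in your own workspace before using them.

```python
import json


def build_cluster_spec(name, node_type_id, spark_version,
                       min_workers, max_workers, idle_minutes=30):
    """Return a cluster definition that autoscales and shuts down when idle."""
    if min_workers < 0 or max_workers < min_workers:
        raise ValueError("expected 0 <= min_workers <= max_workers")
    return {
        "cluster_name": name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,
        # Databricks adds or removes workers within this range as load changes.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # Terminate the cluster after this many minutes without activity.
        "autotermination_minutes": idle_minutes,
    }


# Placeholder values for illustration only.
spec = build_cluster_spec(
    name="etl-nightly",
    node_type_id="Standard_DS3_v2",
    spark_version="13.3.x-scala2.12",
    min_workers=2,
    max_workers=8,
)
print(json.dumps(spec, indent=2))
```

Keeping the autoscale range narrow at first and widening it after observing real utilization is one cautious way to avoid both bottlenecks and over-provisioning.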

Query optimization is another key aspect of performance enhancement. Techniques like using appropriate data structures, filtering data early in the pipeline, and leveraging Databricks’ built-in optimization features can drastically improve query execution times. Understanding data partitioning and using appropriate partitioning strategies also significantly impacts performance. Azure Databricks provides tools to monitor query performance and identify areas for improvement. Regularly reviewing query plans helps in identifying and addressing inefficiencies. By implementing these strategies, users can achieve substantial improvements in query speed and overall application performance within the Azure Databricks environment.
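
Filtering early and reviewing the plan can be sketched as follows. The example assumes a hypothetical `events` DataFrame with `event_date`, `user_id`, and `amount` columns, ideally partitioned by `event_date` so the filter lets Spark skip unrelated files; the function names are illustrative.

```python
from datetime import date


def daily_totals(events, day: date):
    """Restrict to one day and to the needed columns before aggregating."""
    pruned = (
        events
        .filter(f"event_date = DATE '{day.isoformat()}'")
        .select("user_id", "amount")
    )
    return pruned.groupBy("user_id").agg({"amount": "sum"})


def show_plan(df):
    """Print the logical and physical plans so filters and scans can be inspected."""
    df.explain(True)
```

In the printed physical plan, it is worth checking that the date predicate appears on the scan itself rather than in a later step, which indicates that Spark is pruning data at read time.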

Resource management within Azure Databricks is critical for cost control and performance. Efficient cluster management includes managing the cluster lifecycle, in particular terminating inactive clusters so they do not accrue unnecessary costs. Monitoring resource utilization provides insights into performance bottlenecks, and understanding how different cluster configurations impact performance allows for better resource allocation. Azure Databricks provides various monitoring tools to help manage resources effectively. By combining thoughtful configuration with proactive monitoring and management, organizations can optimize performance and reduce expenses at the same time, maximizing the return on their Azure Databricks investment.

Data Security and Governance within the Databricks Ecosystem

Data security and governance are paramount when working with sensitive information. Azure Databricks provides a robust suite of features to ensure data protection and compliance. Access control mechanisms, such as role-based access control (RBAC), allow granular permission management, so that only users and services with appropriate privileges can access specific data or perform certain actions within the Azure Databricks environment. Data encryption, both at rest and in transit, safeguards data from unauthorized access. Azure Databricks integrates with Azure’s security infrastructure, using Microsoft Entra ID (formerly Azure Active Directory) for authentication and authorization. This tight integration enhances security posture and simplifies identity management.
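
Granular permissions are often expressed as SQL `GRANT` statements. The helper below is a sketch that assumes a workspace with Unity Catalog (or table access control) enabled; the function name and the catalog, schema, table, and group names in the usage note are hypothetical.

```python
import re

# Plain identifiers only, so unexpected input cannot alter the statement.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def grant_select_sql(catalog, schema, table, principal):
    """Build a statement granting read-only access on one table to a principal."""
    for part in (catalog, schema, table):
        if not _IDENTIFIER.fullmatch(part):
            raise ValueError(f"unexpected identifier: {part!r}")
    if "`" in principal:
        raise ValueError("principal must not contain backticks")
    return f"GRANT SELECT ON TABLE {catalog}.{schema}.{table} TO `{principal}`"
```

In a notebook, the statement could be executed with `spark.sql(grant_select_sql("main", "sales", "orders", "analysts"))`. Granting to groups rather than individual users generally keeps permissions easier to audit.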

Azure Databricks supports various compliance offerings, including ones relevant to data privacy regulations such as GDPR and HIPAA. These offerings reflect the platform’s commitment to stringent security standards, and organizations can rely on them when aligning their use of Azure Databricks with their regulatory obligations. Implementing robust security practices within the Azure Databricks platform requires a multi-layered approach. Network security groups (NSGs) restrict access to the Databricks workspace, preventing unauthorized network connections. Regular security audits and vulnerability assessments identify potential weaknesses and ensure proactive mitigation. Data loss prevention (DLP) policies can be implemented to prevent sensitive data from leaving the controlled environment. These measures, combined with Azure Databricks’ inherent security features, help to create a secure and compliant data analytics platform.

By leveraging the comprehensive security and governance features of Azure Databricks, organizations can confidently manage and protect their data. The platform’s integration with Azure’s broader security ecosystem simplifies security management and ensures a high level of protection. Regularly reviewing and updating security policies is crucial to maintain a secure and compliant Azure Databricks environment. Proactive security measures are essential for protecting valuable data and maintaining business continuity within the Azure Databricks ecosystem. Adopting a zero-trust security model further enhances protection, requiring continuous verification of user identities and access rights. This rigorous approach helps organizations mitigate risks and maintain the integrity of their data within Azure Databricks.

Cost Optimization Strategies for Azure Databricks

Managing costs effectively is crucial when leveraging the power of Azure Databricks. Understanding the pricing model is the first step. Azure Databricks uses consumption-based pricing: compute is billed in Databricks Units (DBUs) according to the type and running time of your clusters, while the underlying Azure resources, such as virtual machines and storage, are billed separately by Azure. Careful planning and resource allocation are key to minimizing expenses. Right-sizing clusters, that is, choosing the appropriate number and size of worker nodes based on workload demands, prevents unnecessary spending. Autoscaling capabilities within Azure Databricks dynamically adjust resources based on real-time needs, ensuring optimal performance while avoiding over-provisioning. This intelligent scaling minimizes idle compute time, resulting in significant cost savings.
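
A back-of-the-envelope estimate can make the effect of cluster size and run time visible. The sketch below assumes cost is the sum of a Databricks Unit (DBU) charge and the charge for the underlying virtual machines; the function name is hypothetical, and every rate shown in the usage note is a made-up placeholder, not a published price.

```python
def estimate_cluster_cost(nodes, hours, dbu_per_node_hour, dbu_price,
                          vm_price_per_hour):
    """Estimate cost as DBU charges plus underlying VM charges.

    nodes counts the driver plus all workers; prices are per hour.
    """
    node_hours = nodes * hours
    dbu_cost = node_hours * dbu_per_node_hour * dbu_price
    vm_cost = node_hours * vm_price_per_hour
    return {
        "dbu_cost": round(dbu_cost, 2),
        "vm_cost": round(vm_cost, 2),
        "total": round(dbu_cost + vm_cost, 2),
    }
```

With placeholder inputs of 4 nodes for 10 hours, 0.75 DBU per node-hour, 0.30 per DBU, and 0.25 per VM-hour, the estimate is 40 × 0.75 × 0.30 = 9.00 for DBUs plus 40 × 0.25 = 10.00 for VMs, or 19.00 in total. Halving either the node count or the run time halves both components, which is why right-sizing and idle termination matter.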

Efficient cluster management plays a vital role in cost optimization. Properly configuring your clusters, including specifying instance types appropriate for your workload, directly impacts your bill. Regular monitoring of cluster utilization helps identify potential areas for improvement. Unused clusters should be terminated promptly to avoid unnecessary charges. For tasks requiring significant processing power but infrequent execution, consider using job clusters, which start for a run and terminate when it finishes, instead of always-on clusters. This approach allows you to pay only for the compute time used. Leverage Databricks’ built-in monitoring tools to gain insights into your resource consumption, allowing for data-driven decisions to fine-tune your cost optimization strategies. Features such as Unity Catalog can also contribute to cost efficiency indirectly, since centralized data governance and collaboration reduce the need to maintain duplicate copies of data.

Beyond cluster management, consider optimizing data processing techniques. Efficient data ingestion, transformation, and query optimization significantly reduce compute time, leading to lower costs. Techniques such as data compression, partitioning, and caching can improve query performance and reduce the overall resources consumed. Regularly reviewing your Azure Databricks usage reports and understanding the cost drivers will allow you to make informed decisions on resource allocation and refine your cost optimization approach. Proactive monitoring and analysis of your Azure Databricks environment are vital for long-term cost savings and the efficient use of resources. These strategies ensure that you harness the power of Azure Databricks while effectively managing your cloud expenditure.

Real-World Databricks on Azure Success Stories: Case Studies

Organizations across various sectors successfully leverage Azure Databricks to achieve significant business outcomes. One example is a major financial institution that used Azure Databricks to build a real-time fraud detection system. This system processes millions of transactions daily, identifying suspicious activities with unprecedented accuracy. The implementation of Azure Databricks resulted in a substantial reduction in fraudulent transactions and improved customer trust. The scalability and performance of the platform proved crucial in handling the high-volume data streams.

In the healthcare industry, a leading pharmaceutical company used Azure Databricks to accelerate drug discovery. Researchers used the platform’s analytics capabilities to analyze vast genomic datasets, identifying potential drug targets and significantly reducing research time. This accelerated development process led to faster time-to-market for new treatments and therapies. Azure Databricks provided the computing power and collaborative environment the researchers needed to work effectively, and its integration with other Azure services further extended the platform’s capabilities.

A retail giant also benefited from Azure Databricks by implementing a personalized recommendation engine. By analyzing customer purchase history and browsing patterns, the company created a highly targeted recommendation system that significantly boosted sales and customer engagement. The scalability of Azure Databricks allowed the company to handle the massive amounts of data generated by its online and offline channels, providing real-time recommendations to millions of customers. This personalized approach enhanced customer satisfaction, increased conversion rates, and ultimately improved the company’s bottom line. Azure Databricks’ ability to handle diverse data types and integrate with existing systems was key to this successful implementation.