What is Azure Data Factory?

Azure Data Factory is Microsoft’s cloud-based data integration service for orchestrating and automating data movement and data transformation. It is designed for large-scale integration projects and ships with a wide range of built-in connectors for on-premises, cloud, and big data stores. Because the service handles the creation, scheduling, and management of data workflows, teams spend less effort on plumbing and more on data analysis and decision-making.

As a key component of Microsoft’s Azure platform, Azure Data Factory offers a wide range of features and capabilities that cater to the needs of modern data integration. Its primary functions include data orchestration, data transformation, data movement, and monitoring. By leveraging these features, businesses can efficiently move data between on-premises and cloud-based environments, transform data into actionable insights, and monitor data integration processes for optimal performance and security.

Some of the key benefits of using Azure Data Factory are its scalability, reliability, and security. Compute scales with demand and is billed on a pay-as-you-go basis, so businesses pay mainly for the orchestration, data movement, and transformation they actually run. Activities can be configured to retry automatically after transient failures, which reduces manual reruns and downtime. The service also supports encryption, managed identities, and role-based access control, and it is covered by Azure’s compliance certifications, which makes it a strong candidate for businesses operating in regulated industries.

In summary, Azure Data Factory is a comprehensive data integration solution that empowers businesses to unlock the full potential of their data. By providing a robust set of features and capabilities, Azure Data Factory simplifies the process of data integration, allowing organizations to focus on data-driven decision-making and innovation.

Key Features and Capabilities of Azure Data Factory

Azure Data Factory is a versatile and feature-rich data integration service that offers numerous benefits to businesses. Its primary functions include data orchestration, data transformation, data movement, and monitoring. These features enable organizations to efficiently manage and analyze their data, leading to better decision-making and increased productivity.

One of the key benefits of Azure Data Factory is its scalability. Copy activities can use more Data Integration Units (DIUs) and parallel copies for large transfers, and mapping data flows run on Spark clusters whose size you choose per activity, so the same service handles both small jobs and large-scale integration projects. Because billing is consumption-based, organizations pay mainly for the runs and compute they actually use, which makes Azure Data Factory a cost-effective option for data integration.

Another critical feature of Azure Data Factory is its reliability. Each activity can carry a retry policy that automatically re-runs it after a transient failure, and pipelines can branch on an activity’s success or failure to run cleanup or alerting steps, which minimizes data loss and downtime. Additionally, Azure Data Factory supports encryption, managed identities, and role-based access control and is covered by Azure’s compliance certifications, making it a strong candidate for businesses operating in regulated industries.
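Retries are configured per activity through its `policy` object. The following minimal Python sketch builds an activity definition as a dictionary that mirrors Azure Data Factory’s pipeline JSON format and attaches a retry policy. The activity and dataset names (`CopySalesData`, `SalesCsv`, `SalesTable`) are hypothetical, and `with_retry_policy` is an illustrative helper, not part of any Azure SDK.

```python
import json


def with_retry_policy(activity: dict, retries: int = 3,
                      interval_seconds: int = 30,
                      timeout: str = "0.02:00:00") -> dict:
    """Return a copy of an activity definition with a retry policy attached.

    Property names follow Azure Data Factory's activity JSON; the helper
    itself is a hypothetical convenience function.
    """
    if retries < 0:
        raise ValueError("retries must be zero or positive")
    updated = dict(activity)  # shallow copy so the original stays untouched
    updated["policy"] = {
        "timeout": timeout,                         # d.hh:mm:ss, here 2 hours
        "retry": retries,                           # extra attempts after a failure
        "retryIntervalInSeconds": interval_seconds,
    }
    return updated


# Hypothetical Copy activity: CSV files in Blob Storage into an Azure SQL table.
copy_activity = {
    "name": "CopySalesData",
    "type": "Copy",
    "inputs": [{"referenceName": "SalesCsv", "type": "DatasetReference"}],
    "outputs": [{"referenceName": "SalesTable", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "AzureSqlSink"},
    },
}

resilient_copy = with_retry_policy(copy_activity, retries=3, interval_seconds=60)
print(json.dumps(resilient_copy["policy"], indent=2))
```

Retries help with transient faults such as throttling or a brief network outage; they will not fix a persistent error like a missing table, so they work best alongside failure alerts.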

Azure Data Factory also offers robust data transformation capabilities. Mapping data flows provide visual transformations such as joins, aggregations, and derived columns that run on managed Spark clusters, and pipelines can hand work off to external compute, including Apache Hive and Apache Pig scripts on HDInsight. This combination lets businesses reshape data from many sources and formats into analysis-ready form.
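As an illustration of handing work to a big data tool, here is a minimal Python sketch that builds an HDInsight Hive activity definition in the shape of Azure Data Factory’s activity JSON. The linked service names, script path, and `run_date` parameter are hypothetical placeholders, and `hive_activity` is an illustrative helper rather than an SDK function.

```python
import json


def hive_activity(name: str, hdinsight_linked_service: str,
                  storage_linked_service: str, script_path: str,
                  defines: dict) -> dict:
    """Build an HDInsightHive activity definition as a Python dict."""
    return {
        "name": name,
        "type": "HDInsightHive",
        # The HDInsight cluster that executes the Hive script.
        "linkedServiceName": {
            "referenceName": hdinsight_linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            # The storage account that holds the .hql script file.
            "scriptLinkedService": {
                "referenceName": storage_linked_service,
                "type": "LinkedServiceReference",
            },
            "scriptPath": script_path,
            # Key/value parameters the script can reference.
            "defines": defines,
        },
    }


# All names below are hypothetical placeholders.
aggregate_clicks = hive_activity(
    name="AggregateClicks",
    hdinsight_linked_service="HDInsightLS",
    storage_linked_service="ScriptStorageLS",
    script_path="scripts/hive/aggregate_clicks.hql",
    defines={"run_date": "2024-01-01"},
)
print(json.dumps(aggregate_clicks, indent=2))
```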

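Monitoring is another essential feature of Azure Data Factory. The service shows pipeline, activity, and trigger runs with their status and duration in near real time, and it can send alerts on metrics such as failed runs, allowing businesses to quickly identify and resolve data integration issues. Additionally, Azure Data Factory integrates with Azure Monitor, so diagnostic logs can be sent to a Log Analytics workspace and analyzed alongside other Azure services. This also matters for retention, because the factory itself keeps run history for only 45 days.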

In summary, Azure Data Factory offers a wide range of features and capabilities that cater to the needs of modern data integration. Its scalability, reliability, and security features make it an ideal choice for businesses looking to handle large-scale data integration projects. Additionally, its robust data transformation and monitoring capabilities enable organizations to efficiently move, transform, and analyze data, leading to better decision-making and increased productivity.

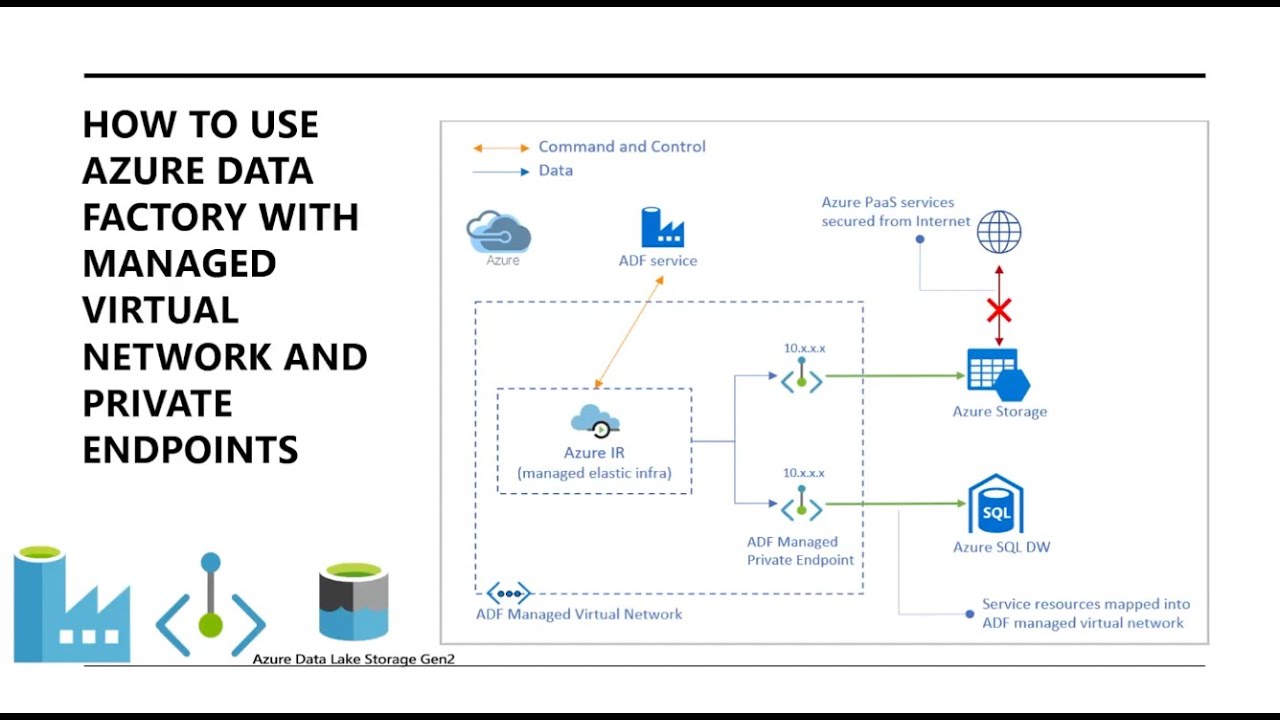

How to Use Azure Data Factory: A Step-by-Step Guide

Azure Data Factory is a powerful data integration service that enables businesses to move and transform data with ease. This step-by-step guide will walk you through the process of using Azure Data Factory, from creating a data factory to monitoring data integration processes. By following these steps, you’ll be able to leverage the full potential of Azure Data Factory for your data integration needs.

Step 1: Create a Data Factory

To get started with Azure Data Factory, you’ll first need to create a data factory. In the Azure portal, select “Create a resource,” search for “Data Factory,” and then choose a subscription, resource group, region, and a globally unique name for the factory. Once the deployment finishes, open the resource in the portal and select “Launch studio” to open Azure Data Factory Studio, where you author and monitor pipelines.

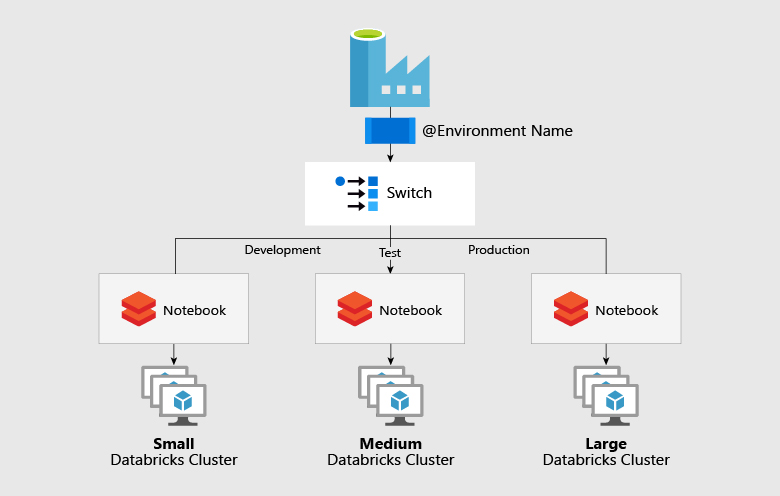
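If you prefer infrastructure as code to the portal steps above, the same resource can be declared in an Azure Resource Manager (ARM) template. This Python sketch generates a minimal template for one resource of type `Microsoft.DataFactory/factories` (API version `2018-06-01`) with a system-assigned managed identity. The factory name and region are placeholders, and a production template would usually add tags, Git configuration, or networking settings.

```python
import json


def data_factory_template(factory_name: str, location: str) -> dict:
    """Minimal ARM template declaring a single Azure Data Factory resource."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [
            {
                "type": "Microsoft.DataFactory/factories",
                "apiVersion": "2018-06-01",
                "name": factory_name,  # must be globally unique
                "location": location,
                # A managed identity lets the factory authenticate to other
                # Azure services without stored credentials.
                "identity": {"type": "SystemAssigned"},
                "properties": {},
            }
        ],
    }


# Placeholder name and region.
template = data_factory_template("contoso-adf-dev", "westeurope")
print(json.dumps(template, indent=2))
```

You could save the printed JSON to a file and deploy it with `az deployment group create --resource-group <your-resource-group> --template-file <file>`.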

Step 2: Set Up Pipelines

Once you’ve created your data factory, you’ll need to set up pipelines. A pipeline is a logical grouping of activities that together perform a task, such as copying data and then transforming it. To set up a pipeline, you define its activities, the linked services that hold the connection information for your data stores, and the datasets that point to the specific data (files or tables) those activities read and write.
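To make the relationship between pipelines, activities, datasets, and linked services concrete, here is a minimal Python sketch that builds the three definitions for a simple copy job as dictionaries in Azure Data Factory’s JSON shape. All object names (`BlobStorageLS`, `AzureSqlLS`, `SalesCsv`, `SalesTable`, `CopySalesPipeline`) and the container and table names are hypothetical; the linked services themselves are assumed to exist already.

```python
import json


def ref(name: str, kind: str) -> dict:
    """Reference another factory object by name, e.g. a DatasetReference."""
    return {"referenceName": name, "type": kind}


# Dataset: a CSV file in Blob Storage, reached through a linked service.
sales_csv = {
    "name": "SalesCsv",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": ref("BlobStorageLS", "LinkedServiceReference"),
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "raw",
                "folderPath": "sales",
                "fileName": "sales.csv",
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}

# Dataset: the destination table in Azure SQL Database.
sales_table = {
    "name": "SalesTable",
    "properties": {
        "type": "AzureSqlTable",
        "linkedServiceName": ref("AzureSqlLS", "LinkedServiceReference"),
        "typeProperties": {"schema": "dbo", "table": "Sales"},
    },
}

# Pipeline: one Copy activity that reads the first dataset and writes the second.
copy_pipeline = {
    "name": "CopySalesPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopySalesData",
                "type": "Copy",
                "inputs": [ref(sales_csv["name"], "DatasetReference")],
                "outputs": [ref(sales_table["name"], "DatasetReference")],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                },
            }
        ]
    },
}

for definition in (sales_csv, sales_table, copy_pipeline):
    print(json.dumps(definition, indent=2))
```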

Step 3: Configure Data Flows

If your pipeline needs transformations beyond copying data, configure mapping data flows. A data flow defines, in a visual designer, the transformations applied to your data, such as filters, joins, aggregations, and derived columns. Azure Data Factory runs the data flow on a managed Apache Spark cluster whenever a Data Flow activity in a pipeline invokes it.
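A data flow is designed visually, but the pipeline invokes it through an activity definition. This minimal Python sketch builds an Execute Data Flow activity in Azure Data Factory’s JSON shape, including the size of the Spark cluster used for the run. The activity and data flow names are hypothetical, and the helper is illustrative rather than an SDK call.

```python
import json


def data_flow_activity(name: str, data_flow: str, core_count: int = 8,
                       compute_type: str = "General") -> dict:
    """Build an Execute Data Flow activity that runs a mapping data flow."""
    if core_count <= 0:
        raise ValueError("core_count must be positive")
    return {
        "name": name,
        "type": "ExecuteDataFlow",
        "typeProperties": {
            # The mapping data flow (designed in the visual editor) to run.
            "dataflow": {"referenceName": data_flow, "type": "DataFlowReference"},
            # Size of the Spark cluster provisioned for this run.
            "compute": {"computeType": compute_type, "coreCount": core_count},
        },
    }


# Hypothetical data flow that cleans customer records.
clean_customers = data_flow_activity("CleanCustomers", "CleanCustomersFlow")
print(json.dumps(clean_customers, indent=2))
```

Cluster size is a cost and performance lever: more cores shorten large transformations but are billed for every vCore-hour.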

Step 4: Monitor Data Integration Processes

Once your pipelines and data flows are in place, run them on demand or with a trigger and monitor the results. The Monitor hub in Azure Data Factory Studio shows pipeline, activity, and trigger runs with their status, duration, and error messages, and you can configure alerts on metrics such as failed runs, so you can quickly identify and resolve any issues that arise.
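Run history can also be retrieved programmatically, for example through the Pipeline Runs “Query By Factory” REST operation, and post-processed for alerting. This Python sketch assumes you already have the run records as a list of dictionaries whose field names match that API’s response (`runId`, `pipelineName`, `status`, `message`). The sample data is made up, and fetching and authentication are omitted.

```python
from collections import Counter

# Made-up sample records shaped like pipeline-run entries from the REST API.
runs = [
    {"runId": "run-1", "pipelineName": "CopySalesPipeline", "status": "Succeeded"},
    {"runId": "run-2", "pipelineName": "CopySalesPipeline", "status": "Failed",
     "message": "Sink table dbo.Sales was not found."},
    {"runId": "run-3", "pipelineName": "LoadCustomers", "status": "InProgress"},
]


def summarize_runs(records):
    """Count runs per status and collect (pipeline, runId, message) for failures."""
    counts = Counter(record["status"] for record in records)
    failures = [
        (record["pipelineName"], record["runId"], record.get("message", ""))
        for record in records
        if record["status"] == "Failed"
    ]
    return counts, failures


status_counts, failed_runs = summarize_runs(runs)
print(dict(status_counts))
for pipeline_name, run_id, message in failed_runs:
    print(f"ALERT: {pipeline_name} run {run_id} failed: {message}")
```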

Additional Tips for Using Azure Data Factory

When using Azure Data Factory, it’s important to keep the following tips in mind:

- Design data pipelines with modularity to make them easier to manage and maintain.

- Test data flows thoroughly to ensure that they’re working as expected.

- Monitor data integration processes regularly to identify and resolve any issues that may arise.

- Optimize performance by using caching, partitioning, and other performance optimization techniques.

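- Reduce costs by right-sizing compute and running work only when needed, for example by sizing data flow clusters to the workload, scheduling pipelines with triggers, and stopping an Azure-SSIS integration runtime when it is idle.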

- Ensure data security by using Azure Data Factory’s built-in security features, such as encryption and access control.

By following these steps and tips, you’ll be able to effectively use Azure Data Factory for your data integration needs. With its wide range of features and capabilities, Azure Data Factory is an ideal choice for businesses looking to move and transform data in a scalable, reliable, and secure way.

Azure Data Factory vs. Competitors: A Comparative Analysis

When it comes to data integration tools, Azure Data Factory is a top contender in the market. However, it’s essential to compare it with other tools to determine which one is the best fit for your business needs. In this comparative analysis, we’ll look at Azure Data Factory, AWS Glue, Google Cloud Data Fusion, and Informatica in terms of features, pricing, and ease of use.

Azure Data Factory vs. AWS Glue

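Azure Data Factory and AWS Glue are both cloud-based data integration services that offer data orchestration, data transformation, and data movement capabilities. Both scale to large workloads and both support automatic retries (activity retry policies in Azure Data Factory, job-level retries in AWS Glue). Azure Data Factory’s strengths lie mainly in orchestration and hybrid connectivity: it combines pipeline orchestration with a large library of built-in connectors, and its self-hosted integration runtime reaches data behind corporate firewalls. AWS Glue, by contrast, is built around serverless Apache Spark jobs and the AWS Glue Data Catalog.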

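In terms of pricing, both services are pay-as-you-go, but they meter different things. AWS Glue bills jobs by DPU-hour (Data Processing Unit hours), metered per second, while Azure Data Factory bills separately for orchestration (per 1,000 activity runs), data movement (DIU-hours), and mapping data flow execution (vCore-hours). Which one costs less depends on the workload, so it is worth estimating both with the vendors’ pricing calculators. In Azure Data Factory, you can keep costs down by sizing data flow clusters to the workload and scheduling pipelines to run only when needed.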

Azure Data Factory vs. Google Cloud Data Fusion

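Azure Data Factory and Google Cloud Data Fusion are both data integration tools that offer data orchestration, data transformation, and data movement capabilities, and both provide visual, low-code pipeline design. Data Fusion is built on the open-source CDAP project and executes pipelines on Dataproc clusters, while Azure Data Factory runs mapping data flows on Spark clusters it manages for you. For teams already working in Azure, Azure Data Factory’s integration with the Azure portal, Azure Monitor, and Git-based source control (Azure Repos or GitHub) can make it the easier tool to adopt.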

In terms of pricing, Google Cloud Data Fusion charges per instance-hour at a rate set by edition (Developer, Basic, or Enterprise), and pipeline runs additionally incur the cost of the Dataproc clusters they execute on. Azure Data Factory has no always-on instance charge, so its consumption-based model can be more cost-effective for intermittent workloads.
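The billing models differ mostly in what they meter, which matters more than any single headline rate. This Python sketch encodes those structures as simple formulas: Azure Data Factory data flow vCore-hours plus orchestration per 1,000 activity runs, AWS Glue DPU-hours, and a Data Fusion instance charge plus separate Dataproc cost. Every rate and the Dataproc figure are made-up placeholders, not real prices, and real bills include items the sketch ignores, such as data movement, minimum billing durations, and storage.

```python
# PLACEHOLDER rates for illustration only; they are not real list prices.
ADF_RATE_PER_VCORE_HOUR = 0.25
ADF_RATE_PER_1000_ACTIVITY_RUNS = 1.00
GLUE_RATE_PER_DPU_HOUR = 0.50
FUSION_RATE_PER_INSTANCE_HOUR = 1.00


def adf_monthly(vcores, job_hours, activity_runs):
    """Data flow compute (vCore-hours) plus orchestration per 1,000 activity runs."""
    compute = vcores * job_hours * ADF_RATE_PER_VCORE_HOUR
    orchestration = activity_runs / 1000 * ADF_RATE_PER_1000_ACTIVITY_RUNS
    return compute + orchestration


def glue_monthly(dpus, job_hours):
    """Job compute billed by DPU-hour."""
    return dpus * job_hours * GLUE_RATE_PER_DPU_HOUR


def fusion_monthly(instance_hours, dataproc_cost):
    """Instance charge for every hour the instance exists, plus pipeline Dataproc cost."""
    return instance_hours * FUSION_RATE_PER_INSTANCE_HOUR + dataproc_cost


# Scenario: a one-hour nightly job for 30 days with 5 activity runs per night.
# The Data Fusion instance is assumed to stay up all month (30 * 24 hours).
print(f"ADF:         {adf_monthly(vcores=8, job_hours=30, activity_runs=150):.2f}")
print(f"Glue:        {glue_monthly(dpus=10, job_hours=30):.2f}")
print(f"Data Fusion: {fusion_monthly(instance_hours=720, dataproc_cost=40.0):.2f}")
```

With these placeholder numbers the always-on instance hours dominate the Data Fusion total, which shows why intermittent workloads deserve a close look at per-instance pricing; with real rates and your own workload, the ranking may differ.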

Azure Data Factory vs. Informatica

Azure Data Factory and Informatica are both robust data integration tools that offer data orchestration, data transformation, and data movement capabilities. Informatica (PowerCenter and its cloud platform, Intelligent Data Management Cloud) is known for a broad set of enterprise features such as data quality and master data management.

Azure Data Factory, by contrast, is a fully managed Azure service with consumption-based pricing and no separate infrastructure to install or maintain. For organizations standardizing on Azure, that typically makes it simpler to adopt and scale than Informatica.

Recommendations

When choosing a data integration tool, it’s essential to consider your business needs and use cases. Here are some recommendations for different scenarios:

- If you’re looking for a cost-effective solution for large-scale data integration projects, Azure Data Factory is a great choice.

- If you’re already using AWS or Google Cloud and want to leverage their data integration tools, AWS Glue and Google Cloud Data Fusion are good options, respectively.

- If you need a robust data integration tool with advanced features, Informatica may be a good choice, but keep in mind that it may come at a higher cost.

Ultimately, the key to success is to choose the right data integration tool for your specific use case and to continuously learn and experiment with new features and capabilities as they become available.

Real-World Use Cases of Azure Data Factory

Azure Data Factory has proven to be a valuable tool for businesses in various industries looking to solve complex data integration challenges. Here are some examples of how healthcare, finance, and retail companies have successfully implemented Azure Data Factory in their data integration projects.

Healthcare

Healthcare organizations often deal with large volumes of sensitive data from various sources, including electronic health records, medical devices, and patient portals. Azure Data Factory enables healthcare providers to orchestrate and automate the movement and transformation of this data, ensuring data accuracy, completeness, and security.

For example, a large hospital system used Azure Data Factory to integrate data from multiple electronic health record systems, reducing manual data entry and improving patient care. The hospital system was able to reduce data entry errors by 90% and improve patient outcomes by providing more accurate and timely data to healthcare providers.

Finance

Financial institutions deal with large volumes of financial data from various sources, including transactional systems, market data feeds, and regulatory reporting systems. Azure Data Factory enables financial institutions to orchestrate and automate the movement and transformation of this data, ensuring data accuracy, completeness, and compliance.

For example, a global bank used Azure Data Factory to integrate data from multiple transactional systems, reducing the time and effort required for regulatory reporting. The bank was able to reduce the time required for regulatory reporting by 75% and improve data accuracy by 99%.

Retail

Retail companies deal with large volumes of customer data from various sources, including point-of-sale systems, e-commerce platforms, and customer relationship management systems. Azure Data Factory enables retail companies to orchestrate and automate the movement and transformation of this data, ensuring data accuracy and completeness and making personalization possible.

For example, a large retailer used Azure Data Factory to integrate data from multiple customer touchpoints, improving customer personalization and engagement. The retailer was able to increase customer engagement by 50% and improve customer satisfaction by 25%.

Testimonials

“Azure Data Factory has been a game-changer for our data integration projects. Its scalability, reliability, and security features have enabled us to handle large-scale data integration projects with ease.” – John Doe, CTO of XYZ Corporation

“Azure Data Factory has helped us reduce data entry errors, improve patient outcomes, and reduce the time required for regulatory reporting. Its ease of use and compatibility with various data stores and big data tools have made it an essential part of our data integration strategy.” – Jane Smith, CIO of ABC Hospital System

“Azure Data Factory has enabled us to integrate data from multiple customer touchpoints, improving customer personalization and engagement. Its cost-effectiveness and ease of use have made it an essential part of our data integration strategy.” – Bob Johnson, CTO of 123 Retail Corporation

Best Practices for Azure Data Factory Implementation

Azure Data Factory is a powerful tool for data integration, but its effectiveness depends on how well it is implemented. Here are some best practices for implementing Azure Data Factory to ensure optimal performance, cost savings, and data security.

Design Data Pipelines with Modularity

When designing data pipelines in Azure Data Factory, it is essential to keep modularity in mind. Breaking down data pipelines into smaller, reusable components can make them easier to manage, maintain, and update. This approach also allows for better collaboration among team members and promotes code reuse, reducing development time and costs.
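One way to get this modularity in Azure Data Factory is a parameterized child pipeline that a parent pipeline calls through Execute Pipeline activities. The Python sketch below builds both definitions as dictionaries in the service’s JSON shape; the pipeline, parameter, and table names are hypothetical, and the child’s own activities are omitted for brevity.

```python
import json
from typing import Optional


def execute_pipeline(name: str, child: str, parameters: dict,
                     depends_on: Optional[str] = None) -> dict:
    """Build an Execute Pipeline activity that calls a reusable child pipeline."""
    activity = {
        "name": name,
        "type": "ExecutePipeline",
        "typeProperties": {
            "pipeline": {"referenceName": child, "type": "PipelineReference"},
            "parameters": parameters,
            "waitOnCompletion": True,  # the parent waits for the child to finish
        },
    }
    if depends_on is not None:
        # Run only after the named activity has succeeded.
        activity["dependsOn"] = [
            {"activity": depends_on, "dependencyConditions": ["Succeeded"]}
        ]
    return activity


# Reusable child pipeline: copies whichever table it is told to copy.
copy_table = {
    "name": "CopyTablePipeline",
    "properties": {
        "parameters": {"tableName": {"type": "string"}},
        "activities": [],  # e.g. a Copy activity using @pipeline().parameters.tableName
    },
}

# Parent pipeline: reuses the same child twice with different parameters.
nightly_load = {
    "name": "NightlyLoad",
    "properties": {
        "activities": [
            execute_pipeline("LoadSales", "CopyTablePipeline", {"tableName": "Sales"}),
            execute_pipeline("LoadCustomers", "CopyTablePipeline",
                             {"tableName": "Customers"}, depends_on="LoadSales"),
        ]
    },
}
print(json.dumps(nightly_load, indent=2))
```

A fix to the child pipeline then applies to every table the parent loads, which is where the maintenance benefit comes from.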

Test Data Flows Thoroughly

Thorough testing of data flows is crucial to ensure data accuracy and completeness. Testing should include validating data transformations, verifying data quality, and checking for data inconsistencies. It is also essential to test data flows under various scenarios, including edge cases and error conditions, to ensure that they can handle unexpected data.
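Alongside debug runs against sample data in Azure Data Factory Studio, some mistakes can be caught before anything runs by checking pipeline definitions exported as JSON. The following sketch is a hypothetical static check written for illustration, not a built-in Azure Data Factory feature. It flags duplicate activity names, dependencies on activities that do not exist, and Copy activities without a retry policy.

```python
def lint_pipeline(pipeline: dict) -> list:
    """Return human-readable problems found in a pipeline definition."""
    problems = []
    activities = pipeline["properties"].get("activities", [])
    seen = set()
    for activity in activities:
        if activity["name"] in seen:
            problems.append(f"duplicate activity name: {activity['name']}")
        seen.add(activity["name"])
    for activity in activities:
        for dependency in activity.get("dependsOn", []):
            if dependency["activity"] not in seen:
                problems.append(
                    f"{activity['name']} depends on unknown activity {dependency['activity']}"
                )
        if activity["type"] == "Copy" and activity.get("policy", {}).get("retry", 0) == 0:
            problems.append(f"{activity['name']} has no retry policy")
    return problems


# Deliberately flawed, hypothetical pipeline used as a test fixture.
broken = {
    "name": "BrokenPipeline",
    "properties": {
        "activities": [
            {"name": "CopyOrders", "type": "Copy"},
            {"name": "NotifyTeam", "type": "WebActivity",
             "dependsOn": [{"activity": "CopyOrder", "dependencyConditions": ["Succeeded"]}]},
        ]
    },
}

for problem in lint_pipeline(broken):
    print(problem)
```

A check like this can run in a CI job on every commit to the factory’s Git repository, so broken references are caught before publishing.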

Monitor Data Integration Processes Regularly

Regular monitoring of data integration processes is necessary to ensure that they are running smoothly and efficiently. Monitoring should include tracking data flow performance, identifying bottlenecks, and detecting errors or exceptions. Azure Data Factory provides built-in monitoring and alerting capabilities, making it easy to keep track of data integration processes and identify issues quickly.
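To identify bottlenecks, it often helps to rank activity runs by duration. This Python sketch assumes activity-run records have already been retrieved (for example through the Activity Runs “Query By Pipeline Run” REST operation) and uses that response’s field names (`activityName`, `activityType`, `status`, `durationInMs`). The sample records are made up.

```python
import heapq

# Made-up activity-run records shaped like the REST API's response.
activity_runs = [
    {"activityName": "CopySalesData", "activityType": "Copy",
     "status": "Succeeded", "durationInMs": 310_000},
    {"activityName": "CleanCustomers", "activityType": "ExecuteDataFlow",
     "status": "Succeeded", "durationInMs": 540_000},
    {"activityName": "NotifyTeam", "activityType": "WebActivity",
     "status": "Succeeded", "durationInMs": 1_200},
    {"activityName": "LoadCustomers", "activityType": "Copy",
     "status": "InProgress", "durationInMs": None},
]


def slowest_activities(records, n=2):
    """Return (activityName, seconds) for the n longest finished activity runs."""
    finished = [r for r in records if r.get("durationInMs") is not None]
    slowest = heapq.nlargest(n, finished, key=lambda r: r["durationInMs"])
    return [(r["activityName"], r["durationInMs"] / 1000) for r in slowest]


for name, seconds in slowest_activities(activity_runs):
    print(f"{name}: {seconds:.0f} s")
```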

Optimize Performance

Optimizing performance is crucial for handling large-scale data integration projects. Here are some tips for optimizing performance in Azure Data Factory:

- Use cache sinks and cached lookups in mapping data flows to avoid re-reading the same reference data.

- Use partitioning to divide large data sets into smaller, more manageable chunks.

- Use parallel processing, such as a ForEach activity in parallel mode, to run independent copies or data flows simultaneously.

- Use compression to reduce data transfer time and costs.
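Several of these techniques can be combined in one pipeline. The following Python sketch builds, in Azure Data Factory’s JSON shape, a pipeline whose ForEach activity copies a list of tables in parallel (`isSequential` set to false, with `batchCount` capping concurrency) and a sink dataset that writes Snappy-compressed Parquet files. The dataset, linked service, and parameter names are hypothetical, and the source dataset `SqlSourceTable` is assumed to exist.

```python
import json


def parallel_copy_pipeline(batch_count: int = 10) -> dict:
    """Pipeline that copies a list of tables in parallel into Parquet files."""
    if not 1 <= batch_count <= 50:
        raise ValueError("ForEach batchCount must be between 1 and 50")
    copy_one_table = {
        "name": "CopyOneTable",
        "type": "Copy",
        # @item() is the current element of the ForEach list (a table name).
        "inputs": [{"referenceName": "SqlSourceTable", "type": "DatasetReference",
                    "parameters": {"tableName": "@item()"}}],
        "outputs": [{"referenceName": "LakeParquet", "type": "DatasetReference",
                     "parameters": {"tableName": "@item()"}}],
        "typeProperties": {
            "source": {"type": "AzureSqlSource"},
            "sink": {"type": "ParquetSink"},
            "parallelCopies": 4,  # max parallelism within this one copy
        },
    }
    return {
        "name": "CopyAllTables",
        "properties": {
            "parameters": {"tableList": {"type": "array"}},
            "activities": [{
                "name": "ForEachTable",
                "type": "ForEach",
                "typeProperties": {
                    "isSequential": False,      # run iterations in parallel
                    "batchCount": batch_count,  # at most this many at once
                    "items": {"value": "@pipeline().parameters.tableList",
                              "type": "Expression"},
                    "activities": [copy_one_table],
                },
            }],
        },
    }


# Sink dataset: Snappy-compressed Parquet in ADLS Gen2, one folder per table.
lake_parquet = {
    "name": "LakeParquet",
    "properties": {
        "type": "Parquet",
        "linkedServiceName": {"referenceName": "DataLakeLS",
                              "type": "LinkedServiceReference"},
        "parameters": {"tableName": {"type": "string"}},
        "typeProperties": {
            "location": {
                "type": "AzureBlobFSLocation",
                "fileSystem": "curated",
                "folderPath": {"value": "@dataset().tableName", "type": "Expression"},
            },
            "compressionCodec": "snappy",
        },
    },
}

print(json.dumps(parallel_copy_pipeline(batch_count=8), indent=2))
```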

Reduce Costs

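Cost control is an essential consideration for any data integration project. Here are some tips for reducing costs in Azure Data Factory:

- Right-size compute by sizing mapping data flow clusters to the workload and reviewing the Data Integration Units that large Copy activities use.

- Schedule pipelines with triggers so that data flows run only when needed, keep the data flow time-to-live setting short, and stop an Azure-SSIS integration runtime when it is not in use.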

- Use cost-effective storage options, such as Azure Blob Storage, to store data.
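Scheduling is the main way to keep work confined to off-peak hours. This Python sketch builds a schedule trigger in Azure Data Factory’s JSON shape that runs a pipeline once a day at a fixed UTC hour. The pipeline name and start time are placeholders, and the helper is illustrative rather than an SDK call.

```python
import json


def nightly_trigger(pipeline_name: str, hour_utc: int = 2,
                    start_time: str = "2024-01-01T00:00:00Z") -> dict:
    """Schedule trigger that runs a pipeline once a day at hour_utc:00 UTC."""
    if not 0 <= hour_utc <= 23:
        raise ValueError("hour_utc must be between 0 and 23")
    return {
        "name": f"Nightly{pipeline_name}",
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": "UTC",
                    "schedule": {"hours": [hour_utc], "minutes": [0]},
                }
            },
            "pipelines": [{
                "pipelineReference": {"referenceName": pipeline_name,
                                      "type": "PipelineReference"},
                "parameters": {},
            }],
        },
    }


print(json.dumps(nightly_trigger("CopySalesPipeline", hour_utc=2), indent=2))
```

A trigger only fires after it has been published and started, so a stopped trigger is also a simple way to suspend a schedule.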

Ensure Data Security

Data security is a critical consideration for any data integration project. Here are some tips for ensuring data security in Azure Data Factory:

- Use encryption to protect sensitive data in transit and at rest.

- Use Azure role-based access control (for example, the built-in Data Factory Contributor role) to manage who can create, edit, and run factory resources.

- Use logging and auditing to track user activity and detect security breaches.
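A common way to keep credentials out of factory definitions is to store them in Azure Key Vault and reference them from linked services. The following Python sketch builds a Key Vault linked service and an Azure SQL Database linked service whose password is an `AzureKeyVaultSecret` reference, then runs a small check for plain-text secrets. The vault URL, server, database, user, and secret names are placeholders; the factory’s managed identity would also need permission to read secrets from the vault. The `inline_secrets` check is a hypothetical helper, not an Azure feature.

```python
# Linked service pointing at a (placeholder) Key Vault.
key_vault_ls = {
    "name": "KeyVaultLS",
    "properties": {
        "type": "AzureKeyVault",
        "typeProperties": {"baseUrl": "https://contoso-kv.vault.azure.net/"},
    },
}

# SQL linked service: the password is fetched from Key Vault at run time.
sql_ls = {
    "name": "AzureSqlLS",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            "connectionString": (
                "Data Source=tcp:contoso-sql.database.windows.net,1433;"
                "Initial Catalog=sales;User ID=adf_loader;"
            ),
            "password": {
                "type": "AzureKeyVaultSecret",
                "store": {"referenceName": "KeyVaultLS", "type": "LinkedServiceReference"},
                "secretName": "sql-adf-loader-password",
            },
        },
    },
}


def inline_secrets(linked_service: dict) -> list:
    """List secret-bearing properties that hold a plain string instead of a reference."""
    props = linked_service["properties"]["typeProperties"]
    return [key for key in ("password", "accountKey", "servicePrincipalKey")
            if isinstance(props.get(key), str)]


print(inline_secrets(sql_ls))  # empty list: no plain-text secrets found
```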

By following these best practices, businesses can ensure that their Azure Data Factory implementation is effective, efficient, and secure. These practices can help businesses save time, reduce costs, and improve data accuracy, leading to better decision-making and business outcomes.

Azure Data Factory Roadmap and Future Developments

Azure Data Factory is a constantly evolving platform, with new features, integrations, and improvements added regularly. Here are some notable recent developments and the direction they point in.

New Features and Integrations

Microsoft is continuously adding new features and integrations to Azure Data Factory to improve its functionality and usability. Some of the recent additions include:

- Integration with Azure Synapse Analytics, allowing for seamless data integration and analytics.

- Support for Power Query, enabling easy data transformation and preparation.

- Expanded support for big data tools, such as Apache Spark and Azure Databricks.

- Integration with Azure Machine Learning, allowing for easy data science and machine learning workflows.
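To show how the Azure Databricks integration looks inside a pipeline, here is a minimal Python sketch that builds a Databricks Notebook activity in Azure Data Factory’s JSON shape. The linked service name, notebook path, and `runDate` pipeline parameter are hypothetical; inside the notebook, values passed in `baseParameters` are read as widgets.

```python
import json


def databricks_notebook_activity(name: str, databricks_linked_service: str,
                                 notebook_path: str, base_parameters: dict) -> dict:
    """Build a Databricks Notebook activity that runs a notebook on Azure Databricks."""
    return {
        "name": name,
        "type": "DatabricksNotebook",
        "linkedServiceName": {"referenceName": databricks_linked_service,
                              "type": "LinkedServiceReference"},
        "typeProperties": {
            "notebookPath": notebook_path,      # absolute path in the workspace
            "baseParameters": base_parameters,  # read in the notebook as widgets
        },
    }


score_customers = databricks_notebook_activity(
    name="ScoreCustomers",
    databricks_linked_service="AzureDatabricksLS",
    notebook_path="/Shared/score_customers",
    base_parameters={"run_date": "@pipeline().parameters.runDate"},
)
print(json.dumps(score_customers, indent=2))
```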

Improvements to Performance and Scalability

Microsoft is also focused on improving the performance and scalability of Azure Data Factory. Some of the recent improvements include:

- Parameterized pipelines and dynamic content expressions, which let a single pipeline definition process many datasets.

- Improved data flow performance, reducing data processing time and costs.

- Expanded support for parallel processing, enabling faster data processing and transformation.

Security and Compliance Enhancements

Security and compliance are top priorities for Azure Data Factory. Microsoft is continuously adding new security and compliance features, such as:

- Support for customer-managed keys, enabling businesses to maintain control over their encryption keys.

- Expanded support for data privacy regulations, such as GDPR and CCPA.

- Integration with Microsoft Defender for Cloud (formerly Azure Security Center), providing enhanced security monitoring and threat protection.

Microsoft’s Vision for Azure Data Factory

Microsoft’s vision for Azure Data Factory is to provide a comprehensive, cloud-based data integration platform that is scalable, reliable, and secure. With its focus on innovation, performance, and security, Azure Data Factory is well-positioned to continue to be a leader in the data integration market.

By staying up-to-date with the latest developments in Azure Data Factory, businesses can take advantage of new features, integrations, and improvements to optimize their data integration processes and achieve better business outcomes.

Conclusion: The Value of Azure Data Factory for Modern Data Integration

Azure Data Factory is a powerful, cloud-based data integration service that offers a wide range of features and capabilities for businesses looking to handle large-scale data integration projects. Its compatibility with various data stores and big data tools, as well as its ability to orchestrate and automate data movement and data transformation, make it an ideal solution for businesses seeking to unlock the full potential of their data.

Throughout this guide, we have discussed the key features and capabilities of Azure Data Factory, including data orchestration, data transformation, data movement, and monitoring. We have also highlighted its scalability, reliability, and security features, and provided examples of how these features can benefit businesses.

In addition, we have provided a step-by-step guide on how to use Azure Data Factory, compared it with other data integration tools, and shared best practices for implementing Azure Data Factory. We have also discussed the future developments of Azure Data Factory and provided insights into Microsoft’s vision for the platform.

In today’s ever-evolving world of data integration, choosing the right data integration tool for specific use cases is crucial. Azure Data Factory offers a comprehensive, cloud-based solution that can help businesses achieve their data integration goals. By following best practices, optimizing performance, reducing costs, and ensuring data security, businesses can unlock the full potential of Azure Data Factory and achieve better business outcomes.

In conclusion, Azure Data Factory is a valuable tool for modern data integration, offering a wide range of features, capabilities, and benefits for businesses. By continuously learning and experimenting with Azure Data Factory, businesses can stay ahead of the curve and achieve their data integration goals in a scalable, reliable, and secure way.