Understanding the Basics of Container Deployment

Containerization has revolutionized modern application development by offering a streamlined approach to packaging, deploying, and managing software. It encapsulates an application and its dependencies in a single, portable unit called a container, so the application runs consistently across environments, from development through testing to production. The benefits include portability, scalability, and efficient resource utilization. Because containers share the host operating system's kernel instead of each running a full guest OS, they consume fewer resources than traditional virtual machines, which can lower costs and improve performance. This efficiency makes them well suited to cloud environments, where resource optimization is crucial.

Docker has emerged as the leading platform for containerization, providing a standardized way to build, ship, and run containers. Docker simplifies the process of creating container images, which serve as the blueprint for containers. These images are lightweight and self-contained, allowing developers to easily share and deploy applications. Docker’s command-line interface and extensive ecosystem of tools make it accessible to developers of all skill levels. The platform’s popularity has driven widespread adoption of containerization across various industries.

Containers are valuable in modern application development because they address several key challenges. They ensure consistency across environments, eliminating the “it works on my machine” problem. They enable faster deployment cycles, so teams can release updates and new features more frequently. They also make it easier to scale applications up or down with demand. Azure Container Instances (ACI) builds on these benefits with a serverless container execution environment: you run containers without provisioning or managing the underlying virtual machines, so developers can focus on their applications rather than on infrastructure. Combined with pay-as-you-go billing, this agility makes ACI a compelling, cost-conscious choice for many cloud-native workloads.

How to Launch Your First Application Using Azure’s Container Offering

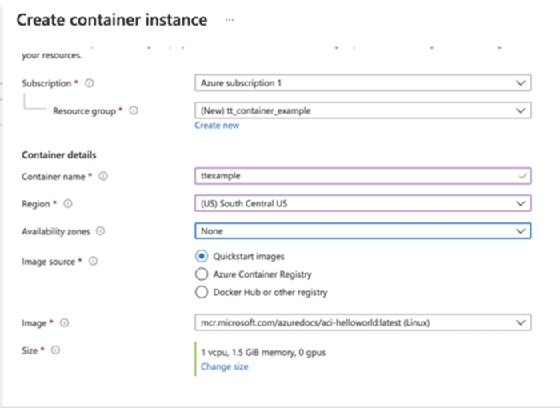

This section is a step-by-step guide to deploying a simple containerized application with Azure Container Instances (ACI). The process is deliberately simple, so you can quickly learn the basics of serverless container deployment. First, you need an Azure account; if you do not have one, create one through the Azure portal. Next, install the Azure CLI (command-line interface), which will be your main tool for interacting with Azure services from your local machine. Installation instructions for each operating system are available on the Microsoft Azure website. After installing it, sign in with `az login`.
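
Before moving on, it can help to confirm that the CLI is installed and signed in. The following Python sketch assumes only that the `az` executable is on your `PATH`; it relies on `az account show`, which fails when no account is signed in. The function name is illustrative.

```python
import shutil
import subprocess


def az_cli_ready() -> bool:
    """Return True if the Azure CLI is installed and an account is signed in.

    Illustrative helper: it assumes `az` is on PATH and that `az login`
    has been run in this environment.
    """
    az_path = shutil.which("az")
    if az_path is None:
        # The CLI is not installed, or not on PATH.
        return False
    # `az account show` exits with a non-zero status when nobody is signed in;
    # `--output none` suppresses the account details it would otherwise print.
    result = subprocess.run(
        [az_path, "account", "show", "--output", "none"],
        capture_output=True,
        text=True,
    )
    return result.returncode == 0


if __name__ == "__main__":
    print("Azure CLI ready:", az_cli_ready())
```

If it reports `False`, install the CLI or run `az login`, then try again.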

With the Azure CLI installed and configured, the next step involves preparing the container image. A Docker image can be built from a Dockerfile, which defines the application’s environment and dependencies. Alternatively, a pre-built image from a public registry like Docker Hub can be used. For this example, consider using a simple “hello-world” image. To build a custom image, a Dockerfile must be created in the application’s root directory. The Dockerfile specifies the base image, copies the application code, installs dependencies, and defines the command to run when the container starts. Once the Dockerfile is ready, use the `docker build` command to create the image. Tag the image with a name and version for easy identification.
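
The build-and-tag step can be scripted. This Python sketch only assembles the `docker build` arguments; the image name `hello-app` and version `1.0.0` are hypothetical placeholders, and the build context is assumed to be the directory that contains the Dockerfile.

```python
from typing import List


def docker_build_command(name: str, version: str, context: str = ".") -> List[str]:
    """Return the `docker build` arguments for an image tagged name:version.

    `name`, `version`, and `context` are placeholders for your own values.
    """
    if not name or not version:
        raise ValueError("both an image name and a version are required")
    if name != name.lower():
        # Docker repository names must be lowercase.
        raise ValueError(f"image name must be lowercase: {name!r}")
    # -t tags the image; the last argument is the build context, the
    # directory where Docker looks for the Dockerfile by default.
    return ["docker", "build", "-t", f"{name}:{version}", context]


if __name__ == "__main__":
    cmd = docker_build_command("hello-app", "1.0.0")
    print(" ".join(cmd))  # docker build -t hello-app:1.0.0 .
    # To actually build (requires Docker): subprocess.run(cmd, check=True)
```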

Finally, deploy the container to Azure Container Instances with the `az container create` command. This command requires several parameters, including a resource group name, the container name, and the image to deploy. Resource groups are logical containers for Azure resources; if you do not already have one, create it with `az group create`. Specify the CPU and memory to allocate to the container. Because ACI bills per second for the resources you allocate, size these values carefully. When you run the command, Azure pulls the specified image and starts it in ACI. If you requested a public IP address with a DNS name label (`--dns-name-label`) and exposed the application's port (`--ports`), Azure assigns the container a fully qualified domain name (FQDN) that you can use to reach the application. This streamlined process shows how easily applications can be launched with Azure Container Instances, making it a good fit for simple containerized workloads.
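
The deployment commands can be assembled the same way. This sketch builds argument lists for `az group create`, `az container create`, and an `az container show` query that reads the FQDN. The resource group, region, container name, and DNS label are hypothetical; the image is the sample `aci-helloworld` image used in Microsoft's ACI quickstart, and the CPU and memory values are only example sizes. The FQDN exists only because a public IP with a DNS name label is requested.

```python
import shlex
from typing import List


def group_create_cmd(group: str, location: str) -> List[str]:
    # Resource group that will hold the container group.
    return ["az", "group", "create", "--name", group, "--location", location]


def container_create_cmd(group: str, name: str, image: str, dns_label: str,
                         cpu: float = 1.0, memory_gb: float = 1.5,
                         port: int = 80) -> List[str]:
    # A public IP plus a DNS name label is what gives the container an FQDN;
    # --ports exposes the port the application listens on.
    return [
        "az", "container", "create",
        "--resource-group", group,
        "--name", name,
        "--image", image,
        "--os-type", "Linux",
        "--cpu", str(cpu),
        "--memory", str(memory_gb),
        "--ip-address", "Public",
        "--dns-name-label", dns_label,
        "--ports", str(port),
    ]


def fqdn_query_cmd(group: str, name: str) -> List[str]:
    # Prints only the FQDN, as plain text, once the deployment has finished.
    return ["az", "container", "show",
            "--resource-group", group, "--name", name,
            "--query", "ipAddress.fqdn", "--output", "tsv"]


if __name__ == "__main__":
    # Hypothetical names; the DNS label must be unique within the region.
    rg, region, name = "myResourceGroup", "eastus", "hello-aci"
    steps = [
        group_create_cmd(rg, region),
        container_create_cmd(rg, name,
                             "mcr.microsoft.com/azuredocs/aci-helloworld",
                             "hello-aci-demo-1234"),
        fqdn_query_cmd(rg, name),
    ]
    for step in steps:
        print(shlex.join(step))
```

Run the printed commands in order (or pass each list to `subprocess.run`); the last one prints the address to open in a browser.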

Comparing Azure Container Instances with Azure Kubernetes Service

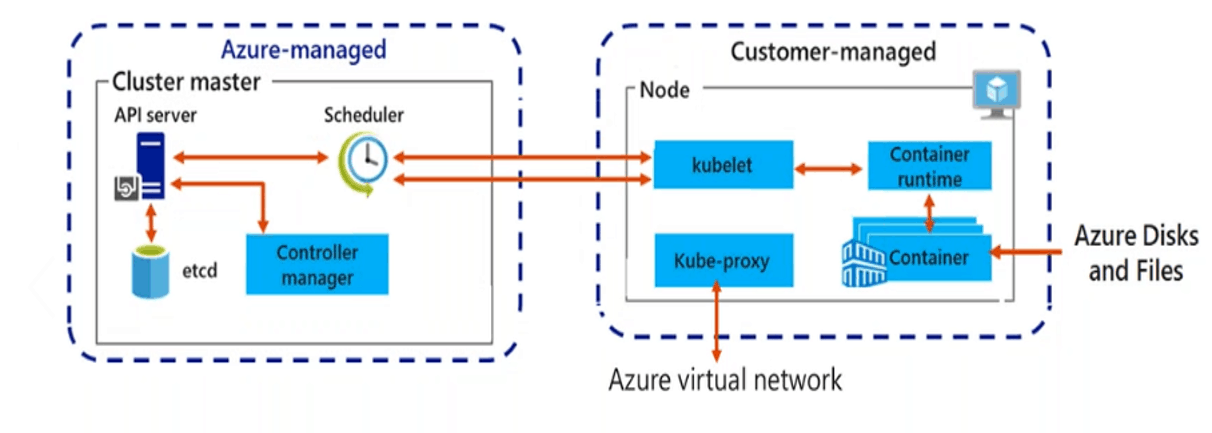

Azure offers two primary services for running containers: Azure Container Instances (ACI) and Azure Kubernetes Service (AKS). Understanding the distinctions between them is crucial for selecting the right tool. ACI provides a serverless container execution environment, ideal when you need to deploy and run individual containers quickly without managing any underlying infrastructure. AKS, on the other hand, is a managed Kubernetes service that provides a comprehensive platform for deploying, managing, and scaling containerized applications. Choosing between them depends largely on the complexity and scale of your application.

ACI shines in scenarios that call for fast deployment and simple container execution. It is well suited to event-driven applications, task automation, and batch processing jobs. Because ACI does not require you to manage virtual machines or an orchestrator, it offers a streamlined experience for developers who want to focus solely on their containerized applications, and its pay-per-second billing makes it cost-effective for short-lived tasks. In contrast, AKS is designed for more complex applications composed of multiple microservices. It provides advanced features such as automated rollouts, scaling, and self-healing, making it a good fit for production environments that demand high availability and resilience. That greater flexibility and control comes with additional operational overhead.

When deciding between ACI and AKS, weigh application complexity, scalability requirements, operational overhead, and cost. If you need to run a single container or a small number of containers with minimal management overhead, ACI is usually the better choice. For applications that need advanced orchestration, scaling, and management capabilities, AKS is more appropriate. Many organizations use both, running specific tasks on ACI and core application infrastructure on AKS; AKS can even schedule pods onto ACI through its virtual nodes feature. Choosing the right service for each workload improves performance, cost-efficiency, and manageability.

Optimizing Costs with Azure Container Instances

Effective cost management is crucial when using cloud services. Azure Container Instances (ACI) bills per second, so you are charged only for the time your containers are running. This differs significantly from traditional VM-based deployments, where resources are often allocated and billed even when idle. Charges are based on the vCPU and memory you request for the container group, not on what the application actually uses, so specifying the right amounts is the main lever for controlling cost: over-provisioning means paying for capacity that sits idle. Azure Cost Management lets you monitor and analyze spending patterns to find optimization opportunities in your ACI deployments. By choosing appropriate resource configurations and watching costs, organizations can significantly reduce their operational expenditure on Azure Container Instances.
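
The billing model reduces to a simple formula: the per-second cost is the requested vCPUs times the vCPU rate plus the requested memory (GB) times the memory rate, multiplied by the run time. The sketch below encodes that formula; the rates are deliberately round placeholders, not real Azure prices, so substitute current prices for your region.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Per-second prices in your billing currency (placeholders, not real prices)."""
    per_vcpu_second: float
    per_gb_second: float


def estimate_cost(vcpus: float, memory_gb: float, seconds: float,
                  rates: Rates) -> float:
    """Estimate the charge for one run of a container group.

    ACI charges for the resources requested, not those actually used, so
    the inputs are the allocated vCPU and memory. GPU and any OS-specific
    surcharges are ignored in this sketch.
    """
    per_second = vcpus * rates.per_vcpu_second + memory_gb * rates.per_gb_second
    return per_second * seconds


if __name__ == "__main__":
    rates = Rates(per_vcpu_second=0.01, per_gb_second=0.001)  # placeholders
    right_sized = estimate_cost(1, 2, 100, rates)
    oversized = estimate_cost(4, 8, 100, rates)
    print(f"right-sized: {right_sized:.2f}, over-provisioned: {oversized:.2f}")
```

With these placeholder rates, the over-provisioned run costs four times as much for identical work, because billing follows the allocation rather than actual usage.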

Autoscaling is another lever for cost optimization. ACI does not offer built-in autoscaling the way Azure Kubernetes Service (AKS) does, but you can implement it with an external orchestrator or an event-driven architecture. For instance, Azure Functions can create and delete ACI container groups in response to specific events or demand, so containers are active only when needed. A batch-processing workload, for example, could run additional container groups during peak hours and fewer, or none, off-peak. Azure Logic Apps can likewise start ACI deployments on a predefined schedule. Combining these techniques with per-second billing can yield significant savings and better resource efficiency.
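
Because ACI leaves scaling decisions to you, the controller (for example, a timer- or queue-triggered Azure Function) needs a rule for how many container groups to run. The rule below is a hypothetical example, not an ACI feature: it sizes the fleet from a work backlog, clamps the result to a configured range, and reports how many groups to create or delete.

```python
import math
from typing import Tuple


def desired_groups(backlog: int, items_per_group: int,
                   max_groups: int, min_groups: int = 0) -> int:
    """How many container groups should run for the current backlog.

    Hypothetical rule: one group per `items_per_group` queued items,
    clamped to [min_groups, max_groups].
    """
    if items_per_group <= 0:
        raise ValueError("items_per_group must be positive")
    needed = math.ceil(backlog / items_per_group)
    return max(min_groups, min(needed, max_groups))


def scaling_action(current: int, desired: int) -> Tuple[str, int]:
    """Translate the target into an action for the controller to perform,
    e.g. `az container create` for "create", `az container delete` for "delete"."""
    if desired > current:
        return ("create", desired - current)
    if desired < current:
        return ("delete", current - desired)
    return ("none", 0)


if __name__ == "__main__":
    # Peak hours: a large backlog scales out. Off-peak: an empty queue scales to zero.
    print(scaling_action(current=1, desired=desired_groups(450, 100, max_groups=10)))  # ('create', 4)
    print(scaling_action(current=5, desired=desired_groups(0, 100, max_groups=10)))    # ('delete', 5)
```

Keeping the rule separate from the Azure calls makes it easy to test and to tune the per-group batch size and ceiling before wiring it to real deployments.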

Beyond resource allocation and autoscaling, several other strategies help control costs. Prices vary by Azure region, so the region you choose affects what you pay. Discount offers for sustained usage, such as reserved instances or Azure savings plans, may also lower rates; check which offers apply to ACI. Monitor container resource utilization, fix inefficiencies in your application code or container configuration, and review your deployments regularly, refining resource allocations as needed. A holistic approach that combines resource optimization, autoscaling, and continuous monitoring lets organizations get the most value from Azure Container Instances while keeping cloud spending under control, so teams can innovate quickly without runaway infrastructure costs.
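
Refining allocations can be made systematic by comparing what a container group requests with the peak it actually used. The helper below is a sketch: the 25% headroom and the 0.1 rounding step are assumptions to tune, and the peak values are assumed to come from Azure Monitor metrics already converted to cores and GB.

```python
import math


def recommend(peak_used: float, headroom: float = 0.25,
              step: float = 0.1, minimum: float = 0.1) -> float:
    """Suggest a CPU (cores) or memory (GB) request from an observed peak.

    Adds `headroom` as a safety margin, then rounds up to a multiple of
    `step`, so the suggestion does not fall below peak * (1 + headroom)
    apart from floating-point noise.
    """
    target = peak_used * (1 + headroom)
    # The tiny epsilon keeps a quotient that lands a hair above a whole
    # number (floating-point noise) from being rounded up an extra step.
    units = math.ceil(target / step - 1e-9)
    return max(minimum, round(units * step, 6))


def is_overprovisioned(allocated: float, peak_used: float, **kwargs) -> bool:
    """True when the current request exceeds the recommendation."""
    return allocated > recommend(peak_used, **kwargs)


if __name__ == "__main__":
    # Hypothetical readings: 4 cores allocated, peak usage 1.2 cores.
    print(recommend(1.2))                # 1.5
    print(is_overprovisioned(4.0, 1.2))  # True
```

Apply the same check to CPU and memory separately, and recheck after workload changes, since a peak observed last month may not reflect current demand.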

Exploring Advanced Features of Microsoft’s Container Cloud Service

Azure Container Instances (ACI) goes beyond basic container deployment with advanced features for building more sophisticated applications. Container groups are a core element: they let you deploy multiple containers as a single unit on the same host. Containers in a group share a lifecycle, resources, a local network, and storage volumes, so they can reach each other on `localhost` and exchange files through a shared volume. This is particularly useful for applications made up of tightly coupled services. For instance, a web application might consist of a front-end container and an application server container deployed together in one container group. Multi-container groups are currently limited to Linux containers.
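
Multi-container groups are usually described declaratively, for example in the YAML file that `az container create --resource-group <group> --file <file>` accepts. The Python sketch below builds that structure as a dictionary for the front-end and application-server example. The container names, images, ports, and sizes are hypothetical, and the field names and default `apiVersion` are assumed to follow Microsoft's ACI YAML reference; check both against current documentation before use.

```python
import json
from typing import Any, Dict, List


def container(name: str, image: str, cpu: float, memory_gb: float,
              ports: List[int]) -> Dict[str, Any]:
    """One container in the group; CPU and memory are requested per container."""
    return {
        "name": name,
        "properties": {
            "image": image,
            "resources": {"requests": {"cpu": cpu, "memoryInGb": memory_gb}},
            "ports": [{"port": p} for p in ports],
        },
    }


def container_group(name: str, location: str, containers: List[Dict[str, Any]],
                    public_ports: List[int],
                    api_version: str = "2019-12-01") -> Dict[str, Any]:
    """A container group definition; verify api_version against current docs."""
    return {
        "apiVersion": api_version,
        "location": location,
        "name": name,
        "type": "Microsoft.ContainerInstance/containerGroups",
        "properties": {
            "osType": "Linux",  # multi-container groups support Linux only
            "containers": containers,
            # Only these ports are reachable from outside the group.
            "ipAddress": {
                "type": "Public",
                "ports": [{"protocol": "tcp", "port": p} for p in public_ports],
            },
        },
    }


def total_requests(group: Dict[str, Any]) -> Dict[str, float]:
    """Sum the per-container requests; the group's allocation is this total."""
    reqs = [c["properties"]["resources"]["requests"]
            for c in group["properties"]["containers"]]
    return {"cpu": sum(r["cpu"] for r in reqs),
            "memoryInGb": sum(r["memoryInGb"] for r in reqs)}


if __name__ == "__main__":
    group = container_group(
        "web-app-group", "eastus",
        [
            # Hypothetical images. The front end listens publicly on 80 and
            # calls the app server at localhost:8080 over the shared network.
            container("frontend", "myregistry.azurecr.io/frontend:1.0", 0.5, 1.0, [80]),
            container("app-server", "myregistry.azurecr.io/app-server:1.0", 1.0, 1.5, [8080]),
        ],
        public_ports=[80],
    )
    print(json.dumps(group, indent=2))
    print(total_requests(group))  # {'cpu': 1.5, 'memoryInGb': 2.5}
```

Only port 80 is published; the application server stays reachable solely from inside the group, which is the usual pattern for a front end paired with a private back end.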

Virtual network integration is another powerful feature. ACI container groups can be deployed into a subnet of an existing Azure virtual network, giving them private IP addresses and secure communication with other Azure resources such as virtual machines, databases, and storage accounts. This keeps your containerized applications off the public internet while letting them reach sensitive data. For example, suppose an ACI application needs to access a database running on an Azure virtual machine. Deploying the container group into the same virtual network establishes private communication between the application and the database, so the database never has to be exposed to the public internet.
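
From the CLI, placing a container group in a virtual network comes down to passing the network and subnet to `az container create`. The sketch below assumes an existing virtual network with a subnet that can be delegated to ACI; `myVnet`, `aciSubnet`, the image, and the database address `10.0.2.4` are hypothetical. The database's private IP is handed to the application as an environment variable.

```python
import shlex
from typing import Dict, List, Optional


def vnet_container_create_cmd(group: str, name: str, image: str,
                              vnet: str, subnet: str,
                              env: Optional[Dict[str, str]] = None) -> List[str]:
    """Arguments for a container group deployed into an existing subnet.

    The group receives a private IP from the subnet, so no public IP or
    DNS name label is requested.
    """
    cmd = [
        "az", "container", "create",
        "--resource-group", group,
        "--name", name,
        "--image", image,
        "--os-type", "Linux",
        "--vnet", vnet,
        "--subnet", subnet,
    ]
    if env:
        # Non-secret settings only; use --secure-environment-variables for secrets.
        cmd += ["--environment-variables", *(f"{k}={v}" for k, v in env.items())]
    return cmd


if __name__ == "__main__":
    cmd = vnet_container_create_cmd(
        "myResourceGroup", "orders-api", "myregistry.azurecr.io/orders-api:1.0",
        vnet="myVnet", subnet="aciSubnet",
        env={"DB_HOST": "10.0.2.4"},  # private IP of the database VM
    )
    print(shlex.join(cmd))
```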

Integration with other Azure services extends ACI further. You can use Azure Storage, for example an Azure Files share mounted as a volume, to persist data generated by your containers. Azure Key Vault can securely store and manage secrets such as database connection strings and API keys, which your containers can then retrieve. Consider an application that processes sensitive data: you can store its encryption keys in Key Vault and grant the container group's managed identity access to them. The application then uses these keys to encrypt and decrypt data, keeping sensitive information protected. Together, these features make ACI a versatile platform for a wide range of containerized applications, from simple microservices to complex multi-tier systems. With careful planning, you can build robust, secure, and scalable applications on serverless containers.
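
Inside the container, the application needs a way to read such a secret. This Python sketch prefers Key Vault and falls back to an environment variable. The Key Vault path assumes that the `azure-identity` and `azure-keyvault-secrets` packages are installed in the image and that the container group has a managed identity (for example, one assigned with `az container create --assign-identity`) with permission to read the vault's secrets. The fallback assumes the value was injected at deployment with `--secure-environment-variables`; the naming convention (`db-password` becomes `DB_PASSWORD`) is illustrative.

```python
import os
from typing import Optional


def load_secret(name: str, vault_url: Optional[str] = None) -> str:
    """Return a secret from Key Vault if `vault_url` is given, else from the environment."""
    if vault_url:
        # Imported lazily so the environment-variable path works without the Azure SDK.
        from azure.identity import DefaultAzureCredential
        from azure.keyvault.secrets import SecretClient

        # DefaultAzureCredential picks up a managed identity available to the
        # container group (a user-assigned identity may need AZURE_CLIENT_ID set).
        client = SecretClient(vault_url=vault_url, credential=DefaultAzureCredential())
        return client.get_secret(name).value
    # Illustrative convention: "db-password" -> DB_PASSWORD.
    env_key = name.upper().replace("-", "_")
    value = os.environ.get(env_key)
    if value is None:
        raise KeyError(f"secret {name!r} not found (looked for ${env_key})")
    return value


if __name__ == "__main__":
    vault = os.environ.get("KEY_VAULT_URL")  # e.g. https://<vault-name>.vault.azure.net/
    try:
        secret = load_secret("db-password", vault)
        print("loaded secret of length", len(secret))  # never print the value itself
    except KeyError as exc:
        print(exc)
```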

Securing Your Container Deployments in the Azure Cloud

Security is paramount when deploying applications, and securing container deployments on Azure Container Instances (ACI) requires a multi-faceted approach: network isolation, a secured container registry, and hardened container images. Understanding each of these is crucial for maintaining a robust, secure environment for your applications.

Network isolation is a foundational element of container security. Deploying container groups into a virtual network gives them private addresses, preventing direct access from the public internet, and network security groups (NSGs) let you define rules that control inbound and outbound traffic to and from them. These controls minimize the attack surface and protect your applications from unauthorized access.

Registry security matters just as much. Store your images in a private registry such as Azure Container Registry (ACR), which provides secure, private storage for Docker container images. Access to ACR can be controlled with Microsoft Entra ID (formerly Azure Active Directory), so that only authorized users and services can push or pull images. Scan images for vulnerabilities regularly, for example with Microsoft Defender for Containers (which superseded Azure Defender for container registries), to find and fix security issues before images are deployed to ACI. Features such as content trust let you sign images, so you can verify that they have not been tampered with and that they come from a trusted source.
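
A pre-deployment check can enforce part of this registry policy automatically. The sketch below, using a hypothetical registry name, rejects image references that do not come from your private registry or are not pinned by digest. Digest pinning is a substitute technique, not content trust: it guarantees that you deploy exactly the image bytes you scanned, but unlike signing it says nothing about who built them. Registry detection follows Docker's convention that the first path component is a registry host only if it contains a dot or colon or is `localhost`.

```python
from typing import List


def registry_of(image: str) -> str:
    """Registry host of an image reference; bare names default to Docker Hub."""
    first, sep, _ = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"


def image_policy_problems(image: str, allowed_registry: str) -> List[str]:
    """Reasons an image reference violates this sketch's deployment policy."""
    problems = []
    registry = registry_of(image)
    if registry.lower() != allowed_registry.lower():
        problems.append(f"pulled from {registry}, not {allowed_registry}")
    if "@sha256:" not in image:
        problems.append("not pinned by digest (@sha256:...)")
    return problems


if __name__ == "__main__":
    allowed = "myregistry.azurecr.io"  # hypothetical ACR login server
    for ref in ["nginx:latest",
                "myregistry.azurecr.io/web@sha256:" + "0" * 64]:
        print(ref, "->", image_policy_problems(ref, allowed) or "ok")
```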

Securing the container images themselves is another critical aspect. Start from a minimal base image and leave out unnecessary packages and dependencies to reduce the attack surface. Update base images and dependencies regularly to patch known vulnerabilities, and automate image scanning so that every image deployed to ACI has been checked. Apply the principle of least privilege: run container processes as a non-root user to limit the impact of a breach. For ongoing monitoring and threat detection, use Microsoft Defender for Cloud (formerly Azure Security Center and Azure Defender), which provides insight into the security posture of your container deployments and helps you identify and respond to threats. Combining network controls, registry security, image hardening, and continuous monitoring keeps your ACI deployments secure.
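
Running as a non-root user can be checked before an image is ever built, along with a related check not mentioned above: pinning the base image tag so that base-image updates are deliberate. The following is a deliberately simple Python lint, not a replacement for a real scanner. It reads Dockerfile text, looks only at the final build stage, and ignores line continuations. It cannot see a non-root `USER` that the base image itself sets, so it may report false positives for such images.

```python
from typing import List, Optional


def dockerfile_findings(text: str) -> List[str]:
    """Flag a final stage that runs as root or an unpinned base image."""
    base_image: Optional[str] = None
    user: Optional[str] = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, args = line.partition(" ")
        instruction = instruction.upper()
        if instruction == "FROM":
            # Skip flags such as --platform=...; keep the image, drop "AS name".
            tokens = [t for t in args.split() if not t.startswith("--")]
            base_image = tokens[0] if tokens else ""
            user = None  # a new stage does not inherit the previous stage's USER
        elif instruction == "USER":
            user = args.strip()

    if base_image is None:
        return ["no FROM instruction found"]
    findings = []
    if user is None or user.split(":")[0] in ("root", "0"):
        findings.append("final stage runs as root (add a non-root USER)")
    if base_image != "scratch" and "@" not in base_image:
        # The tag follows the last colon in the final path component.
        tag = base_image.rsplit("/", 1)[-1].partition(":")[2]
        if tag in ("", "latest"):
            findings.append(f"base image {base_image!r} has no pinned tag")
    return findings


if __name__ == "__main__":
    example = """\
FROM python:3.12-slim
RUN useradd --create-home app
USER app
CMD ["python", "app.py"]
"""
    print(dockerfile_findings(example) or "no findings")
```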

Troubleshooting Common Issues with Azure Container Instances

Encountering issues is a normal part of developing and deploying with Azure Container Instances. This section offers guidance on diagnosing and resolving common problems so that your deployments keep running smoothly. Debugging usually starts with the container logs. The Azure portal lets you stream logs directly from your container, giving real-time insight into application behavior. Use the command `az container logs --name
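
For scripted troubleshooting, logs can also be fetched from the CLI. This sketch wraps `az container logs` with its `--resource-group` and `--name` parameters, plus `--container-name` to choose a container in a multi-container group. The group and container names are hypothetical, and the CLI must be installed and signed in.

```python
import subprocess
from typing import List, Optional


def logs_cmd(group: str, name: str, container: Optional[str] = None) -> List[str]:
    """Arguments for `az container logs`."""
    cmd = ["az", "container", "logs", "--resource-group", group, "--name", name]
    if container:
        # Selects which container's logs to read in a multi-container group.
        cmd += ["--container-name", container]
    return cmd


def fetch_logs(group: str, name: str, container: Optional[str] = None) -> str:
    """Run the command and return its output; raises CalledProcessError on failure."""
    result = subprocess.run(logs_cmd(group, name, container),
                            capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    try:
        print(fetch_logs("myResourceGroup", "web-app-group", "frontend"))
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        # FileNotFoundError: az is not installed; CalledProcessError: the CLI reported an error.
        print("could not fetch logs:", exc)
```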

Network connectivity issues can also disrupt ACI workloads. Verify that your container instances can reach external resources and other Azure services, and make sure network security groups (NSGs) and user-defined routes (UDRs) allow the necessary traffic. If the container image includes them, tools such as `ping` or `traceroute` can test connectivity from inside the container (for example, through `az container exec`). If you use a virtual network, confirm that the container group is in the correct subnet and that DNS resolution works as expected. Azure Network Watcher can also help diagnose connectivity problems.

Resource constraints are another common cause of trouble. ACI lets you specify CPU and memory for each container; if your application needs more than it was given, it may slow down or crash (for example, when it runs out of memory). Monitor resource utilization with Azure Monitor and adjust the CPU and memory allocations so that your application has enough headroom. Because over-provisioning increases costs, aim for the right balance.
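
When a container group runs in a virtual network, part of the placement check is simple address arithmetic. This sketch uses Python's standard `ipaddress` module to confirm that the group's private IP (for example, as reported by `az container show --query ipAddress.ip`) falls inside the intended subnet, that the subnet lies within the VNet's address space, and that the addresses the application must reach are inside that space too. All addresses are hypothetical, and a VNet with several address spaces would need a list instead of the single range assumed here.

```python
import ipaddress
from typing import Iterable, List


def placement_problems(container_ip: str, aci_subnet: str, vnet_space: str,
                       targets: Iterable[str] = ()) -> List[str]:
    """Address-level sanity checks for a VNet-deployed container group."""
    ip = ipaddress.ip_address(container_ip)
    subnet = ipaddress.ip_network(aci_subnet)
    space = ipaddress.ip_network(vnet_space)
    problems = []
    if not subnet.subnet_of(space):
        problems.append(f"subnet {subnet} is outside VNet space {space}")
    if ip not in subnet:
        problems.append(f"container IP {ip} is not in subnet {subnet}")
    for target in targets:
        if ipaddress.ip_address(target) not in space:
            # Reachable only through peering, a gateway, or other routing.
            problems.append(f"target {target} is outside VNet space {space}")
    return problems


if __name__ == "__main__":
    print(placement_problems("10.0.1.4", "10.0.1.0/24", "10.0.0.0/16",
                             targets=["10.0.2.4", "192.168.5.10"]))
    # ['target 192.168.5.10 is outside VNet space 10.0.0.0/16']
```

An empty list only means the addresses are consistent; NSG rules, routes, and DNS still need to be checked as described above.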

Problems pulling the image can also prevent a container group from starting. Double-check the image name and tag. For a private registry, make sure the correct credentials are supplied; Microsoft Entra ID (formerly Azure Active Directory) integration, such as a service principal or managed identity, can simplify authentication. For persistent problems, consider recreating the container group or redeploying your application, and check the Azure activity log for errors or warnings that add context. The Azure documentation and community forums cover many common issues. Working through these potential problems systematically, together with continuous monitoring and proactive debugging, keeps your ACI deployments healthy and performing well.
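
A frequent cause of pull failures is an image reference that lacks the private registry's login server, or missing registry credentials. The sketch below checks the prefix and then passes credentials through `az container create`'s `--registry-login-server`, `--registry-username`, and `--registry-password` options, reading them from environment variables; the variable names, registry, and image are hypothetical. Passing a password on a command line can expose it to other processes on the same machine, so managed-identity-based pulls are worth considering where your CLI version supports them.

```python
import os
from typing import List


def private_image_create_cmd(group: str, name: str, image: str,
                             login_server: str,
                             user_env: str = "ACR_USERNAME",
                             password_env: str = "ACR_PASSWORD") -> List[str]:
    """`az container create` arguments for an image in a private registry."""
    # The registry is resolved from the image name, so a reference without
    # the login-server prefix would be looked up elsewhere (e.g. Docker Hub).
    if not image.lower().startswith(login_server.lower() + "/"):
        raise ValueError(f"image {image!r} does not start with {login_server}/")
    username = os.environ[user_env]      # e.g. a service principal's app ID
    password = os.environ[password_env]  # raises KeyError if unset
    return [
        "az", "container", "create",
        "--resource-group", group,
        "--name", name,
        "--image", image,
        "--os-type", "Linux",
        "--registry-login-server", login_server,
        "--registry-username", username,
        "--registry-password", password,
    ]


if __name__ == "__main__":
    try:
        cmd = private_image_create_cmd("myResourceGroup", "web",
                                       "myregistry.azurecr.io/web:1.0",
                                       "myregistry.azurecr.io")
        print("ready to run:", " ".join(cmd[:9]), "...")  # credentials omitted
    except (KeyError, ValueError) as exc:
        print("not ready:", exc)
```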

Real-World Use Cases for Serverless Containers

Azure Container Instances (ACI) offer a compelling solution for various real-world scenarios, providing a serverless container execution environment that simplifies deployment and management. Organizations across diverse industries are leveraging ACI to address specific needs, benefiting from its agility and cost-effectiveness.

One prominent use case is batch processing. Consider a financial institution that needs to process a large volume of transactions overnight. Instead of maintaining dedicated servers, it can use ACI to start containers on demand, process the transactions, and stop when the work is done, paying only for the actual processing time. Task automation is another fit. A marketing agency, for example, could automate image resizing and optimization: when a new campaign needs images processed, ACI launches containers to handle the workload, and they are terminated once the job completes.

Event-driven applications also map naturally onto ACI. Imagine an e-commerce platform that receives image uploads from users. Each upload event can trigger ACI containers that process the images, generate thumbnails, and store them in Azure Storage. No servers run continuously; the number of containers launched tracks the volume of uploads. ACI is also useful for running microservices that do not need the full orchestration capabilities of Azure Kubernetes Service (AKS). Development teams can deploy individual microservices as ACI containers, simplifying deployments and reducing operational overhead. In all of these examples, rapid provisioning and per-second billing make Azure Container Instances a practical choice for workloads with variable demand.
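
For run-to-completion jobs like the overnight batch example, the key setting is the container group's restart policy. The default, `Always`, restarts a container after it exits; with `Never` (or `OnFailure`, which restarts only after a non-zero exit) the group stops once the work finishes instead of being left running, so per-second billing reflects the job's actual duration. The sketch builds such a command; the job image, command line, and `BATCH_DATE` variable are hypothetical.

```python
import shlex
from typing import Dict, List, Optional

RESTART_POLICIES = {"Always", "Never", "OnFailure"}


def batch_job_cmd(group: str, name: str, image: str, command_line: str,
                  env: Optional[Dict[str, str]] = None,
                  restart_policy: str = "Never",
                  cpu: float = 1.0, memory_gb: float = 1.5) -> List[str]:
    """`az container create` arguments for a run-to-completion job."""
    if restart_policy not in RESTART_POLICIES:
        raise ValueError(f"restart_policy must be one of {sorted(RESTART_POLICIES)}")
    cmd = [
        "az", "container", "create",
        "--resource-group", group,
        "--name", name,
        "--image", image,
        "--os-type", "Linux",
        "--cpu", str(cpu),
        "--memory", str(memory_gb),
        # "Always" (the default) would restart the job after it exits.
        "--restart-policy", restart_policy,
        # Replaces the command the image would otherwise run at startup.
        "--command-line", command_line,
    ]
    if env:
        cmd += ["--environment-variables", *(f"{k}={v}" for k, v in env.items())]
    return cmd


if __name__ == "__main__":
    cmd = batch_job_cmd("myResourceGroup", "nightly-transactions",
                        "myregistry.azurecr.io/txn-batch:1.0",
                        "python process.py",
                        env={"BATCH_DATE": "2024-01-31"})
    print(shlex.join(cmd))
```

After the job finishes, its logs remain available until the container group is deleted (for example, with `az container delete`), which makes it easy to check results before cleaning up.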

Beyond these examples, Azure Container Instances is also used for scheduled tasks, CI/CD pipelines, and proof-of-concept applications. Its ease of deployment and management, combined with its cost benefits, makes it attractive to organizations that want to adopt containers without managing the underlying infrastructure. By abstracting away server management, ACI lets developers focus on building and shipping applications, helping organizations streamline operations and shorten time to market across a wide range of cloud workloads.