Why Choose Azure Kubernetes Service for Your Container Orchestration?

Azure Kubernetes Service (AKS) is a managed Kubernetes offering for organizations that need container orchestration without operating the control plane themselves. Because Azure runs the control plane, AKS carries less operational overhead than a self-managed cluster, and teams can focus on application development and deployment. The service supports high availability and scaling so that applications stay responsive under fluctuating demand. Azure’s security features integrate with AKS, and the platform provides tools and interfaces for cluster configuration and monitoring. Many companies use AKS to streamline containerized workflows and improve resource utilization. Its ease of use and broad feature set make it a reasonable choice for businesses of all sizes.

Compared to other cloud providers’ Kubernetes offerings, AKS stands out for its integration with the broader Azure ecosystem. Azure Active Directory handles authentication, Azure Monitor provides monitoring and logging, and Azure DevOps supports CI/CD pipelines for automated deployments. Using these services together reduces the need for custom integrations and can shorten deployment cycles. Cost is another advantage: Azure’s flexible pricing models let organizations match spending to their needs, which suits businesses with varying resource demands. Because AKS is a managed service, less dedicated staff time goes to the underlying infrastructure.

Companies across various sectors, from startups to large enterprises, use AKS to deploy and manage mission-critical applications. These deployments highlight the platform’s scalability, resilience, and ease of use. By selecting AKS, organizations gain a managed, secure, and scalable platform for container orchestration that reduces operational complexity, and the integration with the broader Azure ecosystem adds to that value.

Setting Up Your First Azure Kubernetes Cluster: A Step-by-Step Guide

Creating your first Azure Kubernetes Service (AKS) cluster is straightforward. Log in to the Azure portal, navigate to the Kubernetes services section, and select “Create” to start the cluster creation process. You’ll need to provide a resource group, a cluster name that is unique within that resource group, and a region chosen for performance and latency. AKS offers several Kubernetes versions; select one that matches your application’s requirements and compatibility. Consider how long each version will be supported and updated, since version selection affects the ongoing maintenance of your AKS cluster.

Next, configure your node pools, which define the worker nodes in your AKS cluster. Specify the number of nodes you require and select a virtual machine (VM) size based on your application’s resource needs. Azure offers a wide range of VM sizes, each with different CPU, memory, and storage capabilities. Consider using different node pools for different workloads: for example, one node pool for CPU-intensive tasks and another for memory-intensive tasks. Then define your networking options. AKS integrates with Azure Virtual Networks, so you can connect your cluster to an existing virtual network or create a new one. Selecting the appropriate subnet and configuring network policies helps secure communication both within the cluster and between the cluster and other Azure resources.
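
The same choices can also be scripted with the Azure CLI instead of the portal. The following is a minimal sketch in Python that only assembles the `az aks create` and `az aks nodepool add` argument lists; the resource group, cluster, pool names, region, and VM sizes are hypothetical placeholders, and VM size availability depends on your region and subscription.

```python
import shlex


def aks_create_command(resource_group, cluster, location, node_count, vm_size,
                       k8s_version=None):
    """Return the argument list for creating a cluster with one initial node pool."""
    cmd = [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster,
        "--location", location,
        "--node-count", str(node_count),
        "--node-vm-size", vm_size,
        "--generate-ssh-keys",
    ]
    if k8s_version:
        # Omit this flag to let AKS pick its default version.
        cmd += ["--kubernetes-version", k8s_version]
    return cmd


def nodepool_add_command(resource_group, cluster, pool, node_count, vm_size):
    """Return the argument list for adding a second node pool to a cluster."""
    return [
        "az", "aks", "nodepool", "add",
        "--resource-group", resource_group,
        "--cluster-name", cluster,
        "--name", pool,
        "--node-count", str(node_count),
        "--node-vm-size", vm_size,
    ]


# Hypothetical names and sizes, for illustration only.
create_cmd = aks_create_command("demo-rg", "demo-aks", "westeurope", 3, "Standard_DS2_v2")
mem_pool_cmd = nodepool_add_command("demo-rg", "demo-aks", "mempool", 2, "Standard_E4s_v3")

print(shlex.join(create_cmd))
print(shlex.join(mem_pool_cmd))
```

The printed lines can be pasted into a shell, or the lists can be passed to `subprocess.run` once the Azure CLI is installed and logged in.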

Once you’ve defined these parameters, Azure handles the provisioning and setup of your AKS cluster. This process typically takes a few minutes, depending on the cluster size and resource availability. After the deployment completes, retrieve the cluster’s Kubernetes configuration file (kubeconfig), for example with the Azure CLI command `az aks get-credentials`. This file lets you connect to the cluster with the kubectl command-line tool so that you can manage and interact with it. Store the kubeconfig securely and follow best practices for access control, because it grants access to the cluster. With these steps complete, you have a functional AKS cluster ready for application deployments.
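
As a rough illustration of the connection step, the sketch below fetches credentials and then lists the nodes. It assumes the Azure CLI and kubectl are installed; the resource group and cluster names are hypothetical. The `run` parameter is injected so the example can be exercised as a dry run without touching a real cluster.

```python
import subprocess


def connect_and_list_nodes(resource_group, cluster, run=subprocess.run):
    """Merge the cluster credentials into the local kubeconfig, then list nodes."""
    commands = [
        ["az", "aks", "get-credentials",
         "--resource-group", resource_group, "--name", cluster],
        ["kubectl", "get", "nodes", "-o", "wide"],
    ]
    for cmd in commands:
        # check=True makes a failing command raise instead of being ignored.
        run(cmd, check=True)
    return commands


# Dry run: record the commands instead of executing them.
recorded = []
connect_and_list_nodes("demo-rg", "demo-aks",
                       run=lambda cmd, check: recorded.append(cmd))
for cmd in recorded:
    print(" ".join(cmd))
```

Calling `connect_and_list_nodes("demo-rg", "demo-aks")` without the `run` argument executes the commands for real.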

Deploying Your Applications to Azure AKS: Containerization Best Practices

Before deploying applications to Azure AKS, proper containerization is crucial. This involves creating efficient and secure Docker images. Well-crafted Dockerfiles minimize image size, improving deployment speed and resource utilization within the Azure AKS environment. Consider using multi-stage builds to separate the build process from the runtime environment, further reducing image size and enhancing security. Image optimization techniques, such as using smaller base images and removing unnecessary files, are also essential for efficient deployments in Azure AKS. Regularly scan images for vulnerabilities to maintain a secure Azure AKS cluster.

Helm, a package manager for Kubernetes, simplifies application deployment and management within Azure AKS. Helm charts define, install, and upgrade applications, streamlining the process. Using Helm charts promotes consistency and reproducibility across deployments. This is especially beneficial in managing complex applications with multiple components. Azure AKS integrates seamlessly with Helm, making it a powerful tool for deploying and managing applications in your Azure AKS environment. Leveraging Helm’s features enhances the overall workflow and efficiency of managing applications on Azure AKS.
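
A typical Helm workflow uses `helm upgrade --install`, which installs the release if it does not exist and upgrades it otherwise. The sketch below only builds that command; the release name, chart path, namespace, and value keys are hypothetical and depend on the chart you use.

```python
import shlex


def helm_upgrade_command(release, chart, namespace, values=None):
    """Return an idempotent install-or-upgrade command for a Helm release."""
    cmd = [
        "helm", "upgrade", "--install", release, chart,
        "--namespace", namespace,
        "--create-namespace",
    ]
    # Sort overrides so the generated command is reproducible.
    for key, value in sorted((values or {}).items()):
        cmd += ["--set", f"{key}={value}"]
    return cmd


# Hypothetical chart and values, for illustration only.
helm_cmd = helm_upgrade_command(
    "web", "./charts/web", "demo",
    {"replicaCount": 3, "image.tag": "1.0.0"},
)
print(shlex.join(helm_cmd))
```

Running the same command again with a new `image.tag` rolls the release forward, which is what makes the approach reproducible across environments.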

Pushing container images to a registry, such as Azure Container Registry (ACR), is a vital step. ACR provides a secure and scalable private registry for storing and managing your container images. Integrating ACR with Azure AKS simplifies the deployment pipeline. Azure AKS can pull images directly from ACR, minimizing latency and ensuring secure access to your container images. This tightly integrated approach between ACR and Azure AKS optimizes the deployment process, enhancing efficiency and security within your Azure AKS infrastructure. Using automated build processes further streamlines deployments.
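
One possible way to wire ACR and AKS together from a script is sketched below. It builds the image inside ACR with `az acr build` and grants the cluster pull access with `az aks update --attach-acr`. The registry, repository, and cluster names are hypothetical placeholders.

```python
import shlex


def acr_image_ref(registry, repository, tag):
    """Return the fully qualified image reference used in Kubernetes manifests."""
    return f"{registry}.azurecr.io/{repository}:{tag}"


def acr_build_command(registry, repository, tag, context="."):
    """Build and push the image inside ACR from a local build context."""
    return ["az", "acr", "build", "--registry", registry,
            "--image", f"{repository}:{tag}", context]


def attach_acr_command(resource_group, cluster, registry):
    """Give the AKS cluster permission to pull images from the registry."""
    return ["az", "aks", "update", "--resource-group", resource_group,
            "--name", cluster, "--attach-acr", registry]


# Hypothetical names, for illustration only.
image = acr_image_ref("demoregistry", "web", "1.0.0")
build_cmd = acr_build_command("demoregistry", "web", "1.0.0")
attach_cmd = attach_acr_command("demo-rg", "demo-aks", "demoregistry")

print(image)
print(shlex.join(build_cmd))
print(shlex.join(attach_cmd))
```

The value of `image` is what a Deployment’s container spec would reference once the build has completed.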

Scaling and Managing Your Azure AKS Cluster: Ensuring High Availability and Performance

Scaling AKS clusters efficiently is crucial for handling fluctuating workloads. Horizontal scaling adds more nodes to a node pool, increasing the capacity available for scheduling pods. Vertical scaling means giving nodes more CPU and memory; in AKS this is typically done by adding a node pool with a larger VM size and moving workloads to it, rather than resizing the nodes of an existing pool in place. You can scale your cluster using the Azure portal, the Azure CLI, or Azure Resource Manager templates. The cluster autoscaler adjusts the number of nodes automatically, adding nodes when pods cannot be scheduled for lack of resources and removing nodes that are underutilized. This dynamic scaling lets AKS adapt to varying demand, which helps prevent bottlenecks while keeping costs in check.
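
Manual scaling and autoscaling can both be driven from the Azure CLI. The sketch below assembles the `az aks nodepool scale` and `az aks nodepool update --enable-cluster-autoscaler` commands; the resource names and node counts are hypothetical, and the range check is a local sanity check added for this example rather than something the CLI requires you to write.

```python
import shlex


def scale_nodepool_command(resource_group, cluster, pool, node_count):
    """Set a node pool to a fixed number of nodes."""
    return ["az", "aks", "nodepool", "scale",
            "--resource-group", resource_group,
            "--cluster-name", cluster,
            "--name", pool,
            "--node-count", str(node_count)]


def enable_autoscaler_command(resource_group, cluster, pool, min_count, max_count):
    """Let the cluster autoscaler keep the pool between min_count and max_count."""
    if min_count < 0 or min_count > max_count:
        raise ValueError("expected 0 <= min_count <= max_count")
    return ["az", "aks", "nodepool", "update",
            "--resource-group", resource_group,
            "--cluster-name", cluster,
            "--name", pool,
            "--enable-cluster-autoscaler",
            "--min-count", str(min_count),
            "--max-count", str(max_count)]


# Hypothetical names and counts, for illustration only.
scale_cmd = scale_nodepool_command("demo-rg", "demo-aks", "nodepool1", 5)
autoscale_cmd = enable_autoscaler_command("demo-rg", "demo-aks", "nodepool1", 2, 6)

print(shlex.join(scale_cmd))
print(shlex.join(autoscale_cmd))
```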

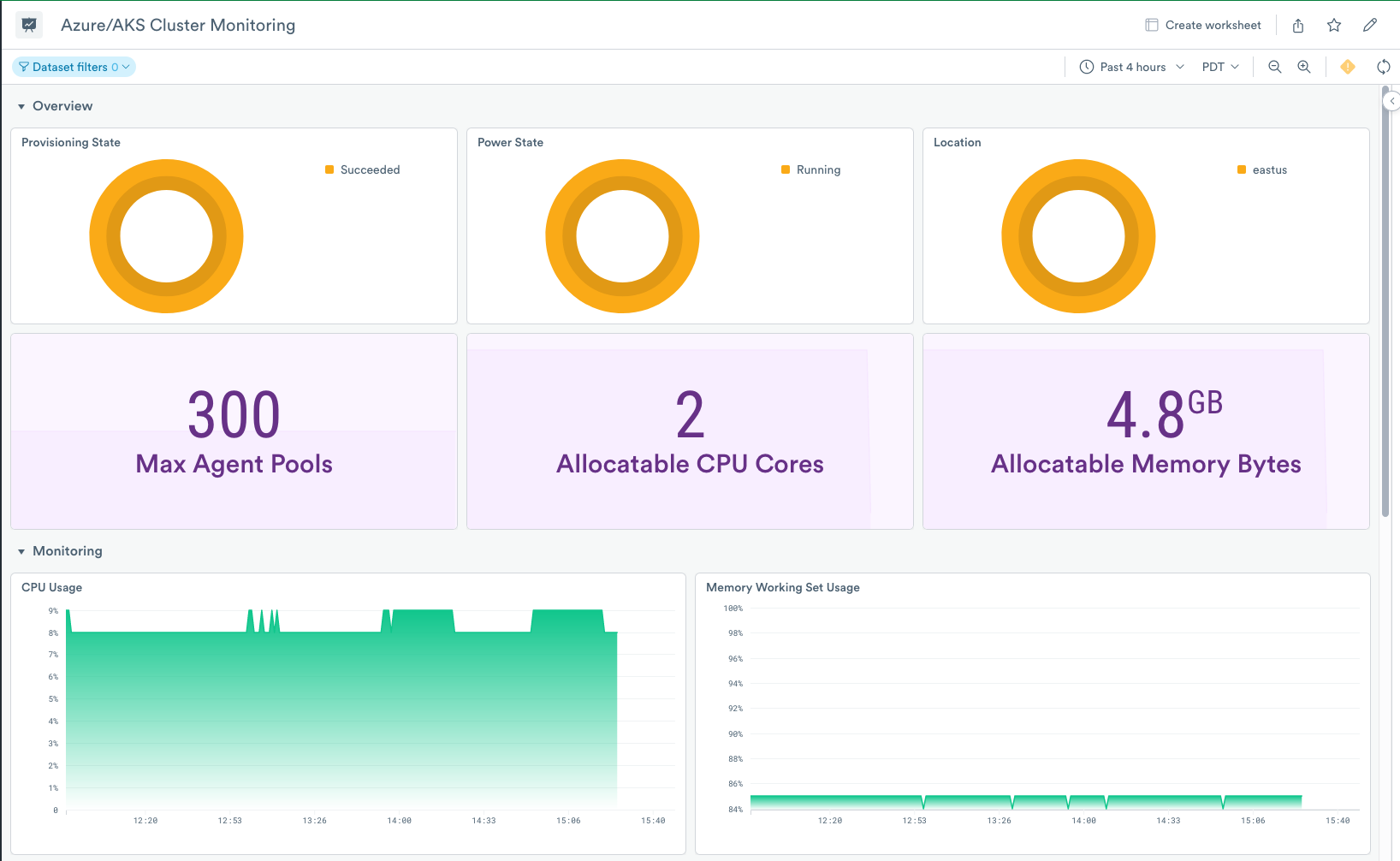

Monitoring cluster health and performance is equally vital for a successful Azure AKS deployment. Azure Monitor provides comprehensive monitoring capabilities, offering real-time insights into resource utilization, pod status, and application performance. It provides alerts for potential issues, allowing for proactive intervention. Understanding key metrics like CPU usage, memory consumption, and network latency enables informed decisions regarding scaling and resource allocation. By actively monitoring the health of your Azure AKS cluster, you can anticipate and address issues before they impact your applications. Proactive monitoring is key to ensuring the stability and reliability of your deployments. Using Azure Monitor alongside built-in Kubernetes metrics provides a holistic view of your cluster’s health.
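
For a quick check alongside Azure Monitor, the built-in Kubernetes metrics can be read with `kubectl top nodes`. The sketch below parses text in the five-column layout produced by `kubectl top nodes --no-headers` and flags busy nodes. The sample text and node names are made up for illustration, and the 80 percent threshold is an arbitrary assumption.

```python
def parse_top_nodes(text):
    """Parse `kubectl top nodes --no-headers` style lines into dictionaries."""
    nodes = []
    for line in text.strip().splitlines():
        fields = line.split()
        # Expected columns: NAME, CPU(cores), CPU%, MEMORY(bytes), MEMORY%.
        if len(fields) != 5 or not (fields[2].endswith("%") and fields[4].endswith("%")):
            continue  # skip nodes without metrics or unexpected layouts
        nodes.append({
            "name": fields[0],
            "cpu_percent": int(fields[2].rstrip("%")),
            "memory_percent": int(fields[4].rstrip("%")),
        })
    return nodes


def hot_nodes(nodes, threshold=80):
    """Return names of nodes whose CPU or memory usage reaches the threshold."""
    return [n["name"] for n in nodes
            if max(n["cpu_percent"], n["memory_percent"]) >= threshold]


# Made-up sample in the layout of `kubectl top nodes --no-headers`.
SAMPLE = """
node-a   250m    12%   2100Mi   45%
node-b   1700m   85%   5200Mi   91%
"""

parsed = parse_top_nodes(SAMPLE)
print(hot_nodes(parsed))
```

A check like this is a convenience for ad hoc inspection; alert rules in Azure Monitor remain the better place for ongoing thresholds.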

High availability in Azure AKS is achieved through several strategies. Redundancy across nodes and availability zones ensures that your applications remain accessible even if individual nodes fail. Pod disruption budgets control the number of pods that can be simultaneously terminated during updates or maintenance, minimizing disruption. Implementing robust health checks and using readily available tools for automated rollouts and rollbacks ensure smooth and seamless updates. These strategies help maintain consistent application uptime, even during unexpected events. Azure AKS provides built-in support for these high-availability features, simplifying their implementation and enhancing resilience for your applications. The combination of redundancy, proactive monitoring, and intelligent scaling makes Azure AKS a highly reliable platform for containerized workloads.
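
A pod disruption budget is a small Kubernetes object. The sketch below builds one as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts just like YAML. The object name, namespace, label, and replica count are hypothetical.

```python
import json


def pod_disruption_budget(name, namespace, app_label, min_available):
    """Build a policy/v1 PodDisruptionBudget for pods labelled app=<app_label>."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Voluntary disruptions (drains, upgrades) must leave this many pods running.
            "minAvailable": min_available,
            "selector": {"matchLabels": {"app": app_label}},
        },
    }


# Hypothetical workload, for illustration only.
pdb = pod_disruption_budget("web-pdb", "demo", "web", 2)
print(json.dumps(pdb, indent=2))
```

With this budget in place, a node drain during an upgrade waits rather than evicting a `web` pod when doing so would leave fewer than two running.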

Securing Your Azure AKS Cluster: Implementing Robust Security Measures

Securing an AKS cluster requires a multi-layered approach. Network security forms the first line of defense. Azure Virtual Networks provide isolation and control over network traffic reaching your AKS cluster. Network policies, implemented using Kubernetes NetworkPolicy objects, refine access control within the cluster itself by giving granular control over communication between pods; they are only enforced when a network policy engine is enabled on the cluster. This limits the blast radius of a potential breach. Azure Firewall and network security groups add filtering at the network perimeter. Configuring these network security features properly is paramount for protecting your AKS deployment.
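
A common starting point for pod-level network control is a default-deny policy plus explicit allow rules. The sketch below builds both as Python dictionaries and prints them as a JSON list that `kubectl apply -f -` can consume. The namespace, labels, and port are hypothetical, and the policies only take effect on clusters with a network policy engine enabled.

```python
import json


def default_deny_ingress(namespace):
    """Deny all ingress traffic to every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector matches all pods in the namespace.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress(namespace, target_app, source_app, port):
    """Allow pods labelled app=<source_app> to reach app=<target_app> on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


# Hypothetical namespace and labels, for illustration only.
policies = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [default_deny_ingress("demo"),
              allow_ingress("demo", "backend", "frontend", 8080)],
}
print(json.dumps(policies, indent=2))
```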

Identity and Access Management (IAM) in Azure provides fine-grained control over access to your AKS cluster resources. Using role-based access control (RBAC), administrators can assign specific permissions to users and service principals, so that only authorized identities can perform actions like deploying applications or managing cluster configuration. Adhere strictly to the principle of least privilege, which minimizes the potential impact of compromised credentials. Regularly auditing IAM roles and permissions helps maintain a secure posture and prevents unauthorized access to sensitive data and resources within your AKS environment.
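
Inside the cluster, least privilege is usually expressed with Kubernetes Role and RoleBinding objects. The sketch below grants a group permission to manage Deployments in a single namespace and nothing else. The namespace and role name are hypothetical, and the all-zero group ID is a placeholder for the object ID of a real directory group.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def deployer_role(namespace, name="deployer"):
    """Role that can manage Deployments in one namespace only."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [{
            "apiGroups": ["apps"],
            "resources": ["deployments"],
            "verbs": ["get", "list", "watch", "create", "update", "patch"],
        }],
    }


def bind_role_to_group(namespace, role_name, group_id):
    """Bind the Role to a group identified by its directory object ID."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-binding", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": group_id, "apiGroup": RBAC_API_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_API_GROUP},
    }


# Placeholder group ID; replace with a real group object ID.
role = deployer_role("demo")
binding = bind_role_to_group("demo", "deployer", "00000000-0000-0000-0000-000000000000")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [role, binding]}, indent=2))
```

Note that the role deliberately omits the `delete` verb and access to Secrets, so a compromised credential in this group has limited reach.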

Container image security is another crucial aspect of securing your AKS deployments. Regularly scanning container images for vulnerabilities is essential. Images stored in Azure Container Registry (ACR) can be scanned through its integration with Microsoft Defender for Cloud, which allows early detection and remediation of known security flaws. A CI/CD pipeline that incorporates automated image scanning helps ensure that only vetted images are deployed to your AKS cluster. Techniques like multi-stage Docker builds reduce the attack surface of your container images. Integrating with security information and event management (SIEM) systems centralizes monitoring and alerts, enabling timely responses to security incidents. Security is an ongoing process that requires continuous monitoring and adaptation.

Integrating Azure AKS with Other Azure Services: Streamlining Your Workflow

Azure Kubernetes Service (AKS) integrates with other Azure services, which streamlines development and operational workflows. Azure Active Directory (Azure AD, since renamed Microsoft Entra ID) provides authentication and authorization for access to your AKS cluster. This integration simplifies user management and aligns with existing enterprise security policies. By using Azure AD, organizations can centralize identity management across their Azure deployments, including their AKS clusters.

Azure Monitor offers monitoring and logging capabilities for your AKS clusters. It provides real-time insights into cluster health, resource utilization, and application performance, which helps you identify and resolve issues proactively and improves application uptime and stability. Azure Monitor integrates with AKS and provides dashboards and alerts tailored to your Kubernetes environment.

Azure DevOps provides a platform for CI/CD pipelines. Integrating Azure DevOps with AKS allows for automated build, testing, and deployment of containerized applications, which improves the speed and reliability of software releases. With automated deployment through Azure DevOps, updates to your AKS clusters become faster and less error-prone. This integration also supports a DevOps culture, fostering collaboration and faster innovation cycles.

Advanced AKS Concepts: Exploring Networking, Storage, and More

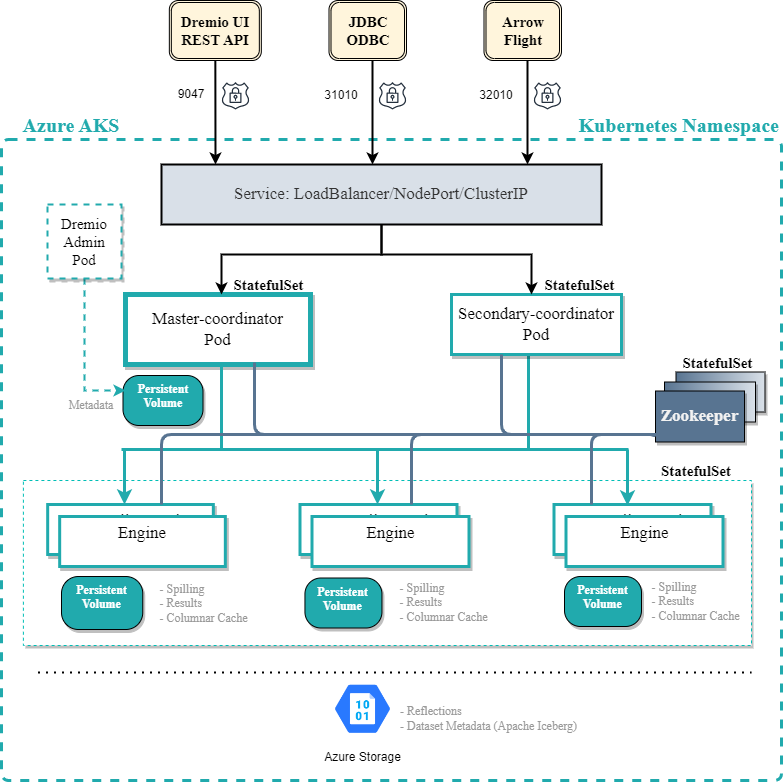

AKS offers networking capabilities beyond basic cluster setup. Ingress controllers, such as NGINX or Traefik, provide external access to applications running within the cluster. These controllers route incoming HTTP and HTTPS traffic to the appropriate services based on host and path rules. Service meshes, like Istio or Linkerd, help manage inter-service communication, enhance security, and improve observability within a complex application architecture. AKS works well with these tools. Properly configuring networking is vital for application performance and security in AKS.
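
Routing rules for an ingress controller are declared with an Ingress object. The sketch below builds one as a Python dictionary and prints it as JSON for `kubectl apply -f -`. The host name, service name, and port are hypothetical, and the `nginx` ingress class assumes an NGINX ingress controller is already installed in the cluster under that class name.

```python
import json


def http_ingress(name, namespace, host, service, port, ingress_class="nginx"):
    """Route all HTTP paths for one host to a single backend Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


# Hypothetical host and service, for illustration only.
ingress = http_ingress("web", "demo", "web.example.com", "web-svc", 80)
print(json.dumps(ingress, indent=2))
```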

Persistent storage is essential for applications requiring data persistence beyond the lifespan of a pod. Azure offers several options, including Azure Files, Azure Disks, and managed databases. Choosing the right storage solution depends on application requirements and performance needs. Azure Files provides network file shares accessible by pods, suitable for applications requiring shared storage. Azure Disks provide block storage, offering higher performance for applications demanding low latency. Integrating these storage solutions with Azure AKS requires careful configuration of persistent volumes and claims. Effective storage management enhances data reliability and application availability within your Azure AKS environment.
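
The choice between Azure Disks and Azure Files shows up in a persistent volume claim mainly as the storage class and access mode. The sketch below assumes the built-in AKS storage classes `managed-csi` (Azure Disks) and `azurefile-csi` (Azure Files) are present in the cluster; the claim names, namespace, and sizes are hypothetical.

```python
import json


def persistent_volume_claim(name, namespace, size, shared=False):
    """Build a PVC backed by Azure Files when shared, otherwise by Azure Disks."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Disks attach to one node at a time; file shares can be mounted by many pods.
            "accessModes": ["ReadWriteMany" if shared else "ReadWriteOnce"],
            "storageClassName": "azurefile-csi" if shared else "managed-csi",
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical claims, for illustration only.
db_claim = persistent_volume_claim("db-data", "demo", "32Gi")
shared_claim = persistent_volume_claim("shared-assets", "demo", "100Gi", shared=True)
print(json.dumps([db_claim, shared_claim], indent=2))
```

A pod then references the claim by name in its `volumes` section, and AKS provisions the underlying disk or file share on demand.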

Securely managing secrets within an AKS cluster is paramount, and storing sensitive information directly in deployments is risky. AKS integrates with Azure Key Vault, a managed secret store, through the Azure Key Vault provider for the Secrets Store CSI Driver. With this integration, applications can read secrets such as API keys and database credentials from Key Vault without those values appearing in the cluster configuration, which improves the security posture of AKS deployments.

Troubleshooting Common Azure AKS Issues: A Practical Guide to Problem Solving

Troubleshooting AKS deployments often involves understanding the interplay between Kubernetes and the Azure platform. Common issues include connectivity problems, resource limitations, and deployment failures. When you encounter a problem, first check the cluster’s overall health in the Azure portal, which provides a high-level overview of node status, pod status, and resource utilization. Low resource availability, such as insufficient CPU or memory, often shows up as pod failures or slow application performance. Azure Monitor provides detailed logs and metrics for identifying resource bottlenecks. Investigate the resource requests and limits defined in your deployments: limits set too low can cause CPU throttling or out-of-memory terminations, so adjust these values to match application requirements. Network connectivity issues are another frequent problem. Verify network policies, network security groups, and virtual network configurations. Use kubectl commands like `kubectl get pods -n

Deployment failures in Azure AKS are often due to image pull failures, insufficient permissions, or misconfigurations in deployment files. Always ensure that your container images are correctly built and pushed to a container registry accessible by the AKS cluster. You can check image pull status within the Azure portal, scrutinizing logs for any potential errors. Check the deployment YAML or Helm chart files to make sure all configurations are accurate. Incorrect configurations are a common cause of deployment errors. Use kubectl describe commands to gain detailed information about specific resources such as deployments, pods, or services. These commands provide richer insights for debugging than a simple `get` command. Properly configured logging and monitoring in Azure AKS are essential for effective troubleshooting. Azure Monitor integrates seamlessly with AKS, offering comprehensive insights into cluster health, resource usage, and application performance. Use the built-in monitoring features to proactively identify and address potential issues. Familiarize yourself with common Azure AKS error messages and their solutions. Extensive online documentation provides many solutions to commonly encountered issues within the Azure AKS environment.
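
When many pods are involved, it can help to filter the JSON output of `kubectl get pods -o json` for containers stuck in a waiting state. The sketch below does this on a trimmed, made-up sample that follows the shape of that output; the pod and container names are hypothetical.

```python
IMAGE_PULL_REASONS = {"ErrImagePull", "ImagePullBackOff"}


def waiting_containers(pod_list):
    """Return (pod, container, reason) for every container in a waiting state."""
    findings = []
    for pod in pod_list.get("items", []):
        for status in pod.get("status", {}).get("containerStatuses", []):
            waiting = status.get("state", {}).get("waiting")
            if waiting:
                findings.append((pod["metadata"]["name"], status["name"],
                                 waiting.get("reason", "Unknown")))
    return findings


def image_pull_failures(findings):
    """Keep only the findings caused by image pull problems."""
    return [f for f in findings if f[2] in IMAGE_PULL_REASONS]


# Trimmed, made-up sample in the shape of `kubectl get pods -o json`.
SAMPLE_PODS = {"items": [
    {"metadata": {"name": "web-1"},
     "status": {"containerStatuses": [{"name": "web", "state": {"running": {}}}]}},
    {"metadata": {"name": "web-2"},
     "status": {"containerStatuses": [
         {"name": "web", "state": {"waiting": {"reason": "ImagePullBackOff"}}}]}},
    {"metadata": {"name": "job-1"},
     "status": {"containerStatuses": [
         {"name": "job", "state": {"waiting": {"reason": "CrashLoopBackOff"}}}]}},
]}

stuck = waiting_containers(SAMPLE_PODS)
print(stuck)
print(image_pull_failures(stuck))
```

Pods reported by `image_pull_failures` are good candidates for a closer look with `kubectl describe pod`, whose events usually state whether the image name, tag, or registry permissions are at fault.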

When troubleshooting, consider using the Azure CLI and kubectl alongside the Azure portal. The Azure CLI allows for automation and scripting of troubleshooting steps, and its `az aks` command group provides a wide range of functions for managing and monitoring your AKS cluster. Combining these tools with Azure Monitor data helps identify the root cause of an issue quickly. Regular checks of your cluster’s health and configuration, backed by a solid logging and monitoring strategy, help prevent problems and support high availability. Understanding Kubernetes concepts such as namespaces, deployments, and services is also essential for effective AKS troubleshooting.