Exploring the Core Principles of Responsible AI

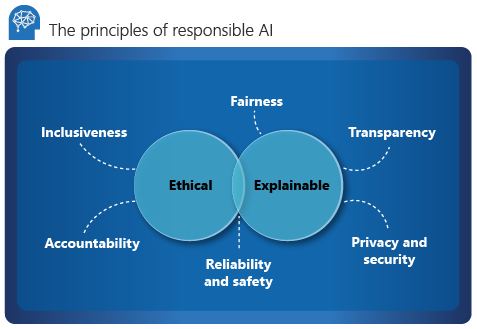

Microsoft builds its Azure AI services on six core responsible AI principles: Fairness, Reliability & Safety, Privacy & Security, Inclusiveness, Transparency, and Accountability. These principles guide the creation of AI systems that are not only powerful but also ethical and beneficial to society. They are interconnected: each one supports the others, and applying them together is what makes an AI solution trustworthy. This guide explores each principle in turn.

Fairness ensures AI systems treat all individuals equitably and avoid bias. Reliability & Safety focuses on building robust and dependable systems that minimize unintended harm. Privacy & Security prioritizes protecting sensitive data and user privacy. Inclusiveness aims to create AI systems that are accessible and beneficial to diverse populations. Transparency promotes understanding of how AI models work and where their limitations lie. Accountability establishes mechanisms for addressing issues and taking responsibility for AI outcomes. Integrated effectively, these six principles form a framework for ethical AI development and contribute to the broader conversation about the responsible use of artificial intelligence. The following sections discuss how to apply them in practice.

Microsoft’s dedication to these principles is reflected in its ongoing research and development in responsible AI. The company collaborates with researchers, policymakers, and other stakeholders to advance the field of ethical AI, with the aim of creating AI systems that empower individuals and organizations while mitigating potential risks. Responsible AI development is not just a technical challenge but a societal imperative: adhering to these principles fosters trust, and trust is a precondition for the long-term success and wider adoption of beneficial AI solutions.

Building Fair and Equitable AI Systems

The fairness principle addresses a core risk: AI systems, while powerful, can inherit and amplify biases present in their training data. Because that data often reflects existing societal biases, the result can be unfair or discriminatory outcomes. For example, a facial recognition system trained primarily on images of light-skinned individuals may perform poorly on images of people with darker skin tones. This highlights the need for careful attention to data representation and bias mitigation throughout the AI lifecycle.

To build fair AI systems, developers must proactively address bias at every stage. During data collection, diverse and representative datasets are crucial, which means actively seeking out data that reflects the full spectrum of human diversity. Techniques like data augmentation can help balance datasets and reduce the impact of skewed representation. During model training, techniques like adversarial debiasing can help mitigate biases learned by the model. After deployment, regular audits and evaluations are needed to identify and address biases that emerge later. Microsoft supports this work through ongoing research and development of tools and techniques that promote fairness in AI.
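The kind of audit described above can be sketched in a few lines. The example below is a minimal illustration, not a Microsoft or Azure API: the function names, the record layout `(group, true label, predicted label)`, and the toy data are all assumptions made for this sketch. It computes per-group accuracy and selection rate, then the demographic parity difference (the largest gap in selection rate between groups). Maintained libraries such as Fairlearn offer more complete versions of these metrics.

```python
from collections import defaultdict


def per_group_rates(records):
    """Return {group: (accuracy, selection_rate)} for (group, y_true, y_pred) records."""
    totals = defaultdict(lambda: {"n": 0, "correct": 0, "selected": 0})
    for group, y_true, y_pred in records:
        stats = totals[group]
        stats["n"] += 1
        stats["correct"] += int(y_true == y_pred)
        stats["selected"] += int(y_pred == 1)
    return {
        group: (s["correct"] / s["n"], s["selected"] / s["n"])
        for group, s in totals.items()
    }


def demographic_parity_difference(records):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    selection_rates = [sel for _, sel in per_group_rates(records).values()]
    return max(selection_rates) - min(selection_rates)


# Hypothetical toy data: (group, true label, predicted label)
audit_records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 0),
]

rates = per_group_rates(audit_records)
gap = demographic_parity_difference(audit_records)
for group, (accuracy, selection_rate) in sorted(rates.items()):
    print(f"group {group}: accuracy={accuracy:.2f}, selection rate={selection_rate:.2f}")
print(f"demographic parity difference: {gap:.2f}")
```

In this toy data both groups have the same accuracy but very different selection rates, which is why an audit should look at more than one metric per group.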

Explainable AI (XAI) techniques are also vital for understanding and addressing bias. XAI methods shed light on the decision-making processes of AI models, making biases easier to identify and correct. Transparency is another crucial component of fairness: openly sharing information about data sources, model development processes, and limitations builds trust and allows for scrutiny. Taken together, these practices help ensure that AI systems are not only accurate but also equitable, serving all members of society fairly. Continuous monitoring and iterative improvement remain essential, because new data and societal changes can introduce new fairness challenges over time.

Ensuring Reliability and Safety in Your AI Solutions

The Reliability & Safety principle calls for robust and dependable AI systems, which requires rigorous testing and validation throughout the entire lifecycle. This includes careful data analysis to identify and mitigate biases that could lead to inaccurate or unreliable outputs. Developers should combine several testing methodologies, including unit testing, integration testing, and system testing, to assess the model’s performance under different conditions. Adversarial testing, which deliberately feeds the model challenging inputs to probe its resilience, is also crucial for ensuring safety and reliability.
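As a rough illustration of probing a model with challenging inputs, the sketch below measures how often a prediction stays the same when small random noise is added to the input. This is a simple perturbation check, a weaker cousin of true adversarial testing, which searches for worst-case inputs. `toy_model`, its weights, the noise level, and the test inputs are all hypothetical stand-ins chosen for this example.

```python
import random


def toy_model(features):
    """Hypothetical stand-in for a trained model: a fixed linear threshold rule."""
    score = 0.8 * features[0] - 0.5 * features[1]
    return 1 if score > 0.0 else 0


def prediction_stability(model, inputs, noise=0.05, trials=50, seed=0):
    """Fraction of inputs whose prediction never changes under small random noise."""
    rng = random.Random(seed)  # fixed seed keeps the check reproducible
    stable = 0
    for x in inputs:
        baseline = model(x)
        unchanged = all(
            model([v + rng.uniform(-noise, noise) for v in x]) == baseline
            for _ in range(trials)
        )
        stable += int(unchanged)
    return stable / len(inputs)


# The last input sits very close to the decision boundary on purpose.
test_inputs = [[1.0, 0.2], [0.1, 1.0], [2.0, 0.5], [0.3, 0.47]]
stability = prediction_stability(toy_model, test_inputs)
print(f"stable under perturbation: {stability:.0%} of inputs")
```

A low stability score does not prove the model is unsafe, but it points to inputs near a decision boundary that deserve closer review.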

Continuous monitoring of deployed AI models is equally vital. Real-world data often differs significantly from training data, so monitoring lets developers identify and address unexpected behavior or performance degradation promptly. Regular updates and retraining on new data are essential parts of this ongoing process, and comprehensive logging helps teams diagnose and resolve issues quickly. Together, these practices keep an AI system reliable and safe over time, not just at launch.
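One common way to quantify the gap between training data and real-world data is the Population Stability Index (PSI), sketched below for a single numeric feature. This is one possible drift metric among several, not a method the text prescribes; the function name, the equal-width binning, and the synthetic samples are assumptions for illustration. An often-quoted rule of thumb treats values above roughly 0.25 as a significant shift, but a sensible threshold depends on the application.

```python
import math


def population_stability_index(expected, actual, bins=5):
    """PSI between a training-time sample and a production sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic samples: production data identical to training, then shifted upward.
training = [i / 10 for i in range(100)]
production_same = list(training)
production_shifted = [v + 4.0 for v in training]

psi_same = population_stability_index(training, production_same)
psi_shifted = population_stability_index(training, production_shifted)
print(f"PSI (no drift): {psi_same:.3f}")
print(f"PSI (shifted):  {psi_shifted:.3f}")
```

In a monitoring job, a check like this would run on a schedule per feature and raise an alert or trigger a retraining review when the index crosses the chosen threshold.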

Understanding the limitations of AI models is critical to deploying them safely. No model is perfect, and acknowledging inherent uncertainty is part of responsible AI development. Transparency about model limitations, along with clear guidelines for appropriate use cases, is essential. This includes giving users information about the model’s accuracy, its potential biases, and any known limitations. That openness fosters trust and supports responsible use, and it helps ensure that AI systems are dependable, safe, and aligned with ethical standards and user expectations.

Protecting Privacy and Security with Azure AI

Azure AI services prioritize data privacy and security, building on the broader Azure security infrastructure. Data encryption, both in transit and at rest, safeguards sensitive information. Azure’s access control mechanisms ensure that only authorized personnel can access specific data and functionality, and this granular control reduces the risk of unauthorized access and data breaches. Microsoft also commits to compliance with international and regional data privacy regulations, including GDPR and CCPA, and Azure AI is designed to provide a secure, compliant environment for AI development and deployment.

Furthermore, Azure AI offers a range of features designed to enhance privacy. Differential privacy techniques, for example, allow data to be analyzed while limiting the risk of identifying individuals, which is particularly valuable in scenarios involving sensitive personal information. Comprehensive documentation and clear guidelines help developers understand the privacy implications of their choices and build systems that meet stringent privacy standards. Regular security audits and penetration testing further strengthen the platform’s security posture by proactively identifying and mitigating vulnerabilities. The platform also integrates with other Azure security services, providing a layered approach to data protection in which security is a fundamental part of the design rather than an afterthought.
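To make the idea of differential privacy more concrete, the sketch below applies the classic Laplace mechanism to a counting query: noise scaled to `1 / epsilon` is added to the true count, so that any single person’s presence changes the output distribution only slightly. This is a teaching example and not an Azure feature; `private_count`, the `ages` list, and the chosen `epsilon` are hypothetical. Naive floating-point implementations like this one are not safe for production use, where a vetted differential privacy library should be used instead.

```python
import random


def laplace_noise(scale, rng):
    """Zero-mean Laplace sample: the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng):
    """Epsilon-differentially-private count.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the Laplace noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: ages of individuals in a sensitive dataset.
ages = [23, 35, 41, 29, 62, 57, 33, 48, 71, 19]
rng = random.Random(42)
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")
```

A smaller `epsilon` gives stronger privacy but noisier answers; choosing it is a policy decision as much as a technical one.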

The security and privacy measures within Azure AI are not merely technical implementations; they reflect a commitment to responsible AI development. Microsoft continues to invest in research and development to enhance these features. By following best practices and maintaining a strong security posture, Azure AI lets developers build innovative AI solutions while upholding high standards of data protection, which is a prerequisite for trustworthy and ethical AI systems.

How to Design Inclusive AI Solutions for Diverse Populations

Designing inclusive AI solutions requires a proactive approach to ensuring fairness and equal access for all users. Developers must carefully consider the potential for bias in datasets and algorithms. Representational diversity within datasets is critical, which means actively seeking and incorporating data that reflects the demographics and experiences of diverse populations. Failing to do so can produce AI systems that perpetuate or even exacerbate existing inequalities. For example, a facial recognition system trained primarily on images of one ethnic group may perform poorly on others. This highlights the importance of thorough dataset auditing and of mitigation strategies for any biases the audit identifies.
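A dataset audit of this kind can start very simply: compare each group’s share of the dataset with its share of a reference population. The sketch below is one minimal way to do that; the group labels, the reference shares, and the 5-percentage-point tolerance are invented for illustration and would need to come from the actual deployment context.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return {group: sample_share - reference_share} for each reference group."""
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


def underrepresented(sample_groups, reference_shares, tolerance=0.05):
    """Groups whose dataset share falls short of the reference by more than tolerance."""
    gaps = representation_gaps(sample_groups, reference_shares)
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical dataset labels and reference population shares.
dataset_groups = ["group_a"] * 67 + ["group_b"] * 28 + ["group_c"] * 5
reference = {"group_a": 0.50, "group_b": 0.30, "group_c": 0.20}

flagged = underrepresented(dataset_groups, reference)
print("underrepresented groups:", flagged)
```

A flagged group is a prompt to collect more data or to evaluate the model separately on that group, not an automatic verdict of bias.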

Beyond data, the design of the AI system itself must be inclusive. Consider accessibility features for users with disabilities, such as alternative input methods for users who cannot use a mouse or keyboard, and interfaces that are easy to navigate for people with visual or auditory impairments. The user experience should also be culturally sensitive and appropriate for different user groups, which means accounting for language support, cultural nuances, and local regulations. This proactive approach reduces the risk of algorithmic bias, improves the overall user experience, and helps developers create AI that benefits everyone.

Implementing these strategies requires collaboration across disciplines. Input from ethicists, social scientists, and accessibility specialists can significantly improve the inclusiveness of AI systems. Regular user testing with diverse groups provides valuable feedback and helps identify potential issues. The development process should be transparent and explainable, enabling audits and reviews that check for biases. Continuous monitoring and iterative improvement are essential to ensure that the system remains inclusive over time. By addressing inclusivity from the outset, developers can create AI solutions that benefit everyone and contribute to a more equitable society.

Implementing Transparency in Your AI Development Workflow

Transparency means being open about how AI models function, including their limitations and potential biases, in order to build trust and foster responsible use. It extends across the entire development lifecycle, from data acquisition and preprocessing to model training, validation, and deployment. Clear documentation of each stage, including the choices made and the rationale behind them, is crucial: this record allows for scrutiny and reproducibility and helps surface potential issues early. Furthermore, giving users easily accessible information about the AI system’s capabilities, limitations, and potential biases empowers them to make informed decisions and interpret results responsibly.

Several practical strategies enhance transparency. Model cards, concise summaries of a model’s capabilities, limitations, and intended use cases, are a valuable tool: they give users a readily understandable overview of the system’s behavior so they can assess its suitability for specific tasks. Similarly, detailed technical documentation covering the algorithms used, training data characteristics, and performance metrics supports accountability and enables others to replicate or improve upon the model. Making this documentation publicly available, where appropriate, strengthens the commitment to transparency. Explainable AI (XAI) techniques help users understand the reasoning behind the model’s predictions, which lets them identify potential biases or errors. By embracing these methods, developers ensure that their AI systems are not just functional but also understandable and accountable.
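A model card can be kept as structured data alongside the model so that it is versioned and reviewed like code. The sketch below shows one possible shape using a Python dataclass; the field names are an assumption for this example and not an official model card schema, and the values are placeholders.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal, hypothetical model card structure for illustration."""
    name: str
    version: str
    intended_use: str
    out_of_scope_uses: list = field(default_factory=list)
    training_data: str = ""
    metrics: dict = field(default_factory=dict)
    known_limitations: list = field(default_factory=list)

    def to_json(self):
        """Serialize the card so it can be published next to the model."""
        return json.dumps(asdict(self), indent=2)

    def missing_sections(self):
        """Names of fields left empty, so incomplete cards can be caught in review."""
        return [name for name, value in asdict(self).items() if not value]


# Placeholder values; a real card would describe a real model and evaluation.
card = ModelCard(
    name="toy-classifier",
    version="0.1",
    intended_use="Illustration only",
    training_data="Synthetic examples",
    metrics={"accuracy": 0.9},
)

print(card.to_json())
print("sections still to fill in:", card.missing_sections())
```

A check on `missing_sections()` could run in a release pipeline so that a model cannot ship with an empty limitations section.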

Beyond documentation, proactive communication about potential biases and limitations is key. This includes explaining the model’s strengths and weaknesses clearly, acknowledging biases present in the training data, and outlining the steps taken to mitigate them. Organizations should also be transparent about the impact of their AI systems, considering both intended and unintended consequences. Regular audits and evaluations of the model’s performance and fairness, coupled with public reporting of the findings, demonstrate a genuine commitment to transparency. By addressing concerns proactively and communicating openly, developers build public trust and confidence in how they apply responsible AI principles in their projects.

Establishing Accountability for AI Outcomes

The Accountability principle requires clear lines of responsibility for an AI system’s actions and outcomes. When AI systems make decisions that affect individuals or society, mechanisms must exist to investigate and address potential harms. This includes being able to understand how a model arrived at a specific decision, so that biases or errors can be identified. Proactive measures, such as rigorous testing and validation, are crucial to minimize negative consequences. However, even with robust testing, unexpected situations can arise, so a clear process for handling them is also needed.

Establishing accountability involves several key steps. First, comprehensive documentation of the AI system’s development lifecycle, including data sourcing, model training, and deployment, is essential. This allows for traceability and helps pinpoint the source of any problems. Second, clear roles and responsibilities must be defined within the development team and the organization, with individuals or teams designated as accountable for specific aspects of the system’s operation. This clear assignment makes it easier to address issues and to determine who must act when something goes wrong. Third, mechanisms for feedback and reporting should be established so that users and stakeholders can raise concerns about the system’s behavior. This feedback loop is crucial for continuous improvement and responsible development.

Effective accountability in AI development often involves robust audit trails. These trails track the actions taken by the AI system and by the individuals interacting with it, which enables post-hoc analysis of decisions and thorough investigation of problematic outcomes. Moreover, ethical review boards or similar oversight committees can assess the ethical implications of AI systems before deployment and provide ongoing monitoring afterward. A robust accountability framework is fundamental to building trust and confidence in AI systems and their impact on society. By proactively addressing potential issues and establishing clear accountability mechanisms, organizations can foster responsible and ethical use of Azure AI.
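One way to make an audit trail trustworthy is to chain its entries with hashes, so that editing or deleting an earlier record is detectable. The sketch below shows the idea in plain Python; the `AuditTrail` class, its field names, and the sample events are hypothetical, and a production system would typically also rely on append-only storage and access controls rather than an in-memory list.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log in which each entry stores the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself, in a stable key order.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def record(self, actor, action, details):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("model:v1", "prediction", {"input_id": "case-001", "output": "approve"})
trail.record("reviewer:alice", "override", {"input_id": "case-001", "output": "deny"})
print("trail intact:", trail.verify())
```

Recording both the system’s decision and the human override, as in the two sample entries, is what makes later investigation of a disputed outcome possible.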

Putting Azure AI Principles into Practice: A Case Study of Inclusive Healthcare

Imagine a healthcare provider that uses Azure AI to develop a diagnostic tool for a prevalent disease. The team prioritizes inclusiveness by training on diverse datasets that represent various demographics, so that the tool is accurate across different populations. This helps prevent biases that might otherwise lead to misdiagnosis or unequal access to care. The team also prioritizes transparency by documenting the model’s development, highlighting potential limitations, and making this information easily accessible to healthcare professionals. Data privacy and security are maintained through robust encryption and access controls that comply with all relevant regulations. The model undergoes rigorous testing and validation to ensure reliability and safety, and regular monitoring is in place to identify and address emerging issues quickly. By applying the principles together, the healthcare provider builds trust and supports equitable access to high-quality care.

Another key aspect of this case study is accountability. Clear lines of responsibility are established for every stage of the model’s lifecycle, from data collection to deployment and ongoing monitoring, so that any issues arising from use of the tool can be addressed promptly and effectively. The team also builds in feedback mechanisms that let users report potential biases or inaccuracies, which drives continuous improvement and refinement of the model. This iterative approach reflects the ongoing nature of responsible AI development and helps keep the model reliable, fair, and beneficial for all users.

This hypothetical example demonstrates how the Azure AI principles apply in a high-impact domain. By addressing ethical considerations throughout the development process, developers can build AI systems that are not only effective but also fair, reliable, and beneficial for society. Successful implementation hinges on a commitment to transparency, accountability, and continuous improvement, and the payoff is greater trust and more equitable outcomes in real-world applications.