Understanding the AWS S3 Command-Line Interface

The AWS Command Line Interface (CLI) provides a powerful and flexible way to manage Amazon S3 buckets and objects. Compared with the web console, it is far better suited to automation: uploading large numbers of files, scripting complex workflows, and integrating S3 operations into other tools are all much easier from the command line. This guide focuses primarily on `aws s3 cp`, a simple, commonly used command for copying files to and from S3. It also covers `aws s3api put-object`, a lower-level alternative that maps directly onto the S3 PutObject API and gives finer control over an individual upload, including its metadata. Note that `put-object` belongs to the `s3api` command set; there is no `aws s3 put-object` command.

The `aws s3 cp` command offers a user-friendly approach to file uploads, mirroring the standard Unix `cp` command, and its intuitive syntax makes it ideal for everyday tasks. Managing S3 data efficiently, however, also requires understanding what happens beyond the simple file transfer: how object metadata is set and how errors are reported and handled. Knowing how `aws s3api put-object` sets object metadata gives you more control over your S3 content. This matters when you work with specific file types, where the `Content-Type` determines how browsers and other clients treat an object, or when you integrate with other AWS services.

While `aws s3 cp` provides a convenient way to upload files, `aws s3api put-object` exposes every parameter of a single PutObject request. This allows precise management of metadata, such as setting system headers like `Cache-Control` or an explicit `Content-Type`, and attaching user-defined metadata. That level of control is essential for applications that require specific configurations or that integrate with systems relying on detailed metadata. `put-object` can also send a checksum (for example `--content-md5`), which lets S3 reject an upload that arrived corrupted. Mastering both commands lets you use the full power of the AWS CLI and choose the right tool for each scenario.

Setting Up Your AWS Credentials

To use the AWS CLI, including `aws s3 cp` and `aws s3api put-object`, it must be configured properly. First, install the AWS CLI by following the instructions in the official AWS documentation; the process varies slightly by operating system (macOS, Linux, or Windows). Once it is installed, run `aws configure`, which prompts for your Access Key ID, Secret Access Key, default region name, and output format. These credentials give the CLI permission to act on your AWS account, so treat them with the utmost care: they can grant significant access to your resources. Never hardcode them in scripts; use environment variables (such as `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`), named profiles, or a dedicated secrets manager instead. Incorrectly configured credentials cause every AWS CLI command to fail.

`aws configure` stores what you type without validating it, so configuration mistakes usually surface the first time you run a command. Common causes are a mistyped Access Key ID or Secret Access Key, a typo in the region name (for example `us-east1` instead of `us-east-1`), and network connectivity problems. Review your input carefully, and if problems persist, check your internet connection. The AWS documentation includes troubleshooting guides that cover most common configuration errors. Completing this configuration is the critical first step before you can manage S3 buckets and objects from the CLI.
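A quick way to confirm that the configuration works is the read-only `aws sts get-caller-identity` command, which fails if the CLI cannot find valid credentials. The Python sketch below wraps it with `subprocess`. It assumes the `aws` executable is on your `PATH`; the helper names are hypothetical.

```python
import json
import subprocess


def caller_identity_cmd(profile=None):
    """Build argv for `aws sts get-caller-identity`, a read-only identity check."""
    cmd = ["aws", "sts", "get-caller-identity", "--output", "json"]
    if profile:
        # Use a named profile created with `aws configure --profile <name>`.
        cmd += ["--profile", profile]
    return cmd


def parse_identity(stdout):
    """Extract the account ID and caller ARN from the command's JSON output."""
    data = json.loads(stdout)
    return data["Account"], data["Arn"]


def check_credentials(profile=None):
    """Run the check; raises subprocess.CalledProcessError if credentials are missing or invalid."""
    result = subprocess.run(caller_identity_cmd(profile),
                            capture_output=True, text=True, check=True)
    return parse_identity(result.stdout)
```

Calling `check_credentials()` returns the account ID and ARN the CLI is acting as, which also confirms you are using the account you expect.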

Security best practices are paramount. Never share your Access Key ID and Secret Access Key, and rotate your access keys regularly to limit the damage if one leaks. Use AWS Identity and Access Management (IAM) to grant each user or role only the permissions it needs, for example allowing `s3:PutObject` only on the buckets it uploads to. This prevents unnecessary access and improves your security posture. Responsible credential management keeps your AWS resources secure and compliant while you use the CLI to manage S3.
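As an illustration of granular IAM permissions, the sketch below builds a policy document that allows only `s3:PutObject` under one bucket prefix. The bucket name, prefix, and `Sid` are hypothetical; adapt them to your account.

```python
import json


def upload_only_policy(bucket, prefix=""):
    """Return an IAM policy (as a dict) allowing uploads under bucket/prefix and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowUploadsOnly",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                # Object ARNs look like arn:aws:s3:::bucket/key; '*' matches any key under the prefix.
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


# Serialize the policy so it can be saved to a file such as policy.json.
policy_json = json.dumps(upload_only_policy("my-s3-bucket", "data/"), indent=2)
```

Saved as `policy.json`, the document could be attached with `aws iam put-user-policy --user-name <user> --policy-name <name> --policy-document file://policy.json`, or through the IAM console.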

Uploading a Single File with aws s3 cp

The `aws s3 cp` command is a straightforward way to upload a single file to Amazon S3, and its simple syntax makes it ideal for quick uploads. The basic structure is `aws s3 cp <source_file> <destination_s3_uri>`, where `source_file` is the local file path and `destination_s3_uri` is the S3 location, including the bucket name and object key. For example, to upload `my_file.txt` from the current directory to a bucket named `my-s3-bucket` with the object key `my_file.txt`, run `aws s3 cp my_file.txt s3://my-s3-bucket/my_file.txt`. This uses the default settings. Optional parameters give more control; for instance, `--acl public-read` grants public read access, provided the bucket permits ACLs and its Block Public Access settings allow it (newly created buckets disable ACLs by default). For simple uploads like this, `aws s3 cp` is usually sufficient; `aws s3api put-object` offers more granular control.
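When the same upload runs from a script, it helps to build the command as an argument list. The sketch below is a hypothetical wrapper (the function names are illustrative) that assembles `aws s3 cp <source_file> s3://<bucket>/<key>` with an optional ACL and runs it without a shell.

```python
import subprocess


def s3_cp_cmd(source_file, bucket, key, acl=None):
    """Build argv for `aws s3 cp <source_file> s3://<bucket>/<key> [--acl <acl>]`."""
    cmd = ["aws", "s3", "cp", source_file, f"s3://{bucket}/{key}"]
    if acl:
        # e.g. "public-read"; rejected if the bucket has ACLs disabled.
        cmd += ["--acl", acl]
    return cmd


def upload(source_file, bucket, key, acl=None):
    # A list argv (no shell=True) means no shell quoting is needed.
    subprocess.run(s3_cp_cmd(source_file, bucket, key, acl), check=True)
```

For example, `upload("my_file.txt", "my-s3-bucket", "my_file.txt")` runs the same command as the example above and raises `CalledProcessError` if the CLI reports a failure.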

To store a file under a different object key, change the destination. For example, to upload `my_file.txt` to the same bucket as `uploaded_file.txt`, run `aws s3 cp my_file.txt s3://my-s3-bucket/uploaded_file.txt`; the destination now uses `uploaded_file.txt` as the object key. You can also upload into a "folder" within the bucket. S3 has a flat namespace, so a folder is simply a key prefix: `aws s3 cp my_file.txt s3://my-s3-bucket/data/my_file.txt` stores the object under the key `data/my_file.txt`. This flexibility makes it easy to organize objects within a bucket. An incorrect bucket name or file path causes the upload to fail, so double-check both before running the command. `aws s3api put-object` provides similar functionality with a different command structure.
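Because a "folder" is just a key prefix, a destination URI can be composed from a bucket, an optional prefix, and a file name. The helper below is a hypothetical sketch of that composition; it uses `posixpath` for the key because S3 keys always use `/`, regardless of the local operating system.

```python
import os
import posixpath


def destination_uri(bucket, local_path, prefix="", new_name=None):
    """Build s3://bucket/[prefix/]name, keeping the local file name unless new_name is given."""
    name = new_name or os.path.basename(local_path)
    # S3 keys always use '/', so join with posixpath rather than os.path.
    key = posixpath.join(prefix, name) if prefix else name
    return f"s3://{bucket}/{key}"
```

`destination_uri("my-s3-bucket", "my_file.txt", prefix="data")` yields `s3://my-s3-bucket/data/my_file.txt`, and passing `new_name="uploaded_file.txt"` reproduces the renamed upload.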

Error handling is crucial. Missing permissions produce `AccessDenied` errors, and network problems cause uploads to fail. Verifying the AWS CLI configuration and the network connection resolves many issues, and the error message the command prints usually pinpoints the cause. A successful upload prints an `upload:` line naming the source and destination, and you can confirm the object is present with `aws s3 ls s3://my-s3-bucket/` or in the S3 console. For complex metadata management or fine-grained control over the upload, `aws s3api put-object` is the more powerful option: `aws s3 cp` suffices for many single-file uploads, but understanding `put-object` expands what you can do.
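To verify an upload programmatically, `aws s3api head-object` returns an object's metadata without downloading it and exits with an error if the object does not exist. The sketch below (hypothetical helper names; assumes `aws` is on `PATH`) compares the stored size with the local file size.

```python
import json
import subprocess


def head_object_cmd(bucket, key):
    # head-object fetches metadata only; it fails (e.g. with a 404) if the key is missing.
    return ["aws", "s3api", "head-object", "--bucket", bucket, "--key", key, "--output", "json"]


def summarize_head(stdout):
    """Pick out size, content type, and user metadata from head-object's JSON output."""
    data = json.loads(stdout)
    return {
        "size": data["ContentLength"],
        "content_type": data.get("ContentType"),
        "metadata": data.get("Metadata", {}),
    }


def verify_upload(bucket, key, expected_size):
    result = subprocess.run(head_object_cmd(bucket, key),
                            capture_output=True, text=True, check=True)
    return summarize_head(result.stdout)["size"] == expected_size
```

A typical call is `verify_upload("my-s3-bucket", "my_file.txt", os.path.getsize("my_file.txt"))`.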

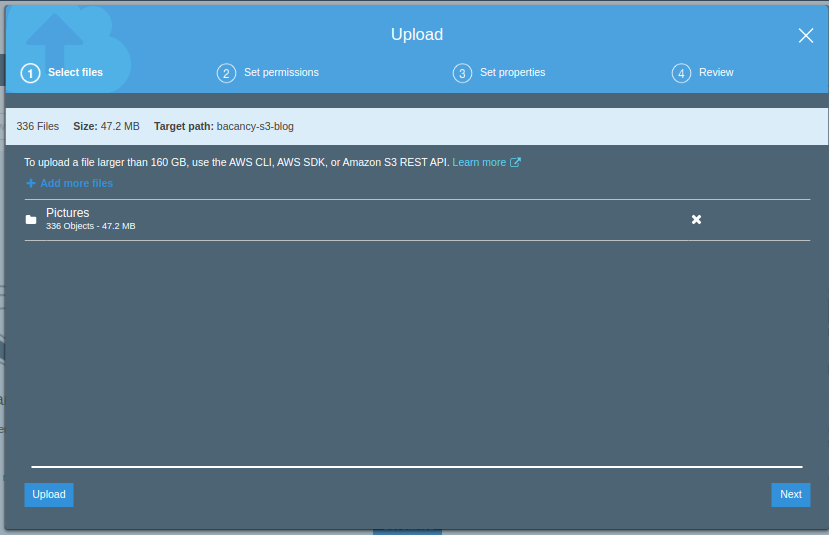

Uploading Multiple Files with aws s3 cp

`aws s3 cp` can also upload many files in one command. It accepts exactly one source and one destination, so a shell wildcard such as `aws s3 cp *.txt s3://my-bucket/my-folder/` does not work: the shell expands `*.txt` into several arguments, which the command rejects. Instead, combine `--recursive` with the `--exclude` and `--include` filters: `aws s3 cp . s3://my-bucket/my-folder/ --recursive --exclude "*" --include "*.txt"` uploads every `.txt` file in the current directory and its subdirectories to the specified S3 folder. Later filters take precedence over earlier ones, so excluding everything first and then including `*.txt` selects only text files. Replace `s3://my-bucket/my-folder/` with your actual bucket and prefix. This is far simpler than uploading files one by one and streamlines workflows involving large numbers of files. File names containing spaces or special characters are handled correctly as long as they are quoted in the shell. For multi-file uploads, `aws s3 cp` is usually the right tool; `aws s3api put-object` uploads only one object per call.
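The filtered recursive copy can be generated from a script as well. This hypothetical helper builds the argument list; because it is passed to `subprocess.run` as a list, no shell is involved and the `*` pattern reaches the CLI unexpanded.

```python
def upload_matching_cmd(local_dir, s3_prefix_uri, pattern, dryrun=False):
    """Build `aws s3 cp <dir> <uri> --recursive --exclude "*" --include <pattern>`.

    Later filters take precedence, so excluding everything and then including
    `pattern` uploads only matching files (subdirectories included).
    """
    cmd = ["aws", "s3", "cp", local_dir, s3_prefix_uri, "--recursive",
           "--exclude", "*", "--include", pattern]
    if dryrun:
        # Print the planned uploads without transferring anything.
        cmd.append("--dryrun")
    return cmd
```

Run it with `subprocess.run(upload_matching_cmd(".", "s3://my-bucket/my-folder/", "*.txt"), check=True)`; passing `dryrun=True` first is a cheap way to check the selection.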

Uploading an entire directory is also straightforward: `aws s3 cp ./mydirectory/ s3://my-bucket/mydirectory/ --recursive` uploads `mydirectory` together with all of its subdirectories. The `--recursive` flag is essential here; without it, `aws s3 cp` treats the source as a single file and fails with an error rather than uploading the directory's contents. Pay close attention to the source and destination paths: existing objects with the same keys are overwritten without warning, and a wrong prefix scatters files across the bucket. Running the command first with `--dryrun` shows what would be uploaded without transferring anything. `aws s3api put-object` would need one call per file, so for directory trees `aws s3 cp --recursive` is the simpler and usually more efficient choice.
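To see in advance which keys a recursive upload will create, and which existing objects it would overwrite, you can mirror the mapping locally: each file's path relative to the source directory, with `/` separators, is appended to the destination prefix. The sketch below assumes that mapping; the function names are hypothetical.

```python
from pathlib import Path


def planned_keys(local_dir, prefix):
    """Keys a recursive `aws s3 cp <local_dir> s3://<bucket>/<prefix>` should create."""
    root = Path(local_dir)
    base = prefix.rstrip("/")
    keys = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # Relative path with '/' separators, regardless of the local OS.
            rel = path.relative_to(root).as_posix()
            keys.append(f"{base}/{rel}" if base else rel)
    return keys


def would_overwrite(planned, existing_keys):
    """Keys that already exist in the bucket and would be silently replaced."""
    return sorted(set(planned) & set(existing_keys))
```

The existing keys could come from `aws s3 ls s3://my-bucket/mydirectory/ --recursive`; any non-empty result from `would_overwrite` is worth reviewing before the real upload.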

Files with unusual characters in their names need some extra care. `aws s3 cp` handles spaces and most special characters itself, and the CLI encodes object keys for the underlying HTTP request, so paths should not be URL-encoded by hand; doing so would produce keys containing literal sequences such as `%20`. The usual source of trouble is the shell: quote paths that contain spaces, `*`, `$`, or other shell metacharacters. For very complex cases, preprocess the files with a Python or Bash script before uploading, for example by renaming them to use only letters, digits, and `!-_.*'()`, which makes the process more robust. Always check that each upload succeeded to avoid silent data loss.
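From Python, the simplest way to avoid quoting problems is to pass the command as a list, so each path arrives as one argument exactly as written. For logging or copy-pasting, `shlex.join` renders the same command with shell-safe quoting. The helper names below are hypothetical.

```python
import shlex
import subprocess


def cp_argv(local_path, s3_uri):
    # Each path is one list element, so spaces, '*', or '$' reach the CLI unchanged.
    return ["aws", "s3", "cp", local_path, s3_uri]


def shell_command(local_path, s3_uri):
    """Render the command as a shell string, quoting arguments that need it."""
    return shlex.join(cp_argv(local_path, s3_uri))


def upload(local_path, s3_uri):
    subprocess.run(cp_argv(local_path, s3_uri), check=True)
```

For example, `shell_command("my report (v2).txt", "s3://my-bucket/reports/my report (v2).txt")` returns `aws s3 cp 'my report (v2).txt' 's3://my-bucket/reports/my report (v2).txt'`, with no URL encoding involved.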

How to Upload Files to S3 Using aws s3api put-object

The `aws s3api put-object` command offers a granular approach to uploading files to Amazon S3. Unlike `aws s3 cp`, which handles transfers at a higher level (including automatic multipart uploads for large files), `put-object` sends a single PutObject request and gives fine-grained control over metadata and other object attributes. This is useful when you need precise control, such as setting custom metadata or managing the content type explicitly. The basic syntax is `aws s3api put-object --bucket <bucket> --key <key> --body <file>`. For example, `aws s3api put-object --bucket my-bucket --key my-file.txt --body ./my-file.txt` uploads `my-file.txt` to the `my-bucket` bucket under the key `my-file.txt`. On success, the command prints a JSON response that includes the object's `ETag`. Because it is a single request, `put-object` can upload objects of at most 5 GB.
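The sketch below wraps the basic `aws s3api put-object --bucket <bucket> --key <key> --body <file>` call (note the `s3api` command set) and returns the `ETag` from its JSON response. It assumes `aws` is on `PATH`; the function names are hypothetical.

```python
import json
import subprocess


def put_object_cmd(bucket, key, body):
    """argv for `aws s3api put-object --bucket <bucket> --key <key> --body <file>`."""
    return ["aws", "s3api", "put-object",
            "--bucket", bucket, "--key", key, "--body", body,
            "--output", "json"]


def put_object(bucket, key, body):
    result = subprocess.run(put_object_cmd(bucket, key, body),
                            capture_output=True, text=True, check=True)
    # The response is JSON; the ETag value includes literal double quotes.
    return json.loads(result.stdout)["ETag"]
```

`put_object("my-bucket", "my-file.txt", "./my-file.txt")` mirrors the example above and returns the new object's ETag, which a script can log or compare later.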

To add user-defined metadata, use the `--metadata` option, which takes key-value pairs, for example `--metadata '{"photographer":"John Doe"}'`. S3 stores these entries as `x-amz-meta-*` headers. The content type is not user metadata: it is a system header with its own option, `--content-type`. For example, `aws s3api put-object --bucket my-bucket --key image.jpg --body ./image.jpg --content-type image/jpeg --metadata '{"photographer":"John Doe"}'` uploads `image.jpg`, sets its content type, and attaches a custom `photographer` entry. This precise control over metadata makes `put-object` well suited to a variety of scenarios.
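In a script, building the `--metadata` value with `json.dumps` avoids hand-quoting JSON. This hypothetical builder keeps the content type and the user metadata in their separate options.

```python
import json


def put_object_with_metadata_cmd(bucket, key, body, content_type=None, metadata=None):
    cmd = ["aws", "s3api", "put-object", "--bucket", bucket, "--key", key, "--body", body]
    if content_type:
        # System header: sets Content-Type, not an x-amz-meta-* entry.
        cmd += ["--content-type", content_type]
    if metadata:
        # User-defined metadata as a JSON map; each entry becomes x-amz-meta-<key>.
        cmd += ["--metadata", json.dumps(metadata)]
    return cmd
```

Calling it with `("my-bucket", "image.jpg", "./image.jpg", "image/jpeg", {"photographer": "John Doe"})` produces the same command as the example above, ready for `subprocess.run(..., check=True)`.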

Comparing the two commands, `aws s3 cp` excels at simple, quick uploads, while `aws s3api put-object` provides complete control over a single request's metadata and object attributes. Which to choose depends on the task: for simple file transfers, `aws s3 cp` suffices; when detailed metadata management is crucial, or when you need request parameters that `cp` does not expose, use `aws s3api put-object`. Mastering both lets you adapt to a wider range of S3 management tasks. Always follow AWS security best practices when using either command.

Working with Object Metadata

Metadata provides crucial information about objects stored in S3 and aids their organization, management, and retrieval. When uploading files, you can attach descriptive data such as the content type or custom attributes, which supports automation: scripts and other tools can read these attributes to decide how to handle each object. `aws s3 cp` supports common options such as `--content-type` and `--metadata`, but applies the same values to every file a command transfers. For more granular control, `aws s3api put-object` lets you specify each metadata parameter precisely for an individual object.

Setting the content type is a common use case. Specifying it accurately (for example `image/jpeg` or `text/html`) ensures that browsers and other applications render and handle the object correctly. `aws s3api put-object` sets it with the `--content-type` parameter. Unlike `aws s3 cp`, which guesses the type from the file extension, `put-object` does not infer it, so objects uploaded without `--content-type` typically end up as `binary/octet-stream`. Custom metadata, stored as key-value pairs, adds further descriptive context. It does not affect how the object is rendered but helps with filtering and categorization; for instance, you might tag files with project names, timestamps, or other identifiers. Both `aws s3 cp` and `aws s3api put-object` accept the `--metadata` parameter. Proper metadata management makes S3 objects easier to find and simplifies complex workflows.
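When scripting `put-object`, a reasonable default is to derive `--content-type` from the file extension, much as `aws s3 cp` does. The sketch below uses Python's standard `mimetypes` module; falling back to `application/octet-stream` is an assumption, not an S3 requirement.

```python
import mimetypes


def content_type_for(path, default="application/octet-stream"):
    """Guess a Content-Type from the file extension, with a generic binary fallback."""
    guessed, _encoding = mimetypes.guess_type(path)
    return guessed or default
```

The result can be passed straight to `--content-type`, for example `["--content-type", content_type_for("photo.jpg")]`, which yields `image/jpeg`.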

Consider uploading images with metadata. With `aws s3api put-object`, you can set the content type to `image/jpeg` and add custom metadata such as `Project: Summer2024` and `Photographer: JaneDoe` for each image individually, which `aws s3 cp` cannot do per file in a single recursive command. Well-structured metadata improves the organization of your S3 storage, makes files easier to find and use, and streamlines workflows that process them.
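For a batch of photos, a script can generate one `put-object` command per JPEG, all sharing the same metadata. The sketch below only builds the commands; the prefix, file names, and lowercase metadata keys are hypothetical choices.

```python
import json
from pathlib import Path


def image_upload_cmds(bucket, prefix, filenames, metadata):
    """Build one put-object command per JPEG file, all sharing the same metadata."""
    meta_json = json.dumps(metadata)
    base = prefix.rstrip("/")
    cmds = []
    for name in filenames:
        path = Path(name)
        if path.suffix.lower() not in (".jpg", ".jpeg"):
            continue  # skip anything that is not a JPEG
        cmds.append([
            "aws", "s3api", "put-object",
            "--bucket", bucket,
            "--key", f"{base}/{path.name}",
            "--body", str(path),
            "--content-type", "image/jpeg",
            "--metadata", meta_json,
        ])
    return cmds
```

Each returned list can be run with `subprocess.run(cmd, check=True)`; generating the commands first also makes it easy to review them before anything is uploaded.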

Handling Errors and Troubleshooting

Several errors can occur when uploading files to S3 with the AWS CLI. Incorrect bucket names are a frequent cause: double-check the spelling and confirm that the bucket exists in your account, for example by listing your buckets with `aws s3 ls`. Network connectivity problems can also prevent uploads; check your internet connection and the AWS Health Dashboard for service issues, and retry after a short wait if the disruption was temporary. Permission errors are common as well: confirm that your credentials allow uploading objects to the target bucket. The IAM (Identity and Access Management) console lets you check and modify user policies.
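The bucket-name check can be scripted by parsing `aws s3 ls`, which prints one `date time bucket-name` line per bucket. This hypothetical sketch assumes that output format. It only sees buckets in your own account; for a bucket owned elsewhere, `aws s3api head-bucket --bucket <name>` is the more direct test.

```python
import subprocess


def bucket_names(ls_output):
    """Parse `aws s3 ls` output lines of the form 'YYYY-MM-DD HH:MM:SS bucket-name'."""
    names = []
    for line in ls_output.splitlines():
        parts = line.split(maxsplit=2)
        if len(parts) == 3:
            names.append(parts[2])
    return names


def bucket_exists(bucket):
    out = subprocess.run(["aws", "s3", "ls"], capture_output=True, text=True, check=True).stdout
    return bucket in bucket_names(out)
```

Calling `bucket_exists("my-s3-bucket")` before a batch upload catches a misspelled bucket name early, before any transfer starts.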

`AccessDenied` errors usually mean the caller lacks a permission. IAM controls access, so make sure your user or role has the `s3:PutObject` permission for the target bucket (and `kms:GenerateDataKey` if the bucket uses SSE-KMS encryption). Incorrect file paths also cause failures, so verify that the local file exists. Relative paths are resolved against the current working directory, which can differ when a script runs from cron or a CI job, so absolute paths are generally safer in scripts. Large files may hit timeouts. `aws s3 cp` automatically switches to multipart uploads for large files, splitting them into parts that are uploaded and retried independently, which increases reliability; you can tune this with settings such as `multipart_threshold` and `multipart_chunksize`. `aws s3api put-object`, by contrast, always sends a single request and is limited to 5 GB; multipart uploads through `s3api` require the separate `create-multipart-upload`, `upload-part`, and `complete-multipart-upload` commands. Error messages provide valuable clues and often pinpoint the root cause, and the AWS documentation explains the S3 error codes in detail. For uncommon or persistent issues, consult AWS support resources.
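The CLI chooses part sizes for `aws s3 cp` automatically, but the arithmetic is worth knowing when tuning `multipart_chunksize` (for example with `aws configure set default.s3.multipart_chunksize 64MB`). S3 allows at most 10,000 parts, each at least 5 MiB except the last, so very large files need larger chunks. The sketch below illustrates that constraint and is not the CLI's internal algorithm; the 8 MiB default mirrors the CLI's default chunk size.

```python
MIB = 1024 * 1024
MIN_PART_SIZE = 5 * MIB   # S3 minimum size for every part except the last
MAX_PARTS = 10_000        # S3 maximum number of parts per multipart upload


def ceil_div(a, b):
    """Integer ceiling division, avoiding floating-point rounding on large sizes."""
    return -(-a // b)


def part_plan(file_size, chunk_size=8 * MIB):
    """Return (chunk_size, part_count) for file_size bytes, enlarging the
    chunk size when needed to stay within the 10,000-part limit."""
    chunk = max(chunk_size, MIN_PART_SIZE)
    if ceil_div(file_size, chunk) > MAX_PARTS:
        chunk = ceil_div(file_size, MAX_PARTS)
    return chunk, max(1, ceil_div(file_size, chunk))
```

A 100 MiB file splits into 13 parts of 8 MiB, while a 100 GiB file would need 12,800 such parts, so the chunk size grows to keep the count at 10,000.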

With `aws s3api put-object`, watch for problems with object keys. Keys may contain any UTF-8 characters, but characters other than letters, digits, and `!-_.*'()` (plus `/` as a prefix delimiter) may need special handling in URLs and other tools, so prefer those safe characters. If you encounter metadata errors, check the format: `--metadata` expects key-value pairs, and user-defined metadata is limited to 2 KB per object. An incorrect content type can break file display or application integration, so set it explicitly. If issues persist, reproduce them in a separate test bucket with basic permissions, which avoids impacting production data. Because `put-object` uploads exactly one object per call, it is convenient for isolating a problem by changing one variable at a time. Always follow AWS best practices for security and error handling.
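Both checks can run before the upload. The hypothetical preflight below flags keys outside the safe character set and user-defined metadata over 2 KB. As described in the S3 documentation, the metadata size is measured as the total UTF-8 byte length of all keys and values.

```python
import re

SAFE_KEY = re.compile(r"[A-Za-z0-9!\-_.*'()/]+")
METADATA_LIMIT = 2 * 1024  # bytes of user-defined metadata per PUT request


def key_is_safe(key):
    """True if the key uses only S3's safe characters (plus '/' for prefixes)."""
    return SAFE_KEY.fullmatch(key) is not None


def metadata_size(metadata):
    """Total UTF-8 byte length of all metadata keys and values."""
    return sum(len(k.encode("utf-8")) + len(v.encode("utf-8")) for k, v in metadata.items())


def preflight(key, metadata):
    """Return a list of human-readable problems; an empty list means no issues found."""
    problems = []
    if not key_is_safe(key):
        problems.append(f"key {key!r} contains characters outside the safe set")
    if metadata_size(metadata) > METADATA_LIMIT:
        problems.append("user-defined metadata exceeds 2 KB")
    return problems
```

Running `preflight` on each key and metadata map before calling `put-object` turns a confusing upload failure into a clear message.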

Advanced Techniques and Best Practices for AWS S3 File Uploads

Automating S3 interactions offers significant efficiency gains. Scripting languages such as Python and Bash integrate readily with the AWS CLI, so you can call `aws s3 cp` or `aws s3api put-object` from a script to upload files as part of a larger workflow, such as scheduled backups, automated deployments, or CI/CD pipelines. Manage credentials securely: never hardcode them in scripts; use environment variables, IAM roles, or a dedicated secrets management service instead. `aws s3api put-object` is particularly useful in automation because it returns a JSON response, including the object's `ETag`, that a script can parse and check.
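A minimal automation loop might upload a list of files with `put-object` and report which ones failed, leaving credentials to the environment or the CLI's configured profile. In this hypothetical sketch, `run` is injectable so the loop can be exercised without AWS access.

```python
import os
import subprocess


def upload_files(bucket, files, prefix="", run=subprocess.run):
    """Upload each local file with `aws s3api put-object`; return the paths that failed.

    No credentials appear here: the CLI reads them from the environment or its
    configured profile. `run` defaults to subprocess.run but can be replaced in tests.
    """
    failed = []
    for path in files:
        key = f"{prefix}{os.path.basename(path)}"
        cmd = ["aws", "s3api", "put-object", "--bucket", bucket, "--key", key, "--body", path]
        result = run(cmd, capture_output=True, text=True)
        if result.returncode != 0:
            failed.append(path)
    return failed
```

A scheduled job could call `upload_files("my-bucket", paths, prefix="backup/")` and exit non-zero when the returned list is not empty, so the scheduler or CI system flags the failure.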

S3 versioning is a robust safeguard against accidental deletion or overwrites. With versioning enabled, S3 keeps previous versions of each object, so you can restore an earlier version if necessary. Combine versioning with lifecycle policies to control storage costs: lifecycle rules take automated actions based on object age, optionally filtered by prefix, tags, or object size. For example, a rule can move older, less frequently accessed data to a lower-cost storage class or expire noncurrent versions after a set number of days. Review these policies regularly to keep costs down without sacrificing access to data you still need. Because `aws s3api put-object` can set `--tagging` and `--storage-class` at upload time, scripts can tag objects so that tag-based lifecycle rules apply to them.
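The sketch below builds the commands for enabling versioning and applying a lifecycle rule, plus the rule itself as JSON. The rule ID, the `logs/` prefix, and the 90- and 30-day values are illustrative assumptions; `GLACIER` is the API name of the Glacier Flexible Retrieval storage class.

```python
import json


def versioning_cmd(bucket):
    return ["aws", "s3api", "put-bucket-versioning", "--bucket", bucket,
            "--versioning-configuration", "Status=Enabled"]


def lifecycle_config(prefix, archive_after_days=90, noncurrent_expire_days=30):
    """One rule: archive current objects under `prefix` after N days,
    and delete noncurrent versions M days after they are superseded."""
    return {
        "Rules": [{
            "ID": "archive-and-trim-versions",
            "Filter": {"Prefix": prefix},
            "Status": "Enabled",
            "Transitions": [{"Days": archive_after_days, "StorageClass": "GLACIER"}],
            "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_expire_days},
        }]
    }


def lifecycle_cmd(bucket, config_path):
    # The CLI reads the JSON document from a local file via the file:// prefix.
    return ["aws", "s3api", "put-bucket-lifecycle-configuration", "--bucket", bucket,
            "--lifecycle-configuration", f"file://{config_path}"]
```

Writing `json.dumps(lifecycle_config("logs/"))` to `lifecycle.json` and then running `lifecycle_cmd("my-bucket", "lifecycle.json")` applies the rule; without versioning enabled, the noncurrent-version action simply has nothing to act on.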

Security and monitoring are paramount for any cloud storage solution. Regularly review access permissions so that only authorized users and applications can reach your buckets. Protect data in transit (the CLI uses HTTPS by default) and at rest: S3 encrypts new objects with SSE-S3 by default, and `put-object` can request SSE-KMS with `--server-side-encryption aws:kms`. Monitor S3 usage patterns to spot anomalies or security threats. AWS CloudTrail records S3 API activity for auditing and troubleshooting, although object-level operations such as `PutObject` are logged only if you enable CloudTrail data events. When scripting uploads with `aws s3api put-object`, build these practices in: request encryption, and run under IAM roles and policies that grant only the required permissions. Proactive monitoring and logging help prevent issues and maintain data integrity.
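To request KMS encryption explicitly from a script, add `--server-side-encryption aws:kms` to the `put-object` call, optionally with `--ssekms-key-id`. Without a key ID, S3 uses the AWS managed key (`aws/s3`). The key ARN in the usage note is a hypothetical placeholder.

```python
def encrypted_put_cmd(bucket, key, body, kms_key_id=None):
    """put-object that requests SSE-KMS; omitting kms_key_id uses the aws/s3 managed key."""
    cmd = ["aws", "s3api", "put-object", "--bucket", bucket, "--key", key, "--body", body,
           "--server-side-encryption", "aws:kms"]
    if kms_key_id:
        # A customer managed key, identified here by its key ID or ARN.
        cmd += ["--ssekms-key-id", kms_key_id]
    return cmd
```

For instance, `encrypted_put_cmd("my-bucket", "report.csv", "./report.csv", "arn:aws:kms:us-east-1:123456789012:key/EXAMPLE")` targets a specific key; the caller then also needs `kms:GenerateDataKey` on that key.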