Navigating Amazon S3 with Command Line Tools

Interacting with Amazon Simple Storage Service (S3) through the AWS Command Line Interface (CLI) is an efficient way to manage cloud storage. Because every operation is a text command, the CLI lends itself to automation, scripting, and complex multi-step operations. The `aws s3` command set covers common tasks, from uploading and downloading files to synchronizing entire directories, while the lower-level `aws s3api` commands handle access permissions and other bucket configuration. This approach benefits users who need to integrate storage management into their development workflows, offering more control and precision than the AWS Management Console. While the console offers a visual interface, the CLI's strength lies in automating repetitive tasks so that they run the same way every time through scripts and automation tools. This introduction explores the core functionality of the `aws s3` command, setting the stage for more complex configurations and uses.

The versatility of the AWS CLI extends beyond simple file transfers; it lets users manage nearly every aspect of their S3 storage. For instance, system administrators can use scripts to back up data to S3 daily or to ensure that files are stored with specific security and storage configurations. Developers find the `aws s3` command useful for deploying applications, syncing web content, and handling large datasets, integrating storage management directly into deployment and data pipelines. This is particularly valuable in DevOps environments where speed and automation are paramount. Lifecycle policies can also be set up from the command line, through `aws s3api put-bucket-lifecycle-configuration`, so that data is managed efficiently over its lifetime. These capabilities show why the CLI is often a better fit than the graphical console for routine work: manual operations become scripted, repeatable, and easier to review.

How To Efficiently Manage Your S3 Buckets with the AWS CLI

Effective management of Amazon S3 buckets is central to any cloud-based data storage strategy, and the AWS CLI provides the tools to do it efficiently. The core operations are creating, listing, and deleting buckets, each with its own `aws s3` subcommand. To create a new bucket, use `aws s3 mb s3://your-bucket-name --region your-region`. The bucket name must be globally unique, and the region should match your latency and compliance needs. For instance, `aws s3 mb s3://my-unique-bucket-name --region us-west-2` creates a bucket named 'my-unique-bucket-name' in the us-west-2 region. Bucket names must be 3 to 63 characters long, may contain only lowercase letters, numbers, hyphens, and periods, and must begin and end with a letter or number, so uppercase letters and underscores are not allowed. Because the same command works in any shell or script, bucket creation stays consistent across environments.
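
The creation step can be wrapped in a small shell function, as in the sketch below. The function name `create_bucket` and the bucket name in the usage comment are hypothetical placeholders, and the sketch assumes the CLI is already configured with credentials.

```shell
# create_bucket is a hypothetical helper, not part of the AWS CLI.
# Usage: create_bucket <bucket-name> <region>
create_bucket() {
  bucket="$1"
  region="$2"
  # "mb" (make bucket) fails if the name is already taken.
  aws s3 mb "s3://${bucket}" --region "${region}" &&
    # head-bucket exits 0 only if the bucket exists and is accessible.
    aws s3api head-bucket --bucket "${bucket}"
}

# Example call (placeholder values):
#   create_bucket my-unique-bucket-name us-west-2
```

Chaining the two commands with `&&` means the check runs only when creation succeeds, so the function's exit status reflects the whole step.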

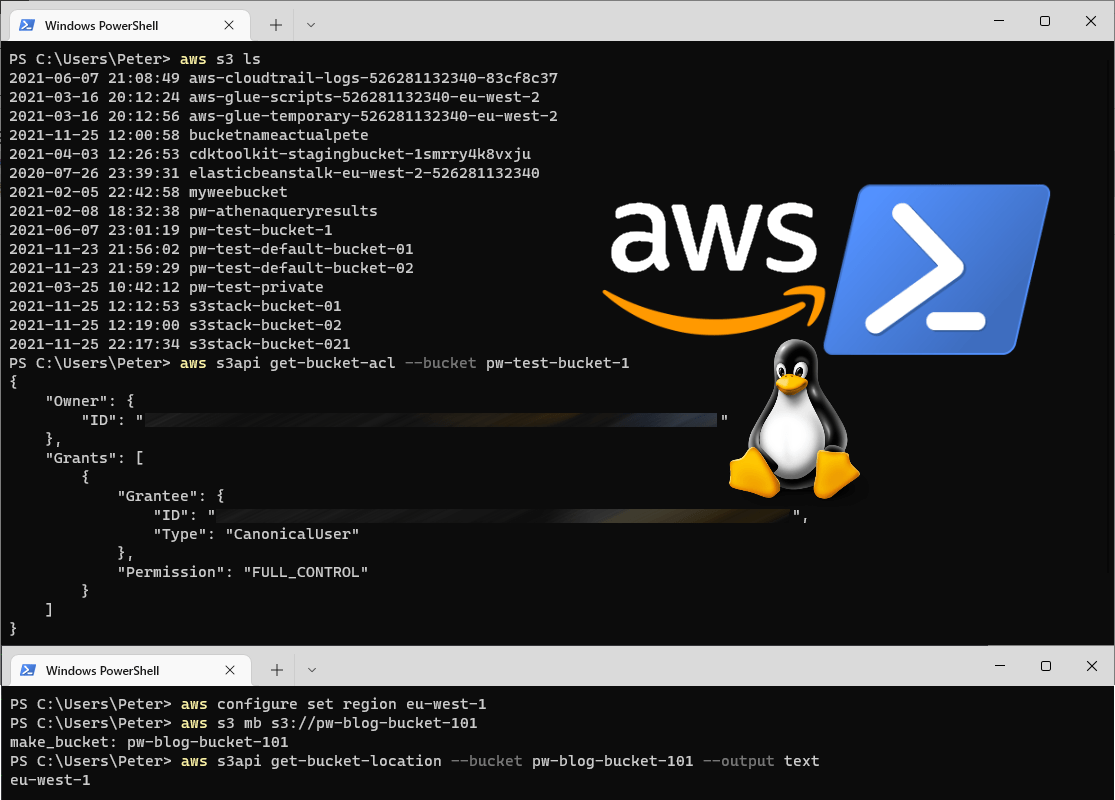

To list all existing S3 buckets, use `aws s3 ls`. The output lists every bucket owned by the AWS account that the CLI is currently authenticated as, which makes it a quick way to verify the storage resources linked to an account. To delete a bucket, use `aws s3 rb s3://your-bucket-name`. The bucket must be completely empty first; running `rb` against a bucket that still has contents results in an error. You can empty a bucket with `aws s3 rm s3://your-bucket-name --recursive`, or do both steps at once with `aws s3 rb s3://your-bucket-name --force`, which deletes every object and then removes the bucket. Use either form with extreme caution, as the data is removed irrevocably. On a bucket with versioning enabled, these commands do not remove older object versions or delete markers, so the bucket is not actually empty until those are deleted as well. Understanding these fundamental bucket operations allows for a smooth and controlled storage infrastructure.
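
As a rough sketch of the delete workflow, the two steps can be made explicit in a function. `delete_bucket` is a hypothetical name; note that the `--force` option belongs to `aws s3 rb`, not to `aws s3 rm`.

```shell
# delete_bucket is a hypothetical helper. It is destructive:
# every object in the bucket is deleted before the bucket itself.
# Usage: delete_bucket <bucket-name>
delete_bucket() {
  bucket="$1"
  # Step 1: remove all current objects.
  aws s3 rm "s3://${bucket}" --recursive &&
    # Step 2: remove the now-empty bucket.
    aws s3 rb "s3://${bucket}"
}

# One-step equivalent provided by the CLI:
#   aws s3 rb s3://your-bucket-name --force
```

On a versioned bucket the second step is expected to fail until old versions and delete markers have been removed separately.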

Uploading and Downloading Objects: Essential S3 CLI Commands

Transferring files to and from Amazon S3 is a fundamental task, and the primary command for it is `aws s3 cp`, which handles both uploads and downloads. To upload a single file, use `aws s3 cp local_file.txt s3://your-bucket-name/`. This copies 'local_file.txt' from your local system to the root of the specified bucket. To upload under a specific prefix (folder), add a path: `aws s3 cp local_file.txt s3://your-bucket-name/folder/`. To download, reverse the source and destination: `aws s3 cp s3://your-bucket-name/file_on_s3.txt local_file.txt` downloads 'file_on_s3.txt' from your bucket to a file named 'local_file.txt' on your local machine. The same command handles entire directories when you add the `--recursive` option, which copies a local directory to S3 with the same structure, e.g., `aws s3 cp my_local_directory s3://your-bucket-name/ --recursive`, and vice versa when downloading. Note that `aws s3 cp` does not accept shell-style wildcards in its path arguments: in `aws s3 cp *.txt s3://your-bucket-name/folder/` the shell expands `*.txt` before the CLI runs, and the command fails whenever more than one file matches. To upload only the files ending in '.txt', combine `--recursive` with filters instead: `aws s3 cp . s3://your-bucket-name/folder/ --recursive --exclude "*" --include "*.txt"` (this also picks up '.txt' files in subdirectories). These options provide efficiency and flexibility for managing your data.
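
Because `aws s3 cp` takes exactly one source and one destination, pattern-based uploads are usually written with `--exclude` and `--include` filters rather than a shell wildcard. A minimal sketch, with the hypothetical helper name `upload_txt_files`:

```shell
# upload_txt_files is a hypothetical helper.
# Usage: upload_txt_files <local-dir> <s3-destination>
upload_txt_files() {
  src_dir="$1"
  dest="$2"   # e.g. s3://your-bucket-name/folder/
  # Exclude everything first, then re-include *.txt; later filters win.
  # The patterns are quoted so the shell passes them through unexpanded.
  aws s3 cp "${src_dir}" "${dest}" --recursive --exclude "*" --include "*.txt"
}

# Example call (placeholder values):
#   upload_txt_files . s3://your-bucket-name/folder/
```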

Beyond basic transfers, `aws s3 cp` offers options to control storage characteristics and encryption. During uploads, you can specify the storage class with the `--storage-class` parameter, choosing between options such as STANDARD, REDUCED_REDUNDANCY, STANDARD_IA, ONEZONE_IA, and GLACIER to balance cost against access frequency. For instance, `aws s3 cp my_file.txt s3://your-bucket-name/ --storage-class REDUCED_REDUNDANCY` uploads 'my_file.txt' with the 'REDUCED_REDUNDANCY' storage class (a legacy class that AWS no longer recommends; STANDARD_IA or ONEZONE_IA are the usual lower-cost choices). Encryption can be applied during upload with the `--sse` flag, either `aws s3 cp my_file.txt s3://your-bucket-name/ --sse` or `aws s3 cp my_file.txt s3://your-bucket-name/ --sse aws:kms --sse-kms-key-id

Syncing Data with S3: Keeping Your Local Files and Cloud Storage Aligned

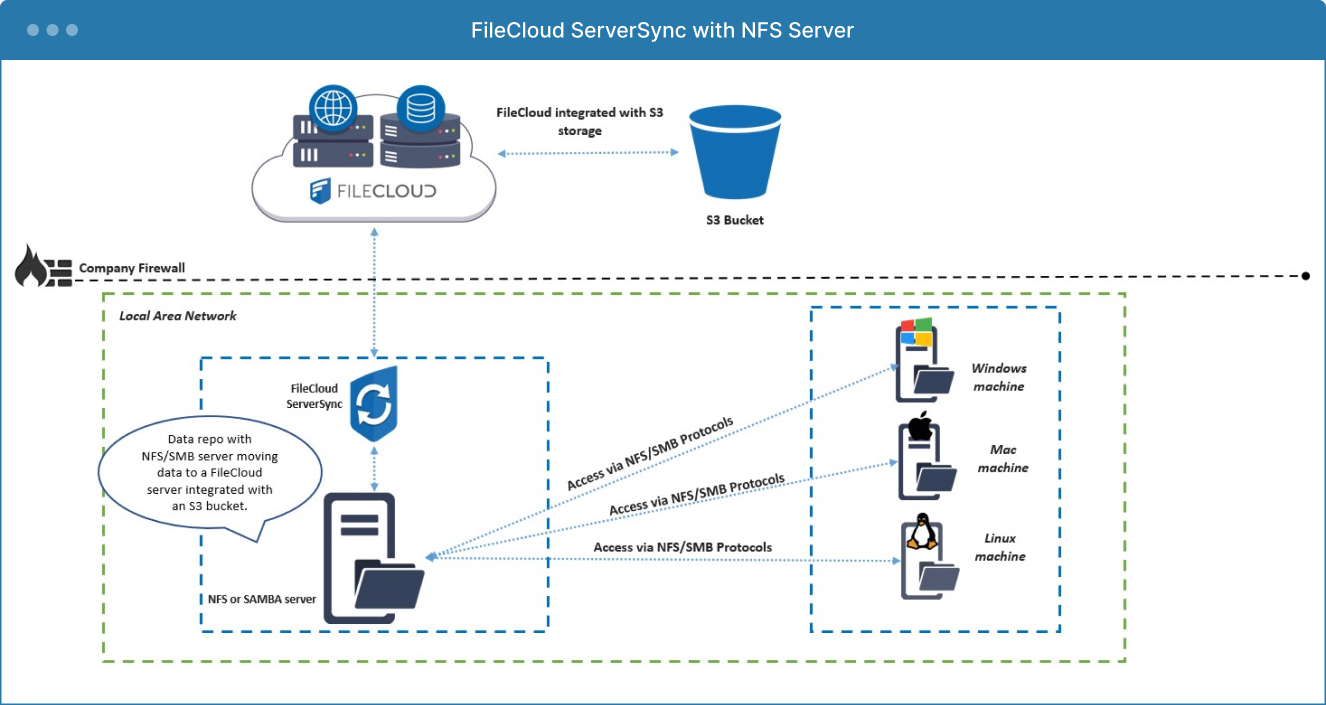

The `aws s3 sync` command synchronizes a local directory with an Amazon S3 bucket (or the reverse). Unlike basic upload and download operations, `sync` compares the source and destination and transfers only new or modified files. This is invaluable for maintaining up-to-date backups or mirroring local folders to cloud storage. To decide what to copy, `sync` compares file sizes and last-modified timestamps, which saves bandwidth and transfer time. For instance, if a file is modified in your local directory, `aws s3 sync` recognizes the change and updates the corresponding object in the bucket. Deletions are handled differently: by default, a file deleted locally is left in place in the bucket, and it is removed on the next sync only if you pass the `--delete` flag, which makes the destination a true reflection of the source.

The flexibility of `aws s3 sync` extends beyond simple backups. It can maintain a complete mirror of your website's assets in an S3 bucket, ready to serve static content, so administrators can quickly propagate changes from a local development environment to the live hosting platform. You can also include or exclude files based on patterns, which gives fine-grained control over what is synchronized. This control, combined with change detection, makes `aws s3 sync` a cornerstone of many development and deployment pipelines. The command is not limited to local-to-S3 transfers; it can also sync from S3 to a local directory and between two S3 locations, which provides a simple way to copy data from bucket to bucket.

Using `aws s3 sync` effectively requires understanding its options. The `--delete` flag removes files from the destination that no longer exist in the source, so the target precisely mirrors the source; this is useful for clean mirrors, but it also propagates accidental deletions, so use it deliberately. The `--exclude` and `--include` parameters selectively omit or include files based on wildcard patterns. Filters are applied in the order given, and a later filter takes precedence over an earlier one, so `--exclude "*" --include "*.jpg"` syncs only '.jpg' files. The `--dryrun` flag prints the operations that would be performed without executing them, which is a safe way to check a command first. In short, `aws s3 sync` is a key tool in any cloud data management strategy.
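
The sync options discussed in this section can be combined as in the following sketch. The function name `mirror_site` and the excluded patterns (`*.tmp`, `.git/*`) are illustrative assumptions; any extra arguments, such as the CLI's `--dryrun` flag, are passed through unchanged.

```shell
# mirror_site is a hypothetical helper that mirrors a local directory
# to the root of a bucket.
# Usage: mirror_site <local-dir> <bucket-name> [extra sync options...]
mirror_site() {
  src_dir="$1"
  bucket="$2"
  shift 2
  # --delete removes objects from the bucket that are gone locally.
  # The --exclude patterns are examples only; adjust them to your project.
  aws s3 sync "${src_dir}" "s3://${bucket}/" \
    --delete --exclude "*.tmp" --exclude ".git/*" "$@"
}

# Preview first, then run for real (placeholder values):
#   mirror_site ./public your-bucket-name --dryrun
#   mirror_site ./public your-bucket-name
```

Running with `--dryrun` first is a cheap way to see which uploads and deletions `--delete` would cause before anything changes.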

Managing Object Metadata and Properties Through the CLI

The AWS CLI can manage object metadata and properties directly from the command line, which helps with storage optimization and data lifecycle management. Metadata, essentially data about data, includes characteristics such as storage class, tags, and content type. Most of these are viewed and modified with the lower-level `aws s3api` commands rather than `aws s3`. To inspect an object's metadata, `aws s3api head-object --bucket your-bucket-name --key your-object-key` retrieves the metadata for a particular object without downloading its content, which is useful for quick inspections and validations. To change the storage class of an object, for example from STANDARD to STANDARD_IA, copy the object onto itself: `aws s3api copy-object --bucket your-bucket-name --key your-object-key --copy-source your-bucket-name/your-object-key --storage-class STANDARD_IA`. Moving less frequently accessed objects to a cheaper tier this way reduces storage costs. Tagging an object with key-value pairs, using `aws s3api put-object-tagging --bucket your-bucket-name --key your-object-key --tagging 'TagSet=[{Key=Environment,Value=Production}]'`, lets lifecycle rules, access policies, and cost reports target it. Note that `put-object-tagging` replaces the object's entire tag set. The `aws s3api get-object-tagging` command retrieves the applied tags so you can verify the result.
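
Tagging and verification fit naturally into one step. The sketch below uses a hypothetical function name, `tag_and_verify`, and applies a single tag; since `put-object-tagging` replaces the whole tag set, any other tags on the object would be dropped.

```shell
# tag_and_verify is a hypothetical helper.
# Usage: tag_and_verify <bucket> <key> <tag-key> <tag-value>
tag_and_verify() {
  bucket="$1"
  key="$2"
  tag_key="$3"
  tag_value="$4"
  # Replace the object's tag set with one tag...
  aws s3api put-object-tagging --bucket "${bucket}" --key "${key}" \
    --tagging "TagSet=[{Key=${tag_key},Value=${tag_value}}]" &&
    # ...then read the tags back to confirm what S3 stored.
    aws s3api get-object-tagging --bucket "${bucket}" --key "${key}"
}

# Example call (placeholder values):
#   tag_and_verify your-bucket-name your-object-key Environment Production
```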

Retrieving object attributes after a change is essential for confirming that it took effect. For instance, after modifying the storage class or adding tags, run `aws s3api head-object` again to check that the storage class reflects the change (S3 omits the `StorageClass` field for objects in STANDARD), or use `aws s3api get-object-tagging` to check that the correct tags are applied. These checks are easy to include in scripts, where they improve monitoring and control of automated tasks. Object metadata also feeds into lifecycle policies, which can move objects to archival storage after a specific number of days or apply different rules based on prefix or tags. Configuring metadata correctly is therefore a critical part of managing S3 from the command line, since it is what makes automated data lifecycle management possible. The same `aws s3api` commands extend to changing encryption settings, content types, and more.

Changing other object properties follows the same copy-in-place pattern. For instance, to change an object's content type, use `aws s3api copy-object --bucket your-bucket-name --key your-object-key --copy-source your-bucket-name/your-object-key --metadata-directive REPLACE --content-type 'application/json'`. With `--metadata-directive REPLACE`, the copy keeps only the metadata supplied in the command, so restate any user-defined metadata you want to preserve. This gives users granular control over their S3 storage, and understanding these metadata capabilities is vital for keeping data well organized, efficient, and cost-effective. Afterwards, verify the result with `aws s3api head-object` to confirm the update and catch data management issues early.
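
The change-then-verify pattern for content types might look like the following sketch. `set_content_type` is a hypothetical name, and the sketch assumes the key contains no characters that would need URL-encoding in `--copy-source`.

```shell
# set_content_type is a hypothetical helper.
# Usage: set_content_type <bucket> <key> <content-type>
set_content_type() {
  bucket="$1"
  key="$2"
  ctype="$3"
  # Copy the object onto itself, replacing its metadata.
  # REPLACE discards existing user-defined metadata unless it is re-supplied.
  aws s3api copy-object --bucket "${bucket}" --key "${key}" \
    --copy-source "${bucket}/${key}" \
    --metadata-directive REPLACE --content-type "${ctype}" &&
    # Print the content type S3 now reports for the object.
    aws s3api head-object --bucket "${bucket}" --key "${key}" \
      --query ContentType --output text
}

# Example call (placeholder values):
#   set_content_type your-bucket-name your-object-key application/json
```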

Controlling Access to Your S3 Resources Using the CLI

Managing access to your Amazon S3 resources is crucial for security and data protection, and the AWS CLI provides tools to handle it directly. The CLI supports both broad and granular permission configurations. This section focuses on bucket policies and Access Control Lists (ACLs), both of which are managed through `aws s3api` commands. Bucket policies are JSON documents attached to a bucket that define access permissions, while ACLs can be applied to individual objects as well as buckets and offer a simpler, coarser set of permissions. With policies, you can grant or deny permissions based on criteria such as users, roles, or even IP addresses, so that only authorized entities can interact with your data. When crafting policies, always follow the principle of least privilege: grant each user or role only the minimum permissions it needs. Common scenarios include a read-only policy for certain users or write permissions limited to a specific role.

To set a bucket policy, use `aws s3api put-bucket-policy` with the `--bucket` name and a `--policy` document that describes the access rules. Similarly, `aws s3api get-bucket-policy` returns an existing policy so you can examine it. For instance, to grant a specific IAM user full access to a bucket, create a policy document with that user's ARN as the principal and the required actions, then apply it with `put-bucket-policy`. ACLs are managed with `aws s3api put-bucket-acl` for bucket-level access and `aws s3api put-object-acl` for object-level permissions. Both accept grant options such as `--grant-read`, `--grant-write`, and `--grant-full-control` for specific grantees. After applying any policy or ACL, test and verify it to ensure the intended access control is in effect.
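
As an illustration of the policy workflow, the sketch below writes a read-only policy for one principal to a file and applies it. The function names, the `Sid` values, and the example ARN are hypothetical; the policy is a least-privilege example, not a recommendation for any particular bucket.

```shell
# write_read_only_policy is a hypothetical helper that writes a
# read-only bucket policy for a single principal to a file.
# Usage: write_read_only_policy <bucket> <principal-arn> <out-file>
write_read_only_policy() {
  bucket="$1"
  principal_arn="$2"
  out_file="$3"
  cat > "${out_file}" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ListBucketForOnePrincipal",
      "Effect": "Allow",
      "Principal": { "AWS": "${principal_arn}" },
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::${bucket}"
    },
    {
      "Sid": "ReadObjectsForOnePrincipal",
      "Effect": "Allow",
      "Principal": { "AWS": "${principal_arn}" },
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}

# apply_policy is a hypothetical helper that attaches a policy file.
# Usage: apply_policy <bucket> <policy-file>
apply_policy() {
  aws s3api put-bucket-policy --bucket "$1" --policy "file://$2"
}

# Example (placeholder values):
#   write_read_only_policy your-bucket-name arn:aws:iam::123456789012:user/example policy.json
#   apply_policy your-bucket-name policy.json
#   aws s3api get-bucket-policy --bucket your-bucket-name --query Policy --output text
```

Keeping the policy in a file makes it easy to review and version before it is applied.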

Policies can also include conditions. For example, you might grant read access only to requests from a given source IP range using the `aws:SourceIp` condition key. When working with ACLs, be aware of how they interact with policies. ACLs are not the preferred method for granular access control, because policies offer more flexibility; new buckets have ACLs disabled by default, and AWS recommends keeping them disabled for most use cases. The `aws s3api` commands also let you audit your permissions, for example with `get-bucket-policy`, `get-bucket-acl`, and `get-public-access-block`, so you can confirm that the current configuration meets your security needs. This level of detail is critical for compliance and risk management. In essence, the AWS CLI allows meticulous and secure administration of your S3 environment, granting necessary access while preventing unnecessary exposure.
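
A conditional policy based on source IP could be generated as in this sketch. The function name `ip_restricted_policy`, the `Sid`, and the example range 203.0.113.0/24 (a documentation-only address block) are assumptions for illustration. Be aware that this statement allows anonymous reads from any caller inside the range.

```shell
# ip_restricted_policy is a hypothetical helper that prints a bucket
# policy allowing object reads only from one CIDR range.
# Usage: ip_restricted_policy <bucket> <cidr>
ip_restricted_policy() {
  bucket="$1"
  cidr="$2"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ReadFromTrustedRange",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::${bucket}/*",
      "Condition": { "IpAddress": { "aws:SourceIp": "${cidr}" } }
    }
  ]
}
EOF
}

# Apply and then audit (placeholder values):
#   aws s3api put-bucket-policy --bucket your-bucket-name \
#     --policy "$(ip_restricted_policy your-bucket-name 203.0.113.0/24)"
#   aws s3api get-bucket-policy --bucket your-bucket-name --query Policy --output text
```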

Advanced S3 CLI Techniques for Streamlined Workflows

The AWS CLI extends beyond basic operations with features that can significantly streamline workflows. One is versioning, which preserves multiple versions of an object within a bucket and is particularly useful for data recovery and tracking changes over time. To retrieve a specific version, use `aws s3api get-object --bucket your-bucket --key your-file.txt --version-id version_id_here your-file.txt`, where the final argument is the local output file; `aws s3api list-object-versions` shows the available version IDs. Another is pre-signed URLs, which grant temporary, time-limited access to an object without requiring the recipient to have AWS credentials. The command `aws s3 presign s3://your-bucket/your-file.txt --expires-in 3600` generates a URL valid for one hour (3600 seconds). Anyone who holds the URL can fetch the object until it expires, so share it accordingly. Finally, for filtering through numerous objects, the `--query` option applies a JMESPath expression to a command's output. For example, combined with `aws s3api list-objects-v2` it can select objects by key, size, last-modified time, or storage class. Tags and user-defined metadata are not part of the listing, so filtering on them requires a separate call per object, such as `get-object-tagging`.
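
The three techniques in this section can be sketched as small helpers. All three function names are hypothetical. In the JMESPath expression, the number is wrapped in backticks because JMESPath writes literal values that way.

```shell
# share_link: print a pre-signed URL (default lifetime 3600 seconds).
# Usage: share_link <bucket> <key> [seconds]
share_link() {
  aws s3 presign "s3://$1/$2" --expires-in "${3:-3600}"
}

# list_versions: list version IDs for keys starting with a prefix.
# Usage: list_versions <bucket> <key-prefix>
list_versions() {
  aws s3api list-object-versions --bucket "$1" --prefix "$2" \
    --query 'Versions[].[VersionId, LastModified, IsLatest]' --output text
}

# objects_larger_than: list keys whose size exceeds a byte threshold.
# Usage: objects_larger_than <bucket> <bytes>
objects_larger_than() {
  aws s3api list-objects-v2 --bucket "$1" \
    --query "Contents[?Size > \`$2\`].[Key, Size]" --output text
}

# Example calls (placeholder values):
#   share_link your-bucket your-file.txt 600
#   list_versions your-bucket your-file.txt
#   objects_larger_than your-bucket 1048576
```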

For more intricate operations, `aws s3api` gives low-level API access where more precise control is needed, including operations that the more user-friendly `aws s3` commands do not expose. One example is multipart upload: `aws s3api create-multipart-upload` starts an upload that splits a single large object into parts, which the high-level `aws s3 cp` and `aws s3 sync` commands otherwise do automatically for large files. Changes across many objects are a different feature, S3 Batch Operations, whose jobs are created with `aws s3control create-job`. For a migration or backup strategy, batch jobs or scripted `aws s3` commands can copy, move, or delete large numbers of objects across S3 locations, which improves efficiency when there are many resources to manage. Object expiry is handled by lifecycle policies, set with `aws s3api put-bucket-lifecycle-configuration`, which automatically remove objects or transition them to different storage classes to reduce cost. Understanding these features is vital for keeping data in S3 accessible, cost optimized, and secure.
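
A lifecycle policy is a JSON document applied with `aws s3api put-bucket-lifecycle-configuration`. The sketch below is only an example: the function names, the rule ID, the `logs/` prefix, and the 30-day and 365-day thresholds are all assumptions chosen for illustration.

```shell
# write_lifecycle_rules is a hypothetical helper that writes an example
# lifecycle configuration: objects under logs/ move to STANDARD_IA
# after 30 days and are deleted after 365 days.
# Usage: write_lifecycle_rules <out-file>
write_lifecycle_rules() {
  cat > "$1" <<'EOF'
{
  "Rules": [
    {
      "ID": "archive-then-expire-logs",
      "Status": "Enabled",
      "Filter": { "Prefix": "logs/" },
      "Transitions": [ { "Days": 30, "StorageClass": "STANDARD_IA" } ],
      "Expiration": { "Days": 365 }
    }
  ]
}
EOF
}

# apply_lifecycle is a hypothetical helper that attaches the rules.
# Note that this call replaces any existing lifecycle configuration.
# Usage: apply_lifecycle <bucket> <rules-file>
apply_lifecycle() {
  aws s3api put-bucket-lifecycle-configuration --bucket "$1" \
    --lifecycle-configuration "file://$2"
}

# Example (placeholder values):
#   write_lifecycle_rules lifecycle.json
#   apply_lifecycle your-bucket-name lifecycle.json
#   aws s3api get-bucket-lifecycle-configuration --bucket your-bucket-name
```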

Troubleshooting Common Issues When Using the S3 CLI

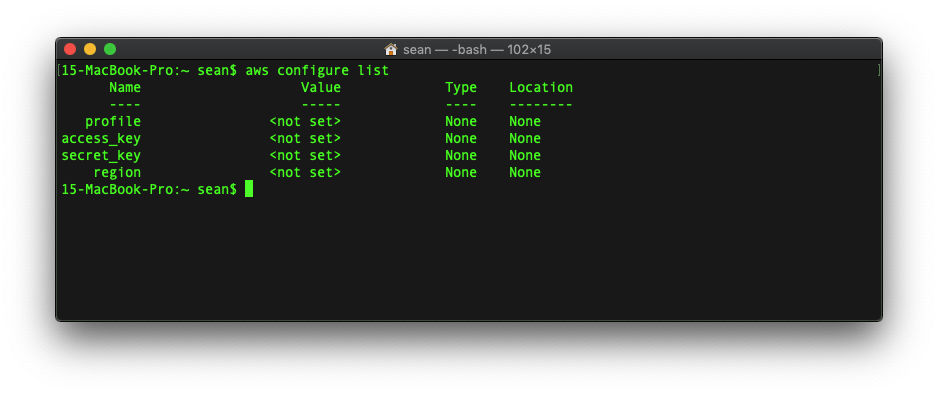

Encountering issues while using the AWS CLI with S3 is not uncommon, but systematic troubleshooting resolves most problems efficiently. One of the primary hurdles is authentication: users often face errors due to incorrect or missing AWS credentials. Ensure the CLI is configured with the correct access key ID, secret access key, and default region. Run `aws configure list` to review the active settings and `aws configure` to update them. Another common source of problems is permissions. When a command returns "Access Denied," the IAM user or role in use lacks the permissions needed for the requested operation on that S3 resource. In such cases, review the IAM policies attached to your user or role, along with the bucket policy, and make sure they grant the appropriate S3 permissions. Incorrect bucket names or object keys are also frequent culprits, so always verify names and paths. Typographical errors can lead to unexpected outcomes or failed executions, so examine the command syntax carefully, including spaces, hyphens, and arguments. Long options take two plain hyphens (`--recursive`); a dash character pasted from a web page or word processor, as in `–recursive`, is not recognized. Problems may also arise with network connectivity, especially when handling large files, so check that no firewall or network policy blocks communication between your machine and Amazon S3. Adding the `--debug` flag to any command produces verbose output that often gives detailed insight into the underlying issue.
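
A first-pass diagnostic might check configuration, identity, and bucket access in that order, stopping at the first failure. This is a sketch under the assumption that these three checks cover the common cases; `diagnose_s3_access` is a hypothetical name.

```shell
# diagnose_s3_access is a hypothetical helper that runs three checks
# and stops at the first one that fails.
# Usage: diagnose_s3_access <bucket-name>
diagnose_s3_access() {
  bucket="$1"
  # 1. Which profile, keys, and region is the CLI actually using?
  aws configure list || return 1
  # 2. Are the credentials valid, and which identity do they map to?
  aws sts get-caller-identity || return 1
  # 3. Does the bucket exist, and can this identity reach it?
  aws s3api head-bucket --bucket "${bucket}" || return 1
}

# Example call (placeholder value); append --debug to any single
# command above for verbose request and response details:
#   diagnose_s3_access your-bucket-name
```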

Another set of challenges stems from misunderstanding command options or flags. For instance, forgetting to specify a storage class or encryption setting when uploading objects means the defaults apply, which may not be what you intended. When facing unexpected outcomes, check the AWS CLI reference (or `aws s3 cp help`) for the correct usage of each option. Sync commands present their own challenges: the `--delete` flag removes destination files that are missing from the source, so a wrong source path can cause unexpected deletions, and running with `--dryrun` first shows what would change. When working with versioned buckets, it's important to understand how commands interact with object versions: a delete without a version ID only adds a delete marker, while a delete with a version ID permanently removes that version. To avoid common issues, double-check your commands before execution, and test with non-critical resources before running them on production data. When debugging, start with simpler commands and gradually increase the complexity. For a persistent problem, use AWS CloudTrail to audit API calls and identify the root cause; its event records often show the exact API call that failed and the associated error code.
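
Recent S3 API activity can be pulled from CloudTrail's event history with `aws cloudtrail lookup-events`, as in this sketch. The function name `recent_s3_api_events` is hypothetical. By default the event history records bucket-level management events; object-level calls appear only if a trail has been configured to log S3 data events.

```shell
# recent_s3_api_events is a hypothetical helper that lists recent
# S3 API calls recorded by CloudTrail in the current region.
# Usage: recent_s3_api_events [max-results]
recent_s3_api_events() {
  aws cloudtrail lookup-events \
    --lookup-attributes AttributeKey=EventSource,AttributeValue=s3.amazonaws.com \
    --max-results "${1:-20}" \
    --query 'Events[].[EventTime, EventName, Username]' --output text
}

# Example call:
#   recent_s3_api_events 5
```

Dropping the `--query` filter returns the full records, including the raw `CloudTrailEvent` JSON in which error details are stored.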