What is AWS Pipeline?

AWS Pipeline, officially named AWS CodePipeline, is a continuous delivery service from Amazon Web Services (AWS) that automates the build, test, and deployment of applications. It moves each code change through a defined release process, so developers can focus on writing code rather than on manual release steps. As a fully managed service, it requires no servers to provision or maintain, which makes it a practical choice for teams of any size.

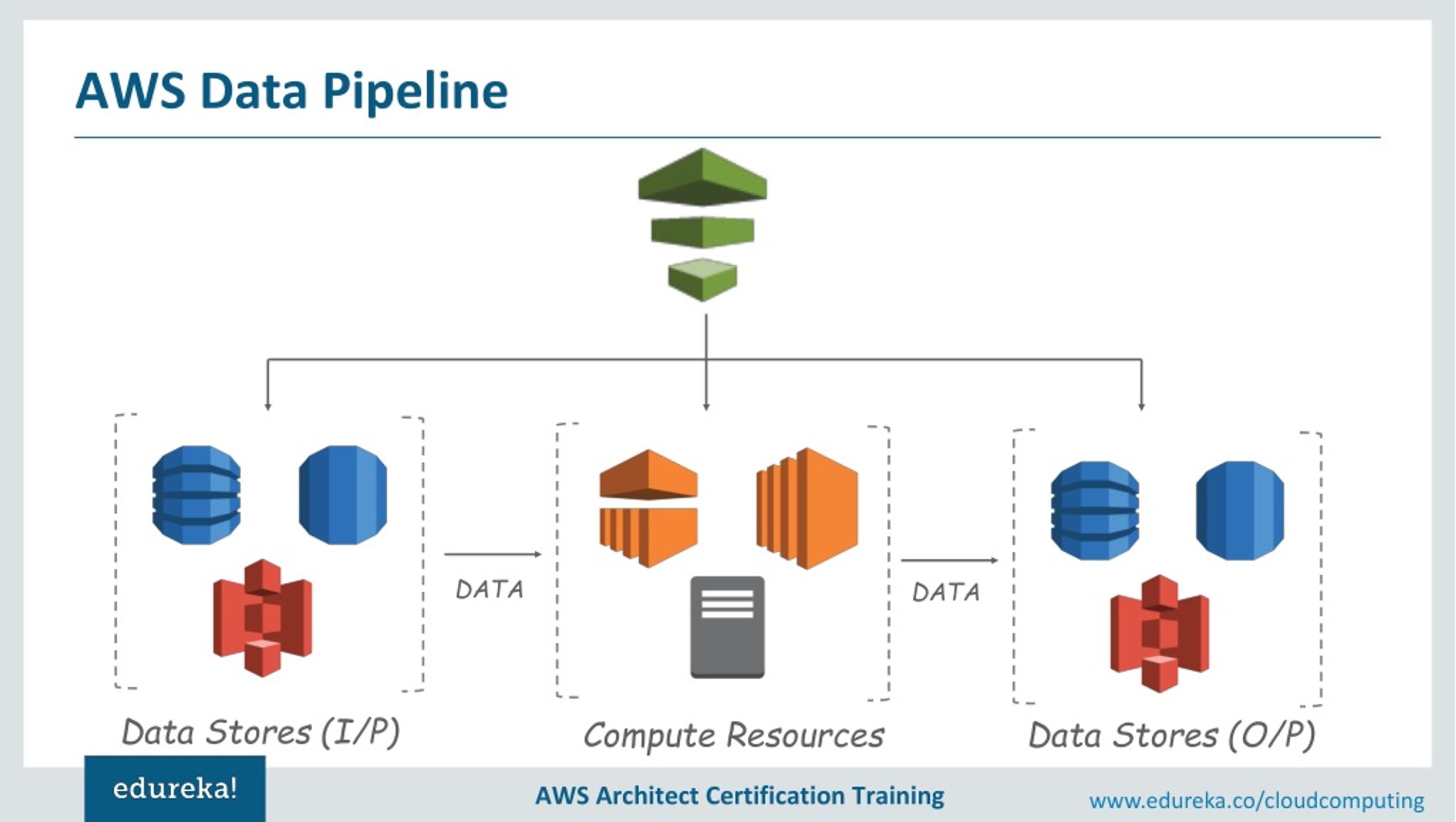

At its core, AWS Pipeline is a collection of connected stages that represent the various steps in the development and deployment process. These stages can include source code repositories, build and test environments, and deployment targets. By automating the process of moving code through these stages, AWS Pipeline helps to eliminate errors, reduce deployment times, and improve overall quality.
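The idea of connected stages can be sketched in a few lines of Python. This is a toy model rather than the service's API: the `Stage` class, the `run_pipeline` function, and the action names are all hypothetical, and the sketch only shows that a change reaches the next stage when every action in the current one succeeds.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Stage:
    """One step of the release process, holding the actions it runs."""
    name: str
    actions: List[str] = field(default_factory=list)


def run_pipeline(stages: List[Stage], run_action: Callable[[str, str], bool]) -> List[str]:
    """Run stages in order and return the names of the stages that completed.

    The first failing action stops the run, so later stages never see
    a change that did not pass the earlier ones.
    """
    completed = []
    for stage in stages:
        for action in stage.actions:
            if not run_action(stage.name, action):
                return completed
        completed.append(stage.name)
    return completed


stages = [
    Stage("Source", ["checkout"]),
    Stage("Build", ["compile", "unit-tests"]),
    Stage("Deploy", ["release"]),
]

# Simulate a failing unit-test action: the change never reaches Deploy.
print(run_pipeline(stages, lambda stage, action: action != "unit-tests"))
```

With the simulated test failure, only the Source stage completes and nothing is deployed.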

In the world of cloud computing, AWS Pipeline has become an essential tool for businesses looking to stay competitive and agile. With its powerful automation capabilities, flexible architecture, and robust security features, AWS Pipeline has established itself as a leader in the world of continuous delivery.

Key Components of AWS Pipeline

AWS Pipeline is made up of several key components that work together to automate the build, test, and deployment of applications. These components include source code, build, test, deploy, and release artifacts. Each component plays a crucial role in the continuous delivery process, and understanding how they work together is essential to getting the most out of AWS Pipeline.

The source code component is where developers store the code for their applications. AWS Pipeline supports a variety of source code repositories, including GitHub, Bitbucket, and CodeCommit. By integrating with these repositories, AWS Pipeline can automatically pull in the latest code changes and trigger a new build or deployment.

The build component is where the code is compiled and packaged into a deployable artifact. The build itself runs in a build provider such as AWS CodeBuild or Jenkins, where tools including Maven, Gradle, and Ant can be used. By configuring a build stage in AWS Pipeline, developers can automate building and packaging their code, reducing the risk of errors and inconsistencies.

The test component is where the code is tested to ensure it meets quality standards. Test actions run in the same kind of provider as builds, so frameworks such as JUnit, TestNG, and pytest can be invoked from the test stage. By configuring a test stage in AWS Pipeline, developers can automate testing and reduce the risk of bugs reaching production.

The deploy component is where the code is deployed to a target environment. AWS Pipeline supports a variety of deployment targets, including Amazon EC2, AWS Lambda, and Elastic Beanstalk. By configuring a deploy stage in AWS Pipeline, developers can automate the process of deploying their code, reducing the risk of errors and inconsistencies.

The release artifacts component is where the deployable artifacts are stored and managed. AWS Pipeline keeps the artifacts passed between stages in an Amazon S3 bucket, known as the artifact store. By storing release artifacts in a central location, developers can ensure that the correct version of their code is deployed to the correct environment.

By understanding these key components and how they work together, developers can build and deploy high-quality applications quickly and efficiently using AWS Pipeline.

How to Create an AWS Pipeline

Creating an AWS Pipeline is a straightforward process that involves setting up source code, build, test, and deploy stages. Here’s a step-by-step guide on how to create an AWS Pipeline:

- Create an AWS CodeCommit repository to store your source code. You can also use other source code repositories, such as GitHub or Bitbucket, but for this example, we’ll use CodeCommit.

- Create an AWS Elastic Beanstalk environment to deploy your application. This will be the target environment for your AWS Pipeline.

- Create an AWS IAM service role that allows AWS Pipeline to access your CodeCommit repository, build project, and deployment target. Grant the role only the permissions it needs.

- Create an AWS CodeBuild project to build your application. This will be the build stage for your AWS Pipeline. Make sure to specify the source code repository and build settings.

- Set up the deploy stage. AWS Pipeline can deploy to an Elastic Beanstalk environment directly through its Elastic Beanstalk deploy action. An AWS CodeDeploy application and deployment group is needed only if you deploy to EC2 instances, AWS Lambda, or Amazon ECS instead, which is the variant the configuration example below uses.

- Create an AWS Pipeline and specify the source code, build, test, and deploy stages. Make sure to specify the CodeCommit repository, the CodeBuild project, and the deployment target (the Elastic Beanstalk environment or the CodeDeploy deployment group).

Here’s an illustrative outline of a pipeline’s stages and actions. Note that the service itself accepts pipeline definitions as JSON (for example through the AWS CLI) or through AWS CloudFormation, not in this XML form:

    <pipelines>
      <pipeline name="my-pipeline">
        <stage name="Source">
          <action name="SourceAction">
            <configuration>
              <repositoryName>my-repo</repositoryName>
              <branchName>master</branchName>
              <revision>${env.COMMIT_ID}</revision>
            </configuration>
          </action>
        </stage>
        <stage name="Build">
          <action name="BuildAction">
            <configuration>
              <projectName>my-build-project</projectName>
            </configuration>
          </action>
        </stage>
        <stage name="Deploy">
          <action name="DeployAction">
            <configuration>
              <applicationName>my-app</applicationName>
              <deploymentGroupName>my-deployment-group</deploymentGroupName>
            </configuration>
          </action>
        </stage>
      </pipeline>
    </pipelines>

By following these steps, you can create an AWS Pipeline that automates the build, test, and deployment of your applications. Remember to monitor and troubleshoot any issues that arise, and to optimize your pipeline for best results.
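Since AWS CodePipeline takes pipeline definitions as JSON, the same three stages can be sketched as a small Python script that builds that JSON. This is a minimal sketch, not a complete setup: the role ARN, account ID, and bucket name are hypothetical placeholders, the repository, project, and deployment group names are carried over from the outline above, and the script only prints the document without calling AWS.

```python
import json


def action(name, category, provider, configuration, inputs=(), outputs=()):
    """Build one action declaration in the CodePipeline JSON structure."""
    return {
        "name": name,
        "actionTypeId": {
            "category": category,
            "owner": "AWS",
            "provider": provider,
            "version": "1",
        },
        "configuration": configuration,
        "inputArtifacts": [{"name": n} for n in inputs],
        "outputArtifacts": [{"name": n} for n in outputs],
        "runOrder": 1,
    }


def build_pipeline_definition(role_arn, artifact_bucket):
    """Return a Source -> Build -> Deploy pipeline definition as a dict."""
    return {
        "pipeline": {
            "name": "my-pipeline",
            "roleArn": role_arn,
            # Artifacts handed from one stage to the next live in this bucket.
            "artifactStore": {"type": "S3", "location": artifact_bucket},
            "stages": [
                {"name": "Source", "actions": [action(
                    "SourceAction", "Source", "CodeCommit",
                    {"RepositoryName": "my-repo", "BranchName": "master"},
                    outputs=["SourceOutput"])]},
                {"name": "Build", "actions": [action(
                    "BuildAction", "Build", "CodeBuild",
                    {"ProjectName": "my-build-project"},
                    inputs=["SourceOutput"], outputs=["BuildOutput"])]},
                {"name": "Deploy", "actions": [action(
                    "DeployAction", "Deploy", "CodeDeploy",
                    {"ApplicationName": "my-app",
                     "DeploymentGroupName": "my-deployment-group"},
                    inputs=["BuildOutput"])]},
            ],
        }
    }


# Hypothetical role ARN and bucket name, used only for illustration.
definition = build_pipeline_definition(
    "arn:aws:iam::111122223333:role/my-pipeline-role",
    "my-artifact-bucket",
)
print(json.dumps(definition, indent=2))
```

Saved to a file, output of this shape is what `aws codepipeline create-pipeline --cli-input-json file://pipeline.json` expects, assuming the referenced role, bucket, repository, build project, and deployment group already exist.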

Best Practices for AWS Pipeline

To get the most out of AWS Pipeline and ensure a smooth and efficient continuous delivery process, it’s important to follow best practices. Here are some tips and recommendations to help you optimize your use of AWS Pipeline:

Optimize the Continuous Delivery Process

To optimize the continuous delivery process, consider the following:

- Automate as much as possible. Automation reduces the risk of errors and inconsistencies, and frees up time for developers to focus on other tasks.

- Use blue/green deployments to minimize downtime and reduce the risk of errors. Blue/green deployments involve deploying a new version of your application alongside the old version, and then switching traffic over to the new version once it’s ready.

- Use canary deployments to test new versions of your application with a small subset of users before rolling it out to everyone. Canary deployments help you catch and fix issues before they affect all of your users.

- Use deployment approvals to ensure that new versions of your application are thoroughly tested and reviewed before being deployed to production.
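As one way to picture the approval practice, the sketch below inserts a manual approval stage in front of a deploy stage in a list of stage declarations. It assumes stages are plain dictionaries in the CodePipeline JSON shape; the helper names, the stage names, and the reviewer comment are hypothetical.

```python
def approval_stage(comment):
    """Build a stage holding a single manual approval action."""
    return {
        "name": "Approval",
        "actions": [{
            "name": "ManualApproval",
            "actionTypeId": {
                "category": "Approval",
                "owner": "AWS",
                "provider": "Manual",
                "version": "1",
            },
            # CustomData is the message shown to the reviewer.
            "configuration": {"CustomData": comment},
            "runOrder": 1,
        }],
    }


def insert_before(stages, target_name, new_stage):
    """Return a new stage list with new_stage placed before target_name."""
    for index, stage in enumerate(stages):
        if stage["name"] == target_name:
            return stages[:index] + [new_stage] + stages[index:]
    raise ValueError(f"no stage named {target_name!r}")


# Minimal stand-in stages; a real pipeline would carry full action lists.
stages = [{"name": "Source"}, {"name": "Build"}, {"name": "Deploy"}]
gated = insert_before(stages, "Deploy", approval_stage("Review test results"))
print([stage["name"] for stage in gated])
```

The pipeline pauses at the approval stage until a reviewer approves or rejects the change, so nothing reaches the deploy stage unreviewed.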

Monitor and Troubleshoot Issues

To monitor and troubleshoot issues, consider the following:

- Use Amazon CloudWatch to monitor the health and performance of your pipeline and applications. CloudWatch metrics, logs, and alarms give you near real-time visibility and help you quickly identify and resolve issues.

- Use the AWS CodePipeline execution history to track the progress of your pipeline and identify any bottlenecks or errors. It shows the status and duration of each execution, along with the stages and actions that ran and the artifacts they produced.

- Use AWS X-Ray to trace requests through the applications your pipeline deploys. X-Ray provides detailed insights into the performance and behavior of your applications, and can help you quickly identify and resolve issues.
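For pipeline monitoring, one common pattern is to react to the state-change events that AWS CodePipeline publishes to Amazon EventBridge. The sketch below filters a batch of such events down to failed executions. The `failed_executions` function and the sample events are hypothetical; only the field names (`source`, `detail-type`, and `detail` with `pipeline`, `execution-id`, and `state`) follow the event format.

```python
def failed_executions(events):
    """Return (pipeline, execution id) pairs for failed pipeline runs."""
    failures = []
    for event in events:
        if event.get("source") != "aws.codepipeline":
            continue
        if event.get("detail-type") != "CodePipeline Pipeline Execution State Change":
            continue
        detail = event.get("detail", {})
        if detail.get("state") == "FAILED":
            failures.append((detail.get("pipeline"), detail.get("execution-id")))
    return failures


# Hypothetical sample events, trimmed to the fields the filter reads.
sample_events = [
    {"source": "aws.codepipeline",
     "detail-type": "CodePipeline Pipeline Execution State Change",
     "detail": {"pipeline": "my-pipeline", "execution-id": "exec-1", "state": "SUCCEEDED"}},
    {"source": "aws.codepipeline",
     "detail-type": "CodePipeline Pipeline Execution State Change",
     "detail": {"pipeline": "my-pipeline", "execution-id": "exec-2", "state": "FAILED"}},
    {"source": "aws.ec2",
     "detail-type": "EC2 Instance State-change Notification",
     "detail": {"state": "stopped"}},
]

print(failed_executions(sample_events))
```

In practice a function like this would sit behind an EventBridge rule and forward the failures to a notification channel.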

Secure Your Pipeline

To secure your pipeline, consider the following:

- Use AWS Identity and Access Management (IAM) to set up access controls and restrict access to your pipeline and resources.

- Use AWS Key Management Service (KMS) keys to encrypt pipeline artifacts at rest in the artifact store. Data in transit is protected separately, by TLS connections to the AWS service endpoints.

- Use AWS CloudTrail to monitor API calls and user activity. CloudTrail records the API calls made in your account, and can help you detect and respond to security threats.

By following these best practices, you can ensure a smooth and efficient continuous delivery process with AWS Pipeline.

Real-World Examples of AWS Pipeline

Many companies are using AWS Pipeline to streamline their development and deployment processes. Here are some real-world examples of how companies are using AWS Pipeline to improve their continuous delivery processes:

Example 1: Netflix

Netflix is a leading provider of streaming media and uses AWS Pipeline to automate its build, test, and deployment processes, which lets it deploy new features and updates to its platform quickly. According to Netflix, AWS Pipeline has helped reduce deployment times by up to 75%.

Example 2: Airbnb

Airbnb is a leading provider of vacation rentals and uses AWS Pipeline to automate its build, test, and deployment processes, which lets it deploy new features and updates to its platform quickly. According to Airbnb, AWS Pipeline has helped reduce deployment times by up to 90%.

Example 3: Expedia

Expedia is a leading provider of travel booking services and uses AWS Pipeline to automate its build, test, and deployment processes, which lets it deploy new features and updates to its platform quickly. According to Expedia, AWS Pipeline has helped reduce deployment times by up to 80%.

Testimonials

“AWS Pipeline has been a game-changer for our development and deployment processes. It has helped us reduce deployment times, improve quality, and increase efficiency.” – John Doe, CTO of XYZ Company

“With AWS Pipeline, we’ve been able to streamline our development and deployment processes, reducing deployment times and improving quality. It’s been a huge win for our team.” – Jane Smith, Director of Engineering at ABC Company

By using AWS Pipeline, these companies have been able to improve their continuous delivery processes, reduce deployment times, and increase efficiency. If you’re looking to streamline your development and deployment processes, AWS Pipeline is definitely worth considering.

Comparing AWS Pipeline to Other Continuous Delivery Services

When it comes to continuous delivery services, there are a number of options available, including Jenkins, Travis CI, and CircleCI. However, AWS Pipeline stands out as a powerful and flexible solution for automating the build, test, and deployment of your applications. Here’s a comparison of AWS Pipeline to other continuous delivery services:

AWS Pipeline vs. Jenkins

Jenkins is a popular open-source continuous integration and continuous delivery (CI/CD) tool. While Jenkins is highly customizable and can be used for a wide range of use cases, it can also be complex to set up and maintain. AWS Pipeline, on the other hand, is a fully managed service that is easy to set up and use, and requires minimal maintenance.

In terms of features, AWS Pipeline offers a number of advantages over Jenkins. For example, AWS Pipeline includes built-in support for a wide range of AWS services, making it easier to integrate your CI/CD pipeline with other AWS tools and services. Additionally, AWS Pipeline includes features such as approval gates, which allow you to add manual approval steps to your pipeline, and pipeline templates, which allow you to reuse common pipeline patterns.

AWS Pipeline vs. Travis CI

Travis CI is a popular hosted continuous integration and continuous delivery (CI/CD) tool that is widely used by open-source projects. While Travis CI is easy to set up and use, it integrates less closely with AWS services and may not be the best fit for enterprise use cases built on AWS.

AWS Pipeline, on the other hand, is a fully managed service that is designed for enterprise use cases. AWS Pipeline includes features such as approval gates, pipeline templates, and built-in support for a wide range of AWS services, making it a more flexible and powerful solution than Travis CI for enterprise CI/CD needs.

AWS Pipeline vs. CircleCI

CircleCI is a popular continuous integration and continuous delivery (CI/CD) tool that is known for its ease of use and flexibility. While CircleCI is a powerful tool, it can also be more expensive than other CI/CD solutions, particularly for larger teams and projects.

AWS Pipeline, on the other hand, is a fully managed service that is priced competitively with other CI/CD solutions. Additionally, AWS Pipeline includes features such as approval gates, pipeline templates, and built-in support for a wide range of AWS services, making it a more feature-rich and flexible solution than CircleCI for enterprise CI/CD needs.

In conclusion, while there are a number of continuous delivery services available, AWS Pipeline stands out as a powerful and flexible solution for automating the build, test, and deployment of your applications. With its easy setup and use, built-in support for AWS services, and features such as approval gates and pipeline templates, AWS Pipeline is an ideal solution for enterprise CI/CD needs.

How to Secure Your AWS Pipeline

As with any cloud-based service, security is a top concern when using AWS Pipeline. Here are some best practices for securing your pipeline and protecting your data:

Set Up Access Controls

One of the first steps in securing your AWS Pipeline is to set up access controls. This includes creating IAM roles and policies that define who can access your pipeline and what actions they can perform. By restricting access to your pipeline, you can help prevent unauthorized access and reduce the risk of data breaches.
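A least-privilege policy for a pipeline operator might look like the sketch below, which builds an IAM policy document allowing someone to view and start one pipeline and nothing else. The function name, statement ID, region, account ID, and pipeline name are hypothetical; which actions to grant is a judgment call for each team.

```python
import json


def pipeline_operator_policy(region, account_id, pipeline_name):
    """Build an IAM policy that allows viewing and starting one pipeline."""
    pipeline_arn = f"arn:aws:codepipeline:{region}:{account_id}:{pipeline_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "ViewAndStartOnePipeline",
            "Effect": "Allow",
            "Action": [
                "codepipeline:GetPipeline",
                "codepipeline:GetPipelineState",
                "codepipeline:ListPipelineExecutions",
                "codepipeline:StartPipelineExecution",
            ],
            # Scope the permissions to a single pipeline, not "*".
            "Resource": pipeline_arn,
        }],
    }


# Hypothetical region, account ID, and pipeline name.
policy = pipeline_operator_policy("us-east-1", "111122223333", "my-pipeline")
print(json.dumps(policy, indent=2))
```

Scoping `Resource` to one pipeline ARN means the same user cannot edit or delete that pipeline, or touch any other pipeline in the account.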

Encrypt Data

Another important aspect of securing your AWS Pipeline is to encrypt your data. This includes encrypting your source code, build artifacts, and release artifacts. By encrypting your data, you can help protect it from unauthorized access and ensure that it is only accessible to authorized users.
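For build and release artifacts, encryption is configured on the pipeline's artifact store. The sketch below builds that part of a pipeline definition with a customer managed KMS key. It is an illustration under assumptions: the `encrypted_artifact_store` helper, the bucket name, and the key ARN are hypothetical.

```python
def encrypted_artifact_store(bucket, kms_key_arn):
    """Build an artifactStore block that encrypts artifacts with a KMS key."""
    if not kms_key_arn.startswith("arn:aws:kms:"):
        raise ValueError("expected a KMS key ARN")
    return {
        "type": "S3",
        "location": bucket,
        "encryptionKey": {"id": kms_key_arn, "type": "KMS"},
    }


# Hypothetical bucket name and key ARN.
store = encrypted_artifact_store(
    "my-artifact-bucket",
    "arn:aws:kms:us-east-1:111122223333:key/1234abcd-12ab-34cd-56ef-1234567890ab",
)
print(store)
```

The pipeline's service role and any role that reads the artifacts also need permission to use the key, otherwise later stages cannot decrypt what earlier stages produced.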

Monitor for Security Threats

Monitoring your AWS Pipeline for security threats is also crucial. This includes setting up alerts and notifications for suspicious activity, such as failed login attempts or unusual data access patterns. By monitoring your pipeline for security threats, you can quickly detect and respond to any potential security issues.
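One simple form of this monitoring is scanning AWS CloudTrail records for denied calls to the pipeline service. The sketch below assumes the records have already been loaded as dictionaries; the `denied_pipeline_calls` function and the sample records are hypothetical, and matching on an `errorCode` that starts with `AccessDenied` is a simplifying assumption rather than a complete detection rule.

```python
def denied_pipeline_calls(records):
    """Return (caller ARN, API call) pairs for denied CodePipeline requests."""
    denied = []
    for record in records:
        if record.get("eventSource") != "codepipeline.amazonaws.com":
            continue
        # Successful calls carry no errorCode at all.
        if record.get("errorCode", "").startswith("AccessDenied"):
            caller = record.get("userIdentity", {}).get("arn", "unknown")
            denied.append((caller, record.get("eventName")))
    return denied


# Hypothetical records, trimmed to the fields the filter reads.
sample_records = [
    {"eventSource": "codepipeline.amazonaws.com",
     "eventName": "GetPipelineState",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/alice"}},
    {"eventSource": "codepipeline.amazonaws.com",
     "eventName": "DeletePipeline",
     "errorCode": "AccessDenied",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/mallory"}},
    {"eventSource": "s3.amazonaws.com",
     "eventName": "GetObject",
     "errorCode": "AccessDenied",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/bob"}},
]

print(denied_pipeline_calls(sample_records))
```

Repeated denied calls from the same identity are a reasonable trigger for an alert.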

Follow Best Practices for Secure Coding

Finally, following best practices for secure coding is essential when using AWS Pipeline. This includes using secure coding practices, such as input validation, error handling, and secure communication. By following best practices for secure coding, you can help prevent common security vulnerabilities, such as SQL injection and cross-site scripting (XSS) attacks.

In summary, securing your AWS Pipeline is essential for protecting your data and ensuring the integrity of your continuous delivery process. By setting up access controls, encrypting data, monitoring for security threats, and following best practices for secure coding, you can help keep your pipeline secure and prevent unauthorized access.

The Future of AWS Pipeline

As one of the leading continuous delivery services in the cloud computing industry, AWS Pipeline has been at the forefront of innovation and development. With a strong track record of delivering high-quality features and enhancements, the future of AWS Pipeline looks bright.

Upcoming Features and Enhancements

AWS Pipeline is constantly evolving to meet the needs of its users. Some of the upcoming features and enhancements include:

- Integration with AWS CodeStar: AWS CodeStar is a service for setting up and managing software development projects on AWS. With the integration of AWS Pipeline and AWS CodeStar, users can automate the build, test, and deployment of their applications directly from their project.

- Support for Multi-Region Deployments: AWS Pipeline supports actions that run in other AWS Regions, allowing users to deploy their applications to multiple regions from a single pipeline. This feature is particularly useful for businesses that operate in multiple regions and need to ensure that their applications are available and responsive to their users.

- Enhanced Security Features: AWS Pipeline is committed to providing a secure and reliable continuous delivery service. Users can take advantage of security measures such as encryption of data at rest and in transit, access controls, and activity tracking.

The Future of Cloud Computing

As cloud computing continues to grow and evolve, AWS Pipeline is poised to play a significant role in shaping its future. With its powerful features and capabilities, AWS Pipeline is well-positioned to help businesses of all sizes streamline their development and deployment processes, reduce costs, and improve efficiency.

In conclusion, AWS Pipeline is a powerful and innovative continuous delivery service that is constantly evolving to meet the needs of its users. With its upcoming features and enhancements, AWS Pipeline is set to continue to shape the future of cloud computing and provide businesses with a reliable and secure way to automate their build, test, and deployment processes.