Understanding the Benefits of Managed Redis Services

The adoption of in-memory data stores like Redis has surged because they can dramatically accelerate application performance. Running Redis yourself, however, is demanding: you have to provision servers, apply software updates, scale capacity, and keep the service highly available. This is where managed Redis services such as Amazon ElastiCache for Redis provide substantial advantages. With a managed service, the provider takes on the operational overhead of a self-managed deployment, so development teams can concentrate on building features. Routine tasks such as patching, backups, and monitoring are handled for you, which significantly reduces the administrative burden. ElastiCache can also scale capacity automatically (for example, through auto scaling policies on cluster-mode-enabled clusters), adjusting resources to fluctuating demand where a self-managed environment would need manual intervention. Multi-AZ deployments with automatic failover protect against infrastructure failures and keep the cache available. Self-managed setups, by contrast, require organizations to build and maintain their own disaster recovery and failover mechanisms. ElastiCache for Redis has become a popular choice because of its integration with the rest of the AWS ecosystem, combined with its reliability, scalability, and ease of use.

The operational efficiency of a managed Redis service goes beyond convenience. Fewer manual configuration steps mean fewer opportunities for human error, which translates into greater stability and predictability. A managed service standardizes infrastructure management, giving you consistent behavior across environments. Scaling with demand helps applications avoid bottlenecks caused by resource limits, and adapting capacity to actual utilization helps control cost. Scaling a self-managed Redis deployment, in contrast, often requires coordination between teams and carries the risk of over- or under-provisioning. Built-in high availability means application uptime does not depend on designing and operating a failover architecture from scratch. Integration with other AWS services adds further appeal, letting organizations build more sophisticated solutions while keeping infrastructure management streamlined.

How to Choose the Right AWS ElastiCache for Redis Deployment Option

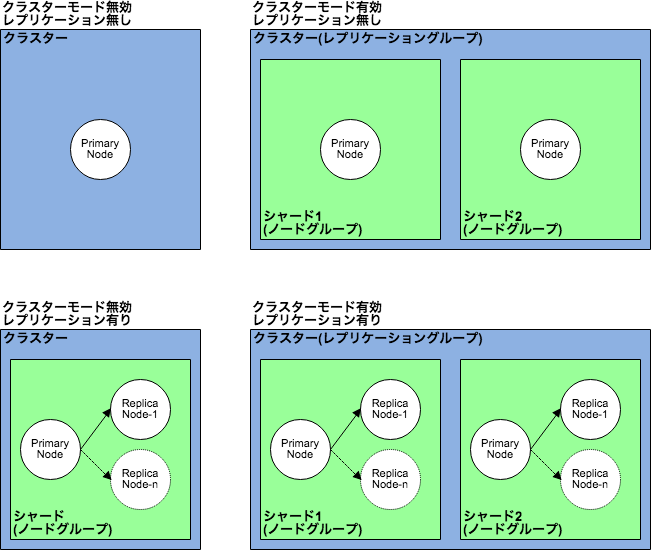

Amazon ElastiCache for Redis offers several deployment options, each suited to different workloads and scaling requirements. The primary choice is whether to enable cluster mode. A cluster-mode-disabled deployment is simpler to manage and suits smaller applications with relatively predictable workloads. It consists of a single shard: one primary node that handles all writes, optionally with up to five read replicas. Because all data lives on that one primary, dataset size and write throughput are limited to the capacity of a single node, although replicas can absorb read traffic and, with Multi-AZ enabled, take over if the primary fails. A single node with no replicas is a reasonable low-cost choice for development or small-scale environments where high availability isn't paramount. In this mode, choosing the right node type is crucial, since it sets the ceiling for the whole deployment.

Enabling cluster mode, by contrast, unlocks significantly greater scalability. The keyspace is partitioned across multiple shards (node groups), each with its own primary and optional replicas, and the cluster fails over automatically when a primary becomes unavailable. This approach is more complex to manage than a single-shard deployment, but it provides substantial resilience and scalability for large, demanding applications. Cluster mode supports online resharding, so you can add or remove shards to meet fluctuating demand while the cluster continues to serve requests. The number of shards and the number of replicas per shard give you fine-grained control over data distribution and resource allocation. Choose a cluster configuration based on anticipated data volume, read/write ratio, and the level of availability you need. Careful planning at this stage is crucial for both performance and cost efficiency.
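To make "partitioned across shards" concrete: open-source Redis Cluster, which ElastiCache cluster mode builds on, maps every key to one of 16,384 hash slots by taking CRC16 of the key modulo 16384, and each shard owns a range of slots. The Python sketch below reimplements that mapping as described in the Redis Cluster specification, including hash tags (the substring inside the first `{...}`), which let related keys land on the same shard. It is illustrative only: a cluster-aware client computes slots for you, and the function names here are our own.

```python
"""Sketch of Redis Cluster key-to-slot mapping (CRC16/XMODEM mod 16384)."""

HASH_SLOTS = 16384


def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT, XMODEM variant: polynomial 0x1021, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: str) -> int:
    """Return the hash slot (0..16383) for a key, honoring {hash tags}."""
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Only a non-empty tag counts; "foo{}bar" hashes the whole key.
        if end != -1 and end != start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % HASH_SLOTS


if __name__ == "__main__":
    for k in ("user:1000", "{user:1000}:profile", "{user:1000}:sessions"):
        print(k, key_hash_slot(k))
```

Keys that share a hash tag always map to the same slot, and therefore the same shard, which is what makes multi-key operations on them possible in cluster mode.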

Beyond cluster mode, you also need to select the node type and the network configuration. Larger node types offer more memory, CPU, and network bandwidth, improving performance at higher cost. Proper sizing is critical for avoiding bottlenecks without paying for idle capacity. Network configuration influences latency and bandwidth, and therefore application responsiveness: placing the ElastiCache cluster in the same Region and VPC as the client applications and other AWS resources that call it, and ideally in the same Availability Zones, keeps round trips short. Weighing these factors helps you align the deployment with your application's requirements and growth trajectory.
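A rough sizing calculation turns "proper sizing" into numbers. The sketch below estimates how many shards a dataset needs. Every input is an assumption to replace with your own measurements: the overhead factor (Redis stores keys, pointers, and expiry metadata alongside values), the fraction of node memory held back for non-data use (ElastiCache exposes this as the `reserved-memory-percent` parameter; 25% is assumed here), and a target utilization that leaves headroom for growth. Take the memory of your candidate node type from the ElastiCache documentation rather than from the hypothetical example value.

```python
import math


def estimate_shards(
    dataset_gib: float,
    node_memory_gib: float,
    overhead_factor: float = 1.2,     # assumed Redis per-key overhead
    reserved_fraction: float = 0.25,  # assumed reserved-memory-percent of 25%
    target_utilization: float = 0.8,  # keep 20% headroom for growth
) -> int:
    """Estimate how many shards are needed to hold a dataset in memory."""
    usable_per_node = node_memory_gib * (1 - reserved_fraction) * target_utilization
    required = dataset_gib * overhead_factor
    return max(1, math.ceil(required / usable_per_node))


def estimate_total_nodes(shards: int, replicas_per_shard: int) -> int:
    """Each shard has one primary plus its replicas."""
    return shards * (1 + replicas_per_shard)


if __name__ == "__main__":
    # Hypothetical inputs: 21 GiB of data on nodes with 10 GiB of memory each.
    shards = estimate_shards(21, 10)
    print(shards, estimate_total_nodes(shards, replicas_per_shard=1))
```

If the estimate comes out above one shard, the data will not fit in a cluster-mode-disabled deployment on that node type, so either pick a larger node type or enable cluster mode.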

Exploring Key Features of Amazon ElastiCache for Redis

Amazon ElastiCache for Redis offers a range of features that simplify managing and optimizing Redis deployments. For data durability, open-source Redis provides two persistence mechanisms: RDB (Redis Database) snapshots, which capture point-in-time copies of the dataset at intervals, and AOF (Append Only File), which logs every write operation for more granular recovery. The trade-off is between recovery speed and data loss: restoring from an RDB snapshot is fast, but writes made since the last snapshot are lost, whereas AOF minimizes data loss at the cost of larger files and slower recovery. On ElastiCache, persistence is provided mainly through backups, which are RDB snapshots taken automatically on a daily schedule or on demand. AOF was supported only on early Redis 2.8 engine versions and is not available on current versions; for those, AWS recommends Multi-AZ replication with automatic failover to protect against node failure. Choose a backup schedule and replication setup based on your application's recovery point objective (RPO), which bounds acceptable data loss, and its recovery time objective (RTO), which bounds acceptable downtime.

Security is paramount when dealing with sensitive data, and ElastiCache for Redis provides several layers of protection. Encryption in transit uses TLS to protect data moving between your application and the cluster. Encryption at rest protects data written to disk during synchronization and backup operations, using either the default key or a customer managed key in AWS Key Management Service (KMS). Together these measures protect data throughout its lifecycle and help you meet security and compliance requirements. ElastiCache also supports multiple Redis engine versions; staying on a current, supported version ensures you receive the latest security fixes and performance improvements, so regular engine upgrades are a key part of maintaining a secure and efficient caching infrastructure.

Beyond persistence and security, ElastiCache for Redis provides features that improve operational efficiency and scalability. It integrates with other AWS services, such as Amazon CloudWatch for monitoring and AWS Identity and Access Management (IAM) for access control, which allows centralized monitoring and security management across your AWS environment. You can also scale a cluster vertically, by changing the node type, or horizontally, by adding shards or replicas, to adapt to changing demand with minimal downtime. This flexibility lets your caching infrastructure grow alongside your application and maintain consistent performance during peak usage.

Optimizing Performance for Your AWS Managed Redis Cache

Optimizing the performance of a managed Redis cluster calls for several complementary strategies. Connection pooling comes first: reusing open connections avoids the cost of establishing a new connection (and, with encryption in transit, a new TLS handshake) on every request. The gains are largest under heavy load and with many short-lived requests, where pooling reduces latency and improves overall responsiveness. Client-side caching is another useful technique: frequently accessed data is kept in the application's own memory, reducing the number of requests sent to the Redis cluster. It works best for data with a high read-to-write ratio that can tolerate brief staleness. Finally, size the cluster's node types to match the expected workload. Under-provisioning leads to performance bottlenecks, while over-provisioning wastes money, so weigh memory, CPU, and network capacity to strike the right balance between performance and cost.
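Client-side caching can be as simple as a small in-process map with a short time-to-live placed in front of Redis reads. The sketch below is a hypothetical helper, not part of any Redis client: `fetch` stands in for whatever function reads from Redis (for example, a wrapper around a client's `get`). Entries expire after `ttl_seconds`, so the application may serve values up to that old, and the least recently used entry is evicted once `max_entries` is exceeded.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable, Tuple


class NearCache:
    """In-process LRU cache with a per-entry TTL, placed in front of Redis reads."""

    def __init__(
        self,
        fetch: Callable[[Hashable], Any],
        max_entries: int = 1024,
        ttl_seconds: float = 5.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch                  # hypothetical remote read, e.g. a GET wrapper
        self._max_entries = max_entries
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: "OrderedDict[Hashable, Tuple[float, Any]]" = OrderedDict()
        self.remote_calls = 0                # how many reads went to Redis

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            self._entries.move_to_end(key)   # mark as most recently used
            return entry[1]
        # Miss or expired entry: read through to the remote cache.
        value = self._fetch(key)
        self.remote_calls += 1
        self._entries[key] = (now + self._ttl, value)
        self._entries.move_to_end(key)
        if len(self._entries) > self._max_entries:
            self._entries.popitem(last=False)  # evict least recently used
        return value


if __name__ == "__main__":
    cache = NearCache(lambda key: f"value-for-{key}", ttl_seconds=2.0)
    cache.get("config")
    cache.get("config")
    print(cache.remote_calls)
```

The TTL is the only freshness mechanism here, so keep it short for data that changes often.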

Read replicas are a powerful way to improve the performance of read-heavy workloads. Distributing reads across multiple replicas reduces the load on the primary node and shortens response times, which suits applications that issue far more reads than writes. Replicas also improve availability and fault tolerance, because a replica can be promoted if the primary fails. Keep in mind that Redis replication from the primary to its replicas is asynchronous: the primary acknowledges a write before replicas receive it, so a read from a replica can briefly return stale data, and writes acknowledged just before a failover can be lost. Route reads that must observe the latest write to the primary, and send reads that tolerate slight staleness to replicas. In cluster-mode-disabled deployments, ElastiCache provides a reader endpoint that spreads connections across the replicas.
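When an application manages its own per-node clients instead of using the reader endpoint, the routing rule above can be expressed as a small helper. This is a hypothetical sketch: the node values stand in for real client objects, reads are spread round-robin, and callers opt into primary reads when they need read-your-writes behavior.

```python
import itertools
from typing import Generic, Sequence, TypeVar

Node = TypeVar("Node")


class ReadWriteRouter(Generic[Node]):
    """Send writes to the primary; spread staleness-tolerant reads round-robin."""

    def __init__(self, primary: Node, replicas: Sequence[Node]) -> None:
        self.primary = primary
        self._replicas = itertools.cycle(list(replicas)) if replicas else None

    def for_write(self) -> Node:
        return self.primary

    def for_read(self, require_latest: bool = False) -> Node:
        # Replication is asynchronous, so replicas may lag the primary.
        # Reads that must see the caller's most recent write go to the primary.
        if require_latest or self._replicas is None:
            return self.primary
        return next(self._replicas)


if __name__ == "__main__":
    router = ReadWriteRouter("primary", ["replica-1", "replica-2"])
    print(router.for_read(), router.for_read(), router.for_read(require_latest=True))
```

Falling back to the primary when no replicas exist keeps the same code working on a single-node deployment.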

Beyond these core strategies, other techniques can further improve performance. Choose appropriate Redis data structures, such as hashes for structured records or lists for ordered collections. Use compact serialization formats to reduce both the bytes transferred between the application and Redis and the memory those values occupy. Monitor key performance indicators (KPIs) regularly to identify and address bottlenecks proactively; Amazon CloudWatch provides detailed ElastiCache metrics that inform capacity planning and tuning decisions. Together, these techniques help you build responsive, scalable applications on top of managed Redis.
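As one example of compact serialization, the sketch below encodes values as JSON without whitespace and zlib-compresses payloads above a size threshold. The one-byte flag prefix is a hypothetical format of our own that every reader and writer of the key must agree on; compression trades CPU time for fewer bytes. JSON is chosen here for portability, while binary formats such as MessagePack or Protocol Buffers are often more compact.

```python
import json
import zlib
from typing import Any

RAW_FLAG = b"\x00"   # payload stored as plain compact JSON
ZLIB_FLAG = b"\x01"  # payload stored as zlib-compressed JSON


def encode_value(value: Any, compress_over: int = 1024) -> bytes:
    """Serialize to compact JSON; compress payloads larger than the threshold."""
    payload = json.dumps(value, separators=(",", ":")).encode("utf-8")
    if len(payload) > compress_over:
        return ZLIB_FLAG + zlib.compress(payload)
    return RAW_FLAG + payload


def decode_value(blob: bytes) -> Any:
    """Reverse encode_value using the one-byte flag prefix."""
    flag, body = blob[:1], blob[1:]
    if flag == ZLIB_FLAG:
        body = zlib.decompress(body)
    elif flag != RAW_FLAG:
        raise ValueError(f"unknown encoding flag: {flag!r}")
    return json.loads(body.decode("utf-8"))


if __name__ == "__main__":
    record = {"items": ["x" * 10] * 500}
    blob = encode_value(record)
    print(len(json.dumps(record)), len(blob))
```

The bytes returned by `encode_value` are what the application would store under a Redis key, and `decode_value` is applied to whatever a read returns.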

Comparing ElastiCache Redis with Other Caching Solutions on AWS

When evaluating caching solutions on AWS, it's important to understand how ElastiCache for Redis compares with alternatives such as ElastiCache for Memcached and DynamoDB Accelerator (DAX). All three serve caching purposes, but their architectures and use cases differ significantly. ElastiCache for Memcached targets simpler caching needs, focusing on ease of use and speed, but lacks the advanced data structures, replication, and persistence options available in Redis. Memcached offers a basic key-value model, making it a good fit for caching data that can be regenerated and where losing cached data isn't critical. Its simplicity means lower overhead, but it doesn't offer the flexibility or feature set of Redis. ElastiCache for Redis, on the other hand, provides richer data structures such as lists, sets, sorted sets, and hashes, along with backup and restore. This makes Redis a more versatile choice for complex application caching, session management, and publish/subscribe messaging, and it enables use cases beyond basic key-value caching, such as leaderboards or lightweight message queues. Choosing between Memcached and Redis generally comes down to simplicity versus features.

DynamoDB Accelerator (DAX), unlike both ElastiCache options, is designed specifically as a caching layer for Amazon DynamoDB, a NoSQL database. DAX is a write-through cache: writes sent through DAX are written to DynamoDB and, once DynamoDB acknowledges them, stored in the DAX item cache. Because DAX is API-compatible with DynamoDB, applications can adopt it with few code changes, and reads served from the cache are significantly faster than reads directly from DynamoDB. While DAX effectively reduces DynamoDB read latency, it is not a general-purpose cache: it caches only DynamoDB items and query or scan results, does not offer Redis data structures, and does not function independently of DynamoDB. It is therefore not a replacement for Redis when you need a flexible cache outside a DynamoDB environment. The right choice depends on your application's requirements and underlying data infrastructure. If you need a feature-rich cache with advanced data structures and durability options that is decoupled from the underlying database, ElastiCache for Redis is the better fit. If your primary goal is accelerating DynamoDB reads, DAX is more appropriate. If a simple in-memory key-value cache is all your application needs, Memcached may be the best option. Understanding these differences helps you make an informed decision that balances performance and cost.

Step-by-Step Guide: Setting Up Your First ElastiCache for Redis Cluster

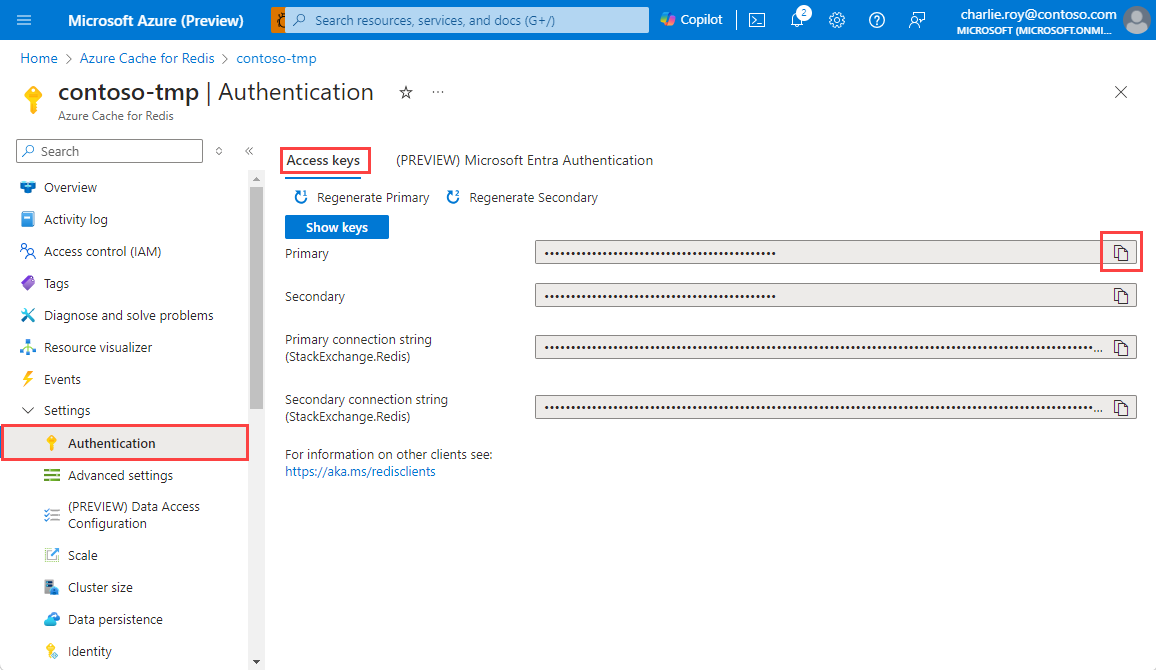

You can create an ElastiCache for Redis cluster using either the AWS Management Console or the AWS Command Line Interface (CLI). In the console, navigate to the ElastiCache service, choose to create a cluster, and select Redis as the engine. Next, decide whether to enable cluster mode, which determines how data is partitioned and how far the cluster can scale. Consider your application's throughput and latency needs: for simple applications, cluster mode disabled might suffice, whereas applications that need horizontal scaling should enable it. Further configuration includes the node type, the number of shards and replicas, the VPC and subnet group for network isolation, and the security group rules that control which clients can connect. These settings affect both performance and security, so plan them carefully. You can also set the port Redis listens on (6379 by default) and enable encryption in transit and at rest to protect sensitive data.

Alternatively, the AWS CLI lets you create a cluster programmatically, which suits automation and infrastructure-as-code workflows. The following example creates a cluster-mode-disabled replication group with one primary and two replicas, with automatic failover across Availability Zones (replace the IDs, names, and engine version with your own settings):

```shell
aws elasticache create-replication-group \
    --replication-group-id my-redis-cluster \
    --replication-group-description "My First Redis Cluster" \
    --engine redis \
    --engine-version 7.1 \
    --cache-node-type cache.m5.large \
    --num-cache-clusters 3 \
    --automatic-failover-enabled \
    --multi-az-enabled \
    --cache-subnet-group-name my-subnet-group \
    --security-group-ids sg-xxxxxxxxxxxxxxxxx
```

Make sure the security group and cache subnet group belong to your VPC. After you run the command, you can follow the cluster's progress in the ElastiCache console (including its Events section) or with `aws elasticache describe-replication-groups`. Key parameters are the node type, which affects cost and performance; the number of nodes, which affects redundancy and read capacity; and the network configuration, which determines access control and how the cluster interacts with other services. Once the cluster's status is available, monitor resource utilization with the CloudWatch metrics available in the console or through the CLI.

Whether you use the console or the CLI, getting the configuration right is essential for good performance. Pay close attention to security groups, VPC settings, and node types so that they match your application's requirements and projected growth. Once the cluster is running, connect your application through a Redis client library for your language, pointing it at the cluster's endpoint. Review performance metrics regularly so you can adjust settings as your workload evolves, and consult the AWS documentation for specific details and best practices.

Monitoring and Maintaining your ElastiCache for Redis Deployment

Effective monitoring is essential to keeping an ElastiCache for Redis deployment healthy and fast. Amazon CloudWatch provides a comprehensive set of metrics for your cluster. Key metrics to track include CPU utilization (including EngineCPUUtilization, which reflects the Redis engine thread), memory usage, evictions, cache hit rate, network throughput, and current connections. Sustained high CPU or memory usage can indicate that you need to scale the cluster or revisit your caching strategy. A low cache hit rate suggests data isn't being cached effectively, warranting a review of your TTLs, invalidation strategy, and data access patterns. Network throughput metrics help identify bottlenecks or unusual traffic patterns, and the connection count shows how your application's connection handling behaves under concurrent load. Set up CloudWatch alarms on these metrics so you are notified automatically of critical conditions and can respond quickly.
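Two calculations sit behind the monitoring advice above: deriving a hit rate from the `CacheHits` and `CacheMisses` metrics that ElastiCache publishes in the `AWS/ElastiCache` namespace, and the "M out of N datapoints" rule that CloudWatch alarms use to decide when to fire. The sketch below is a local model for reasoning about thresholds before you create real alarms (for example, with `aws cloudwatch put-metric-alarm` on `EngineCPUUtilization`); it is not CloudWatch API code, and it ignores missing-data handling.

```python
from typing import Optional, Sequence


def cache_hit_rate(hits: float, misses: float) -> Optional[float]:
    """Hit rate from the CacheHits and CacheMisses sums for one period."""
    total = hits + misses
    return hits / total if total else None  # undefined when there was no traffic


def should_alarm(
    datapoints: Sequence[float],
    threshold: float,
    datapoints_to_alarm: int,
    evaluation_periods: int,
) -> bool:
    """Local model of an 'M out of N' alarm with a greater-than threshold.

    Only the most recent `evaluation_periods` datapoints are considered; the
    alarm fires when at least `datapoints_to_alarm` of them breach.
    """
    recent = list(datapoints)[-evaluation_periods:]
    breaching = sum(1 for value in recent if value > threshold)
    return breaching >= datapoints_to_alarm


if __name__ == "__main__":
    cpu = [50, 85, 90, 70, 95]  # hypothetical EngineCPUUtilization averages
    print(cache_hit_rate(900, 100), should_alarm(cpu, 80, 3, 5))
```

Requiring several breaching datapoints within a window, rather than one, keeps a single spike from paging anyone while still catching sustained load.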

Beyond CloudWatch metrics, ElastiCache events help you maintain availability. Events report cluster maintenance, node failures and replacements, failovers, and other significant changes. Subscribing to event notifications through Amazon SNS lets you learn about status changes as they happen. For example, if a node fails, you receive a notification and can investigate the root cause or decide whether to scale; with Multi-AZ enabled, ElastiCache performs the failover to a replica automatically. Reviewing event history regularly can also reveal patterns that are not obvious from metrics alone. Combining CloudWatch metrics with ElastiCache events gives you a robust framework for maintaining availability and performance, and it improves your overall operational efficiency.

Maintaining consistent performance requires regular monitoring, proactive alerting, and a practiced incident response process. Review your alarms periodically to make sure their thresholds still match your system's needs, and test your incident response procedures to confirm they work. Building these practices into routine maintenance minimizes disruptions and lets the cache deliver its intended performance and availability. Finally, follow connection management best practices: return connections to the pool promptly, close connections that sit idle, and cap pool sizes, because leaked or unbounded connections can exhaust the client pool or push the node toward its connection limit and degrade performance.

Best Practices for Using AWS Managed Redis for Scalable Applications

Using managed Redis effectively in scalable applications depends on a few key best practices. Proper connection handling comes first: connection pooling significantly reduces the overhead of establishing and closing connections to the Redis server, which matters most in high-traffic environments where connection setup can become a bottleneck. Most client libraries and frameworks provide built-in connection pooling, and you should use it. A pool keeps a bounded number of connections open and reuses them across requests instead of creating and destroying a connection for every interaction with Redis, which reduces latency and improves responsiveness.
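Client libraries normally ship a pool (redis-py's `ConnectionPool`, for example), and you should prefer it. To show what such a pool does, here is a minimal, thread-safe sketch using only the standard library. `factory` is a hypothetical callable that opens one connection; the pool never holds more than `max_size` connections and waits up to `timeout` seconds for one to be returned when all are in use.

```python
import queue
import threading
from contextlib import contextmanager
from typing import Any, Callable, Iterator


class ConnectionPool:
    """Bounded pool that reuses connections instead of opening one per request."""

    def __init__(self, factory: Callable[[], Any], max_size: int = 10, timeout: float = 2.0) -> None:
        self._factory = factory
        self._idle: "queue.LifoQueue[Any]" = queue.LifoQueue(maxsize=max_size)
        self._max_size = max_size
        self._timeout = timeout
        self._created = 0
        self._lock = threading.Lock()

    @property
    def created(self) -> int:
        return self._created

    def _acquire(self) -> Any:
        try:
            return self._idle.get_nowait()  # reuse the most recently returned connection
        except queue.Empty:
            pass
        with self._lock:
            can_create = self._created < self._max_size
            if can_create:
                self._created += 1
        if can_create:
            try:
                return self._factory()
            except Exception:
                with self._lock:
                    self._created -= 1  # the slot was not actually used
                raise
        # Pool exhausted: wait for another caller to return a connection.
        # Raises queue.Empty if none comes back within the timeout.
        return self._idle.get(timeout=self._timeout)

    @contextmanager
    def connection(self) -> Iterator[Any]:
        conn = self._acquire()
        try:
            yield conn
        finally:
            self._idle.put(conn)  # always return the connection to the pool
```

Using the context manager guarantees that each connection goes back to the pool even when the request fails, which is the leak that most often causes pool exhaustion.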

Robust cache invalidation is equally vital for data consistency. Whether to use the cache-aside, write-through, or write-back pattern depends on the application's needs and its tolerance for stale data. In cache-aside (lazy loading), the application reads from the cache first, loads from the database on a miss, and populates the cache, typically with a TTL; on writes it updates the database and invalidates the cached entry. In write-through, every write updates both the database and the cache, which keeps frequently updated data fresh in the cache, although a failure between the two writes can still leave them out of sync. Write-back (write-behind) acknowledges writes once they reach the cache and persists them to the database later, which can help write-heavy workloads but risks losing data that has not yet been flushed. Selecting a strategy means weighing consistency against performance, and the choice directly affects the performance and reliability of applications that rely on Redis.
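The cache-aside pattern described above fits in a few lines. In this sketch, plain dictionaries are hypothetical stand-ins for Redis and for the database, and comments note which Redis command each step corresponds to (`GET`, `SET ... EX`, `DEL`). Delete-on-write is one common variant: a concurrent reader can still repopulate a stale value between the database update and the delete, so the TTL bounds how long such staleness can last.

```python
import time
from typing import Any, Callable, Dict, Hashable, Optional, Tuple


class CacheAsideStore:
    """Cache-aside (lazy loading) reads with delete-on-write invalidation."""

    def __init__(
        self,
        cache: Dict[Hashable, Tuple[float, Any]],
        db: Dict[Hashable, Any],
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._cache = cache  # stand-in for Redis: key -> (expires_at, value)
        self._db = db        # stand-in for the system of record
        self._ttl = ttl_seconds
        self._clock = clock
        self.db_reads = 0

    def read(self, key: Hashable) -> Optional[Any]:
        now = self._clock()
        entry = self._cache.get(key)             # like GET key
        if entry is not None and entry[0] > now:
            return entry[1]                      # cache hit
        value = self._db.get(key)                # miss or expired: load from the database
        self.db_reads += 1
        if value is not None:
            self._cache[key] = (now + self._ttl, value)  # like SET key value EX ttl
        return value

    def write(self, key: Hashable, value: Any) -> None:
        self._db[key] = value                    # update the system of record first
        self._cache.pop(key, None)               # then invalidate, like DEL key
```

Invalidating rather than overwriting the cached value on writes means the next read loads whatever the database currently holds, so the cache never has to reproduce the database's write logic.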

Choosing appropriate Redis data structures is also fundamental to performance. Understanding the strengths of lists, sets, hashes, and sorted sets lets developers pick the most efficient structure for each use case. For example, sorted sets keep members ordered by score, which makes them a natural fit for leaderboards and ranking systems, while hashes are well suited to storing objects as field-value pairs. The right data structure directly affects query speed and memory usage, so base the choice on the application's data model and access patterns. Combined with sound cache invalidation and connection pooling, well-chosen data structures yield a fast, scalable application built on managed Redis.
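To illustrate why sorted sets suit leaderboards, the sketch below is an in-memory stand-in that mimics the semantics of `ZINCRBY`, `ZREVRANGE ... WITHSCORES`, and `ZREVRANK`, including Redis's ordering of tied scores (reverse lexicographic by member in the reverse-range commands). It is not a Redis client: the class and method names are hypothetical, and it re-sorts on every query, whereas Redis keeps members ordered in a skip list so updates and rank lookups stay fast. The docstring names the redis-py calls you would use against a real cluster.

```python
from typing import Dict, List, Optional, Tuple


class Leaderboard:
    """In-memory stand-in for a Redis sorted set used as a leaderboard.

    add_points mirrors ZINCRBY, top mirrors ZREVRANGE ... WITHSCORES, and
    rank mirrors ZREVRANK. With redis-py the equivalents would be
    r.zincrby(key, points, member), r.zrevrange(key, 0, n - 1, withscores=True),
    and r.zrevrank(key, member).
    """

    def __init__(self) -> None:
        self._scores: Dict[str, float] = {}

    def add_points(self, member: str, points: float) -> float:
        self._scores[member] = self._scores.get(member, 0.0) + points
        return self._scores[member]

    def _ranked(self) -> List[Tuple[str, float]]:
        # Highest score first; equal scores in reverse lexicographic order
        # of member, matching ZREVRANGE.
        return sorted(self._scores.items(), key=lambda kv: (kv[1], kv[0]), reverse=True)

    def top(self, n: int) -> List[Tuple[str, float]]:
        return self._ranked()[:n]

    def rank(self, member: str) -> Optional[int]:
        """0-based position from the top, or None if the member is absent."""
        for position, (name, _) in enumerate(self._ranked()):
            if name == member:
                return position
        return None


if __name__ == "__main__":
    board = Leaderboard()
    board.add_points("ann", 10)
    board.add_points("bob", 25)
    board.add_points("ann", 20)
    print(board.top(2))
```

Because scores are updated incrementally, the application never has to read, modify, and rewrite a whole ranking, which is the main advantage over storing the leaderboard as a serialized list.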