Understanding Kubernetes: Container Orchestration and Serverless Deployment

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications across a cluster of machines. Containers offer improved resource utilization, faster deployment times, and better portability; Kubernetes addresses the challenge of running them at scale while keeping applications available and performant. This orchestration is particularly valuable in cloud environments, where resources can be allocated and scaled dynamically. The connection to serverless deployment lies in Kubernetes’ ability to scale applications automatically based on demand, which mirrors a core principle of serverless architecture and lets developers focus on application logic rather than infrastructure management. The growing use of Kubernetes on AWS reflects its importance in modern cloud deployments.

The benefits of using Kubernetes extend beyond simple container management. It provides features like automated rollouts and rollbacks, health checks, and self-healing capabilities. These features greatly improve application reliability and reduce operational overhead. Moreover, Kubernetes fosters a consistent deployment environment across various cloud providers and on-premises infrastructure. This portability is a significant benefit, allowing for easy migration and improved flexibility. For developers, Kubernetes simplifies the process of managing complex applications. It allows them to focus on building and deploying their applications without worrying about the underlying infrastructure. This improved developer experience is a key driver for Kubernetes’ adoption.

In the context of serverless deployment, Kubernetes offers more granular control over resource allocation and scaling than typical serverless platforms. This finer-grained control is valuable for applications with complex requirements or precise resource needs. Kubernetes automates many tasks previously handled manually and suits workloads ranging from microservices to large-scale data processing. Integration with other cloud services extends its capabilities further, making it a versatile platform for serverless and traditional applications alike. The increasing prevalence of Kubernetes deployments on AWS illustrates its practical relevance.

AWS’s Container Orchestration Offerings: ECS and EKS

Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS) are AWS’s primary services for deploying and managing containerized applications. ECS is a managed container orchestration service built on AWS’s own orchestrator rather than on Kubernetes, so it simplifies container deployment without requiring Kubernetes expertise. It abstracts away much of the underlying infrastructure management, making it a compelling choice for developers who want a streamlined way to run containerized workloads on AWS. This simplicity translates to faster deployment cycles and reduced operational overhead, which particularly benefits simpler applications or teams less familiar with Kubernetes.

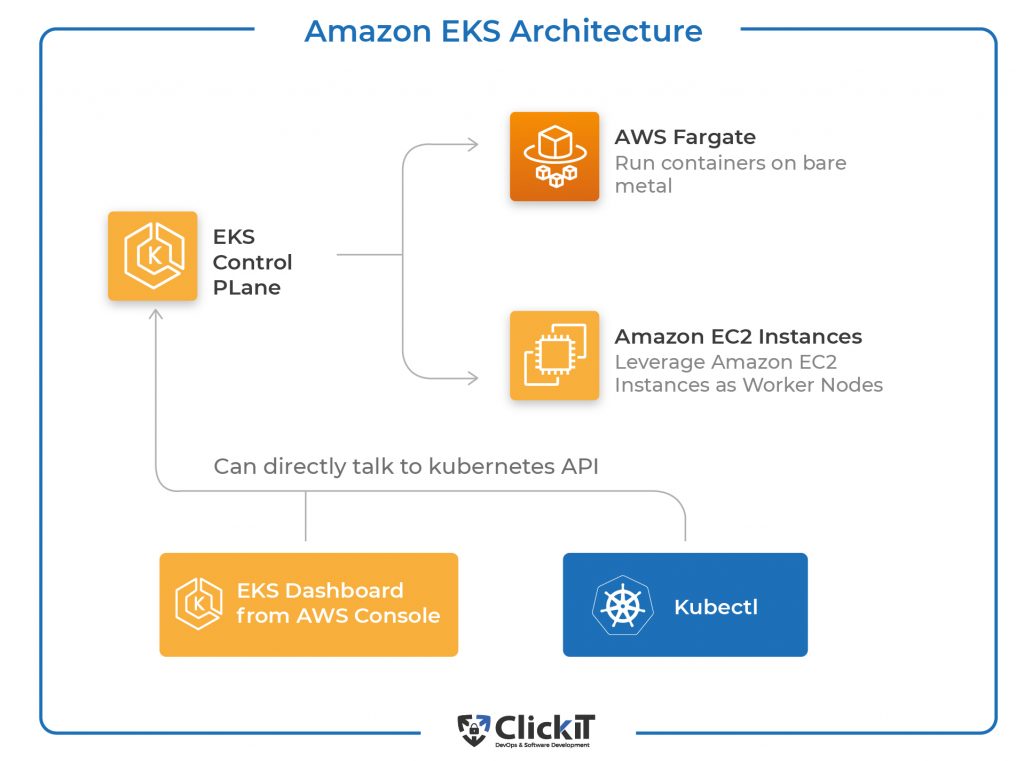

Conversely, Amazon Elastic Kubernetes Service (EKS) delivers a fully managed Kubernetes control plane. EKS provides a near-native Kubernetes experience, so users can draw on the full Kubernetes ecosystem. This approach suits organizations with existing Kubernetes expertise or those requiring granular control over cluster configuration. EKS is the stronger option for complex applications that need features such as custom resource definitions or specific Kubernetes integrations. Because familiar Kubernetes tools and practices carry over, experienced Kubernetes users face a shorter learning curve on AWS, which makes EKS a preferred choice for organizations prioritizing familiarity and control.

The key difference lies in the level of abstraction and control. ECS manages much of the infrastructure for you, simplifying operations but limiting customization. EKS is more hands-on, offering greater control but requiring more operational management. For smaller projects or teams new to container orchestration, ECS’s ease of use is a significant advantage. For large, complex applications or those that need tight control over the Kubernetes environment, EKS offers the necessary power and flexibility. Both are robust managed services for running containerized workloads on AWS; the right choice depends on your application’s complexity, your team’s Kubernetes expertise, and the level of operational control you want.

Choosing Between ECS and EKS for Your AWS Container Workloads

Selecting between Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS) for your container workloads on AWS hinges on several key factors. Application complexity plays a crucial role. For simpler applications with fewer microservices, ECS’s managed nature offers a streamlined deployment process, and its ease of use and reduced operational overhead suit teams prioritizing speed and simplicity. Conversely, complex applications benefit from EKS’s native Kubernetes experience, whose control and scalability help in managing sophisticated microservice architectures.

Your existing infrastructure also influences the decision. If your team already has significant Kubernetes expertise and tooling, EKS lets you reuse that knowledge, and familiarity with Kubernetes tools and processes minimizes the learning curve. If your team is less familiar with Kubernetes, ECS might prove a better starting point. Its simplified interface makes the initial deployment significantly easier and lets teams gain container experience before taking on Kubernetes itself.

Finally, consider the level of control you need over your cluster. EKS provides a near-native Kubernetes experience with granular control over most aspects of the cluster, which benefits organizations that need fine-grained customization and advanced configuration options. That control comes with increased operational responsibility. ECS, being more managed, reduces the operational burden but sacrifices some control over underlying infrastructure elements. Ultimately, the best choice balances application needs, team expertise, and the desired level of control.

Deploying Kubernetes Applications on AWS (A Practical Example): Using EKS

This section demonstrates deploying a simple application on Amazon Elastic Kubernetes Service (EKS), using a sample Node.js application for clarity. First, create an EKS cluster using the AWS Management Console or the AWS CLI. Specify the desired cluster size and configuration, and choose node instance types that match your application’s resource needs, since these choices determine how efficiently the cluster uses its resources. Configure appropriate VPC settings and security groups to secure your cluster and control network access.
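As a rough sketch of the CLI route, the snippet below only assembles an `aws eks create-cluster` invocation and prints it; it does not call AWS. The cluster name, role ARN, subnet IDs, and security group ID are hypothetical placeholders. Note that this command creates the control plane only, so worker nodes would still need to be added separately (for example with a node group).

```python
import shlex

def create_cluster_command(name, role_arn, subnet_ids, security_group_ids):
    """Build the argv for `aws eks create-cluster` without executing it."""
    vpc_config = "subnetIds={},securityGroupIds={}".format(
        ",".join(subnet_ids), ",".join(security_group_ids)
    )
    return [
        "aws", "eks", "create-cluster",
        "--name", name,
        "--role-arn", role_arn,
        "--resources-vpc-config", vpc_config,
    ]

# All identifiers below are made-up placeholders, not real resources.
cmd = create_cluster_command(
    name="demo-cluster",
    role_arn="arn:aws:iam::123456789012:role/demo-eks-cluster-role",
    subnet_ids=["subnet-aaa", "subnet-bbb"],
    security_group_ids=["sg-ccc"],
)
print(shlex.join(cmd))
```

Printing the command before running it makes it easy to review the VPC settings the paragraph above calls out.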

Next, prepare your application for deployment. This typically involves containerizing it with Docker. Create a Dockerfile that defines the application’s runtime environment and dependencies, build the Docker image, and push it to a container registry such as Amazon Elastic Container Registry (ECR), which provides secure storage and management for your images. Once the image is in ECR, you can deploy it to your EKS cluster using tools like kubectl. You’ll need to define Kubernetes manifests (YAML files) that describe your application’s deployment, services, and other configuration. These manifests define how your containers will run within the cluster.
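To make the build-and-push step concrete, here is a small sketch that derives the ECR image URI and the matching Docker commands. The account ID, region, repository name, and tag are hypothetical; the commands are returned as lists rather than executed.

```python
def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Return the image URI in the form ECR private registries use."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"

def build_and_push_commands(image_uri, context_dir="."):
    """Docker commands to build an image and push it to the registry."""
    return [
        ["docker", "build", "-t", image_uri, context_dir],
        ["docker", "push", image_uri],
    ]

# Placeholder values for illustration only.
image = ecr_image_uri("123456789012", "us-east-1", "sample-node-app", "v1")
for command in build_and_push_commands(image):
    print(" ".join(command))
```

Docker must already be authenticated against the registry before the push will succeed.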

Finally, deploy your application using kubectl. The `kubectl apply -f` command applies the Kubernetes manifest files to your EKS cluster, creating the deployments, services, and other resources needed to run your application. Monitor the rollout and the application’s health with kubectl or with cloud-based monitoring tools integrated with your cluster, so that issues are detected and resolved promptly. You can scale the application horizontally by adjusting the replica count in your deployment manifest, which lets it absorb increased traffic. These are the core steps involved in deploying an application on AWS EKS.
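The manifests described above might look like the following sketch. It builds a Deployment and a Service as Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts alongside YAML. The application name, image URI, and port 3000 are assumptions chosen for a hypothetical Node.js app.

```python
import json

def deployment(name, image, replicas=2, port=3000):
    """A minimal apps/v1 Deployment running `replicas` copies of one container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [
                    {"name": name, "image": image,
                     "ports": [{"containerPort": port}]}
                ]},
            },
        },
    }

def service(name, port=80, target_port=3000):
    """A ClusterIP Service that routes to pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"selector": {"app": name},
                 "ports": [{"port": port, "targetPort": target_port}]},
    }

# Hypothetical image URI; substitute the one pushed to your registry.
image = "123456789012.dkr.ecr.us-east-1.amazonaws.com/sample-node-app:v1"
bundle = {"apiVersion": "v1", "kind": "List",
          "items": [deployment("sample-node-app", image),
                    service("sample-node-app")]}
print(json.dumps(bundle, indent=2))
```

Saving the output to a file such as `app.json` and running `kubectl apply -f app.json` would create both resources; changing `replicas` and re-applying scales the application horizontally.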

Managing Kubernetes Clusters on AWS: Best Practices

Effective management of Kubernetes clusters on AWS is crucial for maintaining high performance and reliability. Scaling, a key operational aspect, involves adjusting the cluster’s resources to meet fluctuating demand. Horizontal Pod Autoscaling (HPA) automatically scales the number of pods based on CPU utilization or other metrics, while Vertical Pod Autoscaling (VPA) adjusts the resources allocated to individual pods. Properly configured autoscaling improves both resource utilization and cost efficiency. Regular monitoring provides insight into cluster health, resource consumption, and application performance. Tools like Amazon CloudWatch and Prometheus offer comprehensive monitoring and can alert on anomalies, allowing for proactive issue resolution. Logging aggregates information from the various cluster components, which aids troubleshooting and debugging; an effective logging strategy speeds up problem identification and minimizes downtime.
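The scaling rule that HPA applies can be sketched in a few lines. The Kubernetes documentation gives the core formula as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with changes skipped when the ratio is within a tolerance (0.1 by default). The function below is a simplified illustration of that rule, not the controller’s full behavior, which also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     tolerance=0.1):
    """Simplified HPA rule: scale in proportion to metric / target."""
    ratio = current_metric / target_metric
    # Within tolerance of the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)

# 4 pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
```

The same function covers scale-down: ten pods averaging 30% against a 60% target would be halved.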

Security best practices are paramount for Kubernetes deployments on AWS. Network policies control communication between pods, limiting access based on namespaces and labels. Role-Based Access Control (RBAC) grants granular permissions to users and services, minimizing the attack surface. Regular security audits and vulnerability scans identify potential weaknesses, which must then be remediated. Encrypting data both in transit and at rest protects sensitive information. Using a secrets management service such as AWS Secrets Manager avoids hardcoding credentials in applications. Integrating security scanning into your CI/CD pipeline catches vulnerabilities early. Together, these measures form a solid security foundation for the cluster.
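On the application side, avoiding hardcoded credentials can be as simple as reading them from the environment at startup and failing fast when they are missing. This is a minimal sketch; the variable name `DB_PASSWORD` is hypothetical, and how the value gets injected (for example from a secrets manager) is outside its scope.

```python
import os

def require_secret(name):
    """Read a secret from the environment, refusing to start without it."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"required secret {name!r} is not set")
    return value

# Simulate the platform injecting the secret; never commit real values.
os.environ["DB_PASSWORD"] = "example-only"
password = require_secret("DB_PASSWORD")
print("secret loaded, length:", len(password))
```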

High availability and disaster recovery are essential considerations for production-ready Kubernetes deployments on AWS. Spreading the cluster across multiple Availability Zones (AZs), which are physically separate locations within a single AWS Region, protects against the failure of any one zone and improves application availability. Regular backups and a tested disaster recovery plan allow the cluster to be restored quickly after a failure. Efficient resource management controls cost while maintaining performance: regularly review resource usage, identify areas for optimization, and reduce waste. These practices, from scaling and security to high availability, keep operations smooth and minimize the risk of disruption.
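One way to keep replicas balanced across zones is a topology spread constraint keyed on the standard `topology.kubernetes.io/zone` node label. The helper below is a sketch that adds such a constraint to a pod spec dictionary; the `app` label convention and the `ScheduleAnyway` setting are assumptions, not requirements.

```python
def spread_across_zones(pod_spec, app_label, max_skew=1):
    """Return a copy of pod_spec that asks the scheduler to balance zones."""
    constraint = {
        "maxSkew": max_skew,
        "topologyKey": "topology.kubernetes.io/zone",
        # Prefer balance but still schedule if a zone is unavailable.
        "whenUnsatisfiable": "ScheduleAnyway",
        "labelSelector": {"matchLabels": {"app": app_label}},
    }
    updated = dict(pod_spec)
    existing = list(pod_spec.get("topologySpreadConstraints", []))
    updated["topologySpreadConstraints"] = existing + [constraint]
    return updated

# Hypothetical pod spec for illustration.
spec = {"containers": [{"name": "web", "image": "example/web:1"}]}
print(spread_across_zones(spec, "web"))
```

Switching `whenUnsatisfiable` to `DoNotSchedule` would make the balance a hard requirement instead of a preference.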

Securing Your AWS Kubernetes Deployments

Securing Kubernetes deployments on AWS requires a multi-layered approach. Network policies, implemented as Kubernetes NetworkPolicy objects, control inter-pod communication within the cluster and limit potential attack vectors by restricting access based on labels and namespaces. Properly configured network policies are crucial for isolating applications and preventing unauthorized access. AWS networking features such as Virtual Private Clouds (VPCs) and security groups integrate with EKS to add a further layer of network security, helping to create a secure perimeter around your cluster.
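A NetworkPolicy of the kind described above could be sketched as follows. It allows ingress to one application’s pods only from pods carrying another application’s label, on a single TCP port. The namespace, the `app` label convention, and the port are illustrative assumptions, and enforcement depends on the cluster’s network plugin supporting NetworkPolicy.

```python
import json

def allow_from_app(namespace, target_app, client_app, port):
    """Allow only pods labelled app=client_app to reach app=target_app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{client_app}-to-{target_app}",
                     "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }

policy = allow_from_app("shop", "orders-db", "orders-api", 5432)
print(json.dumps(policy, indent=2))
```

Because the policy selects the target pods for ingress, any traffic not matched by the `from` rule is dropped for those pods.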

Access control is paramount. Role-Based Access Control (RBAC) in Kubernetes allows granular control over cluster resources. Administrators define roles with specific permissions so that only authorized users and services can access sensitive information or perform critical operations. Integrating AWS Identity and Access Management (IAM) with EKS provides further control by mapping Kubernetes identities to existing IAM users and roles, which simplifies authentication and authorization. Strong secrets management is equally essential. Never hardcode sensitive information such as passwords or API keys in your application code. Instead, use a secrets management solution such as AWS Secrets Manager or HashiCorp Vault to store secrets securely and expose them to applications through environment variables or other secure mechanisms. Regular audits of access controls and security policies are also an important part of maintaining a secure setup.
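As an illustration of granular RBAC, the sketch below defines a namespaced Role that can only read pods and a RoleBinding that grants it to one user. The role name, namespace, and user name are hypothetical.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"

def pod_reader_role(namespace, name="pod-reader"):
    """A Role limited to read-only access on pods in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": [{"apiGroups": [""], "resources": ["pods"],
                   "verbs": ["get", "list", "watch"]}],
    }

def bind_role_to_user(namespace, role_name, user):
    """A RoleBinding granting the named Role to a single user."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": f"{role_name}-{user}"},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }

role = pod_reader_role("shop")
binding = bind_role_to_user("shop", "pod-reader", "jane")
print(json.dumps([role, binding], indent=2))
```

Keeping the verbs to `get`, `list`, and `watch` means the bound user can inspect pods but cannot modify or delete them.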

Data protection is another critical aspect of Kubernetes security on AWS. Encrypting data both in transit and at rest protects sensitive information from unauthorized access: use encryption at rest for persistent volumes and TLS for communication between pods. Regular security scans and vulnerability assessments help identify and remediate weaknesses, and keeping Kubernetes components and related AWS services patched mitigates known vulnerabilities. A security strategy that combines these measures protects your applications and data from threats.

Scaling and Optimizing AWS Kubernetes Workloads

Effective scaling is crucial for any production-ready Kubernetes deployment on AWS. Horizontal Pod Autoscaling (HPA) dynamically adjusts the number of pods based on CPU utilization or custom metrics so that the application handles fluctuating demand efficiently. Consider metrics such as request latency and error rates for more nuanced scaling; AWS monitoring tools can gather this data to inform scaling decisions. A properly configured HPA avoids wasted resources during low-traffic periods and performance bottlenecks during peak demand. For controlled releases, use the rolling updates that Kubernetes Deployments support natively, or canary deployments built on top of them. These techniques minimize disruption during updates and allow new versions to be tested under limited exposure.
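An HPA targeting average CPU utilization might be declared as in the sketch below, using the `autoscaling/v2` API. The Deployment name and the numeric bounds are placeholders. CPU-based scaling also assumes that a metrics source such as the Kubernetes Metrics Server is installed and that the target pods declare CPU requests.

```python
import json

def cpu_autoscaler(deployment_name, min_replicas, max_replicas, target_cpu_percent):
    """HorizontalPodAutoscaler that targets average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment_name},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                               "name": deployment_name},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {"name": "cpu",
                             "target": {"type": "Utilization",
                                        "averageUtilization": target_cpu_percent}},
            }],
        },
    }

hpa = cpu_autoscaler("sample-node-app", 2, 10, 60)
print(json.dumps(hpa, indent=2))
```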

Performance optimization for Kubernetes on AWS involves several strategies. Efficient resource allocation is key: use resource requests and limits to define what each container needs, which prevents resource contention and makes performance more predictable. Keep container images small to reduce deployment times. Choose instance types that match your workloads, considering CPU, memory, and network performance. Because resource utilization affects both performance and cost, analyze usage regularly to find areas for improvement. AWS provides tools that visualize resource consumption and support data-driven optimization.
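Resource requests also make capacity planning a matter of arithmetic. The sketch below parses a subset of Kubernetes quantity notation (CPU as cores or millicores, memory in `Mi` or `Gi`) and estimates how many pods fit on a node by requests alone. The node size is hypothetical, and real allocatable capacity is lower once system reservations and per-node pod limits are taken into account.

```python
def cpu_millicores(quantity):
    """Parse '250m' or '0.5' or '2' into integer millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)

def memory_mib(quantity):
    """Parse '512Mi' or '2Gi' into MiB (only these two suffixes)."""
    if quantity.endswith("Gi"):
        return int(quantity[:-2]) * 1024
    if quantity.endswith("Mi"):
        return int(quantity[:-2])
    raise ValueError(f"unsupported memory quantity: {quantity}")

def pods_per_node(node_cpu, node_memory, request_cpu, request_memory):
    """Upper bound on pods per node, limited by the scarcer resource."""
    by_cpu = cpu_millicores(node_cpu) // cpu_millicores(request_cpu)
    by_memory = memory_mib(node_memory) // memory_mib(request_memory)
    return min(by_cpu, by_memory)

# Hypothetical node with 2 vCPU and 8 GiB; pods request 250m CPU and 512Mi.
print(pods_per_node("2", "8Gi", "250m", "512Mi"))
```

Here CPU is the binding constraint, which suggests either a more CPU-heavy instance type or smaller CPU requests if memory is left idle.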

Beyond resource management, network optimization plays a significant role. Ensure pods can communicate efficiently within the cluster, and use AWS Elastic Load Balancing (ELB) to distribute traffic across multiple pods. Configure network policies to control traffic flow. Consider AWS PrivateLink for private access to other AWS services without traversing the public internet. Monitor network performance regularly to identify bottlenecks, since addressing latency and other network issues improves both application performance and the user experience.

Beyond the Basics: Advanced Features and Use Cases of AWS EKS

AWS EKS offers a range of advanced features that extend what Kubernetes deployments on AWS can do. One notable feature is AWS Fargate for EKS, a serverless compute engine for running Kubernetes pods. It removes the need to provision and manage EC2 instances, which simplifies operations and reduces overhead. Developers can focus on application code rather than infrastructure, which suits microservices architectures and applications that need rapid scaling. Integration with other AWS services, such as IAM for access control and CloudWatch for monitoring, further strengthens the management and security of EKS clusters.
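As background not covered above, EKS decides which pods run on Fargate through Fargate profiles, whose selectors name a namespace and, optionally, labels a pod must carry. The sketch below models that matching with exact comparisons only; the profile contents are hypothetical, and it is an illustration of the idea rather than the service’s actual implementation.

```python
def runs_on_fargate(pod_namespace, pod_labels, selectors):
    """True if any selector matches the pod's namespace and all its labels."""
    for selector in selectors:
        if selector["namespace"] != pod_namespace:
            continue
        wanted = selector.get("labels", {})
        if all(pod_labels.get(key) == value for key, value in wanted.items()):
            return True
    return False

# Hypothetical profile: everything in 'batch', plus labelled pods in 'web'.
selectors = [
    {"namespace": "batch"},
    {"namespace": "web", "labels": {"compute": "fargate"}},
]
print(runs_on_fargate("web", {"app": "api", "compute": "fargate"}, selectors))
print(runs_on_fargate("web", {"app": "api"}, selectors))
```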

Another key aspect of advanced Kubernetes deployments on AWS is networking. EKS supports options including VPC networking and AWS PrivateLink, enabling secure communication between services and stronger isolation. Network policies and service meshes give finer control over traffic flow within the cluster, improving both security and application performance. Combining these with Kubernetes Ingress controllers and Application Load Balancers helps deliver highly available, scalable applications. Careful planning and implementation are key to realizing these benefits.
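An Ingress fronted by an Application Load Balancer could be sketched as below. This assumes the AWS Load Balancer Controller is installed in the cluster, since it is what acts on the `alb` ingress class and the `alb.ingress.kubernetes.io/*` annotations; the resource and service names are placeholders.

```python
import json

def alb_ingress(name, service_name, service_port, path="/"):
    """Ingress routing a path prefix to a Service through an ALB."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                "alb.ingress.kubernetes.io/target-type": "ip",
            },
        },
        "spec": {
            "ingressClassName": "alb",
            "rules": [{"http": {"paths": [{
                "path": path,
                "pathType": "Prefix",
                "backend": {"service": {"name": service_name,
                                        "port": {"number": service_port}}},
            }]}}],
        },
    }

ingress = alb_ingress("sample-node-app", "sample-node-app", 80)
print(json.dumps(ingress, indent=2))
```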

Kubernetes on AWS also supports use cases well beyond simple deployments. For machine learning, EKS provides a scalable platform for running computationally intensive workloads. Real-time data processing pipelines built with tools like Apache Kafka and Spark can likewise run within an EKS cluster. These use cases demonstrate the platform’s versatility across diverse domains, and its flexibility and scalability help applications handle fluctuating demand while remaining performant and available. Integration with other AWS services simplifies the management and optimization of such workloads, and exploring these capabilities unlocks greater operational efficiency.