The Power of Cloud-Based DevOps

DevOps represents a significant shift in how software is developed and deployed. It emphasizes collaboration between development and operations teams, with the goal of accelerating software delivery through greater agility and faster feedback loops. Traditional DevOps models often rely on on-premises infrastructure, which can limit scalability and complicate resource management. Cloud platforms like AWS remove many of those limits: because resources can be provisioned and scaled on demand, teams can build, test, and deploy applications more efficiently. AWS adds a range of services and tools designed to automate DevOps tasks such as building, testing, and deploying applications. Together, these can substantially reduce the time and effort needed for deployments and let teams adjust quickly to changing demand.

The key benefits of using AWS for DevOps start with faster deployment cycles. Teams can automate their deployment pipelines, which reduces manual errors and speeds up releases. Scalability is another crucial benefit: cloud resources can scale up or down in response to traffic or usage fluctuations, which helps applications remain responsive and available. Cloud platforms also provide security features, including encryption and access management, that help protect infrastructure and applications. Cloud-based DevOps environments foster innovation as well, since teams can experiment with new technologies and ideas without the constraints of fixed, traditional infrastructure. Ultimately, combining DevOps principles with AWS capabilities can lead to significant improvements in software delivery and operational efficiency. Moving from traditional to cloud DevOps is largely a move toward flexibility and speed.

Comparing traditional and cloud DevOps further highlights the advantages of the latter. Traditional models involve lengthy hardware procurement and often struggle to scale, both of which cause delays and inefficiencies. Cloud-based DevOps, on the other hand, gives teams near-immediate access to resources. They can provision the infrastructure they need in minutes rather than weeks, which shortens development cycles. Infrastructure as code adds consistency and repeatability, which simplifies management and reduces errors. Cloud platforms also provide monitoring and logging tools that let teams track application performance and identify issues, which improves reliability and resilience. Using AWS for DevOps therefore offers a path to more efficient and agile development workflows.

How to Implement a Robust CI/CD Pipeline on AWS

Creating a Continuous Integration/Continuous Deployment (CI/CD) pipeline on AWS is fundamental for modern software development. The pipeline automates the building, testing, and deployment of applications, which makes releases faster and more reliable. AWS provides a suite of services for this. CodeCommit serves as a secure, scalable source code repository where your code resides. CodeBuild compiles the source code, runs unit tests, and prepares the application for deployment. CodeDeploy automates the deployment of the application to targets such as EC2 instances, Lambda functions, or even on-premises servers. Finally, CodePipeline orchestrates the entire process: it defines the steps of your CI/CD workflow and integrates both AWS services and third-party tools. Setting up a pipeline involves defining a source stage, where code changes are detected; a build stage, which uses CodeBuild to compile the application; and a deploy stage, which uses CodeDeploy to release it. Together, these stages give you automated software releases and an effective foundation for DevOps on AWS.

Implementing a CI/CD pipeline on AWS can greatly shorten development cycles. Begin by creating a repository in CodeCommit for your source code. Configure a build project in CodeBuild, specifying the build environment and build instructions. For deployment, set up a deployment group in CodeDeploy, which defines the target environment for the release. Then create a pipeline in CodePipeline that connects CodeCommit to CodeBuild and CodeBuild to CodeDeploy. You can add test stages before the deploy stage so that changes are verified before they are released. With this setup the pipeline starts automatically: every push to the repository triggers a new build and deploy cycle. The pipeline can be adapted to different release strategies. Blue/green deployments and canary releases, for example, limit the impact of a bad release and so improve resilience. AWS services also integrate with a variety of other DevOps tools, which can further enhance your workflow.
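The three-stage layout described above can be sketched as a plain data structure. The following is a minimal sketch, not a definitive implementation: it only builds the pipeline definition as a Python dictionary in the shape CodePipeline expects and makes no AWS calls. The pipeline name, role ARN, bucket, repository, project, and deployment group names are all hypothetical placeholders.

```python
import json


def action(name, category, provider, configuration, inputs=(), outputs=()):
    """Build one action entry in the shape used by a CodePipeline definition."""
    return {
        "name": name,
        "actionTypeId": {
            "category": category,
            "owner": "AWS",
            "provider": provider,
            "version": "1",
        },
        "configuration": configuration,
        "inputArtifacts": [{"name": n} for n in inputs],
        "outputArtifacts": [{"name": n} for n in outputs],
    }


def build_pipeline(name, role_arn, artifact_bucket, repo, branch,
                   build_project, deploy_app, deploy_group):
    """Assemble a Source -> Build -> Deploy pipeline definition."""
    return {
        "name": name,
        "roleArn": role_arn,
        "artifactStore": {"type": "S3", "location": artifact_bucket},
        "stages": [
            {"name": "Source", "actions": [action(
                "Source", "Source", "CodeCommit",
                {"RepositoryName": repo, "BranchName": branch},
                outputs=["SourceOutput"])]},
            {"name": "Build", "actions": [action(
                "Build", "Build", "CodeBuild",
                {"ProjectName": build_project},
                inputs=["SourceOutput"], outputs=["BuildOutput"])]},
            {"name": "Deploy", "actions": [action(
                "Deploy", "Deploy", "CodeDeploy",
                {"ApplicationName": deploy_app,
                 "DeploymentGroupName": deploy_group},
                inputs=["BuildOutput"])]},
        ],
    }


# All values below are hypothetical placeholders.
pipeline = build_pipeline(
    name="demo-pipeline",
    role_arn="arn:aws:iam::123456789012:role/demo-pipeline-role",
    artifact_bucket="demo-pipeline-artifacts",
    repo="demo-repo",
    branch="main",
    build_project="demo-build",
    deploy_app="demo-app",
    deploy_group="demo-deployment-group",
)
print(json.dumps(pipeline, indent=2))
```

With boto3 installed and credentials configured, a dictionary like this could be passed as the `pipeline` argument of the CodePipeline client's `create_pipeline` call. Each stage consumes the artifact the previous one produced, which is what links CodeCommit to CodeBuild and CodeBuild to CodeDeploy.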

To get started, you can configure these services with either the AWS Management Console or the AWS CLI. Make sure the IAM roles involved have the permissions they require, and no more, so that operations run securely. Monitoring is a must, because it lets you track each pipeline execution. Services like CloudWatch are useful for monitoring build and deploy processes and help you identify issues quickly. Regular testing should also be part of the CI/CD workflow: run unit tests, and add integration and system tests as well. Together, these practices produce a robust pipeline that supports fast-paced delivery of reliable software. Using AWS for DevOps reduces manual effort and improves the reliability of releases. AWS provides the tools needed to build effective automated workflows, and the result is a more efficient and agile development process.

Infrastructure as Code: Managing AWS Resources with Automation

Infrastructure as Code (IaC) is a foundational practice for effective DevOps, particularly on AWS. It involves managing and provisioning infrastructure through machine-readable definition files rather than manual configuration. This approach treats infrastructure like software, enabling version control, repeatability, and faster deployments. With IaC, teams can keep environments such as development, testing, and production consistent. The benefits include fewer human errors, quicker provisioning, and simpler change management. IaC also improves collaboration, since infrastructure changes can be reviewed and approved like code changes. It provides a standardized way to build, modify, and delete infrastructure, which is essential for scaling operations efficiently and reliably. With IaC, teams can focus more on delivering valuable code and less on manual resource management.

Two widely used tools for IaC on AWS are CloudFormation and Terraform. CloudFormation is a native AWS service that lets you model and set up your AWS resources using JSON or YAML templates. These templates describe the desired state of your infrastructure, and CloudFormation then handles provisioning and managing the resources, including compute instances, storage, and network configurations. Terraform, on the other hand, is a third-party tool from HashiCorp that supports multiple cloud providers, not just AWS. It uses its own configuration language (HCL) to define infrastructure as code. Both tools allow consistent provisioning and modification of resources across environments. For example, a simple CloudFormation template might define an EC2 instance with specific configurations. That setup can then be replicated in other AWS regions by applying the same template, provided region-specific values such as AMI IDs are parameterized. This is a powerful feature for keeping AWS DevOps workflows consistent.

Let’s illustrate the concept with a basic CloudFormation example snippet. In a JSON template, you could have a section that defines an S3 bucket: "Resources": {"MyS3Bucket": {"Type": "AWS::S3::Bucket", "Properties": {"BucketName": "my-unique-bucket-name"}}}. This configuration defines a bucket named "my-unique-bucket-name"; because S3 bucket names are globally unique, you would substitute a name of your own. When the template is deployed through CloudFormation, the S3 bucket is provisioned automatically as described. The same logic applies to other AWS resources. IaC significantly reduces the chance of configuration drift because resources are deployed from the configuration files rather than configured by hand. It is a crucial part of DevOps on AWS, helping to establish a repeatable, automated, and consistent deployment process.
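For readers who generate templates programmatically, the same bucket definition can be produced from a few lines of Python. This is a minimal sketch that only builds and prints the JSON template; the function name `s3_bucket_template` is a hypothetical helper, and the bucket name is the placeholder from the text above.

```python
import json


def s3_bucket_template(logical_id, bucket_name=None):
    """Return a CloudFormation template (as a dict) that defines one S3 bucket.

    If bucket_name is omitted, CloudFormation generates a unique name itself.
    """
    resource = {"Type": "AWS::S3::Bucket"}
    if bucket_name is not None:
        resource["Properties"] = {"BucketName": bucket_name}
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Single S3 bucket (illustrative example)",
        "Resources": {logical_id: resource},
    }


template = s3_bucket_template("MyS3Bucket", "my-unique-bucket-name")
template_body = json.dumps(template, indent=2)
print(template_body)
```

The resulting string is a complete template body. It could be saved to a file and deployed from the console or CLI, or passed as `TemplateBody` to the CloudFormation client's `create_stack` call in boto3. Keeping the generator under version control gives the same review and rollback benefits as a hand-written template.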

Monitoring and Logging in AWS: Ensuring Optimal Performance and Reliability

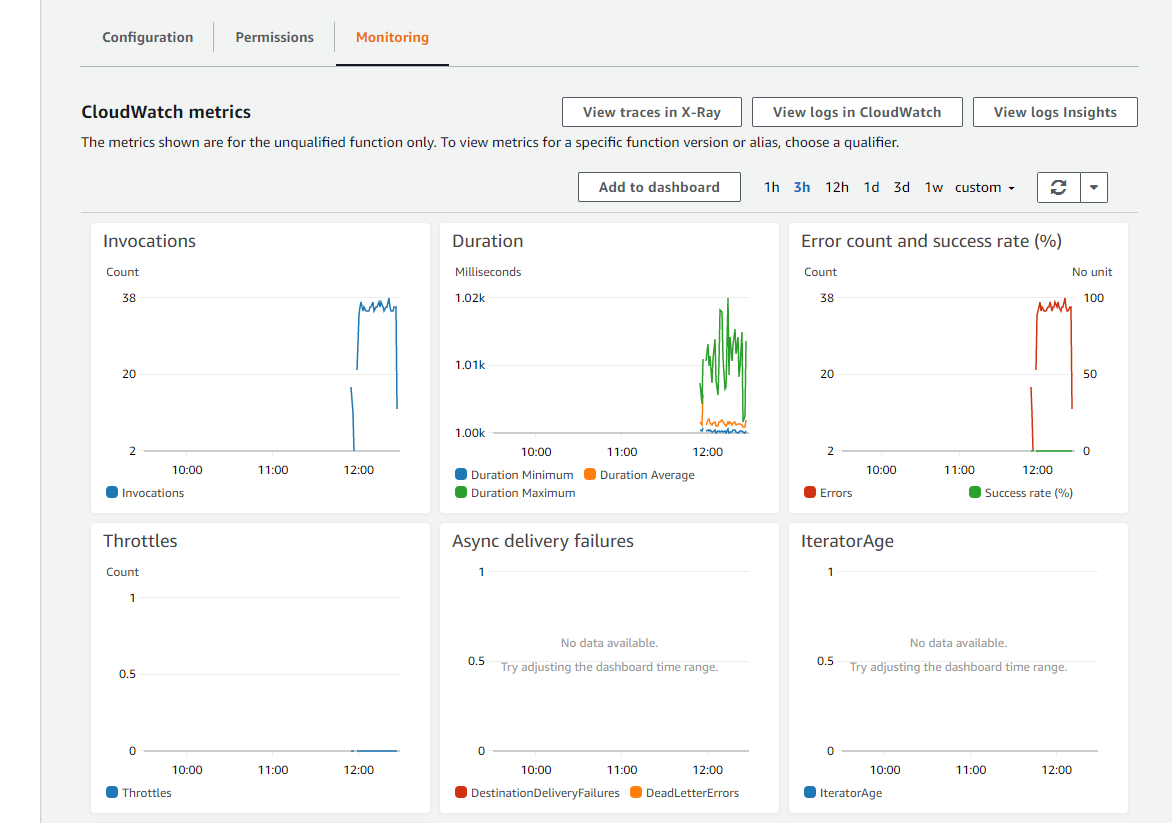

In a robust DevOps environment, careful monitoring and logging are paramount. They are crucial for maintaining application health and addressing issues quickly. Efficient monitoring provides real-time insight, which lets teams identify bottlenecks and anomalies early. AWS offers a suite of tools designed for this purpose, in which CloudWatch, CloudTrail, and X-Ray play the central roles. CloudWatch collects metrics and logs, providing a unified view of application and infrastructure performance. CloudTrail tracks user activity and API usage, which supports security auditing. X-Ray helps analyze and debug distributed applications. These services form the backbone of effective monitoring on AWS. Setting up basic monitoring dashboards and alerts is straightforward, and doing so helps teams learn about issues before they affect users. This level of vigilance is key to a successful AWS DevOps strategy.

Implementing effective monitoring requires a strategic approach. Start by defining the key metrics relevant to your application, such as CPU utilization, memory usage, and request latency. CloudWatch lets you create custom dashboards that represent these metrics visually. Setting up alerts is equally important. Alerts should fire when a metric crosses a predefined threshold, so that potential problems are addressed proactively. CloudTrail’s activity tracking helps secure the environment by documenting actions for compliance and security analysis. X-Ray offers valuable insight into the performance of microservices-based applications, letting teams visualize the flow of requests and identify points of failure. Used well, these AWS tools can significantly improve application reliability and user experience.
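A threshold-based alert of the kind described above can be sketched as follows. This is an illustrative example only: `cpu_alarm_settings` builds parameters using the names the CloudWatch `PutMetricAlarm` API uses, while `would_alarm` is a deliberately simplified local stand-in for how an alarm evaluates recent datapoints, not CloudWatch's actual evaluation logic. The instance ID and the 80% threshold are assumptions.

```python
def cpu_alarm_settings(instance_id, threshold=80.0, periods=2, topic_arn=None):
    """Build alarm parameters for average EC2 CPU utilization."""
    settings = {
        "AlarmName": f"high-cpu-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,                 # seconds per datapoint
        "EvaluationPeriods": periods,  # consecutive datapoints to evaluate
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
    }
    if topic_arn:
        # Optional notification target, for example an SNS topic ARN.
        settings["AlarmActions"] = [topic_arn]
    return settings


def would_alarm(datapoints, settings):
    """Simplified check: True when the most recent N datapoints all breach."""
    n = settings["EvaluationPeriods"]
    recent = datapoints[-n:]
    return len(recent) == n and all(v > settings["Threshold"] for v in recent)


settings = cpu_alarm_settings("i-0123456789abcdef0")  # hypothetical instance ID
print(would_alarm([40.0, 85.0, 91.0], settings))  # last two datapoints breach
print(would_alarm([85.0, 91.0, 40.0], settings))  # most recent one recovered
```

With boto3, a settings dictionary like this could be passed as keyword arguments to the CloudWatch client's `put_metric_alarm`. Requiring more than one evaluation period is a common way to avoid alerting on short spikes.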

Logging is another crucial aspect of this process. Comprehensive logs support detailed analysis and are essential for troubleshooting and identifying root causes. Centralizing logs from different AWS resources simplifies that work, and CloudWatch Logs provides log aggregation and analysis for this purpose. A centralized view is especially valuable in complex deployments. Combined with monitoring data, logs give a holistic view of system health and let teams react swiftly to incidents. Implementing these practices improves the stability and performance of applications on AWS and contributes to a more robust and reliable DevOps environment.
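Centralized logs are easier to search when each entry is structured. The sketch below uses only Python's standard `logging` module to emit one JSON object per log line. This is one possible convention rather than anything required by CloudWatch Logs, and the `context` key, logger name, and field names are hypothetical.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object."""

    def format(self, record):
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via extra={"context": {...}} are merged into the entry.
        entry.update(getattr(record, "context", {}))
        return json.dumps(entry)


def make_logger(name, stream):
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


# An in-memory buffer stands in for stdout here so the output can be inspected.
buffer = io.StringIO()
log = make_logger("orders", buffer)
log.info("deployment finished",
         extra={"context": {"release": "2024.1", "stage": "deploy"}})
print(buffer.getvalue().strip())
```

In a real service the stream would typically be standard output or a log agent's input, from which the entries are shipped to the central log store. Consistent field names across services make it practical to filter by release or stage when tracing an incident.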

Containerization with AWS: Leveraging Docker and ECS/EKS for Scalable Deployments

Containerization has become a cornerstone of modern software development, and on AWS it is significantly changing how applications are built and deployed. Docker, a leading containerization platform, packages applications and their dependencies into standardized units, so an application behaves consistently across environments. AWS offers two primary services for managing containers: Elastic Container Service (ECS) and Elastic Kubernetes Service (EKS). ECS is a managed container orchestration service that streamlines the deployment and scaling of containerized applications. It integrates closely with other AWS services and is a good option for teams that want simplicity and lower operational overhead. EKS, on the other hand, is a managed Kubernetes service that offers greater flexibility and control over container deployments. It suits organizations that need advanced orchestration capabilities and want to draw on the broader Kubernetes ecosystem. Both services support highly scalable and fault-tolerant applications. Adopting containers with Docker, ECS, and EKS can accelerate the software development lifecycle and simplify application management.
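To make the ECS side more concrete, the following minimal sketch builds the two definitions an ECS deployment typically needs: a task definition that says which container image to run, and a service that says how many copies to keep running. It only constructs dictionaries using the parameter names of the ECS `RegisterTaskDefinition` and `CreateService` APIs. The family, image, cluster, and service names are hypothetical, and a real setup would usually add IAM roles and logging configuration.

```python
def task_definition(family, image, container_port, memory_mib=512):
    """Describe a single-container ECS task."""
    return {
        "family": family,
        "containerDefinitions": [{
            "name": family,
            "image": image,
            "memory": memory_mib,  # hard memory limit in MiB
            "essential": True,     # the task stops if this container stops
            "portMappings": [
                {"containerPort": container_port, "protocol": "tcp"},
            ],
        }],
    }


def service_settings(cluster, service_name, family, desired_count):
    """Describe a service that keeps desired_count copies of the task running."""
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": family,
        "desiredCount": desired_count,
    }


# Hypothetical names; the image is a public Docker Hub image used as an example.
web_task = task_definition("web", "nginx:stable", container_port=80)
web_service = service_settings("demo-cluster", "web-service", "web", desired_count=2)
print(web_task["containerDefinitions"][0]["image"], web_service["desiredCount"])
```

With boto3, these dictionaries could be passed as keyword arguments to the ECS client's `register_task_definition` and `create_service` calls. Scaling the application then amounts to changing `desiredCount`, either by hand or through an auto scaling policy.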

Containerization brings several benefits to a DevOps environment on AWS. Portability is a significant one: containers run consistently from development laptops to production servers, which removes many compatibility issues and means applications behave the same way throughout the pipeline. Scalability is another. Containerized applications can easily be scaled up or down with demand, which optimizes resource utilization and reduces costs. Microservices architectures benefit in particular, because each microservice can be developed, deployed, and scaled independently, which improves agility and resilience. In short, containerization helps teams develop and deploy software more efficiently and reliably, and the combination of Docker with ECS and EKS offers powerful tools for building modern, scalable applications.

Choosing between ECS and EKS largely depends on the specific needs of your project. ECS offers a simpler getting-started experience and is an excellent fit for teams looking for a fully managed, AWS-native service. It can also be a cost-effective option for organizations already invested in the AWS ecosystem. EKS, on the other hand, offers greater customization and control over Kubernetes deployments and is better suited to organizations with existing Kubernetes expertise and a need for fine-grained control. Whichever you choose, containers improve the efficiency and agility of the software development lifecycle, and the combination of Docker and AWS container services helps organizations build and deploy more robust and scalable applications. Understanding the differences between ECS and EKS is important for any team seeking to modernize its DevOps practices on AWS.

Security Best Practices for DevOps in the AWS Cloud

Security is paramount in a cloud-based DevOps environment, and robust security measures are crucial for protecting sensitive data and infrastructure. Identity and Access Management (IAM) is the cornerstone of AWS security. IAM allows precise control over who has access to which resources. Granting each user and service only the permissions it actually needs, known as the principle of least privilege, minimizes the attack surface. Encryption should be employed both in transit and at rest, and services like AWS Key Management Service (KMS) handle encryption key management. Network segmentation is another critical practice. It involves dividing the network into isolated zones, each with its own security controls, which limits the impact of any breach. Regular vulnerability scanning is also a necessity. Tools like Amazon Inspector can automatically assess the security posture of your AWS resources and help identify vulnerabilities early. Security in an AWS DevOps environment needs to be a continuous practice.
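As a small illustration of least privilege, the sketch below builds an IAM policy document that allows only reading and writing objects in one S3 bucket, and adds a simple check that flags statements with wildcard actions or resources. The bucket name and both function names are hypothetical, and the check is a rough heuristic rather than a substitute for a proper policy analysis tool.

```python
import json


def bucket_read_write_policy(bucket_name):
    """Allow only object reads and writes in a single bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "ArtifactReadWrite",
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Resource": f"arn:aws:s3:::{bucket_name}/*",
        }],
    }


def _as_list(value):
    """IAM allows a single string or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy):
    """Return Allow statements that grant every action or every resource."""
    flagged = []
    for statement in policy["Statement"]:
        if statement["Effect"] != "Allow":
            continue
        actions = _as_list(statement["Action"])
        resources = _as_list(statement["Resource"])
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        if wildcard_action or "*" in resources:
            flagged.append(statement)
    return flagged


scoped = bucket_read_write_policy("ci-artifacts")  # hypothetical bucket name
admin = {"Version": "2012-10-17",
         "Statement": [{"Effect": "Allow", "Action": "*", "Resource": "*"}]}
print(json.dumps(scoped, indent=2))
print(len(overly_broad_statements(scoped)), len(overly_broad_statements(admin)))
```

A check like this could run in a pipeline stage so that overly permissive policies are caught in review rather than in production.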

Furthermore, consider incorporating specialized AWS security services. AWS Security Hub provides a comprehensive view of security alerts across your AWS environment. It aggregates findings from various security services into one place for monitoring and managing threats. GuardDuty uses machine learning to analyze logs and identify suspicious or malicious activity automatically, which helps detect threats proactively. Continuous monitoring of infrastructure, including logs, configurations, and user activity, is essential for identifying security issues quickly. Security is an ongoing process, not a one-time setting. Proper configuration of firewalls, such as security groups and network ACLs on AWS, is also vital. Allow only the traffic your systems actually need, which helps prevent unauthorized access to your resources.
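The rule of allowing only needed traffic can be checked automatically. The sketch below scans inbound rules, in the shape EC2 returns them for a security group (the `IpPermissions` list), and reports any that are open to the whole internet on ports other than an allowed set. It assumes that only ports 80 and 443 should be publicly reachable, and it inspects IPv4 ranges only; both are simplifying assumptions.

```python
def open_to_world(ip_permissions, allowed_ports=(80, 443)):
    """Return port ranges reachable from 0.0.0.0/0 that are not explicitly allowed."""
    findings = []
    for rule in ip_permissions:
        cidrs = [r["CidrIp"] for r in rule.get("IpRanges", [])]
        if "0.0.0.0/0" not in cidrs:
            continue
        from_port = rule.get("FromPort")
        to_port = rule.get("ToPort")
        # Rules covering all protocols ("-1") carry no port range at all.
        if from_port is None or to_port is None:
            findings.append("all ports")
            continue
        if from_port == to_port and from_port in allowed_ports:
            continue
        findings.append(f"{from_port}-{to_port}")
    return findings


# Sample rules written by hand for illustration.
rules = [
    {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
     "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
    {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
     "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
    {"IpProtocol": "tcp", "FromPort": 5432, "ToPort": 5432,
     "IpRanges": [{"CidrIp": "10.0.0.0/16"}]},
]
print(open_to_world(rules))
```

Here the HTTPS rule passes, the database rule is ignored because it is limited to a private range, and the SSH rule is reported. In practice the rule list would come from the EC2 `describe_security_groups` call.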

Adopting a “security-as-code” approach is also recommended. This means defining security policies and configurations as code, which enables automated deployment and auditing of security measures. The approach integrates security directly into the development lifecycle, minimizes manual intervention, and reduces the likelihood of human error. Regular security training for development and operations teams is crucial for creating a security-conscious culture. It is equally important to stay up to date with the latest security threats. Applying these best practices helps you build a secure and robust DevOps pipeline on AWS, reduces risk, and leads to safer and more reliable applications. Remember that a secure system builds trust with your end users.

Leveraging AWS Lambda for Serverless DevOps

AWS Lambda represents a significant shift in how applications are built and deployed within a DevOps framework. This serverless compute service lets you run code without provisioning or managing servers. It executes your code only when needed and scales automatically. Because you pay only for what you use, it can yield considerable cost savings for intermittent workloads. In an AWS DevOps environment, Lambda has several compelling use cases. Event-driven automation is one of the most powerful. Lambda functions can be triggered by changes in other AWS services, such as a new file being uploaded to S3 or a message arriving in an SQS queue. This event-driven model supports highly responsive, automated workflows that are cumbersome to build with traditional server-based methods. Lambda functions can also serve as serverless APIs, providing custom endpoints for your CI/CD pipeline. These endpoints can be used to initiate builds, deployments, or any other action you need. Used appropriately, Lambda is a valuable part of a DevOps toolkit on AWS.
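The S3 upload trigger mentioned above can be illustrated with a short handler. This is a minimal sketch: it reads the bucket and object key from each record in the event that S3 sends to Lambda and returns them, where a real function would go on to process the file. The bucket and key in the sample event are made up.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Collect the location of every object named in an S3 event notification."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        uploaded.append(f"s3://{bucket}/{key}")
    print(f"Received {len(uploaded)} uploaded object(s)")
    return {"processed": uploaded}


# Local invocation with a hand-written sample event; no AWS access is needed.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "ci-artifacts"},
                "object": {"key": "reports/build+log.txt"}}}
    ]
}
result = lambda_handler(sample_event, None)
print(result)
```

Because the handler is an ordinary function, it can be exercised locally with a sample event like this before it is deployed and attached to a bucket notification.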

Consider a practical scenario within your CI/CD pipeline. Imagine you need to notify your team whenever a new commit is pushed. A Lambda function can automate this. When a commit is pushed to your code repository, a trigger invokes the function, which extracts the relevant commit data, formats a message, and sends it to a communication platform like Slack or Microsoft Teams. All of this happens without running or managing any servers. Another good use of Lambda is building custom APIs that trigger specific tasks within a pipeline, such as tests or code quality checks. Lambda functions can also handle cleanup, such as removing temporary files or resources after a pipeline completes, again fully automatically. This approach eliminates the overhead associated with traditional server management and infrastructure.
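The commit notification scenario could look roughly like the sketch below. Several details are assumptions: the event shape follows the sample CodeCommit trigger event (a `Records` list whose entries carry `codecommit.references` and `eventSourceARN`), the environment variable name `WEBHOOK_URL` is hypothetical, and the `{"text": ...}` payload matches Slack incoming webhooks, so other platforms such as Microsoft Teams would need a different body.

```python
import json
import os
import urllib.request


def format_commit_message(event):
    """Turn a CodeCommit trigger event into a short human-readable message."""
    lines = []
    for record in event.get("Records", []):
        # The repository name is the last segment of the repository ARN.
        repo = record["eventSourceARN"].split(":")[-1]
        for ref in record["codecommit"]["references"]:
            branch = ref["ref"].split("/", 2)[-1]  # "refs/heads/main" -> "main"
            lines.append(f"New commit {ref['commit'][:7]} on {repo}/{branch}")
    return "\n".join(lines)


def lambda_handler(event, context):
    text = format_commit_message(event)
    webhook_url = os.environ.get("WEBHOOK_URL")  # hypothetical variable name
    if webhook_url and text:
        request = urllib.request.Request(
            webhook_url,
            data=json.dumps({"text": text}).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=5) as response:
            response.read()
    return {"message": text}


# Hand-written sample event for local testing; nothing is sent unless
# WEBHOOK_URL is set in the environment.
sample_commit_event = {
    "Records": [{
        "eventSourceARN": "arn:aws:codecommit:us-east-1:123456789012:my-repo",
        "codecommit": {"references": [
            {"commit": "5c4ef1049f1d27deadbeeff313e0730018be182b",
             "ref": "refs/heads/main"},
        ]},
    }]
}
print(format_commit_message(sample_commit_event))
```

Separating the message formatting from the network call keeps the formatting logic testable without a webhook, and keeping the URL in an environment variable avoids hard-coding a secret in the function source.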

The benefits of using AWS Lambda for DevOps extend beyond automation. Its inherent scalability and pay-as-you-go pricing model make it attractive for teams of all sizes. You pay only for the compute time your functions consume, which can make it very cost-effective. Lambda also reduces operational overhead, because there are no servers to patch, update, or manage. That frees your team to focus on core development activities and on improving its workflows, and it promotes a more agile and efficient DevOps culture. By embracing serverless technologies, development teams can significantly reduce their operational burden and accelerate their pace of innovation. Ultimately, Lambda supports a more scalable, reliable, and cost-effective development process and is well worth considering for DevOps on AWS.

Best Practices for Optimizing AWS DevOps for Long-Term Success

Achieving lasting success with DevOps on AWS requires a commitment to continuous improvement. It is not a one-time setup, and iterative improvements are crucial. Regularly assess your pipeline for areas of friction or bottlenecks. Treat performance tuning as ongoing work: optimize your infrastructure for cost and efficiency, monitor resource utilization, and look for opportunities to scale down during off-peak hours. Implement automated testing so that changes are deployed reliably. Encourage collaboration, foster a culture of shared responsibility, and share knowledge and best practices within the team. This creates a more robust and adaptable environment. Regular audits of your setup are also vital, because they help ensure compliance with security standards and confirm that best practices are being followed. AWS evolves constantly, so continuous learning is essential. Stay up to date with new AWS services and features, and explore how they can enhance your DevOps practices.

Effective monitoring is not just about tracking metrics. It’s also about understanding trends. Monitor your applications proactively: set up alerts for critical issues, unusual behavior, and errors, and analyze logs to identify root causes, so that you can address problems before they affect users. Embrace feedback loops by gathering input from different teams and stakeholders and adjusting your pipeline based on performance data. This helps keep your AWS DevOps setup effective over time. Consider trialing pipeline changes on a subset of projects or runs, in the manner of an A/B test, to evaluate their impact before a full rollout. Security best practices remain vital: implement strong IAM policies and encryption, conduct regular vulnerability scans, and continuously evaluate and update security protocols to maintain protection. Finally, automate repetitive tasks so that your team is free to focus on more strategic initiatives.

Document your processes and make sure everyone understands the pipeline. Up-to-date documentation helps new team members get onboarded and prevents knowledge silos. Automate the deployment process to reduce human error. Keep all of your infrastructure as code under version control, which makes changes easy to track and facilitates rollbacks when needed. Remember that DevOps on AWS is not static. It is an ongoing journey of learning and adaptation. By embracing change and focusing on iterative improvement, you can build a powerful and reliable development pipeline on AWS and take full advantage of the platform's capabilities.