A Simple Guide: Uploading to S3 with AWS CLI

This article is a step-by-step guide to uploading files to Amazon S3 with the AWS CLI. It is written for beginners and intermediate users who want to use the command line for efficient file transfers to S3, and it covers the essential commands and techniques for managing your files in the cloud.

The focus is on practical instructions and clear examples. You’ll learn how to configure your AWS CLI environment, upload single files, and upload entire directories. The guide favors simplicity, so readers with limited command-line experience can follow along.

The tutorial walks through each step, from setting up your environment to optimizing, securing, and verifying your uploads. By the end, you should be able to upload files to S3 from the command line and manage them with greater control and flexibility.

Why Use the AWS CLI for S3 File Transfers?

The AWS CLI offers significant advantages for uploading files to S3. It provides automation capabilities that the AWS Management Console lacks. Users can script file transfers and integrate them into automated workflows and CI/CD pipelines, which is particularly useful for batch uploads or recurring data synchronization tasks. The CLI also grants granular control over upload parameters: users can set storage classes, encryption settings, and access control options directly from the command line.

Compared to the AWS Management Console, the AWS CLI excels in scenarios requiring automation and precise control. The console provides a user-friendly interface for manual uploads. However, it is less suited for automated tasks. Consider a scenario where numerous files need uploading to S3 daily. A script using the AWS CLI can automate this process efficiently. The script eliminates the need for manual intervention. Conversely, the console is better for one-off uploads or when exploring S3 features. For example, a user might prefer the console to create a new bucket.

Furthermore, the AWS CLI facilitates version control of upload configurations. Upload scripts can be stored alongside application code, which keeps uploads consistent and reproducible across environments. Because uploads can be scripted, they fit naturally into deployment scripts and backup procedures. When deciding between the AWS Management Console and the AWS CLI, consider the specific requirements of the task: when automation, scriptability, and precise control matter most, the AWS CLI is the clear choice.

Prerequisites: Setting Up the AWS CLI Environment

Before uploading anything, a proper environment setup is crucial. This involves installing the AWS CLI, configuring your AWS credentials, and ensuring you have an S3 bucket ready for file storage. The AWS CLI serves as the bridge between your local system and Amazon Web Services, enabling you to manage your AWS resources through command-line instructions.

The installation process varies slightly depending on your operating system. For Windows, you can download the AWS CLI MSI installer from the AWS website and follow the on-screen instructions. macOS users can use pip, the Python package installer (`pip install awscli`, which installs version 1 of the CLI), or Homebrew (`brew install awscli`). Linux distributions typically offer the AWS CLI through package managers such as apt or yum. After installation, verify it by opening a terminal or command prompt and typing `aws --version`. This should display the installed AWS CLI version.
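In a script, the same check can run before any upload is attempted. The following is a minimal sketch; `require_aws_cli` is a hypothetical helper name introduced here for illustration, not part of the AWS CLI.

```shell
# Hypothetical helper: stop early if the AWS CLI is not on PATH,
# otherwise print the installed version.
require_aws_cli() {
  if ! command -v aws >/dev/null 2>&1; then
    echo "aws CLI not found on PATH; install it first" >&2
    return 1
  fi
  aws --version
}
```

Calling `require_aws_cli || exit 1` at the top of an upload script makes a missing installation fail with a clear message instead of a confusing error later on.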

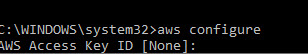

Configuring your AWS credentials is the next essential step. This involves creating an IAM user in the AWS Management Console with appropriate permissions to access S3. Specifically, the IAM user needs either the `AmazonS3FullAccess` managed policy or a custom policy that grants the `s3:GetObject`, `s3:PutObject`, `s3:DeleteObject`, and `s3:ListBucket` actions. A custom policy scoped to the buckets you actually use is the narrower of the two. Once the IAM user is created, generate an access key ID and a secret access key.

Run the command `aws configure` in your terminal. The AWS CLI will prompt you for your AWS Access Key ID, AWS Secret Access Key, default region name (e.g., us-east-1), and default output format (e.g., json or text). Input the values obtained from the IAM user creation process.

Finally, ensure you have an existing S3 bucket. If not, you can create one through the AWS Management Console or with the AWS CLI command `aws s3 mb s3://your-unique-bucket-name`. Replace `your-unique-bucket-name` with a globally unique name for your bucket. With these prerequisites in place, you’re ready to upload files to S3 effectively and securely.
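As an illustration of the custom-policy route and the bucket check, here is a small sketch. Both function names (`print_upload_policy`, `ensure_bucket`) are hypothetical helpers made up for this example. The policy simply lists the four actions named above and scopes them to one bucket, so adjust it to your own requirements.

```shell
# Hypothetical helper: print a custom IAM policy limited to one bucket.
# s3:ListBucket applies to the bucket itself; the object actions apply
# to the keys inside it (the "/*" resource).
print_upload_policy() {
  bucket="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": "arn:aws:s3:::${bucket}"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}

# Hypothetical helper: create the bucket only when head-bucket cannot reach it.
# head-bucket also fails when the name belongs to another account; in that
# case the mb call fails as well and reports the error.
ensure_bucket() {
  bucket="$1"
  if aws s3api head-bucket --bucket "$bucket" >/dev/null 2>&1; then
    echo "bucket $bucket already exists"
  else
    aws s3 mb "s3://$bucket"
  fi
}
```

For example, `print_upload_policy your-unique-bucket-name > policy.json` writes a policy document that can be pasted into the IAM console, and `ensure_bucket your-unique-bucket-name` can run at the start of a setup script.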

Basic Command: Uploading a Single File

The foundation of transferring data to Amazon S3 using the AWS CLI rests on the `aws s3 cp` command. This command copies files between your local system and S3 buckets. The basic syntax follows this structure: `aws s3 cp <local_file_path> s3://<bucket_name>/<object_key>`.

Consider this practical example: `aws s3 cp Documents/my_document.pdf s3://my-s3-bucket/documents/my_document.pdf`. In this scenario, `Documents/my_document.pdf` is the path to the file on your local machine, `s3://my-s3-bucket` specifies the target S3 bucket, and `documents/my_document.pdf` is the object key, that is, the file’s name and path within the bucket. The object key is optional: if you omit it and give only `s3://my-s3-bucket/` as the destination, the file is uploaded to the root of the bucket under its original name.
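The single-file upload can be wrapped in a small function for reuse in scripts. This is a sketch under stated assumptions: `upload_file` is a hypothetical helper name, and the optional third argument is a key prefix that is expected to end in `/`.

```shell
# Hypothetical helper: upload one local file, keeping its original name.
#   $1 = local file path
#   $2 = bucket name
#   $3 = optional key prefix ending in "/", e.g. "documents/"
upload_file() {
  src="$1"
  bucket="$2"
  prefix="${3:-}"
  if [ ! -f "$src" ]; then
    echo "no such file: $src" >&2
    return 1
  fi
  # Object key = prefix + the file's base name
  key="${prefix}$(basename "$src")"
  aws s3 cp "$src" "s3://$bucket/$key"
}
```

With this in place, `upload_file Documents/my_document.pdf my-s3-bucket documents/` runs the same command as the example above, and leaving out the prefix places the file at the root of the bucket.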

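Understanding the parameters is vital for effective usage: the first argument is the source (here, a local file path) and the second is the destination (an `s3://` URI made up of the bucket name and an optional object key).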

Advanced Techniques: Recursive Uploads for Directories

Uploading entire directories to Amazon S3 is a common requirement, and the AWS CLI simplifies this process with its recursive upload functionality. This method is particularly useful when you need to transfer multiple files and subdirectories while preserving the original directory structure within the S3 bucket. The `aws s3 cp` command, combined with the `--recursive` option, transfers an entire directory tree to S3. This avoids the need to upload each file individually, saving considerable time and effort.

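To perform a recursive upload, the command syntax is straightforward: `aws s3 cp <local_directory> s3://<bucket_name>/<prefix>/ --recursive`. For example, `aws s3 cp my_website s3://my-s3-bucket/website/ --recursive` uploads the contents of the local `my_website` directory under the `website/` prefix.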

When performing recursive uploads, the AWS CLI maintains the directory structure from the source location within the specified S3 bucket, so the files stay organized and can be easily retrieved later. For instance, if “my_website” contains subdirectories like “images,” “css,” and “js,” these subdirectories and their contents will be replicated under the “website/” prefix in the S3 bucket. This streamlines website deployments, backup processes, and any other scenario where preserving the directory structure is essential.
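A recursive upload is easy to get wrong on the first try, so it can help to preview it. The sketch below assumes a hypothetical wrapper named `upload_dir`; the `--dryrun` and `--exclude` options it forwards are standard `aws s3 cp` options, while the bucket and directory names are the placeholders used in this guide.

```shell
# Hypothetical helper: recursively upload a directory.
#   $1 = local directory
#   $2 = destination, e.g. s3://my-s3-bucket/website/
#   remaining arguments are passed through to "aws s3 cp"
upload_dir() {
  if [ "$#" -lt 2 ]; then
    echo "usage: upload_dir <local_directory> <s3_uri> [options]" >&2
    return 2
  fi
  src_dir="$1"
  dest="$2"
  shift 2
  if [ ! -d "$src_dir" ]; then
    echo "not a directory: $src_dir" >&2
    return 1
  fi
  aws s3 cp "$src_dir" "$dest" --recursive "$@"
}
```

Running `upload_dir my_website s3://my-s3-bucket/website/ --dryrun --exclude ".git/*"` lists what would be uploaded without transferring anything and skips the `.git` directory. Dropping `--dryrun` performs the actual upload.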

Optimizing Uploads: Utilizing Parallel Processing and Configuration

To speed up your S3 uploads, you can take advantage of parallel processing and fine-tune your AWS CLI configuration. The default settings are often suitable for smaller files and standard network conditions, but adjusting them can significantly improve performance when dealing with larger files or slower connections. The two settings to look at first are `multipart_threshold` and `max_concurrent_requests`.

The `multipart_threshold` setting determines the file size at which the AWS CLI switches to multipart uploads. Multipart uploads divide a large file into smaller parts and upload them concurrently, which can lead to faster overall transfer times. The default value is 8MB. If you consistently upload much larger files, consider raising the threshold. To change it, edit the AWS CLI configuration file, usually located at `~/.aws/config`, or `C:\Users\YOUR_USER\.aws\config` on Windows. S3 transfer settings are nested under an `s3 =` key inside the `[default]` section or a named profile section, and the nested lines must be indented. For example:

[default]
s3 =
  multipart_threshold = 64MB

This sets the threshold to 64MB. Similarly, the `max_concurrent_requests` setting controls the maximum number of requests the CLI runs at the same time, which for a multipart upload limits how many parts are in flight in parallel. The default is 10. Increasing this value can further improve upload speed, especially on networks with high bandwidth. Adjust it in the same `s3` block:

[default]
s3 =
  max_concurrent_requests = 20

Experiment with different values to find the optimal setting for your environment. A higher number is not always better, as it can strain network resources, so monitor network performance while adjusting these settings.

Consider your network conditions as well. A stable, fast network will benefit more from a higher `max_concurrent_requests` than a slow or unreliable connection, where reducing the number of concurrent requests might improve stability. Revisit these settings as your file sizes, network performance, and workflow requirements change. Thoughtful tuning can significantly reduce upload times, especially for large datasets or frequent uploads.
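The same settings can also be written from the command line with `aws configure set`, which avoids editing the file by hand. The sketch below wraps the two calls in a hypothetical function, `tune_s3_transfers`; the default values are only the example figures used in this section, not recommendations.

```shell
# Hypothetical helper: write S3 transfer settings into the default profile.
#   $1 = multipart threshold (default: 64MB)
#   $2 = max concurrent requests (default: 20)
tune_s3_transfers() {
  threshold="${1:-64MB}"
  concurrency="${2:-20}"
  aws configure set default.s3.multipart_threshold "$threshold"
  aws configure set default.s3.max_concurrent_requests "$concurrency"
}
```

Calling `tune_s3_transfers` with no arguments applies the example values, while `tune_s3_transfers 16MB 5` might suit a slower connection. Afterwards the values should appear under the `s3 =` key of the `[default]` section in `~/.aws/config`.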

Securing Your Data: Encryption Options During Upload

Protecting data during the upload process is paramount. The AWS CLI sends requests over HTTPS by default, which protects data in transit, and it offers several encryption options for protecting data at rest. Server-Side Encryption (SSE) is a common method, with several variations available. SSE-S3 uses keys managed by Amazon S3, providing a straightforward encryption solution. Using the AWS CLI, specify this with the `--sse` parameter set to `AES256`. For example: `aws s3 cp my_file.txt s3://my-bucket/my_file.txt --sse AES256`. This simple addition requests encryption with Amazon S3-managed keys.

For enhanced control over encryption keys, Server-Side Encryption with KMS-managed keys (SSE-KMS) is an excellent choice. SSE-KMS uses AWS Key Management Service (KMS) to manage the encryption keys. This allows you to define key policies, control access to keys, and maintain an audit trail of key usage. To use SSE-KMS for an upload, pass `--sse aws:kms` together with the `--sse-kms-key-id` option, specifying the ARN of the KMS key. An example command would be: `aws s3 cp sensitive_data.txt s3://secure-bucket/sensitive_data.txt --sse aws:kms --sse-kms-key-id arn:aws:kms:us-east-1:123456789012:key/my-kms-key`, where `my-kms-key` is a placeholder for your key ID. Proper key management practices, including regular key rotation and restricting access, are crucial when using SSE-KMS.

Finally, Server-Side Encryption with Customer-Provided Keys (SSE-C) allows you to manage the encryption keys entirely on your end. AWS does not store the encryption key itself, so you must provide it each time you upload or download the object. While offering maximum control, SSE-C also places the greatest responsibility on you to securely manage and transmit the encryption key. To use SSE-C, pass `--sse-c` (with the algorithm, `AES256`) and `--sse-c-key` (the key itself). When choosing an encryption method, consider your security requirements, compliance mandates, and key management capabilities. Implementing encryption is a critical step in securing sensitive data within Amazon S3.
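The three options can be compared side by side in one small wrapper. This is a sketch rather than a definitive implementation: `upload_encrypted` is a hypothetical function name, and the SSE-C branch assumes the key is a raw 32-byte file passed with the CLI's `fileb://` prefix, which you should confirm against the documentation for your CLI version.

```shell
# Hypothetical helper: upload one file with a chosen server-side encryption mode.
#   $1 = mode: sse-s3 | sse-kms | sse-c
#   $2 = local file path
#   $3 = destination S3 URI
#   $4 = KMS key ARN (sse-kms) or path to a raw key file (sse-c)
upload_encrypted() {
  mode="$1"
  src="$2"
  dest="$3"
  key_ref="${4:-}"
  case "$mode" in
    sse-s3)
      aws s3 cp "$src" "$dest" --sse AES256
      ;;
    sse-kms)
      [ -n "$key_ref" ] || { echo "sse-kms needs a KMS key ARN" >&2; return 2; }
      aws s3 cp "$src" "$dest" --sse aws:kms --sse-kms-key-id "$key_ref"
      ;;
    sse-c)
      [ -n "$key_ref" ] || { echo "sse-c needs a key file path" >&2; return 2; }
      # Assumption: key file holds 32 raw bytes, read as binary via fileb://
      aws s3 cp "$src" "$dest" --sse-c AES256 --sse-c-key "fileb://$key_ref"
      ;;
    *)
      echo "unknown mode: $mode" >&2
      return 2
      ;;
  esac
}
```

For SSE-C, a key file could be generated with `openssl rand -out sse-c.key 32` and then used as `upload_encrypted sse-c my_file.txt s3://my-bucket/my_file.txt sse-c.key`. The same key file is needed again for every download of that object, so losing it means losing access to the data.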

Verifying Uploads: Checking File Integrity in S3

Verifying the integrity of files after an upload is a crucial step to guarantee data correctness. This means confirming that the uploaded file is complete and was not corrupted during the transfer. One common method is to compare checksums between the original local file and the object stored in S3.

S3 provides an ETag, which is the MD5 checksum of the object when it was uploaded in a single request and is unencrypted or encrypted with SSE-S3. For files uploaded using multipart upload, which `aws s3 cp` uses automatically once a file exceeds the `multipart_threshold`, the ETag is not a simple MD5 checksum but a more complex identifier ending in a dash and the number of parts. Relying solely on the ETag for large files can therefore be misleading. To verify an upload, generate a local checksum of the original file using tools like `md5sum` (on Linux), `md5` (on macOS), or `Get-FileHash -Algorithm MD5` (on Windows). Then retrieve the ETag of the S3 object using the AWS CLI or the AWS Management Console. If the file was not uploaded using multipart upload, comparing the locally generated checksum with the ETag confirms the file’s integrity. For multipart uploads, other strategies are required, such as comparing the size of the local file with the size of the S3 object or implementing custom checksum verification logic during or after the upload.

Here’s an example of generating an MD5 checksum locally:

md5sum your_file.txt

And here’s how you might retrieve object metadata, including the ETag, using the AWS CLI:

aws s3api head-object --bucket your-bucket --key your_file.txt

The output will include the ETag, wrapped in double quotes. Compare this ETag with the MD5 checksum generated earlier. If they match (and the file wasn’t uploaded using multipart upload), the upload was successful and the file is intact. For robust verification, especially with large files and frequent uploads, consider implementing automated checksum verification as part of your workflow. This can involve scripting the checksum generation, ETag retrieval, and comparison steps to ensure ongoing data integrity within your S3 buckets. Verifying file integrity after each upload contributes significantly to the reliability of the entire data storage solution.
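The comparison described above could be scripted along the following lines. This is a minimal sketch that only handles the single-request case: `local_md5` and `verify_upload` are hypothetical helper names, and the function deliberately refuses to judge multipart ETags (those containing a dash) instead of guessing.

```shell
# Hypothetical helper: print the MD5 of a local file (Linux md5sum or macOS md5).
local_md5() {
  if command -v md5sum >/dev/null 2>&1; then
    md5sum < "$1" | awk '{print $1}'
  else
    md5 -q "$1"
  fi
}

# Hypothetical helper: compare a local file's MD5 with the object's ETag.
# Returns 0 on match, 1 on mismatch, 2 for multipart ETags, 3 if the lookup fails.
verify_upload() {
  file="$1"
  bucket="$2"
  key="$3"
  raw=$(aws s3api head-object --bucket "$bucket" --key "$key" \
          --query ETag --output text) || return 3
  # The ETag value comes back wrapped in double quotes; strip them
  etag=$(printf '%s' "$raw" | tr -d '"')
  case "$etag" in
    *-*)
      echo "multipart ETag ($etag) is not a plain MD5; verify another way" >&2
      return 2
      ;;
  esac
  [ "$(local_md5 "$file")" = "$etag" ]
}
```

A script might call `verify_upload your_file.txt your-bucket your_file.txt && echo "checksum OK"` right after the upload. Objects encrypted with SSE-KMS or SSE-C will report a mismatch here, because their ETags are not MD5 digests either.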