Understanding AWS API Gateway Caching: An Overview

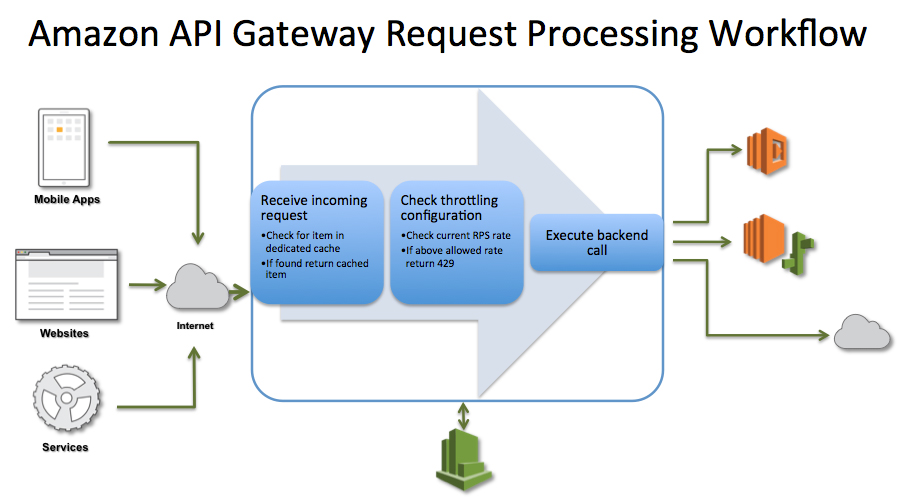

AWS API Gateway caching stores the responses of your API endpoints so that repeated requests can be answered without calling the backend. The cache is provisioned per stage, and each response is kept for a configurable time-to-live (TTL). Serving responses from the cache lowers latency and reduces the number of calls that reach the backend, which can in turn lower backend costs.

Key Benefits of Implementing AWS API Gateway Caching

Caching pays off most for APIs whose responses are requested repeatedly and change infrequently. Specific benefits include:

- Improved latency: Caching reduces the time it takes to retrieve and process data, resulting in faster response times and a better user experience.

- Reduced resource usage: By serving frequently accessed data from memory instead of relying on backend systems, caching minimizes the load on origin servers, reducing resource usage and associated costs.

- Cost savings: Fewer backend invocations mean less compute and database capacity to pay for. Weigh this against the hourly charge for the cache itself, which depends on the cache size you provision.

How to Enable Caching in AWS API Gateway

To harness the benefits of AWS API Gateway Caching, you need to enable it and configure cache behavior, keys, and TTL values. Follow these steps:

- Navigate to the AWS Management Console and open the API Gateway service.

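- Select the API you want to enable caching for, choose the stage to cache (caching is configured per stage, not per API), and open “Cache Settings” in the stage's “Settings” tab. Exact labels vary between console versions.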

- Turn on the “Enable API Cache” option and choose the cache size. Sizes are specified in GB, from 0.5 GB to 237 GB, and provisioning the cache takes a few minutes.

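- Configure cache behavior. The stage settings act as the default for every method, and an individual method can either inherit them or override them, for example to turn caching off or to use a different TTL. By default, only GET methods are cached.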

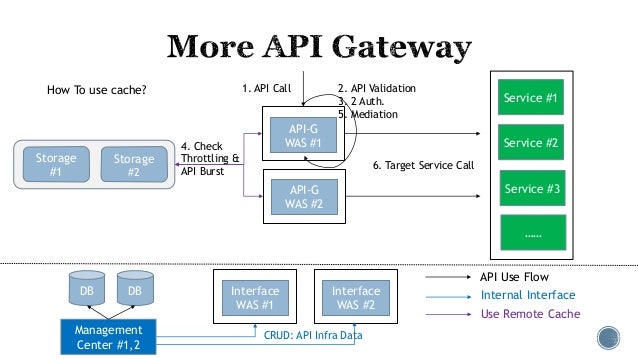

- Specify cache keys by marking the request parameters (query strings, headers, or path variables) that change the response. API Gateway uses these parameters to tell cached responses apart.

- Set the Time-to-Live (TTL) value, which determines how long AWS API Gateway keeps a cached response before fetching new data from the backend. The default is 300 seconds, the maximum is 3600 seconds, and a TTL of 0 disables caching.
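
The console steps above can also be expressed as stage patch operations. The following is a minimal Python sketch, assuming the boto3 `apigateway` client and its `update_stage` call. The helper names are illustrative and not part of any AWS library.

```python
# Cache cluster sizes (in GB) accepted by API Gateway.
CACHE_SIZES_GB = ("0.5", "1.6", "6.1", "13.5", "28.4", "58.2", "118", "237")


def build_cache_patch_ops(size_gb: str = "0.5", ttl_seconds: int = 300) -> list:
    """Build patch operations that enable a stage cache and set a default TTL."""
    if size_gb not in CACHE_SIZES_GB:
        raise ValueError(f"unsupported cache size: {size_gb}")
    if not 0 <= ttl_seconds <= 3600:
        raise ValueError("TTL must be between 0 and 3600 seconds")
    return [
        {"op": "replace", "path": "/cacheClusterEnabled", "value": "true"},
        {"op": "replace", "path": "/cacheClusterSize", "value": size_gb},
        # "/*/*" applies the setting to every resource and method on the stage.
        {"op": "replace", "path": "/*/*/caching/enabled", "value": "true"},
        {"op": "replace", "path": "/*/*/caching/ttlInSeconds", "value": str(ttl_seconds)},
    ]


def enable_stage_cache(client, rest_api_id: str, stage_name: str, **kwargs) -> dict:
    """Apply the operations; `client` is expected to be boto3.client("apigateway")."""
    return client.update_stage(
        restApiId=rest_api_id,
        stageName=stage_name,
        patchOperations=build_cache_patch_ops(**kwargs),
    )
```

With boto3 installed and credentials configured, a call such as `enable_stage_cache(boto3.client("apigateway"), "a1b2c3", "prod", size_gb="1.6", ttl_seconds=120)` would apply these settings. The API ID and stage name here are placeholders.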

Best Practices for AWS API Gateway Caching

To maximize the benefits of AWS API Gateway Caching, consider these recommended practices:

- Set appropriate TTL values: Adjust TTL values based on the frequency of data updates and the importance of serving fresh data. Shorter TTL values ensure data freshness but may increase resource usage, while longer TTL values reduce resource usage but may serve stale data.

- Monitor cache usage: Track the CloudWatch metrics CacheHitCount and CacheMissCount to spot problems such as a cache that is too small or cache keys that rarely match. Adjust cache settings accordingly to maintain performance and cost efficiency.

- Fine-tune cache keys: Include every request parameter that changes the response, and nothing more. Leaving out a relevant parameter causes the wrong cached response to be served, while including irrelevant ones splits identical responses across many entries and lowers the hit rate.

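- Implement cache invalidation patterns: Ensure that stale data is not served when the underlying data changes. Common options are versioning (a version in the path or query string produces a new cache key), invalidation requests (an authorized client sends the `Cache-Control: max-age=0` header to refresh a single entry), and flushing the entire stage cache.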

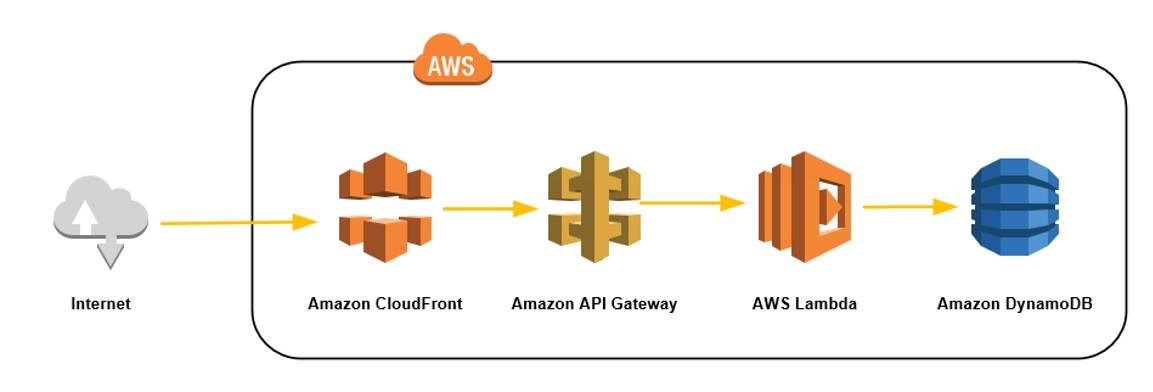

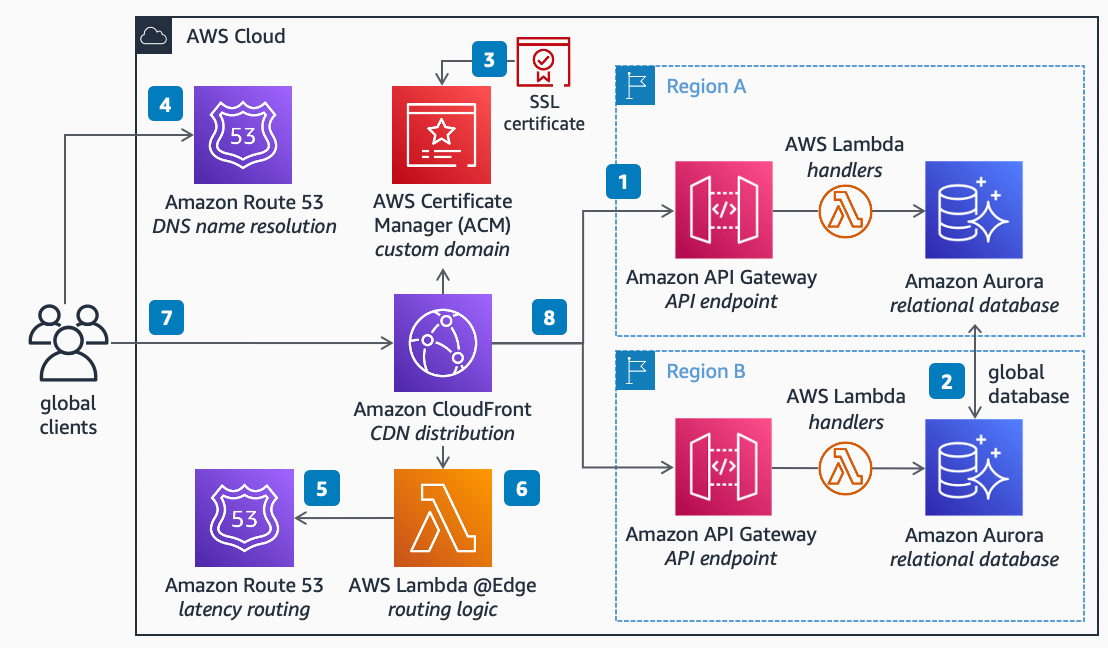

- Leverage AWS services: Combine API Gateway caching with other AWS services where they fit, such as CloudFront for caching at edge locations and ElastiCache for caching data inside the backend.
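
The TTL trade-off described in the first practice can be made concrete with a rough estimate. The sketch below assumes a deliberately simplified model that is not taken from AWS documentation: a single cache key, evenly spaced requests, and no eviction. Under that model each TTL window costs one miss, followed by roughly `rate * ttl` hits.

```python
def estimated_hit_ratio(requests_per_second: float, ttl_seconds: float) -> float:
    """Estimate the hit ratio for one cache key under steady traffic.

    Simplified model (an assumption, not an AWS formula): one miss refreshes
    the entry, then every request within the TTL is a hit.
    """
    if requests_per_second <= 0 or ttl_seconds <= 0:
        return 0.0  # no traffic, or caching disabled
    hits_per_cycle = requests_per_second * ttl_seconds
    return hits_per_cycle / (hits_per_cycle + 1)


if __name__ == "__main__":
    # Compare a few TTL values for a key requested twice per second.
    for ttl in (5, 60, 300, 3600):
        print(ttl, round(estimated_hit_ratio(2.0, ttl), 4))
```

The estimate shows diminishing returns: for a busy key, a short TTL already removes most backend calls, so a longer TTL mainly adds staleness. Keys that are requested rarely benefit far less from any TTL.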

AWS API Gateway Caching vs. Client-Side Caching: A Comparative Analysis

AWS API Gateway Caching and client-side caching are two common caching strategies that serve different purposes and have unique strengths and weaknesses. Understanding their differences can help you determine the best approach for your specific use case.

AWS API Gateway Caching

- Centralized caching: AWS API Gateway Caching is performed at the API Gateway level, reducing the load on origin servers and improving overall performance.

- Easy setup and management: Enabling caching in AWS API Gateway is straightforward, with minimal configuration required.

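- Limited control: AWS API Gateway Caching offers limited control over caching behavior and cache invalidation. You can set the TTL, the cache keys, and per-method overrides, refresh a single entry, or flush the whole stage cache, but you cannot invalidate a selected group of entries.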

- Shared cache: All callers of a stage share one fixed-size cache. Entries compete for that capacity, and cache keys must include every parameter that changes the response so that one caller does not receive a response cached for another.

Client-Side Caching

- Decentralized caching: Client-side caching is performed at the client level, reducing the load on both the API Gateway and origin servers.

- Greater control: Client-side caching provides more control over caching behavior, cache invalidation, and cache management.

- Increased complexity: Implementing client-side caching can be more complex than setting up AWS API Gateway Caching, requiring additional development and testing efforts.

- Variable performance: Client-side caching performance can vary based on the client’s capabilities, network conditions, and caching configuration.
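
To illustrate the extra control and the extra work that client-side caching involves, here is a minimal sketch of an in-process TTL cache in Python. The class and method names are hypothetical, and a production client would also need size limits and thread safety.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Tiny in-process cache that expires entries after a fixed TTL."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the cache can be tested without sleeping
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        """Return the cached value for `key`, calling `fetch` only when needed."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # still fresh: no call to the API
        value = fetch()
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Drop one entry, for example after the client writes new data."""
        self._entries.pop(key, None)
```

The client decides exactly when an entry is dropped, which is the control the comparison above refers to. The cost is that every client must implement and test this logic itself.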

Real-World Examples of Successful AWS API Gateway Caching Implementations

AWS API Gateway Caching has been successfully implemented in various real-world scenarios, leading to improved performance and cost savings. Here are a few examples:

Example 1: Content Delivery Network (CDN) Integration

A media company integrated AWS API Gateway Caching with Amazon CloudFront, a fast content delivery network service, to cache and serve media assets. This setup significantly reduced latency and resource usage, improving the user experience and reducing costs.

Example 2: Microservices Architecture

An e-commerce platform adopted AWS API Gateway Caching to cache responses from various microservices, reducing the load on origin servers and improving overall performance. This resulted in faster response times, increased system reliability, and cost savings.

Example 3: Mobile Application Backend

A mobile app developer used AWS API Gateway Caching to cache API responses for their mobile application backend. This approach reduced the number of API calls and minimized the load on backend servers, leading to improved app performance and reduced costs.

Potential Limitations and Challenges of AWS API Gateway Caching

While AWS API Gateway Caching offers numerous benefits, it is essential to be aware of its potential limitations and challenges. Addressing these issues can help ensure optimal performance and cost savings.

Cache Invalidation

Cache invalidation is the process of removing or updating cached data when the underlying data changes. Inadequate cache invalidation can lead to stale data being served, causing inconsistencies and user frustration. Implementing cache invalidation patterns, such as versioning or invalidation requests, can help address this challenge.
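
As a sketch of the invalidation-request pattern: API Gateway lets a client refresh a single cache entry by sending the `Cache-Control: max-age=0` header. If the stage requires authorization for invalidation, the caller also needs the `execute-api:InvalidateCache` permission. The Python helpers below are illustrative, and the second one assumes a boto3 `apigateway` client is passed in.

```python
import urllib.request


def build_invalidating_request(url: str) -> urllib.request.Request:
    """Build a GET request that asks API Gateway to refresh the cached entry."""
    return urllib.request.Request(
        url,
        headers={"Cache-Control": "max-age=0"},
        method="GET",
    )


def flush_stage_cache(client, rest_api_id: str, stage_name: str) -> None:
    """Drop every cached entry on a stage.

    `client` is expected to be boto3.client("apigateway").
    """
    client.flush_stage_cache(restApiId=rest_api_id, stageName=stage_name)
```

Sending the request with `urllib.request.urlopen(build_invalidating_request(url))` would fetch a fresh response from the backend and replace the cached one. Flushing the whole stage is the blunt alternative, and it sends all traffic to the backend until the cache fills again.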

Stale Data

Stale data can occur when cached data is not invalidated promptly or when the TTL value is set too high. Ensuring appropriate TTL values and monitoring cache usage can help minimize the risk of serving stale data.

Cache Size Management

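Effective cache size management is crucial to prevent performance degradation. When the cache is too small for the set of responses in active use, entries are evicted before their TTL expires and the hit rate falls. A cache that is too large adds cost without benefit. Regularly monitoring cache usage and adjusting the cache size helps maintain cache performance.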
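
A rough sizing estimate can serve as a starting point before monitoring data is available. The Python sketch below picks the smallest offered cache size that fits an estimated working set. The headroom factor is an arbitrary assumption, and per-entry overhead inside the cache is not modeled.

```python
# Cache sizes offered by API Gateway, in GB.
CACHE_SIZES_GB = (0.5, 1.6, 6.1, 13.5, 28.4, 58.2, 118.0, 237.0)


def smallest_cache_size_gb(distinct_responses: int, avg_response_kb: float,
                           headroom: float = 1.5):
    """Return the smallest offered size that fits the estimated working set.

    Returns None when even the largest size is too small.
    """
    needed_gb = distinct_responses * avg_response_kb * headroom / (1024 * 1024)
    for size in CACHE_SIZES_GB:
        if size >= needed_gb:
            return size
    return None
```

For example, one million distinct responses of about 4 KB each need roughly 5.7 GB with the default headroom, so the function returns 6.1. Treat the result as a first guess and confirm it against the observed hit rate.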

Strategies for Overcoming AWS API Gateway Caching Limitations

To address the potential limitations and challenges associated with AWS API Gateway Caching, consider implementing the following strategies:

Implement Cache Invalidation Patterns

Cache invalidation patterns, such as versioning or invalidation requests, can help ensure that stale data is not served. By invalidating or updating cached data when the underlying data changes, you can maintain data consistency and improve user experience.

Monitor Cache Usage

Regularly monitoring cache usage, in particular the ratio of cache hits to cache misses, can help identify potential issues such as insufficient cache size or ineffective cache keys. Adjusting cache settings based on these insights can help maintain performance and cost efficiency.
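
One way to monitor the cache is to compute a hit ratio from the CloudWatch metrics `CacheHitCount` and `CacheMissCount` in the `AWS/ApiGateway` namespace. The Python sketch below builds the query arguments and computes the ratio from returned datapoints. It assumes the boto3 CloudWatch client's `get_metric_statistics` call, and the function names are illustrative.

```python
from datetime import datetime, timedelta, timezone


def cache_metric_query(api_name: str, stage: str, metric: str, hours: int = 1) -> dict:
    """Build keyword arguments for CloudWatch get_metric_statistics."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/ApiGateway",
        "MetricName": metric,  # "CacheHitCount" or "CacheMissCount"
        "Dimensions": [
            {"Name": "ApiName", "Value": api_name},
            {"Name": "Stage", "Value": stage},
        ],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 300,  # five-minute buckets
        "Statistics": ["Sum"],
    }


def hit_ratio(hit_datapoints: list, miss_datapoints: list) -> float:
    """Compute hits / (hits + misses) from lists of datapoints with a "Sum" field."""
    hits = sum(point["Sum"] for point in hit_datapoints)
    misses = sum(point["Sum"] for point in miss_datapoints)
    total = hits + misses
    return hits / total if total else 0.0
```

With boto3 available, the datapoints would come from `boto3.client("cloudwatch").get_metric_statistics(**cache_metric_query(...))["Datapoints"]`. A ratio that stays low usually points to a TTL that is too short, keys that are too specific, or a cache that is too small.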

Leverage AWS Services

AWS offers several services that can help enhance caching capabilities and address limitations. For instance, Amazon CloudFront, a content delivery network, can be placed in front of API Gateway to cache responses at edge locations closer to users. Similarly, Amazon ElastiCache, an in-memory data store, can be used inside the backend to offload read-intensive database workloads, improving application performance and scalability.

Fine-Tune Cache Keys

Specifying cache keys that accurately represent unique API responses ensures that the right data is served. A key with too few parameters returns one request's response for a different request, while a key with too many parameters fragments the cache and lowers the hit rate. Experimenting with different cache key configurations can help find the best balance between cache hits and cache misses.
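
In the API Gateway API, cache keys are listed as request-parameter names on the method's integration, in the `cacheKeyParameters` field. The Python sketch below builds such names from plain parameter names. The helper is hypothetical, and the parameter names in the usage note are examples only.

```python
def cache_key_parameters(query=(), headers=(), path=()) -> list:
    """Return request-parameter names in the form API Gateway uses for cache keys."""
    return (
        [f"method.request.path.{name}" for name in path]
        + [f"method.request.querystring.{name}" for name in query]
        + [f"method.request.header.{name}" for name in headers]
    )
```

For instance, `cache_key_parameters(query=["page"], headers=["Accept-Language"], path=["petId"])` yields one name per parameter, which could then be supplied as `cacheKeyParameters` when defining the integration. Each listed parameter must also be declared as a request parameter on the method.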