Understanding the Power of In-Memory Caching

In-memory caching is a core technique for improving application performance. It stores frequently accessed data in a computer’s RAM, which can be read orders of magnitude faster than hard drives or solid-state drives. Serving repeated requests from memory instead of from slower storage or a backing database cuts latency and makes applications noticeably more responsive, which directly improves the user experience.

The benefits of in-memory caching go beyond raw speed. Caching also reduces the load on databases. When an application repeatedly requests the same data, the database can become a bottleneck. A cache absorbs those repeated reads and serves them directly from memory, which leaves the database with capacity for writes and complex queries. This matters most for applications with high traffic or heavy, repetitive read patterns. For these workloads, a managed service such as Amazon ElastiCache for Redis is a strong fit. It can scale out as traffic grows and supports replication with automatic failover for high availability.
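The read path described above is commonly called the cache-aside pattern, and it can be sketched in Python. `get_product` checks the cache first and queries the database only on a miss. It then stores the result with a time to live (TTL).

So that the sketch runs without a cluster, `InMemoryCache` is a hypothetical stand-in that mimics the `get`/`set(..., ex=...)` shape of a `redis-py` client. The `fake_db` function, the `product:` key prefix, and the 60-second TTL are also illustrative assumptions.

```python
import json
import time


class InMemoryCache:
    """Hypothetical stand-in mimicking redis-py's get/set(ex=...) so the sketch runs locally."""

    def __init__(self):
        self._data = {}  # key -> (value, expiry timestamp or None)

    def get(self, key):
        value, expires_at = self._data.get(key, (None, None))
        if expires_at is not None and time.monotonic() >= expires_at:
            del self._data[key]  # expired entries behave like a miss
            return None
        return value

    def set(self, key, value, ex=None):
        expires_at = time.monotonic() + ex if ex else None
        self._data[key] = (value, expires_at)
        return True


def get_product(product_id, cache, load_from_db, ttl_seconds=60):
    """Cache-aside read: serve from the cache, fall back to the database on a miss."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)  # hit: no database round trip
    product = load_from_db(product_id)  # miss: query the database once
    cache.set(key, json.dumps(product), ex=ttl_seconds)
    return product


db_calls = []


def fake_db(product_id):
    """Hypothetical database lookup that records how often it is called."""
    db_calls.append(product_id)
    return {"id": product_id, "name": f"Product {product_id}"}


cache = InMemoryCache()
for _ in range(3):
    get_product(42, cache, fake_db)
print(len(db_calls))  # 1: only the first request reached the database
```

Against a real cluster, `cache` would be a `redis.Redis` client. `json.loads` accepts both the `str` and `bytes` values that client can return.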

In short, in-memory data management has become a practical necessity for modern applications, because users expect instant results and seamless experiences. Amazon ElastiCache for Redis meets that expectation by providing the speed that demanding workloads require, while AWS handles the operational work of running Redis. Used strategically, it helps developers build applications that are faster, more scalable, and more resilient. For businesses, that means better performance, higher user satisfaction, and a competitive edge. This makes Amazon ElastiCache for Redis a cornerstone of many modern application architectures.

ElastiCache for Redis: A Deep Dive

Amazon ElastiCache for Redis is a fully managed, in-memory data store and caching service provided by Amazon Web Services (AWS). It simplifies deploying, operating, and scaling a Redis environment in the cloud. By taking on the operational overhead of managing Redis infrastructure, it lets developers focus on building high-performance applications. It is designed for use cases that need very fast, low-latency data access.

Key features of Amazon ElastiCache for Redis include scalability, security, and ease of use. Scalability comes from features like cluster mode, which shards data across multiple Redis nodes and so increases both read and write capacity. For security, the service supports Redis AUTH, encryption in transit and at rest, and integration with AWS Identity and Access Management (IAM). You can set up and manage clusters through the AWS Management Console, the AWS CLI, or the AWS SDKs. Amazon ElastiCache for Redis excels in scenarios such as session management, caching frequently accessed data, real-time analytics, gaming leaderboards, and message brokering.
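Cluster mode decides which shard owns a key by hashing the key into one of 16,384 hash slots. The slot is the CRC16 (XMODEM variant) of the key, modulo 16384. If the key contains a non-empty `{...}` hash tag, only the tag is hashed.

The sketch below reimplements that rule in plain Python to show how related keys can be forced onto the same shard. The function names are illustrative; in practice, a cluster-aware client computes slots for you.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: str) -> int:
    """Map a key to one of the 16,384 cluster hash slots, honoring {hash tags}."""
    data = key.encode("utf-8")
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end > start + 1:  # only a non-empty tag between the first { and the next } counts
            data = data[start + 1:end]
    return crc16_xmodem(data) % 16384


print(hex(crc16_xmodem(b"123456789")))  # 0x31c3, the check value quoted in the Redis Cluster spec
print(key_hash_slot("{user1000}.following") == key_hash_slot("{user1000}.followers"))  # True
```

Because both keys share the `{user1000}` tag, they land in the same slot. Multi-key commands on them can therefore run against a single shard.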

Amazon ElastiCache for Redis supports a rich set of Redis data structures, including strings, lists, sets, sorted sets, hashes, and streams. These structures let developers implement complex caching strategies and data models efficiently:

- Lists can serve as queues.
- Sorted sets are a natural fit for leaderboards.
- Hashes store objects as field-value pairs.
- Streams support real-time data ingestion and processing.

High performance combined with flexible data structures makes Amazon ElastiCache for Redis a powerful tool for speeding up modern applications. Businesses can use it to improve user experience and reduce database load, which can also lower costs and increase efficiency.
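The data-structure uses above map onto a handful of `redis-py` client methods:

- A list used as a FIFO queue (`lpush` plus `rpop`).
- A sorted-set leaderboard (`zincrby`, `zrevrange`).
- A hash holding an object (`hset` with `mapping=`, available in recent `redis-py` versions).
- A stream append (`xadd`).

The helper and key names are hypothetical. `CallRecorder` is a stand-in that records calls instead of contacting Redis, so the snippet runs without a cluster. With a real cluster, you would pass a `redis.Redis` client instead.

```python
import json


def enqueue_job(client, queue, job):
    # LPUSH adds to the head of the list; RPOP takes from the tail, so jobs come out FIFO.
    return client.lpush(queue, json.dumps(job))


def dequeue_job(client, queue):
    raw = client.rpop(queue)
    return json.loads(raw) if raw is not None else None


def add_score(client, board, player, points):
    # ZINCRBY creates the member if needed and adds points to its score.
    return client.zincrby(board, points, player)


def top_players(client, board, n=10):
    # Highest scores first, returned with their scores.
    return client.zrevrange(board, 0, n - 1, withscores=True)


def save_profile(client, user_id, profile):
    # A hash stores an object as field/value pairs under one key.
    return client.hset(f"user:{user_id}", mapping=profile)


def record_event(client, stream, event):
    # XADD appends an entry; Redis generates the entry ID by default.
    return client.xadd(stream, event)


class CallRecorder:
    """Hypothetical stand-in that records method calls instead of talking to Redis."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        def record(*args, **kwargs):
            self.calls.append((name, args, kwargs))
            return None
        return record


recorder = CallRecorder()
add_score(recorder, "leaderboard", "alice", 50)
top_players(recorder, "leaderboard", n=3)
save_profile(recorder, 7, {"name": "Alice", "plan": "pro"})
print([name for name, _, _ in recorder.calls])  # ['zincrby', 'zrevrange', 'hset']
```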

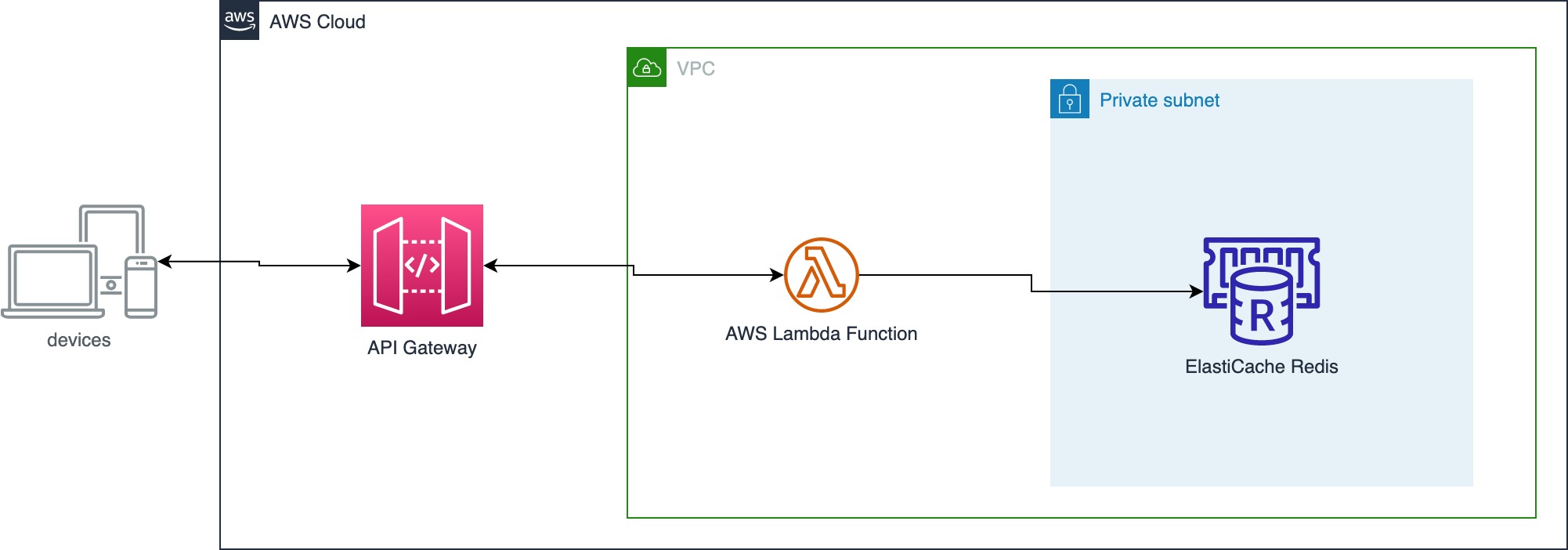

Boosting Application Speed: How to Implement ElastiCache with Redis

Implementing Amazon ElastiCache for Redis starts with creating a cluster:

1. In the AWS Management Console, open the Amazon ElastiCache service.
2. Choose Redis as the engine.
3. Specify the node type, the number of nodes (including any read replicas), and other cluster settings based on your application’s needs.

Size the nodes according to your memory requirements and expected traffic. Undersized nodes evict data or run hot, while oversized nodes waste money. Configuration also includes a subnet group. The subnet group defines the VPC subnets where your ElastiCache nodes will run, and therefore which parts of your AWS network can reach them.
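The console steps above can also be scripted. This sketch builds request parameters for two boto3 ElastiCache client calls, `create_cache_subnet_group` and `create_replication_group`. The resulting cluster has:

- One primary plus read replicas.
- Automatic failover when replicas exist.
- Encryption in transit and at rest.

The group names, subnet and security group IDs, and the `cache.r6g.large` node type are placeholder assumptions. boto3 is imported only inside the hypothetical `create_cluster` helper, so the request builders run without AWS credentials.

```python
def subnet_group_request(name, subnet_ids):
    """Parameters for ElastiCache create_cache_subnet_group."""
    return {
        "CacheSubnetGroupName": name,
        "CacheSubnetGroupDescription": f"Subnets for {name}",
        "SubnetIds": list(subnet_ids),
    }


def replication_group_request(group_id, subnet_group, security_group_ids,
                              node_type="cache.r6g.large", replicas=1):
    """Parameters for ElastiCache create_replication_group (cluster mode disabled)."""
    return {
        "ReplicationGroupId": group_id,
        "ReplicationGroupDescription": f"Redis cache for {group_id}",
        "Engine": "redis",
        "CacheNodeType": node_type,  # placeholder node type; size it for your workload
        "NumCacheClusters": 1 + replicas,  # one primary plus read replicas
        "AutomaticFailoverEnabled": replicas > 0,  # failover needs at least one replica
        "CacheSubnetGroupName": subnet_group,
        "SecurityGroupIds": list(security_group_ids),
        "Port": 6379,
        "TransitEncryptionEnabled": True,
        "AtRestEncryptionEnabled": True,
    }


def create_cluster(subnet_req, group_req):
    """Send both requests; requires boto3 and AWS credentials."""
    import boto3

    client = boto3.client("elasticache")
    client.create_cache_subnet_group(**subnet_req)
    return client.create_replication_group(**group_req)


# Placeholder IDs for illustration only.
subnets = subnet_group_request("demo-cache-subnets", ["subnet-aaaa1111", "subnet-bbbb2222"])
group = replication_group_request("demo-cache", "demo-cache-subnets", ["sg-0123456789abcdef0"])
print(group["NumCacheClusters"], group["AutomaticFailoverEnabled"])  # 2 True
```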

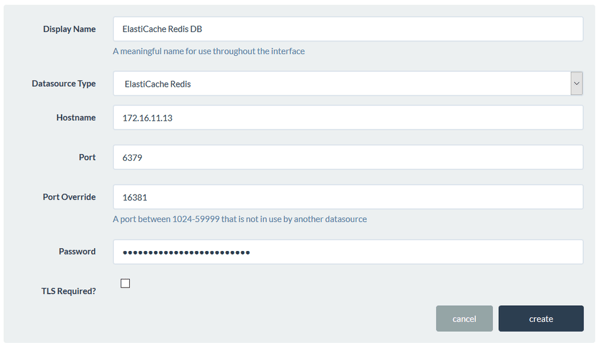

Configuring security groups is crucial for controlling access to your Amazon ElastiCache for Redis cluster. Security groups act as virtual firewalls. They specify which sources can connect to your Redis nodes: IP address ranges (CIDR blocks) or other security groups. Create a security group that allows inbound traffic on the Redis port (6379 by default) only from your application servers. Referencing the application servers’ own security group is usually easier to maintain than listing their IP addresses. Restricting access to authorized sources strengthens the security of your caching infrastructure.

Once the cluster is created and its security groups are configured, you can connect your application using the cluster’s endpoint, which appears in the ElastiCache console. Client libraries are available for many languages, including Python, Java, Node.js, and PHP, and they handle the details of communicating with your Redis cluster.
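A least-privilege ingress rule for the Redis port can be expressed as parameters for the boto3 EC2 client’s `authorize_security_group_ingress` call. Instead of a CIDR range, this rule admits traffic only from the application servers’ security group. Both security group IDs are placeholders, and boto3 is imported only when the hypothetical `apply_rule` helper runs.

```python
REDIS_PORT = 6379  # ElastiCache for Redis default port


def redis_ingress_rule(cache_sg_id, app_sg_id, port=REDIS_PORT):
    """Allow TCP on the Redis port into cache_sg_id, only from members of app_sg_id."""
    return {
        "GroupId": cache_sg_id,
        "IpPermissions": [
            {
                "IpProtocol": "tcp",
                "FromPort": port,
                "ToPort": port,
                "UserIdGroupPairs": [
                    {"GroupId": app_sg_id, "Description": "App servers to Redis"}
                ],
            }
        ],
    }


def apply_rule(rule):
    """Requires boto3 and AWS credentials."""
    import boto3

    return boto3.client("ec2").authorize_security_group_ingress(**rule)


# Placeholder security group IDs.
rule = redis_ingress_rule("sg-0cache000000000000", "sg-0app00000000000000")
```

Because the rule references a security group rather than addresses, new application servers gain access as soon as they join that group, with no rule changes.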

To connect your application, install the client library for your language and point it at the cluster’s endpoint. In Python, for example, you might use the `redis-py` library. You would create a Redis client with the endpoint and port, and enable TLS if the cluster has encryption in transit turned on. Once connected, use the client’s methods for common caching operations such as setting values, retrieving values, and expiring keys.

A caching strategy starts with identifying frequently accessed data and storing it in Amazon ElastiCache for Redis, which reduces database load and improves response times. Plan for cache invalidation as well: give keys a time to live (TTL), and delete or overwrite cached entries when the underlying data changes, so readers do not see stale values. By following these steps, you can use Amazon ElastiCache for Redis to improve the performance of your applications.
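Here is a minimal `redis-py` connection sketch. It assumes a cluster with encryption in transit enabled (hence `ssl=True`) and an optional AUTH token. The endpoint string is a placeholder for the one shown in the ElastiCache console, and the `user:` key scheme and 300-second TTL are illustrative.

`redis` is imported only inside `connect`, so the helpers load without the package installed. After a write to the database, `invalidate_user` deletes the cached copy, so the next read repopulates it.

```python
import json

# Placeholder: copy the real primary endpoint from the ElastiCache console.
ENDPOINT = "my-cluster.xxxxxx.cache.amazonaws.com"


def redis_client_kwargs(host, port=6379, use_tls=True, password=None):
    """Keyword arguments for redis.Redis(...)."""
    kwargs = {"host": host, "port": port, "decode_responses": True, "socket_timeout": 2.0}
    if use_tls:
        kwargs["ssl"] = True  # needed when encryption in transit is enabled
    if password:
        kwargs["password"] = password  # the cluster's AUTH token
    return kwargs


def connect(host, **options):
    import redis  # pip install redis

    return redis.Redis(**redis_client_kwargs(host, **options))


def cache_user(client, user_id, profile, ttl_seconds=300):
    # SET with EX stores the value and its expiry in one command.
    client.set(f"user:{user_id}", json.dumps(profile), ex=ttl_seconds)


def load_user(client, user_id):
    raw = client.get(f"user:{user_id}")
    return json.loads(raw) if raw is not None else None


def invalidate_user(client, user_id):
    # Call after updating the user in the database so stale data is not served.
    client.delete(f"user:{user_id}")


# Usage against a real cluster (assumes a hypothetical REDIS_AUTH_TOKEN environment variable):
# client = connect(ENDPOINT, password=os.environ["REDIS_AUTH_TOKEN"])
# cache_user(client, 7, {"name": "Alice"})
```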

Redis vs. Memcached: Choosing the Right Caching Solution

When selecting an in-memory caching solution within Amazon ElastiCache, a key decision is choosing between Redis and Memcached. Both are powerful tools, but they serve different needs, and understanding their differences is key to optimizing your application’s performance. ElastiCache for Memcached is designed for simplicity and raw speed. It is a distributed, in-memory key-value store that excels at caching simple objects, which makes it a good choice where high-volume, low-latency caching is the main requirement. Memcached is multi-threaded, so a single node can handle many requests concurrently. It fits applications that need basic caching without complex data structures.

ElastiCache for Redis, on the other hand, offers a richer feature set. Beyond simple key-value caching, Redis supports data structures such as lists, sets, hashes, and sorted sets, which let it handle more complex caching scenarios. It also provides Pub/Sub messaging and transactions, making it suitable for applications that process data in real time. In addition, Redis offers persistence options, such as the snapshots ElastiCache uses for backup and restore, which help when data durability is a concern.

The choice between Redis and Memcached usually depends on how complex your application’s data is and which features you need. For basic caching, Memcached provides speed and simplicity. For complex data structures and advanced features, Redis is often the better choice.

Consider your application’s specific needs carefully. Evaluate the data structures you will cache, and assess how much you depend on features like persistence and Pub/Sub. For simple object caching where speed is critical, ElastiCache for Memcached remains a strong contender. If you need advanced data structures, data persistence, or complex operations, Amazon ElastiCache for Redis provides more capability and can support more sophisticated application architectures. Ultimately, choose by weighing speed, simplicity, features, and cost against your specific use case. Understanding the strengths and weaknesses of each engine lets you make an informed decision that improves your application’s performance and scalability.

Optimizing ElastiCache Performance: Best Practices for Redis

To get the most out of Amazon ElastiCache for Redis, you need to optimize it. Memory management comes first: Redis keeps its dataset in memory, so how efficiently that memory is used directly affects performance. In self-managed Redis, you cap memory with the `maxmemory` setting. In ElastiCache, `maxmemory` is determined by the node type. You control how much of it is held back for overhead, such as backups and failover, with the `reserved-memory-percent` parameter in a parameter group. When memory usage reaches the limit, Redis applies the eviction policy configured by `maxmemory-policy` to remove keys. Choosing the right eviction policy is therefore essential for maintaining performance.
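ElastiCache exposes these memory settings through parameter groups rather than the `CONFIG SET` command, which it restricts on managed nodes. The sketch below builds parameters for boto3’s `modify_cache_parameter_group`, setting `maxmemory-policy` and `reserved-memory-percent`.

The group name, the `allkeys-lfu` choice, and the 25 percent reservation are assumptions to adapt. The group must be a custom parameter group associated with your cluster, because default parameter groups cannot be modified.

```python
# Eviction policies accepted by Redis's maxmemory-policy setting.
EVICTION_POLICIES = {
    "noeviction",
    "allkeys-lru", "allkeys-lfu", "allkeys-random",
    "volatile-lru", "volatile-lfu", "volatile-random", "volatile-ttl",
}


def memory_parameters_request(group_name, policy, reserved_memory_percent=25):
    """Parameters for ElastiCache modify_cache_parameter_group."""
    if policy not in EVICTION_POLICIES:
        raise ValueError(f"unknown eviction policy: {policy}")
    return {
        "CacheParameterGroupName": group_name,
        "ParameterNameValues": [
            {"ParameterName": "maxmemory-policy", "ParameterValue": policy},
            # Memory held back from the dataset for overhead such as backups and failover.
            {"ParameterName": "reserved-memory-percent",
             "ParameterValue": str(reserved_memory_percent)},
        ],
    }


def apply_parameters(request):
    """Requires boto3, AWS credentials, and an existing custom parameter group."""
    import boto3

    return boto3.client("elasticache").modify_cache_parameter_group(**request)


# Hypothetical parameter group name.
request = memory_parameters_request("demo-redis-params", "allkeys-lfu")
```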

Redis builds its eviction policies on three strategies:

- Least Recently Used (LRU) evicts the keys that have gone longest without being accessed.
- Least Frequently Used (LFU) evicts the keys that are accessed least often.
- Random eviction picks keys at random.

Each strategy comes in an `allkeys-` variant that considers every key and a `volatile-` variant that considers only keys with an expiration set. There is also `volatile-ttl`, which evicts the keys closest to expiring, and `noeviction`, which returns errors on writes instead of evicting. Redis implements LRU and LFU approximately, by sampling keys. The optimal policy depends on the application’s data access patterns.

Monitoring is the other vital part of optimizing Amazon ElastiCache for Redis performance. Amazon CloudWatch provides metrics such as CPU utilization, memory usage, and cache hit rate, and you should watch them closely to identify bottlenecks. A low cache hit rate means many requests miss the cache and fall through to the database. Increasing the cache size, adjusting the eviction policy, or revisiting which data you cache and how long it lives can improve the hit rate.
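To see why the access pattern matters, the sketch below replays a request trace against a small cache under exact LRU and exact LFU eviction, then reports the hit rate. It is a teaching model, not Redis’s implementation: Redis approximates both policies by sampling, and its LFU counters decay over time. The trace, with one hot key interleaved with one-off keys, is made up for illustration.

```python
from collections import Counter, OrderedDict


def simulate_hit_rate(trace, capacity, policy):
    """Replay `trace` against a cache holding `capacity` keys; return the hit rate."""
    if policy not in ("lru", "lfu"):
        raise ValueError("policy must be 'lru' or 'lfu'")
    cache = OrderedDict()  # iteration order runs from least to most recently used
    frequency = Counter()  # access counts, used by LFU
    hits = 0
    for key in trace:
        frequency[key] += 1
        if key in cache:
            hits += 1
            cache.move_to_end(key)  # mark as most recently used
            continue
        if len(cache) >= capacity:
            if policy == "lru":
                cache.popitem(last=False)  # evict the least recently used key
            else:
                # Evict the least frequently used key; ties go to the least recently used.
                victim = min(cache, key=lambda k: frequency[k])
                del cache[victim]
        cache[key] = None
    return hits / len(trace)


# One hot key ("a") interleaved with keys that are read only once.
trace = ["a", "b", "a", "c", "d", "a", "e", "f", "a"]
print(simulate_hit_rate(trace, capacity=2, policy="lru"))
print(simulate_hit_rate(trace, capacity=2, policy="lfu"))
```

On this trace, LRU evicts the hot key whenever two one-off keys arrive in a row, giving 1 hit in 9 requests. LFU keeps the hot key and gets 3 hits in 9. A workload where recency predicts reuse better than frequency can favor LRU instead.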

Scaling is also crucial for handling increased workloads, and Amazon ElastiCache for Redis supports both vertical and horizontal scaling. Vertical scaling moves to a larger node type. Horizontal scaling adds nodes: read replicas spread read traffic, and with cluster mode enabled, adding shards distributes data across more primaries, which scales both reads and writes.

You also need to find and fix performance bottlenecks efficiently. Because Redis executes commands on a single thread, one slow command delays every command queued behind it. The Redis `SLOWLOG` command lists commands whose execution time exceeded a threshold, set by the `slowlog-log-slower-than` parameter in microseconds. Redis has no secondary indexes, so fixing a slow command usually means changing the access pattern. For example, replace O(N) commands such as `KEYS` with `SCAN`, split very large keys, or precompute results in a structure designed for the lookup. Together, these practices help Amazon ElastiCache for Redis deployments deliver faster response times and a better user experience.
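With `redis-py`, `slowlog_get(num)` returns recent slow log entries as dictionaries that include a `duration` in microseconds and the `command` that ran. The hypothetical helper below ranks those entries to surface the worst offenders. It assumes that entry shape and accepts the command as either `str` or `bytes`. Here it runs on hand-written sample entries rather than real cluster output.

```python
def slowest_commands(entries, top=3):
    """Return (command name, duration in ms) for the `top` slowest slow-log entries."""
    ranked = sorted(entries, key=lambda entry: entry["duration"], reverse=True)[:top]
    summary = []
    for entry in ranked:
        command = entry["command"]
        if isinstance(command, bytes):
            command = command.decode("utf-8", errors="replace")
        name = command.split(" ", 1)[0].upper()
        summary.append((name, entry["duration"] / 1000))  # microseconds -> milliseconds
    return summary


# Hand-written sample entries in the shape redis-py's slowlog_get returns.
sample = [
    {"id": 3, "start_time": 1700000000, "duration": 15000, "command": "KEYS *"},
    {"id": 2, "start_time": 1699999990, "duration": 200, "command": b"GET user:1"},
    {"id": 1, "start_time": 1699999980, "duration": 4000, "command": "HGETALL catalog:all"},
]
print(slowest_commands(sample, top=2))  # [('KEYS', 15.0), ('HGETALL', 4.0)]

# Against a real cluster: slowest_commands(client.slowlog_get(128))
```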

Redis Security Considerations with ElastiCache

Security is paramount when deploying applications, and using Amazon ElastiCache for Redis is no exception. A robust security strategy is crucial to protect sensitive data and maintain the integrity of your application. Neglecting security best practices can expose your system to vulnerabilities and potential attacks. Therefore, understanding and implementing appropriate security measures is non-negotiable.

One fundamental security measure is Redis AUTH, which requires clients to authenticate before they can execute commands. Setting a strong, unique AUTH token protects against unauthorized access. Note that ElastiCache allows an AUTH token only on clusters with encryption in transit enabled.

Another vital measure is configuring security groups within your Virtual Private Cloud (VPC). Security groups act as virtual firewalls that control inbound and outbound traffic to your Amazon ElastiCache for Redis cluster. Rules that permit only necessary traffic from authorized sources significantly reduce the attack surface.

Encrypting data both in transit and at rest is also highly recommended. For data in transit, enable TLS (Transport Layer Security) to encrypt communication between your application and the Redis cluster. For data at rest, enable encryption at rest, which uses keys managed in AWS Key Management Service (KMS), either AWS-managed or customer-managed. This protects data stored on disk and in backups, reducing the risk of unauthorized access if storage media are compromised.
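The measures above can be checked programmatically. This sketch inspects replication group records from the boto3 `describe_replication_groups` response. Those records include `TransitEncryptionEnabled`, `AtRestEncryptionEnabled`, and `AuthTokenEnabled` flags, and the sketch lists any setting that is off.

It is a simplified audit under stated assumptions. It does not inspect security group rules, and it would flag a cluster that relies on RBAC user groups instead of an AUTH token. The sample record is hand-written.

```python
REQUIRED_SETTINGS = {
    "TransitEncryptionEnabled": "encryption in transit (TLS) is disabled",
    "AtRestEncryptionEnabled": "encryption at rest is disabled",
    "AuthTokenEnabled": "no Redis AUTH token is configured",
}


def security_findings(replication_group):
    """Return a message for each required security setting that is off or missing."""
    return [
        message
        for field, message in REQUIRED_SETTINGS.items()
        if not replication_group.get(field, False)
    ]


def audit_cluster(group_id):
    """Fetch the replication group and report findings; requires boto3 and AWS credentials."""
    import boto3

    response = boto3.client("elasticache").describe_replication_groups(ReplicationGroupId=group_id)
    return {
        group["ReplicationGroupId"]: security_findings(group)
        for group in response["ReplicationGroups"]
    }


# Hand-written sample record with only TLS enabled.
sample_group = {"ReplicationGroupId": "demo-cache", "TransitEncryptionEnabled": True}
print(security_findings(sample_group))
```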

Beyond these core measures, stay informed about potential security vulnerabilities and address them proactively. Regularly review security advisories and apply the service updates that ElastiCache makes available for your clusters. Use role-based access control (RBAC), available for Redis 6 and later, to restrict each user to the commands and keys required for its tasks. This principle of least privilege limits the damage a compromised account can do. Audit your security configuration regularly to find and fix weaknesses.

Security is an ongoing process that requires continuous monitoring and adaptation to emerging threats. Under the AWS shared responsibility model, AWS secures the underlying infrastructure, while you are responsible for how your clusters are configured and accessed. Diligent security practices on your side are what make an Amazon ElastiCache for Redis deployment secure, resilient, and trustworthy.
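In ElastiCache, RBAC is configured by creating users with Redis ACL access strings and attaching them to user groups. The sketch builds parameters for boto3’s `create_user` with a read-only access string limited to one key prefix:

- `on` enables the user.
- `~prefix*` restricts which keys the user can touch.
- `-@all +@read` allows only read commands.

The user ID, key prefix, and example password are hypothetical, and the 16-character minimum is checked as an assumption about ElastiCache’s password rules. The user still has to be added to a user group associated with the cluster.

```python
def read_only_user_request(user_id, password, key_prefix):
    """Parameters for ElastiCache create_user: read-only access to keys under key_prefix."""
    if len(password) < 16:
        # Assumed minimum length for ElastiCache user passwords.
        raise ValueError("ElastiCache user passwords must be at least 16 characters")
    return {
        "UserId": user_id,
        "UserName": user_id,
        "Engine": "redis",
        "Passwords": [password],
        # on: user enabled; ~prefix*: key pattern; -@all +@read: read commands only
        "AccessString": f"on ~{key_prefix}* -@all +@read",
    }


def create_user(request):
    """Requires boto3 and AWS credentials."""
    import boto3

    return boto3.client("elasticache").create_user(**request)


# Hypothetical user; load real passwords from a secrets store, never from source code.
request = read_only_user_request("reporting-reader", "example-password-1234", "report:")
print(request["AccessString"])  # on ~report:* -@all +@read
```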

Monitoring and Maintaining Your Redis Cluster

Effective monitoring and maintenance are crucial for keeping your Amazon ElastiCache for Redis cluster healthy and performing well. Without consistent oversight, issues can go unnoticed until they cause performance degradation or even outages. Amazon CloudWatch lets you track key metrics and understand how your cluster behaves. Reviewing these metrics regularly lets you address emerging problems proactively and keep the environment stable.

Key metrics to monitor for your Amazon ElastiCache for Redis cluster include:

- **CPU utilization** (`CPUUtilization`, plus `EngineCPUUtilization` for the Redis engine thread). High values may mean the cluster is under heavy load and needs scaling.
- **Memory usage** (`DatabaseMemoryUsagePercentage`). A node that runs out of memory either evicts keys or rejects writes, depending on its eviction policy, which can mean lost data or service interruptions.
- **Cache hit rate** (`CacheHitRate`). This shows how effectively your cache serves requests; a low value suggests adjusting your caching strategy or increasing the size of your cache.
- **Other important metrics**: connection counts (`CurrConnections`), replication lag (`ReplicationLag`, when replicas are present), and evictions (`Evictions`).

Establishing baselines for these metrics lets you spot anomalies quickly and take corrective action. CloudWatch dashboards and alarms can notify you when a metric crosses a predefined threshold, so you can address issues before they affect your application’s performance.
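As a sketch of alerting on one of these metrics, the parameters below describe a boto3 CloudWatch `put_metric_alarm` request. The alarm fires when `DatabaseMemoryUsagePercentage` averages above 80 percent for three consecutive five-minute periods.

The node ID, SNS topic ARN, and thresholds are assumptions to tune against your own baselines. The `hit_rate` helper shows how a hit rate is derived from `CacheHits` and `CacheMisses` counts.

```python
def memory_alarm_request(cache_cluster_id, sns_topic_arn, threshold_percent=80.0):
    """Parameters for CloudWatch put_metric_alarm on an ElastiCache node's memory usage."""
    return {
        "AlarmName": f"{cache_cluster_id}-memory-high",
        "Namespace": "AWS/ElastiCache",
        "MetricName": "DatabaseMemoryUsagePercentage",
        "Dimensions": [{"Name": "CacheClusterId", "Value": cache_cluster_id}],
        "Statistic": "Average",
        "Period": 300,  # seconds per evaluation period
        "EvaluationPeriods": 3,  # must breach for 3 periods in a row
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


def hit_rate(cache_hits, cache_misses):
    """Fraction of lookups served from the cache."""
    total = cache_hits + cache_misses
    return cache_hits / total if total else 0.0


def create_alarm(request):
    """Requires boto3 and AWS credentials."""
    import boto3

    return boto3.client("cloudwatch").put_metric_alarm(**request)


# Placeholder node ID and SNS topic ARN.
alarm = memory_alarm_request("demo-cache-001", "arn:aws:sns:us-east-1:123456789012:cache-alerts")
print(hit_rate(900, 100))  # 0.9
```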

Maintaining your Amazon ElastiCache for Redis cluster involves several essential tasks:

- **Scaling** adjusts capacity to changing demand. You can scale horizontally by adding or removing nodes or shards, or vertically by moving to a larger node type.
- **Patching** protects your cluster against known security vulnerabilities. ElastiCache delivers engine and security patches as service updates and provides a streamlined process for applying them with minimal downtime.
- **Backups** are essential for data recovery after a failure. ElastiCache supports both automatic backups and manual snapshots of your Redis data.

Test your backup and restore procedures regularly so you know you can recover quickly from unforeseen incidents. By diligently monitoring and maintaining your Amazon ElastiCache for Redis cluster, you can keep it reliable, performant, and secure.
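Backup settings can also be scripted. The sketch builds parameters for boto3’s `modify_replication_group`, turning on daily automatic backups with a retention period and a UTC backup window. It also builds a `create_snapshot` request for an on-demand snapshot, for example before a risky change.

The group ID, the seven-day retention, the window, and the snapshot label are assumptions. The backup window should not overlap the cluster’s maintenance window, and a retention limit of 0 turns automatic backups off.

```python
def backup_settings_request(group_id, retention_days=7, window="05:00-06:00"):
    """Parameters for ElastiCache modify_replication_group to enable automatic backups."""
    if retention_days < 1:
        raise ValueError("a retention limit of 0 would disable automatic backups")
    return {
        "ReplicationGroupId": group_id,
        "SnapshotRetentionLimit": retention_days,  # days to keep automatic backups
        "SnapshotWindow": window,  # daily window in UTC, hh24:mi-hh24:mi
        "ApplyImmediately": True,
    }


def manual_snapshot_request(group_id, label):
    """Parameters for ElastiCache create_snapshot."""
    return {"ReplicationGroupId": group_id, "SnapshotName": f"{group_id}-{label}"}


def apply_backup_plan(settings, snapshot):
    """Requires boto3 and AWS credentials."""
    import boto3

    client = boto3.client("elasticache")
    client.modify_replication_group(**settings)
    return client.create_snapshot(**snapshot)


# Hypothetical replication group and snapshot label.
settings = backup_settings_request("demo-cache")
snapshot = manual_snapshot_request("demo-cache", "pre-upgrade")
```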

Real-World Applications: Success Stories with ElastiCache and Redis

Many organizations across diverse industries use Amazon ElastiCache for Redis to improve application performance and scalability, and their deployments show the tangible benefits of in-memory data management. Consider a leading e-commerce platform that uses Amazon ElastiCache for Redis to cache product catalogs and user session data. This significantly reduces database load during peak shopping seasons and keeps the user experience responsive. By serving frequently accessed information directly from the cache, the platform avoids overwhelming its database with requests, which means faster page loads and higher customer satisfaction.

Another compelling example is a global gaming company that uses Amazon ElastiCache for Redis to manage real-time leaderboards and game state data. The low latency of Redis lets the company give players instant updates, which fosters a competitive and engaging gaming environment. The company also uses ElastiCache to cache player profiles and game configurations, minimizing latency during game loading and transitions, a critical factor in keeping players engaged and satisfied. The scalability of Amazon ElastiCache for Redis lets the company absorb fluctuating player traffic without sacrificing performance.

A financial services firm provides a final example. It uses Amazon ElastiCache for Redis to power a real-time analytics dashboard. By caching market data and transaction information, the firm lets analysts identify trends quickly and make informed decisions. In-memory processing lets it perform complex calculations and generate reports in near real time, so it can respond swiftly to market changes and mitigate potential risks.

These success stories show the versatility of Amazon ElastiCache for Redis, from improving e-commerce experiences to enhancing gaming performance and enabling real-time analytics. Adopting it helps businesses reach new levels of performance, scalability, and responsiveness.