What is a Continuous Delivery Pipeline in AWS?

Continuous delivery pipelines are essential to modern software development because they let teams release updates quickly and reliably. They automate the steps required to build, test, and deploy code changes, which minimizes manual intervention and reduces the risk of errors. AWS provides services for building and managing these pipelines, most notably AWS CodePipeline, which this article sometimes calls “AWS Pipelines” to keep the explanation simple for new users.

AWS Pipelines, a key component of the AWS ecosystem, provides a visual workflow that automates the software release process. You define a series of stages, such as retrieving source code, building, testing, and deploying, and CodePipeline runs them automatically whenever a change arrives. This automation brings faster release cycles, better code quality, and lower operational overhead. CodePipeline also lets teams combine other AWS services, such as AWS CodeCommit, AWS CodeBuild, and AWS CodeDeploy, into a single automated workflow, and it is a natural place to add security scans that improve application security.

Automating software delivery with CodePipeline has several advantages. New features and bug fixes reach users sooner, which can give a business a competitive edge. Problems surface earlier in development, when they are cheaper to fix, which improves software quality. Developers spend their time writing code instead of running deployments by hand, which makes them more productive. CodePipeline’s pay-as-you-go pricing also avoids significant upfront costs, because charges follow actual usage as your pipelines scale. Together, these benefits help organizations deliver value faster and more reliably, leading to quicker innovation and better customer satisfaction.

How to Build a Simple Deployment Pipeline on AWS

Creating a basic deployment pipeline on AWS involves a few key steps that automate software delivery and reduce manual intervention. Although this section uses CodePipeline and other AWS services, the underlying concepts apply to most CI/CD tools.

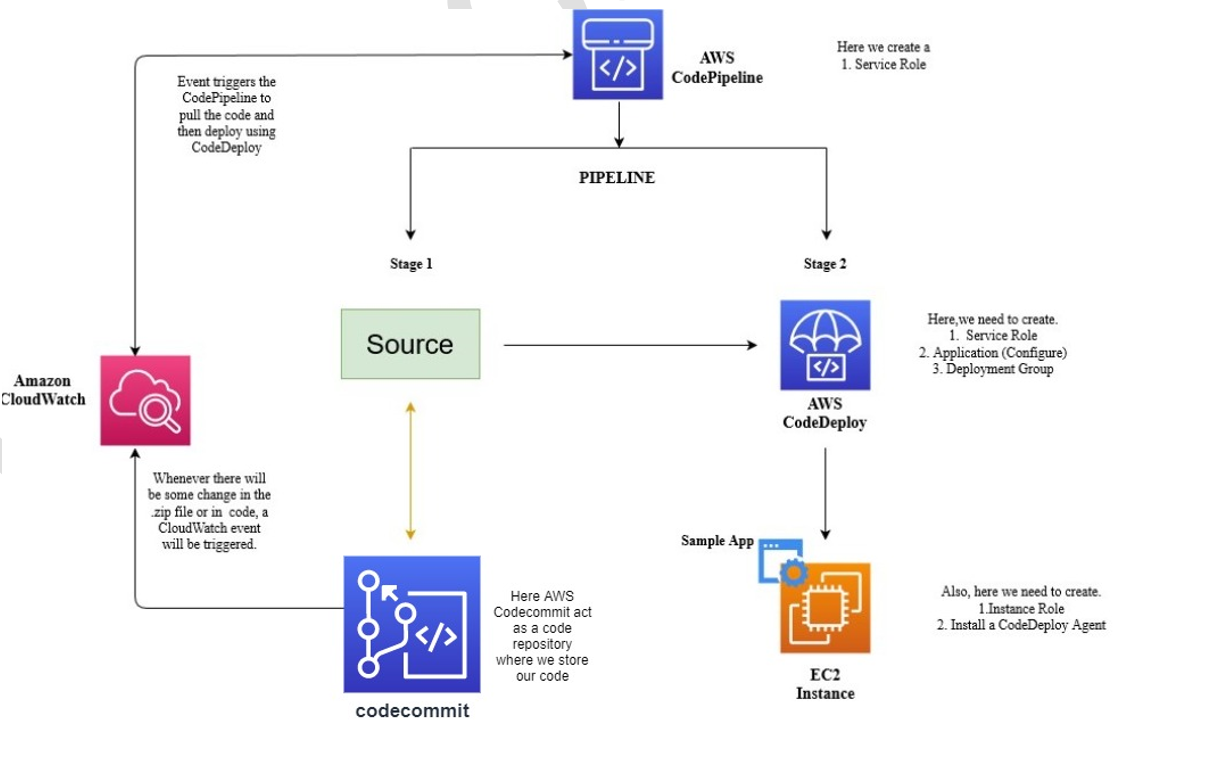

The first step is to choose a source code repository. AWS CodeCommit offers a private Git repository service, or you can use external options such as GitHub or an Amazon S3 bucket. Next, define the build stage, which is where a service such as AWS CodeBuild comes into play. CodeBuild compiles the source code, runs tests, and packages the application into deployable artifacts. Its instructions come from a build specification file (typically buildspec.yml, written in YAML) that defines the build environment, the commands to run, and the artifacts to produce. Make sure CodeBuild’s IAM service role grants the permissions it needs, such as access to the pipeline’s artifact bucket and to CloudWatch Logs, plus any other resources your build commands touch. AWS CodePipeline then connects these steps, carrying each change from source to deployment without manual hand-offs.
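To make the build configuration concrete, here is a minimal sketch of a buildspec for a hypothetical Python project. It is written as a Python dictionary only so it can be inspected and tested here; in a repository the same structure would normally live in buildspec.yml. The runtime version, commands, and the dist output directory are placeholder assumptions, and the runtimes actually available depend on the CodeBuild image you choose.

```python
import json

# Hypothetical buildspec for a Python project. The top-level keys follow the
# CodeBuild buildspec layout (version, phases, artifacts); the commands and
# paths are placeholders, not requirements.
BUILDSPEC = {
    "version": 0.2,
    "phases": {
        "install": {
            # Available runtime versions depend on the CodeBuild image used.
            "runtime-versions": {"python": "3.12"},
            "commands": ["pip install -r requirements.txt"],
        },
        "build": {
            "commands": [
                "python -m pytest",               # run the test suite
                "python -m build --outdir dist",  # package the application
            ],
        },
    },
    "artifacts": {
        # Files handed to the next pipeline stage as the output artifact.
        "files": ["**/*"],
        "base-directory": "dist",
    },
}


def phase_commands(spec: dict) -> list[str]:
    """Return every command in the order CodeBuild runs the phases."""
    order = ["install", "pre_build", "build", "post_build"]
    commands: list[str] = []
    for phase in order:
        commands.extend(spec["phases"].get(phase, {}).get("commands", []))
    return commands


if __name__ == "__main__":
    print(json.dumps(BUILDSPEC, indent=2))
    for cmd in phase_commands(BUILDSPEC):
        print("would run:", cmd)
```

Keeping the build logic in a version-controlled file like this means the build runs the same way in every pipeline execution, and changes to it are reviewed like any other code.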

Finally, specify the deployment target. AWS Elastic Beanstalk offers an easy way to deploy web applications, while AWS CodeDeploy gives more control over deployments to compute services such as EC2 instances and Lambda functions. The pipeline configuration defines how artifacts from the build stage reach these targets by specifying the deployment provider and the target environment; pre- and post-deployment scripts are typically declared as lifecycle hooks in an AppSpec file bundled with the artifact. With CodeDeploy, for instance, you create an application and a deployment group that identifies what to update, such as a set of EC2 instances. Following these steps gives you a foundational pipeline that runs the same way every time, which shortens release cycles, reduces errors, and improves overall efficiency.
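As a rough sketch, the Source, Build, and Deploy steps above can be written down in the JSON structure CodePipeline uses for pipeline definitions (the same general shape that `aws codepipeline get-pipeline` returns). The Python below only builds that structure; every name, ARN, repository, project, and bucket in it is a hypothetical placeholder, and a real pipeline would need those resources to exist.

```python
import json

# Hypothetical placeholders: substitute your own role, bucket, repository,
# CodeBuild project, and CodeDeploy application/deployment group.
ROLE_ARN = "arn:aws:iam::123456789012:role/example-pipeline-role"
ARTIFACT_BUCKET = "example-artifact-bucket"


def action(name, category, provider, configuration,
           inputs=(), outputs=(), run_order=1):
    """Build one action entry in CodePipeline's pipeline-structure format."""
    return {
        "name": name,
        "actionTypeId": {"category": category, "owner": "AWS",
                         "provider": provider, "version": "1"},
        "configuration": configuration,
        "inputArtifacts": [{"name": n} for n in inputs],
        "outputArtifacts": [{"name": n} for n in outputs],
        "runOrder": run_order,
    }


def build_pipeline(name: str) -> dict:
    """Return a three-stage Source -> Build -> Deploy pipeline definition."""
    return {
        "pipeline": {
            "name": name,
            "roleArn": ROLE_ARN,
            "artifactStore": {"type": "S3", "location": ARTIFACT_BUCKET},
            "stages": [
                {"name": "Source", "actions": [action(
                    "Checkout", "Source", "CodeCommit",
                    {"RepositoryName": "example-repo", "BranchName": "main"},
                    outputs=["SourceOutput"])]},
                {"name": "Build", "actions": [action(
                    "BuildAndTest", "Build", "CodeBuild",
                    {"ProjectName": "example-build-project"},
                    inputs=["SourceOutput"], outputs=["BuildOutput"])]},
                {"name": "Deploy", "actions": [action(
                    "DeployToEC2", "Deploy", "CodeDeploy",
                    {"ApplicationName": "example-app",
                     "DeploymentGroupName": "example-deployment-group"},
                    inputs=["BuildOutput"])]},
            ],
        }
    }


if __name__ == "__main__":
    # Saved to a file, this JSON could be passed to
    # `aws codepipeline create-pipeline --cli-input-json file://pipeline.json`.
    print(json.dumps(build_pipeline("example-pipeline"), indent=2))
```

Generating the definition from code (or from a CloudFormation or CDK template) keeps the pipeline itself under version control instead of existing only as console settings.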

Key Components of an AWS Software Pipeline

An AWS pipeline is composed of several core elements that work together to automate software delivery. Understanding them is essential for designing, building, and managing reliable deployment workflows. CodePipeline’s modular architecture lets you combine these elements flexibly into a continuous integration and continuous delivery (CI/CD) workflow.

Source providers are the starting point of any pipeline. They define where the application’s source code resides; common choices include AWS CodeCommit, GitHub, and Amazon S3. The pipeline watches the source location for changes, such as new commits, and when it detects one it starts automatically and runs the subsequent stages.

Build providers compile, test, and package the application. AWS CodeBuild, a fully managed build service, is a popular choice, although other build providers can be integrated as well. Typical build tasks include running unit tests, performing static code analysis, and creating deployable artifacts, which are then passed to the next stage.

Artifact stores hold the output of each stage. CodePipeline keeps its artifacts in an Amazon S3 bucket, which provides scalable and durable storage and can be encrypted with AWS KMS. Each pipeline execution stores its own artifacts, so every stage works with the versions produced earlier in that same execution.

Deploy providers deliver the application to the target environment. AWS CodeDeploy automates deployments to compute services such as EC2 instances, Lambda functions, and ECS services. Other providers, such as AWS Elastic Beanstalk, can be used instead, depending on your deployment requirements.
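The hand-off between source, build, and deploy providers happens through named artifacts: each action lists the artifacts it consumes and the artifacts it produces. As a hedged illustration, the hypothetical helper below walks a pipeline definition (trimmed to the artifact fields) and reports any input that no earlier action produces. CodePipeline performs its own structure validation when you create or update a pipeline, so this is only a local sanity check, not a replacement.

```python
def missing_inputs(stages: list[dict]) -> list[tuple[str, str]]:
    """Return (action, artifact) pairs whose input artifact is not produced
    by any action that runs earlier in the pipeline."""
    produced: set[str] = set()
    problems: list[tuple[str, str]] = []
    for stage in stages:
        # Within a stage, actions run in runOrder groups; outputs of one
        # group are available to later groups in the same stage.
        orders = sorted({a.get("runOrder", 1) for a in stage["actions"]})
        for order in orders:
            group = [a for a in stage["actions"] if a.get("runOrder", 1) == order]
            for act in group:
                for art in act.get("inputArtifacts", []):
                    if art["name"] not in produced:
                        problems.append((act["name"], art["name"]))
            for act in group:
                produced.update(a["name"] for a in act.get("outputArtifacts", []))
    return problems


# Hypothetical pipeline with a deliberate typo in the deploy input name.
STAGES = [
    {"name": "Source", "actions": [
        {"name": "Checkout", "outputArtifacts": [{"name": "SourceOutput"}]}]},
    {"name": "Build", "actions": [
        {"name": "BuildAndTest", "inputArtifacts": [{"name": "SourceOutput"}],
         "outputArtifacts": [{"name": "BuildOutput"}]}]},
    {"name": "Deploy", "actions": [
        {"name": "DeployToEC2", "inputArtifacts": [{"name": "BuildOutputs"}]}]},
]

if __name__ == "__main__":
    print(missing_inputs(STAGES))  # [('DeployToEC2', 'BuildOutputs')]
```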

Transitions connect the stages of a pipeline. Artifacts flow along them from one stage to the next, and each transition can be enabled or disabled, which lets you pause a pipeline before a particular stage. For explicit human control, you can add a manual approval action, typically in its own stage, so that someone must approve a release before it is deployed to production. CodePipeline’s tight integration with other AWS services is a key advantage: it simplifies building and managing CI/CD workflows, so developers can focus on writing code rather than managing infrastructure. With these components in place, organizations can build automated delivery pipelines that accelerate release cycles, improve software quality, and give development and operations teams faster feedback. Security checks can also be built into the pipeline so that vulnerabilities are identified and addressed early in the development lifecycle.
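A manual approval gate is an ordinary action with the Approval category and the Manual provider. The sketch below, a hypothetical helper operating on the pipeline JSON structure, inserts such a stage immediately before a named stage; the stage name it generates and the optional SNS topic are assumptions for illustration.

```python
import copy
from typing import Optional


def with_manual_approval(pipeline: dict, before_stage: str,
                         sns_topic_arn: Optional[str] = None) -> dict:
    """Return a copy of `pipeline` with an approval stage inserted directly
    before `before_stage`. Raises ValueError if that stage does not exist."""
    config = {"CustomData": f"Approve release to {before_stage}?"}
    if sns_topic_arn:
        # Optional: notify reviewers through an SNS topic.
        config["NotificationArn"] = sns_topic_arn
    approval_stage = {
        "name": f"ApproveBefore{before_stage}",
        "actions": [{
            "name": "ManualApproval",
            "actionTypeId": {"category": "Approval", "owner": "AWS",
                             "provider": "Manual", "version": "1"},
            "configuration": config,
            "runOrder": 1,
        }],
    }
    result = copy.deepcopy(pipeline)  # leave the caller's definition untouched
    names = [stage["name"] for stage in result["stages"]]
    result["stages"].insert(names.index(before_stage), approval_stage)
    return result


if __name__ == "__main__":
    demo = {"stages": [{"name": "Source", "actions": []},
                       {"name": "Build", "actions": []},
                       {"name": "Production", "actions": []}]}
    print([s["name"] for s in with_manual_approval(demo, "Production")["stages"]])
```

For a temporary pause rather than a permanent gate, disabling the inbound transition of a stage (for example with `aws codepipeline disable-stage-transition`) achieves a similar effect without changing the pipeline structure.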

Integrating Security Checks Into Your CI/CD Pipeline

Security should be a fundamental part of the software development lifecycle, not an afterthought. Integrating security checks into the CI/CD pipeline means potential vulnerabilities are identified and addressed early, which minimizes risk and reduces the cost of remediation. This approach, often called “shifting security left,” promotes a proactive security posture. An effective pipeline includes automated security checks at several stages.

Static code analysis is a valuable technique for finding potential security flaws in source code. Tools like SonarQube can be integrated with AWS CodeBuild to scan code automatically for vulnerabilities, coding errors, and deviations from security best practices. Vulnerability scanning tools can also be added to detect known vulnerabilities in third-party libraries and dependencies. These tools can be configured to fail the build when critical vulnerabilities are found, which prevents insecure code from being deployed. Because these checks run inside the pipeline, developers get immediate feedback on security issues and can fix them quickly.
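One common way to fail a build on severe findings is a small gate script run as a build command, since CodeBuild marks a build as failed when a command in the build phase exits with a non-zero status. The sketch below assumes a hypothetical JSON report that is a list of findings, each with a severity field; every real scanner has its own report format, so the parsing would need adapting, and the script name used in a buildspec (for example python security_gate.py report.json) is also an assumption.

```python
import argparse
import json
import sys

# Severity ranking for the hypothetical report format; adjust to your scanner.
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(findings: list[dict], threshold: str = "CRITICAL") -> list[dict]:
    """Return findings whose severity is at or above `threshold`.
    Unknown or missing severities are treated as non-blocking."""
    limit = SEVERITY_RANK[threshold]
    return [f for f in findings
            if SEVERITY_RANK.get(str(f.get("severity", "")).upper(), 0) >= limit]


def main(report_path: str, threshold: str = "CRITICAL") -> int:
    with open(report_path, encoding="utf-8") as fh:
        findings = json.load(fh)
    blocking = blocking_findings(findings, threshold)
    for f in blocking:
        print(f"{f.get('severity')}: {f.get('id', '?')} {f.get('title', '')}")
    # A non-zero exit status makes the CodeBuild build command fail.
    return 1 if blocking else 0


if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="Fail the build on severe findings.")
    parser.add_argument("report", help="path to the scanner's JSON report")
    parser.add_argument("--threshold", default="CRITICAL", type=str.upper,
                        choices=list(SEVERITY_RANK))
    args = parser.parse_args()
    sys.exit(main(args.report, args.threshold))
```

Making the threshold a parameter lets a team start by blocking only critical findings and tighten the gate later without editing the script.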

Security testing such as dynamic application security testing (DAST) and penetration testing belongs in the later stages of the pipeline, once a running version of the application exists, for example in a staging environment. DAST tools probe the running application for vulnerabilities by simulating real-world attacks, while penetration testing relies on security experts who assess the application manually. Because penetration tests are manual, they usually sit alongside the automated steps, for example with a manual approval action that holds a release until the assessment is complete. These tests can uncover vulnerabilities that static analysis and dependency scanning miss. Integrating them takes careful planning and configuration, but the gain in security usually outweighs the effort. By automating security checks throughout the CI/CD process, organizations can build more secure and resilient software.

Troubleshooting Common AWS Pipeline Issues

When working with CodePipeline, running into issues is a normal part of the development process. Resolving them efficiently keeps the continuous delivery workflow running smoothly. This section covers frequent problems and practical troubleshooting steps.

The most common issue is a failed pipeline execution. Failures can stem from build errors, deployment problems, or integration issues with other AWS services. Start by examining the pipeline’s execution history in the AWS console, which shows the status of each stage and action and links to the details of the action that failed.

Build errors, often caused by problems in the source code or build configuration, are a frequent culprit. The build logs from AWS CodeBuild usually pinpoint the exact location and nature of the error, because they include the output of the compiler or test framework.

Deployment failures occur when the application does not deploy correctly to the target environment. Typical causes are misconfigured deployment settings, insufficient permissions, and problems with the target infrastructure; the deployment logs and events from AWS CodeDeploy or AWS Elastic Beanstalk usually reveal which one applies.

Integration problems between CodePipeline and other AWS services or third-party tools can also cause unexpected failures. Check that every IAM role involved has the permissions it needs to interact with those services, and review the integrated services’ own logs for clues.
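When many pipelines need checking, the same information shown in the console can be pulled programmatically. The sketch below walks a response shaped like CodePipeline’s GetPipelineState output and extracts the failed actions with their error details. The sample state and the `fetch_state` helper are hypothetical illustrations; `fetch_state` needs boto3 and AWS credentials and is not called in the example.

```python
def failed_actions(state: dict) -> list[dict]:
    """Extract failed actions from a GetPipelineState-style response."""
    failures = []
    for stage in state.get("stageStates", []):
        for act in stage.get("actionStates", []):
            latest = act.get("latestExecution", {})
            if latest.get("status") == "Failed":
                error = latest.get("errorDetails", {})
                failures.append({
                    "stage": stage.get("stageName"),
                    "action": act.get("actionName"),
                    "code": error.get("code"),
                    "message": error.get("message"),
                    # Often a link to the CodeBuild build or CodeDeploy deployment.
                    "url": latest.get("externalExecutionUrl"),
                })
    return failures


def fetch_state(pipeline_name: str) -> dict:
    """Fetch live state; requires boto3 and AWS credentials."""
    import boto3
    return boto3.client("codepipeline").get_pipeline_state(name=pipeline_name)


# Hypothetical response trimmed to the fields used above.
SAMPLE_STATE = {
    "pipelineName": "example-pipeline",
    "stageStates": [
        {"stageName": "Source", "actionStates": [
            {"actionName": "Checkout", "latestExecution": {"status": "Succeeded"}}]},
        {"stageName": "Build", "actionStates": [
            {"actionName": "BuildAndTest", "latestExecution": {
                "status": "Failed",
                "errorDetails": {"code": "JobFailed",
                                 "message": "Build terminated with state: FAILED"},
                "externalExecutionUrl": "https://example.com/hypothetical-build-link"}}]},
    ],
}

if __name__ == "__main__":
    for f in failed_actions(SAMPLE_STATE):
        print(f"[{f['stage']}/{f['action']}] {f['code']}: {f['message']}")
```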

Effective troubleshooting depends on reading error messages carefully and recognizing common configuration mistakes. Error messages can be cryptic, but they usually point to the faulty configuration or code. The most common mistakes are incorrect IAM role settings, missing dependencies in the build environment, and misconfigured deployment settings. Verify that the IAM roles used by the pipeline and its actions have permission to access the required AWS resources, that the build environment includes everything the build needs (such as specific language runtimes or library versions), and that the deployment settings match the target environment. In short, work systematically: start with the execution history and logs, read the error messages closely, and then check for these common mistakes.
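Permission problems are easier to spot if you compare a role’s policy against the actions you expect it to need. The hypothetical helper below does a deliberately simplified check: it collects Action patterns from Allow statements and matches them case-insensitively with IAM-style wildcards, ignoring Deny statements, Resource, and Condition. The required-action list is an illustrative assumption for a CodeBuild role, not an official minimum; the IAM policy simulator gives a complete answer.

```python
from fnmatch import fnmatchcase


def allowed_action_patterns(policy: dict) -> list[str]:
    """Collect Action patterns from Allow statements (simplified: Deny,
    Resource, and Condition are ignored)."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be an object
        statements = [statements]
    patterns: list[str] = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        patterns.extend([actions] if isinstance(actions, str) else actions)
    return patterns


def missing_permissions(policy: dict, required: list[str]) -> list[str]:
    """Return required actions not matched by any Allow pattern.
    IAM action names are case-insensitive, so both sides are lowercased."""
    patterns = [p.lower() for p in allowed_action_patterns(policy)]
    return [r for r in required
            if not any(fnmatchcase(r.lower(), p) for p in patterns)]


# Hypothetical role policy and required actions for illustration.
EXAMPLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["logs:*"], "Resource": "*"},
        {"Effect": "Allow", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::example-artifact-bucket/*"},
    ],
}
REQUIRED = ["logs:CreateLogStream", "logs:PutLogEvents", "s3:GetObject", "s3:PutObject"]

if __name__ == "__main__":
    print(missing_permissions(EXAMPLE_POLICY, REQUIRED))  # ['s3:PutObject']
```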

Optimizing Your AWS Continuous Delivery Workflow

Several optimization strategies can make your software delivery process on AWS more efficient. The first is parallelism. Stages in CodePipeline run one after another, but actions within a stage that share the same runOrder value run in parallel, while actions with a higher runOrder wait for the lower ones to finish. Running independent tasks, such as unit tests and linting, side by side can noticeably shorten pipeline execution time. Caching dependencies is another important optimization. Caching frequently used libraries and packages avoids downloading and installing them on every build, which speeds up builds and reduces network traffic. AWS CodeBuild supports both Amazon S3 caching and local caching on the build host.
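In CodePipeline, stages run in sequence and parallelism happens inside a stage: actions that share a runOrder value run at the same time. The sketch below models a hypothetical Test stage and groups its actions into the “waves” that would run concurrently. It also shows a buildspec cache fragment (as a Python dict) for pip downloads; the cache path assumes builds run as root, and caching must also be enabled on the CodeBuild project.

```python
from itertools import groupby

# Hypothetical "Test" stage: UnitTests and Lint share runOrder 1 and run in
# parallel; IntegrationTests (runOrder 2) starts only after both finish.
TEST_STAGE = {
    "name": "Test",
    "actions": [
        {"name": "UnitTests", "runOrder": 1},
        {"name": "Lint", "runOrder": 1},
        {"name": "IntegrationTests", "runOrder": 2},
    ],
}


def execution_waves(stage: dict) -> list[list[str]]:
    """Group a stage's actions into waves of concurrently running actions."""
    def run_order(action: dict) -> int:
        return action.get("runOrder", 1)

    ordered = sorted(stage["actions"], key=run_order)  # stable sort keeps order
    return [[a["name"] for a in group]
            for _, group in groupby(ordered, key=run_order)]


# Buildspec fragment asking CodeBuild to cache pip's download cache.
# Assumes builds run as root; the project must have a cache configured.
BUILDSPEC_CACHE = {"cache": {"paths": ["/root/.cache/pip/**/*"]}}

if __name__ == "__main__":
    print(execution_waves(TEST_STAGE))  # [['UnitTests', 'Lint'], ['IntegrationTests']]
```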

Infrastructure as code (IaC) also plays a vital role. Tools such as AWS CloudFormation or Terraform let you define infrastructure resources declaratively, so every environment is provisioned and configured the same way. This reduces manual intervention and errors and speeds up deployments. Because the definitions live in version control, you also get a clear audit trail and can roll back to a previous configuration when needed. CodePipeline can apply IaC changes as part of a release, either with its built-in CloudFormation deploy action or by running a tool such as Terraform in a CodeBuild action, which leads to a fully automated environment.
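A common pattern with the CloudFormation deploy action is to create a change set first and execute it in a later step, so the proposed changes can be reviewed in between. The sketch below builds the two action entries in CodePipeline’s JSON structure; the stack name, role ARN, artifact name, and template file name are hypothetical, and a manual approval action could be placed between the two if you want a human review.

```python
CFN_ACTION_TYPE = {"category": "Deploy", "owner": "AWS",
                   "provider": "CloudFormation", "version": "1"}


def cloudformation_actions(stack: str, role_arn: str,
                           artifact: str = "BuildOutput",
                           template_file: str = "template.yaml") -> list[dict]:
    """Return two Deploy actions: create a change set, then execute it."""
    change_set = f"{stack}-changes"
    return [
        {
            "name": "CreateChangeSet",
            "actionTypeId": dict(CFN_ACTION_TYPE),
            "configuration": {
                "ActionMode": "CHANGE_SET_REPLACE",
                "StackName": stack,
                "ChangeSetName": change_set,
                # Format is ArtifactName::FileName inside that artifact.
                "TemplatePath": f"{artifact}::{template_file}",
                "RoleArn": role_arn,  # role CloudFormation assumes for changes
                "Capabilities": "CAPABILITY_IAM",
            },
            "inputArtifacts": [{"name": artifact}],
            "runOrder": 1,
        },
        {
            "name": "ExecuteChangeSet",
            "actionTypeId": dict(CFN_ACTION_TYPE),
            "configuration": {
                "ActionMode": "CHANGE_SET_EXECUTE",
                "StackName": stack,
                "ChangeSetName": change_set,
            },
            "runOrder": 2,  # runs only after the change set has been created
        },
    ]


if __name__ == "__main__":
    for act in cloudformation_actions(
            "example-stack", "arn:aws:iam::123456789012:role/example-cfn-role"):
        print(act["runOrder"], act["name"], act["configuration"]["ActionMode"])
```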

Effective monitoring and alerting are essential for finding and resolving bottlenecks in your pipeline. Amazon CloudWatch collects metrics and logs from the services involved: CodeBuild, for example, publishes build counts and durations, and CodePipeline emits execution state-change events through Amazon EventBridge. With alarms and event rules in place, you can detect problems such as slow builds or repeated deployment failures before they disrupt delivery, then use the logs and metrics to pinpoint the cause and apply targeted fixes. This gives you ongoing visibility into the health and performance of your pipelines.
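For alerting on failures, an EventBridge rule can match CodePipeline’s execution state-change events and send them to a target such as an SNS topic. The pattern below targets failed executions. The `matches` function is a hypothetical, simplified local check that only handles exact-value lists and nested objects; real EventBridge patterns support more operators, so treat it as a way to sanity-check a pattern against a sample event, not as EventBridge’s matching logic.

```python
# EventBridge event pattern for failed CodePipeline executions.
FAILED_EXECUTION_PATTERN = {
    "source": ["aws.codepipeline"],
    "detail-type": ["CodePipeline Pipeline Execution State Change"],
    "detail": {"state": ["FAILED"]},
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified check: each pattern key must be present in the event and its
    value must be one of the listed values; nested objects recurse."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


# Hypothetical sample event trimmed to the relevant fields.
SAMPLE_EVENT = {
    "source": "aws.codepipeline",
    "detail-type": "CodePipeline Pipeline Execution State Change",
    "detail": {"pipeline": "example-pipeline", "state": "FAILED"},
}

if __name__ == "__main__":
    print(matches(FAILED_EXECUTION_PATTERN, SAMPLE_EVENT))  # True
```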

Comparing AWS Pipelines with Other CI/CD Tools

The software development landscape offers a variety of CI/CD tools, each with its own strengths and weaknesses. AWS Pipelines (AWS CodePipeline) is a compelling option for teams heavily invested in the AWS ecosystem, but understanding how it compares with tools like Jenkins, GitLab CI, and CircleCI is crucial for making an informed decision. This comparison highlights the key differences.

Jenkins, a widely adopted open-source automation server, offers a vast plugin ecosystem and extensive customization options. However, running a Jenkins instance can require significant effort for server maintenance, configuration, and scaling. GitLab CI, tightly integrated with the GitLab platform, offers a streamlined experience for projects that already use GitLab for source code management. CircleCI, known for its ease of use and quick setup, is a cloud-based service focused on developer productivity. CodePipeline distinguishes itself through native integration with other AWS services, which simplifies deployments to services such as AWS Elastic Beanstalk, AWS Lambda, and Amazon ECS/EKS. Access control is also more straightforward, because permissions are managed with the same IAM roles and policies you use for the rest of your AWS account. Additionally, CodePipeline’s pay-as-you-go pricing can suit teams with fluctuating build and deployment workloads, since there is no idle server capacity to pay for.

The choice between CodePipeline and other CI/CD tools depends heavily on project requirements and organizational priorities. If your infrastructure is already deeply rooted in AWS and you value seamless integration with AWS services, CodePipeline is a strong contender, and its managed nature reduces the operational burden of self-hosted solutions. Organizations that want more flexibility and control over their CI/CD environment, however, may find Jenkins or other tools more suitable. Ultimately, evaluate each tool’s features, pricing, and integration capabilities against your delivery needs, including how well it supports the cloud-native practices that keep a pipeline scalable and resilient. CodePipeline is strongest when the resources you deploy already live on AWS, where its native integrations simplify their management, speed up deployments, and reduce errors.

Enhancing Pipelines with AWS Services

AWS CodePipeline’s real strength lies in its integration with other AWS services, which together form a comprehensive software delivery ecosystem. These integrations add functionality, increase automation, and improve monitoring across the development lifecycle, and they let you tailor pipelines to specific project requirements. For example, AWS Lambda adds serverless custom logic, Amazon ECS and Amazon EKS handle containerized applications, and Amazon CloudWatch provides centralized monitoring and logging.

One significant enhancement is integrating CodePipeline with AWS Lambda. A pipeline can invoke a Lambda function as an action in any stage to perform tasks such as custom validation checks, data transformations, or infrastructure provisioning; the function reports success or failure back to CodePipeline, which then continues or stops the execution. For example, a Lambda function invoked after the build stage could verify the integrity of the built artifacts or update configuration files. This lets developers add complex logic to a pipeline without managing servers. Similarly, for containerized applications, integrating CodePipeline with Amazon ECS (Elastic Container Service) or Amazon EKS (Elastic Kubernetes Service) simplifies deployment: the pipeline can build container images, push them to Amazon ECR (Elastic Container Registry), and deploy them to ECS or EKS clusters, keeping deployments consistent across environments. In each case, CodePipeline orchestrates the release while the other AWS services do the work.
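A Lambda function used as a pipeline action receives a job ID in its event and must report the outcome itself, otherwise the action eventually times out. The sketch below shows that shape with a hypothetical validation rule (UserParameters must be JSON containing a non-empty version field). The client is injectable so the handler can be exercised without AWS; in Lambda it defaults to a boto3 CodePipeline client, whose put_job_success_result and put_job_failure_result calls report the result.

```python
import json


def check_parameters(params: dict) -> list[str]:
    """Hypothetical validation rule: require a non-empty 'version' value."""
    errors = []
    if not params.get("version"):
        errors.append("missing 'version' in UserParameters")
    return errors


def handler(event, context, client=None):
    """Entry point for a Lambda function invoked by a CodePipeline action."""
    if client is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        client = boto3.client("codepipeline")
    job = event["CodePipeline.job"]
    job_id = job["id"]
    config = job["data"]["actionConfiguration"]["configuration"]
    raw = config.get("UserParameters", "{}")
    try:
        errors = check_parameters(json.loads(raw))
    except json.JSONDecodeError as exc:
        errors = [f"UserParameters is not valid JSON: {exc}"]
    if errors:
        # Reporting failure stops the pipeline execution at this action.
        client.put_job_failure_result(
            jobId=job_id,
            failureDetails={"type": "JobFailed", "message": "; ".join(errors)},
        )
    else:
        client.put_job_success_result(jobId=job_id)
    return {"jobId": job_id, "errors": errors}
```

Keeping the validation rule in a separate pure function makes it easy to unit-test without a pipeline, while the handler stays a thin adapter between CodePipeline’s job protocol and that rule.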

Monitoring and logging are crucial for any software delivery pipeline, and CodePipeline works with Amazon CloudWatch to give you visibility into pipeline performance. You can track execution times and error rates (for example, from CodeBuild metrics and CodePipeline’s EventBridge events), set alarms for critical events, and use dashboards to spot bottlenecks quickly. CloudWatch Logs collects logs from pipeline actions such as CodeBuild builds, providing insight into the build and deployment processes. This level of monitoring helps keep software delivery reliable and stable. Together, these integrations let teams build a fully automated, secure, and efficient delivery pipeline with faster release cycles, fewer errors, and better software quality. Because CodePipeline often becomes the backbone of an organization’s AWS deployments, it deserves careful design and integration.
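Success rate and execution time can also be computed directly from a pipeline’s execution history. The sketch below summarizes records shaped like the pipelineExecutionSummaries returned by CodePipeline’s ListPipelineExecutions (status, startTime, lastUpdateTime); the sample data is hypothetical. Treating lastUpdateTime as the end time and counting only Succeeded and Failed executions as finished are simplifying assumptions. Results like these could be published as custom CloudWatch metrics (for example with PutMetricData) if you want to graph them.

```python
from datetime import datetime, timedelta


def execution_stats(summaries: list[dict]) -> dict:
    """Success rate over finished executions and mean duration (seconds)
    of successful ones. In-progress, stopped, or superseded runs are skipped."""
    finished = [s for s in summaries if s["status"] in ("Succeeded", "Failed")]
    succeeded = [s for s in finished if s["status"] == "Succeeded"]
    durations = [(s["lastUpdateTime"] - s["startTime"]).total_seconds()
                 for s in succeeded]
    return {
        "finished": len(finished),
        "success_rate": len(succeeded) / len(finished) if finished else None,
        "mean_success_seconds": sum(durations) / len(durations) if durations else None,
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 12, 0)
    sample = [  # hypothetical execution summaries
        {"status": "Succeeded", "startTime": t0, "lastUpdateTime": t0 + timedelta(minutes=10)},
        {"status": "Failed", "startTime": t0, "lastUpdateTime": t0 + timedelta(minutes=3)},
        {"status": "InProgress", "startTime": t0, "lastUpdateTime": t0 + timedelta(minutes=1)},
        {"status": "Succeeded", "startTime": t0, "lastUpdateTime": t0 + timedelta(minutes=20)},
    ]
    print(execution_stats(sample))
```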