Understanding Recurrent Neural Networks (RNNs): A Beginner’s Guide

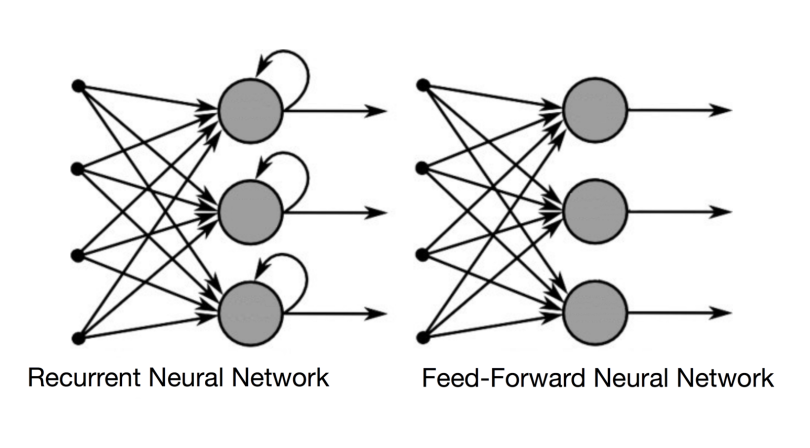

Recurrent neural networks (RNNs) are a type of artificial neural network designed for processing sequential data. Unlike feedforward networks, which map each input to an output in a single pass with no notion of what came before, RNNs have a “memory” mechanism that lets them take previous inputs into account when processing the current one. Imagine reading a sentence: you understand each word better because you know the words that came before. RNNs do something similar. They maintain an internal “hidden state” that is updated at each time step and summarizes the information from past inputs, influencing how the network processes the current input. The basic architecture of an RNN includes an input layer, a hidden layer (which holds the hidden state), and an output layer. The hidden layer receives both the current input and the previous hidden state, which is what enables the network to learn temporal dependencies in data. This characteristic makes RNNs particularly well suited to tasks involving sequences.
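For readers who prefer equations, the update described above is commonly written as follows for a basic (Elman-style) RNN. The notation is ours, not the article’s. Here x_t is the input at step t, h_t is the hidden state and y_t is the output. The W matrices are learned weights shared across all time steps, and b_h and b_y are biases. The hidden state h_0 is usually initialized to zeros, and tanh is the usual, but not the only, choice of activation.

```latex
\begin{aligned}
h_t &= \tanh\left(W_{xh}\,x_t + W_{hh}\,h_{t-1} + b_h\right) \\
y_t &= W_{hy}\,h_t + b_y
\end{aligned}
```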

The hidden state acts as a form of memory, carrying information forward from previous time steps. At each time step, the RNN receives a new input. This input, together with the previous hidden state, is used to compute a new hidden state, which therefore reflects both the current input and the earlier inputs summarized by the previous hidden state. This process repeats for every time step in the sequence. The network then produces its output from one of two sources, depending on the task:

- the final hidden state, for example to classify a whole sentence;
- the output at each time step, for example to predict the next character.

An RNN can be viewed as a chain of copies of the same network, one per time step, each passing its hidden state to the next. Crucially, all copies share the same weights. This chain-like structure lets the network learn relationships between elements in a sequence. Understanding how the hidden state evolves over time is key to understanding what an RNN can do. This ability to maintain and use context is what makes RNNs better suited than feedforward networks to tasks where earlier inputs matter.
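The following minimal NumPy sketch makes the “chain” picture concrete with a basic RNN’s forward pass. It assumes the standard tanh update. The function name rnn_forward and the random weights are illustrative and do not come from any library. The loop reuses the same weight matrices at every step, and only the hidden state h changes.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y, h0=None):
    """Run a basic tanh RNN over xs of shape (T, input_size).

    The same weights are reused at every time step; only the hidden state changes.
    Returns per-step outputs (T, output_size) and hidden states (T, hidden_size).
    """
    hidden_size = W_hh.shape[0]
    h = np.zeros(hidden_size) if h0 is None else h0
    hs, ys = [], []
    for x_t in xs:
        # The new hidden state mixes the current input with the previous hidden state.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        hs.append(h)
        ys.append(W_hy @ h + b_y)  # one output per time step
    return np.array(ys), np.array(hs)

rng = np.random.default_rng(0)
T, n_in, n_hid, n_out = 5, 3, 4, 2
params = dict(
    W_xh=rng.normal(scale=0.5, size=(n_hid, n_in)),
    W_hh=rng.normal(scale=0.5, size=(n_hid, n_hid)),
    W_hy=rng.normal(scale=0.5, size=(n_out, n_hid)),
    b_h=np.zeros(n_hid),
    b_y=np.zeros(n_out),
)
xs = rng.normal(size=(T, n_in))
ys, hs = rnn_forward(xs, **params)
print(ys.shape, hs.shape)  # (5, 2) (5, 4)
```

Changing the first input alters the final hidden state. Changing the last input cannot affect any earlier state. That one-way flow of information is the “memory” described above.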

RNNs are used in many applications that deal with sequential information, such as time series analysis, natural language processing, and speech recognition. They are most useful where the order of the data matters. For example, forecasting a stock price typically draws on past price movements, and understanding a sentence depends on the order of its words. Many traditional machine learning algorithms treat each input as an independent, fixed-size feature vector, which makes order hard to exploit. The RNN architecture instead models temporal dependencies directly, which lets it learn complex patterns within sequential data and can improve performance on prediction and classification tasks. Long-range dependencies are harder. Basic RNNs struggle with them (see the discussion of vanishing gradients below), and gated variants such as LSTMs and GRUs were developed largely to capture relationships between elements that are far apart in a sequence.

Why RNNs Excel in Time-Series Data Analysis

RNNs have an architecture that makes them well suited to sequential data. Unlike feedforward neural networks, which treat each input independently, RNNs maintain an internal state, or memory, that lets them consider past inputs when processing the current one. This is crucial for time-series data, where the order of events matters. Because an RNN carries information across time steps, it can pick up patterns and dependencies that a network seeing each input in isolation cannot. This is why RNNs are commonly applied to two kinds of task:

- financial market prediction, where past price movements are used to forecast future trends;
- speech recognition, where recognizing a word often depends on its context within the utterance.
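A time series usually has to be cut into input windows and targets before it can be fed to an RNN. One common approach is a sliding window, sketched below for a univariate series and one-step-ahead forecasting. The names make_windows, window, and horizon are hypothetical and chosen only for this example.

```python
import numpy as np

def make_windows(series, window, horizon=1):
    """Split a 1-D series into (input window, target) pairs for forecasting.

    Each input is `window` consecutive values; the target is the value `horizon`
    steps after the window ends. Returns X of shape (N, window, 1) and y of shape (N,).
    """
    series = np.asarray(series, dtype=float)
    n = len(series) - window - horizon + 1
    if n <= 0:
        raise ValueError("series too short for this window/horizon")
    X = np.stack([series[i:i + window] for i in range(n)])
    y = np.array([series[i + window + horizon - 1] for i in range(n)])
    # Add a trailing feature axis: recurrent layers typically expect (batch, time, features).
    return X[..., None], y

X, y = make_windows([10, 11, 12, 13, 14, 15], window=3)
print(X.shape, y)  # (3, 3, 1) [13. 14. 15.]
```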

RNNs have been applied successfully in many real-world settings. In natural language processing (NLP), they have been widely used for tasks such as machine translation and sentiment analysis. They can capture contextual relationships between words, which is essential for accurate translation or sentiment classification. For instance, the sentence “The movie was amazing, but the ending was disappointing” contains both positive and negative words. An RNN that reads the whole sentence can, in principle, learn that the clause after “but” shifts the overall sentiment, something a model that scores individual words in isolation cannot do. In time-series forecasting, RNNs are used to predict future values from past observations. Applications range from weather prediction, where past weather patterns inform forecasts, to stock price prediction, where historical prices serve as inputs. This capability stems directly from the RNN’s ability to learn temporal dependencies within sequential data.

Examples of RNN applications abound:

- In finance, RNNs have been used to forecast stock prices and assess investment risk.
- In healthcare, they have been applied to sequences of patient data to support disease diagnosis and treatment planning.
- In manufacturing, they are used to model production processes and to predict equipment failures from sensor readings.
- In robotics, they can control robot movements based on past sensory inputs.
- In anomaly detection, they flag unusual patterns in data streams.

What these applications share is a time-dependent process. Because the hidden state is updated with every new observation, an RNN can summarize a stream of data as it arrives. This persistent internal state is fundamental to its success across such diverse domains.

Different Types of Recurrent Neural Networks

Recurrent neural networks come in several architectures, each designed to address specific challenges in sequential data processing. The basic (or “vanilla”) RNN, the simplest form, feeds its hidden state directly from one time step to the next. However, this architecture suffers from the vanishing gradient problem, which limits its ability to learn long-term dependencies. The network struggles to connect information from early time steps to later ones, and is consequently less effective on long sequences.

To overcome these limitations, researchers developed more sophisticated architectures, notably Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs).

LSTMs mitigate the vanishing gradient problem with a separate cell state and three gates: input, forget, and output. These gates regulate what information is written to, kept in, and read from the cell state. Because the cell state is updated additively, information (and gradients) can flow across many time steps. This makes LSTMs well suited to tasks involving long-range dependencies, such as machine translation or speech recognition.

GRUs simplify the LSTM design by merging the cell state and hidden state and using only two gates (update and reset). With fewer parameters per unit, GRUs are cheaper to compute and often faster to train than LSTMs, while still capturing long-term dependencies effectively. Neither consistently outperforms the other, so the choice between LSTM and GRU usually comes down to the specific application and the trade-off between accuracy and computational cost. For shorter sequences, a basic RNN might suffice, but for long, complex sequences LSTMs or GRUs generally perform better.
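The gate arithmetic is easier to see in code. Below is a minimal NumPy sketch of a single LSTM step and a single GRU step, based on the widely used formulations. The LSTM stacks its four gate pre-activations into one weight matrix. The GRU applies the reset gate before the recurrent weights, as in the original GRU formulation; PyTorch’s nn.GRU applies it slightly differently. The weight layouts and function names are illustrative choices, not a library API.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4*H, I+H); row blocks are [input, forget, output, candidate]."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0:H])          # input gate: how much new information to write
    f = sigmoid(z[H:2*H])        # forget gate: how much of the old cell state to keep
    o = sigmoid(z[2*H:3*H])      # output gate: how much of the cell state to expose
    g = np.tanh(z[3*H:4*H])      # candidate values to write into the cell
    c = f * c_prev + i * g       # additive cell-state update
    h = o * np.tanh(c)
    return h, c

def gru_step(x, h_prev, W_zr, b_zr, W_n, b_n):
    """One GRU step (reset gate applied before the recurrent weights). No separate cell state."""
    H = h_prev.shape[0]
    zr = sigmoid(W_zr @ np.concatenate([x, h_prev]) + b_zr)
    z, r = zr[:H], zr[H:]                                       # update and reset gates
    n = np.tanh(W_n @ np.concatenate([x, r * h_prev]) + b_n)    # candidate state
    return (1 - z) * n + z * h_prev                             # interpolate new and old

rng = np.random.default_rng(0)
I, H = 3, 4
x, h0, c0 = rng.normal(size=I), np.zeros(H), np.zeros(H)
h_lstm, c_lstm = lstm_step(x, h0, c0, rng.normal(size=(4*H, I+H)), np.zeros(4*H))
h_gru = gru_step(x, h0, rng.normal(size=(2*H, I+H)), np.zeros(2*H),
                 rng.normal(size=(H, I+H)), np.zeros(H))
print(h_lstm.shape, c_lstm.shape, h_gru.shape)  # (4,) (4,) (4,)
```

The key design choice is the line c = f * c_prev + i * g. Because the cell state is updated additively, a forget gate near 1 lets information pass through many steps almost unchanged. The GRU achieves a similar effect by using the update gate z to interpolate between the old state and a candidate.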

The best RNN architecture depends heavily on the nature of the sequential data and the task. If the task involves short sequences and computational resources are limited, a basic RNN might be sufficient. Some tasks require long-range dependencies, such as processing lengthy sentences in natural language processing or analyzing extensive time series. For these, LSTMs or GRUs are preferred because they retain information over longer durations. LSTMs and GRUs substantially mitigate the vanishing gradient problem, a major hurdle for basic RNNs, and so can learn patterns across much longer temporal contexts. Understanding these architectural differences and their implications is crucial for building effective and efficient RNN models.
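One concrete way to compare the computational cost of these cells is to count parameters. The sketch below follows PyTorch’s layout for a single-layer, unidirectional nn.RNN, nn.GRU, or nn.LSTM with biases. In that layout, each weight block has an input-to-hidden matrix, a hidden-to-hidden matrix, and two bias vectors. A basic RNN has one such block, a GRU three, and an LSTM four. Other libraries may use one bias vector per block, which gives slightly smaller totals.

```python
def recurrent_param_count(input_size, hidden_size, blocks):
    """Parameters in one recurrent layer, assuming PyTorch's layout: per weight block,
    an input-to-hidden matrix, a hidden-to-hidden matrix, and two bias vectors."""
    per_block = hidden_size * input_size + hidden_size * hidden_size + 2 * hidden_size
    return blocks * per_block

# Basic RNN: 1 block; GRU: 3 (update, reset, candidate); LSTM: 4 (input, forget, output, candidate).
for name, blocks in [("RNN", 1), ("GRU", 3), ("LSTM", 4)]:
    print(name, recurrent_param_count(input_size=64, hidden_size=128, blocks=blocks))
# RNN 24832
# GRU 74496
# LSTM 99328
```

For the same sizes, a GRU has three times and an LSTM four times as many recurrent parameters as a basic RNN. This is one reason the GRU is usually the lighter of the two gated options.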

How to Build and Train Your First RNN

This section walks through building and training a simple RNN using PyTorch. The example focuses on predicting the next character in a sequence of text, a task that demonstrates the core concepts of RNNs well.

1. Import the necessary libraries: import torch and import torch.nn as nn.
2. Define the RNN model as a class that inherits from nn.Module. The class contains an nn.RNN layer followed by a linear (nn.Linear) layer. The RNN layer processes the sequential input, and the linear layer maps each hidden state to the output space.
3. Set the sizes. If characters are fed in as one-hot vectors, the input size equals the vocabulary size. The hidden size sets the dimensionality of the hidden state. The output size should also equal the vocabulary size, giving one score per possible next character.
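The following minimal sketch implements the model class described above, using one-hot character inputs and batch-first tensors. The class name CharRNN and the sizes are illustrative. The sketch uses only standard PyTorch modules (nn.RNN and nn.Linear).

```python
import torch
import torch.nn as nn

class CharRNN(nn.Module):
    """Character-level model: nn.RNN over one-hot inputs, then a linear layer to vocab logits."""

    def __init__(self, vocab_size, hidden_size):
        super().__init__()
        # batch_first=True means inputs and outputs are shaped (batch, seq_len, features).
        self.rnn = nn.RNN(input_size=vocab_size, hidden_size=hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, vocab_size)

    def forward(self, x, h0=None):
        out, h_n = self.rnn(x, h0)   # out: (batch, seq_len, hidden_size)
        logits = self.fc(out)        # (batch, seq_len, vocab_size): one prediction per step
        return logits, h_n

vocab_size, hidden_size = 10, 32
model = CharRNN(vocab_size, hidden_size)
x = torch.zeros(2, 5, vocab_size)    # dummy batch: 2 sequences of 5 one-hot-sized vectors
logits, h_n = model(x)
print(logits.shape, h_n.shape)  # torch.Size([2, 5, 10]) torch.Size([1, 2, 32])
```

The final hidden state h_n keeps PyTorch’s (num_layers, batch, hidden_size) layout even when batch_first=True. Only the input and output tensors are batch-first.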

Then, prepare the data by converting the text into numerical representations. A simple approach is to build a mapping between each unique character and an integer index, then transform the text into sequences of these indices. For next-character prediction, each input sequence is paired with a target sequence: the same text shifted one character ahead.

Training iterates through the dataset, repeating these steps:

1. Feed sequences to the model.
2. Compute the loss, for example cross-entropy loss, which quantifies the difference between the model’s predicted distribution and the actual next characters.
3. Update the model’s parameters with an optimizer (e.g., Adam) to minimize that loss.

Regularization techniques such as dropout can help prevent overfitting. Monitoring the loss during training helps assess the model’s progress and detect problems, such as a loss that stops decreasing or diverges.
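The data preparation and training loop might look like the following minimal sketch. It trains on a tiny toy string, used as a single sequence, for a fixed number of steps. A compact version of the model is redefined so the snippet runs on its own. The corpus, hidden size, learning rate, and step count are arbitrary illustrative values, not tuned recommendations.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

text = "hello world, hello rnn"  # toy corpus (illustrative only)
chars = sorted(set(text))
char_to_idx = {c: i for i, c in enumerate(chars)}
idx_to_char = {i: c for c, i in char_to_idx.items()}
encoded = torch.tensor([char_to_idx[c] for c in text])

# Inputs are all characters but the last; targets are the same text shifted one ahead.
inputs = F.one_hot(encoded[:-1], num_classes=len(chars)).float().unsqueeze(0)  # (1, T, V)
targets = encoded[1:].unsqueeze(0)                                              # (1, T)

class CharRNN(nn.Module):
    def __init__(self, vocab_size, hidden_size):
        super().__init__()
        self.rnn = nn.RNN(vocab_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, vocab_size)

    def forward(self, x):
        out, _ = self.rnn(x)
        return self.fc(out)

torch.manual_seed(0)
model = CharRNN(len(chars), hidden_size=64)
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
loss_fn = nn.CrossEntropyLoss()

losses = []
for step in range(200):
    optimizer.zero_grad()
    logits = model(inputs)                                        # (1, T, V)
    # CrossEntropyLoss expects (N, C) logits and (N,) class indices.
    loss = loss_fn(logits.reshape(-1, len(chars)), targets.reshape(-1))
    loss.backward()
    optimizer.step()
    losses.append(loss.item())
    if step % 50 == 0:
        print(f"step {step}: loss {loss.item():.3f}")

# Greedy next-character predictions for every position of the training string.
with torch.no_grad():
    pred = model(inputs).argmax(dim=-1).squeeze(0)
print("".join(idx_to_char[i] for i in pred.tolist()))
```

On real data you would batch many shorter sequences and hold out a test set. You would also typically add dropout, as the text suggests. One option is the dropout argument of a multi-layer nn.RNN, which applies dropout between layers.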

Finally, evaluate the model. After training, its ability to predict the next character can be assessed on a held-out test set. Two useful metrics are:

- accuracy: how often the most likely predicted character is correct;
- perplexity: the exponential of the average cross-entropy, where lower is better.

This example uses a basic RNN. More complex tasks may call for advanced architectures such as LSTMs or GRUs. In PyTorch, these can be swapped in largely by replacing nn.RNN with nn.LSTM or nn.GRU (nn.LSTM also returns a cell state). The core concepts remain the same: processing sequential data, updating the hidden state, and making predictions based on learned patterns. Proper data preprocessing and careful hyperparameter tuning are crucial for successful training. This simple example provides a foundation for implementing more sophisticated RNN models.
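Both metrics can be computed from the model’s predicted next-character distributions. The sketch below uses plain Python and works on probabilities (for example, the softmax of the model’s logits) rather than raw logits. The function name is hypothetical. Perplexity is computed as the exponential of the average negative log-probability assigned to the true characters.

```python
import math

def accuracy_and_perplexity(probs, targets):
    """probs: per-step probability distributions (lists summing to 1);
    targets: true next-character indices. Returns (accuracy, perplexity)."""
    correct = 0
    total_nll = 0.0
    for p, t in zip(probs, targets):
        predicted = max(range(len(p)), key=lambda i: p[i])  # index of the most likely character
        correct += int(predicted == t)
        total_nll -= math.log(p[t])                          # negative log-prob of the true character
    n = len(targets)
    return correct / n, math.exp(total_nll / n)

# A fairly confident, correct model over a 4-character vocabulary.
acc, ppl = accuracy_and_perplexity([[0.7, 0.1, 0.1, 0.1], [0.1, 0.7, 0.1, 0.1]], [0, 1])
print(acc, round(ppl, 3))  # 1.0 1.429

# A model that predicts uniformly has perplexity equal to the vocabulary size.
_, ppl_uniform = accuracy_and_perplexity([[0.25] * 4] * 3, [0, 1, 2])
print(round(ppl_uniform, 6))  # 4.0
```

A model that spreads its probability evenly over V characters has a perplexity of exactly V. This gives a useful baseline: a trained model should land well below the vocabulary size.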

Overcoming Challenges in RNN Training

Training RNNs presents challenges that are especially pronounced compared with other neural network architectures. One major hurdle is the vanishing gradient problem. During backpropagation through time (BPTT), the gradient flowing back from a later time step is multiplied by factors from the recurrent weight matrix and the activation function’s derivative once for every step it passes through. As a result, it can shrink exponentially with the number of time steps. This makes it difficult for the RNN to learn long-term dependencies. The network struggles to “remember” information from early time steps when making predictions later on, which limits its ability to capture context over extended sequences.

The exploding gradient problem is the opposite extreme: gradients can grow exponentially large during training, leading to instability and numerical overflow. This shows up as erratic weight updates, or as a loss that suddenly spikes or becomes NaN, and it can prevent the RNN from converging to a good solution. Both vanishing and exploding gradients stem from the same source. An RNN applies the same weight matrix at every time step, so backpropagation multiplies the gradient by roughly the same factor over and over. Factors smaller than one shrink it toward zero, and factors larger than one make it blow up.
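The exponential shrinking and growth can be demonstrated numerically. The sketch below simplifies to a linear RNN (no activation) whose recurrent matrix is a scaled orthogonal matrix. Each backward step then multiplies the gradient norm by exactly the scale factor. This is an idealized setup chosen to isolate the effect. With tanh, each step also multiplies by derivatives no larger than 1, which can only push gradients further toward vanishing.

```python
import numpy as np

def gradient_norm_through_time(scale, steps, size=8, seed=0):
    """Norm of a gradient after backpropagating through `steps` steps of a linear RNN
    h_t = W h_{t-1}, with W = scale * (random orthogonal matrix).
    Each backward step multiplies by W^T, so the norm scales like scale**steps."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.normal(size=(size, size)))  # orthogonal: preserves vector norms
    W = scale * Q
    grad = np.ones(size) / np.sqrt(size)                 # unit-norm gradient at the last step
    for _ in range(steps):
        grad = W.T @ grad                                # one step of backpropagation through time
    return np.linalg.norm(grad)

for scale in (0.9, 1.0, 1.1):
    print(scale, gradient_norm_through_time(scale, steps=50))
```

After 50 steps, the three norms come out at about 0.005, 1, and 117 (that is, 0.9**50, 1.0, and 1.1**50). A 10 percent change in the per-step factor separates a vanishing gradient from an exploding one.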

Fortunately, several techniques mitigate these issues:

- Gradient clipping is a common remedy for exploding gradients. It rescales gradients whose norm exceeds a chosen threshold, preventing excessively large updates, but it does not help with vanishing gradients.
- Careful initialization of the recurrent weights, for example with orthogonal matrices, can keep gradients from exploding or vanishing early in training.
- Bounded activation functions such as the hyperbolic tangent (tanh) keep hidden-state values from growing without limit. However, their derivatives, which are at most 1, can themselves contribute to vanishing gradients.
- Most importantly, gated architectures like LSTMs and GRUs are specifically designed to handle the vanishing gradient problem better than basic RNNs. Their gating mechanisms regulate information flow and preserve long-term dependencies, improving their capacity to handle lengthy sequences and complex patterns.
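Gradient clipping by global norm is simple enough to write out. This NumPy sketch (the function name is ours) rescales all gradients together when their combined L2 norm exceeds a threshold, which preserves the update direction. In PyTorch, the built-in torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm) does this in place. It is typically called between loss.backward() and optimizer.step().

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their combined L2 norm is at most max_norm.
    The direction is preserved; gradients already below the threshold are left unchanged."""
    total = np.sqrt(sum(float(np.sum(g ** 2)) for g in grads))
    if total > max_norm:
        factor = max_norm / total
        grads = [g * factor for g in grads]
    return grads, total

grads = [np.array([3.0, 4.0]), np.array([12.0])]   # combined norm = sqrt(9 + 16 + 144) = 13
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
print(norm_before, [g.round(4).tolist() for g in clipped])  # 13.0 [[0.2308, 0.3077], [0.9231]]
```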

Advanced RNN Architectures and Applications

Beyond the basic RNN, LSTM, and GRU cells, RNNs can be arranged into more sophisticated designs. Bidirectional RNNs process a sequence in both the forward and backward directions with two separate recurrent layers and combine their hidden states. The representation at each time step therefore reflects both past and future context. This is particularly useful in natural language processing, where understanding the entire sentence is crucial for accurate interpretation. In sentiment analysis, for example, a bidirectional RNN can interpret a word in light of both the words before it and those after it, which often improves accuracy. The trade-off is that the whole sequence must be available before processing. Bidirectional RNNs are therefore not suitable for real-time tasks such as forecasting the next value in a stream.
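The bidirectional idea can be sketched in a few lines of NumPy. The sketch uses two independent tanh RNNs (one per direction) without biases, and the helper names are illustrative. The backward pass runs over the reversed sequence, and its states are flipped back so that row t of the result lines up with input t. In PyTorch, passing bidirectional=True to nn.RNN, nn.LSTM, or nn.GRU gives the same structure and doubles the output feature size.

```python
import numpy as np

def rnn_states(xs, W_xh, W_hh):
    """Hidden states of a simple tanh RNN (no bias) over xs of shape (T, I)."""
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in xs:
        h = np.tanh(W_xh @ x_t + W_hh @ h)
        states.append(h)
    return np.array(states)

def bidirectional_states(xs, fwd, bwd):
    """Concatenate a left-to-right pass with a right-to-left pass (separate weights).
    Row t summarizes x_0..x_t (forward half) and x_t..x_{T-1} (backward half)."""
    h_fwd = rnn_states(xs, *fwd)
    h_bwd = rnn_states(xs[::-1], *bwd)[::-1]   # run on reversed input, then re-align in time
    return np.concatenate([h_fwd, h_bwd], axis=1)

rng = np.random.default_rng(0)
T, I, H = 6, 3, 4
fwd = (rng.normal(scale=0.5, size=(H, I)), rng.normal(scale=0.5, size=(H, H)))
bwd = (rng.normal(scale=0.5, size=(H, I)), rng.normal(scale=0.5, size=(H, H)))
xs = rng.normal(size=(T, I))
out = bidirectional_states(xs, fwd, bwd)
print(out.shape)  # (6, 8)
```

In the first row, the forward half cannot see the last input but the backward half can. That is exactly the “future context” a bidirectional model adds.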

Encoder-decoder models are another powerful class of RNN architectures. They consist of two RNNs:

- an encoder that compresses the input sequence into a fixed-length vector, typically its final hidden state;
- a decoder that generates the output sequence from this vector, one element at a time.

In machine translation, for example, the encoder processes the source-language sentence and the decoder generates the translation in the target language. The decoder keeps generating until it emits an end-of-sequence token. As a result, these sequence-to-sequence (seq2seq) models can map input sequences to output sequences of different lengths, which matters in translation, where a sentence and its translation often differ in length. They are also commonly used in chatbots and other natural language generation tasks. One limitation is that squeezing a long input into a single fixed-length vector can lose information. This is one motivation for the attention mechanisms discussed later.
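The encoder-decoder flow can be sketched with two small NumPy RNNs. The weights below are random and untrained, so the generated tokens are meaningless; the point is the structure. The embedding table, the special token ids BOS and EOS, the sizes, and the greedy decoding strategy are all illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
V, E, H = 12, 8, 16             # vocab size, embedding size, hidden size (arbitrary)
BOS, EOS = 0, 1                 # hypothetical start- and end-of-sequence token ids
emb = rng.normal(scale=0.5, size=(V, E))
enc_W = (rng.normal(scale=0.3, size=(H, E)), rng.normal(scale=0.3, size=(H, H)))
dec_W = (rng.normal(scale=0.3, size=(H, E)), rng.normal(scale=0.3, size=(H, H)))
W_out = rng.normal(scale=0.3, size=(V, H))

def step(W, x, h):
    """One tanh RNN step with weights W = (W_xh, W_hh)."""
    W_xh, W_hh = W
    return np.tanh(W_xh @ x + W_hh @ h)

def encode(src_ids):
    """Compress a source sequence of any length into one fixed-size vector (final hidden state)."""
    h = np.zeros(H)
    for tok in src_ids:
        h = step(enc_W, emb[tok], h)
    return h

def greedy_decode(context, max_len=10):
    """Generate tokens one at a time from the context vector until EOS or max_len.
    The output length does not depend on the input length."""
    h, tok, out = context, BOS, []
    for _ in range(max_len):
        h = step(dec_W, emb[tok], h)
        tok = int(np.argmax(W_out @ h))   # pick the highest-scoring next token
        if tok == EOS:
            break
        out.append(tok)
    return out

for src in ([5, 6, 7], [5, 6, 7, 8, 9, 10, 11]):
    ctx = encode(src)
    print(len(src), ctx.shape, greedy_decode(ctx))
```

Source sequences of length 3 and 7 both produce a context vector of shape (16,). The decoder’s output length is set by when it emits EOS (or hits max_len), not by the input length.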

These architectures extend RNNs to diverse applications. In speech recognition, RNNs transcribe audio into text by modeling temporal dependencies in audio signals. In image captioning, which generates descriptive text for images, a convolutional network typically extracts spatial features from the image and an RNN decoder turns those features into a coherent sentence. Continued advances in these architectures, together with ongoing research into new memory mechanisms and training techniques, promise to further expand the applications and capabilities of RNNs.

Comparing RNNs with Other Deep Learning Models

RNNs hold a distinctive position in the deep learning landscape. Unlike feedforward networks, they are built to process sequential data, which makes them a natural fit for time series, text, and speech. Other architectures offer compelling alternatives depending on the application. Convolutional Neural Networks (CNNs), for instance, shine in image processing and other tasks involving spatial relationships, using convolutional filters to extract local features from grid-like data. CNNs can also be applied to sequences using one-dimensional convolutions over time, but each filter sees only a fixed-size window. An RNN’s hidden state, by contrast, can in principle carry information across the entire sequence. This is one reason RNNs became a popular choice in natural language processing, where word order is critical.

Transformers are a more recent advance in deep learning. They use an attention mechanism that relates every position in a sequence to every other position directly, so the whole sequence can be processed at once rather than one step at a time. Because this computation parallelizes well on modern hardware, transformers are usually much faster to train than RNNs on long sequences. They also tend to capture long-range dependencies more effectively than even LSTMs or GRUs.

Although transformers have overtaken RNNs on many NLP tasks, RNNs still have advantages in some situations. The cost of standard attention grows quadratically with sequence length. An RNN, by contrast, processes each new element with a fixed amount of computation and memory. This can make RNNs more efficient for streaming data, short sequences, or resource-constrained settings. The right architecture depends on the nature of the data and the task, with computational cost and training time also factoring into the decision.

The choice between an RNN and other deep learning models depends on several factors. RNNs remain a reasonable choice for sequential data with temporal structure, particularly when sequences are short, data arrives as a stream, or resources are tight. However, RNNs struggle with very long sequences, and their step-by-step training is hard to parallelize. For these reasons, many researchers and practitioners prefer transformers for tasks like machine translation, while CNNs remain the dominant architecture for image-based tasks. Each architecture has distinct strengths and weaknesses, so the specific problem should drive the choice, balancing accuracy against efficient use of resources.

The Future of RNNs and Emerging Trends

Research on RNNs continues to evolve. One line of work explores new memory mechanisms to improve how RNNs handle long-range dependencies. Architectures such as the Neural Turing Machine and the Differentiable Neural Computer pair a recurrent controller with an external, differentiable memory that it can read from and write to. The aim is to store information for longer than a fixed-size hidden state allows, so that networks can process longer sequences and retain information over extended periods. Work on more efficient training algorithms also contributes, making it feasible to train larger and more complex RNNs for increasingly challenging applications.

New architectures continue to push the boundaries of what RNNs can achieve. Researchers are exploring hybrid models that combine RNNs with other architectures, such as CNNs and transformers. Such hybrids can improve performance on specific tasks by drawing on the strengths of each component.

Attention mechanisms have been especially influential. Attention was first introduced for RNN-based encoder-decoder models in machine translation and was later made the central building block of transformers. At each output step, it lets the decoder focus on the most relevant parts of the input sequence instead of relying on a single fixed-length summary. This improves performance in tasks like machine translation and text summarization.

RNNs are also being applied in new domains, including robotics, drug discovery, and personalized medicine. Their ability to model sequential data suits problems in which sequential or temporal structure plays a crucial role.
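Attention over an RNN encoder’s hidden states can be sketched as follows. The sketch scores each encoder state against the current decoder state with a simple dot product; additive scoring is a common alternative. The tiny hand-picked vectors are illustrative only. At every output step, the decoder gets a fresh weighted average of all encoder states rather than a single fixed-length summary.

```python
import numpy as np

def softmax(a):
    e = np.exp(a - a.max())   # subtract the max for numerical stability
    return e / e.sum()

def dot_product_attention(decoder_state, encoder_states):
    """Score each encoder hidden state against the current decoder state, normalize the
    scores into weights, and return the weighted sum (context vector) and the weights.
    encoder_states: (T, H); decoder_state: (H,)."""
    scores = encoder_states @ decoder_state   # (T,) one score per input position
    weights = softmax(scores)                 # non-negative, sums to 1
    context = weights @ encoder_states        # (H,) focused summary of the input
    return context, weights

encoder_states = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
decoder_state = np.array([5.0, 0.0])
context, weights = dot_product_attention(decoder_state, encoder_states)
print(weights.round(3), context.round(3))
```

The weights come out at roughly 0.498, 0.003, and 0.498. The two encoder states aligned with the decoder state dominate the context vector, while the orthogonal one is almost ignored.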

RNNs remain relevant in the broader deep learning landscape. Transformers have become the dominant choice for many natural language processing tasks, but RNNs are still a useful and versatile tool for sequential data. Their conceptually simple architecture and adaptability to various problem domains keep them important in research and practical applications. Further progress in memory mechanisms, training techniques, and hybrid architectures may extend their capabilities, and new applications in diverse fields will continue to drive innovation in RNN research.