Understanding Kubernetes Fundamentals

Kubernetes, often abbreviated as K8s, is a container orchestration system. It automates the deployment, scaling, and management of containerized applications across a cluster of machines, which lets developers focus on code rather than infrastructure. Key concepts include pods, the smallest deployable units; deployments, which manage application versions; services, which expose applications; and namespaces, which organize resources. Understanding these foundational elements is a prerequisite for the architecture described in the rest of this article.

The architecture is built on a handful of core objects. Pods group one or more containers that share resources. Deployments keep the desired number of pod replicas running and manage application updates. Services provide stable network access to pods. Namespaces partition resources logically, which improves organization and gives access control and quotas a scope to apply to. Together, these objects form the basis for effective resource management and application deployment.

The architecture’s modular design offers flexibility and scalability, and it integrates with a wide range of tools and technologies. Understanding it is essential for anyone who builds and manages highly available, fault-tolerant containerized applications at scale. The following sections look at the control plane and the data plane in turn.

The Control Plane: Orchestrating the Kubernetes Cluster

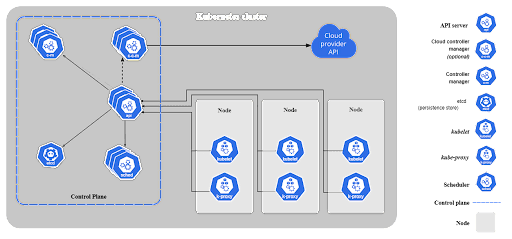

The Kubernetes control plane acts as the brain of the cluster, managing and orchestrating all cluster resources. It comprises several key components working in concert. The etcd database is the persistent, consistent key-value store that holds the complete state of the cluster. Every change to cluster state is recorded there, which makes etcd the source of truth for the other components.

The kube-apiserver is the single point of access for all communication with the control plane. All requests to manage the cluster (deployments, scaling, or configuration changes) pass through it. It authenticates, authorizes, and validates each request before persisting the result to etcd. The kube-scheduler assigns newly created pods to nodes, considering factors such as resource availability and placement constraints. This helps use cluster resources efficiently and spread workloads across nodes. The kube-controller-manager runs the controllers that maintain the desired state of the cluster: they continuously watch resources and take corrective action to bring the actual state in line with the declared configuration.
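The scheduling step described above can be pictured as "filter, then score". The following is a minimal sketch under simplifying assumptions, not the actual kube-scheduler algorithm: the `Node` and `PodRequest` types and the scoring rule (prefer the node with the most resources left over) are hypothetical and chosen only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node fits."""
    # Filtering: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending

    # Scoring (hypothetical rule): prefer the node with the most CPU,
    # then the most memory, left over after placing the pod.
    best = max(
        feasible,
        key=lambda n: (
            n.free_cpu_millicores - pod.cpu_millicores,
            n.free_memory_mib - pod.memory_mib,
        ),
    )
    return best.name


nodes = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millicores=4000, free_memory_mib=512),
]
print(pick_node(PodRequest("web", cpu_millicores=1000, memory_mib=1024), nodes))
```

Here `node-a` lacks CPU and `node-c` lacks memory, so only `node-b` survives the filter. The real scheduler applies many more criteria, such as affinity rules and taints.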

Understanding how these components interact is critical to understanding a Kubernetes cluster. The etcd database provides persistent storage, the kube-apiserver handles communication and authorization, the kube-scheduler places pods on nodes, and the kube-controller-manager maintains the desired state. Together, they form the control plane that orchestrates the entire cluster and makes it scalable, automated, and resilient.
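The idea of "maintaining the desired state" is easiest to see as a reconciliation loop: compare what should exist with what does exist, then act on the difference. The sketch below is an illustration of that pattern only; the `reconcile` function and its string actions are hypothetical and do not mirror any real controller code.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions needed to move the actual state toward the desired state."""
    actions = []
    if len(running_pods) < desired_replicas:
        # Too few pods: request one creation per missing replica.
        for _ in range(desired_replicas - len(running_pods)):
            actions.append("create")
    elif len(running_pods) > desired_replicas:
        # Too many pods: delete the surplus (here, simply the last ones listed).
        for name in running_pods[desired_replicas:]:
            actions.append(f"delete {name}")
    return actions


print(reconcile(3, ["web-1"]))                    # two pods are missing
print(reconcile(1, ["web-1", "web-2", "web-3"]))  # two pods are surplus
print(reconcile(2, ["web-1", "web-2"]))           # nothing to do
```

A real controller runs this comparison repeatedly, triggered by changes it observes through the API server, rather than once.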

The Data Plane: Where Containers Run

The Kubernetes data plane comprises the worker nodes, the physical or virtual machines where containers actually execute. Each worker node runs an agent called the kubelet, which communicates continuously with the control plane. It receives the specifications of the pods assigned to its node, starts their containers through the node’s container runtime, monitors their health, and reports status back. In this way the kubelet maintains the desired state of the workloads on its node by managing container lifecycles and health.

Another component that typically runs on each worker node is kube-proxy. This network proxy implements part of the Kubernetes Service abstraction: it watches services and their backing pods and maintains the node’s network rules so that traffic sent to a service’s stable virtual IP is forwarded to one of the matching pods. Direct pod-to-pod connectivity is provided by the cluster’s network plugin rather than by kube-proxy, and name-based service discovery is handled by cluster DNS. Because traffic to services passes through these rules, kube-proxy affects the scalability and performance of applications running in the cluster.
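To make the forwarding role concrete, here is a toy model of a table that maps a service address to its backing pod addresses and picks one per connection. It is a sketch under stated assumptions: the `ServiceTable` class, the addresses, and the round-robin choice are all hypothetical, and the real kube-proxy typically programs kernel forwarding rules (for example in its iptables mode) instead of handling traffic in application code.

```python
class ServiceTable:
    """Toy mapping from a service address to its backing pod addresses."""

    def __init__(self):
        self._endpoints = {}   # service address -> list of pod addresses
        self._next_index = {}  # service address -> index of the next backend

    def set_endpoints(self, service_addr, pod_addrs):
        # Called whenever the set of pods behind a service changes.
        self._endpoints[service_addr] = list(pod_addrs)
        self._next_index[service_addr] = 0

    def route(self, service_addr):
        """Pick the backend for the next connection (simple round robin)."""
        backends = self._endpoints.get(service_addr, [])
        if not backends:
            raise LookupError(f"no endpoints for {service_addr}")
        index = self._next_index[service_addr] % len(backends)
        self._next_index[service_addr] = index + 1
        return backends[index]


table = ServiceTable()
table.set_endpoints("10.96.0.10:80", ["10.244.1.5:8080", "10.244.2.7:8080"])
for _ in range(3):
    print(table.route("10.96.0.10:80"))
```

The client only ever sees the service address; which pod answers can change from one connection to the next.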

The interplay between the control plane and the data plane is what makes a Kubernetes cluster function. The control plane orchestrates the deployment and management of applications, while the data plane provides the execution environment. The kubelet and kube-proxy on the worker nodes translate the high-level instructions of the control plane into running containers and network configuration. The efficiency and resilience of this interaction determine how reliably and scalably applications run in the cluster.

Pods: The Fundamental Building Blocks of Kubernetes

Pods are the smallest deployable units in a Kubernetes cluster. A pod encapsulates one or more containers that share a network namespace and can share storage volumes, which lets containers in the same pod communicate efficiently. Pods are ephemeral: Kubernetes creates and destroys them based on application needs and desired state. A pod’s specification, typically written in YAML, defines its configuration, including the containers it runs, resource requests, environment variables, and volume mounts. This ensures consistent and predictable behavior across deployments.
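As an illustration of what such a specification contains, the sketch below builds a pod manifest as a Python dictionary and prints it as JSON, a format `kubectl` accepts alongside YAML. The field names follow the Pod API, but the pod name, image tag, environment variable, and volume are hypothetical examples, and the helper `undeclared_volume_mounts` is only a small sanity check written for this sketch.

```python
import json

# Hypothetical pod: one container with resource requests and limits,
# an environment variable, and a scratch volume mounted at /cache.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.27",
                "ports": [{"containerPort": 80}],
                "env": [{"name": "LOG_LEVEL", "value": "info"}],
                "resources": {
                    "requests": {"cpu": "100m", "memory": "128Mi"},
                    "limits": {"cpu": "500m", "memory": "256Mi"},
                },
                "volumeMounts": [{"name": "cache", "mountPath": "/cache"}],
            }
        ],
        "volumes": [{"name": "cache", "emptyDir": {}}],
    },
}


def undeclared_volume_mounts(manifest):
    """Return names of volume mounts that have no matching pod-level volume."""
    declared = {v["name"] for v in manifest["spec"].get("volumes", [])}
    missing = []
    for container in manifest["spec"]["containers"]:
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared:
                missing.append(mount["name"])
    return missing


print(json.dumps(pod_manifest, indent=2))
print("undeclared mounts:", undeclared_volume_mounts(pod_manifest))
```

In practice pods are rarely created directly like this; they are usually produced from the pod template of a higher-level object such as a deployment.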

Because containers in a pod share a network namespace, they can reach each other over localhost without extra networking configuration. Shared volumes likewise give them a convenient way to exchange data and configuration files. The pod lifecycle is managed by Kubernetes in response to changes in the desired state. When a container in a pod fails, the kubelet restarts it on the same node according to the pod’s restart policy. A pod itself is never moved to another node; if a pod or its node is lost, a controller such as a deployment creates a replacement pod, which the scheduler places on a healthy node. This self-healing behavior is vital for building robust and reliable applications.

Creating and managing pods involves writing their specifications and applying them through the Kubernetes API or command-line tools. The specification includes details such as the container image, resource limits (CPU and memory), environment variables, and ports. Kubernetes also exposes pod health and resource usage, which enables proactive management and optimization. Properly configured pods are the foundation for scaling applications and keeping them available. Advanced features such as init containers and sidecars extend pod capabilities and support more complex application deployments.

Deployments: Managing Application Versions in Kubernetes

Deployments offer a declarative method for managing applications. They provide a mechanism for keeping replicas available, scaling, and performing updates and rollbacks. A deployment defines the desired state of your application, and Kubernetes works continuously to maintain that state. This removes much of the manual effort that managing application versions otherwise requires.
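A deployment’s desired state can be sketched as a manifest with a replica count, a label selector, and a pod template. The example below builds one as a Python dictionary; the field names follow the `apps/v1` Deployment API, while the helper functions, the name `web`, and the image tag are hypothetical choices for illustration.

```python
import json


def make_deployment(name, image, replicas):
    """Build a minimal Deployment manifest (illustrative values only)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector tells the deployment which pods belong to it.
            "selector": {"matchLabels": dict(labels)},
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def selector_matches_template(deployment):
    """Check that every selector label is present on the pod template."""
    selector = deployment["spec"]["selector"]["matchLabels"]
    template_labels = deployment["spec"]["template"]["metadata"]["labels"]
    return all(template_labels.get(k) == v for k, v in selector.items())


deployment = make_deployment("web", "nginx:1.27", replicas=3)
print(json.dumps(deployment, indent=2))
print("selector matches template:", selector_matches_template(deployment))
```

Changing `image` in the template is what triggers a new rollout, while changing `replicas` only scales the current version.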

The power of deployments lies in their ability to handle rolling updates. Instead of abruptly replacing all instances of your application, a deployment replaces them gradually. New versions are rolled out incrementally, which minimizes downtime and keeps the user experience smooth. Should issues arise with the new version, a rollback reverts to the previous stable version just as directly. This is vital for maintaining application stability and user satisfaction.
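The gradual transition can be illustrated with a small simulation bounded by two limits: how many extra pods may exist during the update (surge) and how many may be missing (unavailable). This is a simplified sketch, not the deployment controller’s actual logic; it assumes absolute numbers rather than percentages and that every new pod becomes ready immediately.

```python
def rolling_update(replicas, max_surge, max_unavailable):
    """Simulate a rollout and return the (old_pods, new_pods) count after each step."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be zero")

    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Add new pods without exceeding replicas + max_surge pods in total.
        can_add = min(replicas - new, replicas + max_surge - (old + new))
        new += max(can_add, 0)
        # Remove old pods without dropping below replicas - max_unavailable pods.
        can_remove = min(old, (old + new) - (replicas - max_unavailable))
        old -= max(can_remove, 0)
        steps.append((old, new))
    return steps


for old, new in rolling_update(replicas=4, max_surge=1, max_unavailable=1):
    print(f"old={old} new={new} total={old + new}")
```

Throughout the simulated rollout the total number of pods stays between `replicas - max_unavailable` and `replicas + max_surge`, which is the property that keeps the application serving traffic while versions change.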

Scaling applications is another key function of deployments. By specifying the desired number of replicas, you can scale your application up or down to meet changing demand. Kubernetes creates or deletes pods to match the specified replica count, which helps balance resource utilization against application performance.

Kubernetes Services: Exposing Applications

Kubernetes services provide a stable networking layer for accessing pods within a cluster. They abstract away the constantly changing IP addresses of pods and offer a consistent endpoint for applications, so applications remain reachable even as pods are created, updated, or deleted. Understanding the service types is essential for effective application deployment and management.

Several service types cater to different needs. A ClusterIP service, the default, gets a cluster-internal IP address that is reachable only from within the cluster. A NodePort service exposes the application on a static port on each node’s IP address, allowing external access. A LoadBalancer service, which relies on the cloud provider or another load balancer implementation, provisions an external load balancer that distributes traffic to the service’s pods. An ExternalName service maps the service to an external DNS name. Ingress is not a service type but a separate resource: implemented by an ingress controller, it provides an HTTP(S) entry point for external traffic with host- and path-based routing in front of services. Choosing among these depends on the application’s accessibility requirements.

Consider a simple example: a web application deployed as a set of pods. A LoadBalancer service would suit exposing this application to the internet, distributing traffic across healthy pods. The service definition selects the target pods by label, and the load balancer manages traffic distribution. For services used only inside the cluster, a ClusterIP service is usually sufficient. Efficient service design is a critical aspect of any production-ready Kubernetes deployment, because it keeps your application both accessible and resilient.
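The way a service definition "specifies the target pods" is a label selector. The sketch below shows a LoadBalancer service manifest as a Python dictionary and a hypothetical helper, `select_endpoints`, that picks the ready pods whose labels match the selector. The pod records, IP addresses, and port numbers are made up for illustration, and real endpoint tracking is done by the control plane, not by code like this.

```python
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "type": "LoadBalancer",
        "selector": {"app": "web"},
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}

# Hypothetical snapshot of pods in the cluster.
pods = [
    {"name": "web-1", "ip": "10.244.1.5", "labels": {"app": "web"}, "ready": True},
    {"name": "web-2", "ip": "10.244.2.7", "labels": {"app": "web"}, "ready": False},
    {"name": "db-1", "ip": "10.244.3.9", "labels": {"app": "db"}, "ready": True},
]


def select_endpoints(service, pods):
    """Return 'ip:port' for each ready pod whose labels match the service selector."""
    selector = service["spec"]["selector"]
    target_port = service["spec"]["ports"][0]["targetPort"]
    return [
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if pod["ready"]
        and all(pod["labels"].get(k) == v for k, v in selector.items())
    ]


print(select_endpoints(service, pods))
```

Only `web-1` qualifies: `web-2` matches the selector but is not ready, and `db-1` carries a different label.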

Networking in Kubernetes: Connecting Pods

Understanding Kubernetes networking is crucial for application communication. Pods need to interact both with each other and with the outside world. This is achieved through a networking layer implemented by a plugin that conforms to the Container Network Interface (CNI) specification. A CNI plugin creates and manages the network interfaces of containers and provides connectivity within the cluster. Different CNI plugins offer different features, allowing administrators to tailor the network to specific requirements, and the choice of plugin affects the performance and scalability of the cluster.

Service discovery is another essential aspect of Kubernetes networking. Services provide a stable, abstract endpoint for accessing pods. Even as pods are created and destroyed, the service remains reachable through its consistent IP address and port, so developers do not need to track individual pod IP addresses. Cluster DNS maps each service name to the service’s stable cluster IP, and traffic to that IP is then forwarded to the pods that back the service. Pods can therefore address each other by service name instead of IP address, which improves portability and maintainability.
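Name-based discovery follows a predictable naming pattern: a service is reachable at `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain commonly defaults to `cluster.local`. The sketch below builds such names and resolves them against a hypothetical in-memory table that stands in for cluster DNS; the service names and IP addresses are invented for the example.

```python
def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Build the fully qualified DNS name of a service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# Hypothetical records standing in for what cluster DNS would serve.
dns_records = {
    service_dns_name("web", "prod"): "10.96.12.34",
    service_dns_name("db", "prod"): "10.96.56.78",
}


def resolve(name):
    """Return the cluster IP for a service name, or None if it is unknown."""
    return dns_records.get(name)


name = service_dns_name("web", "prod")
print(name, "->", resolve(name))
```

A client in the `prod` namespace could keep using the name `web` while the pods behind it are replaced, because only the records behind the name change.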

Network policies provide a crucial layer of security within a Kubernetes cluster. They let administrators define fine-grained rules that regulate how pods communicate with each other and with external networks, which is important for isolating sensitive applications and preventing unauthorized access. Network policies select pods by label, enabling granular control over network traffic. They are enforced by the network plugin, so they take effect only if the installed CNI plugin supports them. Implementing well-defined network policies is a best practice for securing applications deployed in Kubernetes.
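A small example may help show how a policy expresses "who may talk to whom". The manifest below follows the `networking.k8s.io/v1` NetworkPolicy shape and allows ingress to pods labeled `app: db` only from pods labeled `app: api`. The evaluation function is a deliberately simplified sketch: it handles only `podSelector` peers with `matchLabels` and ignores namespaces, ports, and IP blocks, and all names and labels are hypothetical.

```python
policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "db-allow-api"},
    "spec": {
        "podSelector": {"matchLabels": {"app": "db"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {"from": [{"podSelector": {"matchLabels": {"app": "api"}}}]}
        ],
    },
}


def labels_match(selector_labels, pod_labels):
    """True if every selector label is present on the pod with the same value."""
    return all(pod_labels.get(k) == v for k, v in selector_labels.items())


def ingress_allowed(policy, source_labels, target_labels):
    """Simplified check of one policy for traffic from source pod to target pod."""
    spec = policy["spec"]
    if not labels_match(spec["podSelector"].get("matchLabels", {}), target_labels):
        # The policy does not select the target pod, so it imposes no restriction.
        return True
    for rule in spec.get("ingress", []):
        for peer in rule.get("from", []):
            if "podSelector" in peer and labels_match(
                peer["podSelector"].get("matchLabels", {}), source_labels
            ):
                return True
    return False


print(ingress_allowed(policy, {"app": "api"}, {"app": "db"}))     # allowed peer
print(ingress_allowed(policy, {"app": "web"}, {"app": "db"}))     # not listed
print(ingress_allowed(policy, {"app": "web"}, {"app": "cache"}))  # target not selected
```

The third case reflects that a pod not selected by any policy is not isolated by it.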

How to Design a Resilient Kubernetes Architecture

Designing a robust and scalable Kubernetes architecture requires careful consideration of several factors. High availability is paramount. Run multiple control plane nodes, typically an odd number so that etcd can keep a majority, to ensure continued operation if one node fails. Similarly, distribute worker nodes across availability zones or regions to limit the impact of a zonal or regional outage. Resource allocation must also be planned: use resource quotas and limits to prevent resource exhaustion and ensure fair sharing among applications. Finally, monitor resource usage, pod status, and application performance, and collect application logs, so that issues can be identified and resolved before they affect users.
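The advice to run multiple control plane nodes can be made quantitative. Assuming etcd members run on the control plane nodes and need a majority (quorum) to accept writes, the following sketch computes how many member failures a cluster of a given size can survive. The function names are hypothetical; the arithmetic is the standard majority rule.

```python
def quorum(members):
    """Smallest number of members that forms a majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def fault_tolerance(members):
    """Number of members that can fail while a majority remains."""
    return members - quorum(members)


for n in (1, 2, 3, 4, 5):
    print(f"members={n} quorum={quorum(n)} tolerated_failures={fault_tolerance(n)}")
```

The output shows why odd sizes are usually preferred: going from three to four members raises the quorum without increasing the number of failures that can be tolerated.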

Replication and scaling are fundamental to a resilient design. Deploy multiple replicas of your applications across multiple nodes to ensure high availability. Use horizontal pod autoscaling (HPA) to scale applications automatically based on resource utilization or custom metrics, so that capacity follows demand. Security matters just as much: implement network policies to control traffic flow within the cluster, limit access to sensitive resources, and reduce the attack surface. Regular security audits and vulnerability scans are essential practices, and network segmentation can isolate different parts of the cluster as a further layer of defense.
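The core of horizontal pod autoscaling is a proportional rule: the desired replica count is the current count scaled by the ratio of the observed metric to its target, rounded up. The sketch below applies that rule and clamps the result to configured bounds. It is a simplification that ignores the controller’s tolerance band and stabilization behaviour, and the parameter names and default bounds are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Compute ceil(current_replicas * current_metric / target_metric), clamped."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
# 4 replicas at 30% average CPU with a 60% target: scale in.
print(desired_replicas(4, current_metric=30, target_metric=60))
```

With a target of 60, four replicas running at 90 call for six replicas, while four replicas running at 30 can shrink to two.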

Effective monitoring and logging keep the cluster healthy and performant. A monitoring solution with real-time visibility into the cluster and its applications enables proactive identification and resolution of issues. Centralized logging, with logs aggregated and analyzed in one place, makes troubleshooting and performance analysis faster and gives insight into application behavior and system health. Disaster recovery planning is equally important: implement a plan that includes regular backups and a well-defined, tested process for restoring the cluster and its applications after a major outage. This planning minimizes downtime and supports business continuity. A carefully planned and implemented Kubernetes architecture delivers high availability, scalability, and resilience.