Understanding the Power of AWS Data Pipelines

Data pipelines built on AWS are a core part of modern data management. They offer scalability, reliability, and cost-effectiveness, and they handle large datasets efficiently, which makes them useful for businesses of all sizes. AWS data pipelines streamline complex processing tasks while preserving data integrity and availability. They support a wide range of applications, including Extract, Transform, Load (ETL) processes, data warehousing, and real-time data streaming. Businesses use them to turn raw data into actionable insights that support data-driven decisions.

Scalability is a key feature. Pipelines built from managed AWS services can adapt to growing data volumes and processing demands, which helps keep performance consistent during peak loads. Reliability comes from the redundancy and fault tolerance built into the underlying services, which guard against data loss and help maintain operational continuity. Cost-effectiveness stems from the pay-as-you-go pricing model: you pay only for the resources you consume. This makes AWS data pipelines an attractive alternative to on-premises solutions, which require significant upfront investment and ongoing maintenance.

Several use cases show how broadly AWS data pipelines apply. ETL processes benefit from automated extraction, transformation, and loading of data into data warehouses. Real-time data streaming use cases include processing sensor data, financial transactions, and social media feeds. Data warehousing applications consolidate data from diverse sources into a central repository for analysis and reporting. Understanding these capabilities is key to getting the most from cloud-based data processing.

Choosing the Right AWS Data Pipeline Service for Your Needs

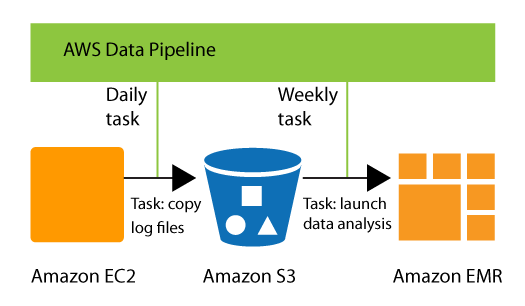

Building efficient and scalable AWS data pipelines requires careful consideration of several AWS services, each suited to different data characteristics and processing needs. Key players include AWS Glue, a serverless ETL service well suited to batch processing and data transformation; Amazon Kinesis, a real-time streaming service for high-velocity data; Amazon S3, which provides cost-effective object storage for data at rest; and Amazon EMR, a managed cluster platform for large-scale processing with frameworks such as Hadoop and Spark. The choice depends on factors like data volume, velocity, and complexity. For example, a low-volume, batch-oriented ETL process might rely on AWS Glue for its simplicity. Conversely, high-volume, real-time ingestion benefits from the speed and scalability of Amazon Kinesis, which can feed downstream services like Amazon Redshift for analytical processing.

Understanding the strengths and weaknesses of each service is crucial for building effective AWS data pipelines. AWS Glue is easy to use and serverless, which reduces operational overhead, but it might not be the best choice for extremely high-throughput, low-latency applications. Amazon Kinesis handles real-time streams well but requires more application development than AWS Glue. Amazon S3 provides robust, durable storage, but it is not a data processing service in itself. Amazon EMR offers a powerful environment for complex transformations, particularly large-scale batch processing with Hadoop or Spark, but involves more management overhead. Selecting the appropriate service demands a careful analysis of your pipeline's specific requirements so that the chosen combination balances efficiency and cost.

Practical examples illustrate the selection process. Imagine a large volume of sensor data arriving constantly: Amazon Kinesis is the natural choice because of its real-time capabilities. In contrast, a monthly reporting process over a relatively small dataset stored in an S3 bucket is a good fit for AWS Glue, which can load the results into a data warehouse like Amazon Redshift. For highly complex transformations that require distributed computing, Amazon EMR provides the necessary power and flexibility. Efficient AWS data pipelines are not built with a one-size-fits-all approach; they combine the strengths of each service so that the result is both effective and economically sound.
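The selection logic in these examples can be written down as a small decision helper. This is only a sketch of the rules of thumb described above: the `Workload` fields and the `suggest_service` function are hypothetical names invented for illustration, and a real decision would weigh cost, latency targets, and team experience as well.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Rough description of a data workload (fields are illustrative only)."""
    real_time: bool             # must records be processed as they arrive?
    needs_custom_cluster: bool  # e.g. heavy Hadoop/Spark jobs needing cluster control


def suggest_service(workload: Workload) -> str:
    """Map a workload to the service the article associates with it."""
    if workload.real_time:
        return "Amazon Kinesis"   # constant, high-velocity streams
    if workload.needs_custom_cluster:
        return "Amazon EMR"       # complex, distributed transformations
    return "AWS Glue"             # simple, batch-oriented ETL


print(suggest_service(Workload(real_time=True, needs_custom_cluster=False)))
print(suggest_service(Workload(real_time=False, needs_custom_cluster=False)))
```

The order of the checks encodes a priority: a real-time requirement dominates everything else, and Glue is treated as the default for batch work.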

Building a Simple Amazon Data Pipeline: A Step-by-Step Guide Using AWS Glue

This tutorial demonstrates building a basic ETL pipeline using AWS Glue, a fully managed extract, transform, load (ETL) service. We'll move data from a CSV file in Amazon S3 to an Amazon Redshift data warehouse, a common use case that shows how simple a pipeline on AWS can be. First, you'll need an AWS account and access to S3 and Redshift. Upload your CSV file to an S3 bucket and note the bucket name and file path. Next, open the AWS Glue console and create a new crawler to scan your S3 bucket. The crawler infers the schema of your CSV data and stores it as a table in the Glue Data Catalog. This metadata is crucial for the subsequent ETL process. Configure the crawler to run on a schedule or on demand, based on your needs. Ensure the IAM role assigned to the crawler has sufficient permissions to access your S3 bucket.
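The same crawler can be created from code instead of the console. The sketch below builds the arguments for the Glue `create_crawler` call and then starts one run. The crawler name, role ARN, database name, and S3 path are placeholders, and `glue_client` is assumed to be a `boto3.client("glue")` object passed in by the caller, so the functions themselves need no AWS credentials.

```python
def build_crawler_request(name, role_arn, database, s3_path, schedule=None):
    """Build keyword arguments for the Glue create_crawler API call."""
    request = {
        "Name": name,
        "Role": role_arn,            # IAM role the crawler assumes
        "DatabaseName": database,    # Data Catalog database for the tables
        "Targets": {"S3Targets": [{"Path": s3_path}]},
    }
    if schedule is not None:
        request["Schedule"] = schedule  # cron expression; omit to run on demand
    return request


def create_and_start_crawler(glue_client, request):
    """Create the crawler, then trigger one run.

    glue_client is expected to be boto3.client("glue"); it is injected so the
    function can also be exercised with a stub object.
    """
    glue_client.create_crawler(**request)
    glue_client.start_crawler(Name=request["Name"])


# Placeholder values: replace with your own names and ARNs.
request = build_crawler_request(
    name="sales-csv-crawler",
    role_arn="arn:aws:iam::123456789012:role/ExampleGlueCrawlerRole",
    database="sales_db",
    s3_path="s3://example-bucket/raw/sales/",
    schedule="cron(0 2 * * ? *)",  # daily at 02:00 UTC
)
print(request)
```

With boto3 installed and credentials configured, `create_and_start_crawler(boto3.client("glue"), request)` would issue the two API calls.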

After the crawler completes, create a new Glue job. You'll write a Glue script in Python (PySpark) to perform the ETL. The script reads data from your S3 bucket using the schema the crawler recorded, transforms it as needed (for example, by cleaning or filtering rows), and writes the result to your Redshift cluster. Configure the Glue job with an IAM role that has access to both S3 and Redshift, and specify the S3 location for input and the Redshift connection details for output. Test the job on sample data before processing your full dataset. AWS Glue writes job logs and metrics to Amazon CloudWatch, so you can track job progress and identify errors; review these logs regularly to catch issues early. Consider using job parameters to make your Glue job reusable across different datasets or transformations.
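To make the "cleaning or filtering" step concrete, here is a local, standard-library sketch of a transform. It is not a Glue script: in a real job the same logic would be expressed with PySpark DataFrame operations, and the column names (`order_id`, `amount`, `region`) are invented for the example.

```python
import csv
import io


def transform(csv_text, required=("order_id", "amount")):
    """Clean and filter rows: trim whitespace, drop incomplete rows, cast amount."""
    cleaned = []
    for raw_row in csv.DictReader(io.StringIO(csv_text)):
        row = {
            key.strip(): (value or "").strip()
            for key, value in raw_row.items()
            if key is not None  # ignore surplus fields beyond the header
        }
        if any(not row.get(column) for column in required):
            continue  # filter out rows missing a required value
        try:
            row["amount"] = float(row["amount"])
        except ValueError:
            continue  # filter out rows whose amount is not numeric
        cleaned.append(row)
    return cleaned


sample = (
    "order_id,amount,region\n"
    "1001, 19.99 ,eu\n"   # kept after trimming whitespace
    "1002,,us\n"          # dropped: amount is missing
    "1003,abc,us\n"       # dropped: amount is not a number
    "1004,5,apac\n"       # kept
)
rows = transform(sample)
print(rows)
```

Running the transform on a small sample like this is also a cheap way to test the logic before pointing the job at the full dataset.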

Managing your AWS data pipelines efficiently involves using the right tools and configurations. Optimize your Glue job by taking advantage of parallel processing, for example by adjusting the number of workers. In Redshift, choose distribution keys and sort keys that match your query patterns to improve query performance. Monitor key metrics such as job runtime, data throughput, and error rates, and adjust your configuration as needed. Amazon CloudWatch provides the monitoring tools to help you identify bottlenecks and areas for improvement. By proactively monitoring and optimizing your pipelines, you can keep data processing reliable and cost-effective.

Optimizing Your AWS Data Pipeline for Performance

Optimizing AWS data pipelines for performance requires a multifaceted approach. Design transformation steps for efficiency, and consider columnar formats like Parquet or ORC, which offer better compression and query performance than CSV. Use parallel processing, such as multiple EMR nodes or Glue workers, to handle large datasets concurrently and reduce overall processing time. Organize data in S3 with appropriate partitioning and compression so that downstream queries read only the data they need. Effective data organization significantly improves query performance in downstream processes.
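One common way to partition data in S3 is the Hive-style `key=value` layout, which query engines can use to skip irrelevant partitions. The helper below is a minimal sketch of that naming scheme; the prefix, date columns, and file name are assumptions chosen for illustration.

```python
from datetime import date


def partitioned_key(prefix, event_date, filename):
    """Build a Hive-style partitioned object key (year=/month=/day=)."""
    return (
        f"{prefix.strip('/')}/"
        f"year={event_date.year:04d}/"
        f"month={event_date.month:02d}/"
        f"day={event_date.day:02d}/"
        f"{filename}"
    )


key = partitioned_key("curated/sales", date(2024, 3, 7), "part-0000.snappy.parquet")
print(key)  # curated/sales/year=2024/month=03/day=07/part-0000.snappy.parquet
```

Partitioning by date suits queries that filter on time ranges; a dataset that is mostly filtered by another column would be partitioned on that column instead.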

Efficient data storage is equally important. Amazon S3 offers several storage classes with different pricing and performance characteristics, and matching the class to your access patterns helps both cost and performance. For frequently accessed data, use S3 Standard. For data with unknown or changing access patterns, S3 Intelligent-Tiering moves objects between access tiers automatically. For infrequently accessed data, S3 Standard-IA is often appropriate, and for archives, the S3 Glacier storage classes. Schema design also plays a critical role: well-defined schemas reduce processing overhead and improve query speed, and choosing between normalization and denormalization based on your query requirements helps the pipeline handle data efficiently. Caching intermediate results can speed up repetitive operations; a caching layer such as Redis can reduce the load on your data sources and improve response times.
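Storage-class choices can be automated with an S3 lifecycle configuration. The sketch below builds one that moves aging objects to Standard-IA and then to Glacier. The prefix and day counts are example values, and `tiering_lifecycle_config` is a hypothetical helper; the resulting dictionary has the shape accepted by the boto3 S3 call `put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=...)`.

```python
def tiering_lifecycle_config(prefix, ia_after_days=30, glacier_after_days=90):
    """Build a lifecycle configuration that moves aging objects to cheaper classes."""
    if ia_after_days < 30:
        # S3 does not transition objects to Standard-IA before they are 30 days old.
        raise ValueError("Standard-IA transition requires at least 30 days")
    if glacier_after_days <= ia_after_days:
        raise ValueError("the Glacier transition must come after the Standard-IA one")
    rule_id = "tier-" + prefix.strip("/").replace("/", "-")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},  # apply only to this key prefix
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


config = tiering_lifecycle_config("raw/sales/")
print(config)
```

The right day counts depend on how long the data stays "hot" in your workload, so treat 30 and 90 as starting points rather than recommendations.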

Advanced techniques can improve performance further. Data compression reduces storage costs and speeds up network transfers; algorithms like Snappy or Zstandard can shrink data volume considerably. Data deduplication removes redundant records, saving both storage space and processing time. Monitoring tools such as Amazon CloudWatch provide insight into pipeline performance so that you can identify and resolve bottlenecks proactively. Regular monitoring and analysis enable continuous optimization of AWS data pipelines, improving efficiency and reducing operational costs.
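The effect of deduplication and compression is easy to see on a small, repetitive dataset. This sketch uses only the Python standard library, so it substitutes gzip for Snappy or Zstandard (which need third-party packages); the sensor records are made-up sample data, and the actual savings depend entirely on how repetitive your data is.

```python
import gzip
import hashlib
import json


def deduplicate(records):
    """Drop exact duplicate records, keeping the first occurrence of each."""
    seen, unique = set(), []
    for record in records:
        # Serialize with sorted keys so key order does not affect the hash.
        canonical = json.dumps(record, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(canonical).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(record)
    return unique


# Sample data: the first two records are the same reading with keys reordered.
records = [
    {"sensor": "a", "value": 1},
    {"value": 1, "sensor": "a"},
    {"sensor": "b", "value": 2},
] * 100

unique = deduplicate(records)
raw = json.dumps(records).encode("utf-8")
compressed = gzip.compress(raw)  # gzip stands in for Snappy/Zstandard here
print(len(records), "->", len(unique), "records")
print(len(raw), "->", len(compressed), "bytes")
```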

Handling Errors and Monitoring Your AWS Data Pipeline

Effective error handling and monitoring are crucial for keeping AWS data pipelines reliable and performant. Amazon CloudWatch lets you track key metrics such as data throughput, latency, and resource utilization. CloudWatch alarms notify you when a metric crosses a threshold, so you can intervene before a small problem becomes a major disruption. Pipelines also benefit from a well-defined logging strategy that captures detailed information about processing events and errors. Detailed logs make it possible to identify patterns and root causes, which is essential for troubleshooting and for improving the robustness of the system.
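As an illustration of alarm setup, the sketch below builds the arguments for the CloudWatch `put_metric_alarm` call. The namespace `ExamplePipeline`, the metric `FailedRecords`, and the SNS topic ARN are hypothetical: they assume your pipeline publishes its own custom metric, which the article does not specify.

```python
def build_alarm_request(pipeline_name, sns_topic_arn, threshold=1):
    """Build keyword arguments for CloudWatch put_metric_alarm."""
    return {
        "AlarmName": f"{pipeline_name}-failed-records",
        "Namespace": "ExamplePipeline",   # hypothetical custom namespace
        "MetricName": "FailedRecords",    # hypothetical custom metric
        "Dimensions": [{"Name": "Pipeline", "Value": pipeline_name}],
        "Statistic": "Sum",
        "Period": 300,                    # evaluate over 5-minute windows
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # no data means no failures
        "AlarmActions": [sns_topic_arn],     # notify this SNS topic
    }


alarm = build_alarm_request(
    "sales-etl", "arn:aws:sns:us-east-1:123456789012:example-pipeline-alerts"
)
print(alarm)
```

With boto3, the dictionary would be passed as `boto3.client("cloudwatch").put_metric_alarm(**alarm)`.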

Debugging AWS data pipelines starts with the detailed logs generated by the services in the pipeline. Analyzing these logs helps pinpoint the location and nature of errors. AWS X-Ray adds distributed tracing for instrumented components, showing how requests flow between them and where time is spent. With X-Ray, developers can see how different components interact and quickly locate problematic areas, which is especially useful in complex pipelines that involve multiple services. Together, CloudWatch and X-Ray give developers a comprehensive monitoring and troubleshooting toolkit.

Strategies for handling errors include retry mechanisms for transient failures, which let the pipeline recover from temporary issues without significant interruption. Records that still fail can be redirected automatically to a separate error queue (often called a dead-letter queue), where they can be analyzed and reprocessed later. This approach prevents data loss and reduces manual intervention. Combined with effective monitoring, these error management strategies help keep an AWS data pipeline stable, efficient, and reliable over the long term.
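The retry and error-queue pattern can be sketched in a few lines of plain Python. Everything here is illustrative: `TransientError`, `process_with_retry`, and the in-memory `error_queue` list are stand-ins, and in a deployed pipeline the error queue would typically be a managed queue or an S3 prefix rather than a list.

```python
import time


class TransientError(Exception):
    """A failure worth retrying, such as a timeout or throttling response."""


def process_with_retry(records, handler, max_attempts=3, base_delay=0.01):
    """Process records; retry transient failures and park the rest for analysis."""
    processed, error_queue = [], []
    for record in records:
        for attempt in range(1, max_attempts + 1):
            try:
                processed.append(handler(record))
                break
            except TransientError as exc:
                if attempt == max_attempts:
                    error_queue.append({"record": record, "error": str(exc)})
                else:
                    # Exponential backoff: base_delay, 2x, 4x, ...
                    time.sleep(base_delay * 2 ** (attempt - 1))
            except Exception as exc:
                # Non-transient failure: retrying will not help.
                error_queue.append({"record": record, "error": str(exc)})
                break
    return processed, error_queue


attempts = {}


def flaky_handler(record):
    """Example handler: 'slow' succeeds on its third try, 'bad' never does."""
    attempts[record] = attempts.get(record, 0) + 1
    if record == "bad":
        raise ValueError("malformed record")
    if record == "slow" and attempts[record] < 3:
        raise TransientError("timeout")
    return record.upper()


processed, errors = process_with_retry(["ok", "slow", "bad"], flaky_handler)
print(processed)  # ['OK', 'SLOW']
print(errors)     # [{'record': 'bad', 'error': 'malformed record'}]
```

Separating transient from permanent failures matters: retrying a malformed record only wastes time, while giving up on a timeout too early loses data that would have gone through.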

Securing Your AWS Data Pipeline: Best Practices for Data Protection

Protecting data within AWS data pipelines is paramount, and security measures must safeguard sensitive information throughout its lifecycle. Granular access control through Identity and Access Management (IAM) roles is the foundation. Each role defines a set of permissions, so every user and service can be granted only the access it needs. Following this principle of least privilege limits the impact of a potential breach. Because pipeline services integrate with the wider AWS security infrastructure, the same controls help protect data from unauthorized access, modification, or deletion.
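As a minimal sketch of least privilege, the function below generates an IAM policy document that allows a pipeline role to list and read objects under a single S3 prefix and nothing else. The bucket name and prefix are placeholders, and `read_only_s3_policy` is a hypothetical helper; a real job would usually also need write access to its output location.

```python
import json


def read_only_s3_policy(bucket, prefix):
    """Build an IAM policy allowing read-only access to one S3 prefix."""
    prefix = prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListInputPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Restrict listing to keys under the input prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                "Sid": "ReadInputObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


policy = read_only_s3_policy("example-bucket", "raw/sales/")
print(json.dumps(policy, indent=2))
```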

Data encryption, both at rest and in transit, is essential for securing AWS data pipelines. Services like Amazon S3 offer server-side encryption to protect data stored in buckets. For data in transit, use HTTPS (TLS) to encrypt communication between pipeline components, and use Virtual Private Clouds (VPCs) to isolate that traffic on a private network. Regular security assessments and penetration testing identify vulnerabilities so that risks can be mitigated proactively. Depending on the nature of the data being processed, compliance with regulations and standards such as HIPAA or PCI DSS may also be required, and these dictate specific security measures that must be implemented.
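Both kinds of encryption can be enforced through configuration. The sketch below shows two pieces: a bucket policy that denies any request not made over TLS, and the arguments for an S3 `put_object` call that requests server-side encryption with a KMS key. The bucket name, object key, and KMS key ARN are placeholders, and both helper functions are hypothetical names.

```python
def deny_insecure_transport_policy(bucket):
    """Bucket policy that rejects requests not sent over HTTPS (TLS)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


def encrypted_put_args(bucket, key, body, kms_key_id):
    """Arguments for S3 put_object that request SSE-KMS encryption at rest."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "aws:kms",
        "SSEKMSKeyId": kms_key_id,
    }


bucket_policy = deny_insecure_transport_policy("example-bucket")
put_args = encrypted_put_args(
    "example-bucket",
    "raw/sales/2024-03-07.csv",
    b"order_id,amount\n1001,19.99\n",
    "arn:aws:kms:us-east-1:123456789012:key/EXAMPLE-KEY-ID",
)
print(bucket_policy)
print({name: value for name, value in put_args.items() if name != "Body"})
```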

Regular monitoring and logging are essential aspects of pipeline security. AWS CloudTrail records API calls made in your account, providing an audit trail of who did what and when. CloudWatch monitors the pipeline's health and performance and alerts administrators to potential issues. Together, these tools support proactive security management and rapid response to incidents. By integrating security best practices at every stage of design and implementation, organizations protect data confidentiality, integrity, and availability, which is critical for maintaining trust and compliance.

Scaling Your AWS Data Pipeline to Handle Growing Data Volumes

As data volumes increase, AWS data pipelines must adapt to maintain performance and efficiency. Several techniques help manage this growth. Auto scaling adjusts resources automatically based on demand, which prevents bottlenecks and keeps throughput consistent without manual intervention.

Parallel processing divides tasks across multiple computing units, which significantly reduces processing time for large datasets. AWS services support parallel processing, so distributing the workload keeps the pipeline responsive and reduces its dependence on any single machine. Consider sharding as well: a technique that divides data into smaller, manageable chunks, each processed independently. This spreads the load across multiple systems and further improves scalability and resilience.
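A minimal sketch of sharding plus parallel processing follows. Records are assigned to shards by a stable hash of a key, and each shard is then processed independently. The `device_id` field and the four-shard count are assumptions for the example, and the thread pool only illustrates the structure; in a real pipeline the parallelism would come from Kinesis shards, Glue workers, or EMR nodes.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def shard_for(key, shard_count):
    """Assign a key to a shard with a stable hash (crc32, not Python's hash())."""
    return zlib.crc32(key.encode("utf-8")) % shard_count


def split_into_shards(records, shard_count):
    """Group records so that each device always lands in the same shard."""
    shards = [[] for _ in range(shard_count)]
    for record in records:
        shards[shard_for(record["device_id"], shard_count)].append(record)
    return shards


def process_shard(shard):
    """Stand-in for real work: sum the readings in one shard."""
    return sum(record["reading"] for record in shard)


# Sample data: 1000 readings from 10 hypothetical devices.
records = [{"device_id": f"device-{i % 10}", "reading": i} for i in range(1000)]
shards = split_into_shards(records, shard_count=4)

with ThreadPoolExecutor(max_workers=4) as pool:
    partial_sums = list(pool.map(process_shard, shards))

total = sum(partial_sums)
print(total)  # 499500, the same as summing all readings in one pass
```

Hashing on a key rather than splitting round-robin keeps all records for one device together, which matters when processing depends on per-key ordering or state.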

Choosing the right architecture is crucial for scalability. Consider a serverless approach for flexibility and cost-effectiveness: serverless architectures scale automatically with demand and minimize infrastructure management. For more complex pipelines that require fine-grained control, a hybrid approach that combines serverless components with managed clusters such as Amazon EMR provides a robust and scalable solution. The correct scaling strategy depends on your specific pipeline and growth projections, so plan carefully and review your approach regularly as data volumes evolve.

Cost Optimization Strategies for AWS Data Pipelines

Optimizing the cost of AWS data pipelines is crucial for long-term success, and several strategies can significantly reduce expenses. Careful service selection plays a vital role, because each AWS service has its own pricing model. For instance, storing data in Amazon S3 is generally cheaper than using a managed database service unless you need database-specific features. Analyze resource utilization by monitoring compute instance usage, storage consumption, and data transfer costs; this reveals underutilized resources that can be downsized or removed. Right-sizing instances ensures that resources match actual needs, since over-provisioning leads to unnecessary costs. AWS cost management tools provide insights and recommendations for savings.
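A simple utilization review can be automated. The sketch below flags resources whose average CPU utilization falls below a threshold, which makes them candidates for right-sizing. The resource names, the sample percentages, and the 20% threshold are all made up for illustration; in practice the samples would come from CloudWatch metrics and the threshold from your own policy.

```python
from statistics import mean


def flag_underutilized(cpu_samples_by_resource, threshold_percent=20.0):
    """Return names of resources whose average CPU utilization is below the threshold."""
    return sorted(
        name
        for name, samples in cpu_samples_by_resource.items()
        if samples and mean(samples) < threshold_percent
    )


# Hypothetical utilization samples (percent CPU), not real measurements.
usage = {
    "etl-worker-1": [72.0, 65.5, 80.1],
    "etl-worker-2": [8.0, 12.5, 5.0],
    "reporting-db": [15.0, 30.0, 10.0],
}
print(flag_underutilized(usage))  # ['etl-worker-2', 'reporting-db']
```

Average CPU is a crude signal on its own; memory, I/O, and peak load should be checked before downsizing anything.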

AWS cost optimization features can reduce spending further. Spot Instances offer significant discounts on spare compute capacity, but AWS can reclaim them at short notice, so they are best suited to fault-tolerant batch processing or less critical tasks. Data compression reduces storage costs and speeds up data transfer, and deduplication cuts storage costs by eliminating redundant data. Careful schema design also affects cost: well-designed schemas minimize redundancy and unnecessary processing, which improves query performance and shortens processing time.

Regularly reviewing and refining the pipeline architecture keeps costs under control over time. Identify bottlenecks and inefficiencies, and explore alternative approaches to data processing and storage. Automating resource scaling based on demand avoids over-provisioning, so resources are used only when needed. Serverless options like AWS Lambda remove the need to manage servers, which simplifies operations and often lowers costs. AWS cost management tools such as AWS Cost Explorer and AWS Budgets provide detailed analysis, and reviewing their reports regularly keeps you informed of spending patterns and areas for improvement. By applying these strategies consistently, organizations can significantly reduce the operational costs of their AWS data pipelines while maintaining performance and reliability.