Understanding the Power of Real-time Data Processing

In today’s fast-paced digital world, businesses face the challenge of extracting actionable insights from massive data streams. Traditional batch processing, where data is collected and processed in large chunks at set intervals, is often too slow for applications that must act within seconds, and that delay can hurt decision-making and operational efficiency. Real-time data processing, on the other hand, analyzes data as it arrives, so systems can respond immediately. The benefits are concrete: faster fraud detection, proactive maintenance in IoT deployments, and immediate insights from log analysis. Amazon Kinesis provides managed services for ingesting and processing these high-velocity data streams, offering an alternative to batch pipelines when latency matters. Fraud detection, where immediate action is critical, benefits directly from the speed and scalability of an AWS Kinesis stream. For many businesses, real-time analytics is no longer a luxury but a competitive necessity: reacting to events as they happen lets teams address critical situations quickly, minimizing potential losses and improving performance.

Consider an e-commerce platform, where detecting fraudulent transactions instantly minimizes financial losses. A Kinesis stream can carry transaction data in real time to consumer applications that flag suspicious activity and trigger immediate actions. Similarly, in IoT, a Kinesis stream can ingest telemetry from connected devices for analysis, enabling predictive maintenance and preventing costly downtime. The sheer volume of data generated by connected devices calls for real-time processing, and the flexibility and scalability of Kinesis make it a strong fit for managing this ever-growing flow. The key advantage of a managed service like Kinesis is that it takes on much of the operational complexity of large-scale data ingestion, so organizations can focus on building applications that respond to events immediately.

This capability extends beyond e-commerce and IoT. Log aggregation, for instance, benefits greatly from real-time analysis: a Kinesis stream can ingest log data from many sources, giving immediate visibility into system health and performance. Teams can then identify and resolve issues quickly, before they grow into larger-scale problems, which reduces downtime and improves operational efficiency. Ultimately, the power of real-time data processing lies in turning raw data into actionable insights that support informed decisions, and choosing an appropriate technology, such as Amazon Kinesis, is crucial to harnessing that potential and staying competitive.

Diving Deep into Amazon Kinesis Streams: A Comprehensive Overview

Amazon Kinesis Data Streams (often called Kinesis Streams) is a fully managed AWS service for capturing, storing, and processing streaming data at massive scale. Records stay in the stream for a configurable retention period (24 hours by default), so several applications can read the same data independently. Understanding its core components is crucial for effective implementation. The key concept is the shard: a partition of the stream with its own fixed capacity, and adding shards enables parallel processing and scaling. Every record written to a shard receives a sequence number, assigned by Kinesis, that increases over time within that shard, which is how ordering within a shard is tracked. Delivery is at-least-once rather than exactly-once: producer retries and consumer restarts can produce duplicates, so consumers should be idempotent or deduplicate records.

Data enters the stream through producers, which can be applications, services, or devices that send data as records. Each record carries a partition key; Kinesis hashes the key with MD5 into a 128-bit integer and routes the record to the shard whose hash key range contains that value. As a result, records with the same partition key consistently land in the same shard (as long as the shard layout does not change), which is what makes per-key ordering possible. Consumers then read and process data from the shards, either by polling with the GetRecords API (directly or through a client library) or by receiving records pushed to them through enhanced fan-out. The service is designed for high availability and durability: data is synchronously replicated across three Availability Zones. Scaling depends on the capacity mode. In on-demand mode, Kinesis adjusts capacity automatically to match traffic; in provisioned mode, you choose the shard count and reshard when data volumes change.
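To make the partition-key routing concrete, here is a minimal sketch of the MD5-based mapping. The helper names (`hash_key_for`, `even_shard_ranges`, `shard_for`) and the `shard-0000` style IDs are hypothetical; the evenly split ranges are an illustrative assumption, and a real consumer of this logic should read each shard's `HashKeyRange` from the ListShards response instead.

```python
import hashlib
from typing import List, Tuple

# Kinesis hash keys span the unsigned 128-bit range [0, 2**128 - 1].
MAX_HASH_KEY = 2**128 - 1

ShardRange = Tuple[str, int, int]  # (shard_id, starting_hash_key, ending_hash_key)


def hash_key_for(partition_key: str) -> int:
    """Interpret the MD5 digest of the partition key as a 128-bit unsigned integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def even_shard_ranges(shard_count: int) -> List[ShardRange]:
    """Illustrative only: split the hash key space into equal contiguous ranges.

    Real boundaries come from ListShards (HashKeyRange); the IDs here are hypothetical.
    """
    size = (MAX_HASH_KEY + 1) // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * size
        # The last shard absorbs any remainder so the whole key space is covered.
        end = MAX_HASH_KEY if i == shard_count - 1 else start + size - 1
        ranges.append((f"shard-{i:04d}", start, end))
    return ranges


def shard_for(partition_key: str, ranges: List[ShardRange]) -> str:
    """Return the shard whose hash key range contains the key's hash."""
    key = hash_key_for(partition_key)
    for shard_id, start, end in ranges:
        if start <= key <= end:
            return shard_id
    raise ValueError("hash key is not covered by any shard range")
```

Calling `shard_for("customer-42", even_shard_ranges(4))` always returns the same shard for the same key, which is why a single very active key (a "hot" key) can saturate one shard while others sit idle.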

Kinesis Data Streams offers several features that extend these capabilities. Enhanced fan-out gives each registered consumer its own dedicated read throughput of 2 MB per second per shard, so adding consumers does not make them compete for a shared read limit. The service also publishes metrics for monitoring stream health and performance. Because each shard's records are ordered by sequence number, consumers read them in the order the shard accepted them; there is no ordering guarantee across shards, and a single PutRecords batch does not guarantee ordering among its records. This per-shard ordering is critical for applications that depend on timely and accurate processing. Using a stream effectively involves careful choices about shard count, consumer strategy, and error handling: the number of shards balances processing capacity against cost, while well-chosen consumer patterns and proper error handling protect data integrity and system stability. This flexibility makes Kinesis Data Streams a versatile option for many real-time processing needs.

Choosing the Right Amazon Kinesis Service

Amazon Kinesis offers several services for real-time data processing, each designed for specific use cases, and understanding their differences is crucial for selecting the right one. The core service, Kinesis Data Streams, excels at high-volume, low-latency streaming. It gives fine-grained control over data ingestion and consumption, making it ideal for applications that need precise management of data flow, and its flexibility supports custom processing for a wide range of real-time needs. However, managing shards, consumers, and checkpoints adds complexity that may not be worthwhile for simpler scenarios.

Kinesis Data Firehose (since renamed Amazon Data Firehose), in contrast, offers a simpler, serverless approach. It buffers incoming data and delivers it in near real time to destinations such as Amazon S3, Amazon Redshift, or Amazon OpenSearch Service, handling the delivery infrastructure automatically. While less flexible than Kinesis Data Streams, it is an efficient option for workloads that do not need fine-grained control over consumption. The ease of use and reduced operational overhead make it a cost-effective choice for straightforward data loading; collecting log data from many sources and storing it in S3, for example, is often a good fit for Firehose.

Kinesis Data Analytics (whose Apache Flink offering is now called Amazon Managed Service for Apache Flink) provides managed infrastructure for real-time stream processing applications written in SQL or Apache Flink. It is best suited to complex data transformations and real-time analytics, and it commonly reads from a Kinesis data stream as its source. It removes much of the underlying infrastructure management, but it adds another service and programming model to learn, configure, and operate on top of the stream itself. Ultimately, the choice depends on the balance between the level of control you need, the complexity you can take on, and cost, so weigh each application's requirements against the capabilities of each service before deciding.

Building a Robust AWS Kinesis Stream Data Ingestion Pipeline

Creating a reliable data ingestion pipeline for a Kinesis stream involves several key steps. Producers, the applications that send data, need a library to interact with the stream. The AWS SDKs provide convenient methods for this in many programming languages, handling request signing, serialization, and HTTP details so developers can focus on preparing and delivering data. Choose the SDK that matches your producer's language: Python developers use the boto3 library, for example, while Java developers use the AWS SDK for Java. For high-throughput Java producers, AWS also offers the Kinesis Producer Library (KPL), which adds batching and retries on top of the API.

Data reliability is paramount when working with a Kinesis stream. Network issues and other unforeseen events can cause writes to fail, so robust producers need strategies to avoid losing data: retries with backoff for failed sends, checking each response to confirm delivery, and batching for efficiency. Confirmation matters because a PutRecords call can partially fail: the response reports a FailedRecordCount and an error code for each rejected record (for example, when a shard's write limit is exceeded), and only those records need to be resent. Batching combines multiple records into a single request, which reduces per-request overhead and raises throughput, at the cost of a small delay while a batch fills. Producers should also log errors comprehensively to aid debugging and monitoring. Together, these practices keep ingestion into the stream efficient and reliable.
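Batching has to respect the PutRecords request limits: at most 500 records per request, each record up to 1 MiB (data plus partition key), and up to 5 MiB for the whole request including partition keys. The sketch below groups `(data, partition_key)` tuples into batches that stay within those limits; the function and type names are hypothetical, and the limits should be re-checked against the current Kinesis quotas page.

```python
from typing import Iterable, Iterator, List, Tuple

# PutRecords limits as documented by AWS (re-check the current quotas page).
MAX_RECORDS_PER_REQUEST = 500
MAX_RECORD_BYTES = 1024 * 1024        # per record: data + partition key
MAX_REQUEST_BYTES = 5 * 1024 * 1024   # whole request, including partition keys

Record = Tuple[bytes, str]  # (data, partition_key)


def record_size(record: Record) -> int:
    """Bytes a record counts against the limits: payload plus UTF-8 partition key."""
    data, partition_key = record
    return len(data) + len(partition_key.encode("utf-8"))


def batch_records(records: Iterable[Record]) -> Iterator[List[Record]]:
    """Yield lists of records that each fit in a single PutRecords request."""
    batch: List[Record] = []
    batch_bytes = 0
    for record in records:
        size = record_size(record)
        if size > MAX_RECORD_BYTES:
            raise ValueError(f"record of {size} bytes exceeds the 1 MiB limit")
        # Flush the current batch if adding this record would break either limit.
        if batch and (len(batch) == MAX_RECORDS_PER_REQUEST
                      or batch_bytes + size > MAX_REQUEST_BYTES):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(record)
        batch_bytes += size
    if batch:
        yield batch
```

Rejecting oversized records up front, rather than letting the API reject the whole request, keeps one bad record from blocking an entire batch.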

A simple Python producer built with boto3 creates a Kinesis client and sends records to a named stream, catching and handling errors raised along the way. Rather than embedding credentials in code, let boto3 resolve them from its default credential chain (environment variables, the shared credentials file, or an attached IAM role), and pass the stream name in as configuration. Such a basic producer is the foundation for more sophisticated ones that batch records for throughput and retry failed records for reliability. The AWS SDKs and the KPL offer further features for production-ready pipelines, such as built-in metrics and more advanced error handling. The right choices of libraries, error handling, and batching significantly affect the overall performance and reliability of your data streaming system.
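A minimal sketch of such a producer follows. It assumes a boto3 Kinesis client (or any object with the same `put_records` method) is passed in; `put_with_retries` and its parameters are hypothetical names. It resends only the records whose result entries carry an `ErrorCode`, with exponential backoff and jitter between attempts.

```python
import random
import time
from typing import Callable, List, Tuple

Record = Tuple[bytes, str]  # (data, partition_key)


def put_with_retries(client, stream_name: str, records: List[Record],
                     max_attempts: int = 5, base_delay: float = 0.1,
                     sleep: Callable[[float], None] = time.sleep) -> List[Record]:
    """Send one PutRecords batch, resending only the records that failed.

    Returns the records still failing after max_attempts (empty list on success).
    Request-level errors (e.g. a missing stream) propagate as exceptions.
    """
    pending = list(records)
    for attempt in range(max_attempts):
        response = client.put_records(
            StreamName=stream_name,
            Records=[{"Data": data, "PartitionKey": key} for data, key in pending],
        )
        if response.get("FailedRecordCount", 0) == 0:
            return []
        # Result entries come back in request order; failed ones carry an ErrorCode.
        pending = [rec for rec, result in zip(pending, response["Records"])
                   if "ErrorCode" in result]
        if attempt < max_attempts - 1:
            # Exponential backoff with full jitter before the next attempt.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    return pending
```

With boto3 you would create the client with `boto3.client("kinesis")` and call `put_with_retries(client, "your_stream_name", batch)`; whatever the function returns should be logged or parked somewhere durable rather than silently dropped. Request-level failures surface as `botocore.exceptions.ClientError`.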

Efficiently Consuming Data from Your AWS Kinesis Stream

Consuming data from a Kinesis stream involves consumer applications that read and process the stream’s data. Several consumer patterns exist, each with strengths and weaknesses. Simple polling is the most straightforward: the consumer obtains a shard iterator and repeatedly calls GetRecords to fetch new records. It is easy to implement, but all polling consumers of a shard share that shard's read limits (5 GetRecords calls and 2 MB per second), so it scales poorly as consumers are added. Long polling intervals increase latency, and if a consumer falls behind by more than the stream's retention period, records expire before they are read. The AWS SDKs provide the GetShardIterator and GetRecords calls this pattern needs.
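A minimal polling loop for a single shard might look like the sketch below. It assumes a boto3-style Kinesis client is passed in; `poll_shard`, `handle`, and `max_polls` are hypothetical names, and the loop is bounded by `max_polls` only so the sketch terminates (a real consumer would loop indefinitely and track progress per shard).

```python
import time
from typing import Callable


def poll_shard(client, stream_name: str, shard_id: str,
               handle: Callable[[dict], None], max_polls: int = 10,
               interval: float = 1.0,
               sleep: Callable[[float], None] = time.sleep) -> int:
    """Read one shard from its oldest retained record; return how many records were handled."""
    iterator = client.get_shard_iterator(
        StreamName=stream_name,
        ShardId=shard_id,
        ShardIteratorType="TRIM_HORIZON",  # start at the oldest record still retained
    )["ShardIterator"]
    handled = 0
    for _ in range(max_polls):
        response = client.get_records(ShardIterator=iterator, Limit=1000)
        for record in response["Records"]:
            handle(record)
            handled += 1
        iterator = response.get("NextShardIterator")
        if iterator is None:
            # No next iterator: the shard was closed (e.g. by resharding) and fully read.
            break
        # Pause between calls: each shard allows only 5 GetRecords calls per second,
        # shared across all polling consumers.
        sleep(interval)
    return handled
```

Using `LATEST` instead of `TRIM_HORIZON` would skip the backlog and read only new records; which one is right depends on whether the consumer must see historical data.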

For more demanding consumption, consider enhanced fan-out. Instead of polling, each registered consumer subscribes to a shard and receives records pushed to it over HTTP/2, with its own dedicated 2 MB per second per shard; this lowers latency and lets many applications read the same stream without competing for throughput. Libraries like the Kinesis Client Library (KCL) simplify consumption further by managing shard assignment across workers, checkpointing progress in a DynamoDB table, and recovering from failures; recent KCL versions use enhanced fan-out by default. After a failure, the KCL resumes from the last checkpoint, so some records may be processed again, which is why record processing should be idempotent. Implemented properly, efficient consumption keeps latency low and throughput high, maximizing the value of your real-time data.
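The checkpoint-and-resume behavior can be illustrated with a deliberately simplified sketch. This is not the KCL API: `CheckpointingProcessor` is a hypothetical class, and an in-memory dict stands in for the KCL's DynamoDB lease table. It relies on sequence numbers (digit strings) increasing within a shard to skip records that are redelivered after a restart.

```python
from typing import Callable, Dict, Iterable


class CheckpointingProcessor:
    """Hypothetical stand-in for per-shard checkpointing (not the KCL API)."""

    def __init__(self, handle: Callable[[dict], None]):
        self.handle = handle
        # shard_id -> last processed sequence number (the KCL keeps this in DynamoDB).
        self.checkpoints: Dict[str, int] = {}

    def process(self, shard_id: str, records: Iterable[dict]) -> int:
        """Handle new records and checkpoint once the batch is done; return the count handled."""
        last = self.checkpoints.get(shard_id, -1)
        processed = 0
        for record in records:
            seq = int(record["SequenceNumber"])  # digit string, increasing within a shard
            if seq <= last:
                continue  # already processed before a restart: skip the redelivered record
            self.handle(record)
            last = seq
            processed += 1
        # Checkpointing after the batch means a crash mid-batch replays those records,
        # so handle() itself should still be idempotent.
        self.checkpoints[shard_id] = last
        return processed
```

Checkpointing after every record would shrink the replay window but cost a write per record; checkpointing per batch, as here, is the usual compromise.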

Efficiently consuming data from your stream therefore requires careful consideration of several factors, starting with the consumer pattern. Simple polling works well for low-throughput applications with relaxed latency requirements and only a few consumers. For high-throughput, low-latency scenarios, or when several applications read the same stream, enhanced fan-out with the KCL is usually the better choice. Regardless of the pattern, robust error handling and efficient resource management are crucial for a reliable and scalable pipeline: implement retries for temporary failures such as throttling, and monitor resource usage to maintain performance. Leveraging the AWS SDKs and the KCL simplifies development and increases efficiency.

Scaling Your AWS Kinesis Stream for High Throughput

Efficiently scaling an AWS Kinesis stream is crucial for handling increasing data volumes. In provisioned mode, the core scaling mechanism is the number of shards. Each shard is a unit of capacity that supports writes of up to 1 MB or 1,000 records per second and reads of up to 2 MB per second, so increasing the shard count lets the stream ingest and serve more data concurrently. Careful planning is essential: too few shards cause throttling and data backlogs, while too many increase costs unnecessarily. The optimal number depends on your throughput requirements and on how evenly your partition keys spread data across shards. Begin by measuring your ingestion rate (in both bytes and records per second), your average record size, and the number of consumers reading the stream; Amazon CloudWatch metrics help here. Note that the Kinesis Client Library does not change the shard count; it distributes the existing shards among consumer workers.
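Those per-shard limits turn into a simple sizing estimate: take the largest of the write-bandwidth, write-record, and read-bandwidth requirements. The sketch below assumes all consumers use shared (polling) throughput rather than enhanced fan-out, treats 1 MB as 1,024 KB, and uses a hypothetical function name; it also assumes evenly distributed partition keys, so in practice you would add headroom on top.

```python
import math

# Per-shard limits in provisioned mode.
WRITE_MB_PER_SHARD = 1.0
WRITE_RECORDS_PER_SHARD = 1000
READ_MB_PER_SHARD = 2.0


def estimate_shards(avg_record_kb: float, records_per_sec: float,
                    shared_throughput_consumers: int = 1) -> int:
    """Smallest shard count that satisfies write and (shared) read limits."""
    incoming_mb = avg_record_kb * records_per_sec / 1024
    # Every polling consumer reads the full stream, so read demand scales with them.
    outgoing_mb = incoming_mb * shared_throughput_consumers
    return max(
        1,
        math.ceil(incoming_mb / WRITE_MB_PER_SHARD),
        math.ceil(records_per_sec / WRITE_RECORDS_PER_SHARD),
        math.ceil(outgoing_mb / READ_MB_PER_SHARD),
    )
```

For example, 3,000 records per second at 2 KB each is about 5.9 MB per second of writes, so `estimate_shards(2, 3000)` returns 6; the record-count limit alone would only require 3. With three polling consumers, read demand dominates and the estimate rises to 9.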

Monitoring your stream’s performance is vital for proactive scaling. Amazon CloudWatch provides metrics including incoming bytes and records, throttled requests (WriteProvisionedThroughputExceeded and ReadProvisionedThroughputExceeded), and consumer lag (GetRecords.IteratorAgeMilliseconds). Review these metrics regularly to identify bottlenecks. Frequent write throttling suggests the stream needs more shards or that a hot partition key is concentrating traffic on one shard; a steadily rising iterator age means consumers are falling behind, which can stem from slow consumer processing as well as from read limits. CloudWatch alarms on critical metrics enable timely intervention before performance degradation significantly affects your application. For automated scaling, an AWS Lambda function triggered by such alarms can call the UpdateShardCount API to adjust the shard count against predefined thresholds, so the stream adapts to fluctuating data volumes while balancing performance and cost.
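A Lambda-style scaling step could look like the sketch below, assuming a boto3-style Kinesis client is passed in and the desired shard count comes from your own thresholds (`rescale` and `clamp_target` are hypothetical names). A single UpdateShardCount call cannot more than double the open shard count or drop below half of it, so the target is clamped first; the API also limits how often a stream can be rescaled, so check the API reference before calling it on a schedule.

```python
import math


def clamp_target(current: int, desired: int) -> int:
    """Keep one UpdateShardCount step within [half, double] of the current count."""
    low = math.ceil(current / 2)
    high = current * 2
    return max(low, min(desired, high))


def rescale(client, stream_name: str, desired: int) -> int:
    """Move the stream toward `desired` shards; return the target actually requested."""
    summary = client.describe_stream_summary(StreamName=stream_name)["StreamDescriptionSummary"]
    current = summary["OpenShardCount"]
    target = clamp_target(current, desired)
    if target != current:
        # UNIFORM_SCALING splits or merges shards to spread hash keys evenly.
        client.update_shard_count(StreamName=stream_name,
                                  TargetShardCount=target,
                                  ScalingType="UNIFORM_SCALING")
    return target
```

Reaching a target more than twice the current size therefore takes several calls, each made after the stream returns to the ACTIVE state.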

Cost optimization is a key consideration when scaling a Kinesis stream. More shards mean more throughput but also higher cost, so a balanced approach is crucial: regularly check that your shard count matches your actual throughput needs and avoid over-provisioning. On-demand capacity mode can reduce waste for unpredictable or spiky traffic because billing is based largely on the data written and read, while provisioned mode is often cheaper for steady, predictable workloads. Compressing records before sending them reduces the volume of data written and stored, which lowers both cost and throughput usage. By combining careful planning, proactive monitoring, and cost-conscious strategies, you can scale your stream to handle growing data volumes while keeping the trade-off between throughput and cost under control.
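As an example of the compression strategy, the sketch below serializes an event as compact JSON and gzips it before it is sent; consumers reverse the steps. The function names are hypothetical, and gzip is just one reasonable choice of codec.

```python
import gzip
import json


def encode_record(event: dict) -> bytes:
    """Serialize an event as compact JSON, then gzip it to shrink the payload."""
    raw = json.dumps(event, separators=(",", ":")).encode("utf-8")
    return gzip.compress(raw)


def decode_record(data: bytes) -> dict:
    """Consumer side: decompress and parse a record produced by encode_record."""
    return json.loads(gzip.decompress(data))
```

Compression pays off for larger or repetitive payloads such as logs; for very small records, gzip's fixed header can make the output larger than the input, so it is often better to compress an aggregate of several events into one record.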

Monitoring and Managing Your Amazon Kinesis Streams with Amazon CloudWatch

Effectively monitoring a Kinesis stream is crucial for maintaining its health and performance. Amazon CloudWatch publishes stream-level metrics in the AWS/Kinesis namespace, such as PutRecord.Latency and PutRecords.Latency, GetRecords.Latency, GetRecords.Records, IncomingBytes, and GetRecords.IteratorAgeMilliseconds; shard-level metrics are available when you enable enhanced monitoring, at additional cost. CloudWatch alarms on these metrics allow proactive identification of potential issues: for example, an alarm on high latency or a growing iterator age can alert you to problems requiring immediate attention. Regularly reviewing these metrics helps you understand the overall performance of your stream and identify areas for optimization.
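The sketch below shows one way to create such alarms, assuming a boto3 CloudWatch client and an existing SNS topic for notifications. The helper names, alarm naming scheme, and thresholds (one minute of consumer lag, any write throttling) are illustrative assumptions to tune for your workload.

```python
def kinesis_alarm(stream_name: str, metric_name: str, statistic: str,
                  threshold: float, sns_topic_arn: str,
                  period: int = 60, evaluation_periods: int = 5) -> dict:
    """Build keyword arguments for CloudWatch put_metric_alarm on a Kinesis stream metric."""
    return {
        "AlarmName": f"{stream_name}-{metric_name}-alarm",
        "Namespace": "AWS/Kinesis",
        "MetricName": metric_name,
        "Dimensions": [{"Name": "StreamName", "Value": stream_name}],
        "Statistic": statistic,
        "Period": period,                        # seconds per evaluation window
        "EvaluationPeriods": evaluation_periods,  # consecutive breaching windows before alarming
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",      # an idle stream with no data is not an alarm
        "AlarmActions": [sns_topic_arn],
    }


def create_default_alarms(cloudwatch, stream_name: str, sns_topic_arn: str) -> list:
    """Create a consumer-lag alarm and a write-throttling alarm; return their names."""
    alarms = [
        # Consumers more than one minute behind the tip of the stream.
        kinesis_alarm(stream_name, "GetRecords.IteratorAgeMilliseconds", "Maximum",
                      60_000, sns_topic_arn),
        # Any write throttling at all within a window.
        kinesis_alarm(stream_name, "WriteProvisionedThroughputExceeded", "Sum",
                      0, sns_topic_arn),
    ]
    for params in alarms:
        cloudwatch.put_metric_alarm(**params)
    return [a["AlarmName"] for a in alarms]
```

In practice `cloudwatch` would be `boto3.client("cloudwatch")`; keeping the parameter building separate from the API call makes the alarm definitions easy to review and test.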

Troubleshooting a Kinesis stream usually starts with analyzing CloudWatch metrics to pinpoint the root cause of performance degradation. High PutRecord latency points to the producer side, such as network congestion or throttling, while high GetRecords latency or a growing iterator age points to the consumer side, such as slow processing or insufficient consumer capacity. Kinesis itself does not log individual records to CloudWatch Logs, but your producer and consumer applications (for example, Lambda functions or KCL workers) can write logs there, and AWS CloudTrail can record Kinesis API calls. Correlating those logs with the stream metrics lets you diagnose and resolve problems quickly, minimizing disruptions and protecting data integrity.
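To compare producer-side and consumer-side latency over the same window, you can pull both metrics with `get_metric_statistics`. The sketch assumes a boto3 CloudWatch client; `recent_averages` and its defaults (the last hour, in 5-minute buckets) are hypothetical choices.

```python
from datetime import datetime, timedelta, timezone


def recent_averages(cloudwatch, stream_name: str, metric_name: str,
                    minutes: int = 60, period: int = 300) -> list:
    """Return (timestamp, average) pairs for a stream metric, oldest first."""
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/Kinesis",
        MetricName=metric_name,
        Dimensions=[{"Name": "StreamName", "Value": stream_name}],
        StartTime=end - timedelta(minutes=minutes),
        EndTime=end,
        Period=period,             # seconds per datapoint
        Statistics=["Average"],
    )
    # CloudWatch does not guarantee datapoint order, so sort by timestamp.
    points = sorted(response["Datapoints"], key=lambda p: p["Timestamp"])
    return [(p["Timestamp"], p["Average"]) for p in points]
```

Calling it once with `"PutRecords.Latency"` and once with `"GetRecords.Latency"` shows at a glance which side of the stream degraded first.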

Cost management is also part of operating a Kinesis stream. Kinesis spending appears in AWS billing tools such as AWS Cost Explorer, and cost allocation tags on your streams let you break that spending down by team or application. Comparing costs with CloudWatch utilization metrics shows where they can be reduced; for example, if incoming traffic stays well below provisioned capacity, reducing the number of shards can significantly lower operational expenses. Understanding how shard usage correlates with cost, alongside data volume and latency, supports informed decisions about scaling and resource allocation. Efficient management balances optimal performance against cost-effectiveness, and reviewing these metrics regularly enables data-driven decisions that keep expenses down.

Securing Your Kinesis Data Streams: Best Practices and Security Considerations

Protecting your AWS Kinesis stream data is paramount, so implement robust security measures from the outset. Use AWS Identity and Access Management (IAM) roles and policies to control access to your streams, following the principle of least privilege: grant each user or service only the actions it needs, on only the streams it uses. A producer, for instance, typically needs to write records but not read them. This granular control minimizes the risk of unauthorized access and data breaches. Regularly review and update your IAM policies so they keep pace with your evolving security needs.
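The sketch below builds separate least-privilege policy documents for a producer and a simple polling consumer of one stream. The role split and helper names are illustrative assumptions: KCL or enhanced fan-out consumers need additional permissions (for example for DynamoDB, CloudWatch, and SubscribeToShard), and a stream encrypted with a customer managed KMS key also requires `kms:GenerateDataKey` for producers and `kms:Decrypt` for consumers.

```python
import json

PRODUCER_ACTIONS = ["kinesis:PutRecord", "kinesis:PutRecords"]
# Enough for a simple GetRecords-based consumer (not the KCL or enhanced fan-out).
CONSUMER_ACTIONS = [
    "kinesis:DescribeStreamSummary",
    "kinesis:ListShards",
    "kinesis:GetShardIterator",
    "kinesis:GetRecords",
]


def stream_arn(region: str, account_id: str, stream_name: str) -> str:
    """ARN of a Kinesis data stream."""
    return f"arn:aws:kinesis:{region}:{account_id}:stream/{stream_name}"


def least_privilege_policy(role: str, arn: str) -> dict:
    """IAM policy document allowing only the actions a producer or consumer needs on one stream."""
    actions = {"producer": PRODUCER_ACTIONS, "consumer": CONSUMER_ACTIONS}[role]
    return {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": list(actions), "Resource": arn}],
    }
```

`json.dumps(least_privilege_policy("producer", arn), indent=2)` yields a document you can attach to the producer's IAM role; scoping `Resource` to a single stream ARN, rather than `*`, is what keeps the grant narrow.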

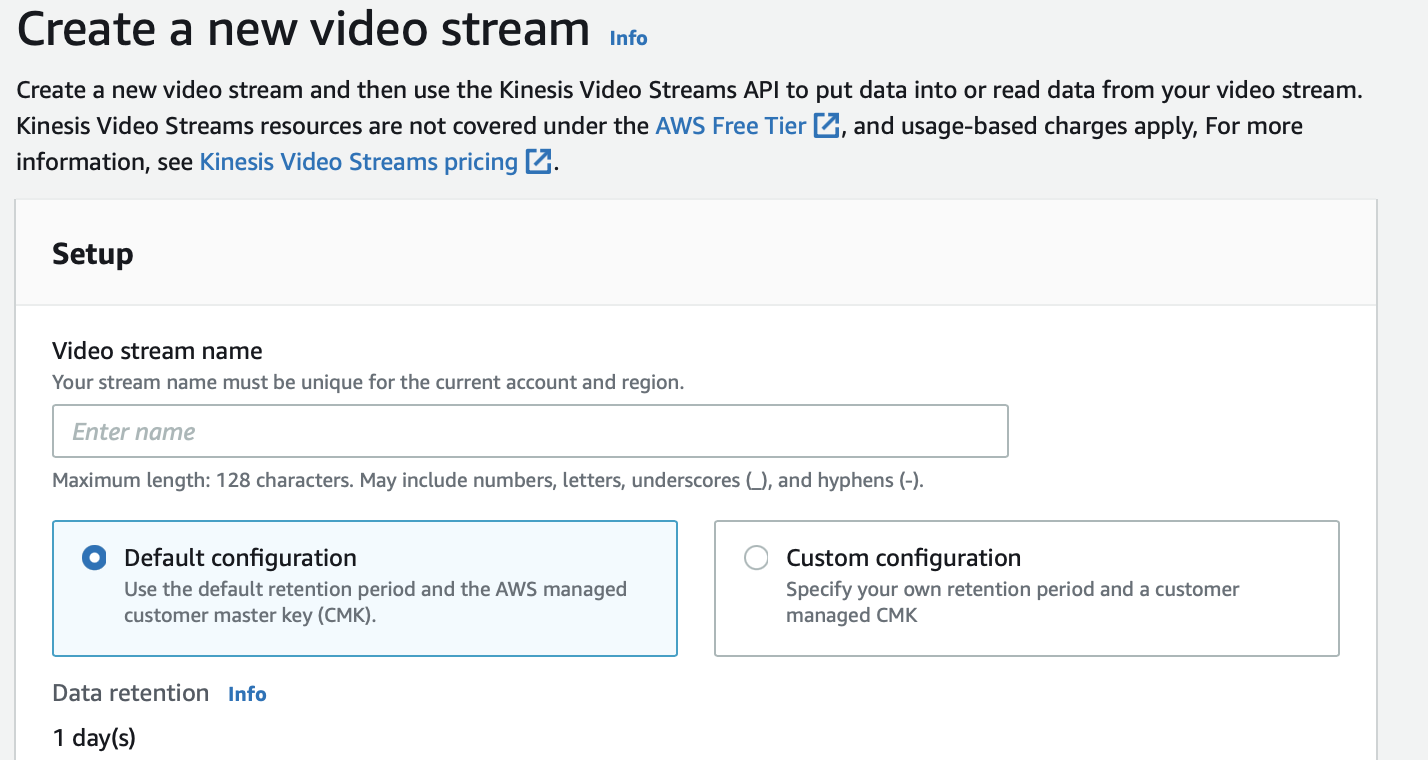

Data encryption is another crucial aspect of securing a Kinesis stream, both in transit and at rest. In transit, use the service's HTTPS (TLS) endpoints to secure communication between your applications and Kinesis. At rest, enable server-side encryption (SSE), which uses AWS Key Management Service (KMS) keys. You can use the AWS managed key for Kinesis (alias/aws/kinesis) or a customer managed key, which gives you control over the key policy and rotation; choose the option that best meets your security requirements and compliance needs. With a customer managed key, enable automatic key rotation in KMS and monitor key usage to strengthen the overall security posture of your stream.
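Server-side encryption can be turned on for an existing stream with the StartStreamEncryption API. The sketch assumes a boto3 Kinesis client is passed in and defaults to the AWS managed key alias; `enable_sse` is a hypothetical helper, and you would pass your own key ARN or alias to use a customer managed key.

```python
AWS_MANAGED_KINESIS_KEY = "alias/aws/kinesis"


def enable_sse(client, stream_name: str, key_id: str = AWS_MANAGED_KINESIS_KEY) -> bool:
    """Enable KMS server-side encryption; return False if the stream is already encrypted."""
    summary = client.describe_stream_summary(StreamName=stream_name)["StreamDescriptionSummary"]
    if summary.get("EncryptionType") == "KMS":
        return False  # already encrypted: nothing to do
    client.start_stream_encryption(
        StreamName=stream_name,
        EncryptionType="KMS",
        KeyId=key_id,  # key ARN, key ID, alias name, or alias ARN
    )
    return True
```

Checking the current encryption type first makes the helper safe to run repeatedly, for example from a deployment script. Encryption applies to records written after it takes effect, so enable it before sensitive data flows.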

Compliance with relevant security standards and regulations is vital. Depending on your industry and data sensitivity, you may need to adhere to standards such as HIPAA or PCI DSS. Understand the applicable regulations and ensure your Kinesis stream configuration complies, audit your security practices regularly, and implement the controls needed to maintain compliance. Security monitoring, such as AWS CloudTrail records of Kinesis API activity, helps detect suspicious behavior; investigate and address any alerts promptly to minimize the impact of potential breaches. By diligently implementing these practices, you can protect your stream data and maintain a secure data processing environment.