Understanding Recurrent Neural Networks (RNNs): A Deep Dive

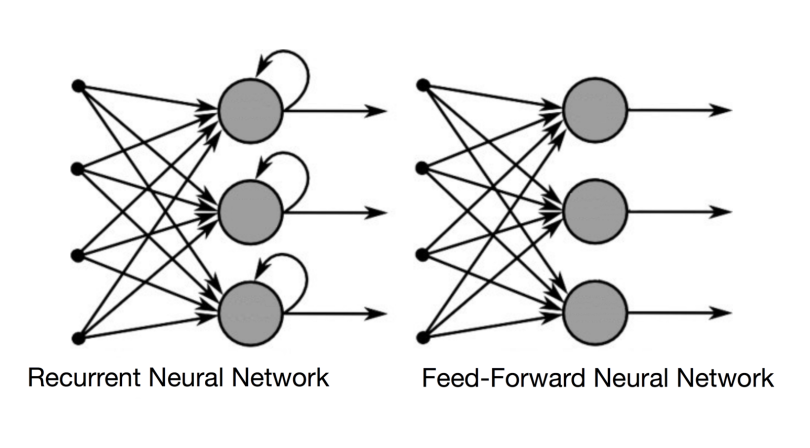

Recurrent neural networks (RNNs) are a class of neural networks designed to handle sequential data. Unlike feedforward neural networks, which treat each input independently of the ones that came before, RNNs possess an internal memory. This memory lets them retain information about past inputs, which then influences how the current input is processed. Imagine reading a sentence: you understand each word in the context of the preceding words. RNNs work similarly, using this “memory” to interpret sequences such as text, time series, or audio. This makes RNNs well suited to tasks with temporal dependencies, where the order of the data matters. Early RNNs were simple in structure but laid the groundwork for today’s more sophisticated models, with applications ranging from machine translation to speech recognition.

The core concept of a recurrent neural network lies in its recurrent connections, which allow information to persist across time steps. At each step, the network receives an input and updates its hidden state (its memory). The updated hidden state, which combines the current input with information from earlier steps, is then used to generate an output. The process repeats for every element of the sequence, with the same weights reused at each step. This feedback loop distinguishes RNNs from other neural network types and is what lets them learn temporal patterns, for example in natural language processing, where understanding a sentence requires remembering previous words. Learning long-term dependencies, however, presents significant challenges, and these challenges spurred the development of more advanced RNN architectures.
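The update loop described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the tanh activation, the weight names (`W_xh`, `W_hh`, `W_hy`), and the layer sizes are all assumptions chosen for the example.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, W_hy, b_h, b_y):
    """Run a simple recurrent layer over a sequence, one time step at a time."""
    h = np.zeros(W_hh.shape[0])          # initial hidden state (the "memory")
    hidden_states, outputs = [], []
    for x_t in inputs:                   # visit the time steps in order
        # The new hidden state mixes the current input with the previous state.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        y_t = W_hy @ h + b_y             # output is read from the updated state
        hidden_states.append(h)
        outputs.append(y_t)
    return np.stack(hidden_states), np.stack(outputs)

# Hypothetical sizes: 3 input features, 4 hidden units, 2 outputs, 5 time steps.
rng = np.random.default_rng(0)
n_in, n_hidden, n_out, n_steps = 3, 4, 2, 5
W_xh = rng.normal(scale=0.5, size=(n_hidden, n_in))
W_hh = rng.normal(scale=0.5, size=(n_hidden, n_hidden))
W_hy = rng.normal(scale=0.5, size=(n_out, n_hidden))
b_h, b_y = np.zeros(n_hidden), np.zeros(n_out)

sequence = rng.normal(size=(n_steps, n_in))
states, outputs = rnn_forward(sequence, W_xh, W_hh, W_hy, b_h, b_y)
print(states.shape, outputs.shape)       # (5, 4) (5, 2)
```

Note that the same three weight matrices are reused at every time step; only the hidden state changes as the sequence is consumed.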

The history of recurrent neural networks is intertwined with the broader development of artificial neural networks. Early work in the 1980s established the foundational concepts, but limited computational power and immature training algorithms hindered widespread adoption. Later advances in training techniques and hardware fueled a resurgence of recurrent neural networks and led to their prominence in deep learning applications. Understanding the different architectures, along with their strengths and limitations, is crucial for applying them effectively. The progression from simple RNNs to LSTMs and GRUs reflects a sustained effort to overcome the inherent difficulties of training recurrent models.

RNN Architectures: From Simple to Sophisticated

Recurrent neural networks (RNNs) encompass a variety of architectures, each handling sequential data in a slightly different way. Two fundamental architectures are Elman networks and Jordan networks. Elman networks use feedback connections from the hidden layer to itself. This self-connection allows the network to maintain an internal state that carries information from previous time steps, which is what lets it capture context and dependencies within a sequence. At each time step, the hidden state is updated from both the current input and the previous hidden state.

In contrast, Jordan networks feed the network’s previous output, rather than its hidden state, back into the hidden layer through a set of context units. Both architectures carry a form of memory, but a Jordan network remembers only what was exposed in its output, whereas an Elman network retains its full hidden representation. In a diagram, an Elman network shows a loop from the hidden layer back to itself, whereas a Jordan network shows a loop from the output layer back to the hidden layer. Each design has trade-offs: the Elman hidden state can hold information that never appears in the output, which tends to help when fine-grained temporal context matters, while Jordan-style output feedback is a natural fit when the next step depends mainly on what the network has just produced. Choosing between them depends on the application and the nature of the data. In both cases the information flow is cyclical: the stored state informs the processing of each new input, and that processing in turn updates the stored state.
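The difference between the two feedback paths is easiest to see side by side. The sketch below is a simplified illustration: biases and output activations are omitted, and the weight names (`W_x`, `W_h`, `W_c`, `W_y`) and sizes are assumptions made for the example.

```python
import numpy as np

def elman_step(x_t, h_prev, W_x, W_h, W_y):
    """Elman: the previous hidden state feeds back into the hidden layer."""
    h_t = np.tanh(W_x @ x_t + W_h @ h_prev)
    y_t = W_y @ h_t
    return h_t, y_t

def jordan_step(x_t, y_prev, W_x, W_c, W_y):
    """Jordan: the previous output feeds back into the hidden layer."""
    h_t = np.tanh(W_x @ x_t + W_c @ y_prev)
    y_t = W_y @ h_t
    return h_t, y_t

# Hypothetical sizes: 3 input features, 4 hidden units, 2 outputs.
rng = np.random.default_rng(0)
n_in, n_hidden, n_out = 3, 4, 2
W_x = rng.normal(size=(n_hidden, n_in))
W_h = rng.normal(size=(n_hidden, n_hidden))   # hidden-to-hidden feedback (Elman)
W_c = rng.normal(size=(n_hidden, n_out))      # output-to-hidden feedback (Jordan)
W_y = rng.normal(size=(n_out, n_hidden))

h_e, y_e = np.zeros(n_hidden), np.zeros(n_out)
h_j, y_j = np.zeros(n_hidden), np.zeros(n_out)
for x_t in rng.normal(size=(5, n_in)):
    h_e, y_e = elman_step(x_t, h_e, W_x, W_h, W_y)    # carries h forward
    h_j, y_j = jordan_step(x_t, y_j, W_x, W_c, W_y)   # carries y forward

print(W_h.shape, W_c.shape)   # (4, 4) (4, 2)
```

The only structural difference is which vector is carried to the next step, and therefore the shape of the feedback matrix: the Jordan variant has as much memory as it has outputs.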

Understanding these two designs is useful because they are the building blocks for more complex recurrent neural networks, and their principles carry over to advanced models such as LSTMs and GRUs, which follow the Elman pattern of feeding the hidden state back into the network. The core idea of maintaining an internal state remains the same across all of these architectures. This ability to remember and incorporate past information is what differentiates recurrent neural networks from standard feedforward networks and makes them suited to time-series and other sequential data. The choice of feedback path is therefore one of the first design decisions for a recurrent model, and it affects both what the model can remember and how many parameters it needs.

The Challenge of Vanishing and Exploding Gradients in Recurrent Neural Networks

Standard recurrent neural networks face a significant hurdle: the vanishing and exploding gradient problem. This issue severely limits their capacity to learn long-term dependencies within sequential data. During the backpropagation process, gradients—used to update network weights—can either shrink exponentially to near zero (vanishing gradients) or grow exponentially to extremely large values (exploding gradients). Vanishing gradients hinder the network’s ability to learn information from earlier time steps, effectively truncating the memory of the recurrent neural network. Conversely, exploding gradients lead to unstable training, resulting in numerical overflow and erratic weight updates.

The mathematical root of the problem is the repeated matrix multiplication in backpropagation through time (BPTT). When the network is unrolled over time, the gradient that reaches an earlier step is a product of one Jacobian per intervening step, and each Jacobian contains the recurrent weight matrix multiplied by the derivative of the activation function. If the spectral radius of the recurrent weight matrix (the largest absolute value among its eigenvalues) is below one, and the activation derivative is bounded by one as it is for tanh, these products tend to shrink exponentially with the number of time steps, which produces vanishing gradients. A spectral radius above one makes exploding gradients possible, although saturating activations can still damp them. The severity of the problem depends on the architecture, the activation functions used, and the length of the input sequence: the longer the sequence, the more factors in the product and the greater the risk. This limitation hurts standard recurrent neural networks most on tasks that require modeling long-range dependencies, such as machine translation or long-term forecasting.
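The exponential effect is easy to reproduce numerically. The sketch below makes a deliberately strong simplification: it ignores the activation derivative (a purely linear recurrence) and uses a scaled orthogonal matrix as the recurrent weight, so every eigenvalue has magnitude equal to `scale`. The function name `backprop_norms` and the sizes are assumptions made for this illustration.

```python
import numpy as np

def backprop_norms(scale, n_steps=50, size=8, seed=0):
    """Track the gradient norm as it is pushed back through n_steps time steps."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.normal(size=(size, size)))  # random orthogonal matrix
    W = scale * Q                            # spectral radius is exactly `scale`
    grad = np.ones(size) / np.sqrt(size)     # unit-norm gradient at the last step
    norms = []
    for _ in range(n_steps):
        grad = W.T @ grad                    # one step backwards in time
        norms.append(np.linalg.norm(grad))
    return norms

shrinking = backprop_norms(scale=0.9)
growing = backprop_norms(scale=1.1)
print(f"after 50 steps, scale 0.9: {shrinking[-1]:.4f}")   # roughly 0.005
print(f"after 50 steps, scale 1.1: {growing[-1]:.1f}")     # roughly 117
```

A change of only 0.1 on either side of one separates a gradient that has almost disappeared after 50 steps from one that has grown by two orders of magnitude.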

Understanding the vanishing and exploding gradient problem is crucial for appreciating the need for advanced recurrent neural network architectures such as LSTMs and GRUs. These architectures add gating mechanisms that control how information flows through the network, which primarily mitigates vanishing gradients and lets the models handle much longer dependencies. Exploding gradients are usually handled separately, most commonly by clipping the gradient norm during training. Together, these strategies have considerably improved the effectiveness of recurrent neural networks in practice.
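One widely used strategy for the exploding side of the problem is gradient clipping by global norm: if the combined norm of all gradients exceeds a threshold, every gradient is rescaled by the same factor. The sketch below is a minimal NumPy version; the function name `clip_by_global_norm` and the threshold value are assumptions for illustration, and deep learning frameworks ship their own equivalents.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their combined L2 norm is at most max_norm."""
    total_norm = float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))
    if total_norm <= max_norm:
        return [g.copy() for g in grads], total_norm   # already small enough
    scale = max_norm / total_norm
    # Scaling every array by the same factor preserves the gradient direction.
    return [g * scale for g in grads], total_norm

# Hypothetical gradients for two parameter tensors; combined norm is 13.
grads = [np.array([3.0, 4.0]), np.array([12.0])]
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
norm_after = float(np.sqrt(sum(np.sum(g ** 2) for g in clipped)))
print(norm_before, norm_after)
```

Clipping limits the size of an update but does nothing for gradients that have already vanished, which is why it is typically combined with a gated architecture rather than used instead of one.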

Long Short-Term Memory (LSTM) Networks: A Solution to Gradient Issues

Long Short-Term Memory (LSTM) networks represent a significant advancement in recurrent neural network architecture. They mitigate the vanishing gradient problem, a major limitation of standard RNNs, and so make it practical to learn long-term dependencies in sequential data. LSTMs achieve this through a cell structure with three gates: an input gate, a forget gate, and an output gate. These gates regulate the flow of information into, within, and out of the LSTM cell. The cell state acts as a kind of conveyor belt that carries information down the chain, with the gates controlling what is added to or removed from it. Because the cell state is updated mostly by addition rather than by repeated multiplication with a weight matrix, gradients can travel across many time steps without shrinking as quickly. This allows LSTMs to retain information over much longer sequences than standard RNNs, which makes them well suited to tasks with long-range temporal dependencies, such as machine translation or speech recognition.

Understanding the LSTM’s internal workings comes down to how these gates interact. The input gate decides how much of a newly computed candidate value is written to the cell state. The forget gate determines how much of the previous cell state is kept or discarded. The output gate selects which parts of the cell state are exposed as the hidden state and therefore influence the network’s output at each time step. In terms of the conveyor belt: the input gate adds new packages to the belt, the forget gate removes outdated or irrelevant packages, and the output gate chooses which packages are sent on to the next stage of processing. Each gate produces values between 0 and 1, so information is scaled rather than switched fully on or off. This control keeps important information available across the sequence and lets the LSTM capture long-term relationships that a standard RNN tends to lose.
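The gate interactions described above correspond to a handful of element-wise operations. The following NumPy sketch shows one time step of a standard LSTM cell. Stacking the four weight blocks into a single matrix `W`, the gate ordering inside it, and the sizes are implementation choices assumed for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step; W maps [h_prev, x_t] to four stacked pre-activations."""
    n = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    i = sigmoid(z[0 * n:1 * n])      # input gate: how much new content to write
    f = sigmoid(z[1 * n:2 * n])      # forget gate: how much old content to keep
    o = sigmoid(z[2 * n:3 * n])      # output gate: how much of the cell to expose
    g = np.tanh(z[3 * n:4 * n])      # candidate values to write
    c_t = f * c_prev + i * g         # additive cell-state update (the conveyor belt)
    h_t = o * np.tanh(c_t)           # hidden state passed on to the next step
    return h_t, c_t

# Hypothetical sizes: 3 input features, 4 hidden units, 6 time steps.
rng = np.random.default_rng(0)
n_in, n_hidden = 3, 4
W = rng.normal(scale=0.1, size=(4 * n_hidden, n_hidden + n_in))
b = np.zeros(4 * n_hidden)

h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for x_t in rng.normal(size=(6, n_in)):
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)              # (4,) (4,)
```

When the forget gate is close to 1 and the input gate close to 0, `c_t` is almost a copy of `c_prev`, which is the mechanism that lets information (and gradients) survive across many steps.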

LSTMs show their value on tasks where long-term dependencies are crucial. In natural language processing, for example, they perform well at machine translation, where the meaning of a word can depend on words many steps earlier in the sentence. A standard RNN would struggle to maintain this context, while an LSTM can preserve and use it thanks to its gates, which tends to produce more accurate and coherent translations. Similarly, in time-series analysis, LSTMs can base predictions on patterns observed over extended periods. Across applications that require memory of past events, LSTMs generally outperform standard recurrent neural networks.

Gated Recurrent Units (GRUs): A Simplified Alternative to LSTMs

Gated Recurrent Units (GRUs) offer a streamlined approach to handling long-term dependencies in sequential data and are a common alternative to Long Short-Term Memory (LSTM) networks. Unlike LSTMs, which employ three gates (input, forget, and output), GRUs use only two: a reset gate and an update gate. This simplification reduces the computational burden and the number of parameters, often leading to faster training with comparable performance. The reset gate controls how much of the previous hidden state is used when computing a new candidate state, which lets the GRU selectively ignore past information. The update gate then interpolates between the previous hidden state and that candidate, deciding how much of the old state to keep and how much new information to incorporate. As in the LSTM, this gated update helps relevant information from earlier time steps survive, which mitigates the vanishing gradient problem while using fewer components.

A key difference lies in how GRUs manage information flow. LSTMs maintain a separate cell state to carry information across time steps, while GRUs fold this role into their hidden state. The result is a smaller model that is often quicker to train and, because it has fewer parameters, can be less prone to overfitting when data is limited. The lower parameter count also makes GRUs attractive for applications with limited computational resources, including deployment on resource-constrained devices. Choosing between LSTMs and GRUs usually means weighing performance against computational efficiency; in many cases, GRUs are a robust and efficient choice.
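A single GRU step can be sketched in NumPy as follows. This is an illustrative version under stated assumptions: the weight names and sizes are invented for the example, and the update rule uses the convention in which the update gate `z` weights the new candidate (some references swap the roles of `z` and `1 - z`).

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x_t, h_prev, W_z, W_r, W_h, b_z, b_r, b_h):
    """One GRU time step; there is no separate cell state, only the hidden state."""
    hx = np.concatenate([h_prev, x_t])
    z = sigmoid(W_z @ hx + b_z)                  # update gate
    r = sigmoid(W_r @ hx + b_r)                  # reset gate
    # The reset gate scales the old state before it enters the candidate.
    h_cand = np.tanh(W_h @ np.concatenate([r * h_prev, x_t]) + b_h)
    # Interpolate between keeping the old state and adopting the candidate.
    return (1.0 - z) * h_prev + z * h_cand

# Hypothetical sizes: 3 input features, 4 hidden units, 6 time steps.
rng = np.random.default_rng(0)
n_in, n_hidden = 3, 4
shape = (n_hidden, n_hidden + n_in)
W_z, W_r, W_h = (rng.normal(scale=0.1, size=shape) for _ in range(3))
b_z, b_r, b_h = (np.zeros(n_hidden) for _ in range(3))

h = np.zeros(n_hidden)
for x_t in rng.normal(size=(6, n_in)):
    h = gru_step(x_t, h, W_z, W_r, W_h, b_z, b_r, b_h)
print(h.shape)                                   # (4,)
```

Compared with an LSTM step, there are three weight blocks instead of four and one state vector instead of two, which is where the parameter and memory savings come from.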

The following table summarizes the key differences between LSTMs and GRUs:

| Feature | LSTM | GRU |
|---|---|---|
| Gates | Input, Forget, Output | Reset, Update |
| State | Separate cell state and hidden state | Single hidden state |
| Complexity | More complex | Less complex |
| Computational Cost | Higher | Lower |
| Training Speed | Slower | Faster |
| Parameter Count | Higher | Lower |
| Performance | Often superior for very long sequences | Often comparable to LSTM, especially for shorter sequences |

While LSTMs might exhibit a slight performance advantage in scenarios requiring the processing of exceptionally long sequences, GRUs often provide a compelling alternative for many recurrent neural network tasks, striking a balance between accuracy and efficiency. The choice depends on the specific needs of the application and the characteristics of the dataset.
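The parameter-count row of the comparison can be made concrete with the textbook formulas: each gate or candidate block has one weight matrix over the concatenated hidden state and input, plus one bias vector. An LSTM has four such blocks, a GRU three, and a simple RNN one. The layer sizes below are hypothetical, and some library implementations add extra bias terms, so reported counts can differ slightly.

```python
def block_params(n_in, n_hidden):
    """Weights over [hidden, input] plus one bias vector for a single block."""
    return n_hidden * (n_hidden + n_in) + n_hidden

def simple_rnn_params(n_in, n_hidden):
    return 1 * block_params(n_in, n_hidden)

def gru_params(n_in, n_hidden):
    return 3 * block_params(n_in, n_hidden)    # update, reset, candidate

def lstm_params(n_in, n_hidden):
    return 4 * block_params(n_in, n_hidden)    # input, forget, output, candidate

# Hypothetical layer: 128-dimensional inputs, 256 hidden units.
n_in, n_hidden = 128, 256
print(simple_rnn_params(n_in, n_hidden))       # 98560
print(gru_params(n_in, n_hidden))              # 295680
print(lstm_params(n_in, n_hidden))             # 394240
```

For the same hidden size, a GRU layer therefore has about three quarters of the parameters of an LSTM layer under these formulas.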

Implementing a Recurrent Neural Network using TensorFlow/Keras

This section outlines how to implement a recurrent neural network (RNN), specifically a Long Short-Term Memory (LSTM) network, using TensorFlow/Keras. The chosen task is text generation, a classic application of RNNs. The process involves four stages: data preprocessing, model creation, training, and prediction. The outline is meant as a foundation for further experimentation rather than a tuned, production-ready pipeline.

First, the necessary libraries (TensorFlow and its Keras API) are imported and a sample text dataset is loaded. The text is then preprocessed into numerical sequences that the network can consume. A vocabulary is built that maps each unique word to a numerical index, and the text is cut into input sequences whose length determines how many words of context the model sees for each prediction. Sequences of unequal length are padded so that every input has the same length.

The LSTM network architecture is defined next. The model comprises an embedding layer, an LSTM layer, and a dense output layer. The embedding layer converts word indices to dense vectors. The LSTM layer processes the sequence and captures temporal dependencies. The dense layer, with a softmax activation, produces a probability for each word in the vocabulary. The model is compiled with an appropriate loss function (e.g., categorical cross-entropy, or its sparse variant when the targets are integer indices) and an optimizer (e.g., Adam).
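The preprocessing and model-definition steps above might look like the following sketch. Everything specific here is an assumption made for illustration: the toy corpus, the window length of 4, the layer sizes, and the use of the sparse variant of categorical cross-entropy (chosen because the targets are integer word indices). Because the sliding windows all have the same length, no padding is needed in this toy case.

```python
import numpy as np
import tensorflow as tf

# Toy stand-in corpus; a real run would load a much larger text.
text = "the cat sat on the mat and the dog sat on the rug"
words = text.split()

# Build the vocabulary; index 0 is reserved (conventionally used for padding).
vocab = sorted(set(words))
word_to_id = {w: i + 1 for i, w in enumerate(vocab)}
vocab_size = len(vocab) + 1

# Slide a fixed-length window over the text: seq_len words in, next word out.
seq_len = 4
ids = [word_to_id[w] for w in words]
X = np.array([ids[i:i + seq_len] for i in range(len(ids) - seq_len)])
y = np.array([ids[i + seq_len] for i in range(len(ids) - seq_len)])

model = tf.keras.Sequential([
    tf.keras.layers.Embedding(input_dim=vocab_size, output_dim=16),  # ids -> vectors
    tf.keras.layers.LSTM(32),                                        # sequence -> state
    tf.keras.layers.Dense(vocab_size, activation="softmax"),         # state -> word probs
])
model.compile(
    loss="sparse_categorical_crossentropy",   # targets are integer word indices
    optimizer="adam",
    metrics=["accuracy"],
)

probs = model.predict(X, verbose=0)           # untrained, so close to uniform
print(X.shape, y.shape, probs.shape)          # (9, 4) (9,) (9, 9)
```

From here, a call such as `model.fit(X, y, epochs=...)` would train the network; the number of epochs and batch size are hyperparameters to tune for the dataset at hand.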

The model is then trained on the prepared data. Training iteratively feeds the model input sequences together with the word that follows each one, and the model adjusts its weights to minimize the loss function, improving its ability to predict the next word. Metrics such as loss and accuracy are monitored during training to gauge the model’s performance.

After training, the model can generate new text. Generation starts from an initial seed sequence: the model predicts a distribution over the next word, a word is chosen from that distribution and appended, and the extended sequence is fed back in to predict the following word. The generated text reflects the patterns learned during training. Experimenting with different hyperparameters (sequence length, layer sizes, number of epochs) and datasets is the natural next step for improving results.
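The generation loop itself is independent of the model that supplies the probabilities. The sketch below implements the loop in NumPy and, so that it runs on its own, plugs in a smoothed bigram count table as a stand-in for a trained LSTM. The stand-in, the toy corpus, and the function names are all assumptions for illustration; with a Keras model, `predict_probs` would instead wrap a call to the model on the current window.

```python
import numpy as np

def generate(seed_ids, predict_probs, n_words, seq_len, rng):
    """Repeatedly sample the next word id and slide the context window forward."""
    generated = list(seed_ids)
    for _ in range(n_words):
        window = generated[-seq_len:]             # most recent context
        probs = predict_probs(window)             # distribution over the vocabulary
        next_id = int(rng.choice(len(probs), p=probs))
        generated.append(next_id)                 # feed the choice back in
    return generated

# Stand-in for a trained model (assumption): add-one smoothed bigram counts.
words = "the cat sat on the mat and the dog sat on the rug".split()
vocab = sorted(set(words))
word_to_id = {w: i for i, w in enumerate(vocab)}
ids = [word_to_id[w] for w in words]

counts = np.ones((len(vocab), len(vocab)))
for a, b in zip(ids, ids[1:]):
    counts[a, b] += 1

def bigram_probs(window):
    row = counts[window[-1]]                      # only looks at the last word
    return row / row.sum()

rng = np.random.default_rng(0)
out = generate([word_to_id["the"]], bigram_probs, n_words=8, seq_len=4, rng=rng)
print(" ".join(vocab[i] for i in out))
```

Sampling from the distribution, rather than always taking the most likely word, keeps the output from looping on the same phrase; greedy decoding would replace `rng.choice` with an `argmax`.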

Advanced RNN Applications and Future Trends

Recurrent neural networks (RNNs) have had a major impact on numerous fields. Their ability to process sequential data makes them a natural fit for natural language processing (NLP) tasks like machine translation and sentiment analysis. For example, RNN-based sequence-to-sequence models powered an earlier generation of neural translation engines. In speech recognition, RNNs are used to transcribe spoken words into text, drawing on context to resolve variations in pronunciation. They are also applied to time series analysis, for example forecasting stock prices or weather patterns from historical data, although forecast quality depends heavily on the data. In all of these areas, their effectiveness stems from the capacity to learn temporal dependencies.

Beyond these established applications, RNNs are used across various industries. In healthcare, they analyze sequential patient data, such as vital signs and records of successive visits, to support diagnosis and treatment planning. In finance, recurrent neural networks are used to model market trends and assess risk. In manufacturing, they help optimize production processes and detect equipment malfunctions from sensor readings. This versatility comes from the fact that many real-world problems involve sequential data, and researchers continue to explore new applications.

Current research continues to extend what sequence models can do. Attention mechanisms, for instance, allow a network to focus on specific parts of the input sequence, improving performance on long sequences. Transformers, a newer architecture that replaces recurrence with self-attention, have demonstrated impressive results in NLP tasks. They address some of the limitations of traditional recurrent neural networks, particularly regarding parallel processing and long-range dependencies. LSTM and GRU networks remain valuable tools, and ongoing research continues to refine recurrent architectures and expand their capabilities.
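The attention idea mentioned above can be sketched as a weighted average over the hidden states of a sequence, where the weights measure how well each state matches a query vector. The version below uses scaled dot-product scoring, which is one common variant and an assumption here; the function names and the toy vectors are also invented for illustration.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                     # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def attention(query, keys, values):
    """Weight each time step by how well its key matches the query."""
    scores = keys @ query / np.sqrt(query.shape[0])   # one score per time step
    weights = softmax(scores)                         # non-negative, sums to 1
    context = weights @ values                        # weighted average of values
    return context, weights

# Toy example: 4 time steps with 4-dimensional keys and 2-dimensional values.
keys = np.eye(4)
values = np.array([[1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [0.0, 0.0]])
query = 10.0 * keys[2]                  # a query that matches time step 2

context, weights = attention(query, keys, values)
print(weights.argmax())                 # 2
```

Because every time step is scored against the query directly, distant positions are reachable in a single operation instead of through a long chain of recurrent updates.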

Choosing the Right Recurrent Neural Network Architecture for Your Task

Selecting a suitable recurrent neural network (RNN) architecture (simple RNN, LSTM, or GRU) depends heavily on the task and on the characteristics of the dataset. Sequence length plays a crucial role. For short sequences with minimal long-term dependencies, a simple RNN might suffice. For longer sequences, where capturing relationships between distant data points is vital, LSTMs or GRUs are usually needed to mitigate the vanishing gradient problem.

The complexity of the dependencies within the sequence is another key factor. Simple RNNs struggle with intricate, long-range dependencies. LSTMs and GRUs, with their gating mechanisms, handle such complexity far better, which makes them suitable for applications like natural language processing, where the meaning of a word often depends on words many steps earlier in the sentence.

Finally, consider the computational resources available. Simple RNNs are the cheapest of the three. LSTMs are the most demanding because of their four weight blocks and separate cell state. GRUs sit in between, often achieving performance comparable to LSTMs with less computational overhead. The choice therefore involves a trade-off between performance and resource efficiency.

The nature of the data itself also influences the choice of RNN architecture. Time series data, for instance, often benefits from the ability of LSTMs and GRUs to learn long-term temporal dependencies. In contrast, for tasks involving shorter sequences with less complex relationships, a simple recurrent neural network might be adequate. Furthermore, the specific application also guides this decision. For tasks like sentiment analysis or part-of-speech tagging, where contextual information is essential, LSTMs or GRUs are typically preferred. In machine translation, where sentences can be quite long, LSTMs are often the more robust choice for effectively capturing the underlying relationships between words across long sequences. Therefore, a thorough understanding of the task at hand and the properties of the data is crucial for making an informed decision.

In summary, a simple RNN offers a good starting point for simpler tasks with short sequences. LSTMs often provide the best performance for complex tasks and long sequences, but at a higher computational cost. GRUs provide a middle ground, often offering performance comparable to LSTMs with less computational burden. The choice of architecture should reflect the balance between performance requirements, data characteristics, and available resources, and when resources allow, it is worth comparing candidates empirically on the task at hand.