Why a Sound Application Deployment Approach Matters in Kubernetes

A well-defined Kubernetes deployment strategy is crucial for managing modern applications because it directly affects reliability and user experience. A robust strategy minimizes downtime during updates, reduces the risk that a faulty release reaches users, and makes fast rollbacks possible when something fails. These benefits matter even more in today’s microservice architectures, where many independently released services must keep working together.

In Kubernetes, a solid deployment strategy ensures smooth transitions between application versions. Without one, releases become chaotic, which can lead to service disruptions and unhappy users. Users expect applications to be available at all times, so minimizing downtime is paramount. A carefully planned strategy meets this expectation by letting updates and upgrades roll out without interrupting service.

Reducing risk is another key advantage of a good deployment strategy. Every update carries risk: a new version might introduce bugs or compatibility issues. A well-designed strategy includes mechanisms for detecting and mitigating these problems, and techniques such as canary deployments and A/B testing help identify them early. Fast rollbacks are just as important. If a new version fails, the ability to revert quickly to the previous version limits the impact on users and prevents prolonged outages. Choosing the right strategy therefore improves overall application health, protects the user experience, and helps teams manage complex deployments effectively.

Rolling Updates: The Default Kubernetes Deployment Method Explained

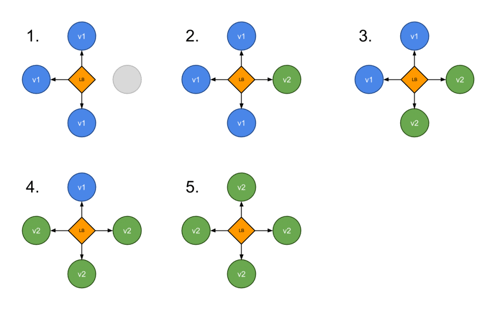

RollingUpdate is the default strategy for Kubernetes Deployments. It replaces old pods with new ones incrementally, so an application can be updated with zero downtime, provided readiness probes are configured and the cluster has room for the extra pods. Two settings govern the rollout. `maxSurge` specifies the maximum number of pods the Deployment can create above the desired replica count, and `maxUnavailable` defines the maximum number of pods that can be unavailable during the update. Both accept an absolute number or a percentage of the desired replicas, and both default to 25%. Tuning them lets you balance update speed against application availability. Rolling updates offer two main advantages. Service stays available throughout the update, and because the new version is introduced gradually, problems can be detected and addressed early in the rollout, which minimizes the impact on users.
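The following Python sketch makes the interplay of the two settings concrete. It resolves `maxSurge` and `maxUnavailable` for a given replica count (surge percentages round up and unavailability percentages round down, as in Kubernetes) and then steps through a simplified rollout. The function names are illustrative, and the simulation assumes, unlike the real Deployment controller, that each new pod is ready the moment it is created.

```python
from typing import List, Tuple, Union

IntOrPercent = Union[int, str]  # e.g. 1 or "25%", like the Deployment fields

def _resolve(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Convert an absolute count or a "NN%" string into a number of pods."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = int(value[:-1]) * replicas
        # Integer ceiling/floor division avoids floating-point rounding surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)

def resolve_fenceposts(max_surge: IntOrPercent, max_unavailable: IntOrPercent,
                       replicas: int) -> Tuple[int, int]:
    """Surge percentages round up; unavailable percentages round down."""
    surge = _resolve(max_surge, replicas, round_up=True)
    unavailable = _resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # If both resolve to zero the rollout could never progress,
        # so allow one unavailable pod.
        unavailable = 1
    return surge, unavailable

def simulate_rollout(replicas: int, max_surge: IntOrPercent = "25%",
                     max_unavailable: IntOrPercent = "25%") -> List[Tuple[int, int]]:
    """Return (old_pods, new_pods) after each step of a simplified rollout.
    Assumes new pods are ready immediately; the real controller waits."""
    surge, unavailable = resolve_fenceposts(max_surge, max_unavailable, replicas)
    old, new = replicas, 0
    steps = [(old, new)]
    while new < replicas or old > 0:
        # Scale up: total pods may not exceed replicas + surge.
        room = replicas + surge - (old + new)
        new += min(room, replicas - new)
        # Scale down: at least replicas - unavailable pods must stay running.
        removable = (old + new) - (replicas - unavailable)
        old -= min(max(removable, 0), old)
        steps.append((old, new))
    return steps

print(resolve_fenceposts("25%", "25%", 10))  # → (3, 2)
print(simulate_rollout(4))  # → [(4, 0), (2, 1), (0, 3), (0, 4)]
```

With four replicas and the 25% defaults, one extra pod and one unavailable pod are allowed at any time, so the sketch never runs more than five pods or fewer than three.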

However, rolling updates also have limitations. The rollout can affect performance, especially when resources are constrained, because the gradual replacement of pods may cause temporary resource contention. Monitor application performance during the rollout to catch these issues. Verifying that the new version is healthy before the update completes can also be difficult. Kubernetes health checks (liveness and readiness probes) help, but they may not catch every problem, such as a logic bug in an endpoint that still responds successfully. Thorough testing and monitoring remain essential to confirm that the new version behaves correctly in production. Despite these limitations, RollingUpdate is a widely used and effective strategy for many applications. By tuning `maxSurge` and `maxUnavailable` and closely monitoring application health, teams can use rolling updates to ship new versions with minimal disruption.

Choosing the right deployment strategy is crucial for application reliability. Zero downtime is a key advantage of rolling updates, but their potential performance impact and the need for comprehensive monitoring should be weighed carefully. Modern applications, often composed of many microservices, benefit significantly from automated and controlled rollouts. Rolling updates offer a balanced approach that allows iterative improvement with reduced risk. Understanding how they work and where they fall short enables informed decisions that match your application’s requirements and your team’s operational capabilities, ensuring a smooth and reliable update process.

Blue-Green Deployments: Implementing Zero-Downtime with Kubernetes

Blue-Green deployments are a robust strategy for achieving true zero-downtime updates in Kubernetes. The method runs two identical environments, conventionally named “Blue” and “Green.” At any given time only one environment handles live traffic; the other stays idle, ready to receive the next release. Because a fully running environment is always serving users, service remains available throughout the update.

The switchover is the core of this strategy. Once the “Green” environment has been updated with the new application version and thoroughly tested, traffic is redirected from “Blue” to “Green.” A common way to do this in Kubernetes is to update a Service object’s selector so that it matches the pods of the new Deployment. The change takes effect within moments, so users perceive no downtime, although long-lived connections may stay on the old pods until they close. A significant advantage is instant rollback: if issues arise in “Green” after the switchover, traffic can be routed straight back to “Blue,” which is still running the previous version. This safety net is not available in simpler deployment methods.
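The selector mechanism can be modeled in a few lines of Python. This is a minimal sketch, not the Kubernetes implementation: a Service routes to every pod whose labels contain all of its selector’s key/value pairs, so switching or rolling back is just a change to one label value. The `version` label key, the pod names, and the helper functions are hypothetical.

```python
import json
from typing import Dict, List

Labels = Dict[str, str]

def select_pods(selector: Labels, pods: Dict[str, Labels]) -> List[str]:
    """Return the pods a Service with this selector would route to:
    every pod whose labels include all of the selector's key/value pairs."""
    return sorted(
        name for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )

def selector_patch(color: str) -> str:
    """Patch body that changes only the (hypothetical) `version` selector key."""
    return json.dumps({"spec": {"selector": {"version": color}}})

# Hypothetical pods from the two Deployments, labeled by color.
pods = {
    "app-blue-7d9f": {"app": "myapp", "version": "blue"},
    "app-blue-x2lq": {"app": "myapp", "version": "blue"},
    "app-green-m8rw": {"app": "myapp", "version": "green"},
    "app-green-p4zt": {"app": "myapp", "version": "green"},
}

selector = {"app": "myapp", "version": "blue"}
print(select_pods(selector, pods))  # → ['app-blue-7d9f', 'app-blue-x2lq']

selector["version"] = "green"       # switchover
print(select_pods(selector, pods))  # → ['app-green-m8rw', 'app-green-p4zt']

selector["version"] = "blue"        # instant rollback: Blue pods never stopped
print(selector_patch("green"))      # → {"spec": {"selector": {"version": "green"}}}
```

In a real cluster, the printed body could be applied with `kubectl patch service <name> -p '…'`. Because the selector is a map, the default strategic merge patch updates the `version` key and leaves `app` untouched.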

However, Blue-Green deployments present challenges. Maintaining two full environments can double infrastructure costs, at least while both are running, and managing two identical environments increases operational overhead. Ensuring configuration parity between “Blue” and “Green” is crucial to prevent unexpected behavior after the switchover. Consider a Kubernetes setup with two Deployments, `app-blue` and `app-green`, each representing one environment. A Service, `app-service`, initially points to `app-blue`. To deploy a new version, you update `app-green`. After verifying `app-green`, you modify the `app-service` selector to point to `app-green`, which switches the traffic. The strategy is powerful, but it requires careful planning and execution.
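Configuration parity between `app-blue` and `app-green` can be checked mechanically. The Python sketch below diffs two Deployment manifests (trimmed to a few fields and written as dicts) and reports every difference except those expected between colors. The `version` label key, the set of expected differences, the image names, and the `deployment` helper are all assumptions for illustration.

```python
from typing import Any, Dict, List

def diff(a: Any, b: Any, path: str = "") -> List[str]:
    """List the paths at which two nested dict/list structures differ."""
    if isinstance(a, dict) and isinstance(b, dict):
        return [p for key in sorted(set(a) | set(b))
                for p in diff(a.get(key), b.get(key), f"{path}.{key}")]
    if isinstance(a, list) and isinstance(b, list) and len(a) == len(b):
        return [p for i, (x, y) in enumerate(zip(a, b))
                for p in diff(x, y, f"{path}[{i}]")]
    return [] if a == b else [path]

# Fields that are *supposed* to differ between the two colors (an assumption).
EXPECTED_DIFFERENCES = {
    ".metadata.name",
    ".spec.selector.matchLabels.version",
    ".spec.template.metadata.labels.version",
    ".spec.template.spec.containers[0].image",
}

def parity_problems(blue: Dict[str, Any], green: Dict[str, Any]) -> List[str]:
    """Differences that would make Green behave unlike Blue beyond the new image."""
    return [p for p in diff(blue, green) if p not in EXPECTED_DIFFERENCES]

def deployment(color: str, image: str, memory: str = "256Mi") -> Dict[str, Any]:
    """Hypothetical, trimmed Deployment manifest for one color."""
    labels = {"app": "myapp", "version": color}
    return {
        "metadata": {"name": f"app-{color}"},
        "spec": {
            "replicas": 3,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{
                    "name": "app",
                    "image": image,
                    "resources": {"limits": {"memory": memory}},
                }]},
            },
        },
    }

blue = deployment("blue", "registry.example.com/app:1.4.0")
green = deployment("green", "registry.example.com/app:1.5.0", memory="128Mi")
print(parity_problems(blue, green))
# → ['.spec.template.spec.containers[0].resources.limits.memory']
```

Running a check like this before changing the Service selector catches drift, such as a forgotten resource limit, while Blue is still serving traffic.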

Canary Releases: Testing New Features with a Subset of Users on K8s

Canary deployments offer a cautious approach to releasing new software versions in Kubernetes. They mitigate risk by exposing the updated application to a small segment of users before a full-scale rollout. The “canary,” the new version, receives a fraction of the total traffic, so it can be observed and analyzed under real-world conditions. This controlled exposure helps reveal issues or performance bottlenecks that did not show up in test environments. Meanwhile, the existing version continues to serve most users, keeping their experience stable while the canary is under evaluation.

Monitoring is crucial during a canary deployment. Key metrics to track include error rates, response times, resource utilization, and user behavior. Comparing these metrics between the canary and the existing version shows whether the new version is stable and performs well, and behavioral data such as conversion rates or feature usage indicates whether it improves the user experience. Based on the observed data, you then decide to proceed with a full rollout, roll back to the previous version if critical issues arise, or iterate on the canary release to address the problems found.
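The comparison step can be expressed as a small decision function. This Python sketch assumes you already collect request counts, error counts, and p95 latency for both versions over the same window; the thresholds (one percentage point of extra errors, 20% slower p95, at least 500 canary requests) are arbitrary placeholders, not recommendations. The “iterate” outcome is a human decision, so the sketch only returns `wait` when there is not yet enough data.

```python
from dataclasses import dataclass

@dataclass
class Metrics:
    """Aggregated metrics for one version over the same time window."""
    requests: int
    errors: int
    p95_latency_ms: float

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0

def canary_verdict(baseline: Metrics, canary: Metrics,
                   max_error_increase: float = 0.01,  # placeholder: +1 percentage point
                   max_latency_ratio: float = 1.2,    # placeholder: p95 up to 20% slower
                   min_requests: int = 500) -> str:   # placeholder: minimum sample size
    """Return "wait" (not enough data yet), "rollback", or "promote"."""
    if canary.requests < min_requests:
        return "wait"
    if canary.error_rate - baseline.error_rate > max_error_increase:
        return "rollback"
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        return "rollback"
    return "promote"

baseline = Metrics(requests=10_000, errors=50, p95_latency_ms=180.0)
print(canary_verdict(baseline, Metrics(1_000, 30, 190.0)))  # → rollback
print(canary_verdict(baseline, Metrics(1_000, 6, 200.0)))   # → promote
```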

Service meshes such as Istio significantly simplify the implementation and management of canary deployments in Kubernetes. They provide traffic management, observability, and security features that allow fine-grained control over routing and monitoring. In Istio, for example, weighted routes in a VirtualService direct a specific percentage of traffic to the canary, and that percentage can be raised gradually. Service meshes also provide detailed metrics and tracing, which make it easier to analyze the canary’s performance and user impact. By using a service mesh, organizations can streamline canary releases, reduce operational complexity, and improve the reliability of their application releases.
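As an illustration of weighted routing, the Python sketch below builds an Istio VirtualService as a plain dict that splits traffic between two subsets. It assumes the subsets `stable` and `canary` are defined in a matching DestinationRule (by pod labels) and that the host is called `my-app`; both names are hypothetical. The API version used is `networking.istio.io/v1beta1`.

```python
import json

def canary_virtual_service(host: str, canary_percent: int) -> dict:
    """Build a VirtualService sending canary_percent of HTTP traffic to the
    'canary' subset and the rest to 'stable'. Both subsets are assumed to be
    defined in a DestinationRule for the same host."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": f"{host}-canary"},
        "spec": {
            "hosts": [host],
            "http": [{
                "route": [
                    {"destination": {"host": host, "subset": "stable"},
                     "weight": 100 - canary_percent},
                    {"destination": {"host": host, "subset": "canary"},
                     "weight": canary_percent},
                ],
            }],
        },
    }

# kubectl accepts JSON manifests, e.g. by saving this output and running kubectl apply -f.
print(json.dumps(canary_virtual_service("my-app", 10), indent=2))
```

Raising exposure is then a matter of regenerating the resource with a larger `canary_percent` and applying it again.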

How to Implement a Phased Rollout Strategy in Kubernetes

A phased rollout provides a controlled way to release new application versions in Kubernetes. It mitigates risk by exposing the new version to progressively larger groups of users, which allows close monitoring and quick intervention if issues arise and keeps the impact on the overall user experience small. The implementation builds on core Kubernetes resources, including Deployments and Services, to orchestrate the rollout. Careful planning and execution are essential for success.

The first step is to create a Deployment running the new application version alongside the existing one. A Service then manages traffic routing: instead of sending all traffic to the new Deployment immediately, only a small share is diverted to it. With built-in Kubernetes resources alone, this share can only be approximated through replica counts, because a Service spreads traffic across all ready pods that match its selector, including pods from both Deployments if they share the selected labels. A service mesh such as Istio is more effective, allowing fine-grained routing based on weights or other criteria. During this initial phase, comprehensive monitoring is crucial. Watch error rates, response times, and resource utilization closely, and roll back immediately to the previous stable version if any anomaly appears. This keeps the application stable while the new version proves itself.
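Without a service mesh, the traffic share follows the pod ratio, which limits how finely you can split. This Python sketch assumes a fixed pod budget shared by a stable and a canary Deployment whose pods are all selected by one Service, and that the Service spreads traffic roughly evenly across ready pods; the function name is hypothetical.

```python
def replica_split(total_replicas: int, canary_percent: float) -> tuple:
    """Split a fixed pod budget between stable and canary Deployments whose pods
    are all selected by one Service. Returns (stable, canary, approx. canary %)."""
    if total_replicas < 2:
        raise ValueError("need at least two pods to split traffic")
    # At least one canary pod; round to the nearest whole pod.
    canary = min(max(1, round(total_replicas * canary_percent / 100)), total_replicas)
    stable = total_replicas - canary
    return stable, canary, 100 * canary / total_replicas

print(replica_split(10, 10))  # → (9, 1, 10.0)
print(replica_split(4, 10))   # → (3, 1, 25.0): four pods cannot express 10%
```

The second call shows the main drawback: with few replicas the smallest possible canary share is large, which is one reason weight-based routing is preferred for early phases.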

Once the initial phase proves successful, the percentage of traffic routed to the new version is increased incrementally, with the same vigilant monitoring at each stage. Kubernetes manifests define the desired state, and `kubectl` commands apply the updates. For instance, `kubectl set image deployment/my-app my-app=new-image:latest` updates the container image, and `kubectl scale deployment/my-app --replicas=X` adjusts the number of replicas. Integrating metrics into the rollout process is paramount: automated analysis of key performance indicators (KPIs) can pause the rollout or trigger an automatic rollback, making the process largely self-correcting. This iterative approach minimizes risk and ensures a smooth transition to the new application version.
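The incremental loop can be sketched in Python with the traffic change and the health check passed in as callbacks. Both callbacks are hypothetical: in practice `set_weight` might update mesh weights or replica counts, and `healthy` might query your metrics system after a soak period. The step percentages in the example are also just an assumption.

```python
from typing import Callable, List, Sequence

def phased_rollout(steps: Sequence[int],
                   set_weight: Callable[[int], None],
                   healthy: Callable[[int], bool]) -> List[str]:
    """Raise the new version's traffic share step by step. After each step,
    consult `healthy`; on failure, send all traffic back to the old version."""
    log = []
    for percent in steps:
        set_weight(percent)
        log.append(f"shifted {percent}%")
        if not healthy(percent):
            set_weight(0)  # automatic rollback
            log.append(f"rolled back at {percent}%")
            return log
    log.append("rollout complete")
    return log

# Demo with fakes: record every weight change and pretend KPIs degrade at 50%.
applied: List[int] = []
print(phased_rollout([5, 25, 50, 100], applied.append, lambda p: p < 50))
print(applied)  # → [5, 25, 50, 0]
```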

A/B Testing on Kubernetes: Optimizing User Experience Through Experimentation

A/B testing is a powerful deployment approach focused on optimizing user experience and product features through direct experimentation. It presents different versions of an application, or of specific features, to distinct groups of users and measures their responses to determine which version performs better. By analyzing user behavior across these variants, businesses gain insight into what resonates with their audience. This data-driven approach supports informed decisions about design, functionality, and user flow, ultimately improving conversion rates, engagement, and other vital business metrics.
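For the comparison to be meaningful, each user should see the same variant on every request, no matter which pod serves it. One common technique is deterministic hash-based bucketing, sketched below in Python. The function name, the experiment name, and the 50/50 default split are assumptions; in a cluster, the resulting variant might be set as a header or cookie that the router matches on.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, b_percent: int = 50) -> str:
    """Deterministically map a user to variant "A" or "B".
    Hashing (experiment, user) keeps a user's assignment stable across requests
    and pods, while different experiments get independent splits."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return "B" if bucket < b_percent else "A"

print(assign_variant("user-42", "checkout-flow"))
```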

Implementing A/B testing in a Kubernetes environment requires specific tools and techniques. Traffic splitting is crucial: users must be distributed between application versions in a controlled way. Plain Kubernetes Services can only approximate a split through pod counts, whereas Ingress controllers that support weighted or header-based routing, and service meshes like Istio, offer fine-grained traffic management. Robust data analysis tools are equally essential for capturing and interpreting user behavior. They track key performance indicators (KPIs) such as click-through rates, bounce rates, time on page, and conversion rates. Careful monitoring of these metrics makes it possible to compare the variants objectively and identify the winner. Choosing the right deployment strategy can accelerate this process.
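To judge whether a difference in conversion rates is more than noise, one common tool is the two-proportion z-test, sketched below in Python. It relies on a normal approximation that is reasonable only with fairly large samples, and it does not correct for checking results repeatedly while data is still arriving. The conversion counts in the example are made up.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare the conversion rates of variants A and B.
    Returns (z, two-sided p-value) using the pooled normal approximation."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no conversions (or all conversions): nothing to compare
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value

# Made-up example: 10.0% vs 13.0% conversion with 1,000 users per variant.
z, p = two_proportion_z_test(100, 1000, 130, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```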

Real-world examples illustrate the effectiveness of A/B testing. An e-commerce platform might test different checkout flows to find the one that minimizes cart abandonment. A media company could experiment with headline styles to optimize click-through rates on articles. A SaaS provider might test pricing models to find the best balance between revenue and user acquisition. The insights gained contribute directly to a better user experience and better business outcomes. Businesses that prioritize user-centric design and data-driven decision-making should therefore consider integrating A/B testing into their Kubernetes deployment strategy to achieve continuous optimization and a competitive edge.

Choosing the Right Kubernetes Application Rollout Approach: Key Considerations

Selecting the best Kubernetes deployment strategy requires careful consideration of several factors. Application architecture plays a pivotal role: monolithic applications might benefit from simpler strategies, while microservices often demand more sophisticated approaches. Risk tolerance is another crucial aspect. Can you accept some downtime, or is zero downtime a strict requirement? Your downtime requirements directly influence the choice between rolling updates, Blue-Green deployments, and other strategies.

Monitoring capabilities also significantly affect the choice. Effective monitoring allows quick detection and resolution of issues during a rollout; without it, advanced strategies like canary releases and A/B testing become considerably riskier. Here is a brief overview of the strategies. Rolling updates balance simplicity with minimal downtime and suit many applications. Blue-Green deployments offer true zero downtime and instant rollbacks but add complexity and cost. Canary releases test new versions with a subset of users first, which makes them well suited to risk mitigation. A/B testing helps optimize user experience through experimentation.
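The trade-offs above can be condensed into a rough decision helper. The Python sketch below is only a hypothetical encoding of this section’s guidance, with the inputs and their order of precedence chosen for illustration; real decisions usually weigh more factors than four booleans.

```python
def suggest_strategy(needs_instant_rollback: bool,
                     can_run_double_capacity: bool,
                     has_robust_monitoring: bool,
                     testing_user_experience: bool) -> str:
    """Rough, illustrative mapping from requirements to a starting strategy."""
    # Experiments only make sense when you can measure their effect.
    if testing_user_experience and has_robust_monitoring:
        return "A/B testing"
    # Instant rollback requires paying for a second full environment.
    if needs_instant_rollback and can_run_double_capacity:
        return "Blue-Green"
    # Exposing a subset of users is only safe with good monitoring.
    if has_robust_monitoring:
        return "Canary"
    # The default Deployment strategy is a sensible baseline.
    return "RollingUpdate"

print(suggest_strategy(True, True, False, False))  # → Blue-Green
```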

Security considerations apply to every deployment strategy. With rolling updates, ensure that new pods run with a proper security context. Blue-Green deployments require identical security policies in both environments. Canary deployments demand careful management of traffic routing and data isolation. A/B testing needs robust data privacy measures to protect user information. Evaluate the pros and cons of each strategy in light of your specific needs. For instance, a high-traffic e-commerce site might prioritize Blue-Green for its zero-downtime switchover, while a smaller internal tool could be well served by rolling updates. The right choice depends on a holistic view of your application, infrastructure, and business requirements, and on the trade-offs you accept between complexity, cost, and risk mitigation. Whatever you choose, effective monitoring and automated rollbacks are essential components of a successful deployment strategy.
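A pipeline can check the security context of new pods before a rolling update starts. The Python sketch below inspects a pod spec (as a dict) for a few common gaps using real `securityContext` fields: `runAsNonRoot`, `allowPrivilegeEscalation`, `privileged`, and `readOnlyRootFilesystem`. Which settings count as findings is an assumption; adjust the rules to your own policy.

```python
from typing import Any, Dict, List

def security_findings(pod_spec: Dict[str, Any]) -> List[str]:
    """Flag common securityContext gaps in a pod spec."""
    findings = []
    pod_ctx = pod_spec.get("securityContext", {})
    for container in pod_spec.get("containers", []):
        ctx = container.get("securityContext", {})
        name = container.get("name", "<unnamed>")
        # A container-level runAsNonRoot overrides the pod-level value.
        if not ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False)):
            findings.append(f"{name}: runAsNonRoot is not true")
        # Unset allowPrivilegeEscalation is treated as allowed.
        if ctx.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: allowPrivilegeEscalation is not false")
        if ctx.get("privileged", False):
            findings.append(f"{name}: runs privileged")
        if not ctx.get("readOnlyRootFilesystem", False):
            findings.append(f"{name}: root filesystem is writable")
    return findings

print(security_findings({"containers": [{"name": "app"}]}))
```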

Automating Kubernetes Deployments: CI/CD Pipelines and Best Practices

Automating deployments with CI/CD pipelines is crucial for efficient Kubernetes management. A robust pipeline speeds up deployments, reduces manual errors, and delivers applications consistently, which directly improves the effectiveness of whichever deployment strategy you choose. Integrating CI/CD streamlines updating and deploying applications on Kubernetes and makes the workflow smoother and more reliable.

Several CI/CD tools integrate well with Kubernetes and provide the infrastructure needed to automate your deployment strategy. Popular options include Jenkins, GitLab CI, CircleCI, and Argo CD. Together they cover automated builds, tests, and deployments, with Argo CD focusing on the deployment side by syncing cluster state from Git. Best practices for designing effective pipelines include keeping all application code and configuration under version control, running automated tests at several stages of the pipeline, and defining clear rollback procedures. Comprehensive monitoring and alerting should also be integrated to give immediate feedback on the health and performance of deployed applications, so teams can intervene quickly when a release misbehaves.
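A rollback step can be built into any of these pipelines with standard `kubectl` commands: `kubectl set image`, `kubectl rollout status` with `--timeout` (which exits non-zero if the rollout does not finish in time), and `kubectl rollout undo`. The Python sketch below wires them together; the deployment name, image, container name, and the helper functions are hypothetical, and the command runner is injectable so the logic can be exercised without a cluster.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]

def run_command(args: Sequence[str]) -> int:
    """Run a command and return its exit code."""
    return subprocess.run(list(args)).returncode

def deploy_with_rollback(deployment: str, image: str, container: str,
                         timeout: str = "120s", run: Runner = run_command) -> bool:
    """Update the image, wait for the rollout, and undo it if it fails."""
    target = f"deployment/{deployment}"
    if run(["kubectl", "set", "image", target, f"{container}={image}"]) != 0:
        return False
    if run(["kubectl", "rollout", "status", target, f"--timeout={timeout}"]) == 0:
        return True
    # Rollout did not become healthy in time: return to the previous revision.
    run(["kubectl", "rollout", "undo", target])
    return False

def dry_run(args: Sequence[str]) -> int:
    """Stand-in runner that only prints the commands it would execute."""
    print("would run:", " ".join(args))
    return 0

deploy_with_rollback("my-app", "registry.example.com/my-app:1.5.0", "my-app", run=dry_run)
```

A CI job (Jenkins, GitLab CI, or similar) could call such a step after its tests pass and fail the pipeline when it returns `False`.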

Infrastructure as Code (IaC) plays a vital role in automating Kubernetes deployments. Tools like Terraform and Ansible manage Kubernetes clusters and resources through code. This makes infrastructure provisioning consistent and repeatable and ensures that your deployment strategy runs in a standardized environment every time. Because the configuration lives in code, organizations can track changes, collaborate on infrastructure updates, and automate the creation and management of Kubernetes resources. The result is that applications are deployed consistently and reliably across all environments, maximizing efficiency and minimizing risk.
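The core IaC idea, rendering every environment from one versioned template so only explicit parameters differ, can be shown without Terraform or Ansible. The plain-Python sketch below is a stand-in for those tools, not how they work internally; the environment names (used as namespaces), replica counts, and image are hypothetical. Its JSON output could be saved to files under version control and applied with `kubectl apply -f`.

```python
import json
from typing import Any, Dict

def render_deployment(env: str, image: str, replicas: int) -> Dict[str, Any]:
    """Render a Deployment from a single template; only env, image, and
    replicas vary, so every environment shares the same structure."""
    labels = {"app": "my-app", "env": env}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "my-app", "namespace": env, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": "25%", "maxUnavailable": "25%"},
            },
            # apps/v1 requires the selector to match the pod template labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": "my-app", "image": image}]},
            },
        },
    }

ENVIRONMENTS = {"staging": 2, "production": 6}  # hypothetical replica counts
for env_name, count in ENVIRONMENTS.items():
    manifest = render_deployment(env_name, "registry.example.com/my-app:1.5.0", count)
    print(json.dumps(manifest))
```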