What is Google Cloud Observability? A Comprehensive Overview

Google Cloud Observability, previously known as Stackdriver, is a suite of tools that provides visibility into the health, performance, and availability of applications and infrastructure on Google Cloud Platform (GCP). It covers four key functions: monitoring, logging, tracing, and profiling. Together, these components help users identify issues proactively, troubleshoot problems efficiently, and optimize application performance, which improves reliability and user experience. Because the components are integrated, data from different sources can be correlated, providing insights that would be difficult to obtain from isolated systems.

Cloud Monitoring allows users to track metrics, define alerts, and visualize data through customizable dashboards. This component is vital for understanding resource utilization, application responsiveness, and overall system health. Cloud Logging aggregates logs from GCP services and applications, enabling users to search, filter, and analyze log data to identify errors, debug issues, and understand system behavior, which supports earlier detection and faster resolution. Cloud Trace helps developers understand the latency distribution of their applications by tracing requests as they propagate through different services. This makes it possible to identify performance bottlenecks and optimize critical code paths.

Cloud Profiler completes the Google Cloud Observability suite by providing insights into the resource consumption of application code. It identifies the functions that consume the most CPU time or memory, enabling developers to optimize code and reduce costs. By integrating these four components, Google Cloud Observability offers a comprehensive solution for managing and optimizing applications running on GCP. The combination of monitoring, logging, tracing, and profiling lets teams troubleshoot effectively, reduce downtime, and get more from their investment in Google Cloud Platform.

How to Set Up Google Cloud Observability: A Step-by-Step Guide

Setting up Google Cloud Observability, previously known as Stackdriver, within your Google Cloud Platform (GCP) project is a straightforward process. This guide provides a beginner-friendly approach to get you started with monitoring, logging, tracing, and profiling your applications. First, ensure you have a GCP project. If not, create one via the Google Cloud Console. Next, enable the necessary APIs. Navigate to the API Library in the Cloud Console and search for “Cloud Monitoring API,” “Cloud Logging API,” “Cloud Trace API,” and “Cloud Profiler API.” Enable each of these APIs for your project. These APIs are the foundation for Google Cloud Observability’s functionality.
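If you prefer the command line to the API Library, the same four APIs can be enabled with the gcloud CLI. The sketch below only assembles the command rather than running it. The function name and the project ID "my-project" are placeholders chosen for illustration, and it assumes the gcloud CLI is installed and authenticated wherever you eventually run the command.

```python
import shlex

# Service names for the four Observability APIs listed above.
OBSERVABILITY_SERVICES = [
    "monitoring.googleapis.com",
    "logging.googleapis.com",
    "cloudtrace.googleapis.com",
    "cloudprofiler.googleapis.com",
]


def build_enable_command(project_id: str) -> list[str]:
    """Return the argv for `gcloud services enable` on one project."""
    return [
        "gcloud", "services", "enable",
        *OBSERVABILITY_SERVICES,
        f"--project={project_id}",
    ]


# "my-project" is a placeholder, not a real project ID.
print(shlex.join(build_enable_command("my-project")))
```

Building the argument list separately makes it easy to review the command before handing it to a shell or to `subprocess.run`.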

The Ops Agent is the recommended way to collect logs and metrics from your Compute Engine instances. To install the Ops Agent, connect to your instance via SSH and follow the installation instructions provided in the Google Cloud documentation. The instructions typically involve running a script that configures the agent to send data to Cloud Monitoring and Cloud Logging. Once the agent is installed, configure it to collect the specific logs and metrics relevant to your applications. This may involve modifying the agent’s configuration file to specify which log files to monitor and which metrics to collect. A precise agent configuration is the basis for everything you later build on this data.
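As a rough illustration of the configuration step, the sketch below renders a small logging section for the Ops Agent configuration file (typically /etc/google-cloud-ops-agent/config.yaml on Linux). The receiver name "myapp" and the log path are hypothetical, and the helper function is not part of any Google tool. Check the rendered text against the current Ops Agent documentation before deploying it.

```python
def render_ops_agent_logging_config(receiver_id: str, include_paths: list[str]) -> str:
    """Render a minimal Ops Agent logging section as YAML text.

    One `files` receiver tails the given paths, and one pipeline
    forwards what it reads to Cloud Logging.
    """
    lines = [
        "logging:",
        "  receivers:",
        f"    {receiver_id}:",
        "      type: files",
        "      include_paths:",
    ]
    lines += [f"        - {path}" for path in include_paths]
    lines += [
        "  service:",
        "    pipelines:",
        f"      {receiver_id}_pipeline:",
        f"        receivers: [{receiver_id}]",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical application name and log location.
print(render_ops_agent_logging_config("myapp", ["/var/log/myapp/*.log"]))
```

After changing the file on an instance, the agent needs to be restarted for the new receivers to take effect.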

Finally, setting up basic dashboards and alerts allows you to visualize your data and receive notifications when issues arise. In the Cloud Monitoring section of the Cloud Console, create custom dashboards to display key performance indicators (KPIs) such as CPU utilization, memory consumption, and request latency. You can add charts and graphs to visualize these metrics over time. Furthermore, create alerting policies to trigger notifications when metrics exceed predefined thresholds. For example, you might create an alert that sends an email when CPU utilization exceeds 80%. Start with simple dashboards and alerts and gradually expand them as your monitoring needs evolve. This initial setup provides a solid foundation for proactive performance management and effective troubleshooting within your GCP environment.
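The 80% CPU example can also be expressed as a policy document instead of being clicked together in the console. The sketch below builds a dictionary shaped like a Cloud Monitoring alert policy. The display names, the five-minute duration, and the one-minute alignment period are illustrative choices, and notification channels are deliberately left out because their IDs are project-specific.

```python
import json


def cpu_alert_policy(threshold: float = 0.8, duration_seconds: int = 300) -> dict:
    """Build an alert-policy body for sustained high CPU on Compute Engine VMs.

    CPU utilization is reported as a fraction, so 0.8 means 80%.
    """
    return {
        "displayName": "High CPU utilization",
        "combiner": "OR",
        "conditions": [
            {
                "displayName": f"CPU utilization above {threshold:.0%}",
                "conditionThreshold": {
                    "filter": (
                        'resource.type = "gce_instance" AND '
                        'metric.type = "compute.googleapis.com/instance/cpu/utilization"'
                    ),
                    "comparison": "COMPARISON_GT",
                    "thresholdValue": threshold,
                    # The condition must hold this long before the alert fires.
                    "duration": f"{duration_seconds}s",
                    "aggregations": [
                        {"alignmentPeriod": "60s", "perSeriesAligner": "ALIGN_MEAN"}
                    ],
                },
            }
        ],
    }


print(json.dumps(cpu_alert_policy(), indent=2))
```

Requiring the threshold to hold for a few minutes avoids notifications for short, harmless spikes.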

Leveraging Cloud Monitoring for Proactive Performance Management

Cloud Monitoring, a core component of Google Cloud Observability (formerly known as Stackdriver), helps users manage application performance proactively. It supports custom metrics tailored to specific application needs, which capture performance indicators beyond the standard system metrics and allow focused monitoring of business-critical behavior. Alerting policies can then be configured with thresholds for these metrics, so that an alert is triggered when a metric breaches its threshold. This enables a swift response to potential issues before they escalate into major incidents. Cloud Monitoring also provides visualization through dashboards, where charts, graphs, and tables present application health and performance trends in an easily digestible format. This visual representation helps in identifying patterns, anomalies, and areas requiring attention.

To use Cloud Monitoring effectively, it’s crucial to understand how to create custom metrics. Custom metrics can be based on logs, events, or any other relevant data source within GCP. For example, a custom metric could track the number of errors logged by an application within a specific timeframe. Once created, these metrics can be incorporated into dashboards and alerting policies. Setting up an alerting policy involves defining the conditions that trigger an alert: the metric to monitor, the threshold to exceed, and the notification channels to use. Notification channels can include email, SMS, or integration with other incident management systems. Dashboards can be customized to display a variety of metrics and charts, providing a holistic view of performance and application behavior.
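To make the error-count example concrete, the sketch below computes locally what such a counter metric would report: the number of log entries at severity ERROR or above in each one-minute window. It does not call Cloud Monitoring. The entry layout (a dictionary with "timestamp" and "severity" keys) is an assumption made for illustration, while the severity names follow Cloud Logging’s severity levels.

```python
from collections import Counter
from datetime import datetime

# Cloud Logging severity levels, lowest to highest.
SEVERITY_ORDER = [
    "DEFAULT", "DEBUG", "INFO", "NOTICE", "WARNING",
    "ERROR", "CRITICAL", "ALERT", "EMERGENCY",
]


def errors_per_minute(entries: list[dict]) -> dict[str, int]:
    """Count entries at ERROR or above, bucketed by minute."""
    threshold = SEVERITY_ORDER.index("ERROR")
    counts: Counter = Counter()
    for entry in entries:
        if SEVERITY_ORDER.index(entry["severity"]) >= threshold:
            ts = datetime.fromisoformat(entry["timestamp"])
            counts[ts.strftime("%Y-%m-%d %H:%M")] += 1
    return dict(counts)


# Made-up sample entries.
sample = [
    {"timestamp": "2024-05-01T10:00:05+00:00", "severity": "ERROR"},
    {"timestamp": "2024-05-01T10:00:40+00:00", "severity": "CRITICAL"},
    {"timestamp": "2024-05-01T10:01:10+00:00", "severity": "INFO"},
    {"timestamp": "2024-05-01T10:02:00+00:00", "severity": "ERROR"},
]
print(errors_per_minute(sample))
```

An alerting policy on such a metric would then compare each window’s count against a threshold.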

Consider some common application performance indicators to monitor with Cloud Monitoring. CPU utilization is a critical metric to track, since high CPU utilization can indicate resource bottlenecks or inefficient code. Memory consumption is another vital indicator, because excessive memory usage can lead to performance degradation or application crashes. Response latency, the time it takes for an application to respond to a request, is a key performance indicator for user experience. Monitoring these metrics, alongside others like disk I/O and network traffic, provides a comprehensive understanding of application health. By actively monitoring these metrics and configuring appropriate alerts, users can identify and address performance issues before they affect users.

Exploring Cloud Logging for Effective Troubleshooting

Cloud Logging, a pivotal component of Google Cloud Observability (formerly known as Stackdriver), offers a robust solution for managing and analyzing log data within the Google Cloud Platform (GCP). It serves as a centralized repository for logs originating from various GCP services, applications, and even on-premises systems. Cloud Logging enables users to efficiently collect, store, search, and analyze logs, facilitating rapid issue identification and resolution. Its scalable architecture and powerful querying capabilities make it an indispensable tool for DevOps teams and system administrators.

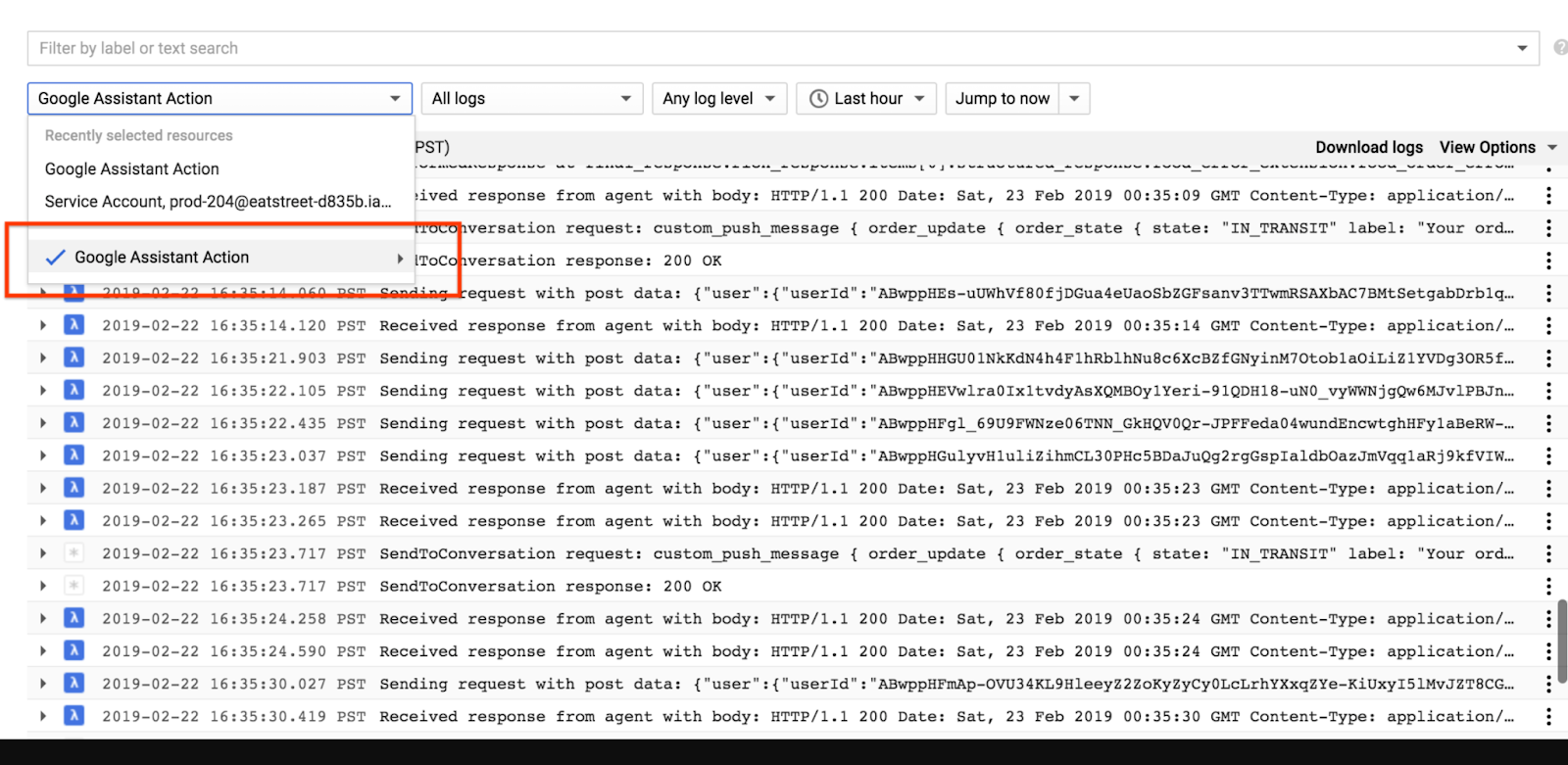

The process of using Cloud Logging begins with collecting logs from diverse sources. GCP services integrate with Cloud Logging automatically and send logs related to their operations. For applications running on Compute Engine, the Ops Agent can be configured to forward logs to Cloud Logging; it supports various log formats and allows for custom log collection rules. On Google Kubernetes Engine (GKE), container logs are collected by the cluster’s built-in logging integration. Once logs are ingested, they are stored securely and indexed for efficient searching and analysis. Cloud Logging’s query language lets users filter logs based on timestamps, severity levels, resource types, and custom metadata, so specific events or patterns can be targeted precisely within a large volume of log data.
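To give a feel for the query language, the sketch below assembles a filter string from the dimensions just mentioned: resource type, minimum severity, start time, and custom labels. The helper function and its parameter names are made up for illustration. The resulting string is the kind of expression you would paste into the Logs Explorer query box.

```python
from typing import Optional


def build_log_filter(
    resource_type: str,
    min_severity: str,
    start_time: str,
    labels: Optional[dict] = None,
) -> str:
    """Combine common restrictions into one Cloud Logging filter string."""
    clauses = [
        f'resource.type="{resource_type}"',
        f"severity>={min_severity}",
        f'timestamp>="{start_time}"',
    ]
    # Custom metadata attached to entries is matched through `labels.<key>`.
    for key, value in sorted((labels or {}).items()):
        clauses.append(f'labels.{key}="{value}"')
    return " AND ".join(clauses)


# The label "env" and its value are hypothetical.
print(build_log_filter("gce_instance", "ERROR", "2024-01-01T00:00:00Z", {"env": "prod"}))
```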

Effective troubleshooting with Cloud Logging hinges on the ability to quickly identify and diagnose issues. Features like log filtering and aggregation are invaluable in this regard. By filtering logs based on error codes or specific keywords, users can isolate relevant events from the noise. Aggregation groups logs by common attributes, such as request IDs or user IDs, providing a holistic view of related events. Furthermore, Cloud Logging integrates with the other Observability components, such as Cloud Monitoring and Cloud Trace. This integration enables users to correlate log data with performance metrics and request traces, offering a comprehensive understanding of application behavior. For example, a spike in latency observed in Cloud Monitoring can be investigated by examining Cloud Logging for related error messages or slow database queries. Used together, these features streamline troubleshooting, reduce mean time to resolution (MTTR), and improve application reliability.
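The aggregation idea can be sketched in a few lines once entries have been exported or fetched. The example below groups entries by a request ID and orders each group by time. The "request_id" and "message" keys are hypothetical field names, so substitute whatever correlation field your applications actually write.

```python
from collections import defaultdict


def group_by_request(entries: list[dict], key: str = "request_id") -> dict:
    """Group log entries by a shared attribute, oldest entry first."""
    groups = defaultdict(list)
    for entry in entries:
        groups[entry.get(key, "unknown")].append(entry)
    for related in groups.values():
        # ISO 8601 timestamps in one format and time zone sort correctly as text.
        related.sort(key=lambda e: e["timestamp"])
    return dict(groups)


# Made-up entries for two requests.
entries = [
    {"timestamp": "2024-05-01T10:00:02Z", "request_id": "r1", "message": "db timeout"},
    {"timestamp": "2024-05-01T10:00:01Z", "request_id": "r1", "message": "request received"},
    {"timestamp": "2024-05-01T10:00:03Z", "request_id": "r2", "message": "request received"},
]
for request_id, related in group_by_request(entries).items():
    print(request_id, [e["message"] for e in related])
```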

Using Cloud Trace for In-Depth Request Latency Analysis

Cloud Trace, a crucial component of Google Cloud Observability (formerly known as Stackdriver), offers insights into application performance by tracking individual requests as they traverse various services. This capability is especially beneficial in distributed systems and microservice architectures, where a single user request can trigger a cascade of interactions across multiple components. Cloud Trace allows developers and operations teams to pinpoint performance bottlenecks, understand dependencies, and optimize the overall efficiency of their applications. It lets users move beyond simple monitoring and examine how each request is actually handled.

To use Cloud Trace effectively, instrumentation is key. This involves adding code to applications to record timing information at various stages of request processing. Fortunately, Google Cloud provides libraries and integrations for popular programming languages and frameworks, which simplifies the instrumentation process. Once implemented, Cloud Trace captures detailed timing data for each request, creating a visual representation of the request’s journey through the system. This representation, known as a trace, highlights the time spent in each service or function, revealing potential areas of concern. For example, if a trace indicates that a significant portion of the request latency is attributed to a specific database query, developers can focus their optimization efforts on improving that query’s performance rather than guessing where the time goes.
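The idea behind instrumentation can be shown without any tracing library. The sketch below is a local stand-in, not the Cloud Trace client: a small recorder times named sections of a request and reports the slowest one. The class, the span names, and the `time.sleep` calls that simulate work are all invented for illustration.

```python
import time
from contextlib import contextmanager


class TraceRecorder:
    """Collect (name, duration_seconds) pairs for the stages of one request."""

    def __init__(self) -> None:
        self.spans: list[tuple[str, float]] = []

    @contextmanager
    def span(self, name: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the span even if the wrapped code raised.
            self.spans.append((name, time.perf_counter() - start))

    def slowest(self) -> tuple[str, float]:
        return max(self.spans, key=lambda s: s[1])


recorder = TraceRecorder()
with recorder.span("db_query"):
    time.sleep(0.03)   # stands in for a slow database call
with recorder.span("render"):
    time.sleep(0.001)  # stands in for cheap template rendering

name, seconds = recorder.slowest()
print(f"slowest span: {name} ({seconds * 1000:.1f} ms)")
```

A real tracing library does the same bookkeeping, and additionally attaches IDs to each span and exports the result so the trace can be viewed alongside other requests.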

Beyond identifying slow database queries, Cloud Trace can also uncover inefficient code sections, network latency issues, and other performance impediments. By analyzing trace data, teams can understand the factors contributing to application latency and take targeted action to improve performance. The integration with other Google Cloud Observability tools, like Cloud Monitoring and Cloud Logging, provides a holistic view of application health. Cloud Trace allows users to correlate performance data with logs and metrics, which speeds up root cause analysis and issue resolution. This detailed visibility makes it easier to build and maintain performant, reliable applications and to address bottlenecks before they affect the user experience.

Profiling Application Performance with Cloud Profiler

Cloud Profiler, a powerful component of Google Cloud Observability (formerly Stackdriver), offers invaluable insights into application performance. It achieves this by identifying the functions within an application that consume the most resources, such as CPU time and memory. By understanding these resource-intensive areas, developers can strategically optimize code to enhance efficiency and reduce operational costs. This proactive approach to performance management allows for the identification of bottlenecks and inefficiencies that might otherwise go unnoticed.

The process begins with the collection of profiling data. Cloud Profiler continuously samples the application’s execution, recording the functions that are actively running and the resources they are consuming. This data is then aggregated and presented in a visual format, allowing developers to easily identify the “hot spots” in their code. The collected data highlights which functions contribute most to resource consumption, enabling targeted optimization efforts. Cloud Profiler supports multiple programming languages, making it a versatile tool for diverse development environments. These insights help developers make informed decisions about code optimization, leading to improved application speed and reduced infrastructure costs.

Analyzing profiling data involves examining the call graphs and flame graphs generated by Cloud Profiler. Call graphs illustrate the relationships between different functions, showing how they call each other and the amount of time spent in each function. Flame graphs provide a hierarchical view of resource consumption, with wider sections indicating functions that consume more resources. By carefully studying these visualizations, developers can pinpoint the functions that are causing performance issues. For example, a slow database query or an inefficient algorithm might be readily apparent in the profiling data. Addressing these issues directly leads to tangible improvements in application performance. Furthermore, optimized code reduces the overall demand on infrastructure, potentially leading to lower cloud computing costs. Cloud Profiler is therefore valuable for any organization seeking to maximize the performance and cost-effectiveness of applications running on Google Cloud.
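The width of a flame-graph frame comes from simple counting over sampled call stacks. The sketch below shows that aggregation on made-up samples. It illustrates the general technique rather than Cloud Profiler’s own implementation, and the function names in the stacks are hypothetical.

```python
from collections import Counter


def aggregate_samples(samples: list[list[str]]) -> tuple[Counter, Counter]:
    """Aggregate sampled call stacks (root first, running function last).

    Returns (total, self_only):
      total[f]     = samples in which f appears anywhere on the stack,
                     which corresponds to the frame's width in a flame graph
      self_only[f] = samples in which f itself was running (the leaf)
    """
    total: Counter = Counter()
    self_only: Counter = Counter()
    for stack in samples:
        for fn in set(stack):  # count recursive functions once per sample
            total[fn] += 1
        self_only[stack[-1]] += 1
    return total, self_only


# Four made-up samples of one request handler.
samples = [
    ["main", "handler", "run_query"],
    ["main", "handler", "run_query"],
    ["main", "handler", "render"],
    ["main", "idle"],
]
total, self_only = aggregate_samples(samples)
print("widest frames:", total.most_common(3))
print("hottest leaf:", self_only.most_common(1))
```

A function with a large total but small self count is mostly waiting on its callees, so the optimization target is usually the leaf with the highest self count.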

Integrating Google Cloud Observability with Kubernetes

Effectively monitoring applications within Google Kubernetes Engine (GKE) requires a robust observability solution. Google Cloud Observability (formerly Stackdriver) integrates with Kubernetes, providing comprehensive insights into the health and performance of your containerized workloads. This integration allows you to collect container logs and metrics, monitor pod health, and trace requests across microservices, all within a unified platform.

Collecting container logs is crucial for troubleshooting and understanding application behavior. Google Cloud Observability automatically collects logs from containers running in GKE. These logs can be viewed, filtered, and analyzed using Cloud Logging. You can configure log-based metrics to track specific events or errors, enabling proactive alerting and performance monitoring, and chart those metrics on dashboards to spot trends and anomalies quickly.

Monitoring pod health is essential for ensuring the availability and reliability of your applications. Cloud Monitoring provides detailed metrics on pod resource utilization (CPU, memory, disk), restart counts, and readiness/liveness probe status. Alerting policies can be set up to notify you when pods are unhealthy or experiencing resource constraints, so that you can respond quickly and prevent downtime.
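As a small illustration of alerting on restart counts, the sketch below applies the same rule locally: given cumulative restart counts sampled over a window, it flags pods whose count rose by more than an allowed amount. The pod names, the numbers, and the threshold are invented, and in practice the samples would come from the restart-count metric that GKE reports to Cloud Monitoring.

```python
def pods_restarting(samples: dict[str, list[int]], max_restarts: int) -> dict[str, int]:
    """Return pods whose cumulative restart count rose by more than max_restarts.

    samples maps a pod name to its cumulative restart counts, oldest first.
    """
    flagged = {}
    for pod, counts in samples.items():
        if len(counts) < 2:
            continue  # not enough data to compute a change
        increase = counts[-1] - counts[0]
        if increase > max_restarts:
            flagged[pod] = increase
    return flagged


# Made-up samples taken over one alerting window.
window = {
    "checkout-7d9f": [3, 3, 4, 7, 9],  # crash looping
    "search-55c2": [1, 1, 1, 1, 1],    # stable
    "cart-0a1b": [0],                  # just started
}
print(pods_restarting(window, max_restarts=2))
```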

Tracing requests across microservices is vital for identifying performance bottlenecks in complex applications. Cloud Trace allows you to trace individual requests as they propagate through different services in your Kubernetes cluster. By visualizing the request flow and timing, you can pinpoint slow database queries, inefficient code sections, or network latency issues. The integration between Google Cloud Observability and Kubernetes gives you a holistic view of your application’s health, performance, and resource utilization. By combining monitoring, logging, and tracing, you can troubleshoot issues, optimize performance, and ensure the reliability of your containerized workloads. Setting up these integrations properly from the start pays off in long-term application reliability.
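For a request to show up as a single trace, each service has to pass the trace identity on to the next one. One widely used convention is the W3C Trace Context `traceparent` header. The sketch below assumes your services propagate that header, which depends on how they are instrumented. It shows how a child span keeps the trace ID while getting a new span ID. In real services an instrumentation library normally handles this for you.

```python
import secrets


def new_traceparent() -> str:
    """Start a new trace: version 00, random trace and span IDs, sampled flag set."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def child_traceparent(parent: str) -> str:
    """Header for an outgoing call: same trace ID, fresh span ID."""
    version, trace_id, _parent_span_id, flags = parent.split("-")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"


incoming = new_traceparent()            # as received by the first service
outgoing = child_traceparent(incoming)  # as sent to the next service
print(incoming)
print(outgoing)
```

Because both headers share the trace ID, the tracing backend can stitch the spans from both services into one request timeline.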

Best Practices for Optimizing Google Cloud Observability Usage and Costs

Effective management of Google Cloud Observability (formerly Stackdriver) is crucial for maintaining application performance without incurring unnecessary expense. A well-structured strategy covers log filtering, metric management, and alert configuration, along with an understanding of pricing models and cost management tools.

Start with a thorough evaluation of the logs being ingested into Cloud Logging. Excessive or irrelevant logging can significantly inflate costs. Implement filtering rules to exclude verbose or non-essential logs, consider aggregating similar log entries to reduce the volume of data stored, and set the retention period for logs according to their relevance and compliance requirements.

Cloud Monitoring relies on metrics to track application performance. High-cardinality metrics, whose labels take a large number of unique values, can consume substantial resources and increase costs. Identify and remove unnecessary labels or dimensions from metrics, and track only the performance indicators that matter most to your applications.

Alerting policies trigger notifications when metrics cross predefined thresholds. Overly sensitive or poorly configured alerts can generate excessive noise and consume valuable resources. Regularly review and refine alerting policies to ensure they are accurate and relevant, and adjust thresholds based on historical data and application behavior. Consider using dynamic thresholds that automatically adapt to changing workloads.
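The effect of a high-cardinality label is easy to see by counting. Each distinct combination of label values becomes its own time series, so the sketch below counts combinations before and after dropping a label. The label names and values are made up, and the helper functions are not part of any Google library.

```python
def count_time_series(label_sets: list[dict]) -> int:
    """Number of distinct label combinations, i.e. separate time series."""
    return len({tuple(sorted(labels.items())) for labels in label_sets})


def drop_label(label_sets: list[dict], label: str) -> list[dict]:
    """Return the same points with one label removed."""
    return [{k: v for k, v in labels.items() if k != label} for labels in label_sets]


# Hypothetical data points for a request-count metric.
points = [
    {"endpoint": "/checkout", "status": "200", "user_id": "u1"},
    {"endpoint": "/checkout", "status": "200", "user_id": "u2"},
    {"endpoint": "/checkout", "status": "500", "user_id": "u3"},
    {"endpoint": "/search", "status": "200", "user_id": "u1"},
]
print("with user_id:", count_time_series(points))
print("without user_id:", count_time_series(drop_label(points, "user_id")))
```

With real traffic the gap is far larger, because a per-user label grows with the user base while endpoint and status stay bounded.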

Understanding the pricing models for Google Cloud Observability services is critical for cost management. Cloud Logging charges based on the volume of data ingested and stored. Cloud Monitoring charges based on the volume of metric data ingested, which grows with the number of metrics and the frequency of data points. Cloud Trace and Cloud Profiler have their own pricing structures.

Use the cost management tools provided by Google Cloud Platform to gain visibility into your Observability spending. Cost breakdowns show where resource utilization can be optimized, and budgets with alerts help you track spending and prevent unexpected costs. Regularly review your log volume, metric cardinality, and alerting activity to pinpoint inefficiencies, and experiment with different configurations to balance performance against expense. Google Cloud Observability provides valuable insights into application health and performance, but its usage must be managed deliberately to keep costs under control.
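Volume-based pricing lends itself to a quick back-of-the-envelope estimate. The sketch below assumes a simple model with a free monthly allotment and a flat price per GiB beyond it. Both figures in the example are placeholders, not actual Google Cloud prices, so take the real values from the current pricing page.

```python
def estimate_ingestion_cost(
    ingested_gib: float, free_gib: float, price_per_gib: float
) -> float:
    """Estimate a monthly charge: only volume beyond the free allotment is billed."""
    billable = max(0.0, ingested_gib - free_gib)
    return billable * price_per_gib


# Placeholder numbers for illustration only, not real prices.
before = estimate_ingestion_cost(ingested_gib=150, free_gib=50, price_per_gib=0.5)
after = estimate_ingestion_cost(ingested_gib=90, free_gib=50, price_per_gib=0.5)
print(f"before filtering: {before:.2f}, after filtering: {after:.2f}")
```

Running the estimate with your own volumes before and after adding exclusion filters gives a rough sense of what a filtering change is worth.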

Proactive cost optimization is an ongoing process that requires continuous monitoring and refinement. Implement a feedback loop to track the impact of your optimization efforts and identify new opportunities for improvement. Share best practices and lessons learned with your team to promote a culture of cost consciousness. By following these best practices, organizations can maximize the value of Google Cloud Observability while minimizing their overall costs. These strategies balance comprehensive monitoring with efficient resource utilization, leading to better application performance and cost savings.