Understanding Azure Databricks Cost Optimization

Azure Databricks is a powerful platform for big data processing and analytics, offering a collaborative environment for data scientists, engineers, and analysts. It provides a unified workspace for tasks ranging from data ingestion and transformation to machine learning and real-time analytics. However, many users find the consumption-based pricing model challenging to navigate. Understanding the factors that influence Azure Databricks pricing is crucial for effective cost management.

One of the primary challenges lies in the dynamic nature of Databricks workloads. Costs can fluctuate significantly depending on the complexity of the data pipelines, the size of the datasets being processed, and the compute resources used. Organizations often struggle to predict and control their Azure Databricks spend, which leads to unexpected expenses. Several factors contribute to the overall cost, including Databricks Units (DBUs), instance types, storage, and networking. Efficient resource allocation and workload optimization are essential to minimize expenditure, and finding savings that do not hurt performance requires careful analysis and proactive management.

Managing consumption effectively starts with understanding the components of Azure Databricks pricing. Without proper planning and monitoring, organizations risk overspending on resources and eroding their return on investment. By applying cost optimization best practices and using the available tools, businesses can get the full value of Azure Databricks while keeping a predictable and manageable budget. A clear picture of how different features and configurations affect the bill supports informed decisions about resource allocation, workload scheduling, and infrastructure configuration.

Deciphering Azure Databricks Unit Charges: A Detailed Guide

Understanding Azure Databricks pricing requires a breakdown of its core components. Databricks Units (DBUs) form the foundation of the cost structure: a DBU is a unit of processing capability, billed according to usage. The underlying virtual machine instances and storage are charged separately and also contribute significantly to the overall bill. Different workloads consume different amounts of DBUs. For example, a complex data transformation job consumes more DBUs than a simple data query. Understanding these differences is crucial for managing cost.
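To make the cost structure concrete, the sketch below adds a DBU charge to a VM charge for a single cluster run. The function name, parameters, and all rates are illustrative assumptions, not published Azure prices; substitute the figures from your own price sheet.

```python
def estimate_cluster_cost(nodes, hours, dbu_per_node_hour, dbu_rate, vm_rate):
    """Estimate the cost of a cluster run as DBU charges plus VM charges.

    All rates are caller-supplied. The numbers used below are placeholders,
    not published Azure prices.
    """
    node_hours = nodes * hours
    dbu_cost = node_hours * dbu_per_node_hour * dbu_rate
    vm_cost = node_hours * vm_rate
    return {"dbu_cost": dbu_cost, "vm_cost": vm_cost, "total": dbu_cost + vm_cost}


# Hypothetical example: 4 nodes for 2 hours, 1.5 DBU per node-hour,
# 0.30 per DBU and 0.50 per VM-hour (made-up rates).
estimate = estimate_cluster_cost(
    nodes=4, hours=2, dbu_per_node_hour=1.5, dbu_rate=0.30, vm_rate=0.50
)
print(estimate)
```

Keeping the DBU and VM components separate in the result makes it easier to see which of the two dominates for a given workload.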

Instance types also play a vital role in Azure Databricks pricing. Selecting the appropriate instance type is essential for balancing cost and performance. Compute-optimized instances are suitable for CPU-intensive tasks. Memory-optimized instances are best for workloads that require large amounts of RAM. GPU-accelerated instances are well suited to machine learning tasks. Choosing the right instance type aligns resources with workload demands and prevents overspending on unnecessary capacity. Because several factors affect the final bill, keeping costs low is a matter of finding the right balance among them.

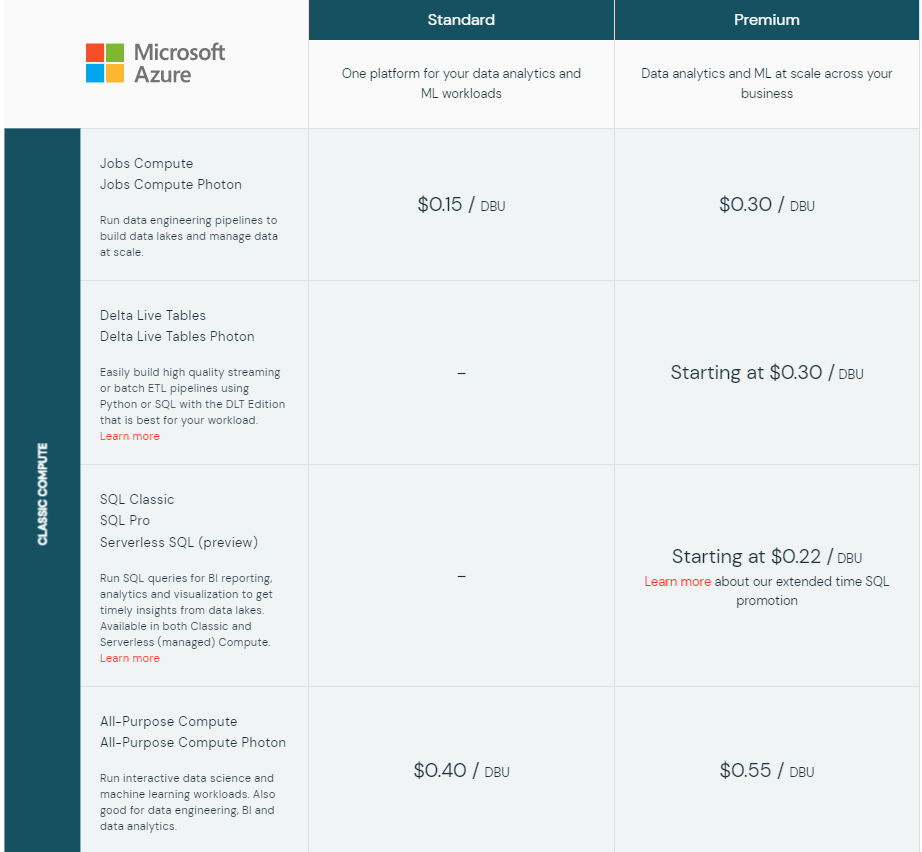

Azure Databricks workspaces are offered in two pricing tiers, Standard and Premium. Each tier provides different features at a different DBU price. The Standard tier offers basic functionality. The Premium tier adds advanced features such as role-based access control. Selecting the appropriate tier depends on specific organizational needs and requirements. Understanding the differences between the tiers allows informed decisions that align with both functionality and budgetary constraints.

Unlocking Savings: How to Analyze and Reduce Databricks Expenses

To manage and minimize unnecessary Azure Databricks costs, a multifaceted approach is essential. This involves using the tools available within Azure and Databricks itself to gain insight into consumption patterns and identify areas for optimization. Azure Cost Management provides a centralized view of your cloud spending, including detailed breakdowns of Azure Databricks charges. By analyzing DBU consumption trends, users can pinpoint specific jobs or clusters that contribute disproportionately to overall expenses. Databricks also provides its own monitoring tools, which offer granular visibility into cluster performance, job execution times, and resource utilization. These tools show how workloads consume DBUs and make targeted optimization possible.
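As a rough illustration of pinpointing the largest consumers, the following sketch totals DBUs per cluster from a list of usage records and ranks them by share. The record keys (`cluster`, `dbus`) and the sample data are hypothetical; adapt them to whatever columns your own cost export contains.

```python
from collections import defaultdict


def rank_dbu_consumers(usage_records):
    """Return (cluster, dbus, share) tuples sorted by DBUs, largest first.

    `usage_records` is an iterable of dicts with the hypothetical keys
    "cluster" and "dbus".
    """
    totals = defaultdict(float)
    for record in usage_records:
        totals[record["cluster"]] += record["dbus"]
    grand_total = sum(totals.values())
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [
        (name, dbus, dbus / grand_total if grand_total else 0.0)
        for name, dbus in ranked
    ]


# Made-up sample data for illustration only.
sample_usage = [
    {"cluster": "etl-nightly", "dbus": 120.0},
    {"cluster": "adhoc-analytics", "dbus": 30.0},
    {"cluster": "etl-nightly", "dbus": 40.0},
    {"cluster": "ml-training", "dbus": 10.0},
]
ranking = rank_dbu_consumers(sample_usage)
for name, dbus, share in ranking:
    print(f"{name}: {dbus:.1f} DBUs ({share:.0%})")
```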

One of the most impactful strategies for cost reduction is identifying and addressing idle clusters. Clusters that are left running when not actively processing data quickly accumulate unnecessary charges. Azure Cost Management can help highlight such clusters, so that users can either terminate them or configure them to auto-terminate after a period of inactivity. Another key area for optimization is job scheduling. Planning jobs to run during off-peak hours, or when resources are less constrained, can reduce contention and improve overall efficiency, which lowers DBU consumption. Right-sizing clusters is equally important, because over-provisioning leads to wasted capacity and increased costs. Carefully assess the resource requirements of your workloads and choose instance types that match those needs. In short, what you pay depends heavily on how your clusters and jobs are configured.
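The idle-cluster check described above can be sketched as a simple filter over a cluster inventory. The dictionary keys, the 30-minute threshold, and the sample inventory are assumptions made for illustration and do not reflect an official API schema.

```python
from datetime import datetime, timedelta


def find_idle_clusters(clusters, now, idle_threshold=timedelta(minutes=30)):
    """Return names of running clusters with no activity for `idle_threshold`.

    Each cluster is a dict with the hypothetical keys "name", "state",
    and "last_activity" (a datetime).
    """
    return [
        c["name"]
        for c in clusters
        if c["state"] == "RUNNING" and now - c["last_activity"] >= idle_threshold
    ]


# Made-up inventory for illustration only.
now = datetime(2024, 1, 15, 12, 0)
inventory = [
    {"name": "etl-nightly", "state": "RUNNING",
     "last_activity": now - timedelta(hours=3)},
    {"name": "adhoc-analytics", "state": "RUNNING",
     "last_activity": now - timedelta(minutes=5)},
    {"name": "ml-training", "state": "TERMINATED",
     "last_activity": now - timedelta(days=2)},
]
idle = find_idle_clusters(inventory, now)
print(idle)
```

Clusters flagged this way are candidates for termination or for a shorter auto-termination setting.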

Auto-scaling can further improve cost efficiency. It dynamically adjusts the number of worker nodes in a cluster based on the demands of the workload, so you pay only for the resources you actually need and avoid the cost of a static, over-provisioned cluster. Additionally, consider implementing resource quotas and cost allocation tags to improve accountability and track expenses across teams or projects. Continuous monitoring and refinement of your Databricks configurations can lead to significant savings over time.
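A cluster definition that combines these ideas might look like the sketch below, written as a Python dictionary in the shape accepted by the Databricks Clusters API. The cluster name, worker counts, timeout, and tag names are illustrative assumptions, and the runtime version and node type are left as placeholders to fill in.

```python
import json

# Sketch of a cluster specification with auto-scaling, auto-termination,
# and cost allocation tags. All values are placeholders to replace.
cluster_spec = {
    "cluster_name": "etl-autoscaling",       # hypothetical name
    "spark_version": "<runtime-version>",    # placeholder
    "node_type_id": "<node-type>",           # placeholder
    "autoscale": {"min_workers": 2, "max_workers": 8},
    "autotermination_minutes": 30,
    "custom_tags": {"team": "data-eng", "project": "sales-pipeline"},
}

print(json.dumps(cluster_spec, indent=2))
```

The tags make it possible to break spending down by team or project in cost reports.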

Strategies for Efficient Data Engineering Workflows on Databricks

Efficient data engineering practices are crucial for keeping Azure Databricks costs down. Optimizing these workflows translates directly into reduced DBU consumption and lower overall costs. Several techniques help achieve this, focusing on Spark code optimization, data format selection, and minimizing data shuffling.

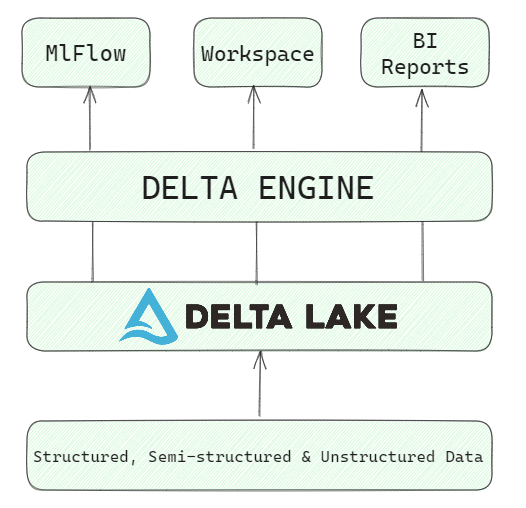

Optimizing Spark code involves carefully crafting transformations and actions to minimize resource usage. For example, avoid using `collect()` on large datasets as it moves all data to the driver node, potentially causing memory issues. Instead, leverage distributed operations and aggregations. Choose appropriate data formats like Parquet or Delta Lake. These formats offer efficient storage and retrieval due to columnar storage and compression capabilities. Delta Lake further enhances reliability with ACID transactions and versioning. Minimizing data shuffling is critical. Shuffling occurs when Spark needs to redistribute data across partitions, which is an expensive operation. Techniques like partitioning data appropriately and using broadcast joins for smaller datasets can significantly reduce shuffling. Consider the following example:

Instead of joining two large DataFrames directly, broadcast the smaller DataFrame to all executor nodes:

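    from pyspark.sql.functions import broadcast

    # Mark the small DataFrame for broadcast so each executor gets a full copy.
    broadcast_df = broadcast(small_df)
    joined_df = large_df.join(broadcast_df, "join_key")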

Because every executor receives a full copy of the small DataFrame, the large DataFrame does not have to be shuffled across the network for the join, which can yield substantial performance gains and lower compute costs. Furthermore, ensure that your data is partitioned on common join keys or filter criteria. This keeps related data on the same nodes and reduces the need for shuffling during subsequent operations. Another aspect of efficient data engineering is managing stateful operations. Windowing and aggregation operations can be resource-intensive, so use techniques such as incremental aggregation and approximate algorithms to reduce the amount of data processed. Design your pipelines to avoid unnecessary computations and intermediate data storage. Regularly review and profile your Spark code to identify performance bottlenecks, since these directly affect what you pay. Proactive monitoring and optimization of data engineering workflows are key to controlling costs and getting the most value from your Databricks investment.
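The idea behind incremental aggregation can be shown without Spark at all. The sketch below is plain Python, not a Spark API: it keeps running sums and counts per key so that each new batch is folded into existing state instead of recomputing over all historical rows. The class name and sample data are hypothetical.

```python
class RunningAverage:
    """Maintain a per-key average that is updated batch by batch."""

    def __init__(self):
        self._sums = {}
        self._counts = {}

    def update(self, batch):
        """Fold a batch of (key, value) pairs into the running state."""
        for key, value in batch:
            self._sums[key] = self._sums.get(key, 0.0) + value
            self._counts[key] = self._counts.get(key, 0) + 1

    def averages(self):
        """Return the current average for every key seen so far."""
        return {k: self._sums[k] / self._counts[k] for k in self._sums}


agg = RunningAverage()
agg.update([("eu", 10.0), ("us", 20.0)])  # first batch
agg.update([("eu", 30.0)])                # only the new rows are processed
print(agg.averages())
```

The work done per update is proportional to the size of the new batch rather than to the full history, which is where the savings come from.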

How to Choose the Optimal Databricks Instance Type for Your Workload

Selecting the right instance type is crucial for controlling Azure Databricks costs and ensuring efficient workload execution. Different workloads have different resource requirements, and choosing an instance that matches them can significantly affect both performance and cost. This section explains how to match instance types to specific job characteristics, considering CPU, memory, and GPU requirements.

For data transformation workloads, which often involve heavy data processing and shuffling, memory-optimized or compute-optimized instances are generally a good choice. Memory-optimized instances, such as those in the E series on Azure, provide a large amount of RAM, which is beneficial for caching intermediate data and reducing disk I/O. Compute-optimized instances, like the F series, offer a high CPU-to-memory ratio, making them suitable for CPU-intensive tasks. In contrast, machine learning training workloads often benefit from GPU-accelerated instances, such as the NC or ND series. GPUs can significantly speed up training for deep learning models, which means faster results and potentially lower overall cost because fewer compute hours are needed.

The trade-offs between CPU, memory, and GPU need careful evaluation. A workload that is bottlenecked by memory will not benefit from a high-CPU instance. Conversely, a CPU-bound workload will not see significant performance improvements from a GPU-accelerated instance. Consider two scenarios. Scenario A involves complex aggregations over a large data volume where intermediate results need to be cached; here, memory-optimized instances are the best fit. Scenario B involves training a deep learning model on image data; in this case, GPU instances are more efficient. By analyzing the characteristics of your workload, you can select the most appropriate instance type and keep costs aligned with your processing needs. The key is to understand your data, your processing requirements, and how these map to instance capabilities.
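The two scenarios can be expressed as a small decision rule. This is only a sketch: the function name is hypothetical, and the cutoff of 6 GB of memory per core is an arbitrary illustrative threshold rather than an Azure or Databricks recommendation.

```python
def suggest_instance_family(needs_gpu, memory_gb_per_core):
    """Suggest an Azure VM family from a coarse workload profile.

    The 6 GB-per-core cutoff is an arbitrary illustrative threshold.
    """
    if needs_gpu:
        return "GPU-accelerated (e.g. NC or ND series)"
    if memory_gb_per_core >= 6:
        return "memory-optimized (e.g. E series)"
    return "compute-optimized (e.g. F series)"


# Scenario A: large aggregations with cached intermediate results.
scenario_a = suggest_instance_family(needs_gpu=False, memory_gb_per_core=8)
# Scenario B: deep learning training on image data.
scenario_b = suggest_instance_family(needs_gpu=True, memory_gb_per_core=4)
print(scenario_a)
print(scenario_b)
```

In practice the inputs would come from profiling a representative run rather than from guesswork.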

Leveraging Azure Databricks Spot Instances for Cost Reduction

Azure Databricks offers a powerful cost-saving mechanism through Azure Spot Virtual Machines, referred to here as Spot Instances (the successor to low-priority VMs). These instances provide access to unused Azure compute capacity at significantly lower prices than regular on-demand instances. While this is a compelling way to reduce compute costs, it’s crucial to understand the trade-offs involved.

The primary consideration when using Spot Instances is the possibility of preemption. Because these instances are drawn from surplus capacity, Azure can reclaim them with a short notice of approximately 30 seconds. This means Databricks jobs running on Spot Instances can be interrupted. Spot Instances are therefore best suited to fault-tolerant workloads that handle interruptions gracefully. Examples include non-critical data transformation tasks, backfilling historical data, or running experiments where occasional restarts are acceptable. To mitigate the impact of preemption, implement checkpointing in your Spark jobs so they can resume from the last saved state. You can also configure your Databricks clusters to use a combination of Spot Instances and regular on-demand instances, which ensures that critical tasks continue to run even if Spot Instances are preempted.
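A mixed spot and on-demand cluster can be described with the `azure_attributes` block of a cluster specification. The sketch below shows one plausible configuration as a Python dictionary; the cluster name and worker count are assumptions, and the runtime version and node type are placeholders.

```python
# Sketch of a cluster specification that mixes on-demand and spot capacity.
# Runtime version, node type, and sizes are placeholders to replace.
spot_cluster_spec = {
    "cluster_name": "backfill-spot",         # hypothetical name
    "spark_version": "<runtime-version>",    # placeholder
    "node_type_id": "<node-type>",           # placeholder
    "num_workers": 8,
    "azure_attributes": {
        # Keep the first node (the driver) on on-demand capacity.
        "first_on_demand": 1,
        # Use spot capacity, falling back to on-demand if none is available.
        "availability": "SPOT_WITH_FALLBACK_AZURE",
        # -1 caps the spot price at the on-demand price.
        "spot_bid_max_price": -1,
    },
}
```

Keeping the driver on on-demand capacity means a preemption costs you workers, which Spark can replace, rather than the whole cluster.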

Conversely, Spot Instances are not suitable for latency-sensitive applications or production workloads that require guaranteed uptime. Critical ETL pipelines, real-time data processing, and interactive query services should typically run on on-demand instances to avoid disruptions. When configuring Spot Instances, carefully consider the instance type and availability zone. Some instance types may be more prone to preemption than others, and availability can vary across zones. Monitoring the preemption rates of different instance types can help you make informed decisions. By using Spot Instances strategically, organizations can significantly reduce their compute costs without compromising the reliability of their most critical workloads.

Mastering Azure Databricks Cost Monitoring and Alerting

Effective cost management is crucial for running an efficient Azure Databricks environment. Proactive monitoring and alerting allow you to identify and address potential cost overruns before they significantly affect your budget. Using the right tools and configurations within Azure and Databricks is essential for maintaining cost efficiency.

Azure Monitor offers comprehensive monitoring capabilities for your Databricks deployments. It can be used to track resource utilization, job performance metrics, and DBU consumption, which is a key driver of cost. By setting up custom dashboards and alerts in Azure Monitor, you can gain near real-time visibility into your Databricks usage and receive notifications when consumption exceeds predefined thresholds. Furthermore, Azure Cost Management provides tools for analyzing your Databricks spending patterns, identifying cost drivers, and forecasting future expenses. You can define budgets and configure alerts that notify you when you are approaching or exceeding the budget allocated to Databricks resources. Combining Azure Cost Management with Databricks monitoring gives a holistic view of your costs and supports data-driven decision-making.
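The logic behind a budget alert is simple enough to sketch directly. The function below is a hypothetical stand-in, not an Azure Cost Management API: it reports which fractions of a budget the current spend has crossed, using assumed thresholds of 50%, 80%, and 100%.

```python
def budget_alerts(spend, budget, thresholds=(0.5, 0.8, 1.0)):
    """Return the thresholds (as fractions of budget) that spend has crossed."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    used = spend / budget
    return [t for t in sorted(thresholds) if used >= t]


# Made-up figures: 850 spent against a budget of 1000.
crossed = budget_alerts(spend=850.0, budget=1000.0)
print(crossed)
```

Each crossed threshold would typically map to a notification of increasing urgency.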

Within Databricks itself, several built-in monitoring tools can strengthen your cost management strategy. The Databricks UI provides detailed information on cluster utilization, job execution times, and DBU consumption at the individual job level. You can use this data to identify inefficient code, optimize job scheduling, and right-size your clusters. By analyzing the performance of your Spark applications, you can pinpoint areas for optimization and reduce unnecessary resource consumption. Alerts based on job completion times or DBU consumption help you spot anomalies and address potential issues early. Regularly reviewing your monitoring data, analyzing cost trends, and adjusting your configurations helps you get the most value from your Databricks investment while keeping costs to a minimum.
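One simple way to spot anomalous DBU consumption is to compare the latest run against the spread of previous runs. The sketch below uses a mean plus k standard deviations rule; the function name, the default of k = 3, and the sample history are assumptions for illustration.

```python
from statistics import mean, stdev


def is_dbu_anomaly(history, latest, k=3.0):
    """Flag `latest` if it exceeds the historical mean by k standard deviations."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    return latest > mean(history) + k * stdev(history)


past_runs = [10.0, 11.0, 9.5, 10.5, 10.0]  # made-up DBUs per run
print(is_dbu_anomaly(past_runs, 25.0))
print(is_dbu_anomaly(past_runs, 10.8))
```

A rule this simple will misfire on jobs with strongly seasonal usage, so treat it as a starting point rather than a finished detector.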

Future-Proofing Your Databricks Environment: Emerging Cost Optimization Techniques

The landscape of cloud computing is ever-evolving, and Azure Databricks is no exception. Future cost optimization strategies will likely involve a combination of technological advancements and refined pricing models. One area to watch is the potential expansion of serverless Databricks capabilities. Serverless computing abstracts away the need to manage underlying infrastructure, potentially leading to more efficient resource utilization and reduced costs. This approach could allow users to focus solely on their data processing tasks while the platform scales resources automatically based on demand.

Advanced cost prediction models are also on the horizon. Machine learning algorithms can analyze historical DBU consumption patterns, workload characteristics, and other relevant factors to forecast future costs with greater accuracy. These models can help users identify potential overspending early and make informed decisions about resource allocation. Furthermore, tighter integrations with other Azure services will play a key role in optimizing overall expenses. For example, integrating Databricks with Azure Synapse Analytics or Azure Data Lake Storage Gen2 can streamline data pipelines and reduce the need for costly data movement. Optimizing the interaction between these services will be crucial for managing cost efficiently.
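As a deliberately simple stand-in for the prediction models described above, the sketch below fits a least-squares line to daily DBU totals and extrapolates it. It is not a machine learning model, and the function name and sample history are hypothetical.

```python
def forecast_linear(daily_dbus, days_ahead):
    """Extrapolate a least-squares line through daily DBU totals."""
    n = len(daily_dbus)
    if n < 2:
        raise ValueError("need at least two observations")
    xs = range(n)
    x_mean = sum(xs) / n
    y_mean = sum(daily_dbus) / n
    # Ordinary least-squares slope and intercept for y = intercept + slope * x.
    slope = (
        sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, daily_dbus))
        / sum((x - x_mean) ** 2 for x in xs)
    )
    intercept = y_mean - slope * x_mean
    return [intercept + slope * (n - 1 + d) for d in range(1, days_ahead + 1)]


history = [100.0, 110.0, 120.0, 130.0]  # made-up daily DBU totals
next_two_days = forecast_linear(history, 2)
print(next_two_days)
```

A straight-line trend ignores weekly cycles and one-off spikes, which is exactly the gap that richer forecasting models aim to close.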

Finally, potential improvements in the Azure Databricks pricing model itself could offer new avenues for cost savings. These might include more granular pricing tiers, specialized instance types tailored to specific workloads, or discount programs based on committed usage. Keeping abreast of these developments will be essential for organizations seeking to maximize the value of their Databricks investments. As the platform matures, expect more sophisticated tools and techniques for fine-tuning spend. Adopting them can reduce expenses while also improving the agility and scalability of your Databricks environment.