Understanding Data Pipelines in Azure: A Comprehensive Overview

Data pipelines are fundamental to modern data integration: they move and transform data from various sources to their destinations. Within the Azure ecosystem, Azure Data Factory (ADF) is a fully managed, cloud-based ETL (Extract, Transform, Load) service designed for building these pipelines. It lets users orchestrate and automate data movement and transformation at scale. With ADF, businesses can create workflows that ingest data from diverse on-premises and cloud-based data stores, process it, and load it into target systems for analytics and reporting. Its authoring interface and broad feature set streamline the creation of robust and efficient Azure Data Factory pipelines.

The benefits of using ADF start with scalability. ADF can handle large volumes of data without extensive infrastructure management, scaling resources up or down to meet fluctuating demand. Cost-effectiveness is another advantage: users pay only for the resources consumed during pipeline execution, with no upfront capital investment. ADF also integrates with a wide array of other Azure services, such as Azure Blob Storage, Azure SQL Database, Azure Synapse Analytics, Azure Databricks, and Azure Machine Learning, so users can build end-to-end data solutions within the Azure environment.

Beyond scalability and cost, ADF offers monitoring and management capabilities. Users can track pipeline execution, identify bottlenecks, and troubleshoot issues through the Azure portal, which makes it easier to catch failures early and intervene before data quality suffers. Parameterized pipelines promote reusability and simplify maintenance: by defining parameters, the same pipeline can process different datasets or target different environments without changes to its definition. This flexibility reduces development time and operational effort. In short, ADF provides a platform for building, deploying, and managing pipelines, and with careful design and optimization those pipelines can be a scalable, secure, and cost-effective solution for your data integration needs.

How to Build Data Pipelines with Azure’s Data Integration Tool

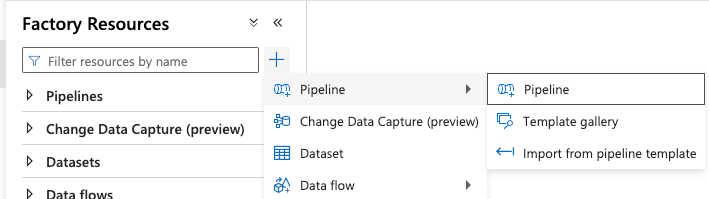

Creating an Azure Data Factory pipeline involves several key steps within the ADF service. This guide outlines the process of building a basic pipeline, focusing on core components and their configuration. The first step is to create a linked service, which holds the connection information for a data store such as Azure Blob Storage or Azure SQL Database. The linked service acts as a bridge that allows ADF to access and interact with your data. Next, define datasets that represent the data within the linked service. A dataset specifies the format (e.g., CSV, JSON), location, and schema of the data, so ADF knows how to read and write it. Together, linked services and datasets form the foundation for data movement and transformation in your pipelines.
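As a rough illustration of how a linked service and a dataset relate, the sketch below builds both definitions as Python dictionaries and prints the dataset as JSON. The property names approximate the JSON shape ADF uses for these resources, but every name and value here (`BlobStorageLinkedService`, `SalesCsvDataset`, the container and file) is a hypothetical placeholder, so check the structure against the current ADF documentation before relying on it.

```python
import json

# Hypothetical names and placeholder values; nothing here comes from a real factory.
linked_service = {
    "name": "BlobStorageLinkedService",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
            # Placeholder only; a Key Vault reference is preferable to an inline secret.
            "connectionString": "<storage-connection-string>"
        },
    },
}

dataset = {
    "name": "SalesCsvDataset",
    "properties": {
        "type": "DelimitedText",  # CSV-style text files
        # The dataset points back to the linked service that knows how to connect.
        "linkedServiceName": {
            "referenceName": linked_service["name"],
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "sales",
                "fileName": "daily.csv",
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}

print(json.dumps(dataset, indent=2))
```

The dataset carries no credentials of its own; it only names the linked service, which is why one linked service can back many datasets.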

With the data connection established, the next step is to define activities within your pipeline. Activities represent the actions performed on your data, such as copying data from one location to another or transforming it with a data flow. The “Copy Activity” is commonly used for data movement between data stores; configure its source and sink datasets to specify where data is read from and written to. For more complex transformations, use the “Data Flow Activity.” Data flows provide a visual interface for designing transformations such as aggregations, joins, and filtering. These activities are the workhorses of your pipelines, moving and transforming data according to the logic you define.
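To make the source and sink configuration concrete, here is a minimal sketch of a pipeline containing a single Copy activity, again built as a Python dictionary. The dataset names are hypothetical, and the `DelimitedTextSource` and `DelimitedTextSink` types assume both datasets are delimited text; other formats use different source and sink types.

```python
import json


def dataset_ref(name: str) -> dict:
    """Build the reference object an activity uses to point at a dataset."""
    return {"referenceName": name, "type": "DatasetReference"}


# Hypothetical dataset and activity names, chosen only for this illustration.
copy_activity = {
    "name": "CopySalesCsv",
    "type": "Copy",
    "inputs": [dataset_ref("SalesCsvDataset")],          # where data is read from
    "outputs": [dataset_ref("SalesCsvStagingDataset")],  # where data is written to
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "DelimitedTextSink"},
    },
}

pipeline = {
    "name": "CopySalesPipeline",
    "properties": {"activities": [copy_activity]},
}

print(json.dumps(pipeline, indent=2))
```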

Finally, triggers start the execution of your pipelines. Triggers can be schedule-based or event-based, and a pipeline can also be run manually on demand. A schedule trigger runs your pipeline at regular intervals, such as daily or hourly. An event-based trigger responds to a specific event, such as the arrival of a new file in a blob storage container. Once a trigger fires, ADF executes the activities in the pipeline, moving and transforming data according to your specifications. Throughout this process, ADF's monitoring lets you track pipeline runs and identify errors. By following these steps, you can build robust and scalable Azure Data Factory pipelines.
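A schedule trigger can be sketched the same way. The helper below is illustrative only: the function name, trigger name, pipeline name, and start time are assumptions, and the recurrence fields approximate the shape ADF uses for schedule triggers.

```python
import json


def schedule_trigger(name, pipeline_name, frequency, interval, start_time):
    """Return a schedule-trigger definition that runs one pipeline on a recurrence."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": frequency,   # e.g. "Hour" or "Day"
                    "interval": interval,     # run every `interval` units of `frequency`
                    "startTime": start_time,  # ISO 8601 timestamp
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


# Hypothetical trigger: run CopySalesPipeline once a day.
daily = schedule_trigger(
    "DailySalesTrigger", "CopySalesPipeline", "Day", 1, "2024-01-01T02:00:00Z"
)
print(json.dumps(daily, indent=2))
```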

Mastering Control Flow Activities for Robust Data Orchestration

Control flow activities in Azure Data Factory (ADF) are essential for building robust and dynamic pipelines. They orchestrate tasks, enabling conditional execution, looping, and dependency management within your data workflows. ADF offers several control flow activities, each designed for a specific orchestration need.

The “If Condition” activity implements conditional logic. Based on the evaluation of an expression, it directs the pipeline to execute one set of activities or another. This is useful for handling different data scenarios or error conditions. For example, you might use an “If Condition” to check whether a file exists before attempting to process it.

The “ForEach” activity iterates over a collection of items and executes a set of activities for each one. This is particularly useful when processing multiple files, tables, or datasets. The current item is passed dynamically to the activities within the loop, which keeps the processing logic generic.

The “Until” activity loops until a specific condition is met or a timeout is reached. This is helpful when you need to retry an operation until it succeeds. For instance, you might use an “Until” activity to repeatedly check for the availability of a resource before proceeding with further processing.

The “Execute Pipeline” activity triggers another pipeline from within the current one. This enables modularization and reuse, allowing you to break complex workflows into smaller, manageable components. You can pass parameters to the child pipeline to coordinate the work.

By combining these control flow activities, you can build pipelines that handle a wide range of data integration challenges.
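The four control flow activities described above can be sketched as small builder functions that return activity definitions. This is an illustration under stated assumptions: the helper names (`expr`, `execute_pipeline`, `if_condition`, `for_each`, `until`), the pipeline and parameter names, and the `resourceReady` variable are all hypothetical, and the property layout approximates ADF's activity JSON rather than reproducing an authoritative schema.

```python
import json


def expr(value: str) -> dict:
    """Wrap an ADF expression string in the object form used by activity definitions."""
    return {"value": value, "type": "Expression"}


def execute_pipeline(name, pipeline_name, parameters=None):
    return {
        "name": name,
        "type": "ExecutePipeline",
        "typeProperties": {
            "pipeline": {"referenceName": pipeline_name, "type": "PipelineReference"},
            "parameters": parameters or {},
            "waitOnCompletion": True,
        },
    }


def if_condition(name, condition, if_true, if_false=()):
    return {
        "name": name,
        "type": "IfCondition",
        "typeProperties": {
            "expression": expr(condition),
            "ifTrueActivities": list(if_true),
            "ifFalseActivities": list(if_false),
        },
    }


def for_each(name, items, activities, sequential=False):
    return {
        "name": name,
        "type": "ForEach",
        "typeProperties": {
            "items": expr(items),
            "isSequential": sequential,
            "activities": list(activities),
        },
    }


def until(name, condition, activities, timeout="0.01:00:00"):
    return {
        "name": name,
        "type": "Until",
        "typeProperties": {
            "expression": expr(condition),
            "timeout": timeout,  # d.hh:mm:ss; here one hour
            "activities": list(activities),
        },
    }


# Run a child pipeline only when the (hypothetical) fileNames parameter is non-empty.
gate = if_condition(
    "IfFilesListed",
    "@greater(length(pipeline().parameters.fileNames), 0)",
    [execute_pipeline("RunProcessing", "ProcessFilesPipeline")],
)

# Call a child pipeline once per file, passing the current item as a parameter.
loop = for_each(
    "ForEachFile",
    "@pipeline().parameters.fileNames",
    [execute_pipeline("LoadOneFile", "LoadFilePipeline", {"fileName": expr("@item()")})],
)

# Poll every 30 seconds until a pipeline variable (set elsewhere) becomes true.
poll = until(
    "WaitForResource",
    "@equals(variables('resourceReady'), true)",
    [{"name": "Pause", "type": "Wait", "typeProperties": {"waitTimeInSeconds": 30}}],
)

print(json.dumps([gate, loop, poll], indent=2))
```

Keeping each loop body to a single “Execute Pipeline” call is a deliberate choice in this sketch: it keeps the parent pipeline shallow and pushes the per-item logic into a child pipeline that can be tested on its own.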

Consider a scenario where you need to process daily sales data. You can use an “If Condition” activity to check whether the sales data file for the current day exists. If it does, a “ForEach” activity can iterate over each sales transaction in the file and perform data cleansing and transformation. If a transaction fails validation, an “Until” activity can retry the validation a set number of times before logging an error. Because ADF limits how loop activities can be nested inside one another, that retry loop may need to live in a child pipeline called through “Execute Pipeline”. Finally, an “Execute Pipeline” activity can trigger a separate pipeline that loads the processed sales data into a data warehouse.

Another common use case involves dependencies between data sources. You might have a pipeline that extracts data from multiple sources and loads it into a staging area. Before loading the data into the target system, you need to ensure that all the source data has been extracted successfully. Control flow activities let you define these dependencies so that the pipelines execute in the correct order. For example, an “If Condition” activity can check that all source files have been received before triggering the loading pipeline, which prevents errors caused by incomplete data.

Working with Data Flows: Transforming Your Data in the Cloud

Data flows within Azure Data Factory pipelines offer a visually driven approach to data transformation, enabling developers to construct complex ETL (Extract, Transform, Load) processes without writing extensive code. Users design transformations through a graphical interface, which simplifies the development and maintenance of data integration solutions. Data flows handle diverse data manipulation tasks, from simple data cleansing to intricate data modeling. Their power lies in abstracting away the complexities of the underlying data processing engine, so users can focus on the transformation logic.

The transformation options within data flows are extensive, encompassing aggregations, joins, lookups, derived columns, and more. Aggregations allow users to summarize data by grouping rows based on specified criteria. Joins enable the combination of data from multiple sources based on related columns. Lookups enrich data by retrieving values from external datasets. Derived columns facilitate the creation of new columns based on existing data using expressions and functions. These transformations, when combined, provide a robust toolkit for manipulating data to meet specific business requirements. Azure Data Factory pipelines benefit significantly from these capabilities, as they enable the creation of highly customized data processing workflows.
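To make the semantics of these transformations concrete, the sketch below applies a derived column, a lookup, and an aggregation to a few in-memory rows using plain Python. It is not data flow script and does not call ADF; the sample rows and field names are invented purely to show what each transformation does to the data.

```python
from collections import defaultdict

# Invented sample data for illustration.
orders = [
    {"order_id": 1, "store_id": "A", "quantity": 2, "unit_price": 5.0},
    {"order_id": 2, "store_id": "B", "quantity": 1, "unit_price": 20.0},
    {"order_id": 3, "store_id": "A", "quantity": 4, "unit_price": 2.5},
]
store_regions = {"A": "North", "B": "South"}  # reference data: store_id -> region

# Derived column: compute a new field from existing ones.
derived = [{**o, "total": o["quantity"] * o["unit_price"]} for o in orders]

# Lookup: enrich each row with a value retrieved from the reference data.
enriched = [{**o, "region": store_regions[o["store_id"]]} for o in derived]

# Aggregate: group rows by region and sum the derived totals.
totals_by_region = defaultdict(float)
for row in enriched:
    totals_by_region[row["region"]] += row["total"]

print(dict(totals_by_region))
```

In a data flow, each of these steps would be a separate transformation on the canvas, chained in the same order.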

One of the primary benefits of utilizing data flows in Azure Data Factory pipelines is the ability to handle complex data manipulation tasks with ease. The visual nature of data flows makes it easier to understand and maintain data transformation logic compared to traditional coding approaches. Furthermore, data flows are optimized for performance, leveraging the power of Azure’s scalable compute resources to process large datasets efficiently. By using data flows, organizations can accelerate the development of their data integration solutions, improve data quality, and gain valuable insights from their data assets. Azure Data Factory pipelines, enhanced with data flows, provide a comprehensive platform for modern data integration needs, allowing for a more streamlined and effective approach to managing and transforming data in the cloud.

Monitoring and Debugging Data Pipelines: Ensuring Data Integrity

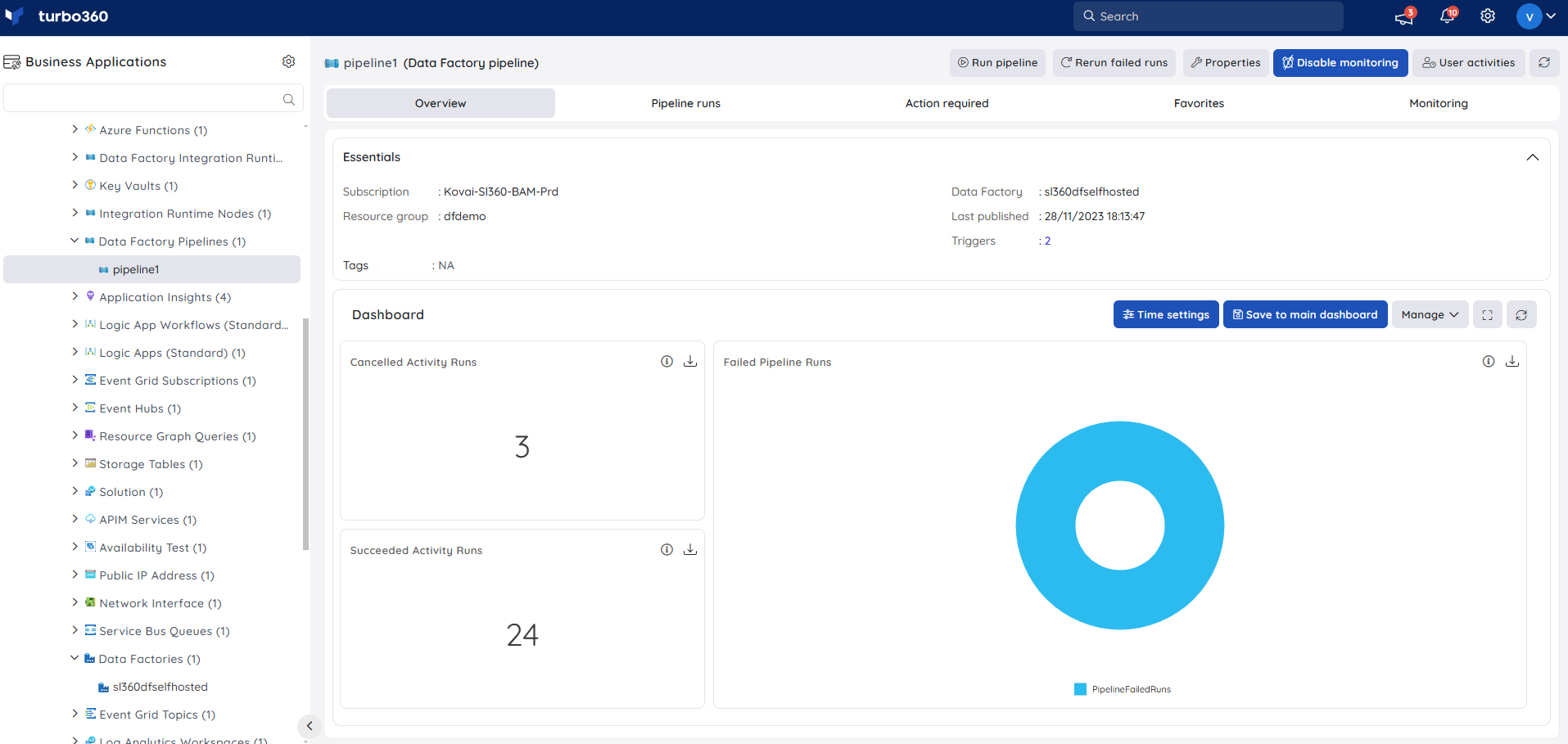

Data integrity is paramount when working with data pipelines, and monitoring and debugging Azure Data Factory pipelines is essential for keeping data accurate and reliable. The Azure portal provides monitoring capabilities that let users track pipeline executions, identify errors, and troubleshoot issues. Knowing how to use these tools is crucial for maintaining healthy and dependable pipelines.

The monitoring section within the Azure portal offers a visual representation of pipeline runs, activity statuses, and execution times. Users can drill down into specific activity runs to view detailed logs and error messages. This granular visibility enables quick identification of bottlenecks and failures.

Robust logging is also vital. Pipelines should record relevant information about data transformations, input parameters, and output results. This detail makes debugging easier and helps trace data lineage. Consider adding custom logging to data flow activities to capture specific transformation details.

Effective error handling is another critical aspect of pipeline maintenance. Build error handling into your activities so that failures are caught and handled gracefully. This might involve retrying failed activities, sending notifications, or writing error details to a dedicated error log. Well-designed pipelines anticipate errors and provide mechanisms for recovery.
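One way to express the retry and notification strategies just described is a retry policy on the activity plus a follow-up activity that runs only on failure. The sketch below is a hedged illustration: the activity names, dataset names, and alert URL are hypothetical, and the `policy`, `dependsOn`, and expression syntax approximate ADF's activity JSON and should be verified against the current documentation.

```python
import json

# Copy activity with a retry policy: up to 3 retries, 60 seconds apart.
copy_with_retry = {
    "name": "CopySalesCsv",
    "type": "Copy",
    "policy": {
        "timeout": "0.01:00:00",       # give up on a single attempt after one hour
        "retry": 3,
        "retryIntervalInSeconds": 60,
    },
    "inputs": [{"referenceName": "SalesCsvDataset", "type": "DatasetReference"}],
    "outputs": [{"referenceName": "SalesCsvStagingDataset", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "DelimitedTextSink"},
    },
}

# Runs only if the copy still fails after its retries are exhausted.
notify_on_failure = {
    "name": "NotifyOnFailure",
    "type": "WebActivity",
    "dependsOn": [
        {"activity": copy_with_retry["name"], "dependencyConditions": ["Failed"]}
    ],
    "typeProperties": {
        "url": "https://example.invalid/alerts",  # hypothetical alert endpoint
        "method": "POST",
        "body": {
            "pipeline": "@{pipeline().Pipeline}",
            "runId": "@{pipeline().RunId}",
            # Assumed expression for the failed activity's error text.
            "error": "@{activity('CopySalesCsv').error.message}",
        },
    },
}

print(json.dumps([copy_with_retry, notify_on_failure], indent=2))
```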

Troubleshooting Azure Data Factory pipelines often involves analyzing error messages and execution logs. Understanding common error codes and their causes makes debugging more efficient. For instance, connection errors might indicate issues with linked services, while data transformation errors might point to problems within data flows. When you encounter errors, carefully review the activity inputs, outputs, and configuration settings. Check for data type mismatches, incorrect file paths, or invalid parameter values. Debug mode in data flows lets you interactively preview the data at each transformation step, which greatly simplifies debugging. Regularly review pipeline execution history and address recurring errors or performance issues before they grow. Proactive monitoring and debugging keep your pipelines reliable and accurate, which in turn improves data quality.

Optimizing Performance of Azure Data Integration Services: Best Practices

Achieving optimal performance in Azure Data Factory pipelines requires both sound design choices and careful configuration. One of the primary considerations is selecting the appropriate integration runtime. The Azure integration runtime is suitable for activities running within Azure, while the self-hosted integration runtime provides connectivity to on-premises data stores. Choosing the runtime that aligns with the locations of your data source and destination minimizes latency and maximizes throughput.
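The runtime choice shows up in configuration as a reference on the linked service. The following sketch assumes a hypothetical on-premises SQL Server reached through a self-hosted integration runtime named `SelfHostedIR`; the names and connection string are placeholders, and the `connectVia` layout approximates ADF's linked service JSON.

```python
import json

on_prem_sql = {
    "name": "OnPremSqlServerLinkedService",
    "properties": {
        "type": "SqlServer",
        "typeProperties": {"connectionString": "<sql-server-connection-string>"},
        # Route connections through a self-hosted integration runtime
        # installed inside the private network (hypothetical name).
        "connectVia": {
            "referenceName": "SelfHostedIR",
            "type": "IntegrationRuntimeReference",
        },
    },
}

print(json.dumps(on_prem_sql, indent=2))
```

Linked services for stores that live in Azure can omit this reference and fall back to the default Azure integration runtime.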

Data flow performance is another critical area for optimization. Data flows can become bottlenecks if they are not designed carefully. Apply transformations that reduce data volume, such as aggregations, early in the flow. Consider partitioning large datasets to enable parallel processing, which speeds up transformation execution. Minimizing data movement is also important: perform transformations as close to the data source as possible to reduce network traffic and processing overhead.

Parameterized pipelines improve reusability and simplify management. Parameterization lets you define generic pipelines that adapt to different data sources and destinations without changes to the pipeline definition, which reduces duplicated work and the risk of errors.
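Parameterization can be sketched as a pipeline parameter that flows into a dataset parameter. In the illustration below, `sourceFolder`, `folder`, and all resource names are hypothetical, and the expression syntax (`@pipeline().parameters...`, `@dataset()...`) follows ADF's expression language as best understood here; treat the exact layout as an assumption to verify.

```python
import json

# A generic dataset whose folder is supplied at run time.
dataset = {
    "name": "GenericCsvDataset",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": "BlobStorageLinkedService",
            "type": "LinkedServiceReference",
        },
        "parameters": {"folder": {"type": "String"}},
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "sales",
                "folderPath": {"value": "@dataset().folder", "type": "Expression"},
            }
        },
    },
}

# A pipeline that forwards its own parameter to the dataset.
pipeline = {
    "name": "GenericCopyPipeline",
    "properties": {
        "parameters": {"sourceFolder": {"type": "String", "defaultValue": "incoming"}},
        "activities": [
            {
                "name": "CopyFolder",
                "type": "Copy",
                "inputs": [
                    {
                        "referenceName": dataset["name"],
                        "type": "DatasetReference",
                        "parameters": {
                            "folder": {
                                "value": "@pipeline().parameters.sourceFolder",
                                "type": "Expression",
                            }
                        },
                    }
                ],
                "outputs": [{"referenceName": "StagingDataset", "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "DelimitedTextSink"},
                },
            }
        ],
    },
}

print(json.dumps(pipeline, indent=2))
```

Running the same pipeline with a different `sourceFolder` value then processes a different folder without touching either definition.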

Compression and file formats also play a significant role in pipeline performance. Compression codecs such as GZIP or Snappy reduce the size of data being transferred and stored, which speeds up transfers and lowers storage costs. Column-oriented file formats such as Parquet or ORC can significantly improve query performance, especially for analytical workloads, because a query reads only the columns it needs and so performs fewer I/O operations. Together, these techniques improve both the efficiency and the cost-effectiveness of Azure Data Factory pipelines.
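The effect of compression on repetitive tabular data is easy to observe with Python's standard library. This small demonstration uses invented CSV rows and the `gzip` module; it says nothing about ADF's own behavior and only illustrates why compressed files are cheaper to move and store.

```python
import gzip

# Build an invented, highly repetitive CSV payload.
rows = ["store_id,region,amount"]
rows += [f"{i % 5},North,{i % 100}.00" for i in range(10_000)]
raw = "\n".join(rows).encode("utf-8")

compressed = gzip.compress(raw)
ratio = len(compressed) / len(raw)

print(f"raw={len(raw)} bytes, gzip={len(compressed)} bytes, ratio={ratio:.2%}")

# Compression is lossless: decompressing returns the original bytes.
restored = gzip.decompress(compressed)
```

Real data is usually less repetitive than this sample, so actual ratios will vary with the content.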

Securing Your Data Pipelines: Protecting Sensitive Information in Transit and at Rest

Security is paramount when designing and implementing Azure Data Factory pipelines. Protecting sensitive data during movement and storage is crucial for compliance and for maintaining trust. Several Azure features and best practices help secure linked services, datasets, and the pipelines themselves.

Securing linked services is a primary concern. Azure Key Vault offers a secure way to store connection strings, passwords, and other secrets. Instead of embedding credentials directly in the linked service definition, you can reference secrets stored in Key Vault, which keeps sensitive information out of the Azure Data Factory metadata. Access to Key Vault should be strictly controlled using Microsoft Entra ID (formerly Azure Active Directory) roles and managed identities. Managed identities for Azure resources allow your data factory to authenticate to Key Vault without requiring you to manage credentials.

Data encryption is another critical aspect of security. Azure Storage encrypts data at rest automatically before it is persisted. For data in transit, make sure you use secure protocols such as HTTPS when connecting to data sources.

Network security also plays a vital role. Azure Virtual Network (VNet) integration lets you place your Azure Data Factory resources on a private network, restricting access to pipelines and data sources to resources within the VNet. Consider using private endpoints to connect to Azure services such as Azure SQL Database and Azure Storage, so that traffic stays within the Azure network and is not exposed to the public internet. Together, these measures significantly reduce the risk of unauthorized access and data breaches.
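Referencing a Key Vault secret from a linked service, as described earlier in this section, might look like the sketch below. The vault URL, secret name, and linked service names are hypothetical placeholders, and the `AzureKeyVaultSecret` reference layout approximates ADF's JSON; confirm it against the current documentation before use.

```python
import json

# Linked service that points at the vault itself (placeholder URL).
key_vault_ls = {
    "name": "KeyVaultLinkedService",
    "properties": {
        "type": "AzureKeyVault",
        "typeProperties": {"baseUrl": "https://<your-vault-name>.vault.azure.net"},
    },
}

# Linked service whose connection string is resolved from the vault at run time.
sql_ls = {
    "name": "AzureSqlLinkedService",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            "connectionString": {
                "type": "AzureKeyVaultSecret",
                "store": {
                    "referenceName": key_vault_ls["name"],
                    "type": "LinkedServiceReference",
                },
                "secretName": "sql-connection-string",  # hypothetical secret name
            }
        },
    },
}

print(json.dumps(sql_ls, indent=2))
```

The definition stores only the name of the secret, so exporting or reviewing the factory's metadata does not reveal the credential.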

Access control is also essential. Azure role-based access control (RBAC) lets you grant specific permissions to users and groups, limiting their access to Azure Data Factory resources. For example, you can grant a user the “Data Factory Contributor” role so they can create and manage the factory's resources, while access to the secrets in Key Vault is granted separately. Regularly review and update access control policies so that users have only the permissions they need.

Data masking and tokenization can protect sensitive data within the pipelines themselves. Data masking replaces sensitive data with dummy values, while tokenization replaces it with non-sensitive tokens. Both techniques help prevent sensitive data from being exposed during pipeline execution or in logs.

Monitoring and auditing are crucial for detecting and responding to security incidents. Enable Microsoft Defender for Cloud (formerly Azure Security Center) to monitor your Azure environment for potential threats, and review audit logs regularly to identify suspicious activity. With these measures in place, your pipelines are better protected from unauthorized access and data breaches.
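The difference between masking and tokenization can be shown with a few lines of standard-library Python. This is a simplified sketch, not an ADF feature: `mask_email` and `tokenize` are hypothetical helpers, and the keyed hash stands in for a real tokenization service, which would normally keep a protected mapping from tokens back to the original values.

```python
import hashlib
import hmac


def mask_email(email: str) -> str:
    """Masking: keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return local[:1] + "*" * (len(local) - 1) + "@" + domain


def tokenize(value: str, key: bytes) -> str:
    """Token stand-in: a keyed hash, so equal inputs yield equal tokens."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


secret_key = b"demo-key-not-for-production"  # placeholder; load real keys from a vault

masked = mask_email("alice@example.com")
token_a = tokenize("alice@example.com", secret_key)
token_b = tokenize("alice@example.com", secret_key)

print(masked)
print(token_a)
```

Because the token is deterministic for a given key, tokenized columns can still be joined or grouped, which masked values generally cannot.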

Real-World Use Cases: Applying Data Integration Services to Solve Business Problems

Azure Data Factory pipelines offer versatile solutions for many business challenges. One common application is data warehousing, where ADF pipelines automate the extraction, transformation, and loading (ETL) of data from disparate sources into a central data warehouse. Consider a retail company consolidating sales data from online stores, physical locations, and third-party marketplaces. An Azure Data Factory pipeline can extract this data, cleanse and transform it into a consistent format, and load it into a data warehouse for analysis and reporting. This gives the company insight into sales trends, customer behavior, and inventory management.

Another significant use case is data migration. Businesses often need to move data from on-premises systems to the cloud or between cloud platforms, and ADF simplifies this with connectors to a wide range of sources and destinations. Imagine a financial institution migrating its customer data from an on-premises database to Azure SQL Database: Azure Data Factory pipelines can transfer the data securely while preserving data integrity and limiting downtime. ADF can also support near-real-time integration. For example, a manufacturing company can use event-based or frequently scheduled pipelines to ingest sensor data from its equipment shortly after it arrives, process it, and feed a dashboard for monitoring performance and predicting maintenance needs. Because ADF is a batch-oriented orchestrator, true streaming workloads are usually handled by a dedicated streaming service, with ADF processing the data downstream. This proactive approach can help prevent equipment failures and optimize production efficiency.

Beyond these examples, Azure Data Factory pipelines are instrumental in building data lakes. Organizations can use ADF to ingest structured, semi-structured, and unstructured data into a data lake and prepare it for advanced analytics and machine learning. Consider a healthcare provider collecting patient data from electronic health records, wearable devices, and medical imaging systems. ADF pipelines can ingest and process this diverse data and store it in a data lake, where researchers can analyze it to identify patterns that improve patient care and outcomes. The ability to handle complex transformations and orchestrate end-to-end data flows makes Azure Data Factory pipelines a critical tool for organizations that want to get more value from their data.