What is Google Cloud’s Collaborative Notebook Environment and Why Use It?

Google Cloud Datalab is a cloud-based notebook tool for interactive data exploration, analysis, and visualization. It gives data scientists and analysts a shared environment in which to investigate data, build models, and extract insights. Datalab simplifies working with data through a notebook interface and built-in integration with other Google Cloud services, such as BigQuery and Cloud Storage, so that a data-driven project can live in a single ecosystem.

One of the key benefits of Google Cloud Datalab is collaboration. Team members can share notebooks, review each other’s code, and build on each other’s analyses, which encourages knowledge sharing and can shorten the data science workflow. In addition, Datalab’s integration with Google Cloud services reduces the configuration and data movement needed to get started, which streamlines the analysis pipeline.

Ease of use is another major advantage. Datalab’s notebook interface and pre-installed libraries, such as Pandas, NumPy, and Matplotlib, let users start exploring data without first building an environment. Both experienced data scientists and business analysts can use it, and because it runs on Google Cloud infrastructure, it can be sized to process large datasets, build sophisticated models, and produce actionable insights within a secure, scalable environment.

Setting Up Your First Interactive Data Environment on Google Cloud

To begin using Google Cloud Datalab, set it up within Google Cloud Platform (GCP). First, select a Google Cloud project, or create a new one in the Google Cloud Console if you don’t have one. Next, enable the APIs your work requires; these typically include the Compute Engine API, the Cloud Storage API, and the BigQuery API, depending on your data sources and analysis needs. Enabling an API makes that service available to the project, while access to specific resources is still controlled by Identity and Access Management (IAM) permissions. Finally, verify that billing is enabled for the project, because Datalab runs on billable GCP resources.

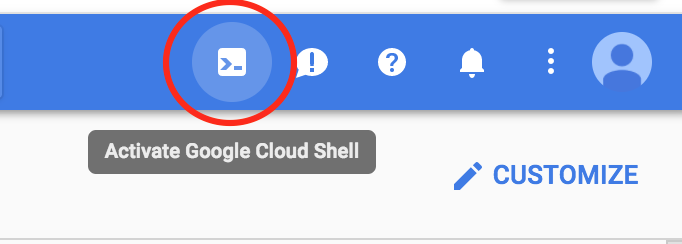

Next, launch a Google Cloud Datalab instance. There are a few deployment options. A common one is Cloud Shell, a browser-based command-line interface, where the `datalab create` command creates a new Datalab instance and lets you specify parameters such as the zone in which the instance runs and its machine type. Another option is the Google Cloud Console: navigate to the Datalab section and follow the instructions to create a new instance through a graphical interface. Alternatively, Datalab can run on a local machine using Docker, which is convenient for local development and testing.

When choosing a configuration, consider the size and complexity of your data and the expected workload. A standard machine type may suffice for small to medium-sized datasets, whereas larger datasets or computationally intensive tasks call for a machine type with more memory and processing power. Also evaluate your storage needs. Datalab integrates with Cloud Storage for storing and accessing data files, so choose a storage class (e.g., Standard, Nearline, Coldline) that matches how often you access the data and what you are willing to pay. Proper configuration keeps Datalab both performant and cost-effective for data exploration, analysis, and machine learning. Finally, factor security into the setup, including appropriate access controls and network configuration, to safeguard your Google Cloud Datalab environment.

How to Connect to Data Sources Within Google Cloud’s Collaborative Notebook Environment

Connecting Google Cloud Datalab to various data sources within Google Cloud is crucial for effective data analysis. This involves establishing secure and authenticated connections to services like BigQuery and Cloud Storage, enabling you to seamlessly access and query your data. Google Cloud Datalab simplifies this process, offering tools and libraries to streamline data access. Here’s a detailed guide on how to connect to these essential data sources.

To connect to BigQuery, Google’s fully managed, petabyte-scale data warehouse, your Datalab instance must be authenticated and authorized. On Google Cloud, the instance typically authenticates as its service account, and Identity and Access Management (IAM) determines what that account may do. Grant it the permissions needed to read your BigQuery datasets and run query jobs (for example, the BigQuery Data Viewer and BigQuery Job User roles). Once permissions are configured, you can use the BigQuery Python client library within Datalab. The following code snippet queries data from BigQuery:

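    from google.cloud import bigquery

    # Create a client; credentials and the default project come from the environment.
    client = bigquery.Client()

    query = """
    SELECT column_1, column_2
    FROM `your-project.your_dataset.your_table`
    LIMIT 10
    """

    # Start the query job, then wait for it to finish and fetch the rows.
    query_job = client.query(query)
    results = query_job.result()

    for row in results:
        print(row.column_1, row.column_2)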

This code initializes a BigQuery client, defines a SQL query, executes the query, and iterates through the results, printing the specified columns. Similarly, connecting to Cloud Storage, Google’s object storage service, involves authentication and authorization. You can use the Cloud Storage client library to interact with your buckets and objects. Here’s an example of how to read a file from Cloud Storage:

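    from google.cloud import storage

    client = storage.Client()

    # bucket() builds a local reference without an extra API call to fetch bucket metadata.
    bucket = client.bucket("your-bucket-name")
    blob = bucket.blob("your-file.csv")

    # download_as_text() downloads and decodes the object; it replaces the deprecated download_as_string().
    content = blob.download_as_text(encoding="utf-8")
    print(content)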

This code retrieves a file from the specified Cloud Storage bucket and prints its contents. For secure data access, follow the principle of least privilege: grant your Datalab instance only the permissions it needs, and review and update your IAM policies regularly. With these connections in place, you can query and manipulate data from BigQuery, Cloud Storage, and other Google Cloud services directly from your notebooks.

Exploring and Analyzing Data with Google Cloud’s Interactive Data Tool

Google Cloud Datalab provides an interactive environment for data exploration and analysis. Users can perform common data manipulation tasks directly in a Datalab notebook: filtering data on specific criteria, sorting it for better organization, aggregating it to derive summary statistics, and transforming it to meet analytical requirements. Datalab supports Python, a versatile language widely used in data science, and libraries such as Pandas and NumPy extend its capabilities: Pandas provides data structures such as DataFrames for efficient data manipulation, and NumPy supports fast numerical computation.

Using these tools within Google Cloud Datalab, analysts can explore datasets rapidly. For instance, they can filter a DataFrame to focus on specific customer segments, sort data by date to analyze trends over time, aggregate sales data by region to reveal top-performing areas, and transform data (for example, by converting units or creating new features) to prepare it for advanced analysis. Writing these steps as notebook code cells makes them efficient and repeatable.
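The following is a minimal Pandas sketch of the four operations just described: filtering, sorting, aggregating, and transforming. The `sales` DataFrame, its column names, and its values are hypothetical stand-ins for data that would normally be loaded from BigQuery or Cloud Storage.

```python
import pandas as pd

# Hypothetical sales data; in practice this might come from BigQuery or Cloud Storage.
sales = pd.DataFrame({
    "date": pd.to_datetime(
        ["2024-01-03", "2024-01-01", "2024-01-02", "2024-01-05", "2024-01-04"]
    ),
    "region": ["West", "East", "West", "East", "North"],
    "segment": ["Enterprise", "Consumer", "Enterprise", "Enterprise", "Consumer"],
    "revenue_usd": [1200.0, 300.0, 800.0, 950.0, 400.0],
})

# Filter: keep only one customer segment.
enterprise = sales[sales["segment"] == "Enterprise"]

# Sort: order the filtered rows chronologically for trend analysis.
enterprise_by_date = enterprise.sort_values("date")

# Aggregate: total revenue per region, highest first.
revenue_by_region = (
    sales.groupby("region")["revenue_usd"].sum().sort_values(ascending=False)
)

# Transform: derive a new feature (revenue in thousands of US dollars).
sales["revenue_kusd"] = sales["revenue_usd"] / 1000

print(enterprise_by_date)
print(revenue_by_region)
```

Each step returns a new object (except the final column assignment), so intermediate results remain available in later notebook cells for further inspection.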

Visualizing Your Data with Interactive Charts and Graphs

This section focuses on the data visualization capabilities of the Google Cloud Datalab environment. Effective visualization is crucial for understanding trends, identifying outliers, and communicating insights from data analysis. Datalab works with popular Python plotting libraries, such as Matplotlib, Seaborn, and Plotly, that let users turn raw data into a wide range of charts and graphs, from histograms, scatter plots, and line charts to more complex visualizations.

Matplotlib is a foundational library that provides extensive control over plot customization. Seaborn builds on Matplotlib, offering a higher-level interface with attractive default styles and specialized plot types for statistical visualization. Plotly creates interactive, web-based visualizations that let users zoom, pan, and hover over data points for detailed exploration. To create a histogram of customer ages, one might use Matplotlib’s `plt.hist()` function; for a scatter plot of the relationship between two variables, Seaborn’s `sns.scatterplot()` function. Line charts, useful for displaying trends over time, can be generated with Matplotlib’s `plt.plot()` function or with an interactive Plotly `go.Scatter` trace (from `plotly.graph_objects`). Each library offers plentiful customization options for colors, labels, titles, and other elements that improve clarity and impact.
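A minimal Matplotlib sketch of the histogram and line chart described above follows. It uses the object-oriented `Axes.hist()` and `Axes.plot()` methods, which correspond to `plt.hist()` and `plt.plot()` but make multi-panel figures easier to manage. The customer ages and monthly sales values are randomly generated, hypothetical data.

```python
import matplotlib.pyplot as plt
import numpy as np

rng = np.random.default_rng(seed=42)
ages = rng.integers(18, 80, size=500)                  # hypothetical customer ages, 18-79
months = np.arange(1, 13)
monthly_sales = 100 + 10 * months + rng.normal(0, 5, size=12)  # hypothetical upward trend

fig, (ax_hist, ax_line) = plt.subplots(1, 2, figsize=(10, 4))

# Histogram: distribution of customer ages.
ax_hist.hist(ages, bins=20, color="steelblue", edgecolor="white")
ax_hist.set_title("Customer age distribution")
ax_hist.set_xlabel("Age (years)")
ax_hist.set_ylabel("Number of customers")

# Line chart: trend over time.
ax_line.plot(months, monthly_sales, marker="o")
ax_line.set_title("Monthly sales")
ax_line.set_xlabel("Month")
ax_line.set_ylabel("Sales (units)")

fig.tight_layout()
```

In a notebook with inline plotting enabled, the figure is displayed when the cell finishes; in a standalone script, call `plt.show()` or `fig.savefig(...)` instead.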

Also consider the type of data being visualized when selecting a chart type. Categorical data is often best represented with bar charts or pie charts, while geographical data can be shown on maps. Datalab combines these Python libraries with the scalable resources of Google Cloud Platform, which makes it practical to visualize large datasets. Experiment with different chart types and customization options to find the most effective way to communicate the patterns and relationships in your data.

Leveraging Google Cloud’s Machine Learning Capabilities within Datalab

Google Cloud Datalab also integrates with Google Cloud’s machine learning tooling, including the TensorFlow framework and the Vertex AI platform. Data scientists and machine learning engineers can use Datalab across the machine learning lifecycle, from initial model development through training and evaluation to deployment, all within a single, shared environment. This integration shortens the path from experiment to deployed model.

To illustrate, consider using Google Cloud Datalab to train a TensorFlow model. First, establish a connection to Cloud Storage, where your training data resides. Next, write TensorFlow code in a Datalab notebook to define the model architecture, specify the loss function, and select an optimizer. Datalab’s interactive environment supports iterative development: you can adjust parameters, visualize training progress, and evaluate model performance as you go. Once you are satisfied, the trained model can be deployed to Vertex AI to serve predictions. This iterative, interactive workflow makes model creation and fine-tuning more efficient.
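A minimal sketch of that training workflow, using the Keras API bundled with TensorFlow 2.x, is shown below. It assumes TensorFlow is installed in the notebook environment and substitutes small synthetic NumPy arrays (hypothetical data) for training data read from Cloud Storage. Exporting the model and deploying it to Vertex AI are not shown.

```python
import numpy as np
import tensorflow as tf

# Hypothetical synthetic data standing in for training data loaded from Cloud Storage:
# the label is 1 when the sum of the four features is positive, else 0.
rng = np.random.default_rng(seed=0)
features = rng.normal(size=(500, 4)).astype("float32")
labels = (features.sum(axis=1) > 0).astype("float32")

# Hold out the last 100 rows for evaluation.
x_train, x_val = features[:400], features[400:]
y_train, y_val = labels[:400], labels[400:]

# Define the model architecture: a small feed-forward binary classifier.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

# Specify the loss function and the optimizer.
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.01),
    loss="binary_crossentropy",
    metrics=["accuracy"],
)

# Train; history.history holds per-epoch metrics you can plot to monitor progress.
history = model.fit(
    x_train, y_train,
    validation_data=(x_val, y_val),
    epochs=5,
    batch_size=32,
    verbose=0,
)

# Evaluate on the held-out rows; return_dict=True gives named results.
results = model.evaluate(x_val, y_val, verbose=0, return_dict=True)
print(f"validation loss={results['loss']:.3f}, accuracy={results['accuracy']:.3f}")
```

Because each step runs in its own cell, you can change the layer sizes, learning rate, or number of epochs and re-run only the affected cells, which is the iterative loop described above.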

Collaborative Data Science: Sharing and Collaborating on Notebooks

Google Cloud Datalab fosters collaborative data science by enabling multiple users to seamlessly work together on the same notebooks. This collaborative environment enhances productivity and facilitates knowledge sharing among team members involved in data analysis and machine learning projects. Sharing notebooks within Google Cloud Datalab is straightforward, promoting transparency and efficient teamwork. Users can easily share their notebooks with colleagues, granting specific permissions to control access and editing capabilities. This ensures that sensitive data and analyses are protected while allowing authorized team members to contribute effectively.

Managing permissions is crucial for maintaining data security and integrity. Control access to Datalab instances and the notebooks they hold through IAM, so that only authorized individuals can modify notebook content. Effective collaboration also relies on clear communication and documentation: encourage team members to use comments and Markdown cells to explain their code, analyses, and findings, which promotes understanding and knowledge transfer among collaborators.

Version control is another essential aspect of collaborative data science. Tracking changes, identifying who made them, and reverting to earlier versions when needed are invaluable for debugging and auditing, and keeping notebooks in a Git repository is the recommended way to get these capabilities. Git lets teams track changes, manage branches, and resolve conflicts, so the project stays organized and its history stays documented.

Google Cloud Datalab offers several benefits for team-based data science projects. It centralizes the analysis environment, reducing the need for individual setups and keeping tools consistent across team members. Its collaborative features promote knowledge sharing and speed up problem-solving, because team members can learn from each other’s analyses, share insights, and refine their approaches together. Sharing notebooks also makes it easier to divide a complex project into smaller tasks handled by different team members and to keep everyone aligned on common goals. Used this way, Datalab helps data science teams work more efficiently and draw better insights from their data.

Optimizing Performance and Managing Costs with Google Cloud’s Interactive Data Tool

Managing resources effectively and minimizing costs are crucial when working with Google Cloud Datalab. Several strategies help maintain performance without exceeding your budget, starting with resource allocation. Datalab instances consume Compute Engine resources, so selecting an appropriate machine type matters: over-provisioning wastes money, while under-provisioning hurts performance. Monitor CPU and memory utilization regularly to identify bottlenecks, and resize the instance accordingly.

Data storage costs can also be significant. Use Cloud Storage efficiently by compressing data and applying lifecycle policies, move infrequently accessed data to lower-cost storage classes, and regularly delete files you no longer need.

When working with BigQuery from Datalab, query optimization is essential, because inefficient queries scan more data and take longer to run. Use query explain plans to identify performance bottlenecks and restructure queries accordingly. Partitioning and clustering tables can significantly improve query performance and reduce costs, since queries that filter on the partitioning or clustering columns scan less data. Together, these strategies keep Datalab efficient and cost-effective.
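Before running an expensive query, BigQuery’s dry-run mode can report how many bytes the query would scan without executing it or incurring query charges. The sketch below assumes the `google-cloud-bigquery` client library: `dry_run_bytes` uses the library’s `QueryJobConfig(dry_run=True)` option, while `estimate_on_demand_cost` is a hypothetical helper that converts bytes into an approximate on-demand cost. The price per tebibyte is deliberately a caller-supplied parameter rather than a constant, because on-demand rates change; look up the current rate for your region. The estimate also ignores details such as minimum billing per table and free-tier allowances.

```python
TIB = 2 ** 40  # bytes in one tebibyte (BigQuery on-demand pricing is quoted per TiB)


def dry_run_bytes(client, sql):
    """Return the number of bytes BigQuery would scan for `sql`, without running it.

    `client` is assumed to be a google.cloud.bigquery.Client.
    """
    # Imported here so the pure cost helper below can be used without the library installed.
    from google.cloud import bigquery

    job_config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    job = client.query(sql, job_config=job_config)
    return job.total_bytes_processed


def estimate_on_demand_cost(bytes_processed, usd_per_tib):
    """Hypothetical helper: approximate on-demand cost in USD for scanning `bytes_processed`.

    `usd_per_tib` is your current on-demand rate, supplied by the caller.
    """
    if bytes_processed < 0 or usd_per_tib < 0:
        raise ValueError("bytes_processed and usd_per_tib must be non-negative")
    return bytes_processed / TIB * usd_per_tib
```

In a notebook you might call `estimate_on_demand_cost(dry_run_bytes(client, query), usd_per_tib=...)` with the `client` and `query` from the earlier BigQuery example, and only run the query for real if the estimate is acceptable.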

Google Cloud offers several pricing models for the services Datalab uses, and understanding them helps you make informed decisions that can lead to significant savings. Committed use discounts (CUDs) provide substantial discounts in exchange for committing to a certain amount of resources for a specified period, so consider them if your Datalab usage is predictable and consistent. Spot VMs (the successor to preemptible VMs) run at a significantly reduced price but can be preempted with little notice. That makes them suitable only for fault-tolerant workloads where interruptions are acceptable, such as batch processing jobs launched from Datalab, rather than interactive sessions whose state you cannot afford to lose. Monitoring usage is equally important: Cloud Monitoring provides insight into resource consumption and performance metrics, alerts can notify you of anomalies or excessive usage, and regular reviews of billing reports reveal where costs can be reduced. By analyzing usage patterns and choosing the right pricing models, you can keep Datalab costs under control.

Effective data management contributes to both performance and cost reduction in Google Cloud Datalab. Data locality is a key consideration: storing data in the same region as your compute resources reduces latency and improves query performance, so choose Google Cloud regions and zones with this in mind. Data governance policies ensure data quality and consistency, leading to more reliable analysis and better decisions. Implement data validation and cleansing processes to maintain data integrity, and audit data access and permissions regularly to ensure security and compliance. Finally, choose data formats that are efficient to store and process. Columnar formats such as Parquet or ORC suit analytical workloads, because queries can read only the columns they need and the data compresses well, which can significantly reduce storage costs and improve query performance. Used and monitored properly, Datalab keeps operational costs low while delivering the full benefit of its interactive analysis capabilities.