Understanding Internal Load Balancers

Internal load balancers in Azure distribute network traffic within a private network. An internal load balancer spreads incoming connections across multiple backend servers, which improves application availability and performance. Unlike a public (external) load balancer, which exposes a public IP address to the internet, an internal load balancer has only a private frontend IP address inside an Azure virtual network. It is therefore reachable only from within that virtual network, from peered virtual networks, or from on-premises networks connected through VPN or ExpressRoute. This keeps internal tiers, such as application servers or databases, off the public internet while still giving them a single, stable address to call.

A key advantage of internal load balancers is their integration with Azure virtual networks. Because the load balancer is part of the virtual network, traffic between application tiers stays on the private network instead of being routed out through public endpoints, which avoids extra hops and keeps those tiers off the internet. Administration is also simpler: each service gets one frontend address, one backend pool, and a set of rules and health probes managed in one place.

Internal load balancers offer several practical advantages. Azure Load Balancer works at layer 4 (TCP and UDP), distributing connections rather than individual HTTP requests, and it scales with traffic without any instance size or capacity setting to manage. It does not add or remove servers itself: when the backend is a virtual machine scale set, autoscale rules change the number of instances, and the load balancer spreads traffic across whatever healthy instances are in the pool. Rules and probes can be tailored per port and protocol, which makes internal load balancers well suited to high-traffic, high-availability internal services.

Key Features and Functionalities

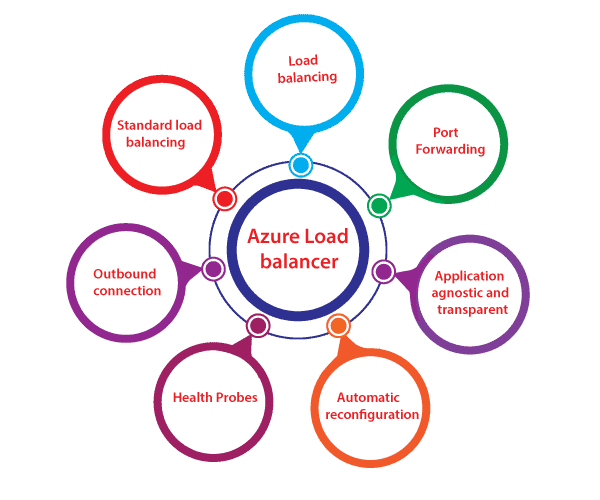

Azure internal load balancers are built from a few components. The frontend IP configuration is a private IP address, taken from a subnet of the virtual network, that clients connect to; it can be assigned dynamically or statically. The backend pool is the set of virtual machines, scale set instances, or IP addresses that receive the traffic. Load-balancing rules connect the two: each rule matches traffic by frontend IP, protocol, and port, and forwards it to a backend pool on a specified backend port. Together these components determine which servers can receive each incoming connection.
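To make the relationship between frontend, rule, and backend pool concrete, the following Python sketch models a load-balancing rule as a plain data class. It is a hypothetical, simplified model, not the Azure SDK: the class, field names, and addresses are invented for illustration, and it shows only how a flow’s frontend IP, protocol, and port select a rule and, through it, a backend pool and port.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical, simplified model of an internal load balancer's components.
# The class and field names are illustrative; this is not the Azure SDK.


@dataclass(frozen=True)
class LoadBalancingRule:
    name: str
    frontend_ip: str   # private frontend IP of the internal load balancer
    protocol: str      # "Tcp" or "Udp"
    frontend_port: int
    backend_pool: str  # pool that receives matching traffic
    backend_port: int  # port the backend instances listen on
    probe: str         # health probe that decides which instances are eligible


def match_rule(rules: List[LoadBalancingRule], dst_ip: str,
               protocol: str, dst_port: int) -> Optional[LoadBalancingRule]:
    """Return the rule whose frontend IP, protocol, and port match a flow."""
    for rule in rules:
        if (rule.frontend_ip == dst_ip
                and rule.protocol.lower() == protocol.lower()
                and rule.frontend_port == dst_port):
            return rule
    return None  # no matching rule: the flow is not forwarded


rules = [
    LoadBalancingRule("http", "10.0.1.10", "Tcp", 80, "app-pool", 8080, "http-probe"),
    LoadBalancingRule("sql", "10.0.1.10", "Tcp", 1433, "db-pool", 1433, "sql-probe"),
]

matched = match_rule(rules, "10.0.1.10", "tcp", 80)
print(matched.backend_pool, matched.backend_port)  # app-pool 8080
```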

Health probes are critical for monitoring the health of backend instances. Each probe periodically checks an instance on a configured port (TCP) or request path (HTTP, or HTTPS on the Standard SKU), and instances that fail are taken out of rotation for new connections until they pass again. This continuous monitoring is what lets an internal load balancer ride out server failures and fluctuating demand without manual intervention.
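The effect of a health probe on rotation can be simulated in a few lines. This is an illustrative sketch, not Azure’s implementation: the `threshold` parameter is a hypothetical stand-in for the probe’s consecutive-failure setting, and the assumption that a single successful probe restores an instance should be checked against the Azure documentation for your configuration.

```python
from typing import List


class ProbedInstance:
    """Tracks probe results for one backend instance (illustrative only)."""

    def __init__(self, name: str, threshold: int = 2):
        self.name = name
        self.threshold = threshold  # hypothetical: consecutive failures before "down"
        self.consecutive_failures = 0
        self.healthy = True

    def record_probe(self, succeeded: bool) -> None:
        if succeeded:
            # Assumption for this sketch: one success returns the instance to rotation.
            self.consecutive_failures = 0
            self.healthy = True
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.threshold:
                self.healthy = False


def eligible(instances: List[ProbedInstance]) -> List[str]:
    """Names of instances that may receive new connections."""
    return [i.name for i in instances if i.healthy]


vm1, vm2 = ProbedInstance("vm1"), ProbedInstance("vm2")
for vm2_ok in (True, False, False):  # vm2 fails two probes in a row
    vm1.record_probe(True)
    vm2.record_probe(vm2_ok)
print(eligible([vm1, vm2]))  # ['vm1']
```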

In practice, clients inside the private network call one address, and the load balancer spreads those connections across the servers behind it. Applications gain availability because the failure of one server no longer makes the service unreachable, and they gain headroom because capacity can be added simply by adding servers to the backend pool.

Choosing the Right Internal Load Balancer Type for Your Needs

Selecting the appropriate type of Azure internal load balancer depends on the workload’s requirements. Azure Load Balancer has two SKUs relevant here: Basic and Standard. The Standard SKU adds the capabilities production workloads usually need: a financially backed SLA, availability zone support (zone-redundant or zonal frontends), HTTPS health probes, HA ports rules on internal load balancers, larger backend pools, and multi-dimensional metrics in Azure Monitor. The Basic SKU carried no charge but lacked these features, and Microsoft set 30 September 2025 as its retirement date, so new deployments should use Standard and existing Basic load balancers should be migrated.

Within the Standard SKU, the remaining design choices follow from the workload: the expected traffic volume, which ports and protocols must be balanced, whether zone redundancy is required, and how many rules the configuration needs (which also affects cost). Evaluating these factors up front produces a configuration that meets availability and performance targets without paying for rules or capacity the application does not use.

Deploying an Azure Internal Load Balancer

Deploying an Azure internal load balancer in the portal takes a few steps. Select “Create a resource”, search for “Load Balancer”, and start the creation wizard. Choose the subscription, resource group, name, and region, then set the SKU to Standard and the type to Internal. An internal load balancer needs a virtual network and subnet for its frontend: on the frontend IP configuration tab, select them and choose whether the private IP address is assigned dynamically or statically.
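The same deployment can be scripted with the Azure CLI. The sketch below only assembles the `az network lb create` arguments in Python so they can be reviewed or passed to `subprocess.run`; the resource group, virtual network, subnet, frontend, pool names, and IP address are placeholders. Supplying `--vnet-name` and `--subnet` without a public IP is what gives the load balancer an internal frontend.

```python
import shlex
from typing import List, Optional


def lb_create_cmd(resource_group: str, name: str, vnet: str, subnet: str,
                  frontend: str, backend_pool: str,
                  private_ip: Optional[str] = None) -> List[str]:
    """Build (but do not run) an `az network lb create` command.

    Passing a virtual network and subnet, and no public IP, gives the load
    balancer a private (internal) frontend IP configuration.
    """
    cmd = [
        "az", "network", "lb", "create",
        "--resource-group", resource_group,
        "--name", name,
        "--sku", "Standard",
        "--vnet-name", vnet,
        "--subnet", subnet,
        "--frontend-ip-name", frontend,
        "--backend-pool-name", backend_pool,
    ]
    if private_ip:
        # Static frontend address; omit it to let Azure assign one dynamically.
        cmd += ["--private-ip-address", private_ip]
    return cmd


# Placeholder resource names; substitute your own.
cmd = lb_create_cmd("rg-app", "ilb-app", "vnet-app", "snet-app",
                    "fe-app", "pool-app", private_ip="10.0.1.10")
print(shlex.join(cmd))
```

On a machine with the Azure CLI installed and signed in, `subprocess.run(cmd, check=True)` would execute the command.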

Next, configure the backend pool by adding the virtual machines, scale set, or IP addresses that should receive traffic; for the Standard SKU, pool members must be in a single virtual network. Then create a health probe that checks the port (or HTTP/HTTPS path) the application actually serves, so that the load balancer stops sending new connections to instances that do not respond. Finally, create load-balancing rules that define how inbound traffic is distributed: each rule links the frontend IP, a protocol and frontend port, the backend pool and backend port, and the health probe. Check each rule carefully, because a wrong port or probe is a frequent reason traffic never reaches the backends.
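The probe and rule steps can likewise be expressed as Azure CLI commands (`az network lb probe create` and `az network lb rule create`). This sketch only assembles the arguments; the names, the `/healthz` path, and the ports (frontend 80, backend 8080) are placeholders. Note how the rule is what ties the frontend, the backend pool, and the probe together.

```python
import shlex
from typing import List, Optional


def probe_create_cmd(rg: str, lb: str, name: str, port: int,
                     protocol: str = "tcp", path: Optional[str] = None) -> List[str]:
    """Build an `az network lb probe create` command (not executed)."""
    cmd = ["az", "network", "lb", "probe", "create",
           "--resource-group", rg, "--lb-name", lb, "--name", name,
           "--protocol", protocol, "--port", str(port)]
    if protocol.lower() in ("http", "https"):
        # HTTP(S) probes request a path and expect a 200 response.
        cmd += ["--path", path or "/"]
    return cmd


def rule_create_cmd(rg: str, lb: str, name: str, frontend: str,
                    backend_pool: str, probe: str, frontend_port: int,
                    backend_port: int, protocol: str = "tcp") -> List[str]:
    """Build an `az network lb rule create` command linking frontend, pool, and probe."""
    return ["az", "network", "lb", "rule", "create",
            "--resource-group", rg, "--lb-name", lb, "--name", name,
            "--protocol", protocol,
            "--frontend-port", str(frontend_port),
            "--backend-port", str(backend_port),
            "--frontend-ip-name", frontend,
            "--backend-pool-name", backend_pool,
            "--probe-name", probe]


probe = probe_create_cmd("rg-app", "ilb-app", "probe-app", 8080,
                         protocol="http", path="/healthz")
rule = rule_create_cmd("rg-app", "ilb-app", "rule-app", "fe-app", "pool-app",
                       "probe-app", frontend_port=80, backend_port=8080)
for c in (probe, rule):
    print(shlex.join(c))
```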

Review security before putting the load balancer into service. Network security groups (NSGs) are not attached to the load balancer itself; they are applied to the subnets or network interfaces of the backend instances. Those NSGs must allow client traffic on the rule’s backend port and must allow health probe traffic, which comes from the AzureLoadBalancer service tag (IP address 168.63.129.16); everything else should be restricted, especially in production. After deployment, test from a client inside the virtual network: connect to the frontend IP and port, confirm that connections reach different backend instances, and confirm that an instance stopped on purpose is taken out of rotation. These checks catch configuration mistakes before users do.
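A basic post-deployment check is whether a client inside the virtual network can open a TCP connection to the frontend at all. This minimal sketch uses only the Python standard library; it confirms reachability, not that every backend is serving.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port completes within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

Run from a VM in the virtual network, `can_connect("10.0.1.10", 80)` (placeholder address and port) should return `True`; a `False` result points at the rule, the probe, or an NSG on the backend subnet.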

Optimizing Performance and Scalability of an Azure Internal Load Balancer

Several strategies help an internal load balancer perform and scale well. Start with the health probes: a probe that checks the real application endpoint (for example an HTTP health path rather than just an open TCP port) lets the load balancer detect instances that are running but not serving, and stop sending them new connections. Next, understand how traffic is distributed. Azure Load Balancer does not support weighted routing; by default it hashes each flow’s five-tuple (source IP, source port, destination IP, destination port, protocol) to choose a backend instance, so load spreads evenly across healthy instances. If clients must keep reaching the same instance, session persistence can be set to client IP (two-tuple) or client IP and protocol (three-tuple). Because distribution is even, backend instances should be sized alike, and capacity is added by adding instances rather than by favoring larger ones.
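The default five-tuple distribution can be illustrated with a short simulation. Azure does not publish its hash function, so this sketch substitutes SHA-256 and reproduces only the behavior: a given flow always maps to the same healthy instance, and many flows spread roughly evenly. The mode strings follow the `loadDistribution` values used in Azure Resource Manager (`Default`, `SourceIP`, `SourceIPProtocol`); the pool and addresses are hypothetical.

```python
import hashlib
from collections import Counter
from typing import List


def pick_backend(healthy: List[str], src_ip: str, src_port: int, dst_ip: str,
                 dst_port: int, protocol: str, distribution: str = "Default") -> str:
    """Pick a backend for a flow by hashing a tuple (illustrative hash, not Azure's)."""
    if distribution == "Default":             # five-tuple
        key = (src_ip, src_port, dst_ip, dst_port, protocol)
    elif distribution == "SourceIP":          # two-tuple: client IP persistence
        key = (src_ip, dst_ip)
    elif distribution == "SourceIPProtocol":  # three-tuple
        key = (src_ip, dst_ip, protocol)
    else:
        raise ValueError(f"unknown distribution mode: {distribution}")
    digest = hashlib.sha256(repr(key).encode()).digest()
    return healthy[int.from_bytes(digest[:8], "big") % len(healthy)]


pool = ["vm1", "vm2", "vm3"]  # only instances currently passing the probe

# The same flow always lands on the same instance ...
first = pick_backend(pool, "10.0.2.4", 50000, "10.0.1.10", 80, "Tcp")
assert first == pick_backend(pool, "10.0.2.4", 50000, "10.0.1.10", 80, "Tcp")

# ... while many flows (new source ports) spread roughly evenly across the pool.
spread = Counter(pick_backend(pool, "10.0.2.4", p, "10.0.1.10", 80, "Tcp")
                 for p in range(40000, 43000))
print(spread)
```

With `SourceIP`, every connection from one client maps to the same instance regardless of source port, which is the trade-off of session persistence: stickiness at the cost of less even spreading when a few clients dominate.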

Scaling is handled on the backend, not in the load balancer. Standard Load Balancer has no size or capacity setting to adjust; when the backend pool is a virtual machine scale set, autoscale rules add or remove instances as demand changes, and the load balancer starts or stops sending them traffic automatically. For high availability, in regions that support availability zones, use a zone-redundant frontend IP and spread backend instances across zones, so the service keeps running through planned maintenance or the failure of a single zone. A separate backup load balancer is not needed for this; with zones, redundancy is built into the Standard SKU.
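Autoscale for a virtual machine scale set is configured declaratively with metric rules rather than written as code, but the threshold logic those rules express is easy to sketch. The thresholds, step size, and instance bounds below are hypothetical; the minimum count is what preserves availability when load is low.

```python
def next_instance_count(current: int, avg_cpu_percent: float, minimum: int = 2,
                        maximum: int = 10, scale_out_above: float = 70.0,
                        scale_in_below: float = 30.0, step: int = 1) -> int:
    """Threshold-based scale decision for the backend pool (hypothetical thresholds)."""
    if avg_cpu_percent > scale_out_above:
        return min(maximum, current + step)   # add capacity under load
    if avg_cpu_percent < scale_in_below:
        return max(minimum, current - step)   # shed idle instances, keep a floor
    return current                            # inside the band: no change


print(next_instance_count(4, 85.0))  # 5
print(next_instance_count(4, 12.0))  # 3
print(next_instance_count(2, 12.0))  # 2 (never below the minimum)
```

The gap between the scale-out and scale-in thresholds keeps the pool from oscillating when load hovers near a single cutoff.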

Continuous monitoring completes the picture. Standard Load Balancer publishes multi-dimensional metrics to Azure Monitor, including Data Path Availability, Health Probe Status, byte and packet counts, and SYN count. Because it operates at layer 4, it does not report request latency or HTTP error rates; collect those from the application itself, for example with Application Insights. Alerting on drops in Health Probe Status or Data Path Availability reveals failing backends or network path problems early, before users notice.
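The alerting idea can be sketched as a check that fires only when Health Probe Status stays low for several consecutive samples, which filters out brief blips. In practice this is configured as an Azure Monitor alert rule; the threshold, window, and sample values here are hypothetical.

```python
from typing import Sequence


def should_alert(samples: Sequence[float], threshold: float = 90.0,
                 consecutive: int = 3) -> bool:
    """True if `consecutive` samples in a row fall below `threshold` percent."""
    run = 0
    for value in samples:
        run = run + 1 if value < threshold else 0
        if run >= consecutive:
            return True
    return False


# Hypothetical per-minute Health Probe Status values (percent of healthy probes).
print(should_alert([100, 100, 66.7, 66.7, 66.7]))  # True
print(should_alert([100, 66.7, 100, 66.7, 100]))   # False: brief blips only
```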

Security Considerations and Best Practices for Azure Internal Load Balancers

Ensuring the security of internal load balancers in Azure is crucial. Although an internal load balancer is reachable only from private networks, that alone does not protect the workloads behind it: anything that can reach the virtual network, including a compromised machine or a connected on-premises network, can reach the frontend. Protection depends on restricting which traffic reaches the backend instances and on monitoring what actually flows through the network.

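Network security groups provide the main layer of defense. Because NSGs cannot be attached to the load balancer itself, apply them to the subnet or network interfaces of the backend instances, and allow only the traffic the application needs: specific source address ranges or application subnets on the rule’s backend ports, plus the AzureLoadBalancer service tag for health probes. NSG rules are evaluated in priority order, lowest number first, and the first matching rule decides whether traffic is allowed or denied. Where traffic needs deeper inspection or centralized filtering, route it through a firewall such as Azure Firewall. Narrow rules shrink the attack surface and limit what an attacker who gains a foothold elsewhere in the network can reach.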
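Because rule order determines the outcome, it can help to model NSG evaluation before changing rules. This simplified Python sketch checks rules in ascending priority and returns the first match’s decision; it ignores protocol, direction, and destination address, and it models the `AzureLoadBalancer` service tag as the single address 168.63.129.16. Rule priorities and subnets are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional

# Simplified model: real NSG rules also match protocol, source port,
# destination address, and direction. Service tags are modelled as a fixed
# prefix here purely for illustration.
SERVICE_TAGS = {"AzureLoadBalancer": "168.63.129.16/32"}


@dataclass
class NsgRule:
    priority: int             # lower number = evaluated first
    source: str               # CIDR prefix or service tag name
    dest_port: Optional[int]  # None means any port
    access: str               # "Allow" or "Deny"


def evaluate(rules: List[NsgRule], src_ip: str, dest_port: int) -> str:
    """Return the access decision of the first matching rule in priority order."""
    addr = ipaddress.ip_address(src_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        prefix = SERVICE_TAGS.get(rule.source, rule.source)
        if addr in ipaddress.ip_network(prefix) and rule.dest_port in (None, dest_port):
            return rule.access
    return "Deny"  # stands in for the default deny-all rule


rules = [
    NsgRule(100, "10.0.2.0/24", 8080, "Allow"),        # web subnet -> app port
    NsgRule(110, "AzureLoadBalancer", None, "Allow"),  # health probes
    NsgRule(4000, "0.0.0.0/0", None, "Deny"),          # everything else
]
print(evaluate(rules, "10.0.2.15", 8080))      # Allow
print(evaluate(rules, "168.63.129.16", 8080))  # Allow
print(evaluate(rules, "10.0.3.7", 8080))       # Deny
```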

Monitoring network traffic for suspicious patterns is also important. Flow logs captured through Azure Network Watcher record which flows were allowed or denied, and Azure Monitor alerts can notify administrators of unusual activity. Keep these logs for the subnets behind the internal load balancer and for related network components, so that there is data to investigate if a security incident occurs, and establish procedures to contain and resolve incidents quickly. Layering restrictive NSGs, firewalls, logging, and monitoring gives stronger protection than any single control.
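Once flow logs are collected, even a small script can surface suspicious patterns, such as one source being denied repeatedly. This sketch assumes the comma-separated flow-tuple layout of NSG flow logs version 2 (timestamp, source IP, destination IP, source port, destination port, protocol, direction, decision, then flow state and counters); verify the layout for the log type you use, since virtual network flow logs use a different format. The sample tuples are made up.

```python
from collections import Counter
from typing import Iterable

# Assumed layout (NSG flow logs, version 2 flow tuples):
# timestamp, source IP, destination IP, source port, destination port,
# protocol (T/U), direction (I/O), decision (A/D), flow state, counters...


def denied_inbound_by_source(flow_tuples: Iterable[str]) -> Counter:
    """Count denied inbound flows per source IP."""
    counts: Counter = Counter()
    for line in flow_tuples:
        fields = line.split(",")
        src_ip, direction, decision = fields[1], fields[6], fields[7]
        if direction == "I" and decision == "D":
            counts[src_ip] += 1
    return counts


# Made-up sample tuples for illustration.
sample = [
    "1700000000,10.0.9.5,10.0.1.4,51000,22,T,I,D,B,,,,",
    "1700000001,10.0.9.5,10.0.1.4,51001,3389,T,I,D,B,,,,",
    "1700000002,10.0.2.15,10.0.1.4,52000,8080,T,I,A,B,,,,",
]
print(denied_inbound_by_source(sample).most_common(1))  # [('10.0.9.5', 2)]
```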

Troubleshooting Common Issues with Azure Internal Load Balancers

Troubleshooting an Azure internal load balancer usually means checking a few key areas in order.

Health probes are the most common source of trouble. If a probe is failing, verify that it targets the port (or HTTP path) the application actually listens on and that the application answers there; an HTTP or HTTPS probe treats any response other than 200 OK as a failure. Check the Health Probe Status metric and the health of each backend pool instance in the Azure portal, and confirm that NSGs on the backend subnet or network interfaces allow probe traffic from the AzureLoadBalancer service tag (168.63.129.16).

Next, check the backend pool: every intended virtual machine should be a member, and its network configuration should be correct. Then review the load-balancing rules, making sure each one references the right frontend IP, ports, backend pool, and health probe. Confirm that NSG rules allow client traffic on the backend ports. Also rule out one limitation specific to internal load balancers: a backend VM that connects to the load balancer’s own frontend fails whenever the flow is distributed back to that same VM. Finally, use the load balancer’s metrics in Azure Monitor to narrow down which of these is at fault.
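These checks can be expressed as a validator over a simplified, hypothetical configuration dictionary. The keys (`backend_pools`, `probes`, `rules`, `listening_ports`, `nsg_allows_azure_load_balancer`) are invented for this sketch and do not correspond to an Azure API; in practice the values would come from the portal, the CLI, or an exported template.

```python
from typing import Dict, List


def find_problems(config: Dict) -> List[str]:
    """Return human-readable problems found in a simplified ILB configuration."""
    problems: List[str] = []
    pools = config.get("backend_pools", {})
    probes = config.get("probes", {})
    listening = set(config.get("listening_ports", []))

    for pool, members in pools.items():
        if not members:
            problems.append(f"backend pool '{pool}' has no members")

    for rule in config.get("rules", []):
        if rule["backend_pool"] not in pools:
            problems.append(f"rule '{rule['name']}' uses unknown pool '{rule['backend_pool']}'")
        if rule["backend_port"] not in listening:
            problems.append(f"rule '{rule['name']}' sends to port {rule['backend_port']}, where nothing listens")
        probe = probes.get(rule["probe"])
        if probe is None:
            problems.append(f"rule '{rule['name']}' uses unknown probe '{rule['probe']}'")
        elif probe["port"] not in listening:
            problems.append(f"probe '{rule['probe']}' checks port {probe['port']}, where nothing listens")

    if not config.get("nsg_allows_azure_load_balancer", False):
        problems.append("NSG blocks the AzureLoadBalancer service tag, so probes fail")
    return problems


# Hypothetical configuration with one mistake: the probe checks port 80,
# but the application listens only on 8080.
config = {
    "backend_pools": {"pool-app": ["vm1", "vm2"]},
    "probes": {"probe-app": {"protocol": "Tcp", "port": 80}},
    "rules": [{"name": "rule-app", "backend_pool": "pool-app",
               "backend_port": 8080, "probe": "probe-app"}],
    "listening_ports": [8080],
    "nsg_allows_azure_load_balancer": True,
}
print(find_problems(config))
# ["probe 'probe-app' checks port 80, where nothing listens"]
```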

Performance is the other common area to investigate. Slow response times or high latency usually originate in the backend rather than in the load balancer, so start by examining resource usage on the backend virtual machines: high CPU utilization, memory pressure, or disk I/O bottlenecks slow the application down, and the fix is to scale the virtual machines up or out or to optimize the application code. Standard Load Balancer has no size to increase, but it does have published limits (for example on the number of rules and the backend pool size), which are worth checking for very large designs. Network problems such as latency or packet loss between clients and backend instances can be investigated with Azure Network Watcher tools like connection troubleshoot, together with a review of the virtual network’s routing. In Azure Monitor, watch the load balancer’s metrics (Data Path Availability, Health Probe Status, and byte, packet, and SYN counts) alongside application-level latency and error rates, so that degradation is detected and addressed before it disrupts the service.
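To tell whether latency comes from the network path or from the backends themselves, one approach is to time TCP handshakes to the frontend and, separately, to a backend instance’s own address. This standard-library sketch measures connect time only; it says nothing about application processing time.

```python
import socket
import statistics
import time
from typing import List


def connect_times_ms(host: str, port: int, attempts: int = 5,
                     timeout: float = 3.0) -> List[float]:
    """Time the TCP handshake to host:port; raises OSError if a connection fails."""
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            pass
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def summarize(samples: List[float]) -> str:
    """Median and worst-case connect time, formatted for a quick comparison."""
    return f"median {statistics.median(samples):.2f} ms, max {max(samples):.2f} ms"
```

For example, compare `summarize(connect_times_ms("10.0.1.10", 80))` for the frontend with the same call against one backend VM’s private IP and backend port (all placeholder values). Similar, low numbers suggest the delay lies in the application rather than in the network path.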

Configuration issues require a meticulous review. Incorrect IP addresses, subnet assignments, or frontend IP configurations can all cause connection problems, so verify that the frontend IP belongs to the intended subnet, that subnet ranges and IP assignments match the virtual network design, and that the frontend, rules, and backend pool are consistent with one another. Pay close attention to recent changes in the network infrastructure, such as modified route tables, NSGs, or peering, since these can break traffic that previously worked; comparing the current configuration with the last known-good one often exposes the conflict. If the problem still cannot be isolated, deploying a new internal load balancer alongside the existing one, validating it in a clean state, and then migrating traffic to it rules out persistent misconfiguration in the old setup.
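Address mistakes are easy to check mechanically. This sketch uses Python’s `ipaddress` module to confirm that a static frontend IP lies inside its subnet, that the subnet lies inside the virtual network, and that the address is not one of those Azure reserves in every subnet (the first four and the last). The address ranges are hypothetical.

```python
import ipaddress
from typing import List


def check_frontend_ip(vnet_cidr: str, subnet_cidr: str, frontend_ip: str) -> List[str]:
    """Check that a static frontend IP fits the virtual network design."""
    vnet = ipaddress.ip_network(vnet_cidr)
    subnet = ipaddress.ip_network(subnet_cidr)
    ip = ipaddress.ip_address(frontend_ip)
    problems = []
    if not subnet.subnet_of(vnet):
        problems.append(f"subnet {subnet} is not inside virtual network {vnet}")
    if ip not in subnet:
        problems.append(f"{ip} is not inside subnet {subnet}")
    else:
        # Azure reserves the first four addresses and the last address of each subnet.
        reserved = {subnet.network_address + i for i in range(4)}
        reserved.add(subnet.broadcast_address)
        if ip in reserved:
            problems.append(f"{ip} is reserved by Azure in subnet {subnet}")
    return problems


print(check_frontend_ip("10.0.0.0/16", "10.0.1.0/24", "10.0.1.10"))  # []
print(check_frontend_ip("10.0.0.0/16", "10.0.1.0/24", "10.0.1.3"))
# ['10.0.1.3 is reserved by Azure in subnet 10.0.1.0/24']
```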

Case Study: Implementing Internal Load Balancing in a Production Environment

A fictional e-commerce company, “GlobalShop,” experienced significant performance bottlenecks during peak shopping seasons. Its Azure-based application struggled to handle the influx of traffic, resulting in slow response times and frustrated customers, because the architecture had no mechanism for distributing traffic across servers. GlobalShop decided to place an Azure internal load balancer in front of its application tier, distributing requests from the web front end across multiple application instances so that no single server was overloaded and the tier stayed responsive under heavy traffic. The team configured health probes for each application instance; instances that failed them were automatically taken out of rotation and received no new requests.

The implementation involved several key steps. First, the team created a backend pool containing all the application servers. Next, they defined a load-balancing rule that forwarded traffic on the application’s port to that pool. They assigned the internal load balancer a static private frontend IP address, so the web tier always had the same address to call even when individual instances were restarted or replaced. Network security groups on the application subnet allowed traffic only from authorized internal subnets, keeping the application tier closed to everything else and minimizing the risks associated with the deployment.

The results were impressive. Response times dropped sharply, even during peak traffic periods, and the system became more resilient: the failure of a single server had minimal impact on overall availability. The improved performance and stability led to higher customer satisfaction and increased sales, a clear return on the investment. The case highlights the importance of careful planning, configuration, and security when deploying an internal load balancer in production.

Cost Optimization Strategies for Internal Load Balancers in Azure

Managing the cost of an internal load balancer starts with understanding how it is billed. The Basic SKU carried no charge but is retired (retirement date 30 September 2025). The Standard SKU is billed mainly on the number of rules configured and on the amount of data processed; inbound NAT rules are not charged. The number of backend pools or backend instances does not in itself change the load balancer charge. Review usage patterns regularly and remove rules that are no longer needed. Above all, avoid over-provisioning the backend virtual machines, which often cost far more than the load balancer itself; proper capacity planning prevents unnecessary expenses on both.
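A rough estimate makes the pricing structure concrete. This sketch assumes the Standard SKU structure described on the Azure pricing page at the time of writing: an hourly charge covering the first five rules, an hourly charge for each additional rule, and a per-GB charge for data processed, over 730 hours per month. The rates are placeholders, not current prices; use the Azure pricing calculator for real figures.

```python
def estimate_monthly_cost(rule_count: int, gb_processed: float,
                          base_hourly: float, extra_rule_hourly: float,
                          per_gb: float, included_rules: int = 5,
                          hours: float = 730.0) -> float:
    """Rough monthly estimate for a Standard load balancer (placeholder rates)."""
    if rule_count > 0:
        extra_rules = max(0, rule_count - included_rules)
        rule_charge = (base_hourly + extra_rules * extra_rule_hourly) * hours
    else:
        rule_charge = 0.0  # assumption: no rules configured, no rule charge
    return rule_charge + gb_processed * per_gb


# Placeholder rates for illustration only; look up current prices for your region.
rates = dict(base_hourly=0.02, extra_rule_hourly=0.01, per_gb=0.005)
print(f"{estimate_monthly_cost(7, 2000, **rates):.2f}")  # 39.20
print(f"{estimate_monthly_cost(5, 2000, **rates):.2f}")  # 24.60
```

With these placeholder rates, the two rules beyond the first five account for the whole difference between the two estimates, which is why removing unused rules is usually the first saving to look for.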

Monitoring and analyzing performance metrics is vital for cost optimization. Azure Monitor shows how much traffic the load balancer actually carries and how busy the backend instances are, which reveals periods of low demand. Because the load balancer has no capacity setting, scaling for cost means scaling the backend: autoscale rules on a virtual machine scale set can remove instances during off-peak hours and add them back at peak, and the load balancer follows these changes automatically. This balances performance and cost without manual intervention.

Beyond scaling, review the overall design. Well-tuned health probes and rules keep traffic flowing only to instances that can serve it, which avoids wasted backend capacity. Azure also offers several load-balancing services, including Azure Load Balancer, Application Gateway, Front Door, and Traffic Manager, each with its own feature set and pricing model. An internal Standard Load Balancer is often the most economical choice for layer-4 traffic inside a virtual network, while layer-7 features such as URL-based routing or TLS termination require Application Gateway at a higher cost. Periodically reassessing whether the chosen service still matches the application’s needs keeps the cost-performance ratio in check.


Understanding the cost implications of an internal load balancer is part of efficient cloud resource management. With the Basic SKU retired, new deployments use the Standard SKU, whose charges depend on the number of rules and the volume of data processed rather than on the number of instances in the backend pool. Organizations should therefore estimate expected traffic and list the rules their design actually needs before deploying, and select Standard features such as zone redundancy or HA ports according to real requirements.

Optimizing costs involves proactive monitoring and analysis of usage patterns. Azure’s pricing calculator can estimate charges from projected rules and data volumes, and Azure Cost Management shows actual spending patterns, making cost anomalies and savings opportunities visible. Because over-provisioning usually happens in the backend, pair these reviews with autoscaling of the backend instances, which adjusts capacity to real-time demand, rather than trying to resize the load balancer itself.

Beyond SKU selection and scaling, network design plays a role. Because the Standard SKU charges for data processed, traffic that does not need load balancing should not be routed through it. Properly configured health probes keep traffic away from unhealthy instances, avoiding wasted work and failed requests. Regularly reviewing the configuration as requirements change, together with monitoring and backend autoscaling, keeps the cost of an internal load balancer proportional to the value it delivers.