Understanding Kinesis Data Streams for Real-Time Data Processing

Amazon Kinesis Data Streams is a fully managed, scalable, and durable real-time data streaming service. It lets you continuously collect, process, and analyze large volumes of streaming data from many sources. A single Kinesis data stream can ingest data from hundreds of thousands of sources concurrently. Records become available to consumers within milliseconds, which enables real-time analytics and immediate responses. This capability is crucial for applications that need timely insights and actions. A stream ingests data records and stores them temporarily in shards. Each record consists of a data blob (a sequence of bytes), a partition key, and a sequence number. Shards are the basic unit of throughput in a Kinesis data stream, so the number of shards determines the stream’s capacity. A configurable retention period controls how long records remain available for reading.

The core functionality of a Kinesis data stream is handling high-velocity, high-volume data. Producers push records into the stream, and consumers read records out for processing. Multiple applications can consume the same stream simultaneously and independently, and each one tracks its own position in the stream. Standard (shared-throughput) consumers share each shard’s 2 MB per second read capacity. Enhanced fan-out consumers each receive a dedicated 2 MB per second per shard. Scalability is a key feature: you can adjust the number of shards to match changing data volumes, so the pipeline can absorb peak loads without throttling. Durability comes from replication. Kinesis Data Streams synchronously replicates data across three Availability Zones, which protects against data loss if a single zone fails.

Kinesis Data Streams excels in use cases that demand real-time data processing. Log aggregation is a common application: you can collect logs from many servers and applications into one stream for centralized monitoring and analysis. Clickstream analysis is another. By capturing user clicks and actions in real time, you can understand user behavior and improve your website or application. IoT ingestion is also a natural fit. Data from sensors and devices can be streamed into Kinesis for real-time monitoring and control in both industrial and consumer applications. In short, Kinesis Data Streams provides a robust, versatile foundation for real-time data ingestion and processing across a wide range of industries.

How to Build a Data Pipeline using Kinesis Data Streams: A Practical Guide

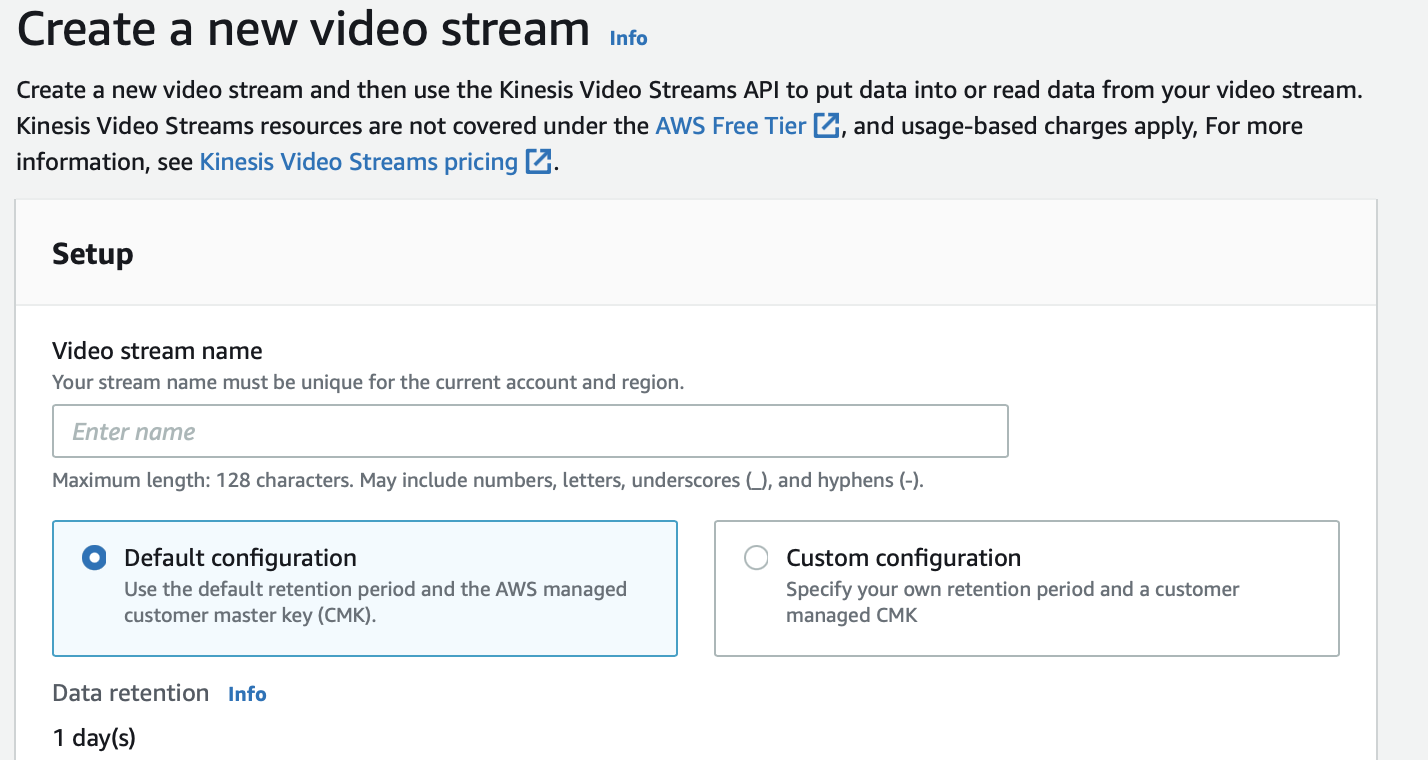

The following is a step-by-step guide to building a data pipeline with Amazon Kinesis Data Streams. First, you need an AWS account. Once the account is active, open the AWS Management Console and navigate to the Kinesis service. The Kinesis Data Streams console is the starting point for creating and managing your streams, and from there you can create a new data stream.

Creating a Kinesis data stream involves several configuration choices. The most important is capacity. In on-demand capacity mode, Kinesis manages capacity automatically. In provisioned mode, you set the number of shards yourself. Shards are the fundamental units of throughput. In provisioned mode, each shard accepts writes of up to 1 MB per second or 1,000 records per second, and serves reads of up to 2 MB per second. Estimate your expected data volume and record rate before you choose a shard count. Next, define the data retention period. Records are retained for 24 hours by default, and you can extend retention to as long as 365 days (8,760 hours) at additional cost. Choose a retention period based on how far back your consumers may need to reread data. Now, let’s look at how to put data into the stream.
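
As a rough sizing aid, the sketch below estimates a provisioned shard count from expected write traffic. It uses the per-shard write limits above and then creates the stream. Some assumptions apply. The client passed in is a boto3 Kinesis client, "1 MB" is interpreted as 1 MiB, and the function names are hypothetical. The estimate covers writes only, so check read demand (2 MB per second per shard) separately.

```python
import math

# Per-shard write limits in provisioned mode (1 MB/s interpreted as 1 MiB here).
SHARD_WRITE_BYTES_PER_SEC = 1024 * 1024
SHARD_WRITE_RECORDS_PER_SEC = 1000


def estimate_shard_count(bytes_per_sec: float, records_per_sec: float) -> int:
    """Smallest shard count that satisfies both write limits (never below 1)."""
    by_bytes = math.ceil(bytes_per_sec / SHARD_WRITE_BYTES_PER_SEC)
    by_records = math.ceil(records_per_sec / SHARD_WRITE_RECORDS_PER_SEC)
    return max(1, by_bytes, by_records)


def create_provisioned_stream(client, name: str, shard_count: int,
                              retention_hours: int = 24) -> None:
    """Create a provisioned stream and optionally extend its retention period.

    `client` is assumed to be a boto3 Kinesis client, e.g. boto3.client("kinesis").
    """
    if not 24 <= retention_hours <= 8760:
        raise ValueError("retention must be between 24 and 8760 hours")
    client.create_stream(StreamName=name, ShardCount=shard_count)
    # Wait until the stream is ACTIVE before changing its configuration.
    client.get_waiter("stream_exists").wait(StreamName=name)
    if retention_hours > 24:
        client.increase_stream_retention_period(
            StreamName=name, RetentionPeriodHours=retention_hours
        )

# Usage (requires AWS credentials):
#   import boto3
#   shards = estimate_shard_count(3 * 1024 * 1024, 2500)  # 3 MiB/s at 2,500 records/s
#   create_provisioned_stream(boto3.client("kinesis"), "your-stream-name", shards, 72)
```

The byte limit and the record limit are both checked, because either one can be the binding constraint. Small, frequent records tend to hit the record limit first.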

To send data to the stream, you need a producer application that collects data from your sources and writes it to the Kinesis data stream. In Python, you can do this with boto3, the AWS SDK for Python, which you can install with the “pip install boto3” command. Every write call needs the name of your stream and valid AWS credentials. Configure the credentials (for example, with the AWS CLI or environment variables) so the SDK can authenticate with your AWS account.
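
A minimal producer might look like the following sketch. It assumes events are JSON-serializable dictionaries that carry a user_id field to use as the partition key. The function name, field name, and ‘your-stream-name’ are placeholders.

```python
import json


def send_event(client, stream_name: str, event: dict, key_field: str = "user_id") -> str:
    """Write one JSON event to a Kinesis data stream and return its sequence number.

    `client` is assumed to be a boto3 Kinesis client. Records that share a
    partition key are routed to the same shard.
    """
    data = json.dumps(event).encode("utf-8")
    response = client.put_record(
        StreamName=stream_name,
        Data=data,
        PartitionKey=str(event[key_field]),
    )
    return response["SequenceNumber"]

# Usage (requires AWS credentials and an existing stream):
#   import boto3
#   kinesis = boto3.client("kinesis", region_name="us-east-1")
#   seq = send_event(kinesis, "your-stream-name", {"user_id": "u-123", "action": "click"})
```

The client is passed in rather than created inside the function. That keeps region and credential configuration in one place and makes the function easy to test.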

Architecting Scalable Data Solutions with AWS Streaming Services

When you architect scalable data solutions with AWS streaming services, particularly Kinesis Data Streams, several considerations determine how robust, efficient, and cost-effective the pipeline is. Matching capacity to demand comes first. In provisioned mode, the number of shards directly determines how much data the stream can ingest and serve. Monitor shard utilization with CloudWatch metrics and adjust the shard count as incoming traffic changes. Over-provisioning leads to unnecessary cost, while under-provisioning causes throttling, ingestion bottlenecks, and degraded performance. Kinesis does not resize provisioned streams on its own. Either use on-demand capacity mode, or automate resharding so capacity follows the load in near real time, for example with a scheduled job or CloudWatch alarm that calls the UpdateShardCount API.
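
Scaling automation has to respect one constraint: a single UpdateShardCount call can at most double the shard count or cut it to half. The sketch below picks a target count from an observed write-utilization fraction, clamps it to that range, and applies it. Several details are assumptions for illustration: the 70% target, how utilization is measured, rounding "half" up, and the function names. The client is assumed to be a boto3 Kinesis client.

```python
import math


def next_shard_count(current: int, utilization: float, target: float = 0.7) -> int:
    """Choose a shard count that brings write utilization close to `target`.

    `utilization` is the observed fraction of write capacity in use (0.9 = 90%).
    The result is clamped to one UpdateShardCount step: at most double the
    current count and no less than half of it (rounded up here, to be safe).
    """
    desired = max(1, math.ceil(current * utilization / target))
    lower = math.ceil(current / 2)
    upper = current * 2
    return min(max(desired, lower), upper)


def scale_stream(client, stream_name: str, current: int, utilization: float) -> int:
    """Call UpdateShardCount only when the target differs from the current count."""
    target = next_shard_count(current, utilization)
    if target != current:
        client.update_shard_count(
            StreamName=stream_name,
            TargetShardCount=target,
            ScalingType="UNIFORM_SCALING",
        )
    return target
```

The target count and the API call are kept in separate functions, so the scaling decision can be tested or simulated without touching a real stream.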

Monitoring the stream is essential for finding and fixing bottlenecks. CloudWatch provides stream-level metrics such as PutRecords.Success, PutRecords.FailedRecords, PutRecords.ThrottledRecords, GetRecords.Success, GetRecords.IteratorAgeMilliseconds, WriteProvisionedThroughputExceeded, and ReadProvisionedThroughputExceeded. These metrics help you pinpoint ingestion failures, throttling caused by exhausted shard capacity, and slow consumers. Alarms on them let you intervene before problems escalate. Understanding how data is distributed across shards is equally important. Each record is routed to a shard based on the MD5 hash of its partition key. As a result, a small set of frequently used keys can create hot shards that receive far more traffic than the others. Partitioning on high-cardinality keys, such as user or device IDs, spreads the load evenly and maximizes throughput. Finally, build in error handling. Retry logic with exponential backoff mitigates transient errors and throttling and helps ensure that data is delivered.
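
To see why key choice matters, the sketch below maps partition keys to shards the way Kinesis routes them: an MD5 hash to a 128-bit integer, then the shard whose hash key range contains it. It makes two assumptions. First, the key is hashed as UTF-8 bytes and read as a big-endian integer. Second, the shards split the hash key space evenly. Real streams report each shard’s actual range through ListShards, so use those ranges for exact routing.

```python
import hashlib
from collections import Counter


def shard_index_for_key(partition_key: str, shard_count: int) -> int:
    """Map a partition key to a shard index, assuming evenly split hash key ranges."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_value = int.from_bytes(digest, "big")  # 0 <= hash_value < 2**128
    return hash_value * shard_count // 2**128


def shard_distribution(keys, shard_count: int) -> Counter:
    """Count how many records each shard would receive for the given keys."""
    return Counter(shard_index_for_key(k, shard_count) for k in keys)


# A constant key sends every record to a single shard (a hot shard), while
# high-cardinality keys spread records across all shards.
hot = shard_distribution(["device-1"] * 1000, 4)
spread = shard_distribution([f"device-{i}" for i in range(1000)], 4)
print(len(hot), len(spread))
```

Running this against a sample of real keys is a quick way to spot skew before it shows up as WriteProvisionedThroughputExceeded on individual shards.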

Scalable solutions also require careful integration with other AWS services. A Kinesis data stream often serves as the entry point for data that is then processed by Kinesis Data Analytics, Kinesis Data Firehose, or Lambda, or persisted to S3 or Redshift. Tuning how these services interact is critical for end-to-end performance. For instance, when you integrate with Lambda, tune the batch size and make sure the function processes each batch of records efficiently. When you persist data to S3, compress it to reduce storage costs and speed up transfers. Security matters just as much. Grant access to the stream and related resources through narrowly scoped IAM roles and policies, and enable encryption both at rest and in transit to protect sensitive data. With these considerations addressed, organizations can build robust, scalable solutions that make full use of Kinesis Data Streams and the other AWS streaming services.
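
For the Lambda integration, each invocation receives a batch of Kinesis records with base64-encoded data. The sketch below decodes each record as JSON and reports the first failure through the batchItemFailures response. That response only takes effect when ReportBatchItemFailures is enabled on the event source mapping, and Lambda then retries from the failed record. The process function and its validation rule are hypothetical placeholders for application logic.

```python
import base64
import json


def process(payload: dict) -> None:
    """Hypothetical application logic; raises to signal a bad record."""
    if "action" not in payload:
        raise ValueError("missing action")


def handler(event, context):
    """Lambda handler for a Kinesis event source mapping.

    Stops at the first failing record and returns its sequence number, so
    Lambda resumes the batch from that point (requires ReportBatchItemFailures).
    """
    for record in event["Records"]:
        kinesis = record["kinesis"]
        try:
            payload = json.loads(base64.b64decode(kinesis["data"]))
            process(payload)
        except Exception:
            return {"batchItemFailures": [{"itemIdentifier": kinesis["sequenceNumber"]}]}
    return {"batchItemFailures": []}
```

Returning at the first failure mirrors how stream checkpoints work: records after the failed one are delivered again on the retry anyway, so processing them now would only duplicate work.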

Analyzing Streaming Data with Kinesis Data Analytics

Kinesis Data Analytics (since renamed Amazon Managed Service for Apache Flink) is a natural companion to Kinesis Data Streams for real-time processing and analysis. It reads directly from a Kinesis data stream, so you can derive value from data as soon as it is ingested. The integration supports dynamic dashboards, real-time alerts, and transformations applied directly to the streaming data. Kinesis Data Analytics is particularly valuable for applications that must act immediately on incoming data.

The core of Kinesis Data Analytics (in its SQL application type) is running SQL queries continuously against streaming data. These queries support real-time aggregation, filtering, and transformation. For instance, SQL can calculate moving averages, identify trends, or filter out irrelevant data points to produce a cleaned, refined stream for further analysis or storage. Consider an e-commerce platform that captures user clickstream data in a Kinesis data stream. Kinesis Data Analytics can count clicks on each product over short time windows and trigger alerts when a product’s popularity spikes or drops significantly. Another example is an IoT application that monitors sensor data. There, Kinesis Data Analytics can filter and aggregate readings to surface anomalies that indicate potential equipment failures.
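
In Kinesis Data Analytics, the per-product click count would be written as a windowed SQL query. The sketch below shows the same tumbling-window logic in plain Python instead, to illustrate the computation rather than the service. It is not Kinesis Data Analytics code. The (timestamp, product_id) event shape, the 60-second window, and the threshold are hypothetical.

```python
from collections import defaultdict


def tumbling_counts(events, window_sec: int = 60) -> dict:
    """Count clicks per product in fixed, non-overlapping time windows.

    `events` is an iterable of (epoch_seconds, product_id) tuples.
    Returns {(window_start, product_id): count}.
    """
    counts = defaultdict(int)
    for ts, product in events:
        window_start = int(ts) // window_sec * window_sec  # align to window boundary
        counts[(window_start, product)] += 1
    return dict(counts)


def popularity_spikes(counts: dict, threshold: int) -> list:
    """Return (window_start, product_id) pairs whose click count meets the threshold."""
    return sorted(key for key, n in counts.items() if n >= threshold)


clicks = [(0, "a"), (10, "a"), (59, "b"), (60, "a"), (61, "a"), (62, "a")]
print(popularity_spikes(tumbling_counts(clicks), threshold=3))
```

Tumbling windows never overlap, so each click is counted exactly once. A sliding window would give smoother trends but would count each click in several windows.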

Beyond basic aggregation and filtering, Kinesis Data Analytics supports more advanced functions such as anomaly detection. SQL applications provide the RANDOM_CUT_FOREST function, which assigns each record an anomaly score based on how far it deviates from recent patterns in the stream. This is valuable in applications such as fraud detection, where unusual transaction patterns can trigger an immediate investigation. Processed results can be sent on to other AWS services, for example through Kinesis Data Firehose to S3 or Redshift for historical analysis. By combining Kinesis Data Streams with Kinesis Data Analytics, organizations can build responsive systems that react in real time to changing data and support faster, better-informed decisions.
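
RANDOM_CUT_FOREST is considerably more sophisticated than anything that fits in a few lines. The sketch below substitutes a much simpler technique, a rolling z-score, only to illustrate the idea of flagging values that deviate sharply from a recent baseline. The window size and threshold are arbitrary assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def zscore_anomalies(values, window: int = 20, threshold: float = 3.0):
    """Yield (index, value) for points more than `threshold` standard deviations
    away from the mean of the preceding `window` values."""
    history = deque(maxlen=window)
    for i, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Skip flat baselines, where a z-score is undefined.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value
        history.append(value)


readings = [10, 12] * 20 + [50]
print(list(zscore_anomalies(readings)))
```

Unlike a random cut forest, this only looks at one numeric series and assumes roughly stable variance. Treat it as an illustration of the concept, not a replacement.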

Choosing Between Kinesis Data Streams and Kinesis Data Firehose: A Comparison

Amazon offers two primary services for handling streaming data: Kinesis Data Streams and Kinesis Data Firehose. Both belong to the Amazon Kinesis family, but they solve different problems, so understanding the differences is crucial for choosing the right tool. Kinesis Data Streams provides fine-grained control over data processing, while Kinesis Data Firehose simplifies delivering data to storage and analytics destinations.

Kinesis Data Streams is designed for use cases that need custom processing. You build consumer applications that read and process data in real time. This gives you full control over transformation, enrichment, analysis, and routing, including complex logic. That makes it suitable for real-time analytics, fraud detection, and complex event processing. The trade-off is operational overhead: you build and operate the consumer applications, and in provisioned mode you also manage shard capacity. In contrast, Kinesis Data Firehose focuses on making it simple to load streaming data into AWS data stores and other destinations.

Kinesis Data Firehose loads data into destinations such as Amazon S3, Amazon Redshift, Amazon OpenSearch Service (formerly Amazon Elasticsearch Service), and Splunk. It scales automatically with data volume and requires no consumer applications. It also offers built-in processing, including record format conversion (for example, JSON to Apache Parquet or ORC), compression, encryption, and optional transformation with a Lambda function. This makes it ideal when data needs to be delivered reliably to storage or analytics services without custom processing. Firehose buffers incoming data before delivery, so it is near real time rather than real time. Consider Data Streams if you need control over real-time processing, multiple independent consumers, or the ability to replay data. Opt for Firehose if your focus is simple, reliable delivery. Weigh transformation requirements, processing complexity, latency, and operational overhead when you decide. The best choice depends on your specific application requirements and architectural goals.
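
The difference is already visible in the producer calls. In the sketch below, writing to a data stream requires a partition key that selects a shard. Writing to Firehose only names the delivery stream and leaves buffering and delivery to the service. Both clients are assumed to be boto3 clients ("kinesis" and "firehose"), and the function names are hypothetical.

```python
import json


def to_data_stream(kinesis_client, stream_name: str, event: dict, partition_key: str) -> None:
    """Kinesis Data Streams: the producer chooses a partition key, which selects the shard."""
    kinesis_client.put_record(
        StreamName=stream_name,
        Data=json.dumps(event).encode("utf-8"),
        PartitionKey=partition_key,
    )


def to_firehose(firehose_client, delivery_stream: str, event: dict) -> None:
    """Kinesis Data Firehose: no shards or partition keys; Firehose buffers and delivers.

    A trailing newline keeps records separable after Firehose concatenates
    them into objects at the destination (for example, in S3).
    """
    firehose_client.put_record(
        DeliveryStreamName=delivery_stream,
        Record={"Data": (json.dumps(event) + "\n").encode("utf-8")},
    )

# Usage (requires AWS credentials and existing resources):
#   import boto3
#   to_data_stream(boto3.client("kinesis"), "your-stream-name", {"a": 1}, "user-1")
#   to_firehose(boto3.client("firehose"), "your-delivery-stream", {"a": 1})
```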

Securing Your Kinesis Data Streams Infrastructure

Security is paramount when working with sensitive data in the cloud, and Kinesis Data Streams is no exception. Protecting the data in your streams and controlling who can access them are critical for compliance and for preventing unauthorized access. Robust security measures ensure the confidentiality, integrity, and availability of your streaming data. This means using AWS Identity and Access Management (IAM) for access control, encryption for data protection, and security best practices in producer and consumer applications.

IAM roles and policies are fundamental to securing Kinesis Data Streams. IAM lets you define granular permissions that specify which resources users and applications can access and which actions they can perform. For a data stream, you can create IAM roles that grant specific permissions. For example, a role might allow writing records (kinesis:PutRecord and kinesis:PutRecords) or reading them (kinesis:GetShardIterator and kinesis:GetRecords). Assign these roles to your producer and consumer applications to control precisely what each one can do. Follow the principle of least privilege: grant each role only the permissions it needs, scoped to specific stream ARNs. This limits the damage that compromised credentials can cause and keeps unauthorized users from tampering with your streams. Review and update your IAM policies regularly as application requirements and the threat landscape change.
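
As a concrete sketch of least privilege, the functions below build separate policy documents for a producer that only writes and a consumer that reads with the basic GetRecords API. The ARN uses a placeholder region, account ID, and stream name. The action lists are a minimal assumption for a boto3-based producer and consumer. Consumers built on the Kinesis Client Library also need access to a DynamoDB lease table and CloudWatch, which is not shown here.

```python
import json


def producer_policy(stream_arn: str) -> dict:
    """Least-privilege IAM policy for an application that only writes to one stream."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["kinesis:PutRecord", "kinesis:PutRecords"],
            "Resource": stream_arn,
        }],
    }


def consumer_policy(stream_arn: str) -> dict:
    """Least-privilege IAM policy for a basic-API consumer of one stream."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": [
                "kinesis:DescribeStreamSummary",
                "kinesis:ListShards",
                "kinesis:GetShardIterator",
                "kinesis:GetRecords",
            ],
            "Resource": stream_arn,
        }],
    }


# Hypothetical ARN: the region, account ID, and stream name are placeholders.
arn = "arn:aws:kinesis:us-east-1:123456789012:stream/your-stream-name"
print(json.dumps(producer_policy(arn), indent=2))
```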

Encryption is another essential part of securing a Kinesis data stream. AWS supports encryption both at rest and in transit. Encryption at rest protects data while it is stored in the stream. Server-side encryption uses AWS Key Management Service (KMS) keys, either the AWS managed key for Kinesis or a customer managed key. Encryption in transit protects data as it moves between your producer and consumer applications and the stream. Kinesis API endpoints use HTTPS, which encrypts traffic with TLS, and the AWS SDKs use HTTPS by default, so make sure no client overrides this. If you use customer managed KMS keys, enable automatic key rotation. Beyond these measures, secure the producer and consumer applications themselves. Authenticate and authorize their callers, keep them patched with the latest security updates, and monitor for suspicious activity. Together, IAM roles, encryption, and secure application practices give you a well-protected Kinesis Data Streams infrastructure.
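
Server-side encryption can be turned on for an existing stream with the StartStreamEncryption API and checked with DescribeStreamSummary. The sketch below assumes a boto3 Kinesis client and defaults to the AWS managed key alias "alias/aws/kinesis". Pass a customer managed key ID or alias if you need control over the key policy and rotation.

```python
def enable_kms_encryption(client, stream_name: str, key_id: str = "alias/aws/kinesis") -> None:
    """Enable server-side encryption at rest for a stream using an AWS KMS key.

    `client` is assumed to be a boto3 Kinesis client.
    """
    client.start_stream_encryption(
        StreamName=stream_name,
        EncryptionType="KMS",
        KeyId=key_id,
    )


def encryption_type(client, stream_name: str) -> str:
    """Return the stream's encryption type, "KMS" or "NONE"."""
    summary = client.describe_stream_summary(StreamName=stream_name)
    return summary["StreamDescriptionSummary"].get("EncryptionType", "NONE")

# Usage (requires AWS credentials):
#   import boto3
#   kinesis = boto3.client("kinesis")
#   if encryption_type(kinesis, "your-stream-name") != "KMS":
#       enable_kms_encryption(kinesis, "your-stream-name")
```

With a customer managed key, producers also need kms:GenerateDataKey and consumers need kms:Decrypt on that key, in addition to their Kinesis permissions.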

Optimizing Costs and Performance with Kinesis Data Streams

Effective resource management keeps a Kinesis data stream cost-effective and performant, and monitoring shard utilization is its foundation. Over-provisioning shards leads to unnecessary expense, while under-provisioning causes throttling and performance bottlenecks. CloudWatch metrics such as IncomingBytes and IncomingRecords show how much data flows into the stream. With enhanced shard-level monitoring enabled, they also show how much flows into each shard. Compare these figures with the provisioned capacity of 1 MB per second or 1,000 records per second per shard. The comparison tells you whether the shard count matches actual throughput. Adjusting the count based on it keeps resources and costs in line with demand.
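
The sketch below turns the stream-level IncomingBytes metric into a write-utilization figure by dividing each one-minute sum by what the shards could accept in that minute. Some assumptions apply: a boto3 CloudWatch client, a fixed shard count over the lookback window, 1 MB per second interpreted as 1 MiB, and hypothetical function names.

```python
from datetime import datetime, timedelta, timezone

SHARD_WRITE_BYTES_PER_SEC = 1024 * 1024  # 1 MB/s per shard, interpreted as 1 MiB


def write_utilization(incoming_bytes_sum: float, period_sec: int, shard_count: int) -> float:
    """Fraction of provisioned write capacity used during one period."""
    return incoming_bytes_sum / (period_sec * shard_count * SHARD_WRITE_BYTES_PER_SEC)


def peak_write_utilization(cloudwatch, stream_name: str, shard_count: int, hours: int = 1) -> float:
    """Highest per-minute write utilization over the last `hours` (0.0 if no data).

    `cloudwatch` is assumed to be a boto3 CloudWatch client.
    """
    end = datetime.now(timezone.utc)
    stats = cloudwatch.get_metric_statistics(
        Namespace="AWS/Kinesis",
        MetricName="IncomingBytes",
        Dimensions=[{"Name": "StreamName", "Value": stream_name}],
        StartTime=end - timedelta(hours=hours),
        EndTime=end,
        Period=60,
        Statistics=["Sum"],
    )
    sums = [point["Sum"] for point in stats["Datapoints"]]
    return max((write_utilization(s, 60, shard_count) for s in sums), default=0.0)
```

Using the peak rather than the average avoids sizing for a quiet hour and then throttling during bursts. The same approach works for IncomingRecords against the 1,000 records per second limit.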

Batching records is another effective way to improve throughput. Instead of sending records one at a time with PutRecord, producers can send up to 500 records in a single PutRecords request. Each record can be up to 1 MB, and the whole request up to 5 MB. Batching reduces the number of API calls and the per-request overhead. PutRecords can partially succeed, so producers must check the response and resend any records that failed. In provisioned mode, ingestion is also billed in PUT payload units of 25 KB per record. Packing many small items into a single record, as the Kinesis Producer Library’s aggregation feature does, can therefore reduce cost as well. Tuning batch sizes can significantly improve the efficiency of your ingestion pipeline.
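
A batching producer has to handle partial failures. The PutRecords response lists one result per request entry, in the same order, with an ErrorCode on each rejected entry. The sketch below splits records into chunks of 500, retries only the failed entries with exponential backoff, and returns whatever still fails. Some assumptions apply: a boto3 Kinesis client, records already shaped as {"Data": bytes, "PartitionKey": str}, and callers keeping each chunk under the 5 MB request limit, which this code does not check.

```python
import time

MAX_RECORDS_PER_REQUEST = 500  # PutRecords accepts at most 500 records per call


def put_records_with_retry(client, stream_name, records, max_attempts=5, base_delay=0.1):
    """Send records with PutRecords, retrying only the entries that failed.

    Returns the records that still failed after `max_attempts` tries.
    """
    unsent = []
    for start in range(0, len(records), MAX_RECORDS_PER_REQUEST):
        pending = records[start:start + MAX_RECORDS_PER_REQUEST]
        for attempt in range(max_attempts):
            response = client.put_records(StreamName=stream_name, Records=pending)
            if response["FailedRecordCount"] == 0:
                pending = []
                break
            # Results are in request order; keep only the rejected entries.
            pending = [
                rec for rec, result in zip(pending, response["Records"])
                if "ErrorCode" in result
            ]
            if attempt < max_attempts - 1:
                time.sleep(base_delay * 2 ** attempt)  # exponential backoff
        unsent.extend(pending)
    return unsent
```

Callers should log or dead-letter the returned records rather than drop them. A production version would usually also add jitter to the backoff so that many producers do not retry in lockstep.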

Compression reduces the volume of data moving through the pipeline. Kinesis Data Streams treats each record’s data as an opaque blob and does not compress it for you, so compression happens in your applications. Producers compress data (for example, with GZIP or Snappy) before sending it, and consumers decompress it after reading. Smaller payloads consume less shard throughput, fewer PUT payload units, and less network bandwidth. Choosing an algorithm means balancing compression ratio against processing overhead. Higher ratios save more throughput and transfer cost, but compressing and decompressing takes more CPU time. Evaluate a few algorithms on representative data and pick the one that best suits your use case.
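
The sketch below shows client-side compression with GZIP from the Python standard library (Snappy would need a third-party package). Several events are serialized as newline-delimited JSON and compressed into one payload that a consumer can reverse. The batch format and function names are assumptions. The compressed payload must still fit within the 1 MB record limit.

```python
import gzip
import json


def compress_batch(events: list, level: int = 6) -> bytes:
    """Serialize events as newline-delimited JSON and gzip them into one record payload."""
    text = "\n".join(json.dumps(e, separators=(",", ":")) for e in events)
    return gzip.compress(text.encode("utf-8"), compresslevel=level)


def decompress_batch(payload: bytes) -> list:
    """Reverse compress_batch on the consumer side."""
    text = gzip.decompress(payload).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]


events = [{"device": "d1", "temp": 21.5, "seq": i} for i in range(100)]
payload = compress_batch(events)
print(len(payload))
```

Combining many events into one compressed record also reduces the PUT payload units described above. The trade-off is that every consumer must understand the batch format.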

Troubleshooting Common Issues with Kinesis Data Streams

Running into issues with Kinesis Data Streams is not uncommon, and knowing how to troubleshoot them is crucial for keeping a data pipeline stable and reliable. This section covers some frequent problems and offers practical solutions and debugging techniques.

One common issue is ingestion failure. It shows up as records missing from the stream or as producers receiving errors when they write. Start by checking that the producer’s IAM role grants permission to write to the stream. If the stream is encrypted with a customer managed KMS key, the role also needs permission to use that key. Next, check the stream’s capacity. If the stream has too few shards, producers receive ProvisionedThroughputExceededException errors. Monitor the PutRecords.ThrottledRecords and WriteProvisionedThroughputExceeded metrics to confirm throttling, and increase the shard count to match the data volume. Make sure the partition keys distribute records evenly across shards. Uneven distribution creates hot shards that throttle even when the stream as a whole has spare capacity. Examine the producer application logs for error messages or exceptions that point to the root cause. Finally, network connectivity problems between the producer and the Kinesis endpoint can also block ingestion, so verify network configurations and firewall rules.
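
When a producer reports failures, the error codes in the PutRecords response help separate throttling from other problems. Per-record codes include ProvisionedThroughputExceededException and InternalFailure. The sketch below counts those codes and maps them to a suggested next step. The mapping is a hypothetical heuristic for illustration, not AWS guidance.

```python
from collections import Counter


def summarize_put_records_errors(response: dict) -> Counter:
    """Count per-record error codes in a PutRecords response."""
    return Counter(r["ErrorCode"] for r in response["Records"] if "ErrorCode" in r)


def diagnose(response: dict) -> str:
    """Suggest a rough next step from the error counts (illustrative heuristic)."""
    errors = summarize_put_records_errors(response)
    if not errors:
        return "ok"
    if errors.get("ProvisionedThroughputExceededException"):
        return "throttled: add shards or spread partition keys, then retry with backoff"
    return "other failures: inspect ErrorMessage values and producer logs"
```

Logging these counts alongside the PutRecords.ThrottledRecords metric makes it easier to tell whether the stream is short on capacity overall or a single hot shard is throttling.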

Another common problem is slow consumer performance. When consumers fall behind, processing is delayed and real-time analytics suffer. Check the GetRecords.IteratorAgeMilliseconds metric to measure consumer lag. An iterator age that stays high or keeps growing means the consumer cannot keep up with the stream. Investigate the consumer code for bottlenecks, make sure records are processed in batches, and verify that consumers have enough CPU and memory. Adding consumer instances parallelizes processing, but with the Kinesis Client Library each shard is processed by only one worker at a time. Beyond one worker per shard, you need more shards to gain parallelism. Also make sure shards are distributed evenly across consumer instances. Security-related errors can also disrupt consumers. Verify that the consumer’s IAM role has permission to read from the stream. For streams encrypted with a customer managed KMS key, it also needs permission to decrypt with that key. By addressing these issues systematically and setting up effective monitoring and alerting, you can minimize disruptions and keep your Kinesis Data Streams infrastructure healthy and performant.
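
Each GetRecords response includes MillisBehindLatest, which tells the same story as a high iterator age from the consumer’s side. The sketch below is a minimal single-shard polling loop using boto3’s get_shard_iterator and get_records calls. It processes records in batches and returns how far behind the tip of the stream it was on its last call. handle_batch is a hypothetical callback. Production consumers would normally use the KCL or Lambda, which add checkpointing and shard rebalancing.

```python
import time


def read_shard(client, stream_name, shard_id, handle_batch,
               max_batches=10, limit=1000, idle_sleep=1.0):
    """Poll one shard with GetRecords and return the last MillisBehindLatest seen.

    `client` is assumed to be a boto3 Kinesis client.
    """
    iterator = client.get_shard_iterator(
        StreamName=stream_name, ShardId=shard_id, ShardIteratorType="LATEST"
    )["ShardIterator"]
    millis_behind = 0
    for _ in range(max_batches):
        response = client.get_records(ShardIterator=iterator, Limit=limit)
        records = response["Records"]
        if records:
            handle_batch(records)  # process the whole batch in one call
        millis_behind = response.get("MillisBehindLatest", 0)
        iterator = response.get("NextShardIterator")
        if iterator is None:
            break  # the shard is closed, for example after resharding
        if not records and millis_behind == 0:
            # Caught up: pause to stay under 5 GetRecords calls per second per shard.
            time.sleep(idle_sleep)
    return millis_behind
```

A return value that stays well above zero across many polls suggests the consumer cannot keep up. The fixes listed above then apply: faster batch processing, more resources, or more shards and workers.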