Understanding the Need for Dynamic Scaling

Manually managing server capacity presents significant challenges, especially when traffic is unpredictable. Seasonal sales or viral marketing campaigns can quickly overwhelm a fixed fleet, leading to slowdowns, outages, and frustrated users. Spikes like these call for a more dynamic approach to resource management. Autoscaling in AWS addresses this by automatically adjusting capacity based on real-time demand. It adds resources when load rises and removes them when load falls, so organizations can control costs while maintaining high availability and consistent performance. Compared with static provisioning, the key advantage is efficient resource utilization. You pay for the instances that are running, so keeping only the capacity that current demand requires avoids the waste of a fleet permanently sized for peak load. The same mechanism lets a system absorb sudden traffic surges without service disruption, which gives it the flexibility to handle varying workloads efficiently.

Consider a popular e-commerce site that experiences a sudden surge in traffic during a flash sale. Without autoscaling, the site might become overloaded, resulting in slow page loads and lost sales. With autoscaling in AWS, monitored metrics reveal the increased demand and additional instances are launched, so the site remains responsive and available. Conversely, when traffic drops, autoscaling reduces the number of instances, minimizing costs. Automating this response improves operational efficiency and reduces the need for human intervention. It also makes it possible to scale up or down quickly enough to protect both business continuity and the budget. Compared with manual scaling procedures, this automation can yield considerable cost savings.

In summary, manual capacity management struggles with unpredictable demand. Surges cause performance problems, and capacity provisioned for the peak sits idle the rest of the time. Autoscaling in AWS adjusts capacity to real-time needs, which improves application availability and reduces cost. Its core benefits are automated scaling, cost optimization, and improved availability. That makes it a valuable tool for organizations of all sizes, particularly those dealing with fluctuating workloads.

Exploring AWS Autoscaling Services: EC2 Auto Scaling and Other Options

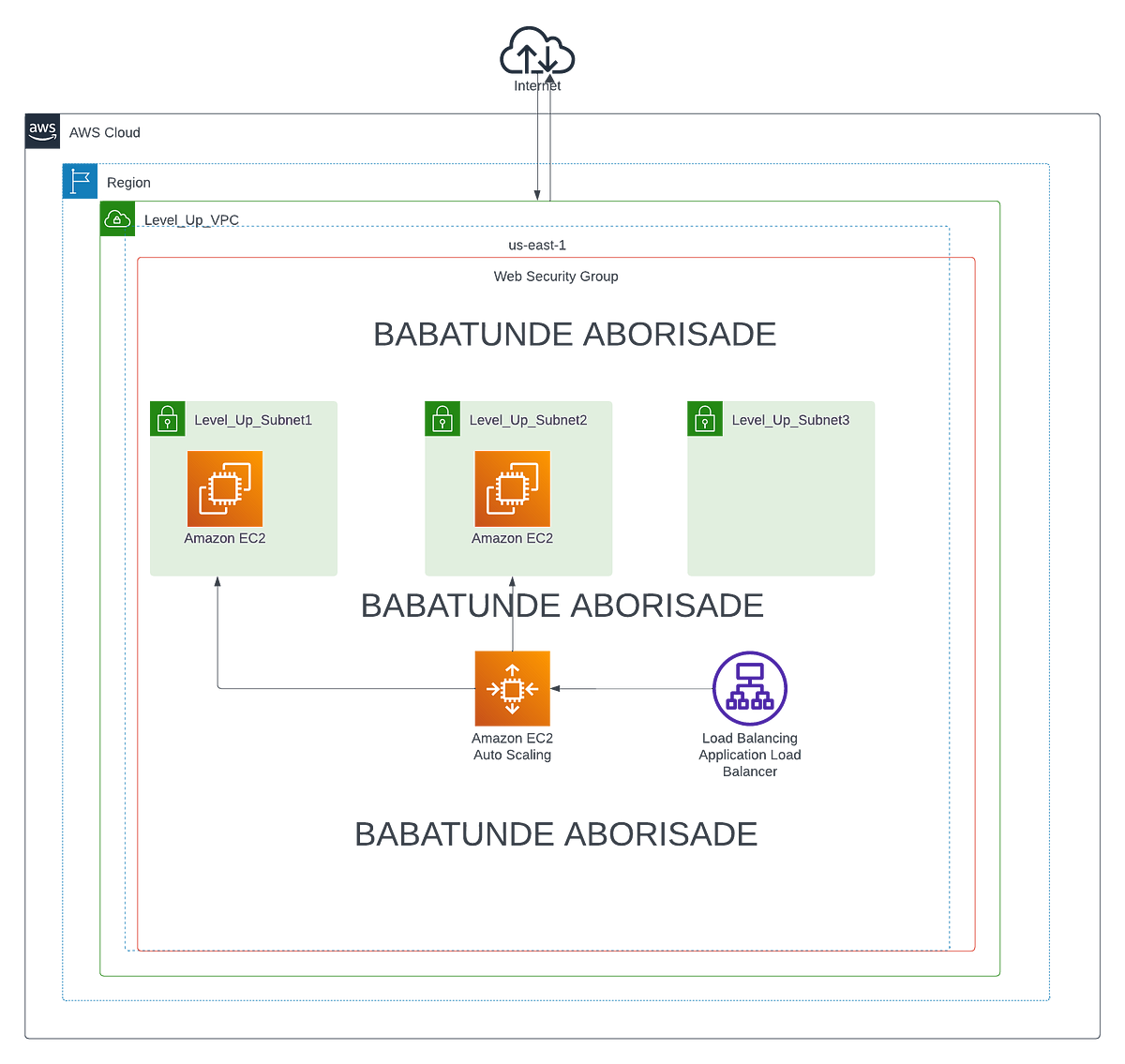

Amazon EC2 Auto Scaling is the core service for managing the capacity of EC2 instances. It launches and terminates instances automatically based on metrics or schedules, balancing performance against cost as demand fluctuates. Its key components are:

- Launch templates, which define the AMI, instance type, and other instance settings. The older launch configurations still exist, but AWS no longer recommends them.
- Auto Scaling groups, which are the collections of instances being managed.
- Scaling policies, which dictate how a group responds to changes in demand.

An Auto Scaling group integrates with Elastic Load Balancing (ELB), for example through an Application Load Balancer (ALB). The load balancer distributes traffic across the group's instances, and new instances are registered with it automatically as they launch.

Beyond EC2 Auto Scaling, AWS offers autoscaling for containerized environments:

- With Amazon ECS (Elastic Container Service), service auto scaling adjusts a service's desired task count through Application Auto Scaling.
- With Amazon EKS (Elastic Kubernetes Service), pods are typically scaled by the Kubernetes Horizontal Pod Autoscaler. The nodes beneath them are scaled by tools such as Cluster Autoscaler or Karpenter.
- AWS Fargate, a serverless compute engine for containers, removes the need to manage EC2 instances. You still define how many tasks or pods to run and how that number scales, but AWS provisions the underlying compute.

The right choice depends on the application architecture and deployment strategy, and each service brings its own trade-offs to an autoscaling design.
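The following is a minimal sketch of ECS service auto scaling. It only builds the request parameters for the Application Auto Scaling `register_scalable_target` and `put_scaling_policy` calls and does not contact AWS. The cluster and service names, the 60% CPU target, and the cooldown values are hypothetical.

```python
# Sketch: request parameters for ECS service auto scaling via Application Auto Scaling.
# Nothing here calls AWS; pass the dicts to boto3.client("application-autoscaling").
# Cluster/service names and numeric settings are hypothetical examples.


def ecs_scalable_target(cluster: str, service: str, min_tasks: int, max_tasks: int) -> dict:
    """Parameters for register_scalable_target on an ECS service's desired task count."""
    if not 0 <= min_tasks <= max_tasks:
        raise ValueError("expected 0 <= min_tasks <= max_tasks")
    return {
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "MinCapacity": min_tasks,
        "MaxCapacity": max_tasks,
    }


def ecs_cpu_target_tracking(cluster: str, service: str, target_cpu: float) -> dict:
    """Parameters for put_scaling_policy: keep average service CPU near target_cpu percent."""
    return {
        "PolicyName": f"{service}-cpu-target",
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_cpu,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
            },
            "ScaleOutCooldown": 60,   # seconds between scale-out activities
            "ScaleInCooldown": 120,   # scale in more cautiously than out
        },
    }
```

Usage would look like `client.register_scalable_target(**ecs_scalable_target("shop", "checkout", 2, 10))` followed by `client.put_scaling_policy(**ecs_cpu_target_tracking("shop", "checkout", 60.0))`. Keeping the parameter construction separate from the API call makes the settings easy to review and test.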

Understanding the differences between these services is crucial for effective resource management. EC2 Auto Scaling is the natural fit for workloads running directly on virtual machines. ECS, EKS, and Fargate handle scaling for container workloads. Weigh your application's requirements and expected growth when choosing among them. A well-chosen, well-implemented service reduces operational overhead, improves application responsiveness, and keeps resource allocation in line with actual demand.

How to Configure EC2 Auto Scaling for Optimal Performance

Setting up EC2 Auto Scaling involves several key steps.

1. Create a launch template. AWS recommends launch templates over the older launch configurations. The template defines the AMI (Amazon Machine Image), instance type, security groups, and other parameters for your EC2 instances. Choose an instance size that matches your application's needs, and configure the security groups to control network access.
2. Create an Auto Scaling group that references the launch template and sets the minimum, maximum, and desired number of instances. EC2 Auto Scaling keeps the number of running instances within these bounds.
3. Attach a load balancer, typically an Application Load Balancer (ALB) from Elastic Load Balancing, to distribute traffic across the instances. This is critical for maintaining availability during scaling events, because new instances must be registered with the load balancer and terminating instances must stop receiving traffic.
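The steps above can be sketched as request parameters for boto3. `create_launch_template` belongs to the `ec2` client and `create_auto_scaling_group` to the `autoscaling` client. The sketch uses a launch template, as AWS recommends, and does not call AWS itself. All IDs, names, and sizes in it are hypothetical.

```python
# Sketch: parameters for creating a launch template and an Auto Scaling group.
# Nothing here calls AWS. Pass launch_template_params(...) to
# boto3.client("ec2").create_launch_template and asg_params(...) to
# boto3.client("autoscaling").create_auto_scaling_group.
# All IDs, names, and sizes are hypothetical.


def launch_template_params(name: str, ami_id: str, instance_type: str,
                           security_group_ids: list[str]) -> dict:
    return {
        "LaunchTemplateName": name,
        "LaunchTemplateData": {
            "ImageId": ami_id,
            "InstanceType": instance_type,
            "SecurityGroupIds": list(security_group_ids),
        },
    }


def asg_params(name: str, template_name: str, subnet_ids: list[str],
               target_group_arns: list[str], min_size: int, max_size: int,
               desired: int) -> dict:
    if not min_size <= desired <= max_size:
        raise ValueError("expected min_size <= desired <= max_size")
    return {
        "AutoScalingGroupName": name,
        "LaunchTemplate": {"LaunchTemplateName": template_name, "Version": "$Latest"},
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": desired,
        # Comma-separated subnet IDs; subnets in different AZs spread the instances.
        "VPCZoneIdentifier": ",".join(subnet_ids),
        # Instances are registered with these ALB target groups as they launch.
        "TargetGroupARNs": list(target_group_arns),
        # Also replace instances that fail the load balancer's health checks.
        "HealthCheckType": "ELB",
        "HealthCheckGracePeriod": 300,  # seconds to let a new instance boot before checking
    }
```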

Next, define your scaling policies, which determine how the group responds to changes in demand.

- Step scaling adds or removes instances in predefined amounts when a CloudWatch alarm on a metric such as CPU utilization crosses a threshold.
- Target tracking scaling keeps a metric, such as average CPU utilization, close to a target value. For example, with a target of 70% CPU utilization, the group launches instances when average utilization rises above 70%. It terminates instances when utilization stays well below the target.

Configure health checks as well. Load balancer health checks keep traffic away from unhealthy instances. When the group uses ELB health checks, EC2 Auto Scaling also replaces instances that fail them, which keeps the pool of EC2 instances healthy. Finally, if demand follows a regular pattern, such as daily or weekly peaks, add scheduled scaling actions so capacity changes ahead of the expected load.
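Here is a minimal sketch of the 70% target tracking example, together with a scheduled action for a recurring weekday peak. Both are expressed as parameters for the boto3 `autoscaling` client's `put_scaling_policy` and `put_scheduled_update_group_action` calls. The group name, the 08:00 weekday schedule, the time zone, and the sizes are hypothetical.

```python
# Sketch: a CPU target tracking policy and a recurring scheduled action.
# Pass the dicts to boto3.client("autoscaling"); names and values are hypothetical.


def cpu_target_tracking(asg: str, target_cpu: float = 70.0) -> dict:
    """put_scaling_policy parameters: keep the group's average CPU near target_cpu."""
    return {
        "AutoScalingGroupName": asg,
        "PolicyName": f"{asg}-cpu-{target_cpu:g}",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {"PredefinedMetricType": "ASGAverageCPUUtilization"},
            "TargetValue": target_cpu,
        },
        # Estimated seconds until a new instance contributes to the CloudWatch metrics.
        "EstimatedInstanceWarmup": 300,
    }


def weekday_peak_schedule(asg: str, min_size: int, max_size: int, desired: int) -> dict:
    """put_scheduled_update_group_action parameters for a recurring weekday scale-out."""
    return {
        "AutoScalingGroupName": asg,
        "ScheduledActionName": f"{asg}-weekday-peak",
        "Recurrence": "0 8 * * 1-5",   # cron: 08:00 Monday to Friday
        "TimeZone": "Europe/Berlin",   # hypothetical; without TimeZone the schedule is UTC
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": desired,
    }
```

The scheduled action raises the floor before the peak arrives. The target tracking policy then handles whatever the schedule did not anticipate.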

Finally, monitor your Auto Scaling group with Amazon CloudWatch. CloudWatch provides metrics such as CPU utilization, network traffic, and, through the load balancer, request latency. Use these metrics to fine-tune thresholds and scaling adjustments for both performance and cost. Test and refine your scaling policies iteratively. Start with conservative settings, then make the policies more aggressive as you gather data and gain confidence about how your application behaves under load.

Defining Scaling Policies: Metrics, Thresholds, and Adjustment Types

Effective scaling policies are crucial for autoscaling in AWS, because they dictate how your Auto Scaling group responds to changes in demand. A good policy starts with the right metric. AWS provides many built-in metrics, such as CPU utilization and network traffic. You can also publish custom metrics that reflect your application's specific needs. CPU utilization is a reasonable starting point because it is readily available and often correlates well with application load. For some workloads, however, a custom metric such as queue backlog per instance tracks demand more accurately.
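The following is a sketch of a custom metric. The code computes a hypothetical `BacklogPerInstance` value (queued jobs divided by in-service instances). It then builds the CloudWatch `put_metric_data` parameters to publish that value and a target tracking policy that uses it through `CustomizedMetricSpecification`. The `MyApp` namespace, the metric name, and the acceptable backlog are assumptions. How you read the queue depth depends on your application.

```python
# Sketch: publish a custom "backlog per instance" metric and track it.
# put_metric_data params -> boto3.client("cloudwatch");
# put_scaling_policy params -> boto3.client("autoscaling").
# Namespace, metric name, and target value are hypothetical.


def backlog_per_instance(queue_depth: int, in_service: int) -> float:
    # Divide by at least 1 so a group scaled to zero still reports its backlog.
    return queue_depth / max(in_service, 1)


def backlog_metric_payload(asg: str, queue_depth: int, in_service: int,
                           namespace: str = "MyApp") -> dict:
    return {
        "Namespace": namespace,
        "MetricData": [{
            "MetricName": "BacklogPerInstance",
            "Dimensions": [{"Name": "AutoScalingGroupName", "Value": asg}],
            "Value": backlog_per_instance(queue_depth, in_service),
            "Unit": "Count",
        }],
    }


def backlog_target_tracking(asg: str, acceptable_backlog: float,
                            namespace: str = "MyApp") -> dict:
    return {
        "AutoScalingGroupName": asg,
        "PolicyName": f"{asg}-backlog",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "CustomizedMetricSpecification": {
                "MetricName": "BacklogPerInstance",
                "Namespace": namespace,
                "Dimensions": [{"Name": "AutoScalingGroupName", "Value": asg}],
                "Statistic": "Average",
            },
            "TargetValue": float(acceptable_backlog),
        },
    }
```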

Once you have chosen your metrics, define thresholds that determine when the group should scale out or in. For example, you might set a scale-out threshold of 80% CPU utilization. When average utilization across your instances exceeds it, EC2 Auto Scaling launches additional instances to handle the load. Conversely, when utilization falls below a lower threshold, the group terminates instances to reduce costs, selecting them according to its termination policy. Leaving a gap between the two thresholds keeps the group from repeatedly scaling out and in. Choosing thresholds requires iterative adjustment based on real-world performance data. Start with conservative settings, observe how your application behaves under different load conditions, and adjust based on what you measure.
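The logic of a threshold pair can be sketched in plain Python. This illustrates the idea rather than how CloudWatch alarms are implemented. A decision is made only when the last few datapoints all breach the same threshold, and the gap between the hypothetical 80% and 30% thresholds leaves a band where nothing happens.

```python
# Illustrative sketch of threshold-based scaling with a dead band between thresholds.
# Thresholds and the number of periods are hypothetical defaults.


def scaling_decision(samples: list[float], scale_out_above: float = 80.0,
                     scale_in_below: float = 30.0, periods: int = 3) -> int:
    """Return +1 (scale out), -1 (scale in), or 0 based on the last `periods` datapoints."""
    if scale_in_below >= scale_out_above:
        raise ValueError("scale-in threshold must be below the scale-out threshold")
    recent = samples[-periods:]
    if len(recent) < periods:
        return 0  # not enough data yet to call it a sustained breach
    if all(s > scale_out_above for s in recent):
        return 1
    if all(s < scale_in_below for s in recent):
        return -1
    return 0  # inside the band between thresholds, or a mixed signal
```

Requiring several consecutive breaches trades a little responsiveness for stability. A single noisy datapoint does not launch or terminate anything.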

Beyond setting thresholds, you need to specify an adjustment type, which defines how capacity changes when a threshold is crossed. EC2 Auto Scaling supports three:

- `ChangeInCapacity` adds or removes a fixed number of instances, for example two instances.
- `PercentChangeInCapacity` changes capacity by a percentage of the current size, for example an increase of 20%.
- `ExactCapacity` sets the group to a specific size.

Percentage changes adapt better as the group grows or shrinks, while absolute changes give more predictable scaling behavior. The choice affects both responsiveness and cost-efficiency, so test and tune it against your workload. Finding the balance between responsiveness and cost is an ongoing process rather than a one-time task. Continuous monitoring of your Auto Scaling groups gives you the data to adjust your approach as needed.
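The function below makes the three adjustment types concrete. It computes the new group size for each type and clamps the result to the group's minimum and maximum. The rounding for `PercentChangeInCapacity` follows the rules as described in the EC2 Auto Scaling documentation at the time of writing:

- Results between 0 and 1 become 1.
- Results above 1 are rounded down.
- Negative results are handled symmetrically.

Treat this as an approximation and check the current documentation. In particular, it ignores settings such as `MinAdjustmentMagnitude`.

```python
import math

# Sketch of how each adjustment type changes group size (approximation; see lead-in).


def percent_adjustment(current: int, percent: float) -> int:
    """Instances to add (or remove) for a PercentChangeInCapacity adjustment."""
    raw = current * percent / 100.0
    if raw == 0:
        return 0
    if 0 < raw < 1:
        return 1    # small positive changes still add one instance
    if -1 < raw < 0:
        return -1   # small negative changes still remove one instance
    return math.trunc(raw)  # toward zero: 12.7 -> 12, -6.67 -> -6


def new_capacity(current: int, adjustment_type: str, value: float,
                 min_size: int, max_size: int) -> int:
    if adjustment_type == "ChangeInCapacity":
        target = current + int(value)
    elif adjustment_type == "PercentChangeInCapacity":
        target = current + percent_adjustment(current, value)
    elif adjustment_type == "ExactCapacity":
        target = int(value)
    else:
        raise ValueError(f"unknown adjustment type: {adjustment_type}")
    return max(min_size, min(max_size, target))  # never leave the group's bounds
```

For a group of 10 instances, a +20% adjustment yields 12 instances. For a group of 3, the same +20% still adds one instance, because 0.6 rounds up to 1.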

Advanced Autoscaling Techniques: Predictive Scaling and Advanced Load Balancing

Predictive scaling is a significant advance over purely reactive scaling. Reactive policies respond to demand that already exists. An EC2 Auto Scaling predictive scaling policy instead applies machine learning to the group's own historical metric data to forecast load and schedule capacity ahead of it. It needs at least 24 hours of history, produces forecasts for the next 48 hours, and works best for workloads with recurring patterns such as daily or weekly cycles. Launching capacity before an anticipated increase prevents the bottlenecks that occur while reactive policies wait for new instances to boot. Predictive scaling is usually combined with a dynamic policy such as target tracking, which handles deviations from the forecast. Together they let you keep less idle headroom during periods of low activity, which can reduce costs.
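Here is a minimal sketch of a predictive scaling policy, expressed as `put_scaling_policy` parameters for the boto3 `autoscaling` client. It starts in `ForecastOnly` mode, which generates forecasts without changing capacity. That lets you compare forecasts against actual load before switching to `ForecastAndScale`. The group name, the 50% CPU target, and the five-minute launch buffer are hypothetical.

```python
# Sketch: predictive scaling policy parameters for boto3.client("autoscaling").put_scaling_policy.
# Group name, target, and buffer are hypothetical.


def predictive_cpu_policy(asg: str, target_cpu: float = 50.0,
                          forecast_only: bool = True) -> dict:
    """put_scaling_policy parameters for a CPU-based predictive scaling policy."""
    return {
        "AutoScalingGroupName": asg,
        "PolicyName": f"{asg}-predictive-cpu",
        "PolicyType": "PredictiveScaling",
        "PredictiveScalingConfiguration": {
            "MetricSpecifications": [{
                "TargetValue": target_cpu,
                # Built-in load/scaling metric pair based on the group's CPU utilization.
                "PredefinedMetricPairSpecification": {"PredefinedMetricType": "ASGCPUUtilization"},
            }],
            # ForecastOnly produces forecasts without acting on them.
            "Mode": "ForecastOnly" if forecast_only else "ForecastAndScale",
            # Launch instances up to 300 seconds before the forecast capacity is needed.
            "SchedulingBufferTime": 300,
        },
    }
```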

Load balancing strategy also affects how well autoscaling in AWS works. An Application Load Balancer (ALB), one of the load balancer types in Elastic Load Balancing, distributes traffic across the instances of an Auto Scaling group. To go further, consider two techniques:

- Weighted target groups split traffic between groups in chosen proportions, for example to shift traffic gradually to a new version.
- The least outstanding requests routing algorithm sends each request to the target with the fewest in-flight requests.

Health checks that request an application-specific endpoint over HTTP, rather than only testing whether a port is open, further improve resilience. They ensure that only instances able to serve requests receive traffic, which improves the user experience and minimizes downtime. Combined with autoscaling, these techniques keep the application available and responsive as traffic fluctuates.
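On an ALB, weighted routing is configured between target groups rather than individual instances. The settings can be sketched as Elastic Load Balancing (`elbv2`) request parameters:

- a listener default action that splits traffic 90/10 between two target groups;
- HTTP health check settings for `create_target_group` or `modify_target_group`;
- the target group attribute that selects least outstanding requests routing.

The ARNs, weights, `/healthz` path, and timings are hypothetical.

```python
# Sketch: elbv2 request parameters for weighted routing, HTTP health checks,
# and least-outstanding-requests routing. Values are hypothetical.


def weighted_forward_action(weights: dict[str, int]) -> list[dict]:
    """DefaultActions for elbv2 modify_listener: split traffic across target groups by weight."""
    for arn, weight in weights.items():
        if not 0 <= weight <= 999:
            raise ValueError(f"weight for {arn} must be between 0 and 999")
    return [{
        "Type": "forward",
        "ForwardConfig": {
            "TargetGroups": [{"TargetGroupArn": arn, "Weight": w} for arn, w in weights.items()],
        },
    }]


def http_health_check(path: str = "/healthz") -> dict:
    """Health check settings accepted by elbv2 create_target_group / modify_target_group."""
    return {
        "HealthCheckProtocol": "HTTP",
        "HealthCheckPath": path,              # endpoint that exercises the application
        "HealthCheckIntervalSeconds": 15,
        "HealthCheckTimeoutSeconds": 5,       # must be shorter than the interval
        "HealthyThresholdCount": 3,
        "UnhealthyThresholdCount": 2,
        "Matcher": {"HttpCode": "200"},
    }


# Attributes for elbv2 modify_target_group_attributes: route each request to the
# target with the fewest in-flight requests instead of round robin.
LEAST_OUTSTANDING_REQUESTS = [
    {"Key": "load_balancing.algorithm.type", "Value": "least_outstanding_requests"}
]
```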

Container orchestration services extend autoscaling in AWS to a finer granularity. Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS) scale the number of running tasks or pods according to workload requirements. This suits microservices architectures, where each service scales independently. With serverless AWS Fargate, AWS also manages the underlying compute, so teams can focus on application logic instead of instance fleets. Whether to use EC2 Auto Scaling, ECS, EKS, or Fargate depends on the application architecture and scaling requirements, and each offers advantages for different use cases.
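On EKS, pod counts are usually managed by the Kubernetes Horizontal Pod Autoscaler rather than by an AWS API. Its core rule, as documented by Kubernetes, is `desired = ceil(current * currentMetric / targetMetric)`. The controller skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only that rule plus min/max clamping. The real controller also handles pod readiness, missing metrics, and stabilization windows.

```python
import math

# Sketch of the Horizontal Pod Autoscaler's core replica calculation (simplified).


def hpa_desired_replicas(current: int, current_metric: float, target_metric: float,
                         min_replicas: int = 1, max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to target: leave the replica count alone
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target give a ratio of 1.5, so the HPA asks for 6 replicas.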

Monitoring and Optimizing Your Autoscaling Groups

Effective monitoring is crucial for any autoscaling setup in AWS. Amazon CloudWatch tracks key performance indicators (KPIs) for your Auto Scaling groups, such as CPU utilization, network throughput, request counts, error rates, and, through the load balancer, response latency. Review these metrics regularly to understand how your application performs and how quickly your scaling policies respond. Trends in these metrics reveal bottlenecks and optimization opportunities. Consistently high CPU utilization during traffic spikes may mean your policies should add instances sooner or in larger steps. Consistently low utilization suggests you can reduce costs by running fewer instances.
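The review described above can be sketched as a small, hypothetical heuristic over CPU datapoints, such as values returned by CloudWatch `GetMetricStatistics` or `GetMetricData`. The 75% and 25% bands and the "half the samples" rule are assumptions chosen for illustration, not AWS recommendations.

```python
from statistics import mean

# Hypothetical tuning heuristic over CPU utilization datapoints (percent).


def utilization_review(samples: list[float], high: float = 75.0, low: float = 25.0) -> dict:
    """Summarize CPU datapoints and suggest a direction for tuning (a rough heuristic)."""
    if not samples:
        raise ValueError("need at least one datapoint")
    n = len(samples)
    frac_high = sum(s > high for s in samples) / n
    frac_low = sum(s < low for s in samples) / n
    if frac_high >= 0.5:
        hint = "scale out sooner: lower the target or use larger steps"
    elif frac_low >= 0.5:
        hint = "scale in further: raise the target or lower MinSize"
    else:
        hint = "no change suggested"
    return {"average": mean(samples), "fraction_high": frac_high,
            "fraction_low": frac_low, "hint": hint}
```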

CloudWatch alarms support proactive issue management by notifying you when a metric breaches a threshold. For instance, set an alarm for average CPU utilization above 80% over several consecutive periods. It warns you of potential performance degradation before users are affected. Create similar alarms for high error rates and sustained increases in latency, and respond promptly when they fire. CloudWatch graphs and dashboards make trends and anomalies easy to spot, which supports informed decisions about how to manage and tune your Auto Scaling groups. Because autoscaling in AWS depends on data-driven decisions, CloudWatch is central to operating it well.
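The 80% alarm could be expressed as parameters for CloudWatch `put_metric_alarm` on the boto3 `cloudwatch` client. It watches the `CPUUtilization` metric that EC2 publishes per Auto Scaling group. It fires only after three consecutive five-minute periods above the threshold, so a brief spike does not trigger it. The SNS topic ARN and group name are hypothetical.

```python
# Sketch: put_metric_alarm parameters for boto3.client("cloudwatch").
# The SNS topic ARN and group name are hypothetical.


def cpu_high_alarm(asg: str, sns_topic_arn: str, threshold: float = 80.0,
                   period: int = 300, periods: int = 3) -> dict:
    """Alarm when average group CPU stays above `threshold` for `periods` periods."""
    return {
        "AlarmName": f"{asg}-cpu-high",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "AutoScalingGroupName", "Value": asg}],
        "Statistic": "Average",
        "Period": period,               # seconds per datapoint
        "EvaluationPeriods": periods,   # consecutive breaching datapoints required
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }
```

With the defaults, the alarm requires 15 minutes of sustained high CPU before it notifies the topic.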

Optimizing autoscaling in AWS is a process of iterative refinement. Begin by establishing baseline metrics, then implement scaling policies based on the observed data. Continuous monitoring shows how effective those policies are and where they need adjustment. A different metric, a revised threshold, or another scaling strategy may prove more effective. This feedback loop is key to balancing cost efficiency and application performance. Autoscaling is not a set-it-and-forget-it solution. Regular review and adjustment keep your application scaling efficiently, meeting user demand without unnecessary expense.

Cost Optimization Strategies with AWS Autoscaling

Autoscaling in AWS offers significant cost-saving opportunities. By adjusting compute resources to real-time demand, it prevents over-provisioning, where idle resources still incur charges. Scaling in during periods of low activity directly reduces infrastructure costs. Understanding and implementing effective scaling policies is therefore crucial for cost optimization.
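A back-of-the-envelope comparison illustrates the saving. The sketch below sizes a fixed fleet for the daily peak and, separately, an idealized autoscaled fleet hour by hour. It uses a hypothetical load profile, per-instance capacity, and hourly price. It ignores instance warm-up, spare headroom, and billing granularity, so real savings will be smaller.

```python
import math

# Back-of-the-envelope cost comparison; load, capacity, and price are hypothetical.


def instances_needed(load_rps: float, per_instance_rps: float, minimum: int = 1) -> int:
    return max(minimum, math.ceil(load_rps / per_instance_rps))


def daily_costs(hourly_load: list[float], per_instance_rps: float,
                price_per_hour: float) -> tuple[float, float]:
    """Return (static_cost, autoscaled_cost) for one day of hourly load samples."""
    # Static: size for the peak hour and run that fleet all day.
    static_fleet = instances_needed(max(hourly_load), per_instance_rps)
    static_cost = static_fleet * len(hourly_load) * price_per_hour
    # Idealized autoscaling: each hour runs exactly the instances that hour needs.
    instance_hours = sum(instances_needed(load, per_instance_rps) for load in hourly_load)
    return static_cost, instance_hours * price_per_hour
```

Consider 20 quiet hours at 100 requests per second and 4 peak hours at 1,000, with 200 requests per second per instance and $0.10 per instance-hour. The static fleet of 5 instances costs $12.00 per day. The idealized autoscaled fleet uses 40 instance-hours and costs $4.00.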

Spot Instances are a key strategy for reducing costs with autoscaling in AWS. They run on spare EC2 capacity at significantly lower prices than On-Demand Instances, but EC2 can reclaim them with a two-minute interruption notice. Configure your Auto Scaling groups to handle these interruptions:

- Design applications to tolerate instance termination.
- Keep important state off the instance so it can be recovered.
- Spread Spot capacity across several instance types, so a shortage of one type does not stall scaling.

Instance type selection also affects cost. Choosing instances suited to the workload, and right-sizing them based on measured needs, avoids paying for unused processing power or memory and contributes substantially to long-term savings.
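The following is a sketch of an Auto Scaling group that mixes On-Demand and Spot capacity, as `create_auto_scaling_group` parameters for the boto3 `autoscaling` client. It configures:

- one On-Demand instance as a base;
- 20% On-Demand above that base, with the rest on Spot across several interchangeable instance types;
- Capacity Rebalancing, so the group tries to replace Spot Instances that EC2 signals are at elevated risk of interruption.

The template name, instance types, subnets, and percentages are hypothetical.

```python
# Sketch: create_auto_scaling_group parameters for a mixed On-Demand/Spot group.
# Template name, instance types, subnets, and percentages are hypothetical.


def mixed_instances_asg(name: str, template_name: str, instance_types: list[str],
                        subnet_ids: list[str], min_size: int, max_size: int,
                        on_demand_base: int = 1, on_demand_pct_above_base: int = 20) -> dict:
    return {
        "AutoScalingGroupName": name,
        "MinSize": min_size,
        "MaxSize": max_size,
        "VPCZoneIdentifier": ",".join(subnet_ids),
        "CapacityRebalance": True,  # replace Spot Instances that receive rebalance recommendations
        "MixedInstancesPolicy": {
            "LaunchTemplate": {
                "LaunchTemplateSpecification": {"LaunchTemplateName": template_name,
                                                "Version": "$Latest"},
                # Several similar types give Spot more capacity pools to draw from.
                "Overrides": [{"InstanceType": t} for t in instance_types],
            },
            "InstancesDistribution": {
                "OnDemandBaseCapacity": on_demand_base,
                "OnDemandPercentageAboveBaseCapacity": on_demand_pct_above_base,
                "SpotAllocationStrategy": "price-capacity-optimized",
            },
        },
    }
```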

The configuration of scaling policies directly influences cost efficiency. Overly aggressive scaling can add instances faster than the load requires, increasing costs. Overly conservative scaling can cause performance bottlenecks and user dissatisfaction. Finding the right balance requires monitoring key metrics such as CPU utilization, memory usage, and network traffic. EC2 does not report memory usage by default, so collecting it requires the CloudWatch agent. Regular review and adjustment of policies based on observed data keeps your autoscaling setup cost-effective and performing well. Automated cost analysis and reporting help identify areas for improvement. Together, regular monitoring, strategic use of Spot Instances, and right-sized instance types ensure that your autoscaling deployment delivers both performance and cost efficiency.

Troubleshooting Common Autoscaling Issues and Best Practices for Autoscaling in AWS

Implementing autoscaling in AWS can present challenges:

- Scaling can lag behind demand when instances take a long time to boot and warm up, when scaling policies are misconfigured, or when EC2 lacks capacity for the requested instance type in an Availability Zone.
- Errors can arise from incorrect launch templates or launch configurations, faulty health checks, or missing IAM permissions.
- Unexpected costs often stem from over-provisioning, inefficient instance types, or not using Spot Instances where the workload allows.

Careful planning and proactive monitoring mitigate these risks. Review scaling policies regularly so they keep up with changing application demands, and test new policies in a non-production environment before deploying them to production. CloudWatch metrics help identify the root causes of issues and guide timely adjustments. Understanding the different policy types, such as step scaling and target tracking, helps you fine-tune the result.

Troubleshooting autoscaling in AWS calls for a systematic approach:

1. Start with the group's scaling activity history, which records each launch and termination along with the reason for any failure. Then check CloudWatch metrics and logs for related clues.
2. Check the health status of the instances in the Auto Scaling group.
3. Confirm that the launch template reflects current application requirements and that the scaling policies are configured as intended.
4. For permissions problems, review the IAM roles and policies used by the group and its instances.

CloudWatch alarms help detect anomalies before they become incidents. Automation also plays a vital role in preventing and resolving problems. Automated health checks and instance replacement minimize downtime, and tooling that manages Auto Scaling groups reduces manual intervention. Autoscaling in AWS is designed for high availability; however, diligent planning is still essential for continuous operation with minimal disruption.
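Scaling activity history is available through `describe_scaling_activities` on the boto3 `autoscaling` client. The helper below is a sketch that works on an already-fetched response dictionary. It pulls out activities whose `StatusCode` is `Failed` or `Cancelled`, along with their `StatusMessage`, which usually explains what went wrong. Pagination of the response is left out for brevity.

```python
# Sketch: filter a describe_scaling_activities response for problems.
# Call boto3.client("autoscaling").describe_scaling_activities(AutoScalingGroupName=...)
# and pass the resulting dict in; pagination is not handled here.


def failed_activities(response: dict) -> list[dict]:
    """Return failed or cancelled activities from a describe_scaling_activities response."""
    return [
        {
            "start": activity.get("StartTime"),
            "description": activity.get("Description", ""),
            "reason": activity.get("StatusMessage", ""),
        }
        for activity in response.get("Activities", [])
        if activity.get("StatusCode") in ("Failed", "Cancelled")
    ]
```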

Best practices for autoscaling in AWS start with a resilient architecture:

- Spread instances across multiple Availability Zones, which are isolated locations within a Region, so the failure of one zone does not take down the application.
- Configure robust health checks to detect and replace unhealthy instances promptly.
- Use Elastic Load Balancing, such as an Application Load Balancer, to distribute traffic efficiently.
- Review and optimize scaling policies regularly based on historical data and changing application needs.
- Consider predictive scaling for workloads with recurring demand patterns.
- Audit and refine your cost optimization strategy regularly, and use Spot Instances where the workload can tolerate interruptions.

Applied together, these practices produce a smooth and cost-effective autoscaling experience. Effective autoscaling in AWS nevertheless remains an iterative process of continuous monitoring, adjustment, and optimization.
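As a sketch of auditing the multi-AZ practice, the function below inspects one entry of the `AutoScalingGroups` list returned by `describe_auto_scaling_groups` on the boto3 `autoscaling` client. It counts healthy, in-service instances per Availability Zone and flags groups that are not spread across at least two zones. The two-zone minimum is an assumption; pick whatever your availability target requires.

```python
from collections import Counter

# Sketch: check Availability Zone spread for one Auto Scaling group description.


def az_spread_report(group: dict) -> tuple[dict[str, int], list[str]]:
    """Count healthy InService instances per AZ and list spread problems."""
    healthy = [
        i for i in group.get("Instances", [])
        if i.get("HealthStatus") == "Healthy" and i.get("LifecycleState") == "InService"
    ]
    per_az = Counter(i["AvailabilityZone"] for i in healthy)
    problems = []
    if len(group.get("AvailabilityZones", [])) < 2:
        problems.append("group is configured for fewer than two Availability Zones")
    if len(per_az) < 2:
        problems.append("healthy instances are not spread across at least two zones")
    return dict(per_az), problems
```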