How to Leverage Python for Database Interactions

The ability to interact with databases is fundamental for Python applications that need persistent data storage. Flat files quickly become cumbersome and inefficient for large datasets or data with complex relationships; databases instead offer structured ways to store, retrieve, and manage data, with controlled access to it. That matters for applications ranging from web services to analytical platforms: without a database, an application struggles with data persistence and management, which ultimately limits what it can do and how far it can scale. This article surveys the main options for using databases in Python and offers a roadmap for choosing and implementing the one that fits a project's needs. Combined with the right database, Python provides a versatile set of tools for handling data effectively.

The decision to use a database instead of flat files is usually driven by the need for structured data management, data integrity, and efficient querying. Flat files may suffice for very small, simple datasets, but they become limiting as data grows and requirements become more complex. Databases provide features such as indexing, schema constraints that support normalized designs, and transaction management, none of which plain files offer, and these improve both performance and reliability. Many database systems also include tools for backups, security, and access control, which protect data from unauthorized access or accidental loss. Python's database libraries let developers work with this data using familiar programming idioms, so they can focus on application logic rather than low-level storage concerns. This approach to data management forms the basis for sustainable application development.

Exploring Relational Database Systems with Python

Relational databases are foundational in data management: they structure information into tables made of rows (records) and columns (attributes), and they use schemas that define the relationships between tables, which keeps data consistent. Common relational systems include SQLite, PostgreSQL, and MySQL. SQLite is serverless and is often embedded directly into applications, making it a popular choice for lightweight projects or applications that need a local data store. PostgreSQL stands out for its advanced features, robust performance, and strong support for complex data types, which makes it suitable for large-scale applications. MySQL, another popular choice, is valued for its speed and reliability. Python talks to each of these through a dedicated driver library: the `sqlite3` module ships with Python's standard library, `psycopg2` connects Python applications to PostgreSQL servers, and `mysql-connector-python` does the same for MySQL. Each driver handles the connection and the low-level communication with the database; you still write the SQL yourself. The right choice depends on the project's scale, complexity, and performance requirements: SQLite suits prototypes and smaller applications that need no database administration, whereas PostgreSQL and MySQL are the usual choices for server-based production systems with many concurrent users.
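All three drivers implement Python's DB-API 2.0 interface (PEP 249), so code written against that interface looks much the same whichever database is behind it; what changes is the `connect()` call and the placeholder style. As a minimal sketch, the hypothetical helper `fetch_rows` below uses only the shared cursor methods and is exercised here with an in-memory SQLite database.

```python
from __future__ import annotations

import sqlite3
from typing import Any, Optional, Sequence


def fetch_rows(conn: Any, sql: str, params: Optional[Sequence[Any]] = None) -> list:
    """Run a query on any DB-API 2.0 connection and return every row.

    Only cursor(), execute(), fetchall() and close() are used, so the same
    helper works with sqlite3, psycopg2 or mysql-connector-python. The caller
    still writes the SQL, using the placeholder style of its driver.
    """
    cur = conn.cursor()
    try:
        if params is None:
            cur.execute(sql)
        else:
            cur.execute(sql, params)
        return cur.fetchall()
    finally:
        cur.close()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE langs (name TEXT, year INTEGER)")
    conn.executemany("INSERT INTO langs VALUES (?, ?)", [("Python", 1991), ("SQL", 1974)])
    # sqlite3 uses "?" placeholders; psycopg2 and mysql-connector-python use "%s".
    print(fetch_rows(conn, "SELECT name FROM langs WHERE year < ?", (1990,)))
    conn.close()
```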

Each of these systems has its own strengths and weaknesses. SQLite's simplicity and single-file storage make it ideal when portability matters more than a full database server, but it lacks the user management and network access of server-based systems and allows only one writer at a time. PostgreSQL handles complex queries efficiently and supports advanced data types and extensions that are valuable for complex data models, though it has a steeper learning curve. MySQL is known for its speed and reliability and has a large community with extensive resources; however, some advanced features are less developed than their PostgreSQL counterparts, and paid editions or support can add cost for larger deployments. With `sqlite3`, `psycopg2`, and `mysql-connector-python`, connecting, executing SQL queries, and retrieving results all take minimal configuration. Understanding these differences is key to choosing a relational database that aligns with your project goals and resource constraints, and to keeping performance and maintenance manageable in the long run.

Working with NoSQL Databases using Python

Data management extends beyond relational databases: NoSQL databases offer flexible alternatives for diverse application needs. NoSQL ("not only SQL") covers several data models, each suited to specific use cases, including document databases, key-value stores, and graph databases. Unlike relational databases, which store data in tables with predefined schemas, most NoSQL databases do not enforce a fixed schema, allowing more dynamic and adaptable data structures. This flexibility is particularly useful for large volumes of unstructured or semi-structured data, or when rapid development requires frequent schema changes. NoSQL databases often excel when horizontal scalability and high availability are paramount, or when data does not fit neatly into tables; the choice between relational and NoSQL therefore hinges on a project's specific requirements. For Python developers, dedicated libraries and drivers make integrating with these systems straightforward.

Document databases, such as MongoDB, store data as JSON-like documents, which suits applications whose data is hierarchical or lacks a fixed schema. The `pymongo` library lets Python developers perform CRUD (Create, Read, Update, Delete) operations on MongoDB. Key-value stores, such as Redis, are designed for fast retrieval by unique key, making them ideal for caching and session management; the `redis` package (redis-py) is the usual Python client, and the exact operations available vary from store to store. Graph databases are optimized for data with complex relationships and are often used for social network analysis and recommendation engines; the official `neo4j` driver connects Python applications to Neo4j. Each option has its own benefits and trade-offs, so the choice depends on the project's requirements, performance expectations, and the nature of the data.
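To make the caching use case concrete, here is a minimal cache-aside sketch. It assumes a redis-py client (`redis.Redis`, installed with `pip install redis`), whose `get` and `set(..., ex=seconds)` methods it calls; the function name, the `user:<id>` key format, and the `load_from_db` callback are hypothetical. Because the client is passed in, the function itself does not import `redis`.

```python
import json
from typing import Any, Callable


def get_user_cached(client: Any, user_id: int,
                    load_from_db: Callable[[int], dict],
                    ttl_seconds: int = 300) -> dict:
    """Cache-aside lookup: try the key-value store first, fall back to the database."""
    key = f"user:{user_id}"  # hypothetical key naming scheme
    cached = client.get(key)  # redis-py returns bytes, or None on a miss
    if cached is not None:
        return json.loads(cached)
    user = load_from_db(user_id)
    # Store the value as JSON with an expiry so stale entries eventually disappear.
    client.set(key, json.dumps(user), ex=ttl_seconds)
    return user
```

With redis-py, a call might look like `get_user_cached(redis.Redis(host="localhost", port=6379), 42, load_user)`, where `load_user` is whatever function reads the user from your primary database.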

The main advantages of NoSQL databases are flexibility in handling schema changes and the ability to scale horizontally to very large amounts of data. When an application needs a flexible schema or must handle high-velocity, high-volume, semi-structured data, a NoSQL database is worth considering, in contrast to the more rigid structure of a relational database. For Python developers, the right choice depends on how much these features matter. `pymongo`, for example, maps MongoDB's JSON-like documents onto Python dictionaries, which fits the flexible nature of document databases, and other libraries play a similar role for other NoSQL models. Used well, these tools help in building modern, scalable, high-performance applications that must adapt quickly.

Integrating SQLite for Lightweight Data Storage in Python Projects

SQLite is a practical, highly accessible option for Python projects that need local data storage. Because it stores the entire database in a single file, it simplifies deployment and needs no separate database server, which is especially useful for small applications, prototypes, or situations where a full database system would be overkill. Python's standard library includes the `sqlite3` module, so no external installation is required. To get started, call the `sqlite3.connect()` function with the path to a database file; the file is created if it does not exist, and the function returns a connection object used for all subsequent operations. Passing the special name `":memory:"`, as in `sqlite3.connect(":memory:")`, instead creates a temporary database that lives only in memory and disappears when the connection closes, which is useful for development, testing, and learning.
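A minimal sketch contrasting the two ways of opening a database: a file on disk, which keeps committed data between connections, and `":memory:"`, which starts empty for each connection. The file name `app.db` is hypothetical and is placed in a temporary directory so the example cleans up after itself.

```python
import os
import sqlite3
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "app.db")  # hypothetical file name

    # connect() creates the file if it does not exist yet.
    file_conn = sqlite3.connect(path)
    file_conn.execute("CREATE TABLE notes (body TEXT)")
    file_conn.execute("INSERT INTO notes VALUES ('persisted')")
    file_conn.commit()
    file_conn.close()

    # Reopening the same file sees the committed data.
    reopened = sqlite3.connect(path)
    file_rows = reopened.execute("SELECT body FROM notes").fetchall()
    reopened.close()

# ":memory:" gives a private, temporary database that vanishes on close().
mem_conn = sqlite3.connect(":memory:")
mem_conn.execute("CREATE TABLE scratch (x INTEGER)")
mem_tables = mem_conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table'"
).fetchall()
mem_conn.close()

print(file_rows, mem_tables)
```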

Once connected, you create a cursor with `connection.cursor()` and use it to execute SQL statements: creating tables, inserting, querying, updating, and deleting records. For example, `cursor.execute()` with a `CREATE TABLE` statement creates a table, and `cursor.execute()` with an `INSERT INTO` statement adds data. Always pass values as parameters (`sqlite3` uses `?` placeholders) rather than formatting them into the SQL string; this prevents SQL injection. After a `SELECT` statement, retrieve results with `cursor.fetchone()` for a single row, `cursor.fetchmany(size)` for up to a given number of rows, or `cursor.fetchall()` for all remaining rows. Changes are not saved until you call `connection.commit()`. Finally, release resources by calling `connection.close()` when the connection is no longer needed; note that `close()` does not commit pending changes.
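The sketch below walks through the cursor methods named above on a throwaway in-memory database: parameterized inserts with `?` placeholders, the three fetch methods, and what happens to a change that is never committed. The `products` table is invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cursor = conn.cursor()
cursor.execute("CREATE TABLE products (name TEXT, price REAL)")

# Parameterized inserts: values are bound to the ? placeholders, never spliced into SQL.
items = [("pen", 1.5), ("notebook", 3.0), ("stapler", 7.25)]
cursor.executemany("INSERT INTO products (name, price) VALUES (?, ?)", items)
conn.commit()  # make the three rows permanent

cursor.execute("SELECT name, price FROM products ORDER BY price")
cheapest = cursor.fetchone()     # ('pen', 1.5)
next_two = cursor.fetchmany(2)   # the following two rows
remaining = cursor.fetchall()    # nothing left: []

# An uncommitted change can be discarded with rollback().
cursor.execute("DELETE FROM products WHERE name = ?", ("pen",))
conn.rollback()
cursor.execute("SELECT COUNT(*) FROM products")
count_after_rollback = cursor.fetchone()[0]  # still 3

cursor.close()
conn.close()
```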

The following code example shows how to create a database, insert data, and then query that data.
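This is a minimal sketch, assuming a hypothetical `employees.db` file and `employees` table. It wraps the connection in `contextlib.closing()` so the connection is always closed, and uses `with conn:` for the writes, which in `sqlite3` commits on success and rolls back on an exception but does not close the connection. The `__main__` block passes `":memory:"` so repeated runs start from a clean database.

```python
import sqlite3
from contextlib import closing


def main(db_path: str = "employees.db") -> list:  # hypothetical file name
    # closing() guarantees conn.close(); `with conn` alone only commits or rolls back.
    with closing(sqlite3.connect(db_path)) as conn:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                """CREATE TABLE IF NOT EXISTS employees (
                       id INTEGER PRIMARY KEY,
                       name TEXT NOT NULL,
                       department TEXT NOT NULL
                   )"""
            )
            conn.executemany(
                "INSERT INTO employees (name, department) VALUES (?, ?)",
                [("Ada Lovelace", "Engineering"), ("Grace Hopper", "Research")],
            )

        cursor = conn.execute(
            "SELECT name FROM employees WHERE department = ? ORDER BY name",
            ("Engineering",),
        )
        return cursor.fetchall()


if __name__ == "__main__":
    for (name,) in main(":memory:"):
        print(name)
```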

Connecting Python Applications to PostgreSQL Databases

PostgreSQL is a robust choice for managing complex datasets, and the `psycopg2` library makes it accessible from Python by handling communication between Python code and a PostgreSQL server. To connect, you supply parameters such as the database name, user, password, host, and port, typically as a connection string like `dbname='your_database' user='your_user' password='your_password' host='localhost' port='5432'`. Passing this string to `psycopg2.connect()` returns a connection object. From the connection you create a cursor to submit queries, fetch results, and commit changes. For example, to select all rows from a table named `employees`, you would call `cursor.execute("SELECT * FROM employees")` and then fetch the results one row at a time with `cursor.fetchone()` or all at once with `cursor.fetchall()`.
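The connection string above is a libpq keyword/value string. The sketch below builds one from parameters and uses it to run the `SELECT`; `build_dsn` and `fetch_all_employees` are hypothetical helper names, and the `employees` table and credentials are placeholders. `psycopg2` (installed with `pip install psycopg2-binary` or `psycopg2`) is imported inside the function so the module can be loaded, and `build_dsn` tested, without a driver or server.

```python
from __future__ import annotations

from typing import Any


def build_dsn(dbname: str, user: str, password: str,
              host: str = "localhost", port: int = 5432) -> str:
    """Build a libpq keyword/value connection string (hypothetical helper)."""

    def quote(value: Any) -> str:
        # libpq rules: wrap the value in single quotes and escape any
        # backslash or single quote inside it with a backslash.
        text = str(value).replace("\\", "\\\\").replace("'", "\\'")
        return f"'{text}'"

    params = {"dbname": dbname, "user": user, "password": password,
              "host": host, "port": port}
    return " ".join(f"{key}={quote(value)}" for key, value in params.items())


def fetch_all_employees(dsn: str) -> list[tuple]:
    """Return every row of the (assumed) employees table as a list of tuples."""
    import psycopg2  # imported here so build_dsn works without the driver installed

    conn = psycopg2.connect(dsn)
    try:
        # Leaving this block closes the cursor; the connection is closed in finally.
        with conn.cursor() as cur:
            cur.execute("SELECT * FROM employees")
            return cur.fetchall()
    finally:
        conn.close()
```

`psycopg2.connect()` also accepts the same parameters as keyword arguments, for example `psycopg2.connect(dbname="your_database", user="your_user", password="your_password", host="localhost", port=5432)`, which sidesteps the quoting rules entirely.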

Query results come back as ordinary Python data structures: by default each row is a tuple, and `fetchall()` returns a list of tuples, which Python code can process directly. Inserting, updating, and deleting data work the same way, through SQL statements executed via the cursor. To insert a new employee into the `employees` table, for example, you might call `cursor.execute("INSERT INTO employees (name, department) VALUES (%s, %s)", ("John Doe", "Engineering"))` followed by `conn.commit()` to persist the change. Always pass values through placeholders, such as `%s` above, rather than string interpolation; this prevents SQL injection and makes your code more secure and robust. Connection management matters just as much: close connections after use and handle exceptions so that failures do not leak resources or leave connections in a bad state. A `try`/`finally` block that calls `conn.close()` works well. Context managers help too, but note what they do in `psycopg2`: `with conn.cursor() as cur:` closes the cursor when the block exits, and `with conn:` commits the transaction on success or rolls it back on an error, but it does not close the connection.
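Putting the insert, the placeholders, and the connection handling together, a minimal sketch might look like the following. `add_employee` and `main` are hypothetical names, and `add_employee` takes an already-open connection so it can be reused; the `with` blocks follow the `psycopg2` behavior described above, so closing the connection stays the caller's job.

```python
from __future__ import annotations


def add_employee(conn, name: str, department: str) -> None:
    """Insert one row into employees using psycopg2's %s placeholders."""
    # `with conn:` commits if the block succeeds and rolls back if it raises;
    # in psycopg2 it does not close the connection.
    with conn:
        # The cursor is closed automatically when this block exits.
        with conn.cursor() as cur:
            # Values go in the second argument; psycopg2 quotes and escapes them,
            # so never build this SQL string with f-strings or the % operator.
            cur.execute(
                "INSERT INTO employees (name, department) VALUES (%s, %s)",
                (name, department),
            )


def main(dsn: str) -> None:
    import psycopg2  # requires the psycopg2 (or psycopg2-binary) package

    conn = psycopg2.connect(dsn)
    try:
        add_employee(conn, "John Doe", "Engineering")
    finally:
        conn.close()  # release the server connection even if the insert failed
```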

Practical applications include a web application that stores user data, a data processing pipeline, or a backend for a mobile app: anywhere relational data must be managed efficiently. Combining Python's versatility with PostgreSQL's power through `psycopg2` is a solid foundation for most backends and for dynamic, database-driven applications that handle significant data volumes. Whatever the application, careful handling of data and connections is what makes it robust and dependable.

Using MongoDB with Python: A Practical Example

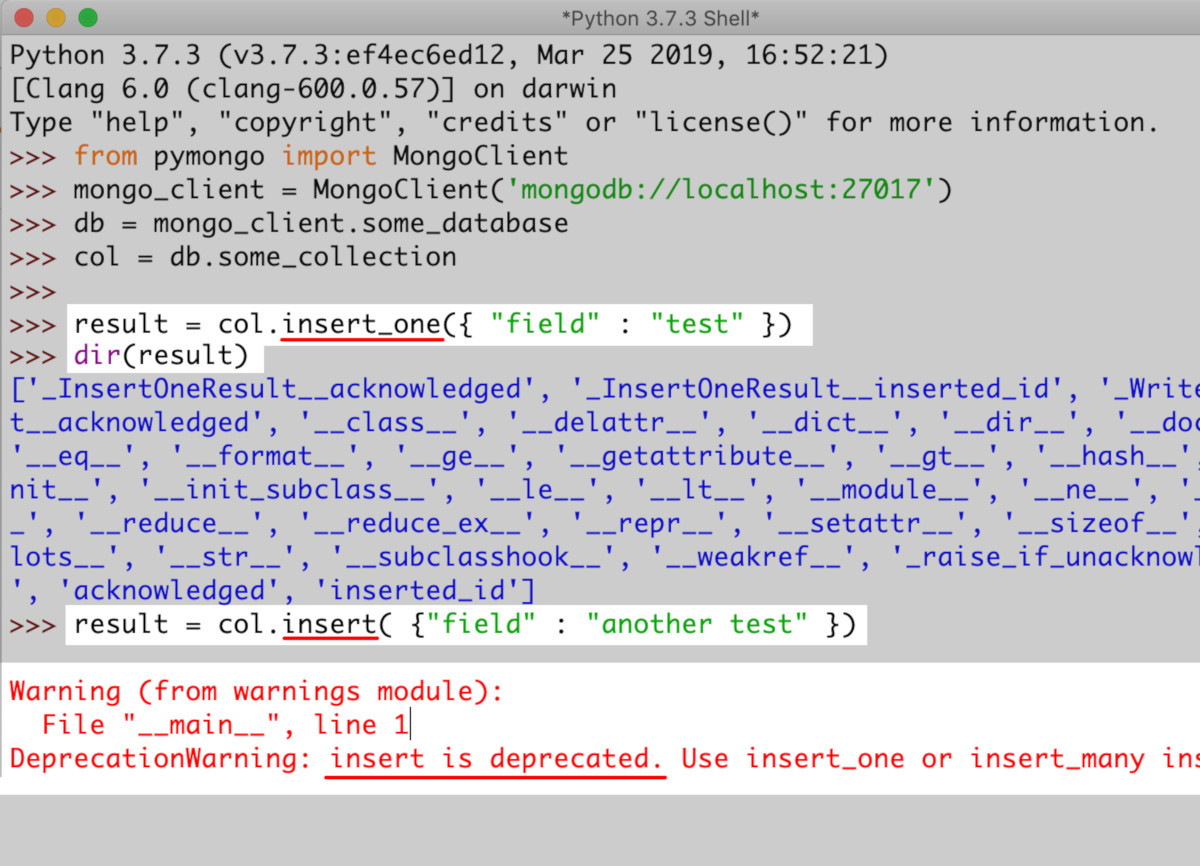

Integrating MongoDB with a Python project offers a flexible way to manage data, particularly unstructured or semi-structured information. The `pymongo` driver handles communication with MongoDB; install it with `pip install pymongo`. Connecting typically means passing a MongoDB connection URI to `pymongo.MongoClient`. Once connected, you select a database by name, and within it a collection, MongoDB's rough equivalent of a relational table. Neither has to be created explicitly: MongoDB creates a database or collection when the first document is inserted into it. Documents are represented as Python dictionaries and passed to a collection's `insert_one` or `insert_many` method. Because documents in the same collection need not share a structure, this approach copes well with diverse datasets, which has made MongoDB a popular choice among Python developers.
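A minimal sketch of connecting and inserting. The URI `mongodb://localhost:27017` (a default local server), the `company` database, the `employees` collection, and the helper names are assumptions for illustration. `pymongo` is imported inside `open_collection` so the insert helper can be read and tested without a running server; it accepts any collection object, such as the one `open_collection` returns.

```python
from __future__ import annotations


def open_collection(uri: str, db_name: str, coll_name: str):
    """Return (client, collection). Call client.close() when finished."""
    from pymongo import MongoClient  # requires `pip install pymongo`

    client = MongoClient(uri)
    # Selecting a database or collection is just indexing by name;
    # MongoDB creates both when the first document is inserted.
    return client, client[db_name][coll_name]


def add_sample_employees(collection) -> list:
    """Insert example documents and return their _id values."""
    # Documents are plain dicts, and they need not share the same fields.
    first = collection.insert_one({"name": "Ada", "department": "Engineering"})
    more = collection.insert_many([
        {"name": "Grace", "department": "Research", "languages": ["COBOL"]},
        {"name": "Linus", "department": "Engineering", "age": 30},
    ])
    return [first.inserted_id, *more.inserted_ids]
```

With a local server running, usage might be `client, employees = open_collection("mongodb://localhost:27017", "company", "employees")`, then `add_sample_employees(employees)`, and finally `client.close()`.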

Querying MongoDB through `pymongo` works by passing query documents: dictionaries that specify search criteria, from a match on a single field to combinations of several conditions. The `find` method returns a cursor that you iterate over to read the results. Updates use `update_one` or `update_many`, which select documents with a filter and modify them with an update operator such as `$set`. Adding a new field to every document in a collection, for instance, is a single `update_many` call with an empty filter (`{}`). This ease of handling varied and evolving data makes document databases a popular choice for data scientists and for applications whose data changes shape quickly. Typical use cases include complex data models, real-time analytics, content management systems, and projects that need to scale horizontally.
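The following sketch shows the query and update patterns just described. The helper names are hypothetical, and the `employees` documents are assumed to carry `name`, `department`, and `age` fields; `$gte` and `$set` are standard MongoDB query and update operators. Each function takes a `pymongo` collection.

```python
from __future__ import annotations


def engineers_at_least(collection, min_age: int) -> list[dict]:
    # Query document: both conditions must match (an implicit AND).
    query = {"department": "Engineering", "age": {"$gte": min_age}}
    # find() returns a lazy cursor; list() iterates it to the end.
    return list(collection.find(query))


def move_employee(collection, name: str, new_department: str) -> int:
    # update_one modifies at most one matching document.
    result = collection.update_one({"name": name}, {"$set": {"department": new_department}})
    return result.modified_count


def add_field_everywhere(collection, field: str, value) -> int:
    # An empty filter {} matches every document in the collection.
    result = collection.update_many({}, {"$set": {field: value}})
    return result.modified_count
```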

`pymongo` also gives fine-grained control over more advanced data handling. Because MongoDB documents can contain nested data, that data maps naturally onto nested Python dictionaries and can be queried with dot notation (for example, `"address.city"`). MongoDB also supports indexes on fields, including nested ones, which speed up queries and keep data retrieval efficient as collections grow; in `pymongo` an index is created with a collection's `create_index` method. Together, these features make MongoDB with Python a powerful and adaptable solution for many use cases.
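A small sketch of nested data and indexing, assuming documents shaped like `{"name": "Ada", "address": {"city": "London"}}`; the function names are hypothetical. `create_index` takes a list of `(field, direction)` pairs, where `1` is ascending (the value of `pymongo.ASCENDING`), and returns the index name.

```python
from __future__ import annotations


def ensure_city_index(collection) -> str:
    # Creating an index that already exists with the same spec is a no-op,
    # so this is safe to call at application start-up.
    return collection.create_index([("address.city", 1)])


def find_by_city(collection, city: str) -> list[dict]:
    # Dot notation matches a field inside a nested (embedded) document.
    return list(collection.find({"address.city": city}))
```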

Choosing the Right Database for Your Python Project

Choosing a database is a critical decision that significantly affects a Python project's performance, scalability, and maintainability, so it deserves careful evaluation. The first consideration is the nature of the data. If it is structured and fits naturally into tables with well-defined relationships, a relational database such as SQLite, PostgreSQL, or MySQL is likely the best fit, since these systems excel at consistent schemas and complex relationships. SQLite, being lightweight and file-based, is excellent for smaller applications and local storage, and it is an easy starting point. If the application will need to scale or serve many concurrent users, PostgreSQL and MySQL offer more robust solutions. When data is less structured or changes often, or when high scalability and flexibility are paramount, a document-oriented NoSQL database such as MongoDB is a solid option, because its flexible schema suits rapidly evolving projects. The volume and velocity of the data, and the kinds of queries the application will run, should also weigh heavily in the decision.

Scalability requirements also play a major role. For projects that need horizontal scaling to handle a massive influx of data or users, NoSQL databases or managed cloud database services may be better options. Projects that start small but expect growth should account for the cost of migrating to a different database later. Budget and available resources matter too: SQLite is free and needs minimal setup, making it very accessible for small projects and prototypes; PostgreSQL and MySQL are also open source but require more setup and maintenance; MongoDB offers a free Community edition as well as commercial offerings, including the managed MongoDB Atlas service. The team's experience with relational or NoSQL databases also counts, because time spent learning a new technology affects the budget and timeline. Finally, consider the long-term implications: will the project need advanced features such as transaction management, complex queries, or full-text search? Some database types support these better than others, so each project's needs must be evaluated before settling on a solution.

In summary, start by evaluating the type of data and how it will be structured and accessed. Then consider the scale of the project and how it is expected to grow, both in data volume and in number of users. Budget, the team's existing skills, and long-term maintenance plans complete the picture. Relational databases excel with structured data and complex relationships, while NoSQL databases are more flexible with less structured data and are generally easier to scale horizontally. Weighing these trade-offs, and asking the right questions about data structure, expected growth, and required features, will lead to a database that meets your Python project's needs.

Python Database Libraries and Best Practices

This section consolidates the best practices for working with the database libraries covered in this article, notably `sqlite3`, `psycopg2`, and `pymongo`. The right library depends on the database you are using, and using it correctly is paramount. Manage connections carefully to avoid resource leaks and connection exhaustion, especially in high-traffic applications: always close connections you no longer need, and use connection pooling where it is available (for example, the `psycopg2.pool` module) to reuse existing connections instead of paying the cost of opening new ones. Guard against SQL injection whenever user-supplied data reaches a query by using the parameterized queries your driver provides, which ensure that input is treated as data rather than as executable SQL. Finally, validate input before it is stored, both in Python before executing the query and through database constraints, so that incorrect or malicious data cannot compromise data integrity.
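The difference between string formatting and parameterized queries is easiest to see side by side. The sketch below uses `sqlite3` and an invented `users` table; the same principle applies to `psycopg2` and `mysql-connector-python` with their `%s` placeholders.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 0), ("root", 1)])

malicious = "nobody' OR '1'='1"  # attacker-controlled input

# UNSAFE: the input becomes part of the SQL text, so its OR clause executes
# and the query matches every row.
unsafe_sql = f"SELECT name FROM users WHERE name = '{malicious}'"
leaked = conn.execute(unsafe_sql).fetchall()

# SAFE: the driver binds the input as a value, compared as a literal string.
safe = conn.execute("SELECT name FROM users WHERE name = ?", (malicious,)).fetchall()

conn.close()
print(leaked, safe)
```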

Database administration tools, such as DB Browser for SQLite, pgAdmin for PostgreSQL, or MongoDB Compass, can also improve your workflow by providing a visual interface for viewing, managing, and querying data beyond what the command line offers. Object-Relational Mappers (ORMs) such as SQLAlchemy simplify database work further by letting you treat tables as Python classes and rows as objects. An ORM reduces the amount of raw SQL you write, which improves readability and maintainability, makes code more portable across relational databases, and further reduces the risk of SQL injection. An ORM is not always the right tool, though: very complex queries, or code where performance is the top priority, may be better served by hand-written SQL. Whichever approach you choose, keeping data access separate from business logic makes an application more flexible and easier to maintain. Pay attention to schema design, indexing strategy, and query optimization as well, particularly with large datasets. With these practices and the right libraries, you can use databases in Python to build robust, scalable, and secure applications.
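One lightweight way to keep data access apart from business logic, without an ORM, is a small repository class that owns all the SQL. The `Employee`, `EmployeeRepository`, and `promote` names below are hypothetical, shown with `sqlite3`; with SQLAlchemy the repository methods would use sessions and mapped classes instead, while the calling code could stay the same.

```python
from __future__ import annotations

import sqlite3
from dataclasses import dataclass


@dataclass
class Employee:
    id: int
    name: str
    title: str


class EmployeeRepository:
    """All SQL for the employees table lives here (hypothetical example)."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS employees "
            "(id INTEGER PRIMARY KEY, name TEXT NOT NULL, title TEXT NOT NULL)"
        )

    def add(self, name: str, title: str) -> int:
        with self.conn:  # commit on success, roll back on error
            cur = self.conn.execute(
                "INSERT INTO employees (name, title) VALUES (?, ?)", (name, title)
            )
        return cur.lastrowid

    def get(self, employee_id: int) -> Employee | None:
        row = self.conn.execute(
            "SELECT id, name, title FROM employees WHERE id = ?", (employee_id,)
        ).fetchone()
        return Employee(*row) if row else None

    def save(self, employee: Employee) -> None:
        with self.conn:
            self.conn.execute(
                "UPDATE employees SET name = ?, title = ? WHERE id = ?",
                (employee.name, employee.title, employee.id),
            )


def promote(repo: EmployeeRepository, employee_id: int, new_title: str) -> Employee:
    """Business rule with no SQL in it: only the repository touches the database."""
    employee = repo.get(employee_id)
    if employee is None:
        raise LookupError(f"no employee with id {employee_id}")
    employee.title = new_title
    repo.save(employee)
    return employee
```

Because `promote` depends only on the repository's methods, a test can hand it a repository backed by `sqlite3.connect(":memory:")`, and switching to another database later means rewriting the repository rather than the business rules.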