Understanding Azure Network Performance: Minimizing Inter-Region Delays

The speed at which data travels between Azure regions directly affects application performance and user experience. Several factors contribute to latency between Azure regions, and the largest is physical distance: packets must cross long fiber routes, and the mileage between data centers sets a floor on delay that no configuration can remove. On top of that floor comes the state of the network path, including fiber quality, the efficiency of routing hardware, and congestion at intermediate nodes. Azure’s routing policies, which govern how packets are directed across the network, also matter. These policies aim to optimize traffic flow, but the most efficient route is not always the shortest one geographically. Inter-region latency is therefore a combination of physical limits and engineering choices.
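To make the distance floor concrete, the following minimal sketch estimates the lowest possible round-trip time between two locations from propagation delay alone. It assumes light travels through fiber at roughly 200,000 km/s (about two thirds of its speed in a vacuum) and that the cable follows the great-circle path, which real routes never do exactly. The coordinates are approximate city locations chosen for illustration, not datacenter sites.

```python
import math

# Assumption: light in optical fiber covers roughly 200 km per millisecond
# (about two thirds of its speed in a vacuum).
FIBER_KM_PER_MS = 200.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in kilometres between two points on Earth."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Illustrative coordinates only (approximate city centres).
amsterdam = (52.37, 4.90)
dublin = (53.35, -6.26)
distance = great_circle_km(*amsterdam, *dublin)
print(f"distance ~ {distance:.0f} km, "
      f"RTT floor ~ {min_round_trip_ms(distance):.1f} ms")
```

Measured round-trip times will be higher than this floor, because real fiber paths are longer than the great-circle distance and every router along the way adds processing and queuing delay.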

A cloud deployment aims to keep latency low and data transfer fast. Understanding what drives latency between Azure regions is the first step toward that goal. The physical distance between data centers cannot be changed, but other parts of the network path can be tuned. The challenge is to limit the delays that accumulate along the path while balancing performance, cost, and resilience. A cloud architecture should therefore be designed to avoid bottlenecks so that data flows smoothly between regions. Microsoft provides tools and services that help offset the effects of distance; later sections of this article describe them.

Exploring the Concept of Azure Region Pairs and Data Transfer

Azure region pairs are a fundamental part of Microsoft’s cloud infrastructure, designed to support disaster recovery and business continuity. Many Azure regions are paired with another region, usually within the same geography, and the pair supports data replication and failover. The pairing is planned to reduce the risk of simultaneous outages, so that if one region has a problem its partner can take over with minimal disruption. This setup affects data synchronization. With inter-regional replication, data is copied from the primary region to its paired counterpart, often asynchronously. That keeps a copy available in both regions, but it also exposes replication to the latency between them. Paired regions usually sit in the same geography yet are deliberately placed far enough apart, typically hundreds of miles, that a single disaster is unlikely to affect both, and that distance slows the rate at which changes reach the secondary region. As a result, data does not become consistent across both regions instantly, which matters when designing applications that rely on cross-region synchronization. Accounting for this latency is necessary for meeting performance targets and recovery objectives.

Keeping data within region pairs offers clear advantages, particularly for businesses with strict compliance requirements or a strong focus on resilience. The design of region pairs simplifies replication and provides a predefined path for disaster recovery. However, relying only on a region pair is not always feasible. Regulatory requirements might demand that data reside in a particular location that does not match the predefined pairings. Very large enterprises might also combine region pairs with individual regions to serve different markets, to manage inter-region latency across the organization, or to achieve a particular network layout. Region pairs are a sensible default, but business needs can require other regions, which can increase latency between regions and make the architecture more complex. Understanding both the advantages of region pairs and the reasons for going beyond them is important for building a balanced Azure infrastructure strategy.

When replicating between Azure regions, developers and architects should treat inter-region latency as a tangible factor in performance. Even when the selected regions are paired, physical distance limits how fast replication can proceed, which makes region selection a key architectural decision. The impact is not limited to replication times; it also affects application behavior, particularly in active-active setups or wherever data must be updated consistently across regions. Assess these limits against the specific workload and its requirements before committing to a design.
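The effect on application behavior can be estimated with simple arithmetic. The sketch below assumes a simplified model in which a synchronously replicated write is acknowledged only after one full round trip to the remote region. The 2 ms local commit time and 70 ms round-trip time are made-up figures for illustration.

```python
def sync_write_ms(local_commit_ms, rtt_ms):
    """Commit time when the remote region must acknowledge the write first."""
    return local_commit_ms + rtt_ms


def max_sequential_writes_per_s(local_commit_ms, rtt_ms):
    """Upper bound on dependent, back-to-back writes issued by one client."""
    return 1000.0 / sync_write_ms(local_commit_ms, rtt_ms)


# Hypothetical figures: 2 ms local commit, 70 ms inter-region round trip.
local_ms, rtt = 2.0, 70.0
print(f"per-write latency: {sync_write_ms(local_ms, rtt):.1f} ms")
print(f"sequential writes/s: {max_sequential_writes_per_s(local_ms, rtt):.1f}")
```

Under this model the round trip dominates the cost of each write, which is one reason replication between regions is often asynchronous.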

How to Measure Azure Latency Between Regions Effectively

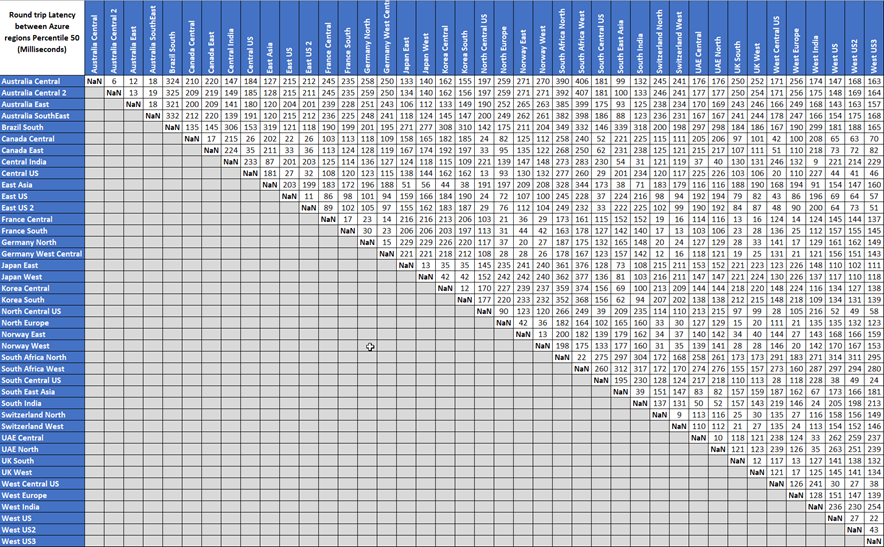

Accurately measuring latency between Azure regions is essential for optimizing application performance. Several methods and tools provide insight into network delays. Azure Network Watcher offers a range of diagnostic and monitoring capabilities, including network performance tests between Azure regions. Its connection monitor feature lets you set up recurring tests between endpoints and reports latency and packet loss, and the results can be visualized to pinpoint network issues. Command-line utilities such as ‘ping’ and ‘tracert’ are useful for basic checks. ‘ping’ measures round-trip time, while ‘tracert’ shows the route packets take and the delay at each hop, which can help locate bottlenecks in the path. Keep in mind that ICMP packets, which ‘ping’ uses, may be de-prioritized or blocked, which reduces the accuracy of the results or prevents the test entirely. For that reason, combine several tools to get a more complete picture of inter-region latency.
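Where ICMP is blocked, timing a TCP handshake is one common alternative. The following minimal sketch uses only the Python standard library, and the handshake time it reports approximates one network round trip plus connection setup overhead. The host name in the commented usage line is hypothetical; replace it with a VM or service you run in the remote region that listens on the chosen port.

```python
import socket
import statistics
import time


def tcp_connect_ms(host, port, timeout=3.0):
    """Time one TCP handshake to host:port, in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # connection established; close it immediately
    return (time.perf_counter() - start) * 1000.0


def sample_latency(host, port, samples=10, pause_s=0.2):
    """Collect several handshake timings and summarise them."""
    timings = []
    for _ in range(samples):
        timings.append(tcp_connect_ms(host, port))
        time.sleep(pause_s)
    return {
        "min_ms": min(timings),
        "median_ms": statistics.median(timings),
        "max_ms": max(timings),
    }


# Usage (hypothetical endpoint in the remote region):
# print(sample_latency("remote-vm.example.internal", 443))
```

The minimum is usually the best estimate of the path’s base latency, while the spread between the median and the maximum hints at jitter or congestion.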

Application performance monitoring (APM) solutions provide another perspective by tracking application performance in real time. They report application-level metrics, such as response times for operations that involve inter-regional communication, which shows the actual impact of network latency on user experience. Consistent and repeatable testing is essential. Run tests at various times of day and under different traffic loads to get a realistic picture of latency. Establish a baseline by testing during low traffic, then compare it with the latency observed during peak hours. A reliable baseline supports informed decisions about network design and application architecture, and regular measurements show the effect of any infrastructure changes or optimizations you make over time.
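A baseline comparison of this kind can be sketched in a few lines. The example below summarizes two sets of round-trip samples by median and 95th percentile and reports how much the median rose during the busy period. The sample values are made up for illustration; in practice they would come from whichever measurement tool you use.

```python
import statistics


def summarize(samples_ms):
    """Return the median and 95th percentile of latency samples."""
    # With n=20, quantiles() yields 19 cut points; the last is the 95th percentile.
    p95 = statistics.quantiles(samples_ms, n=20, method="inclusive")[-1]
    return {"median_ms": statistics.median(samples_ms), "p95_ms": p95}


def compare_to_baseline(baseline_ms, peak_ms):
    """Compare peak-hour samples against a low-traffic baseline."""
    base = summarize(baseline_ms)
    peak = summarize(peak_ms)
    increase = 100.0 * (peak["median_ms"] - base["median_ms"]) / base["median_ms"]
    return {"baseline": base, "peak": peak, "median_increase_pct": increase}


# Made-up sample values, for illustration only.
off_peak = [70.1, 70.4, 69.8, 70.0, 70.6, 70.2, 69.9, 70.3]
busy = [72.5, 75.0, 71.9, 80.2, 73.3, 74.1, 90.4, 72.8]
print(compare_to_baseline(off_peak, busy))
```

Tracking a high percentile alongside the median is a deliberate choice: occasional slow requests often affect user experience more than the typical case does.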

Strategies for Reducing Inter-Region Latency in Azure

Minimizing latency between Azure regions matters for applications that demand high performance and responsiveness, and several techniques can reduce data transfer delays. Proximity placement groups ensure that Azure resources are physically located close to each other within a region, reducing the distance data travels between them. They do not address inter-region latency directly, but they can be combined with other strategies. For environments that need dedicated and predictable connectivity, Azure ExpressRoute provides a private, high-bandwidth link between on-premises infrastructure and Azure datacenters. It bypasses the public internet, which typically means fewer network hops and more consistent latency for traffic between your own sites and Azure; traffic between Azure regions themselves already travels over Microsoft’s backbone network. For globally distributed traffic, Azure Front Door provides a global entry point for web applications. It directs end-user traffic to the closest available backend based on latency and health checks, improving response times. Content delivery networks (CDNs) accelerate delivery of static content in particular. By caching content on edge servers closer to users, a CDN shortens the distance data has to travel and removes the need for a cross-region request on every user interaction.

Planning network infrastructure deliberately is essential for lowering latency between regions. A well-planned architecture addresses current needs and remains scalable and adaptable to future demands. Consider the characteristics of your application and user base when deciding which strategies fit. Combining proximity placement groups, private connections via ExpressRoute, traffic routing via Azure Front Door, and a CDN gives a multi-faceted approach to latency reduction.

Choosing the right combination of strategies requires careful planning. Base the choice on the application’s sensitivity to latency, the geographic location of the user base, and the type of data being transmitted. Evaluate each method’s performance impact and cost before implementation. Continuous monitoring and testing are then needed to confirm that the measures keep working, so that latency problems can be identified and fixed before they affect users. Ultimately, low inter-region latency results from a well-architected solution that uses tools and methods suited to specific business requirements.

Analyzing Network Topology for Optimal Azure Performance

Optimizing latency between Azure regions also involves analyzing your network topology. The way your virtual networks, subnets, and network security groups (NSGs) are structured affects the path that traffic takes. Virtual networks are the foundation for your Azure resources, and their design can create bottlenecks if not carefully planned. By default Azure routes traffic between subnets of the same virtual network directly, so segmentation adds delay mainly when user-defined routes send traffic through an intermediate device such as a firewall or other network virtual appliance. Network security groups, while essential for security, should also be reviewed so that rules stay minimal and well organized. Every additional device a packet passes through adds to the time it takes to travel between Azure regions. Designing subnets and routes around anticipated traffic patterns therefore helps performance: for instance, letting resources that communicate frequently reach each other without a detour through an inspection point avoids unnecessary hops. Effective network segmentation is not only about security; it is also part of optimizing data transfer across your cloud environment.

The configuration of virtual network peering also influences latency between regions. Peering is not transitive, so a poorly designed layout can force traffic through several virtual networks, and the forwarding devices inside them, before it reaches its destination. From a topology perspective, traffic optimization means ensuring that traffic follows the most direct path with the fewest hops, which often means designing virtual networks to reflect the application architecture and its data flows. Matching the virtual network layout to the physical placement of Azure resources avoids unnecessary transfer delays. Analyzing traffic within the network also reveals the most heavily used paths, which can then be prioritized for optimization. Understanding your traffic patterns, combined with careful network design, is critical for lowering latency between Azure regions.
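One way to reason about path length is to model the peerings as a graph and look for the shortest chain of virtual networks between two workloads. The sketch below does this with a breadth-first search. All network names are made up, and the model is deliberately simplified: because virtual network peering is not transitive, each intermediate network in a chain implies a forwarding device (for example a network virtual appliance or gateway) that the code does not represent.

```python
from collections import deque


def peering_path(peerings, source, target):
    """Breadth-first search for the shortest chain of peered virtual networks."""
    neighbors = {}
    for a, b in peerings:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in sorted(neighbors.get(path[-1], ())):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no chain of peerings connects the two networks


# Hypothetical hub-and-spoke layout across two regions.
hub_and_spoke = [
    ("hub-weu", "app-weu"),
    ("hub-weu", "hub-eus"),
    ("hub-eus", "data-eus"),
]
print(peering_path(hub_and_spoke, "app-weu", "data-eus"))

# Adding a direct global peering removes both intermediate hubs from the path.
direct = hub_and_spoke + [("app-weu", "data-eus")]
print(peering_path(direct, "app-weu", "data-eus"))
```

A shorter chain does not change the physical distance between the regions, but it removes the forwarding devices that each add their own processing delay.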

Analyzing the network topology should be an ongoing process, not a one-time task. As application requirements change, reassess the network architecture to maintain performance. Proactive network management, coupled with traffic flow analysis, helps keep inter-region latency low without weakening network security. Regular reviews of virtual network layouts, subnet configurations, and NSG rules help identify bottlenecks before they affect performance. Treat network topology with the same importance as compute resources and applications.

Real-World Scenarios: Optimizing Azure Latency for Specific Workloads

In real-world scenarios, the right approach to latency between Azure regions depends on the workload. Consider a gaming platform that needs low latency for real-time interactions, where the slightest delay can disrupt the player experience. Deploying game servers in the Azure regions closest to the player base, with proximity placement groups for tightly coupled components, is essential. For HTTP-based services such as matchmaking or web APIs, Azure Front Door can route each player to the nearest healthy backend.

A second scenario is big data analytics, where data is often spread across Azure regions. Imagine a global organization with data lakes in multiple geographies. Cross-region queries can suffer from significant inter-region latency, which slows analysis. Options include replicating data within Azure region pairs and using Azure Data Factory to move data close to where it is processed. Where on-premises sources feed the data lakes, Azure ExpressRoute offers a dedicated connection with more predictable latency than public internet routes.

The third use case is geographically dispersed applications that demand high availability and low latency. A global e-commerce platform, for example, must be accessible and responsive to users worldwide. A multi-region active-active architecture suits this need, with Azure Traffic Manager or Azure Front Door distributing traffic. CDNs cache static content near end users, which further reduces the number of requests that must cross regions. These are only a few examples, but they show the importance of designing the architecture around the specific workload.

Financial trading platforms are another case, since milliseconds can represent a significant gain or loss. Low latency is paramount, so choosing Azure regions close to where market data feeds are located is critical. ExpressRoute can provide direct, private connections to these feeds, lowering latency and increasing network stability. Proximity placement groups further optimize the co-location of related resources within a data center; for example, databases and high-frequency compute resources should be placed in the same proximity placement group. The architecture should also include continuous monitoring and alerts so that the team can react quickly to fluctuations in network performance.

Finally, consider healthcare applications that require secure, low-latency data access, often to transfer patient data between hospitals and research facilities. The data should move within secure virtual networks, with appropriate tools for monitoring and testing, which is especially critical for real-time diagnostics. Region selection should satisfy compliance requirements first and, among the compliant regions, favor the one with the lowest latency.

Selecting the Right Azure Region for Performance and Compliance

Optimizing for performance requires more than minimizing latency between Azure regions. Geographic location strongly affects latency for end users, particularly for interactive applications. Deploying resources closer to the user base reduces the distance data must travel and improves responsiveness. However, the best region is not determined by proximity alone. Compliance requirements often dictate where data must reside, which can rule out the geographically closest region. Data sovereignty regulations, for example, mandate storage within specific geographic boundaries, which can increase latency if the best-performing region lies outside those boundaries. Balancing performance with compliance requires assessing both factors and usually involves trade-offs.

The nature of the application and the sensitivity of its data also matter. Applications that handle personal information, financial data, or healthcare records may face strict rules about where data can be stored and processed. These rules take priority over pure performance optimization, even when that increases latency. Choosing a region with robust infrastructure is also important for availability and resilience. A region with ample network capacity and redundancy reduces the risk of disruptions from congestion or unforeseen incidents, which would otherwise make application performance inconsistent and add unexpected latency.

Understanding the trade-offs between performance and compliance is essential. This involves evaluating latency metrics, compliance regulations, data sovereignty laws, and the specific requirements of the application. Minimizing latency between Azure regions is a key goal, but compliance and data security should never be compromised to achieve it. A well-architected solution considers all of these factors. Azure Traffic Manager can help: its performance routing method directs users to the endpoint with the lowest latency, and its geographic routing method can keep users from specific locations on endpoints in approved regions.
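The trade-off can be expressed as a constrained choice: filter the candidate regions by the compliance rule first, then pick the fastest of what remains. The following sketch illustrates that ordering. The latency figures and the residency rule are made up for illustration, and the function is a hypothetical helper, not part of any Azure SDK.

```python
def pick_region(latency_ms, allowed_regions):
    """Return the allowed region with the lowest measured latency, or None."""
    candidates = {
        region: ms for region, ms in latency_ms.items()
        if region in allowed_regions
    }
    if not candidates:
        return None  # no measured region satisfies the compliance rule
    return min(candidates, key=candidates.get)


# Made-up latency measurements from a user population to candidate regions.
measured = {"eastus": 18.0, "westeurope": 95.0, "northeurope": 102.0}

# Hypothetical residency rule: data must stay in these EU regions.
eu_only = {"westeurope", "northeurope"}

print(pick_region(measured, eu_only))
```

Applying the compliance filter before the latency comparison means a faster but non-compliant region can never be selected, whatever the measurements show.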

Monitoring and Maintaining Optimal Azure Inter-Region Network Performance

Keeping latency consistently low for applications that span multiple Azure regions requires diligent monitoring and proactive maintenance. It is an ongoing process of refinement, not a one-time setup. Initial steps such as selecting suitable regions and implementing proximity placement groups are only the start. Continuous monitoring is needed to detect performance degradation caused by changes in network traffic, infrastructure updates, or other unforeseen issues. It should measure raw latency and also identify bottlenecks that impede data transfer. Azure Network Watcher provides insight into network performance and can help pinpoint areas that need attention, while application performance monitoring (APM) solutions track latency from the application’s perspective. Regular, repeatable testing is also crucial: automated tests that simulate typical user traffic can reveal performance issues before they affect end users.
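An automated check of this kind can be as simple as comparing a rolling median against a baseline. The sketch below flags degradation only when the median of the most recent samples exceeds the baseline by a tolerance, so a single slow probe does not raise an alert. The class name, the 20% tolerance, and the window size are all assumptions chosen for illustration.

```python
from collections import deque
import statistics


class LatencyWatch:
    """Flag sustained latency increases relative to a fixed baseline."""

    def __init__(self, baseline_ms, tolerance_pct=20.0, window=5):
        self.threshold_ms = baseline_ms * (1 + tolerance_pct / 100.0)
        self.recent = deque(maxlen=window)

    def add(self, sample_ms):
        """Record a sample; return True if the rolling median is too high."""
        self.recent.append(sample_ms)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough samples yet to judge
        return statistics.median(self.recent) > self.threshold_ms


# Made-up probe results against a hypothetical 70 ms baseline.
watch = LatencyWatch(baseline_ms=70.0, tolerance_pct=20.0, window=3)
for sample in [70.0, 71.0, 100.0, 100.0]:
    print(sample, watch.add(sample))
```

Using the median over a window trades detection speed for fewer false alarms; a shorter window reacts faster but is more sensitive to isolated spikes.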

The key to sustaining performance is adaptability. As application requirements evolve, the underlying Azure infrastructure must change with them, which may involve adjusting virtual network configurations, subnet allocations, or network security group rules. As Azure services and capabilities expand, new options may become available; for example, new Azure regions may open closer to your users, or new connectivity options may appear. Reassess your Azure network topology regularly so that it stays aligned with business needs. When performance degradation is detected, a clear process for diagnosing and resolving the cause is essential. The fix might range from re-routing network traffic to re-architecting components of the application. Proactive maintenance, including patching and upgrading the network components you operate yourself, such as network virtual appliances, is important for stability and performance. In summary, consistent monitoring coupled with adaptable infrastructure management is crucial for long-term network performance.