Understanding the Need for EC2 Scaling

Efficient EC2 scaling is crucial for modern applications. Handling fluctuating workloads, ensuring high availability, and maintaining performance under pressure are the key challenges that a scaling strategy addresses. Consider an e-commerce platform facing traffic spikes during sales events, or game servers supporting a massive player base. Without robust scaling mechanisms, these applications can suffer performance degradation, leading to frustrated users and lost revenue. A properly designed scaling solution automatically adjusts the number of EC2 instances to match real-time demand, so the application keeps consistent performance and availability through surges in user activity.

Applications with predictable workloads can sometimes get by with manual EC2 scaling, but this approach becomes impractical when demand fluctuates significantly. A small company with a steady number of website visitors may find manual scaling sufficient. A major e-commerce platform that anticipates surges during promotional periods or holidays needs the agility of automated scaling. A key advantage of auto scaling is that it reacts to demand as it changes and, with scheduled or predictive policies, can add capacity ahead of expected peaks. Properly configured EC2 scaling both absorbs peak demand and releases capacity that is no longer needed, keeping costs in check. Automation also removes much of the overhead of manual scaling, freeing IT teams to focus on other critical tasks.

Automated EC2 scaling matters most when traffic spikes are unpredictable. Amazon EC2 Auto Scaling groups let an application absorb increased user activity and respond promptly to surges in demand, which prevents performance degradation and preserves the user experience. Well-designed scaling strategies keep capacity close to what the workload actually needs, avoiding over-provisioning and its cost. Businesses of all sizes can therefore handle fluctuating workloads efficiently and economically.

Exploring EC2 Scaling Options: Auto Scaling vs. Manual Scaling

Manual EC2 scaling means adjusting the number of EC2 instances by hand in response to observed workload. It suits smaller applications with predictable workloads, where scaling needs are infrequent and easy to anticipate. Its advantages are direct control over resource allocation and potentially lower costs when managed carefully. However, manual scaling is time-consuming, prone to human error, and slow to respond to sudden spikes in demand, which can cause performance degradation or outages during unexpectedly high traffic. It also requires constant monitoring and intervention, making it a poor fit for applications that need continuous high availability.

Automated EC2 scaling, achieved with Auto Scaling groups, offers a dynamic alternative. An Auto Scaling group adjusts the number of EC2 instances based on metrics such as CPU utilization, network traffic, or custom application metrics, scaling out during peak demand and scaling in during periods of low activity. The primary benefits are improved availability, better performance under load, and more efficient resource utilization. Auto Scaling also removes much of the operational overhead of manual scaling. The initial setup requires more configuration, but for most workloads the long-term benefits of automation outweigh that investment. The cost impact depends largely on how well the scaling policies are tuned; well-tuned policies can cost significantly less than a statically over-provisioned fleet.
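
The idea of adjusting instance count from a metric can be sketched with a simple proportional rule: scale the fleet so that the per-instance metric returns to a target value. This is a simplified illustration, not the exact algorithm Amazon EC2 Auto Scaling uses; the function name `desired_capacity` and all parameter values are assumptions made for the example.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Estimate an instance count that brings the average metric back to target.

    Simplified proportional rule (an assumption of this sketch):
    new_count = ceil(current * metric / target), clamped to [min_size, max_size].
    """
    if current <= 0 or target <= 0:
        raise ValueError("current and target must be positive")
    raw = math.ceil(current * metric / target)
    return max(min_size, min(max_size, raw))


# 4 instances at 75% average CPU with a 50% target suggests 6 instances.
print(desired_capacity(4, 75.0, 50.0, min_size=2, max_size=10))
```

Clamping to the minimum and maximum mirrors the capacity limits of an Auto Scaling group: a policy can ask for more capacity, but the group never leaves its configured bounds.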

Choosing between manual and automated EC2 scaling hinges on the application’s requirements and workload characteristics. Applications with stable, predictable workloads may find manual scaling sufficient, although the risk of an unforeseen demand spike should be weighed carefully. For applications that demand high availability, rapid scalability, and tolerance of unpredictable traffic, automated scaling with Auto Scaling groups is the better choice. Its efficiency gains and reduced operational burden typically justify adoption for most modern applications, despite the somewhat more complex initial setup. The key is to weigh the trade-offs between control, cost, and operational efficiency when selecting an approach.

How to Implement Auto Scaling for Optimal Performance

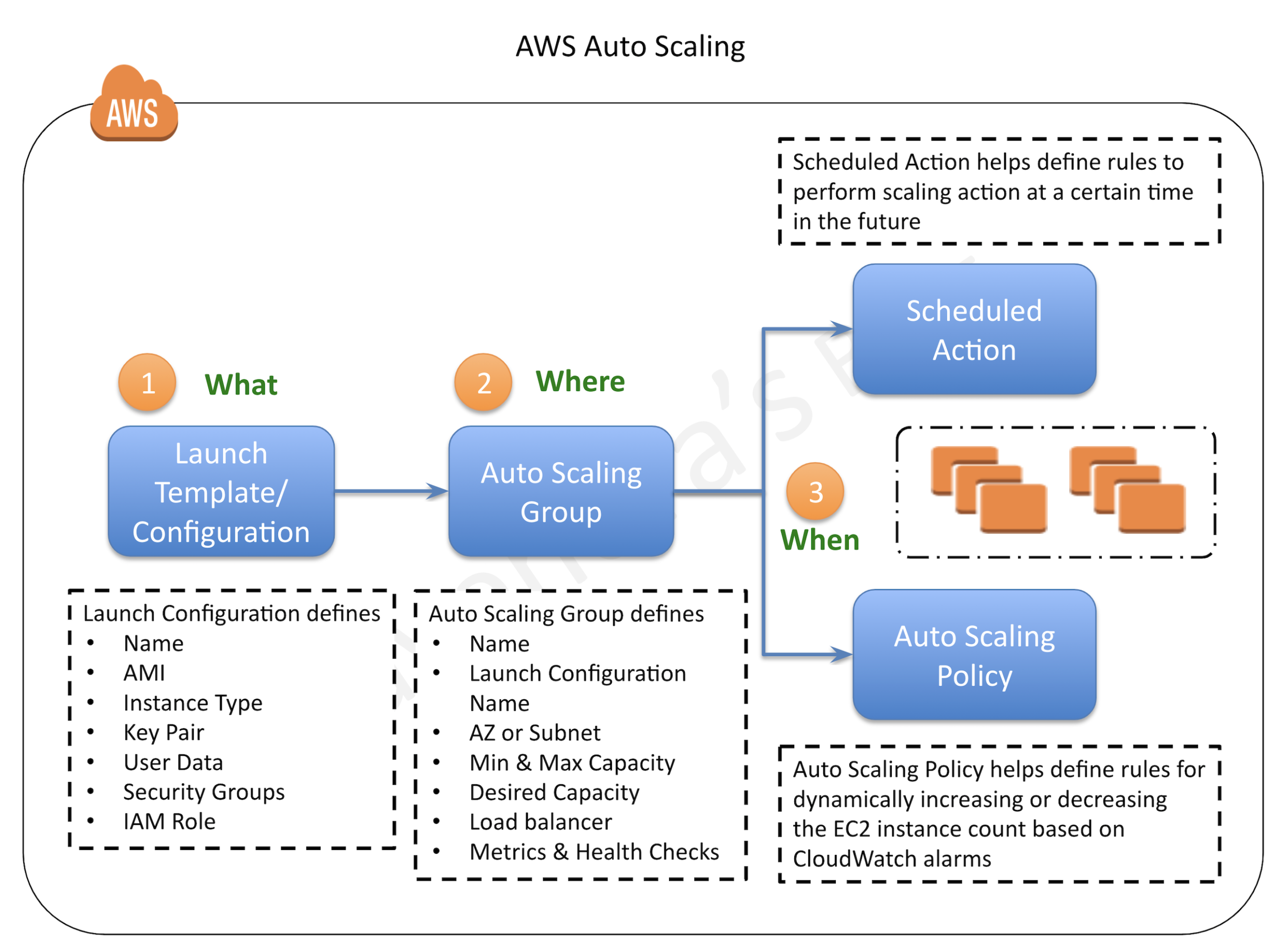

Implementing EC2 scaling with Amazon EC2 Auto Scaling involves a series of steps. First, define a launch template. It specifies the AMI (Amazon Machine Image), instance type, and other settings for your EC2 instances. (Older guides refer to launch configurations, which AWS now treats as a legacy option and recommends replacing with launch templates.) Choosing the right instance type is crucial; weigh CPU, memory, and storage requirements against your application’s needs, because this choice directly affects both cost and performance. Next, create an Auto Scaling group. The group defines the capacity limits (minimum, maximum, and desired number of instances), references the launch template, and optionally attaches a load balancer. The load balancer distributes incoming traffic across your instances, improving availability and performance under high demand.
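
As a rough sketch of the group-creation step, the function below assembles the request parameters for the `CreateAutoScalingGroup` API and checks the capacity limits before anything is sent. It assumes a launch template (the mechanism AWS currently recommends) already exists; the helper name `build_asg_request`, the group name, the template name, and the subnet IDs are all hypothetical placeholders.

```python
def build_asg_request(name, launch_template_name, min_size, max_size,
                      desired, subnet_ids, target_group_arns=()):
    """Build keyword arguments for the CreateAutoScalingGroup API call."""
    if not (0 <= min_size <= desired <= max_size):
        raise ValueError("expected 0 <= min_size <= desired <= max_size")
    request = {
        "AutoScalingGroupName": name,
        "LaunchTemplate": {
            "LaunchTemplateName": launch_template_name,
            "Version": "$Latest",
        },
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": desired,
        # The API expects subnet IDs as one comma-separated string.
        "VPCZoneIdentifier": ",".join(subnet_ids),
    }
    if target_group_arns:
        # With a load balancer attached, use its health checks as well.
        request["TargetGroupARNs"] = list(target_group_arns)
        request["HealthCheckType"] = "ELB"
        request["HealthCheckGracePeriod"] = 300  # seconds; illustrative value
    return request


request = build_asg_request("web-asg", "web-template", 2, 10, 4,
                            ["subnet-aaa", "subnet-bbb"])
print(request["VPCZoneIdentifier"])
# With boto3 installed and credentials configured, the request could be sent as:
#   boto3.client("autoscaling").create_auto_scaling_group(**request)
```

Validating the limits locally catches the most common misconfiguration (a desired capacity outside the minimum and maximum) before the API rejects it.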

Next, configure scaling policies that adjust the number of instances based on metrics. Common choices include CPU utilization, network traffic, and custom metrics from your application. For example, a policy might add instances when average CPU utilization exceeds 80% for a sustained period, keeping the application responsive during peak loads. When demand decreases, the policy removes instances to reduce cost. Health checks are also vital; they ensure that only healthy instances receive traffic. These checks can be EC2 instance status checks, load balancer health checks, or custom health checks integrated with your application. Review and adjust your scaling policies regularly based on monitoring data. For predictable daily or weekly fluctuations, consider scheduled scaling actions that change capacity at set times.
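
The phrase "exceeds 80% for a sustained period" can be made concrete with a small check over recent datapoints. The sketch below treats a breach as sustained only when every one of the last few datapoints is above the threshold. CloudWatch alarms also support an "M out of N" variant, which this sketch does not cover; the function name and sample values are illustrative assumptions.

```python
def sustained_breach(datapoints, threshold, periods):
    """Return True when the most recent `periods` datapoints all exceed threshold."""
    if periods <= 0:
        raise ValueError("periods must be positive")
    recent = datapoints[-periods:]
    # Too few datapoints means the condition cannot be confirmed yet.
    return len(recent) == periods and all(value > threshold for value in recent)


cpu = [40, 85, 90, 88]  # average CPU % per period, oldest first
print(sustained_breach(cpu, threshold=80, periods=3))  # True: last three exceed 80
```

Requiring several consecutive breaches is what keeps a single short spike from triggering a scale-out.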

Effective EC2 scaling also requires a proactive approach to troubleshooting. Common issues include insufficient capacity, misconfigured scaling policies, and network bottlenecks. Regular monitoring of CloudWatch metrics provides early warning. For example, CPU utilization that stays high no matter how many instances are running suggests the need for more powerful instance types or for optimizing the application code. Similarly, persistent network errors might indicate a bottleneck that calls for scaling network resources. Treat the configuration as iterative: refine launch settings and scaling policies based on real-world data to optimize both cost and responsiveness.

Leveraging CloudWatch for Monitoring and Optimization of EC2 Scaling

CloudWatch plays a pivotal role in monitoring EC2 instances and Auto Scaling groups, providing the data needed to optimize EC2 scaling. Monitoring metrics such as CPU utilization, network traffic, and disk I/O in near real time lets administrators identify performance bottlenecks early. For instance, consistently high CPU utilization might indicate a need for more instances in the Auto Scaling group. Conversely, consistently low CPU utilization suggests over-provisioning and unnecessary cost. CloudWatch dashboards can be customized to display these metrics, giving an at-a-glance view of the health and performance of the scaling infrastructure. CloudWatch alarms trigger automated responses when a metric crosses a predefined threshold, so scaling adjustments happen quickly, downtime is minimized, and the application stays responsive.
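
To show how an alarm ties a metric to a scaling action, the sketch below builds the parameters for CloudWatch’s `PutMetricAlarm` API for a group-wide CPU alarm. The helper name `build_cpu_alarm`, the group name, and the policy ARN are placeholders assumed for illustration; the threshold and period are example values, not recommendations.

```python
def build_cpu_alarm(asg_name, policy_arn, threshold=80.0,
                    period_seconds=300, evaluation_periods=2):
    """Build keyword arguments for the CloudWatch PutMetricAlarm API call."""
    return {
        "AlarmName": f"{asg_name}-high-cpu",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        # Aggregate the metric across every instance in the group.
        "Dimensions": [{"Name": "AutoScalingGroupName", "Value": asg_name}],
        "Statistic": "Average",
        "Period": period_seconds,
        "EvaluationPeriods": evaluation_periods,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        # The scaling policy to invoke when the alarm fires (placeholder ARN).
        "AlarmActions": [policy_arn],
    }


alarm = build_cpu_alarm("web-asg", "arn:aws:autoscaling:example-policy")
print(alarm["AlarmName"])
# With boto3 available: boto3.client("cloudwatch").put_metric_alarm(**alarm)
```

With these example values the alarm fires after average CPU stays above 80% for two consecutive five-minute periods, that is, ten minutes.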

Understanding what each CloudWatch metric means is crucial for successful EC2 scaling. CPU utilization, expressed as a percentage, reflects how much of the instances’ processing capacity is in use; sustained high values usually call for scaling out. Network traffic, reported as bytes transferred in each period, shows the volume of data sent and received; sustained high traffic might indicate a need to optimize network configuration or scale out to distribute the load. High disk I/O indicates intensive disk activity, which may call for faster storage or changes to application design. Analyzing these metrics together with application-specific metrics gives a holistic view of performance and scaling efficiency. The Auto Scaling group’s activity history and its CloudWatch group metrics also record whether scaling events succeeded or failed, which helps fine-tune scaling policies. This data-driven approach reduces wasted resources and keeps the scaling mechanisms aligned with application demand.

Beyond basic monitoring, CloudWatch offers advanced features that further support EC2 scaling optimization. Anomaly detection identifies unusual patterns in metrics and alerts administrators to potential problems before they affect application performance. CloudWatch also integrates with other AWS services, so metrics from multiple services can be correlated to pinpoint the root cause of a bottleneck and develop more targeted scaling strategies. Custom dashboards and custom metrics allow CloudWatch to be tailored to specific application needs, keeping EC2 scaling responsive to the application’s changing demands.
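
The intuition behind anomaly detection can be illustrated with a fixed statistical band around recent history. This is only a stand-in: CloudWatch’s anomaly detection builds its own model of expected values, including recurring patterns, which this sketch does not attempt. The function name `is_anomalous` and the band width are assumptions.

```python
import statistics


def is_anomalous(history, value, band_width=2.0):
    """Flag a value that falls outside mean +/- band_width standard deviations."""
    if len(history) < 2:
        raise ValueError("need at least two historical datapoints")
    mean = statistics.mean(history)
    spread = statistics.pstdev(history)
    return abs(value - mean) > band_width * spread


baseline = [50, 52, 48, 51, 49]  # recent CPU % readings
print(is_anomalous(baseline, 90))  # True: far outside the usual range
print(is_anomalous(baseline, 51))  # False: within the band
```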

Optimizing Scaling Strategies for Cost Efficiency in EC2 Scaling

Cost optimization is paramount when implementing EC2 scaling, and choosing the right instance type is the starting point. Smaller, less powerful instances might suffice for low-traffic workloads, while larger instances may be needed to sustain performance at peak demand. Right-sizing instances based on actual workload needs prevents overspending on unused capacity. Review instance types regularly and adjust as needed. Monitoring tools like CloudWatch provide insight into resource utilization, enabling data-driven decisions that avoid over-provisioning.

Amazon EC2 Spot Instances offer significant cost savings for EC2 scaling. Spot Instances provide spare EC2 capacity at a significantly lower price than On-Demand Instances, so applications that tolerate occasional interruptions can benefit greatly. An Auto Scaling group can use a mixed instances policy to combine a baseline of On-Demand Instances with Spot Instances for the remaining capacity, spread across several instance types to improve the chance of obtaining Spot capacity. This hybrid approach balances cost savings with application reliability. Carefully consider your application’s tolerance for interruptions before integrating Spot Instances into your scaling strategy.
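
One way to combine On-Demand and Spot capacity in an Auto Scaling group is a mixed instances policy. The sketch below builds such a policy and estimates how a given capacity would be divided. The helper names, template name, and instance types are hypothetical, and the rounding in `estimate_split` is an assumption of this sketch rather than documented AWS behavior.

```python
import math


def build_mixed_instances_policy(template_name, instance_types,
                                 on_demand_base, on_demand_percent):
    """Build the MixedInstancesPolicy parameter for an Auto Scaling group."""
    if not 0 <= on_demand_percent <= 100:
        raise ValueError("on_demand_percent must be between 0 and 100")
    return {
        "LaunchTemplate": {
            "LaunchTemplateSpecification": {
                "LaunchTemplateName": template_name,
                "Version": "$Latest",
            },
            # Several instance types widen the pool of usable Spot capacity.
            "Overrides": [{"InstanceType": t} for t in instance_types],
        },
        "InstancesDistribution": {
            "OnDemandBaseCapacity": on_demand_base,
            "OnDemandPercentageAboveBaseCapacity": on_demand_percent,
            "SpotAllocationStrategy": "price-capacity-optimized",
        },
    }


def estimate_split(total, on_demand_base, on_demand_percent):
    """Estimate (on_demand, spot) counts; rounding up is this sketch's assumption."""
    base = min(total, on_demand_base)
    extra_on_demand = math.ceil((total - base) * on_demand_percent / 100)
    on_demand = base + extra_on_demand
    return on_demand, total - on_demand


policy = build_mixed_instances_policy("web-template", ["m5.large", "m5a.large"], 10, 50)
print(estimate_split(30, 10, 50))  # (20, 10)
```

The On-Demand base acts as a floor of capacity that cannot be interrupted, while the percentage controls how much of the remaining capacity is exposed to Spot interruptions.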

Effective scaling policies are essential for cost-efficient EC2 scaling. Overly aggressive scale-out wastes money on idle instances, while insufficient scaling leads to performance degradation and lost revenue. Fine-tuning means balancing performance needs against cost by adjusting the scaling thresholds, cooldown periods, and the metrics that trigger scaling events. Review and refine policies regularly using CloudWatch metrics and cost analysis. This iterative tuning, combined with Spot Instances and appropriately sized instance types, forms a comprehensive strategy for managing EC2 scaling costs.

Handling Scaling Challenges: Common Pitfalls and Solutions in EC2 Scaling

Effective EC2 scaling is crucial for maintaining application performance and availability, but improperly configured scaling policies cause problems of their own. One common challenge is scaling events that trigger too frequently, wasting resources and increasing costs. This often stems from overly sensitive policies that react to minor fluctuations, such as short-term spikes in CPU utilization. To mitigate it, define scaling thresholds and cooldown periods that tolerate temporary fluctuations, and analyze historical metrics to establish realistic baselines. Scaling policies must also match the application’s real constraints. If the bottleneck lies elsewhere in the infrastructure, such as a saturated network path or a database at its limits, adding instances will not restore performance and can even increase pressure on the constrained component, leading to degraded performance and potential outages. Addressing these limitations through infrastructure upgrades or architectural changes is critical. Regular monitoring of network latency, throughput, and database response times helps identify such bottlenecks early.
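
The effect of a cooldown period on an overly sensitive policy can be seen in a small simulation. Given the times at which a policy would have fired, the sketch keeps only the triggers that fall outside the cooldown window of the previously accepted one. The function name `apply_cooldown` and the timestamps are illustrative assumptions; real cooldown behavior depends on the policy type.

```python
def apply_cooldown(trigger_times, cooldown_seconds):
    """Return the triggers that act, suppressing those inside the cooldown window."""
    accepted = []
    for t in sorted(trigger_times):
        # Accept only if enough time has passed since the last accepted trigger.
        if not accepted or t - accepted[-1] >= cooldown_seconds:
            accepted.append(t)
    return accepted


# Six alarm firings (seconds since start) collapse to three scaling actions.
print(apply_cooldown([0, 60, 120, 300, 330, 700], cooldown_seconds=300))
# [0, 300, 700]
```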

Conversely, EC2 scaling policies might not scale quickly enough, leading to prolonged periods of high latency or unavailability. This often occurs when policies are too conservative or when the application experiences sudden, unexpected surges in demand. For demand that follows recurring patterns, consider predictive scaling, which uses historical data and machine learning to forecast demand and add instances ahead of time. Ensuring that the launch template is optimized for rapid provisioning is equally important: instance types that boot quickly and pre-configured images with the application already installed can drastically reduce the time it takes for a new instance to start serving traffic. Regular load testing simulates peak demand and reveals scaling limitations before they affect production. Another common pitfall is neglecting health checks. Robust health checks ensure that only healthy instances count toward the application’s capacity. Unhealthy instances that remain in the Auto Scaling group consume resources without serving requests, leading to inefficiency and potential service disruptions.

Troubleshooting EC2 scaling issues requires a systematic approach. Start by reviewing CloudWatch metrics, alarms, and logs, along with the Auto Scaling group’s activity history, to identify the root cause; error messages and performance data usually explain why a scaling action failed or lagged. If the problem relates to scaling policies, examine the thresholds, cooldown periods, and scaling adjustments. If network bottlenecks are suspected, investigate bandwidth utilization, latency, and potential congestion points. Successful EC2 scaling relies on a holistic approach that combines optimized application design, well-defined scaling policies, and proactive monitoring. Understanding why a scaling failure occurred allows you to adjust the configuration before the same issue recurs, which protects the reliability, performance, and cost-effectiveness of your EC2 deployments.

Advanced Scaling Techniques: Beyond the Basics

This section covers EC2 scaling strategies that go beyond basic Auto Scaling group configuration. Application-specific scaling uses custom metrics from your application, such as database query latency or API request rate, to scale on the indicators that actually reflect load. This granular control allocates resources only when needed and prevents over-provisioning. Predictive scaling uses machine learning to forecast future resource requirements and adjusts capacity ahead of anticipated demand, minimizing latency spikes. It is most beneficial for workloads whose demand varies in recurring patterns, and the quality of the historical data is crucial for accurate forecasts.

Integrating EC2 scaling with other AWS services enhances functionality and efficiency. Elastic Load Balancing (ELB) distributes incoming traffic across multiple EC2 instances, ensuring high availability and minimizing downtime during scaling events; combined with Auto Scaling, it forms a robust and scalable architecture. Amazon SQS (Simple Queue Service) can decouple request intake from processing: the queue buffers bursts of work, so the system can absorb increased load without immediate scaling actions. This asynchronous approach adds resilience and improves overall responsiveness. AWS Lambda can respond to scaling events automatically, running functions that perform tasks such as database backups or log analysis. Managing these integrations requires careful planning and an understanding of each service’s capabilities to avoid unnecessary complexity.
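
When a queue buffers the work, a commonly described way to size the worker fleet is backlog per instance: decide how many queued messages one instance may hold while still meeting a latency goal, then divide the queue depth by that figure. The sketch below works through that arithmetic; the function name and the sample numbers are assumptions for illustration.

```python
import math


def instances_for_backlog(queue_depth, avg_processing_seconds,
                          acceptable_latency_seconds, min_instances=1):
    """Estimate the worker count needed to drain a queue within a latency goal."""
    if avg_processing_seconds <= 0 or acceptable_latency_seconds <= 0:
        raise ValueError("processing time and latency must be positive")
    # Messages one instance can have waiting without exceeding the latency goal.
    acceptable_backlog = acceptable_latency_seconds / avg_processing_seconds
    return max(min_instances, math.ceil(queue_depth / acceptable_backlog))


# 900 queued messages, 2 s per message, 60 s acceptable latency:
# each instance can carry a backlog of 30, so 30 instances are needed.
print(instances_for_backlog(900, 2, 60))
```

Scaling on backlog per instance rather than raw queue depth keeps the target meaningful as the fleet grows, because the metric already accounts for how many workers share the load.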

Another advanced EC2 scaling technique is the use of Spot Instances. Spot Instances provide spare compute capacity at significantly lower prices than On-Demand Instances, making them cost-effective for fault-tolerant or less critical workloads. However, Spot Instances can be interrupted with only a two-minute warning, so application design and fault tolerance need careful thought. Strategies for handling interruptions, such as lifecycle hooks and automated failover mechanisms, keep the application running when instances are reclaimed. Understanding the trade-off between cost and reliability is crucial. By mastering these advanced techniques, organizations can gain much finer control over the efficiency of their cloud infrastructure.
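
An instance can learn of a pending Spot interruption from its instance metadata, where the `spot/instance-action` item holds a small JSON document with the action and its scheduled time. The sketch below only parses such a document and computes the time remaining; fetching it from the metadata service (and any session token that requires) is left out, and the function name and sample payload are illustrative assumptions.

```python
import json
from datetime import datetime, timezone


def seconds_until_interruption(notice_json, now):
    """Parse a Spot instance-action notice; return (action, seconds remaining)."""
    notice = json.loads(notice_json)
    when = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ")
    when = when.replace(tzinfo=timezone.utc)  # notice times are in UTC
    return notice["action"], (when - now).total_seconds()


sample = '{"action": "terminate", "time": "2024-01-01T12:02:00Z"}'
now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
print(seconds_until_interruption(sample, now))  # ('terminate', 120.0)
```

A worker that polls for this notice can use the remaining time to stop accepting new work, finish or checkpoint in-flight tasks, and deregister from the load balancer.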

Real-World Case Studies: Successful EC2 Scaling Implementations

A hypothetical e-commerce company illustrates the performance gains that effective EC2 scaling can deliver. During peak holiday seasons, its website traffic surged dramatically, leading to slow page loads and frustrated customers. By implementing an Auto Scaling group tied to CloudWatch metrics such as CPU utilization and request latency, the company could adjust the number of EC2 instances dynamically based on real-time demand. This kept website performance consistent even during peak traffic, resulting in increased sales and improved customer satisfaction. The example highlights the importance of proactive EC2 scaling for e-commerce businesses facing unpredictable traffic patterns.

In the gaming industry, a popular multiplayer online game used EC2 scaling to accommodate a large and fluctuating player base. Initially, the game suffered lag and server outages during peak playing hours. An automated EC2 scaling solution, using predictive scaling based on historical player data and anticipated events, allowed the game servers to adapt quickly to changing player numbers. The result was a significant reduction in lag and server downtime, leading to a better player experience and increased player retention. Predictive scaling proved particularly effective at anticipating demand fluctuations.

Another example shows how EC2 scaling can improve the efficiency and resilience of large-scale data processing. A financial institution used EC2 instances to process enormous datasets for risk assessment and market analysis. Its scaling policies allocated resources dynamically based on data volume and processing complexity, so analyses completed faster at peak and capacity scaled down when processing was less demanding. The result was lower cost and improved operational efficiency without compromising the accuracy or timeliness of the analyses. Proper monitoring and carefully tuned scaling policies were key to these results, and the project’s success highlights the versatility of EC2 scaling across industry sectors.