What are Application Containers and Why Are They Important?

In modern software development, application containers have emerged as a transformative technology, changing how applications are packaged, deployed, and managed. A container is a lightweight, standalone package that bundles the code, runtime, system tools, libraries, and settings an application needs to run. Unlike traditional virtual machines, which emulate an entire machine and run a full guest operating system, application containers share the host OS kernel, which makes them far more efficient in resource usage and startup speed. The core appeal of application containers lies in their ability to solve some fundamental challenges in software development. One of the most significant is the notorious “it works on my machine” problem, which arises when environments differ across stages of the development lifecycle and an application that ran in development fails in testing or production. By encapsulating an application and its dependencies within a container, developers can ensure that it runs consistently in any environment with a compatible container runtime (and a compatible kernel and CPU architecture, since the kernel is shared). This removes most conflicts caused by incompatible library or system-tool versions and gives each application a standardized execution environment.

Beyond solving dependency conflicts, application containers address another critical issue: portability. Because they contain everything an application needs to run, containers can be moved between computing infrastructures, whether on-premises servers, cloud environments, or local workstations, without extensive environment-specific adjustments. This mobility lets the same application be deployed in many locations. For instance, a microservices architecture, which consists of many independent services working together, is well served by application containers, because each service can be deployed, updated, and scaled independently without affecting the rest of the system. This level of granularity is central to modern software development. Containers also let teams work with more agility, enabling faster deployment cycles and a quicker time to market for new features. As a result, application containers have become a foundational technology in modern software development, improving the efficiency, consistency, and portability of how applications are built and deployed.

Containerization vs Virtualization: Key Differences

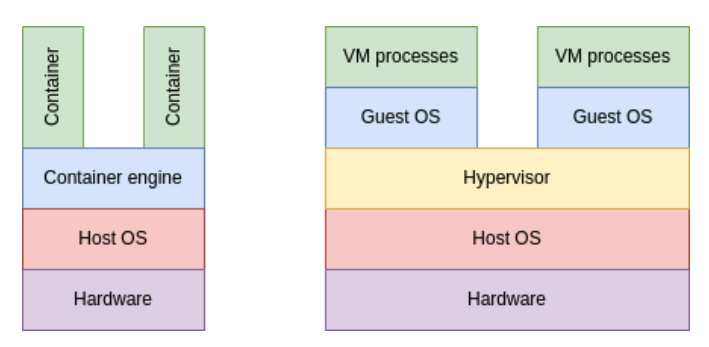

Software deployment offers several ways to encapsulate and run applications; two prominent approaches are containerization and traditional virtualization. Virtualization uses virtual machines (VMs), each of which runs a complete, isolated operating system with its own kernel, libraries, and system utilities. This approach provides strong isolation but incurs considerable overhead: each VM reserves significant RAM, CPU, and storage for its guest OS, which leads to slower boot times and lower resource utilization. Containerization offers a much lighter alternative. Containers share the host operating system’s kernel, so no instance needs its own full operating system. This fundamental difference results in much lower resource consumption, faster startup times, and less performance overhead than VMs. The lightweight nature of application containers makes them well suited to microservices architectures and scalable applications in which many service instances must run concurrently.

The efficiency gains of application containers stem from packaging only the application and its dependencies rather than a full OS image. This lean packaging produces much smaller images, which are faster to distribute and deploy. Because the shared kernel is already running, a container can often start in a fraction of a second, compared with the minutes a VM may need to boot its guest OS. The reduced overhead also allows higher-density deployment: more application instances can run on the same hardware than with VMs. This makes containerization cost-effective, particularly for large-scale deployments and resource-constrained environments. The fundamental distinction is a trade-off between isolation and overhead: VMs provide stronger isolation because each has its own kernel, at the cost of higher resource consumption, whereas application containers share the host kernel and offer a lightweight, efficient alternative suitable for many modern deployment scenarios.
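
The density argument is simple arithmetic. The sketch below models memory only and uses hypothetical figures chosen for illustration, not measurements: a 64 GiB host, a service that needs 0.5 GiB, roughly 1.5 GiB of guest-OS overhead per VM, and roughly 0.05 GiB of runtime overhead per container. CPU, I/O, and the host’s own memory use are ignored, and the function name is hypothetical.

```python
import math


def max_instances(host_mem_gib: float, app_mem_gib: float, overhead_gib: float) -> int:
    """Whole instances that fit in host memory (memory-only model)."""
    return math.floor(host_mem_gib / (app_mem_gib + overhead_gib))


# Hypothetical figures chosen for illustration, not measurements.
HOST_MEM_GIB = 64.0
APP_MEM_GIB = 0.5
VM_OVERHEAD_GIB = 1.5          # assumed: a full guest OS per VM
CONTAINER_OVERHEAD_GIB = 0.05  # assumed: per-container runtime overhead

vm_count = max_instances(HOST_MEM_GIB, APP_MEM_GIB, VM_OVERHEAD_GIB)
container_count = max_instances(HOST_MEM_GIB, APP_MEM_GIB, CONTAINER_OVERHEAD_GIB)
print(f"VMs: {vm_count}, containers: {container_count}")  # VMs: 32, containers: 116
```

Under these assumptions the same host fits about three and a half times as many containers as VMs; the point is the shape of the calculation, since real overheads vary widely by workload and hypervisor.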

The Rise of Docker: A Leader in Container Technology

Docker has become a pivotal force in the landscape of application containers, profoundly influencing how software is developed, deployed, and managed. More than a single tool, it is a platform that made container technology accessible to developers of all skill levels. Before Docker, working with containers could be a complex undertaking; Docker simplified the process with an intuitive interface and a comprehensive set of features covering every stage of the container lifecycle. Docker’s success stems from abstracting the complexities of container creation and execution: packaging an application becomes as simple as writing a text file, the Dockerfile, that specifies how the application is built and run. A Dockerfile serves as the blueprint for a Docker image, an immutable, packaged snapshot of an application and all its dependencies. The ability to create repeatable, consistent environments from these images is a key reason for Docker’s popularity, since it largely eliminates the “it works on my machine” problem and keeps application behavior consistent across systems and environments. Docker Hub, a cloud-based registry service, is another crucial component: it gives developers a place to store, share, and download Docker images, facilitating collaboration and the reuse of existing images.
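
Part of what makes Dockerfiles practical as blueprints is that builds are cached step by step. The following is a simplified model, not Docker’s actual algorithm: it assumes each step’s cache key depends only on its parent’s key and the instruction text, whereas real builds also hash the contents of files copied by `COPY` and `ADD`. The helper names are hypothetical. It shows why changing a late instruction reuses earlier work while changing an early one invalidates every step after it.

```python
import hashlib


def step_keys(instructions: list[str]) -> list[str]:
    """Chain each step's cache key to its parent's key (simplified model)."""
    keys: list[str] = []
    parent = ""
    for instruction in instructions:
        parent = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        keys.append(parent)
    return keys


def reusable_steps(old: list[str], new: list[str]) -> int:
    """Count leading steps whose keys match, i.e. steps a cache could reuse."""
    count = 0
    for old_key, new_key in zip(step_keys(old), step_keys(new)):
        if old_key != new_key:
            break
        count += 1
    return count


dockerfile_v1 = [
    "FROM python:3.12-slim",
    "COPY requirements.txt .",
    "RUN pip install -r requirements.txt",
    "COPY app.py .",
    'CMD ["python", "app.py"]',
]
# Change only the last instruction: the first four steps can be reused.
dockerfile_v2 = dockerfile_v1[:-1] + ['CMD ["python", "-u", "app.py"]']
# Change the install step: it and every later step must be rebuilt.
dockerfile_v3 = (dockerfile_v1[:2]
                 + ["RUN pip install --no-cache-dir -r requirements.txt"]
                 + dockerfile_v1[3:])

print(reusable_steps(dockerfile_v1, dockerfile_v2))  # 4
print(reusable_steps(dockerfile_v1, dockerfile_v3))  # 2
```

The same reasoning explains a common Dockerfile convention: copy the dependency manifest and install dependencies before copying application source, so that source edits leave the expensive install step cached.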

Docker’s impact extends beyond packaging; it has fostered a rich ecosystem around application containers. The ease with which Docker builds images from Dockerfiles, deploys containers, and manages them has changed development workflows, letting developers focus more on their code and less on infrastructure, which leads to faster development cycles and higher productivity. Because the same Docker image is used everywhere, applications are deployed in the same way regardless of the target environment, whether a developer’s laptop, a testing server, or a production data center. This repeatability is a cornerstone of DevOps practices and a key component of the modern software delivery pipeline. Docker’s widespread adoption has also produced a large community that shares and maintains a wide variety of pre-built images, further streamlining work with containers. By addressing portability and consistency, Docker has cemented its place as a leader in containerization, and its tools continue to shape the future of software development.

How to Deploy Applications Using Containers

Deploying applications with containers follows a streamlined process that improves both flexibility and efficiency. It begins with building a container image, a snapshot of your application and its required dependencies. Images are built from a Dockerfile, a plain text file that lists the steps for setting up the application environment. Once built, an image is typically pushed to a container registry, a central repository where developers store, share, and manage images so that they are available for deployment. Think of it as a software library where you can find, access, and use the images needed to run your applications. Registries are a cornerstone of modern container deployment because every environment pulls the same image, which keeps deployments consistent. Because the same standardized image runs everywhere, you can be confident that your application will behave the same way on a development laptop and on a production server. This approach also draws a clear line between the application and its execution environment, which improves maintainability and reduces the risk of dependency conflicts. The next step is to pull the image from the registry and run it with a container runtime on the target infrastructure.
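
Before a runtime can pull an image, it resolves the image reference into a registry, a repository, and a tag or digest. The sketch below follows the normalization Docker applies to short names (default registry `docker.io`, a `library/` prefix for single-component names, and a default tag of `latest` when neither tag nor digest is given), but it is a simplified, hypothetical helper: it performs no validation and ignores registry aliases such as `index.docker.io`.

```python
from dataclasses import dataclass
from typing import Optional

DEFAULT_REGISTRY = "docker.io"


@dataclass
class ImageRef:
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into its parts (simplified, no validation)."""
    name, digest = ref, None
    if "@" in name:
        name, digest = name.split("@", 1)
    tag = None
    # A colon after the last slash introduces a tag; earlier colons are ports.
    last_slash, last_colon = name.rfind("/"), name.rfind(":")
    if last_colon > last_slash:
        name, tag = name[:last_colon], name[last_colon + 1:]
    first, sep, rest = name.partition("/")
    # The first component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = DEFAULT_REGISTRY, name
        if "/" not in repository:
            repository = "library/" + repository  # official images namespace
    if tag is None and digest is None:
        tag = "latest"
    return ImageRef(registry, repository, tag, digest)


print(parse_image_ref("nginx"))
print(parse_image_ref("localhost:5000/team/api:1.2"))
```

The first call yields registry `docker.io`, repository `library/nginx`, and tag `latest`; the second keeps `localhost:5000` as the registry because its first component contains a port.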

In practice, deploying application containers may involve orchestration tools and strategies that improve scalability and manageability. Although a container image can be run directly, larger, more complex applications benefit greatly from orchestration platforms such as Kubernetes. These platforms manage the deployment, scaling, and health monitoring of containers, automating many of the tasks required to run applications in production and reducing manual overhead. They support automated rollouts of new application versions, minimizing downtime and enabling rapid updates, and they make it easier to manage changes and scale resources up or down as needed. These capabilities significantly improve the agility and responsiveness of development teams. Together, containerization and orchestration provide a robust framework for running applications at scale, in the cloud or on-premises, with efficient resource usage and a high level of operational reliability.
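
Orchestrators keep downtime low during a rollout by bounding how many instances may be added or missing at any moment. In a Kubernetes Deployment these bounds are `maxSurge` and `maxUnavailable` (25% each by default); a percentage surge is rounded up and a percentage of unavailable Pods is rounded down. The function below is a minimal sketch of that arithmetic, not Kubernetes code, and its name and signature are hypothetical.

```python
import math


def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total Pods, min available Pods) during a rolling update."""
    surge = math.ceil(replicas * max_surge_pct / 100)               # rounded up
    unavailable = math.floor(replicas * max_unavailable_pct / 100)  # rounded down
    return replicas + surge, replicas - unavailable


max_total, min_available = rolling_update_bounds(10)
print(max_total, min_available)  # 13 8
```

With 10 replicas and the default percentages, the rollout never runs more than 13 Pods and never drops below 8 available ones, which is how old instances are replaced without taking the service down.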

In essence, deploying applications with containers promotes a workflow centered on reusable, portable application units, which ensures uniformity and streamlines the software development lifecycle. By adopting a container-based deployment strategy, developers can focus more on coding and less on managing environmental inconsistencies, accelerating the time to market for new products and features. From building container images to orchestrating them with tools like Kubernetes, the entire process supports modern development practices that emphasize both agility and stability. The flexibility of application containers allows applications to be scaled and modified without changes to the underlying host, a property that is essential for modern infrastructure.

Kubernetes: Orchestrating Containerized Workloads

Kubernetes has emerged as the dominant platform for orchestrating application containers, fundamentally changing how containerized workloads are managed and scaled in modern IT environments. It automates the deployment, scaling, and management of containerized applications, allowing organizations to run large and complex container deployments without the limitations of manual management. A primary function of Kubernetes is scheduling containers across a cluster of machines while providing a unified control plane. This dramatically simplifies operational tasks such as rolling updates, rollbacks, and scaling in response to load. Through automated rollouts, Kubernetes deploys new versions of containerized applications smoothly and reliably, minimizing downtime and risk. Service discovery is another crucial feature: it lets containers locate and communicate with each other regardless of where they run in the cluster. This capability is essential for microservices-based architectures in which different parts of an application run as independent containers.
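
In Kubernetes, service discovery is commonly DNS-based: a Service named `orders` in namespace `shop` is reachable at `orders.shop.svc.cluster.local` (with `cluster.local` as the default cluster domain), and the search domains in a Pod’s `/etc/resolv.conf` let clients use shorter names. The sketch below is a simplified model of the order in which a resolver configured with those search domains and `ndots:5` would try candidate names; the function is hypothetical and ignores caching, host search domains, and other resolver options.

```python
def lookup_candidates(name: str, pod_namespace: str,
                      cluster_domain: str = "cluster.local", ndots: int = 5) -> list[str]:
    """Fully qualified names a resolver would try, in order (simplified model)."""
    if name.endswith("."):
        return [name]  # already absolute: no search domains applied
    search = [f"{pod_namespace}.svc.{cluster_domain}",
              f"svc.{cluster_domain}",
              cluster_domain]
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = f"{name}."
    # Names with fewer than `ndots` dots try the search list first.
    if name.count(".") < ndots:
        return expanded + [absolute]
    return [absolute] + expanded


print(lookup_candidates("orders", "shop")[0])            # orders.shop.svc.cluster.local.
print(lookup_candidates("payments.billing", "shop")[1])  # payments.billing.svc.cluster.local.
```

The first candidate for `orders` is the Service in the caller’s own namespace, while a `service.namespace` name such as `payments.billing` matches through the second search domain, so clients can reach Services by name without knowing any Pod IPs.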

Kubernetes includes a range of features that improve the reliability and scalability of containerized applications. It monitors application health, automatically restarts failing containers, and continuously works to keep the cluster in the desired state. Autoscaling, for example through the Horizontal Pod Autoscaler, adjusts the number of running instances based on observed metrics such as CPU utilization, so applications can absorb peak loads without manual intervention. Kubernetes also schedules containers to make efficient use of the cluster’s available infrastructure, reducing waste and cost. It runs on a variety of infrastructures, including private data centers, public clouds, and hybrid environments, which makes it a versatile choice. For large-scale deployments, Kubernetes has become the standard for managing the complexity of distributed containerized applications, providing a robust platform for continuous delivery and operation. For these reasons, Kubernetes has been pivotal in driving the adoption and success of container technology.
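
The Kubernetes documentation describes the Horizontal Pod Autoscaler’s core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when the ratio is within a tolerance (0.1 by default). The function below is a minimal sketch of that formula with hypothetical `min_replicas` and `max_replicas` bounds; it omits details such as missing metrics, Pod readiness, and scale-down stabilization. The example treats the metric as average CPU utilization against a 60% target.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA formula, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=62, target_metric=60))  # 4
print(desired_replicas(4, current_metric=20, target_metric=60))  # 2
```

The tolerance keeps the autoscaler from reacting to small fluctuations around the target, and the ceiling ensures it rounds toward enough capacity rather than too little.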

Benefits of Using Application Containers in Development

The adoption of application containers streamlines the software development lifecycle, offering numerous advantages that lead to more efficient and reliable workflows. One of the primary benefits is a consistent environment. By packaging applications and their dependencies into containers, developers ensure that an application behaves consistently across development, testing, and production. This largely eliminates the “it works on my machine” problem, which often leads to delays and difficult debugging. Application containers also enable faster development cycles: because containers are lightweight, teams can iterate rapidly, and spinning up a new environment with the required libraries and tools takes seconds rather than hours. This speed translates into faster time to market, giving organizations a competitive edge. Containers also foster better collaboration within development teams. Because a container encapsulates everything needed to run an application, team members can easily share identical environments. This sharing and standardization leads to fewer integration problems and more productive collaboration, letting teams focus on building new features.

Another crucial advantage of application containers is a better testing process. A consistent test environment can be replicated on demand, so applications are checked under conditions that mirror production. This improves the quality of the delivered product by surfacing issues earlier and reducing the chance of unexpected errors in production. Application containers also improve deployment efficiency: the ability to deploy applications quickly and repeatedly, with a consistent runtime, greatly reduces deployment risk. The immutability of container images plays a key role here, because an image referenced by digest is exactly the same artifact in every environment it reaches. This consistent, reliable deployment process is especially important for large-scale deployments and applications with high availability requirements. Taken together, environment consistency, faster development cycles, better collaboration, improved testing, and efficient deployments make application containers indispensable in modern software development.
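
Image immutability rests on content addressing: registries identify manifests and layers by a digest of their bytes, written as `sha256:` followed by the hex-encoded SHA-256 hash. The sketch below illustrates the idea with Python’s `hashlib`; the sample manifest bytes are made up, the helper names are hypothetical, and real clients fetch and verify manifests and layers through the registry API.

```python
import hashlib


def content_digest(blob: bytes) -> str:
    """OCI-style digest string for a blob: 'sha256:' + hex SHA-256."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def verify(blob: bytes, expected_digest: str) -> bool:
    """True only if the blob's bytes match the digest it was pinned to."""
    return content_digest(blob) == expected_digest


manifest = b'{"schemaVersion": 2, "layers": []}'  # hypothetical manifest bytes
pinned = content_digest(manifest)

print(verify(manifest, pinned))                      # True
print(verify(manifest.replace(b"2", b"3"), pinned))  # False: any change alters the digest
```

This is why deploying an image by digest (`name@sha256:...`) rather than by a mutable tag ensures that production runs exactly the bytes that were tested.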

In summary, application containers accelerate application delivery, improve quality, and optimize resource utilization. The benefits extend from development to deployment, producing a faster, more reliable, and more streamlined process. The consistent runtime of containerized applications reduces integration issues and gives teams better control over the application lifecycle. Overall, adopting application containers is a strategic advantage, offering a better return on investment and higher-quality products in the face of today’s evolving technological demands.

Popular Tools in the Application Container Ecosystem

The application container landscape extends beyond Docker, with several notable tools playing crucial roles in container management and deployment. One is containerd, a core container runtime that serves as the foundation for many higher-level platforms; Docker Engine itself uses containerd to manage containers. Unlike Docker, which offers a more comprehensive set of features, containerd focuses on runtime concerns such as pulling images, managing storage, and running containers, which makes it a lightweight and efficient choice for embedding into other systems. Podman, another prominent tool, is a daemonless container engine that offers an alternative to Docker’s client-server architecture. Without a central, long-running daemon that could become an attack target, Podman reduces the attack surface when managing application containers. These tools show that the container ecosystem is not monolithic and offers different solutions to different challenges.

Beyond individual tools, cloud providers offer specialized services for specific use cases. For instance, AWS Fargate runs application containers without requiring users to manage the underlying servers, which significantly reduces operational overhead. Similarly, Azure Container Instances provides a serverless container experience, letting developers run containers directly without setting up a full orchestration platform. These serverless container services are particularly useful for short-lived tasks or applications with unpredictable traffic, because resources scale dynamically and users pay only for what they consume. This diversity of tools and services lets developers choose the right solution for their environment.

Moreover, complementary technologies continue to push the boundaries of what application containers can do. These range from container-native storage solutions that optimize data management for containers to network plugins that enable seamless communication between containers. Constant innovation in this ecosystem yields more options, greater efficiency, and more flexibility in managing application containers. The breadth of these options shows how the container ecosystem keeps evolving, adding new capabilities and better integration methods and ensuring that application containers remain a fundamental building block of modern software development.

The Future of Application Container Technology

The trajectory of application containers points toward increasing ubiquity and sophistication. One significant development is the rise of serverless container solutions, which abstract away infrastructure management so developers can focus solely on their applications. This enables highly scalable, cost-effective deployments without the complexity of server provisioning. Another crucial trend is the use of application containers in edge computing. As more processing moves closer to where data is generated, containers offer a natural packaging mechanism for deploying applications to these distributed environments, reducing latency and improving response times for applications that require real-time data analysis and interaction. The integration of AI and machine learning workflows with application containers is also poised to drive innovation, since containers provide the portability and scalability needed to train and deploy complex AI/ML models effectively. Container security continues to grow as well, with new techniques constantly being researched and deployed to protect container workloads. Together, these trends point toward faster, more flexible, and more efficient development pipelines.

Looking ahead, advances in networking and container runtimes are expected to further improve the performance and resource utilization of application containers. New initiatives aim to simplify managing and securing containers across heterogeneous environments, making hybrid and multi-cloud deployments easier so that organizations can run application containers seamlessly across cloud providers. The developer experience is also likely to keep improving, with better tools for building, testing, and deploying images that lower the barrier to entry for teams adopting containerization. These improvements will be vital in sustaining the growth of container adoption among organizations of all sizes and in all industries. As the technology matures, we can expect more sophisticated orchestration platforms and deeper integration with technologies such as service meshes and microservices architectures, reinforcing containers’ role as a cornerstone of the modern software stack. The future of application containers also appears closely tied to specialized hardware accelerators for high-performance computing workloads.

The continuing evolution of application containers is not simply a series of incremental improvements; it is a transformative shift in how software is developed, deployed, and scaled. The potential for serverless, edge-based, and AI-driven applications suggests that containers will play a crucial role in shaping future technology. Their flexibility lets developers and architects design architectures tailored to the specific needs of the business. As these approaches become more accessible and refined, they will help businesses build faster, more robust applications. From individual developers to large multinational corporations, organizations can expect to benefit from application containers and their ecosystem of tools in building high-quality digital products.