Understanding Docker: The Foundation of Modern Software Development

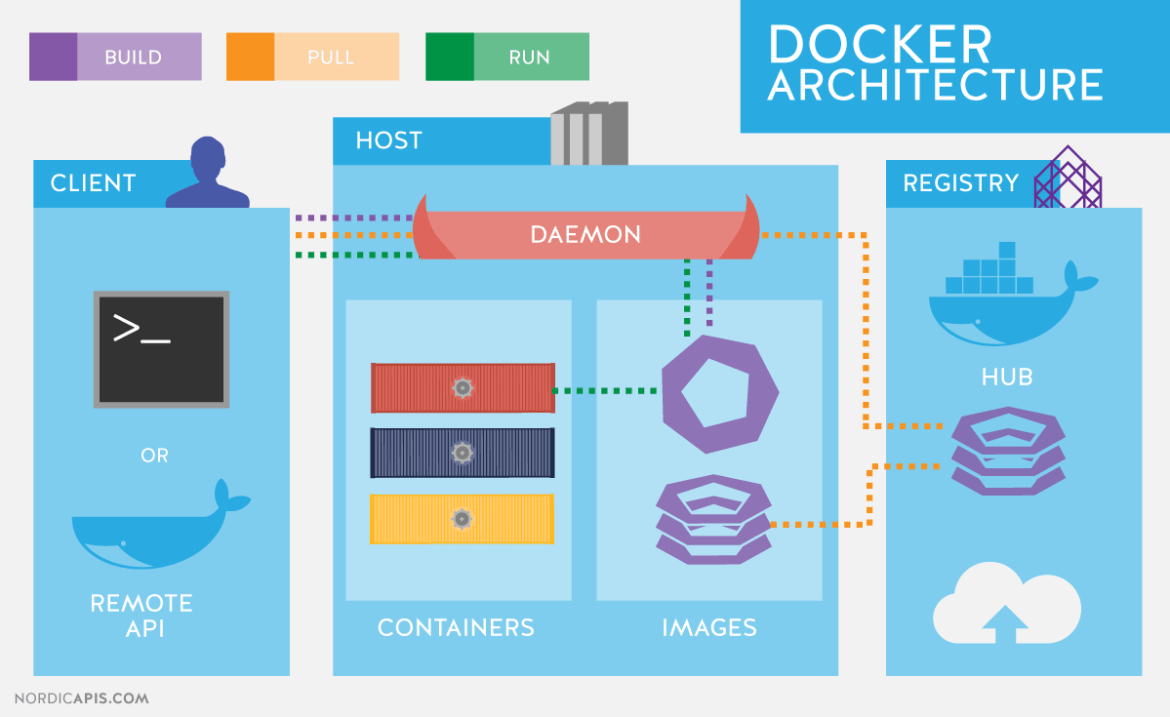

Docker is a revolutionary platform that is transforming how software is developed, deployed, and managed. At its core, Docker uses containerization to package applications and their dependencies into isolated units called containers. Unlike virtual machines, each of which runs a full guest operating system on virtualized hardware, containers share the host operating system’s kernel, which makes them much lighter and more efficient in their use of resources. A Docker image acts as a blueprint for creating a container: it holds everything needed to run the application, including code, libraries, runtime, system tools, and settings. This ensures consistency across environments, from development to production. The advantages are substantial: improved portability (run the same container anywhere Docker runs), enhanced consistency (eliminating the “it works on my machine” problem), increased efficiency (lighter weight than VMs), and simplified deployment (faster and easier). Consider a simple web application: with Docker, the application, its dependencies (such as a Python web framework and a database driver), and its runtime environment are bundled into a single, portable unit. This greatly simplifies deployment, whether on a personal laptop, a cloud server, or any other infrastructure that supports Docker. This Docker and Kubernetes tutorial will guide you through the process step by step, helping you master these powerful tools.

The benefits extend beyond simplified deployments. A key advantage of Docker is that it promotes reproducible builds. A Dockerfile is a text file containing the instructions for building a Docker image, and because every machine and team builds from the same instructions, builds become far more consistent, especially when base image tags and dependency versions are pinned. This removes most environment discrepancies, so the application behaves predictably everywhere. Docker also makes resource management more efficient. Because containers share the host OS kernel, they consume fewer resources than virtual machines, so more applications can run on the same hardware, which saves cost and improves infrastructure utilization. These practical benefits help developers build robust, scalable applications. They matter most in microservices architectures, where an application is broken into smaller, independent services that must each be built and deployed reliably. For these reasons, Docker is a key enabler for streamlining workflows and improving efficiency within DevOps practices.

Docker’s impact on modern software development is undeniable. It streamlines the entire application lifecycle, from development and testing to deployment and maintenance. By making applications consistent, portable, and efficient to run, Docker has fundamentally changed how developers build and ship software, and learning it is a crucial step for anyone building and managing applications in today’s dynamic, cloud-centric environment. Mastering Docker is also the foundation for understanding container orchestration platforms like Kubernetes, which this tutorial explores later. Docker’s ease of use and efficiency make it an invaluable tool for both small projects and large-scale enterprise applications.

Building Your First Docker Image: A Step-by-Step Docker Kubernetes Tutorial

This section provides a practical, hands-on walkthrough for building a simple Docker image, a crucial step in any Docker and Kubernetes workflow. The process centers on a Dockerfile, a text document containing the instructions for building the image. The example is a simple Python web server built with Flask, and the same steps adapt readily to other applications.

First, create a new directory for your project, and inside it create a file named `app.py` containing your Python application code. For instance, a basic web server might look like this:

```python
from flask import Flask

app = Flask(__name__)


@app.route("/")
def hello():
    return "Hello, Docker!"


if __name__ == "__main__":
    # Listen on all interfaces so the server is reachable from outside the container
    app.run(debug=True, host="0.0.0.0")
```

Then create a file called `Dockerfile` in the same directory. This file tells Docker how to build your image. A basic `Dockerfile` for this application might look like this:

```dockerfile
FROM python:3.9-slim-buster
WORKDIR /app
COPY . .
RUN pip install flask
CMD ["python", "app.py"]
```

This `Dockerfile` instructs Docker to use a slim Python 3.9 image as the base, set the working directory to `/app`, copy the application files into it, install Flask, and finally run the application. Save the file, paying close attention to formatting and syntax; even small errors can prevent the Docker build from succeeding.

To build the image, navigate to the project directory in your terminal and run `docker build -t my-python-app .` (note the trailing period). The `-t` flag tags the image with the name “my-python-app”, making it easier to refer to later, and the `.` specifies the current directory as the build context. Docker prints a message for each step it performs, such as downloading the base image, copying files, and installing dependencies. When the build completes, verify that the image exists with `docker images`, which lists all images on your system, including the newly created “my-python-app” image. Next, run `docker run -p 5000:5000 my-python-app` to start a container, mapping port 5000 on your host machine to port 5000 in the container. You can then access the application at `http://localhost:5000` in your web browser. This simple example shows the fundamental power of Docker: packaging an application once and running it consistently across environments, the foundation for the rest of this tutorial.
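
If you prefer to script these steps, the sketch below wraps the same `docker build` and `docker run` commands with Python's standard library and then polls the app until it answers. It assumes Docker is installed and that the script runs from the project directory; the helper names are hypothetical. Unlike the command above, it adds `-d` so the container runs in the background and can be removed afterward, and by default it only prints the commands (pass `--run` to execute them).

```python
"""Build, run, and smoke-test the tutorial image (a minimal sketch)."""
from __future__ import annotations

import shlex
import subprocess
import sys
import time
import urllib.request

IMAGE = "my-python-app"


def build_command(tag: str, context: str = ".") -> list[str]:
    # Same as: docker build -t my-python-app .
    return ["docker", "build", "-t", tag, context]


def run_command(tag: str, host_port: int = 5000, container_port: int = 5000) -> list[str]:
    # -d (detached) is an addition so the script can continue while the app runs
    return ["docker", "run", "-d", "-p", f"{host_port}:{container_port}", tag]


def wait_for_http(url: str, timeout: float = 30.0) -> str:
    """Poll url until the server answers, then return the response body."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=2) as resp:
                return resp.read().decode("utf-8")
        except OSError:
            # Connection refused/reset while Flask is still starting
            if time.monotonic() >= deadline:
                raise
            time.sleep(0.5)


if __name__ == "__main__":
    if "--run" not in sys.argv:
        # Dry run: only show the commands that would be executed
        print(shlex.join(build_command(IMAGE)))
        print(shlex.join(run_command(IMAGE)))
    else:
        subprocess.run(build_command(IMAGE), check=True)
        container_id = subprocess.run(
            run_command(IMAGE), check=True, capture_output=True, text=True
        ).stdout.strip()  # docker run -d prints the new container's ID
        try:
            print(wait_for_http("http://localhost:5000/"))
        finally:
            # Remove the container again, even if the check failed
            subprocess.run(["docker", "rm", "-f", container_id], check=True)
```
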

By following these steps, you have created a Docker image for your Python web application and covered the core concepts of image creation. This image can now be deployed to various environments, including a Kubernetes cluster, which illustrates the portability and consistency that Docker provides. The next sections guide you through deploying this image to a Kubernetes cluster, showing how Docker and Kubernetes work together for efficient, scalable application deployment. Understanding this foundational step is critical before moving on to orchestration with Kubernetes.

Introduction to Kubernetes: Orchestrating Your Containers

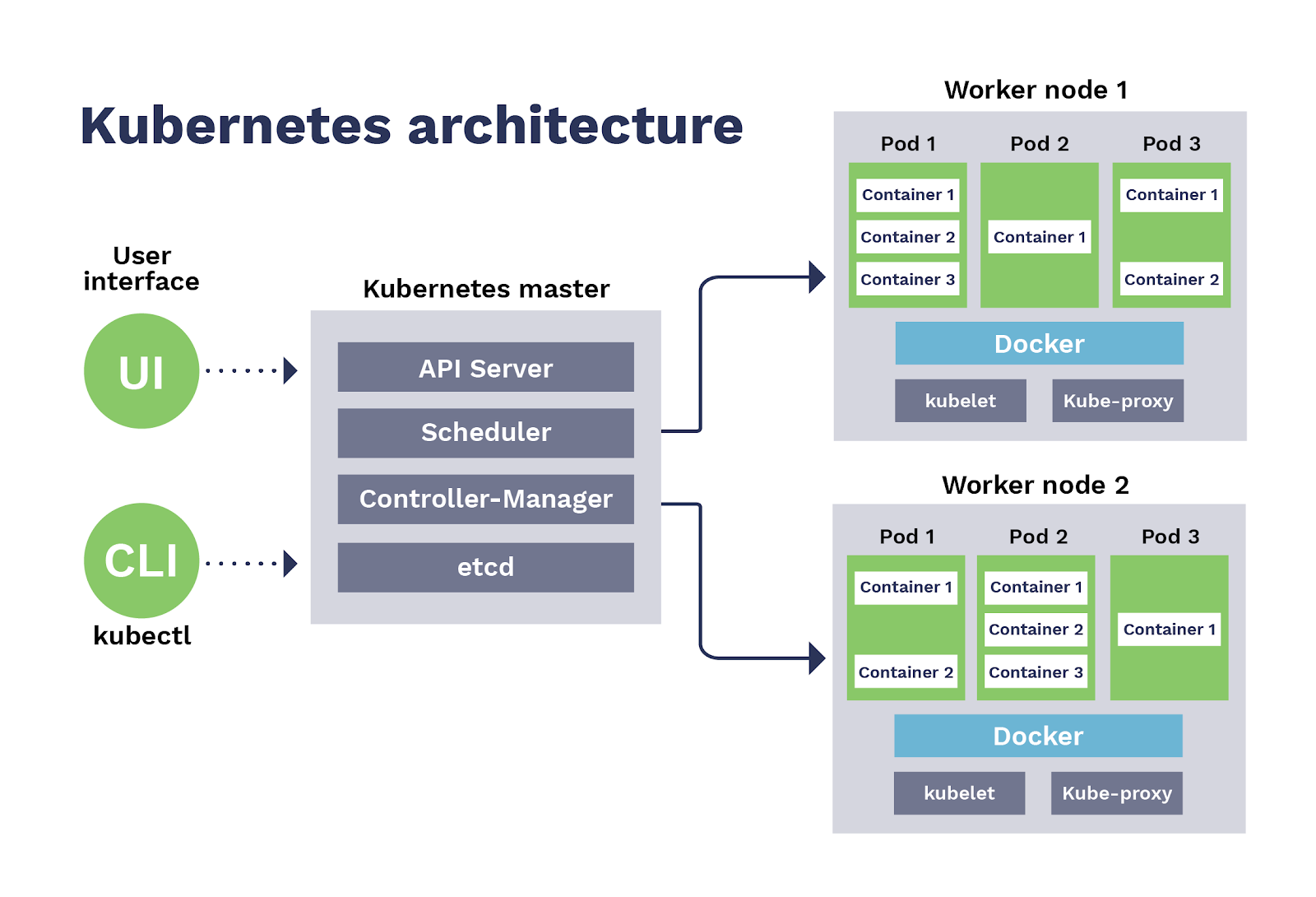

Kubernetes is a powerful container orchestration platform, essential for managing and scaling applications built with Docker. This section introduces the core concepts behind Kubernetes in a way that is accessible to newcomers. Imagine you have many Docker containers, each running part of your application; Kubernetes acts like the conductor of an orchestra, making sure all these containers work together. It manages containers across multiple machines and abstracts away the complexity of the underlying infrastructure. At the heart of Kubernetes are a few fundamental objects: pods, the smallest deployable unit, each containing one or more containers (for example, containers built from Docker images); deployments, which declare the desired state of your application and ensure that a specified number of pod replicas is running at all times; services, which give a set of pods a stable network address, either for other workloads inside the cluster or for clients outside it; and namespaces, which logically partition resources within a cluster. A helpful analogy is a well-organized city: each pod is a building, deployments are city planners ensuring there are enough of each type of building, services are the public utilities connecting buildings, and namespaces are the city’s districts. These concepts form the foundation of Kubernetes’ ability to handle complex applications.
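
To make the pod concept concrete, here is a minimal Pod definition built as a Python dictionary and printed as JSON, which `kubectl` accepts just like YAML. The helper name, the port, and the pull policy are assumptions for illustration. In practice you rarely create bare pods, because a Deployment creates and replaces them for you.

```python
import json


def pod_manifest(name: str, image: str, namespace: str = "default") -> dict:
    """Return a minimal Pod object as a plain dict (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "namespace": namespace,   # the "district" this pod belongs to
            "labels": {"app": name},  # labels are how Services and Deployments find pods
        },
        "spec": {
            # A pod wraps one or more containers that share a network namespace
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Use a locally available image instead of always pulling it
                    "imagePullPolicy": "IfNotPresent",
                    "ports": [{"containerPort": 5000}],  # the Flask app's port
                }
            ]
        },
    }


if __name__ == "__main__":
    # Save the output to pod.json and apply it with: kubectl apply -f pod.json
    print(json.dumps(pod_manifest("my-python-app", "my-python-app"), indent=2))
```
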

The need for Kubernetes arises from the difficulty of managing many Docker containers by hand in a real production environment. As applications grow in complexity, ensuring high availability, scalability, and consistent deployments becomes increasingly hard. Kubernetes automates these tasks with features like self-healing, automatic scaling, load balancing, and rolling updates, letting you focus on building the application while Kubernetes manages the underlying infrastructure. Its power lies in handling the complexity of a distributed system, which reduces the operational burden on developers and system administrators. For example, if a container in one of your pods crashes, Kubernetes restarts it, and if a pod is lost entirely (for instance, because its node fails), the deployment creates a replacement. Likewise, if your application suddenly needs to handle more traffic and autoscaling is configured, Kubernetes increases the number of pods to provide the necessary capacity. This automatic management enables a more robust and efficient development cycle, and the following sections build on it with practical examples of deploying applications to a cluster.
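
Self-healing rests on a simple idea: a controller repeatedly compares the desired number of replicas with the pods that actually exist and acts on the difference. The toy function below models one pass of that comparison in plain Python. It is a simplified illustration, not Kubernetes’ controller code; the real ReplicaSet controller also ranks pods before deleting them, rate-limits its actions, and handles many more cases.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    phase: str  # "Pending", "Running", "Succeeded", or "Failed"


def reconcile(desired: int, pods: list[Pod]) -> tuple[int, list[str]]:
    """One pass of a toy desired-state loop.

    Returns (number_of_pods_to_create, names_of_pods_to_delete).
    Pods that have finished or failed no longer count as active, so a
    failure shows up as a shortfall that this pass fills.
    """
    active = [p.name for p in pods if p.phase not in ("Succeeded", "Failed")]
    if len(active) < desired:
        return desired - len(active), []
    # Too many (e.g. after scaling down): remove the surplus
    return 0, active[desired:]


if __name__ == "__main__":
    observed = [Pod("web-a", "Running"), Pod("web-b", "Failed"), Pod("web-c", "Running")]
    print(reconcile(3, observed))  # (1, []): one replacement for web-b
```
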

Using Kubernetes together with Docker brings many benefits. It improves application reliability through self-healing: if a container fails, it is restarted or replaced automatically. It also promotes scalability, letting applications adapt to changing demand without manual intervention, which is crucial for applications that must absorb traffic peaks. It streamlines deployment as well: rolling updates replace pods gradually, so with enough replicas and properly configured readiness checks, an upgrade can proceed without service interruptions. This lets teams ship new features quickly with far less risk of outages. In short, Kubernetes simplifies the operational side of managing containers and is essential for any organization aiming to adopt modern deployment methods. It has become the de facto industry standard for container orchestration, and mastering its concepts is a vital step for any software developer or system administrator today.

Deploying Your Docker Image to a Kubernetes Cluster: A Practical Guide

This section walks you through deploying the Docker image you built earlier to a Kubernetes cluster. Deployment involves writing Kubernetes YAML files that declare the desired state of your application, including how many replicas to run (a Deployment) and how to expose it on the network (a Service). These YAML files are configuration files that describe how your application is set up within the Kubernetes environment. To start, create two files: one for your Deployment and one for your Service. The Deployment file specifies details such as the Docker image to use, the number of replicas, and resource requests and limits. The Service file defines how your application will be reached, either internally within the cluster or externally. Once these files are prepared, you use `kubectl`, the command-line tool that is the primary way to interact with a Kubernetes cluster. The command `kubectl apply -f
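
A minimal sketch of the two objects, written as Python dictionaries and printed as JSON, which `kubectl apply -f` accepts as readily as YAML. The names, replica count, resource values, Service port 80, and the NodePort type are assumptions chosen for this example. Because `my-python-app` was built locally rather than pushed to a registry, the sketch sets `imagePullPolicy` to `IfNotPresent`: without it, an untagged image is treated as `latest` and Kubernetes always tries to pull it, which typically ends in `ImagePullBackOff`. On Minikube the image must also be loaded into the cluster (for example with `minikube image load my-python-app`).

```python
import json

APP = "my-python-app"   # image tag from `docker build -t my-python-app .`
LABELS = {"app": APP}   # must match between the pod template and the Service selector


def deployment(replicas: int = 2) -> dict:
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": LABELS},
            "template": {
                "metadata": {"labels": LABELS},
                "spec": {
                    "containers": [{
                        "name": APP,
                        "image": APP,
                        # Locally built image: use it if present instead of pulling
                        "imagePullPolicy": "IfNotPresent",
                        "ports": [{"containerPort": 5000}],
                        "resources": {
                            "requests": {"cpu": "100m", "memory": "64Mi"},
                            "limits": {"cpu": "250m", "memory": "128Mi"},
                        },
                    }],
                },
            },
        },
    }


def service() -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP},
        "spec": {
            "type": "NodePort",   # reachable via a port on each node; ClusterIP = internal only
            "selector": LABELS,   # route traffic to pods carrying these labels
            "ports": [{"port": 80, "targetPort": 5000}],
        },
    }


if __name__ == "__main__":
    # A v1 List lets one document hold both objects:
    #   python manifests.py | kubectl apply -f -
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": [deployment(), service()]}, indent=2))
```

The shared `LABELS` dictionary is the design point here: a Service finds its pods purely by label selector, so keeping one source for the labels avoids a Service that silently routes to nothing.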

After deploying your application, monitor its status to make sure it is running smoothly. Run `kubectl get pods` to check the status of your pods, where your application’s containers run. If something is wrong, the STATUS column usually points toward the root cause. For example, “ImagePullBackOff” means Kubernetes could not pull the Docker image from the specified registry and is waiting before retrying, while “CrashLoopBackOff” means the container keeps starting and then crashing. To inspect your Service, run `kubectl get svc`, which shows its type, cluster IP, and exposed ports. If the Service has type LoadBalancer and the cluster runs where load balancers can be provisioned (as with most cloud providers), an external IP will be assigned. Depending on the Service type, you can then reach the application through that external IP or through a node’s IP address and the assigned node port. A key advantage of Kubernetes is its built-in scaling capabilities. The command `kubectl scale deployment
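
Reading statuses by eye works for a few pods; for more, you can script the check. The sketch below parses the output of `kubectl get pods -o json` and reports containers stuck in a waiting state, with a short hint per reason. The hint texts, file names, and helper names are hypothetical; the `status.containerStatuses[].state.waiting.reason` path follows the Kubernetes Pod API.

```python
from __future__ import annotations

import json
import sys
from pathlib import Path

# Hypothetical hint texts for common waiting reasons
HINTS = {
    "ImagePullBackOff": "check the image name/tag and registry access",
    "ErrImagePull": "check the image name/tag and registry access",
    "CrashLoopBackOff": "read the crashed container's logs: kubectl logs POD --previous",
}


def waiting_reasons(pod_list_json: str) -> dict[str, list[str]]:
    """Map pod name -> reasons its containers are stuck in a waiting state."""
    problems: dict[str, list[str]] = {}
    for pod in json.loads(pod_list_json).get("items", []):
        statuses = pod.get("status", {}).get("containerStatuses", [])
        reasons = [
            cs["state"]["waiting"]["reason"]
            for cs in statuses
            if "reason" in cs.get("state", {}).get("waiting", {})
        ]
        if reasons:
            problems[pod["metadata"]["name"]] = reasons
    return problems


if __name__ == "__main__":
    # Usage: kubectl get pods -o json > pods.json && python check_pods.py pods.json
    if len(sys.argv) < 2:
        print("usage: check_pods.py PODS_JSON_FILE")
    else:
        text = Path(sys.argv[1]).read_text()
        for pod, reasons in waiting_reasons(text).items():
            for reason in reasons:
                print(f"{pod}: {reason} -> {HINTS.get(reason, 'try kubectl describe pod')}")
```
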

To simplify your first experience, consider Minikube, which runs a lightweight Kubernetes cluster on your local machine. It lets beginners test their deployments without setting up a full cluster and supports hands-on practice with all the commands and configurations discussed here, from writing the deployment configuration to scaling the application and exposing it to clients. Keep monitoring your deployments and services as you go: beyond `kubectl get`, Kubernetes offers tools such as `kubectl logs` for reading application logs, which are important for maintenance and stability. Practical experience is key, so experiment with these steps and observe the outcomes; doing so will solidify your understanding and build your skill in deploying and managing applications on a Kubernetes cluster.
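
As a sketch of a typical local loop, the script below prints (or, with `--run`, executes) the Minikube and `kubectl` commands in order. It assumes the image was built locally as `my-python-app`, that the manifests are saved as `deployment.yaml` and `service.yaml` (hypothetical names), and that the Service is also named `my-python-app` and has type NodePort or LoadBalancer. `minikube image load` copies the locally built image into the cluster so pods can start without a registry.

```python
from __future__ import annotations

import shlex
import subprocess
import sys


def minikube_workflow(image: str = "my-python-app",
                      manifests: tuple[str, ...] = ("deployment.yaml", "service.yaml"),
                      ) -> list[list[str]]:
    """Ordered commands for a local Minikube test run (names are assumptions)."""
    return [
        ["minikube", "start"],
        # Copy the locally built image into Minikube's container runtime
        ["minikube", "image", "load", image],
        *[["kubectl", "apply", "-f", m] for m in manifests],
        ["kubectl", "get", "pods"],
        # Print a reachable URL for the Service (assumed to share the image's name)
        ["minikube", "service", image, "--url"],
    ]


if __name__ == "__main__":
    execute = "--run" in sys.argv  # default is a dry run that only prints
    for cmd in minikube_workflow():
        print("$", shlex.join(cmd))
        if execute:
            subprocess.run(cmd, check=True)
```
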

Managing and Monitoring Your Kubernetes Applications

Effective management and monitoring are crucial for keeping applications in a Kubernetes cluster running smoothly. This section covers essential practices for maintaining the health and stability of your containerized applications. Resource monitoring plays a vital role: tools such as the Kubernetes Dashboard and `kubectl top` (which requires the Metrics Server add-on) provide near-real-time insight into CPU and memory usage, helping you spot performance bottlenecks before they cause problems. Robust logging is equally important. Centralized logging systems gather logs from all containers and pods, making troubleshooting and debugging far easier, and Kubernetes integrates with popular logging solutions, making it easier to track application behavior and diagnose issues. Monitoring application health also means configuring health checks (probes) so Kubernetes can detect and react to problems automatically: a failing liveness probe causes the container to be restarted, while a failing readiness probe stops traffic from being routed to the pod. Tools like Prometheus and Grafana add comprehensive monitoring and alerting, essential components of a robust deployment strategy.
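
`kubectl top pods` prints a small table of CPU (in millicores, such as `250m`) and memory (such as `100Mi`) per pod. The sketch below parses that text into numbers and flags pods above a threshold, a possible starting point for simple alerting. It assumes the single-namespace output format (with `-A`, a NAMESPACE column comes first) and handles only the common unit suffixes; the function names and thresholds are hypothetical.

```python
from __future__ import annotations

MEMORY_UNITS = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def cpu_millicores(value: str) -> int:
    # "250m" = 250 millicores; a bare number means whole cores
    if value.endswith("m"):
        return int(value[:-1])
    return int(float(value) * 1000)


def memory_bytes(value: str) -> int:
    for suffix, factor in MEMORY_UNITS.items():
        if value.endswith(suffix):
            return int(value[: -len(suffix)]) * factor
    return int(value)  # no suffix: plain bytes


def parse_top(output: str) -> dict[str, tuple[int, int]]:
    """Turn `kubectl top pods` text into {pod: (millicores, bytes)}."""
    usage: dict[str, tuple[int, int]] = {}
    for row in output.strip().splitlines()[1:]:  # skip the header row
        name, cpu, mem = row.split()[:3]
        usage[name] = (cpu_millicores(cpu), memory_bytes(mem))
    return usage


def over_threshold(usage: dict[str, tuple[int, int]],
                   max_millicores: int, max_bytes: int) -> list[str]:
    """Names of pods using more CPU or memory than the given thresholds."""
    return [pod for pod, (cpu, mem) in usage.items()
            if cpu > max_millicores or mem > max_bytes]
```

To feed it live data, capture the command's output, for example `subprocess.run(["kubectl", "top", "pods"], capture_output=True, text=True, check=True).stdout`, and pass that string to `parse_top`.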

Troubleshooting is an inevitable part of any deployment. Effective troubleshooting in Kubernetes starts with knowing how to use `kubectl` commands to inspect pod and container status. Examining logs with `kubectl logs` is crucial for pinpointing issues, and the output of `kubectl describe pod` and `kubectl get events` provides further diagnostic detail. Common problems include resource constraints, network connectivity issues, and application crashes. Setting appropriate resource requests and limits for containers helps prevent resource starvation, and understanding how network policies control traffic flow in the cluster is vital when troubleshooting network-related issues. A systematic approach to diagnosing and fixing issues, combined with regular monitoring and proactive practices, is key to keeping applications in a Kubernetes environment healthy and stable.
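
Requests tell the scheduler how much CPU and memory a container needs; limits cap what it may use (a container exceeding its memory limit is killed, while one exceeding its CPU limit is throttled). The hypothetical lint below walks a pod spec, for example the `spec` field from `kubectl get pod NAME -o json`, and lists every CPU or memory request and limit a container leaves unset.

```python
from __future__ import annotations


def missing_resources(pod_spec: dict) -> list[str]:
    """List every CPU/memory request or limit a container does not set."""
    findings: list[str] = []
    for container in pod_spec.get("containers", []):
        resources = container.get("resources", {})
        for kind in ("requests", "limits"):
            declared = resources.get(kind, {})
            for resource in ("cpu", "memory"):
                if resource not in declared:
                    # kind[:-1] turns "requests"/"limits" into "request"/"limit"
                    findings.append(f"{container['name']}: no {resource} {kind[:-1]}")
    return findings


if __name__ == "__main__":
    example = {"containers": [{"name": "web", "resources": {"limits": {"memory": "128Mi"}}}]}
    for finding in missing_resources(example):
        print(finding)
```
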

Beyond basic monitoring and troubleshooting, more advanced management involves setting up alerts that notify administrators of issues before they become critical. Sound resource management is essential, including organizing workloads into namespaces and defining resource quotas and limits for them. Tools that support performance analysis can significantly improve the quality of your infrastructure, as can understanding and configuring Kubernetes objects such as deployments and services so that the application behaves as expected. Together, these management and monitoring practices help ensure the health and stability of your applications within the Kubernetes ecosystem.
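
Resource quotas cap the total resources a namespace can consume. Below is a sketch of a ResourceQuota built as a Python dictionary and printed as JSON for `kubectl apply -f -`. The namespace `team-a` (which must already exist), the quota name, and the amounts are illustrative assumptions. Once a quota covers CPU or memory requests and limits, new pods in that namespace must declare them (or receive defaults from a LimitRange), otherwise their creation can be rejected.

```python
import json


def resource_quota(namespace: str, cpu: str, memory: str, max_pods: int) -> dict:
    """ResourceQuota capping the totals for one namespace (values are examples)."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,         # sum of CPU requests across pods
                "requests.memory": memory,   # sum of memory requests
                "limits.cpu": cpu,           # sum of CPU limits (same cap, for simplicity)
                "limits.memory": memory,
                "pods": str(max_pods),       # maximum number of pods
            }
        },
    }


if __name__ == "__main__":
    # python quota.py | kubectl apply -f -
    print(json.dumps(resource_quota("team-a", "2", "4Gi", 10), indent=2))
```
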

Scaling and High Availability with Kubernetes

This section covers two crucial aspects of running applications on Kubernetes: scaling and high availability. Scalability means adapting your application’s capacity to fluctuating workloads, and Kubernetes can adjust the number of running application instances automatically based on demand. This dynamic scaling is handled by the Horizontal Pod Autoscaler (HPA), which watches metrics such as CPU or memory utilization and increases or decreases the number of pods to keep utilization near a target you define. Configuring an HPA involves choosing the metrics to scale on, the target utilization, and the minimum and maximum replica counts. Getting these settings right lets your application handle varying user traffic and resource demands efficiently, which makes effective scaling a cornerstone of a reliable and cost-effective deployment strategy.
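
The Kubernetes documentation describes the core HPA calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping any change when the ratio is within a tolerance of 1.0 (0.1 by default). The function below reproduces just that formula plus min/max clamping; the real controller also handles unready pods, missing metrics, and a scale-down stabilization window, which this sketch ignores.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA step: scale by the ratio of observed to target utilization."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave it alone
    else:
        desired = math.ceil(current_replicas * ratio)
    # The HPA never goes outside its configured bounds
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 pods averaging 90% CPU against a 60% target -> ceil(3 * 1.5) = 5
    print(desired_replicas(3, 90, 60))
```

Rounding up rather than to the nearest integer is the conservative choice: when in doubt the autoscaler adds capacity instead of leaving pods overloaded.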

High availability focuses on keeping an application running even when hardware or software fails. Kubernetes supports this through ReplicaSets (the successor to the older ReplicationController) and Deployments. A ReplicaSet maintains a specified number of pod replicas, so if one pod fails, another automatically replaces it. A Deployment manages ReplicaSets and adds features such as rolling updates, which upgrade an application gradually with minimal downtime. Configuring these features well minimizes disruption during updates and keeps the application resilient when components fail. Understanding how ReplicaSets and Deployments work is fundamental to building robust, fault-tolerant applications on Kubernetes, and together with scaling, it forms the core of running applications reliably in practice.
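
How gradual a rolling update is depends on two Deployment settings, `maxSurge` and `maxUnavailable`, both 25% by default. The sketch below turns them into concrete pod counts: a percentage for `maxSurge` is rounded up and one for `maxUnavailable` rounded down, as the Kubernetes documentation specifies. It is a calculator for intuition, not the Deployment controller itself, and it ignores edge cases such as both values resolving to zero.

```python
from __future__ import annotations

import math


def _to_count(value: int | str, replicas: int, round_up: bool) -> int:
    """Resolve an absolute count or a percentage string like '25%'."""
    if isinstance(value, str) and value.endswith("%"):
        exact = replicas * int(value[:-1]) / 100
        return math.ceil(exact) if round_up else math.floor(exact)
    return int(value)


def rolling_update_bounds(replicas: int, max_surge: int | str = "25%",
                          max_unavailable: int | str = "25%") -> tuple[int, int]:
    """Return (max pods that may exist, min pods that must stay available)."""
    surge = _to_count(max_surge, replicas, round_up=True)               # percentages round up
    unavailable = _to_count(max_unavailable, replicas, round_up=False)  # round down
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    # Defaults with 4 replicas: up to 5 pods during the rollout, at least 3 available
    print(rolling_update_bounds(4))
```
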

Strategies for ensuring high availability extend beyond basic replication. Load balancing distributes traffic across multiple pods so that no single instance is overloaded; a Kubernetes Service does this across the pods it selects. Health checks let Kubernetes detect and replace unhealthy pods promptly. For applications that require persistent storage, StatefulSets give each pod a stable, unique identity and, through its own persistent volume claim, storage that survives restarts and rescheduling, which is crucial for databases and other stateful applications. Combining these techniques produces a resilient, highly available system suited to real-world deployment scenarios.
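
Health checks are configured per container as probes. The sketch below adds an HTTP readiness probe (the pod receives traffic only while it passes) and a liveness probe (repeated failures restart the container) to the tutorial’s Flask container. It probes `/` on port 5000 because that is the only route the example app defines; a dedicated health endpoint would be the usual choice, and the timing values are illustrative assumptions.

```python
import json


def probed_container(name: str, image: str, port: int = 5000, path: str = "/") -> dict:
    """Container spec with HTTP readiness and liveness probes (timings are examples)."""
    return {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Readiness: the pod receives Service traffic only while this succeeds
        "readinessProbe": {
            "httpGet": {"path": path, "port": port},
            "initialDelaySeconds": 5,
            "periodSeconds": 10,
        },
        # Liveness: after failureThreshold consecutive failures, restart the container
        "livenessProbe": {
            "httpGet": {"path": path, "port": port},
            "initialDelaySeconds": 15,
            "periodSeconds": 20,
            "failureThreshold": 3,
        },
    }


if __name__ == "__main__":
    # Drop this into a Deployment's spec.template.spec.containers list
    print(json.dumps(probed_container("my-python-app", "my-python-app"), indent=2))
```
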

Securing Your Kubernetes Cluster and Applications

Securing a Kubernetes deployment is paramount. This section details essential security practices for protecting your Kubernetes cluster and the applications running within it. Strong security maintains data integrity, preserves confidentiality, and prevents unauthorized access, and a layered approach that combines multiple security mechanisms provides the most effective defense. Network policies play a vital role by giving fine-grained control over network traffic between pods. By defining specific rules, administrators can restrict communication to authorized pods only, significantly reducing the attack surface. This is especially critical in multi-tenant environments, where isolating applications helps prevent data breaches and unauthorized access. A well-defined network policy acts as a firewall for your pods, strengthening the overall security posture of your deployment.
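
As a sketch, the NetworkPolicy below allows inbound traffic to the `app: my-python-app` pods only from pods labeled `role: frontend` in the same namespace, and only on TCP port 5000; once a pod is selected by a policy with `Ingress` in its `policyTypes`, any other inbound traffic to it is denied. The label names are assumptions. Policies are enforced only if the cluster’s network plugin supports them (Calico and Cilium do, for example), so applying one on a cluster without such a plugin has no effect.

```python
import json


def allow_ingress_from(app: str, client_labels: dict, port: int,
                       namespace: str = "default") -> dict:
    """NetworkPolicy: only pods with client_labels may reach `app` on `port`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{app}-allow-clients", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": app}},  # the pods being protected
            "policyTypes": ["Ingress"],                    # other inbound traffic is denied
            "ingress": [{
                # Allowed sources: pods in the same namespace with these labels...
                "from": [{"podSelector": {"matchLabels": client_labels}}],
                # ...and only to this TCP port
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    policy = allow_ingress_from("my-python-app", {"role": "frontend"}, 5000)
    print(json.dumps(policy, indent=2))  # pipe into: kubectl apply -f -
```
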

Role-Based Access Control (RBAC) is another cornerstone of Kubernetes security. RBAC provides granular control over access to cluster resources, so that users and services have only the permissions they need for their tasks. Instead of granting broad access to all cluster resources, RBAC lets you define roles with specific permissions and bind them to users or service accounts, limiting the potential damage from compromised accounts or malicious actors. This principle of least privilege significantly reduces the risk of unauthorized actions and strengthens the security of your cluster. By carefully assigning roles and permissions, administrators can control access to sensitive data and critical cluster components. Effective RBAC requires a clear understanding of the roles and responsibilities within your organization and of the applications being deployed.
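
A least-privilege sketch: the code below builds a Role that can only read pods and their logs in one namespace, and a RoleBinding that grants it to a service account. The role name `pod-reader` and the service account `monitoring` are hypothetical; the account would be created separately, for example with `kubectl create serviceaccount monitoring`.

```python
import json

RBAC_API = "rbac.authorization.k8s.io/v1"


def pod_reader_role(namespace: str) -> dict:
    """Role that can read pods and their logs, nothing else."""
    return {
        "apiVersion": RBAC_API,
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [{
            "apiGroups": [""],                  # "" is the core API group
            "resources": ["pods", "pods/log"],
            "verbs": ["get", "list", "watch"],  # read-only: no create/update/delete
        }],
    }


def bind_to_service_account(role: dict, account: str) -> dict:
    """RoleBinding granting `role` to a service account in the role's namespace."""
    namespace = role["metadata"]["namespace"]
    return {
        "apiVersion": RBAC_API,
        "kind": "RoleBinding",
        "metadata": {"name": f"{role['metadata']['name']}-{account}", "namespace": namespace},
        "subjects": [{"kind": "ServiceAccount", "name": account, "namespace": namespace}],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": role["metadata"]["name"],
        },
    }


if __name__ == "__main__":
    role = pod_reader_role("default")
    objects = [role, bind_to_service_account(role, "monitoring")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": objects}, indent=2))
```

After applying both objects, `kubectl auth can-i list pods --as=system:serviceaccount:default:monitoring -n default` should answer `yes`, while the same check for `delete pods` should answer `no` unless another binding grants it.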

Beyond network policies and RBAC, securing your cluster also means using secure images. Pulling images from reputable sources and scanning them regularly for vulnerabilities helps keep malware and known exploits out of your workloads. Keeping the Kubernetes components themselves patched is equally vital, since regular updates close known vulnerabilities and make the cluster more resilient to attacks. A robust security strategy combines proactive measures, such as regular security audits and vulnerability scans, with a reactive process that promptly addresses identified threats. This multifaceted approach keeps the operating environment secure and reliable.
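
One concrete habit that supports image scanning is referencing images precisely, so that the image you scanned is the image that runs. The hypothetical check below parses an image reference, warns about untagged or `latest` images, and notes that version tags, unlike `@sha256:` digests, can be re-pointed to different content. It illustrates the policy only; it is not a vulnerability scanner.

```python
from __future__ import annotations


def parse_image(ref: str) -> tuple[str, str | None, str | None]:
    """Split an image reference 'repo[:tag][@digest]' into (repo, tag, digest)."""
    name, _, digest = ref.partition("@")
    # A colon after the last slash starts a tag; one before it is a registry port
    slash, colon = name.rfind("/"), name.rfind(":")
    if colon > slash:
        return name[:colon], name[colon + 1:], digest or None
    return name, None, digest or None


def image_warnings(ref: str) -> list[str]:
    """Hypothetical policy check: prefer digests, never rely on 'latest'."""
    _, tag, digest = parse_image(ref)
    if digest:
        return []  # pinned to immutable content
    if tag in (None, "latest"):
        return [f"{ref}: implicit or explicit 'latest' tag; pin a version"]
    return [f"{ref}: tag '{tag}' can be re-pointed; consider pinning by digest"]


if __name__ == "__main__":
    for image in ["my-python-app", "python:3.9-slim-buster", "python@sha256:abc123"]:
        print(image, image_warnings(image))
```
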

Advanced Kubernetes Concepts and Best Practices

Having covered the fundamentals of Docker and Kubernetes, this section briefly explores more advanced concepts. StatefulSets, unlike Deployments, manage stateful applications that need persistent storage and a unique identity for each pod, which is crucial for databases and other applications that rely on persistent data. Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) provide storage for pods: a PV is a piece of storage in the cluster, a PVC is a request for that storage, and with a StorageClass, volumes can be provisioned dynamically. Because the data lives outside the pod, it survives even when pods are rescheduled or replaced. Ingress controllers act as reverse proxies that provide external access to services running inside the cluster, routing traffic according to rules defined in Ingress resources. This is especially relevant for applications exposed to the internet. Mastering these concepts lets you manage scale and complexity as needed, even in high-traffic scenarios, and extends the core deployment and management principles covered so far into a scalable, resilient system that shows the power of combining Docker and Kubernetes.
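
To illustrate, the sketch below builds an Ingress that routes all paths on one host to the `my-python-app` Service, plus a PersistentVolumeClaim requesting 1 GiB of storage. The host name, sizes, object names, and the assumption that the Service listens on port 80 are illustrative. An Ingress only takes effect if an Ingress controller is running in the cluster (on Minikube, for example, via `minikube addons enable ingress`), and a claim without `storageClassName` relies on the cluster’s default StorageClass, if one exists.

```python
import json


def ingress(host: str, service: str, port: int = 80) -> dict:
    """Ingress sending every path on `host` to one Service (names are examples)."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": f"{service}-ingress"},
        "spec": {
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",  # match "/" and everything below it
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


def volume_claim(name: str, size: str = "1Gi") -> dict:
    """PersistentVolumeClaim; with no storageClassName the default class is used."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],  # read-write by a single node at a time
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    items = [ingress("app.example.com", "my-python-app"), volume_claim("app-data")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```
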

Efficient resource utilization is paramount in Kubernetes. Resource requests and limits allow better resource allocation and avoid contention between pods. Proper monitoring and logging are essential for identifying and resolving issues quickly: monitoring enables proactive adjustments that keep applications stable and performant, an aspect often overlooked in simpler guides, while robust logging helps debug problems that arise in production. Together they are a key step toward operational excellence. Security, stressed throughout this tutorial, also demands continuous attention: image scanning, regular updates, and network policies together provide a strong security posture for your applications and the underlying infrastructure, reducing exposure to vulnerabilities.

The synergy between Docker and Kubernetes significantly improves how applications are deployed, scaled, and managed. This tutorial has provided a solid foundation for building and deploying containerized applications, managing them at scale, and building highly available, resilient systems. By combining the portability and efficiency of Docker with the orchestration power of Kubernetes, you can create robust, scalable applications ready for deployment in dynamic environments. The concepts and best practices covered here will serve you well as you continue to master containerization and orchestration. Continuous learning and exploration of advanced features are vital to staying current in this rapidly evolving technology landscape.