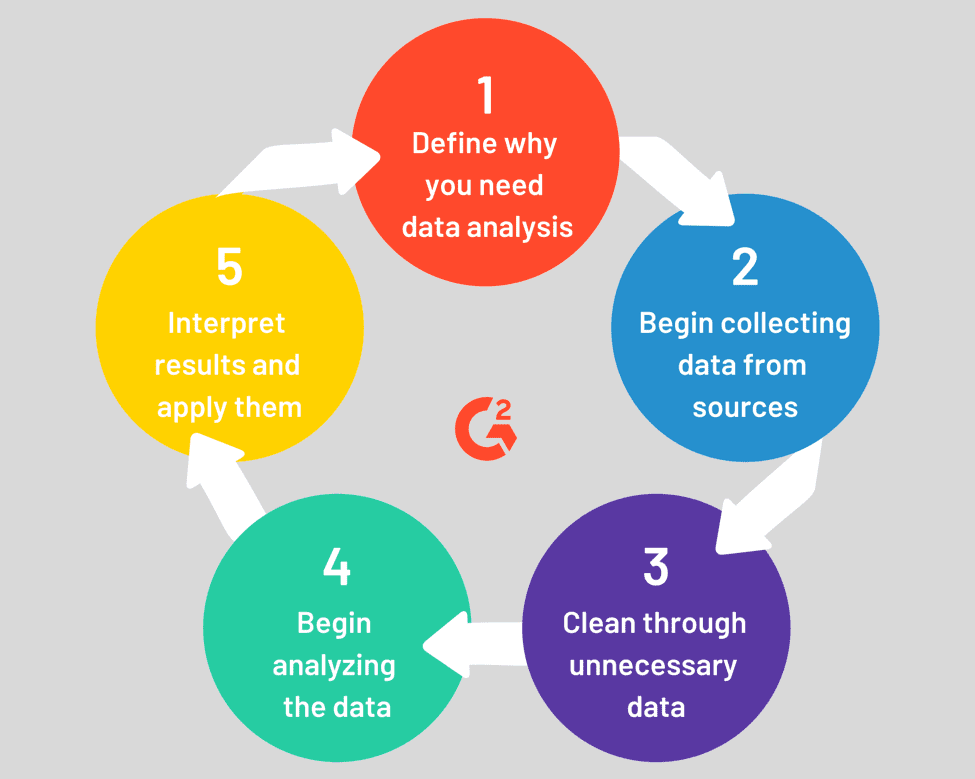

Understanding the Landscape of Data Analysis

Data analysis, at its core, involves examining raw information to extract meaningful insights. It is a process of transforming seemingly random numbers and facts into understandable patterns and trends. Think of it as a detective’s work, where the data are the clues and the analysis is the investigation. This investigative approach is not limited to a specific field. Businesses use data analysis to understand customer behavior, optimize operations, and make strategic decisions. In science, researchers use the same techniques to identify patterns in experimental data, validate theories, and advance our understanding of the world. The data encountered can be quite diverse, ranging from structured data, such as information stored in spreadsheets, to unstructured data, such as text documents or images, which require more sophisticated methods. These basic skills are increasingly valuable as the world generates more and more data, because analysis is what converts large volumes of information into actionable knowledge that supports problem-solving and decision-making.

The basics of data analysis matter because they illuminate trends and correlations that are not immediately obvious. Whether you are trying to predict future sales, identify a disease outbreak, or measure the effectiveness of a new marketing campaign, data analysis provides the necessary tools and techniques. In finance, it can help identify market trends and inform investment decisions. In healthcare, it can contribute to improved patient care by identifying risk factors and treatment patterns. In the social sciences, researchers use it to study human behavior and inform social policy. The value of data analysis is its capacity to transform raw information into meaningful intelligence, and the basics serve as the foundation for everything more advanced.

Essential Data Analysis Tools and Techniques

Data analysis requires familiarity with a range of tools and techniques. At the foundational level, spreadsheets such as Microsoft Excel and Google Sheets are indispensable. They offer a user-friendly interface for handling data and support basic manipulations like sorting, filtering, and calculating aggregates. Their visualization capabilities, although simple, provide an initial glimpse into patterns and trends. As analysis tasks grow more complex, statistical packages such as SPSS and programming languages such as R and Python become increasingly significant. These tools offer a comprehensive suite of statistical functions, advanced analytical methods, and powerful visualization options. Whatever the tool, data preparation comes first: cleaning, transforming, and systematically organizing the data. These preparatory steps are key to ensuring that the analysis is reliable and the insights are robust.
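The three basic manipulations mentioned above (sorting, filtering, and aggregating) look much the same in code as they do in a spreadsheet. The following is a minimal sketch in plain Python; the records, field names, and values are made up for illustration and do not come from any real dataset.

```python
# Hypothetical sales records; the field names and values are illustrative only.
rows = [
    {"region": "North", "units": 12},
    {"region": "South", "units": 7},
    {"region": "North", "units": 5},
    {"region": "East", "units": 9},
]

# Sort: largest orders first (like sorting a column in descending order).
by_units = sorted(rows, key=lambda r: r["units"], reverse=True)

# Filter: keep only the rows for one region (like a column filter).
north_only = [r for r in rows if r["region"] == "North"]

# Aggregate: total units per region (like a SUMIF or a pivot table).
totals = {}
for r in rows:
    totals[r["region"]] = totals.get(r["region"], 0) + r["units"]

print(by_units[0])
print(len(north_only))
print(totals)
```

Each step leaves the original `rows` list untouched, which makes it easy to compare the raw data with each derived view.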

Exploring the basics of data analysis should include understanding how these tools fit into the process. The ability to manipulate data in spreadsheets is often the first encounter with data wrangling. These programs are designed for non-coders, enabling individuals to perform simple yet vital operations, such as identifying data types, merging data from various sources, and creating preliminary visualizations. While spreadsheet software is great for smaller data sets, analysis of larger datasets usually necessitates the use of more powerful tools like R or Python. R is known for its statistical prowess, providing access to a rich library of specialized statistical routines. Python, on the other hand, offers a versatile environment for data analysis, machine learning, and general-purpose programming. Regardless of the specific tool, the core techniques of data cleaning, transforming, and organizing remain consistent, and their mastery is essential for effective data analysis. For instance, cleaning could involve removing duplicated entries, correcting inconsistencies in the data, and properly formatting it. Transformation involves changing the scale or structure of data using mathematical or other logical functions, such as using logarithms to stabilize variability or creating categories based on ranges of data. The emphasis here is on the general concepts, with the aim of sparking the reader’s curiosity and motivation to further investigate these powerful tools and methods.
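As a rough illustration of the cleaning and transformation steps just described, the sketch below removes a duplicated entry, applies a base-10 logarithm, and assigns categories by range. The records, the cut-off values (50 and 500), and the `size_band` helper are assumptions chosen for the example, not recommended thresholds.

```python
import math

# Hypothetical (order id, amount) records; the duplicate entry is deliberate.
records = [("A1", 10.0), ("A2", 1000.0), ("A1", 10.0), ("A3", 100.0)]

# Cleaning: drop exact duplicates while keeping first-seen order.
unique_records = list(dict.fromkeys(records))

# Transformation 1: a logarithm compresses values that span several
# orders of magnitude onto a more comparable scale.
log_amounts = [math.log10(amount) for _, amount in unique_records]

# Transformation 2: turn a numeric value into a category based on ranges.
# The cut-offs below are arbitrary and only for illustration.
def size_band(amount):
    if amount < 50:
        return "small"
    if amount < 500:
        return "medium"
    return "large"

bands = [size_band(amount) for _, amount in unique_records]

print(unique_records)
print(log_amounts)
print(bands)
```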

The basics of data analysis also involve data organization: organizing data efficiently sets the stage for streamlined analysis and effective decision making. Whether you’re using spreadsheets or more powerful platforms, employing a systematic approach to organizing your data is critical for accurate outcomes. Key concepts in this process include understanding the importance of column headers for easy identification of variables, maintaining data consistency, and utilizing database techniques to handle large amounts of data. The value of well-organized data cannot be overstated. When datasets are structured logically, they can be easily accessed, manipulated, and analyzed. Therefore, comprehending the basics of data analysis is not just about mastering software, but also embracing practices that encourage the seamless interaction between data and insight. The journey starts with the right preparation, laying a solid foundation for impactful discoveries ahead.

How to Clean and Prepare Your Data for Analysis

Data cleaning forms a crucial part of the basics of data analysis, laying the groundwork for accurate and meaningful insights. Before diving into sophisticated analysis techniques, it’s essential to address common data issues that can skew results. Missing values are frequently encountered; these can arise from various reasons, including incomplete data entry or malfunctioning equipment. One approach to handling missing data is deletion, removing rows or columns containing missing values. However, this method can lead to a loss of valuable information, particularly if a significant portion of the data is missing. A more sophisticated approach is imputation, where missing values are replaced with estimated values based on the available data. Simple imputation methods include replacing missing values with the mean, median, or mode of the respective column. More advanced techniques, not covered in this beginner’s guide to the basics of data analysis, involve using statistical modeling to predict missing values.
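The two approaches above, deletion and simple imputation, can be sketched with Python’s standard `statistics` module. The `ages` column is hypothetical, and `None` is used here as the marker for a missing value; real datasets may mark gaps differently.

```python
import statistics

# Hypothetical column with gaps; None marks a missing value.
ages = [34, None, 29, 41, None, 29]

# Deletion: keep only the entries that actually have a value.
observed = [a for a in ages if a is not None]

# Simple imputation: compute a fill value from the observed entries.
fill_mean = statistics.mean(observed)
fill_median = statistics.median(observed)
fill_mode = statistics.mode(observed)

# Replace each missing entry with the chosen fill value (median here).
imputed = [fill_median if a is None else a for a in ages]

print(len(ages) - len(observed), "values were missing")
print(fill_mean, fill_median, fill_mode)
print(imputed)
```

Deletion shrinks the column from six entries to four, while imputation keeps all six at the cost of inserting estimated values, which is the trade-off the paragraph describes.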

Another common challenge in the basics of data analysis is the presence of outliers. Outliers are data points that significantly deviate from the rest of the data. These values can disproportionately influence the results of statistical analyses and may indicate errors in data collection or recording. Identifying outliers often involves visual inspection of data through scatter plots or box plots, enabling the detection of points far removed from the central tendency. Dealing with outliers requires careful consideration; sometimes, outliers are genuine data points representing extreme values, while at other times they reflect errors needing correction or removal. The decision to retain, correct, or remove outliers should depend on the context of the data and the analysis goals. Understanding the basics of data analysis necessitates careful handling of outliers to avoid drawing incorrect conclusions.
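Visual inspection can be complemented by a numeric check. One common convention, which is also how many box plots draw their whiskers, flags points lying more than 1.5 times the interquartile range beyond the first or third quartile. The sketch below assumes that rule; the multiplier `k`, the `iqr_outliers` helper, and the sample readings are illustrative choices rather than a universal standard, and a flagged value still needs the contextual judgment described above.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical sensor readings with one suspicious entry.
readings = [10, 12, 11, 13, 12, 11, 95]

print(iqr_outliers(readings))
```

The function only reports candidates; whether to retain, correct, or remove them remains a decision for the analyst.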

Inconsistencies in data formatting are another frequent problem encountered in the basics of data analysis. This might involve inconsistent date formats, differing units of measurement, or variations in spelling or capitalization. Addressing these inconsistencies is vital for accurate analysis. Data cleaning in spreadsheets involves techniques like using built-in functions to standardize date formats, converting units to a consistent scale, and employing find-and-replace functions to correct spelling errors. Careful attention to these seemingly small details in the data cleaning stage ensures the reliability of subsequent analyses. Effective data cleaning, a fundamental aspect of the basics of data analysis, contributes significantly to the accuracy and credibility of the insights derived from the data. It empowers analysts to focus on uncovering meaningful patterns and drawing valid conclusions based on reliable information.
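The same standardization can be scripted. The sketch below normalizes dates to the ISO `YYYY-MM-DD` form and tidies spacing and capitalization in text labels. The list of accepted formats is an assumption: in particular, it treats `05/03/2024` as day/month/year, which would be wrong for data recorded month-first, so the format list must match how the data was actually entered.

```python
from datetime import datetime

# Assumed input formats; adjust to match how the data was really recorded.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")

def to_iso_date(text):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None  # leave unparseable values for manual review

def normalize_label(text):
    """Collapse repeated whitespace and apply consistent capitalization."""
    return " ".join(text.split()).title()

print(to_iso_date("05/03/2024"))
print(to_iso_date("5.3.2024"))
print(normalize_label("  new   york "))
```

Returning `None` for unrecognized values, rather than guessing, keeps questionable entries visible so they can be checked by hand.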

Exploring Data Through Visualization: A Key Element of the Basics of Data Analysis

Data visualization is crucial in the basics of data analysis because it transforms complex datasets into easily understandable visual representations. This allows analysts to quickly identify patterns, trends, and outliers that might be missed when examining raw data. Effective visualization techniques are essential for communicating findings to both technical and non-technical audiences. Different chart types serve distinct purposes; choosing the right visualization is paramount for clear communication. For instance, bar charts effectively compare categories, while pie charts illustrate proportions of a whole. Line charts track changes over time, making them ideal for displaying trends. Scatter plots reveal relationships between two variables, highlighting correlations or lack thereof. Histograms effectively show the distribution of a single variable, revealing its central tendency and spread. Mastering these basic visualization techniques forms an integral part of the basics of data analysis.

Consider a dataset showing sales figures for different product categories over a year. A bar chart could clearly display the sales of each category, allowing for easy comparison. To show the proportion of sales contributed by each category, a pie chart would be more suitable. If the goal is to see how sales for a specific product changed over time, a line chart would be the best choice. To explore the relationship between advertising spending and sales, a scatter plot would be ideal, revealing any correlation. Finally, a histogram could show the distribution of sales values, revealing whether sales are clustered around a specific value or are more evenly spread. Understanding how to select and interpret these visualizations is critical for drawing sound conclusions from your data. Effective data visualization is not just about creating charts; it is about communicating information clearly and efficiently, and selecting the right chart type for the data and the audience is a key skill.
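Whatever charting tool is used, each chart type is drawn from a particular summary of the data. The sketch below computes three such summaries for a made-up sales list: per-category totals (what a bar chart displays), each category’s share of the whole (a pie chart), and counts per value range (a histogram). The data and the 25-unit bin width are assumptions, and the final loop prints only a crude text rendering rather than a real chart.

```python
from collections import Counter

# Hypothetical (category, sale amount) pairs.
sales = [("Books", 20), ("Toys", 35), ("Books", 30),
         ("Games", 15), ("Toys", 25), ("Games", 75)]

# Bar chart input: one total per category.
totals = Counter()
for category, amount in sales:
    totals[category] += amount

# Pie chart input: each category's share of the overall total.
grand_total = sum(totals.values())
shares = {c: t / grand_total for c, t in totals.items()}

# Histogram input: how many individual sales fall in each 25-unit bin,
# keyed by the lower edge of the bin.
bin_width = 25
bins = Counter((amount // bin_width) * bin_width for _, amount in sales)

# Crude text bar chart: one '#' per 5 units sold.
for category, total in totals.items():
    print(f"{category:<6} {'#' * (total // 5)} {total}")
```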

The power of data visualization lies in its ability to reveal hidden insights and facilitate effective communication. By mastering the basics of data analysis through visualization, one can uncover compelling narratives within data, making complex information accessible and understandable. Understanding the nuances of each chart type and selecting the most appropriate visualization are critical steps in extracting meaningful conclusions. The application of appropriate visual tools in the basics of data analysis unlocks the potential of data, transforming raw numbers into compelling stories that resonate with audiences. This ability to translate data into visual narratives is a valuable skill, contributing significantly to effective decision-making across many disciplines.

Descriptive Statistics: Unveiling Key Insights in the Basics of Data Analysis

Descriptive statistics are fundamental to the basics of data analysis, providing a concise summary of key features within a dataset. They help to paint a picture of the data’s central tendency, variability, and shape, without making inferences about a larger population. Understanding these measures is crucial for interpreting data effectively. Central tendency is typically described using the mean, median, and mode. The mean represents the average value, calculated by summing all values and dividing by the number of observations. The median represents the middle value when data is ordered, providing a robust measure less sensitive to extreme values than the mean. The mode identifies the most frequently occurring value within the dataset. These measures are particularly useful when exploring the basics of data analysis for a single variable.
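The three measures are available in Python’s standard `statistics` module. In this small sketch the values are made up, and the final entry is deliberately extreme to show how differently the mean and the median react to it.

```python
import statistics

# Hypothetical values; 90 is a deliberately extreme entry.
values = [2, 3, 3, 4, 5, 90]

mean_value = statistics.mean(values)      # sum of values / number of values
median_value = statistics.median(values)  # middle of the sorted values
mode_value = statistics.mode(values)      # most frequent value

print(mean_value, median_value, mode_value)
```

Here the single extreme value pulls the mean well above every other observation, while the median stays among the typical values, which is the robustness described above.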

Beyond central tendency, understanding the variability or spread of data is equally important. The range, a simple measure of spread, is the difference between the maximum and minimum values. A more comprehensive measure is the standard deviation, which summarizes how far data points typically lie from the mean; formally, it is the square root of the average squared deviation from the mean. A larger standard deviation indicates greater variability, while a smaller one suggests that data points are clustered closely around the mean. Considering both central tendency and variability gives a more complete picture of a distribution, and visualizing the data with a histogram can make that picture easier to read.
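To make the calculation concrete, the sketch below computes the range and the standard deviation step by step for a small made-up list, then compares the result with the standard library. It uses the population form (dividing by the number of values); the sample form, `statistics.stdev`, divides by one less and is therefore slightly larger.

```python
import math
import statistics

# Hypothetical values chosen so the arithmetic is easy to follow.
values = [2, 4, 4, 4, 5, 5, 7, 9]

# Range: maximum minus minimum.
value_range = max(values) - min(values)

# Standard deviation, step by step (population form).
mean_value = statistics.mean(values)
squared_deviations = [(v - mean_value) ** 2 for v in values]
variance = sum(squared_deviations) / len(values)
std_dev = math.sqrt(variance)

print(value_range, mean_value, variance, std_dev)
print(statistics.pstdev(values))  # library equivalent of std_dev
print(statistics.stdev(values))   # sample form, divides by n - 1
```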

The application of descriptive statistics in the basics of data analysis extends to understanding data distribution shapes. Symmetrical distributions, where data is evenly spread around the mean, are characterized by similar mean and median values. Skewed distributions, however, show an uneven spread, with the mean being pulled towards the tail of the distribution. A positive skew indicates a long tail to the right, while a negative skew shows a long tail to the left. Recognizing these distribution patterns is vital in the basics of data analysis, guiding choices for further analysis and interpretation. Understanding these descriptive statistics provides a solid foundation for moving to more complex analytical techniques and further strengthens one’s comprehension of the basics of data analysis.
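One way to put a number on skew is the average cubed standardized deviation, which is positive for a long right tail, negative for a long left tail, and zero for a perfectly symmetrical dataset. The sketch below assumes that moment-based definition (other definitions exist), and both the `skewness` helper and the datasets are illustrative.

```python
import statistics

def skewness(values):
    """Moment-based skewness: mean of cubed standardized deviations."""
    m = statistics.mean(values)
    sd = statistics.pstdev(values)
    return sum(((v - m) / sd) ** 3 for v in values) / len(values)

# Hypothetical datasets: one with a long right tail, one symmetrical.
right_tailed = [1, 2, 2, 3, 3, 3, 4, 20]
symmetric = [1, 2, 3, 4, 5]

print(statistics.mean(right_tailed), statistics.median(right_tailed))
print(skewness(right_tailed))
print(skewness(symmetric))
```

In the right-tailed list the mean sits above the median, matching the description of the mean being pulled towards the tail.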

Inferential Statistics: Drawing Conclusions from Data

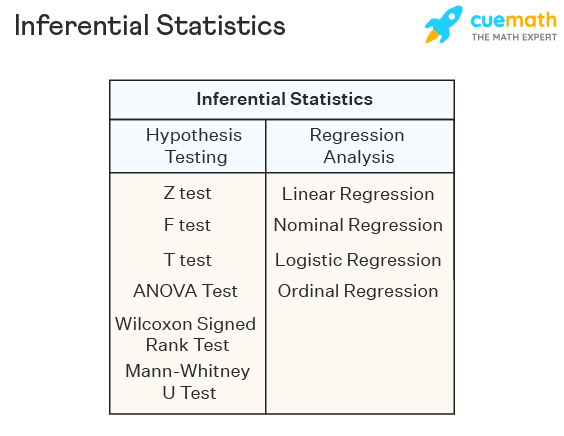

Inferential statistics builds upon the basics of data analysis by enabling generalizations about a larger population based on the analysis of a smaller sample. Unlike descriptive statistics, which merely summarize data, inferential statistics allows us to draw conclusions and make predictions about the broader context from which the sample was taken. This is crucial because it’s often impractical or impossible to collect data from every single member of a population. Imagine trying to survey every single registered voter in a country – the task is monumental. Instead, researchers employ inferential statistics to analyze data from a representative sample and then extend those findings to the entire population, with an understanding of the inherent uncertainty involved.

A core concept in inferential statistics is hypothesis testing. This involves formulating a testable statement (a hypothesis) about a population and then using sample data to determine whether there is enough evidence to support or reject that hypothesis. For example, a researcher might hypothesize that a new drug is more effective than an existing one. By analyzing data from a clinical trial (the sample), they can use inferential statistical tests to determine whether the observed difference in effectiveness between the two drugs is statistically significant or could plausibly be due to random chance. Similarly, confidence intervals provide a range of values within which the true population parameter is likely to fall, with a specified level of confidence. For instance, a 95% confidence interval for the average height of women might be 5’4″ to 5’6″. Loosely, one can be 95% confident that the true average falls within this range; more precisely, intervals built by the same procedure would contain the true average in about 95% of repeated samples. Understanding these fundamental concepts is paramount for anyone seeking a deeper understanding of data analysis.
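Both ideas can be sketched with the standard library. The first function below builds a confidence interval for a mean using a normal approximation, which is an assumption that suits larger samples (for small samples a t-distribution is usually preferred). The second runs an exact permutation test, one simple kind of hypothesis test that asks how often a difference in group means at least as large as the observed one would arise if the group labels were assigned at random. Function names and data are hypothetical.

```python
import itertools
import math
import statistics

def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the population mean."""
    mean = statistics.mean(sample)
    std_error = statistics.stdev(sample) / math.sqrt(len(sample))
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * std_error, mean + z * std_error

def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test on the difference in means."""
    pooled = list(group_a) + list(group_b)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    extreme = total = 0
    # Try every way of relabeling the pooled values into two groups.
    for idx in itertools.combinations(range(len(pooled)), len(group_a)):
        chosen = set(idx)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Small tolerance guards against floating-point rounding.
        if abs(statistics.mean(a) - statistics.mean(b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total

# Hypothetical measurements, for illustration only.
heights = [64.0, 65.0, 66.0, 65.0, 65.0]
print(mean_confidence_interval(heights))
print(permutation_p_value([1, 2, 3, 4], [5, 6, 7, 8]))
```

A small p-value means the observed difference would be unusual under random labeling. Because the permutation test enumerates every relabeling, it is only practical for small groups; larger datasets typically use random resampling or a standard parametric test instead.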

While mastering the complex mathematical formulas behind inferential statistics might require specialized training, grasping the core principles of hypothesis testing and confidence intervals empowers individuals to critically evaluate research findings and make more informed decisions based on data. This understanding is vital for interpreting results in various fields, from healthcare to market research, enabling informed decision-making. The basics of data analysis, including both descriptive and inferential methods, are interwoven, offering a complete picture of how to extract meaning and insights from raw data, ultimately facilitating evidence-based decision-making across many disciplines. This ability to interpret data and to communicate these interpretations effectively is a crucial skill in today’s data-driven world.

Interpreting Your Findings and Communicating Results

The ability to interpret findings and communicate results effectively is a cornerstone of data analysis. Data, on its own, rarely speaks to those unfamiliar with statistical jargon or the nuances of analytical tools. The true power of data analysis lies in transforming raw figures and complex calculations into clear, actionable insights. It is not sufficient to present a collection of charts or tables; one must craft a narrative that translates technical findings into plain language for the intended audience. Effective communication begins with a clear understanding of who the audience is and what they need to know. A technical presentation delivered to a team of fellow analysts will differ markedly from one given to stakeholders or the general public. This requires focusing on the ‘so what?’ of the data: the implications, recommendations, and action items that will drive decisions. Translating complex insights into an understandable format also promotes a data-driven culture within a team or organization. Visuals need careful consideration too, with the goal of highlighting key findings without confusing or overwhelming the audience. Every chart, every graph, and every data point must serve a purpose in the larger story.

Crafting a compelling data story requires connecting individual findings to a broader context. Avoid presenting isolated statistics and aim for a cohesive narrative that illustrates the implications of the findings. This involves framing data in a way that resonates with the audience’s experience and goals. For instance, instead of simply stating that ‘sales increased by 15%’, explain what factors contributed to this growth, what impact is expected on profitability, and what actions should be considered in the next period. Data analysis extends far beyond calculations; it includes the ability to see the narrative embedded within the numbers and tell a story that others can easily grasp. This is essential because data-driven decisions rely heavily on the clarity with which insights are delivered. Communicating data effectively not only clarifies one’s findings but also builds trust and confidence in the analysis process. Ultimately, the goal is to enable the audience not only to understand the data but also to act on the insights, whether that means making strategic decisions, driving improvement, or addressing business problems. Clear, concise, and relevant communication is therefore an essential component of the data analysis lifecycle.

The Journey Continues: Mastering the Basics of Data Analysis

Embarking on the path of data analysis is an investment in a skill that will serve you well across numerous disciplines. The power to transform raw information into meaningful insights is invaluable in today’s data-rich world. Having grasped the fundamental concepts and techniques discussed, the next step involves consistent practice and exploration. The basics of data analysis form the bedrock for more advanced methods, making it essential to continually hone these initial skills. For instance, regularly working with spreadsheet software to clean and visualize data will sharpen your abilities, solidifying your understanding of how different data treatments can affect outcomes. Likewise, experimenting with various types of charts and graphs can expand your visual analysis skills. Remember, each dataset presents a unique challenge and opportunity to deepen your grasp of data analysis basics. Embrace these challenges, and you will be well on your way to mastering not just the tools, but the critical thinking required to unlock the hidden stories within the data.

The field of data analysis is in constant evolution, with new tools and techniques emerging frequently. Staying current with these advancements can give you an edge in your career or personal endeavors. Consider exploring open-source platforms for data manipulation and visualization, which could expand your toolkit and introduce you to new concepts. Learning to program in a language such as Python or R can open doors to more sophisticated techniques like machine learning. The fundamental principles of data cleaning, descriptive statistics, and data visualization will, however, remain constant anchors through any advanced learning. These basics transcend specific tools, and mastering the core concepts will enable you to adapt to new software and frameworks quickly. The capacity to analyze data effectively has never been more valuable, and it opens up a wide array of career paths. Whether you aim to become a data scientist or analyst, or to use data to inform decisions in another field, a strong command of the basics will prove to be an asset.