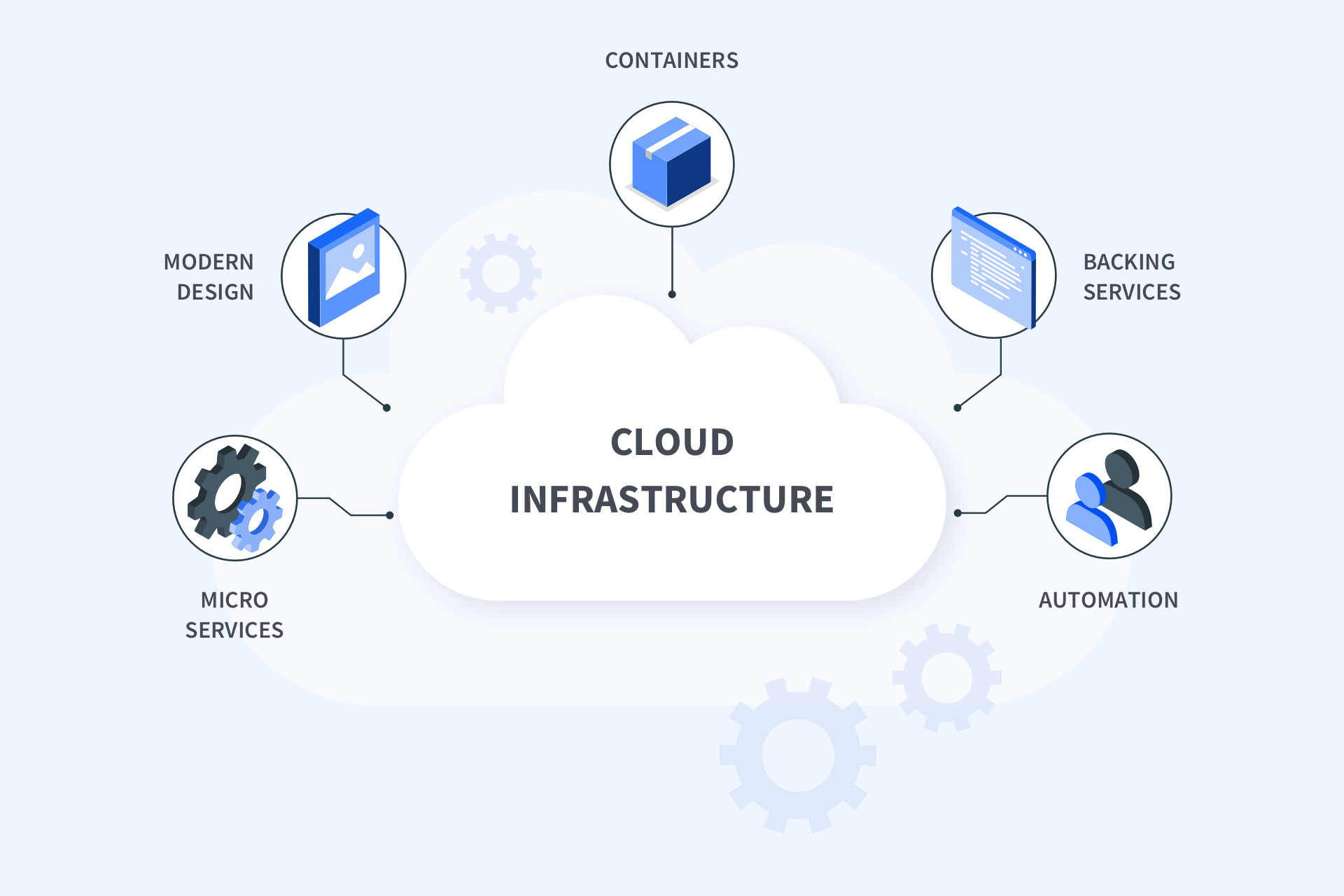

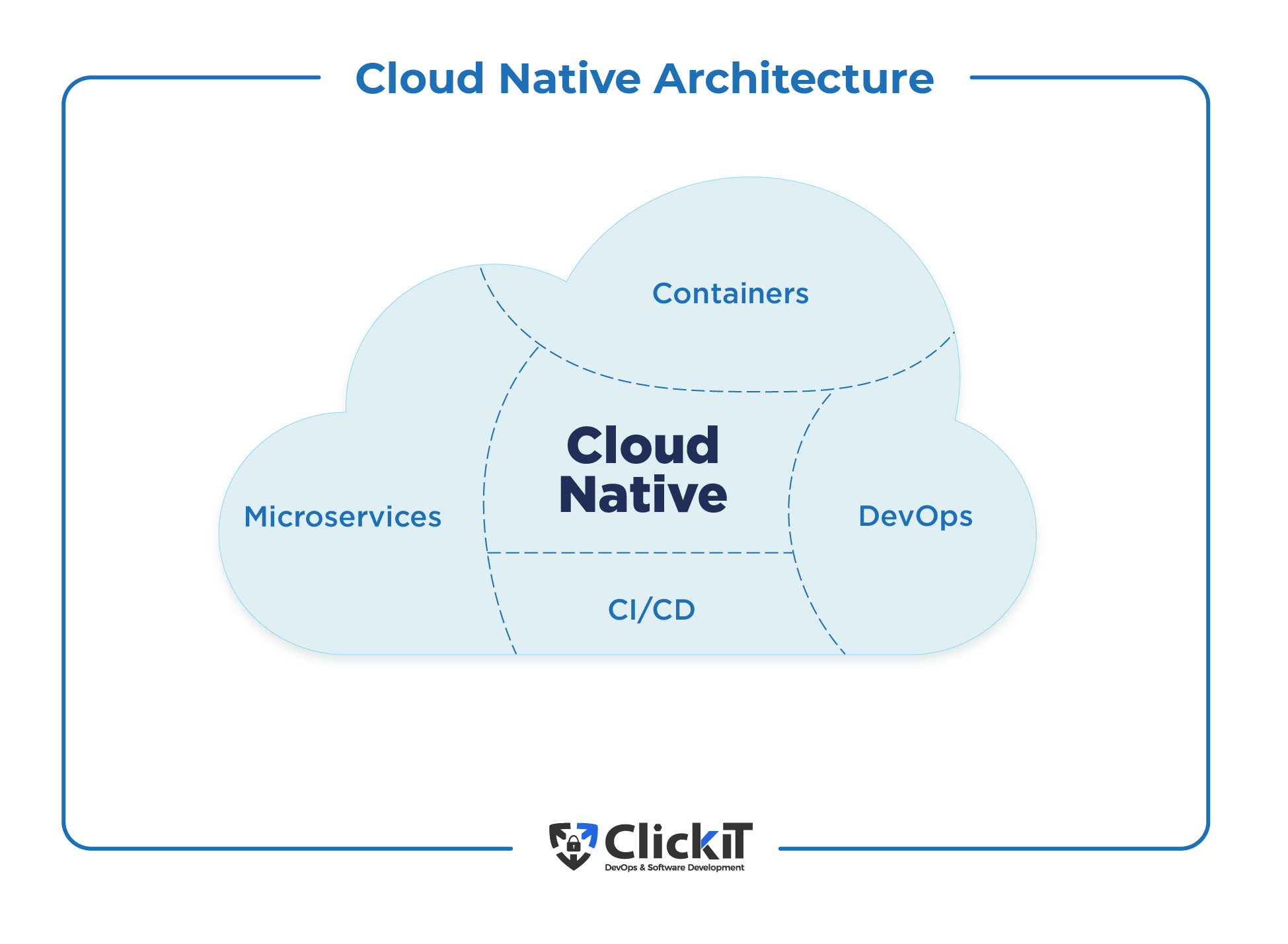

What is Cloud-Native Architecture?

Cloud-native architecture is a modern approach to building and deploying applications that leverages the benefits of cloud computing. At its core, it rests on three key components: microservices, containers, and orchestration systems. Microservices are an architectural style in which an application is built as a collection of small, independent services that communicate with each other through APIs. Because each service can be developed, deployed, and scaled on its own, this approach allows for greater flexibility and scalability than traditional monolithic architectures.

A container is a lightweight, standalone, executable software package that includes everything needed to run a piece of software: code, a runtime, system tools, and system libraries. Containers provide a consistent and reliable way to run applications across different environments, from development to production.

Orchestration systems are tools that manage and automate the deployment, scaling, and management of containerized applications. Popular orchestration systems include Kubernetes, Docker Swarm, and Apache Mesos.

The benefits of cloud-native architecture include greater scalability, resilience, and faster time-to-market. Applications can be scaled up or down quickly to meet changing demand. Because services are isolated from one another and orchestration systems can restart or replace failed instances, applications are better able to remain available and performant in the face of failures. Finally, small, independently deployable services let organizations release new features and updates more quickly, reducing time-to-market and improving competitiveness.

Incorporating cloud-native architecture into your application development and deployment strategy can provide significant benefits, but it’s important to approach the transition carefully. In the next section, we’ll discuss how to leverage cloud-native architecture for applications, including assessing application readiness, selecting the right tools and platforms, and implementing best practices for containerization and orchestration.

How to Leverage Cloud-Native Architecture for Applications

Adopting cloud-native architecture for applications requires careful planning and execution. This section walks through the process step by step: assessing application readiness, selecting tools and platforms, implementing best practices for containerization and orchestration, and monitoring and optimizing performance.

Assessing Application Readiness

The first step in adopting cloud-native architecture for applications is to assess their readiness for the transition. This involves evaluating the application’s current architecture, identifying areas that may benefit from modernization, and determining the level of effort required to make the transition. When assessing application readiness, consider factors such as the application’s complexity, the scalability of its current architecture, and the level of integration required with other systems. Additionally, consider the application’s business value and the potential impact of downtime during the transition.

Selecting the Right Tools and Platforms

Once you’ve assessed application readiness, the next step is to select the right tools and platforms for your cloud-native architecture. This includes selecting a containerization platform, such as Docker, and an orchestration system, such as Kubernetes, Docker Swarm, or Apache Mesos. When selecting tools and platforms, consider factors such as the application’s scalability requirements, the level of automation required for deployment and management, and the level of support and resources available for the chosen platform.

Implementing Best Practices for Containerization and Orchestration

Once you’ve selected the right tools and platforms, the next step is to implement best practices for containerization and orchestration. This includes creating container images that are small, efficient, and easily deployable, and implementing automation for deployment, scaling, and management of containerized applications. When implementing best practices for containerization and orchestration, consider factors such as the application’s resource requirements, the need for fault tolerance and resilience, and the potential impact of failures or downtime.

Monitoring and Optimizing Performance

The final step in leveraging cloud-native architecture for applications is to monitor and optimize their performance. This includes tracking key performance metrics, such as response time, throughput, and error rates, and identifying areas for optimization and improvement. When monitoring and optimizing performance, consider factors such as the application’s resource utilization, the impact of traffic patterns and spikes, and the potential impact of changes to the application or its infrastructure.
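As a rough illustration of the metrics named above, the following sketch computes throughput, error rate, and 95th-percentile response time from a batch of request records. The `RequestRecord` type, the `summarize` function, and the choice to count only 5xx responses as errors are assumptions made for this example; a production system would normally rely on a dedicated monitoring stack rather than hand-rolled code.

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """One served request (hypothetical shape, for illustration only)."""
    duration_ms: float   # time taken to serve the request
    status_code: int     # HTTP status returned to the client


def summarize(records: list[RequestRecord], window_seconds: float) -> dict[str, float]:
    """Compute throughput, error rate, and p95 latency for one time window."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    if not records:
        return {"throughput_rps": 0.0, "error_rate": 0.0, "p95_ms": 0.0}

    ordered = sorted(r.duration_ms for r in records)
    # Nearest-rank percentile: rank = ceil(0.95 * n), using integer arithmetic.
    rank = (95 * len(ordered) + 99) // 100
    # Assumption: only server-side failures (5xx) count as errors.
    errors = sum(1 for r in records if r.status_code >= 500)

    return {
        "throughput_rps": len(records) / window_seconds,
        "error_rate": errors / len(records),
        "p95_ms": ordered[rank - 1],
    }
```

Tracking a high percentile rather than the mean is a deliberate choice here: traffic spikes tend to show up in the slowest requests first.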

By following the step-by-step process outlined in this section, you can reduce the risk of the transition and improve the chances of a successful adoption of cloud-native architecture for your applications.

Real-World Examples of Successful Cloud-Native Architecture Implementations

Leveraging cloud-native architecture for applications has become increasingly popular in recent years, with many organizations achieving significant benefits such as scalability, resilience, and faster time-to-market. In this section, we’ll provide examples of successful cloud-native architecture implementations in various industries, highlighting the challenges they faced, the solutions they implemented, and the benefits they achieved.

Example 1: Netflix

Netflix is a well-known example of a company that has successfully implemented cloud-native architecture. The company’s microservices-based architecture is commonly reported to consist of over 700 services that are deployed and scaled independently. By combining this architecture with automated deployment and orchestration, Netflix has achieved high levels of scalability and resilience, with the ability to handle millions of concurrent streams and automatically recover from failures.

Example 2: GE Aviation

GE Aviation, a leading manufacturer of aircraft engines, has implemented a cloud-native architecture for its Predix platform, which provides industrial analytics and applications for the aviation industry. By using containerization and orchestration systems, GE Aviation has achieved faster time-to-market for its applications, with the ability to deploy changes in minutes rather than weeks.

Example 3: The Australian Government’s Digital Transformation Agency

The Australian Government’s Digital Transformation Agency has implemented a cloud-native architecture for its government services, including the myGov platform, which provides access to government services for millions of citizens. By using containerization and orchestration systems, the agency has achieved high levels of scalability and resilience, with the ability to handle spikes in traffic and automatically recover from failures.

Challenges and Solutions

While implementing cloud-native architecture can provide significant benefits, it also presents challenges. These challenges include the complexity of managing and scaling containerized applications, the need for new skills and expertise, and the potential impact on security and compliance. To address these challenges, organizations can implement best practices for containerization and orchestration, such as creating container images that are small, efficient, and easily deployable, and implementing automation for deployment, scaling, and management of containerized applications. Additionally, organizations can provide training and support for their teams to develop the necessary skills and expertise.

Benefits

Successful implementation of cloud-native architecture can provide significant benefits, including:

- Scalability: the ability to handle spikes in traffic and automatically scale resources up and down as needed.

- Resilience: the ability to automatically recover from failures and minimize downtime.

- Faster time-to-market: the ability to deploy changes quickly and efficiently, reducing the time to market for new features and applications.

- Consistency: the ability to run applications consistently across different environments, reducing the risk of compatibility issues and errors.

In conclusion, leveraging cloud-native architecture for applications can provide significant benefits, as demonstrated by successful implementations in various industries. By following best practices for containerization and orchestration, organizations can overcome the challenges of implementing cloud-native architecture and achieve the benefits of scalability, resilience, and faster time-to-market.

Microservices: The Building Blocks of Cloud-Native Architecture

Cloud-native architecture is a modern approach to building and deploying applications that emphasizes scalability, resilience, and faster time-to-market. At the heart of cloud-native architecture are microservices, which are small, independent components that perform specific functions within an application.

Benefits of Microservices

Microservices offer several benefits over traditional monolithic architectures. They allow for greater flexibility and agility in application development, as each microservice can be developed, deployed, and scaled independently. This means that changes to one microservice do not require changes to the entire application, resulting in faster development cycles and more frequent releases. Additionally, microservices can be written in different programming languages and frameworks, allowing developers to choose the best tool for each job and to adopt new technologies one service at a time rather than rewriting the whole application.

Challenges of Microservices

While microservices offer many benefits, they also present several challenges. One of the biggest challenges is managing and coordinating the interactions between multiple microservices. This requires a robust communication protocol and a way to handle failures and errors. Another challenge is ensuring consistent behavior and performance across all microservices. This requires careful design and implementation, as well as ongoing monitoring and optimization.
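One common way to handle transient failures between services is to retry a call with exponential backoff. The sketch below is a minimal version of that idea; the function name `call_with_retry`, its parameters, and the decision to retry only on `ConnectionError` are assumptions for illustration rather than a prescribed API.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation, retrying on ConnectionError with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            # The delay doubles on each attempt; random jitter keeps many
            # clients from retrying at exactly the same moment.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay))
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the behavior can be tested without real waiting. Retries are only safe for operations that can be repeated without side effects, which is one reason clear service interfaces matter.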

Best Practices for Microservices Design and Implementation

To overcome these challenges and maximize the benefits of microservices, it’s important to follow best practices for design and implementation. These include:

- Defining clear boundaries and interfaces between microservices.

- Implementing a robust communication protocol, such as REST or gRPC.

- Using a service registry and discovery mechanism to locate and communicate with other microservices.

- Implementing health checks and fault tolerance mechanisms to handle failures and errors.

- Monitoring and logging all microservice interactions to detect and diagnose issues.
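To make the service registry and discovery item more concrete, here is a minimal in-memory sketch. The `ServiceRegistry` class, its heartbeat-with-TTL design, and the example addresses in the usage note are all hypothetical; real deployments typically use a dedicated registry or the discovery features of their orchestration system.

```python
import time
from typing import Callable


class ServiceRegistry:
    """In-memory registry: instances stay listed only while they heartbeat."""

    def __init__(
        self,
        ttl_seconds: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        # service name -> {instance address -> time of last heartbeat}
        self._last_seen: dict[str, dict[str, float]] = {}

    def heartbeat(self, service: str, address: str) -> None:
        """Register an instance, or refresh it if it is already known."""
        self._last_seen.setdefault(service, {})[address] = self._clock()

    def lookup(self, service: str) -> list[str]:
        """Return addresses whose last heartbeat is within the TTL."""
        now = self._clock()
        instances = self._last_seen.get(service, {})
        return sorted(
            address
            for address, seen in instances.items()
            if now - seen <= self._ttl
        )
```

A caller would invoke `registry.heartbeat("orders", "10.0.0.1:8080")` periodically from each instance and `registry.lookup("orders")` from clients. Expiring silent instances ties discovery to the health-check item in the same list: an instance that stops reporting simply drops out of the results.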

Conclusion

Microservices are a key component of cloud-native architecture, offering greater flexibility and agility in application development. By following best practices for design and implementation, organizations can overcome the challenges of microservices and maximize their benefits, including faster development cycles, more frequent releases, and the ability to take advantage of the latest technologies and trends. Incorporating microservices into a cloud-native architecture requires careful planning and consideration, as well as a deep understanding of the challenges and best practices involved. By following these guidelines, organizations can successfully leverage microservices to build and deploy high-performing, scalable, and resilient applications.

Containerization: The Key to Scalability and Portability in Cloud-Native Architecture

One of the key components of cloud-native architecture is containerization, which enables applications to run consistently across different environments.

What is Containerization?

Containerization is the process of packaging an application and its dependencies into a container, which is a lightweight, standalone, and executable package. Containers are isolated from each other and from the host system, which enables applications to run reliably and consistently across different environments.

Benefits of Containerization

Containerization offers several benefits for cloud-native architecture, including:

- Scalability: Containers can be easily scaled up or down to meet changing application demands.

- Portability: Containers can be deployed on any platform or infrastructure that supports the container runtime.

- Consistency: Containers ensure that applications run consistently across different environments, reducing the risk of compatibility issues.

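- Efficiency: Containers share the host operating system’s kernel instead of each running a full guest operating system, so they are lightweight and typically require fewer resources than virtual machines, which can lead to better performance and lower costs.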

Popular Containerization Platforms

Two tools dominate the container ecosystem, and they play different roles:

- Docker: Docker is a popular containerization platform that enables developers to create, deploy, and manage containerized applications. Docker provides a rich set of features, including image management, network communication, and storage management.

- Kubernetes: Kubernetes is an open-source container orchestration platform rather than a containerization tool in its own right. It runs containers through a container runtime and automates their deployment, scaling, and management, providing features such as self-healing, auto-scaling, and rolling updates.

Best Practices for Containerization

To maximize the benefits of containerization, it’s important to follow best practices for design and implementation. These include:

- Defining clear boundaries and interfaces between containers.

- Implementing a robust communication protocol, such as REST or gRPC.

- Using a container registry to store and distribute images, and a service discovery mechanism so that containers can locate and communicate with one another.

- Implementing health checks and fault tolerance mechanisms to handle failures and errors.

- Monitoring and logging all container interactions to detect and diagnose issues.
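The health-check item above can be sketched with Python's standard library alone. The `/healthz` path, the `CHECKS` table, and the response shape are assumptions chosen for this example; the idea is simply that the containerized process exposes an endpoint an orchestrator or load balancer can poll.

```python
import json
from http.server import BaseHTTPRequestHandler
from typing import Callable

# Hypothetical dependency checks; a real service would probe its database,
# cache, or downstream services here.
CHECKS: dict[str, Callable[[], bool]] = {"self": lambda: True}


def health_response(path: str) -> tuple[int, bytes]:
    """Return (HTTP status, JSON body) for a request path."""
    if path != "/healthz":
        return 404, json.dumps({"error": "not found"}).encode()
    results = {name: check() for name, check in CHECKS.items()}
    # 503 tells the caller the container is up but not fit to serve traffic.
    status = 200 if all(results.values()) else 503
    body = {"healthy": status == 200, "checks": results}
    return status, json.dumps(body).encode()


class HealthHandler(BaseHTTPRequestHandler):
    """Serves the health endpoint over HTTP."""

    def do_GET(self) -> None:
        status, body = health_response(self.path)
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To serve it, one could run `ThreadingHTTPServer(("0.0.0.0", 8080), HealthHandler).serve_forever()` from `http.server`; the port is arbitrary. Keeping the decision logic in `health_response` separates it from the HTTP plumbing, so it can be tested without opening a socket.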

Conclusion

Containerization is a key component of cloud-native architecture, enabling applications to run consistently and reliably across different environments. By following best practices for containerization, organizations can maximize the benefits of this approach, including scalability, portability, and faster time-to-market. Incorporating containerization into a cloud-native architecture requires careful planning and consideration, as well as a deep understanding of the challenges and best practices involved. By following these guidelines, organizations can successfully leverage containerization to build and deploy high-performing, scalable, and resilient applications.


Orchestration Systems: Managing Complex Cloud-Native Architectures

One of the key components of cloud-native architecture is the orchestration system, which manages and scales complex containerized applications.

What are Orchestration Systems?

Orchestration systems are tools that automate the deployment, scaling, and management of containerized applications. They enable organizations to manage large numbers of containers and services, ensuring that applications run reliably and consistently across different environments.

Popular Orchestration Systems

There are several popular orchestration systems available, including:

- Kubernetes: Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Kubernetes provides features such as self-healing, auto-scaling, and rolling updates.

- Docker Swarm: Docker Swarm is a container orchestration platform that is built into the Docker engine. It enables organizations to create and manage a swarm of Docker nodes, which can be used to deploy and manage containerized applications.

- Apache Mesos: Apache Mesos is an open-source cluster manager that enables organizations to manage and scale distributed systems. Mesos provides features such as resource isolation, fault tolerance, and multi-tenancy.

Benefits of Orchestration Systems

Orchestration systems offer several benefits for cloud-native architecture, including:

- Scalability: Orchestration systems enable organizations to scale applications up or down to meet changing demands.

- Resilience: Orchestration systems ensure that applications are highly available and fault-tolerant, even in the face of failures and errors.

- Consistency: Orchestration systems ensure that applications run consistently across different environments, reducing the risk of compatibility issues.

- Efficiency: Orchestration systems enable organizations to optimize resource utilization, reducing costs and improving performance.
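To show how an orchestrator might decide to scale up or down, the sketch below applies a ratio-based rule: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. Kubernetes documents a rule of this form for its Horizontal Pod Autoscaler, but the real controller also applies tolerances and stabilization that are omitted here. The function name, parameters, and default bounds are assumptions for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to metric / target, within bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")
    # Round up so the service is never knowingly left under-provisioned.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds to cap cost and keep a minimum capacity.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas averaging 90% CPU against a 60% target give `desired_replicas(4, 90, 60) == 6`, while the same four replicas at 30% give `desired_replicas(4, 30, 60) == 2`.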

Best Practices for Orchestration Systems

To maximize the benefits of orchestration systems, it’s important to follow best practices for design and implementation. These include:

- Defining clear boundaries and interfaces between services.

- Implementing a robust communication protocol, such as REST or gRPC.

- Using a service registry and discovery mechanism to locate and communicate with other services.

- Implementing health checks and fault tolerance mechanisms to handle failures and errors.

- Monitoring and logging all service interactions to detect and diagnose issues.

Conclusion

Orchestration systems are a critical component of cloud-native architecture, enabling organizations to manage and scale complex containerized applications. By following best practices for orchestration, organizations can maximize the benefits of this approach, including scalability, resilience, and faster time-to-market. Incorporating orchestration systems into a cloud-native architecture requires careful planning and consideration, as well as a deep understanding of the challenges and best practices involved. By following these guidelines, organizations can successfully leverage orchestration systems to build and deploy high-performing, scalable, and resilient applications.

Security Considerations in Cloud-Native Architecture

As organizations adopt cloud-native architecture, it’s essential to consider the security implications of this approach. Cloud-native architecture introduces new security challenges in areas such as network security, data encryption, and access control. To address these challenges, organizations must implement security best practices in containerization and orchestration systems.

Network Security

Network security is a critical concern in cloud-native architecture. As an application is split into more microservices and containers, the number of network endpoints grows, and with it the attack surface. To mitigate these risks, organizations should implement network segmentation, which involves dividing the network into smaller, isolated segments and allowing only the traffic each segment needs. This approach reduces the attack surface and makes it easier to detect and contain security breaches.
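The idea of network segmentation can be sketched as a default-deny allowlist between named segments. The segment names, CIDR ranges, and allowed flows below are invented for illustration; in practice such rules are enforced by the network layer or the orchestrator's policy mechanism, not by application code.

```python
from ipaddress import ip_address, ip_network
from typing import Optional

# Hypothetical segments and address ranges.
SEGMENTS = {
    "frontend": ip_network("10.0.1.0/24"),
    "backend": ip_network("10.0.2.0/24"),
    "database": ip_network("10.0.3.0/24"),
}

# Only these cross-segment flows are permitted (source, destination).
ALLOWED_FLOWS = {("frontend", "backend"), ("backend", "database")}


def segment_of(address: str) -> Optional[str]:
    """Return the name of the segment containing address, if any."""
    ip = ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None


def is_allowed(source: str, destination: str) -> bool:
    """Default deny: allow traffic within a segment or on an allowlisted flow."""
    src, dst = segment_of(source), segment_of(destination)
    if src is None or dst is None:
        return False  # unknown addresses are never trusted
    return src == dst or (src, dst) in ALLOWED_FLOWS
```

With these rules, a frontend container can reach the backend but not the database directly, so a compromised frontend cannot talk to the data store without first getting through the backend.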

Data Encryption

Data encryption is another essential security consideration in cloud-native architecture. Encrypting data at rest and in transit keeps sensitive information unreadable to anyone without the keys, even if storage media or network traffic fall into the wrong hands. Organizations should use encryption algorithms that are widely accepted and proven to be secure, such as the Advanced Encryption Standard (AES) for bulk data and Rivest-Shamir-Adleman (RSA) for key exchange and digital signatures.

Access Control

Access control is a critical component of cloud-native architecture security. Organizations should implement role-based access control (RBAC), which restricts access to resources based on the user’s role within the organization. This approach ensures that users only have access to the resources they need to perform their job functions.
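A minimal sketch of role-based access control follows. The role names, permission strings, and users are hypothetical; the point is that permissions attach to roles, and users gain access only through the roles assigned to them.

```python
# Hypothetical roles and the permissions each one grants.
ROLE_PERMISSIONS = {
    "viewer": frozenset({"deployments:read"}),
    "developer": frozenset({"deployments:read", "deployments:update"}),
    "admin": frozenset({"deployments:read", "deployments:update", "secrets:read"}),
}

# Hypothetical user-to-role assignments.
USER_ROLES = {
    "alice": {"developer"},
    "bob": {"viewer"},
}


def is_authorized(user: str, permission: str) -> bool:
    """Return True if any of the user's roles grants the permission."""
    roles = USER_ROLES.get(user, set())  # unknown users have no roles
    return any(
        permission in ROLE_PERMISSIONS.get(role, frozenset())
        for role in roles
    )
```

Here `is_authorized("alice", "deployments:update")` is true, while the same check for `bob` is false. Changing what developers may do means editing one role entry rather than every user.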

Security Best Practices in Containerization and Orchestration Systems

To implement security best practices in containerization and orchestration systems, organizations should follow these guidelines:

- Use only trusted container images and avoid using images from untrusted sources.

- Implement network policies that restrict communication between containers and limit the attack surface.

- Use secrets management tools to securely store and manage sensitive information, such as API keys and passwords.

- Implement vulnerability scanning and patch management for container images and hosts.

- Monitor container and host logs to detect and respond to security incidents.
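As one small illustration of the secrets-management guideline, the sketch below reads a secret from the process environment instead of hard-coding it, and fails fast if it is missing. The names `load_secret`, `MissingSecretError`, and `PAYMENTS_API_KEY` are hypothetical, and the example assumes that a secrets management tool or the orchestrator injects the value into the container at runtime.

```python
import os
from typing import Mapping


class MissingSecretError(RuntimeError):
    """Raised when a required secret has not been provided."""


def load_secret(name: str, environ: Mapping[str, str] = os.environ) -> str:
    """Fetch a secret injected at runtime; never fall back to a default."""
    value = environ.get(name, "")
    if not value:
        # Name the missing variable, but never log secret values themselves.
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


def redact(value: str) -> str:
    """Return a placeholder that is safe to write to logs."""
    return "***" if value else "(empty)"
```

A service would call `load_secret("PAYMENTS_API_KEY")` at startup. Keeping the value out of the image and the source tree means the same image can be promoted between environments with different credentials.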

Conclusion

Security is a critical consideration in cloud-native architecture, and incorporating it requires careful planning and a solid understanding of the risks involved. By implementing security best practices in containerization and orchestration systems, organizations can mitigate those risks and build and deploy secure, scalable, and resilient applications that meet the needs of their customers and stakeholders.
