An Introduction to Kubernetes and Its Role in Container Orchestration

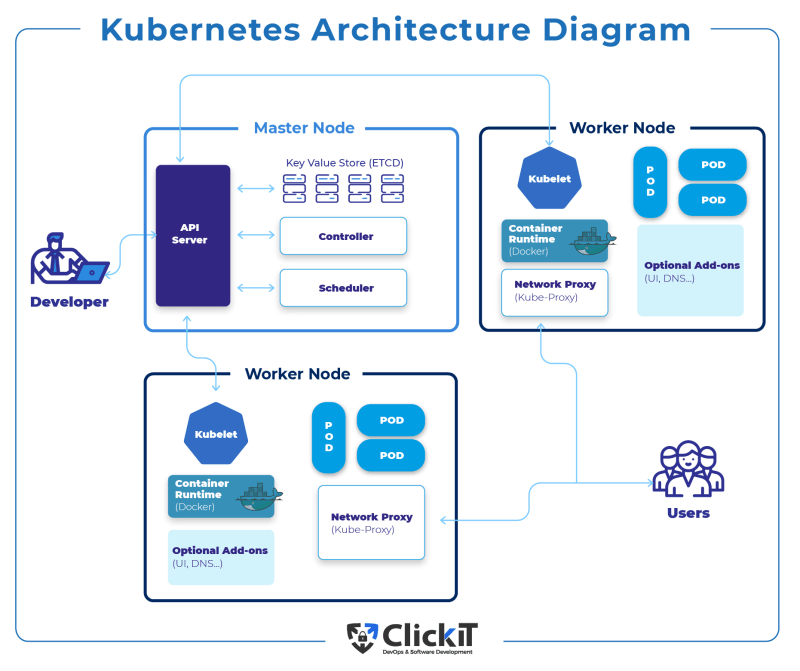

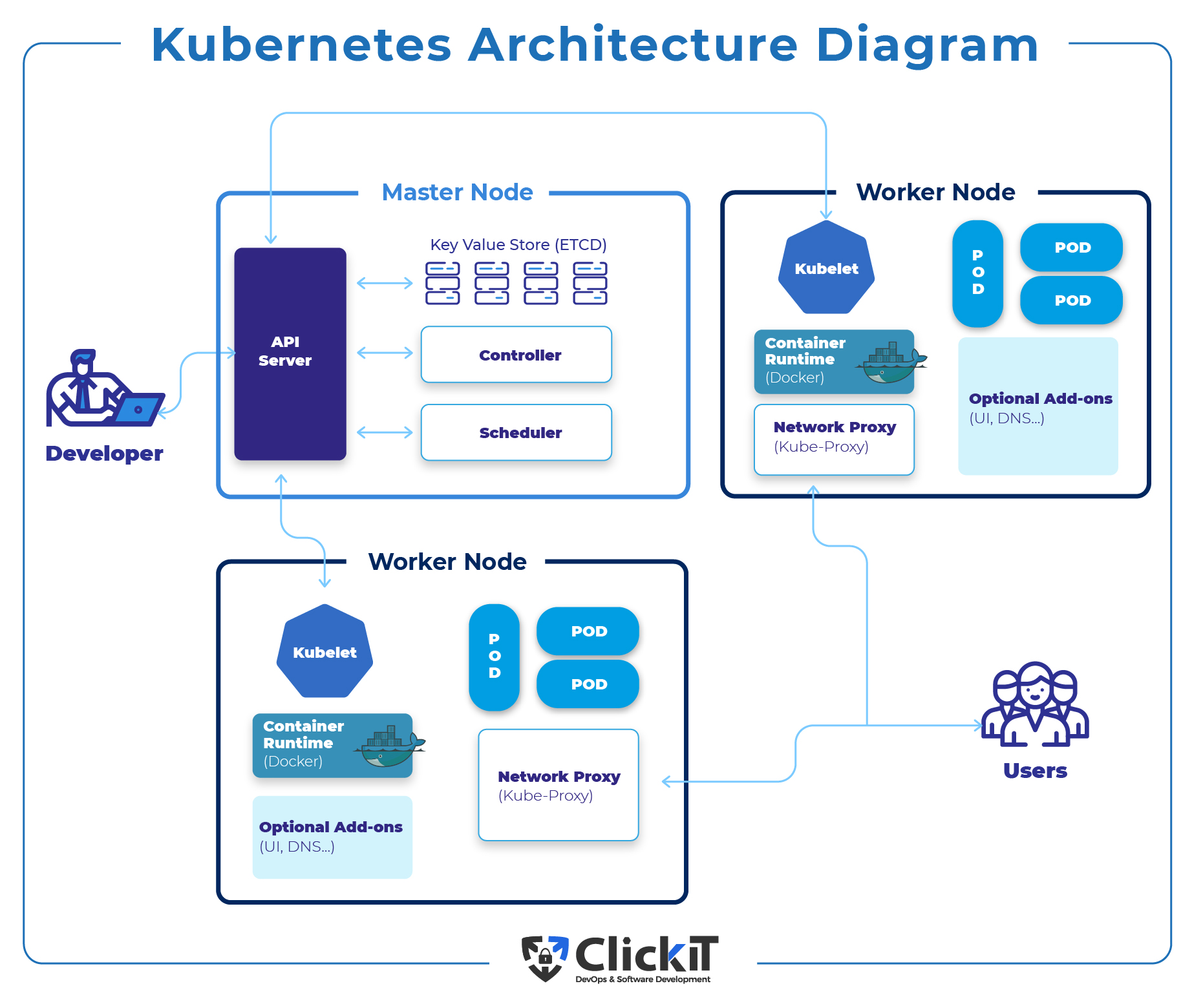

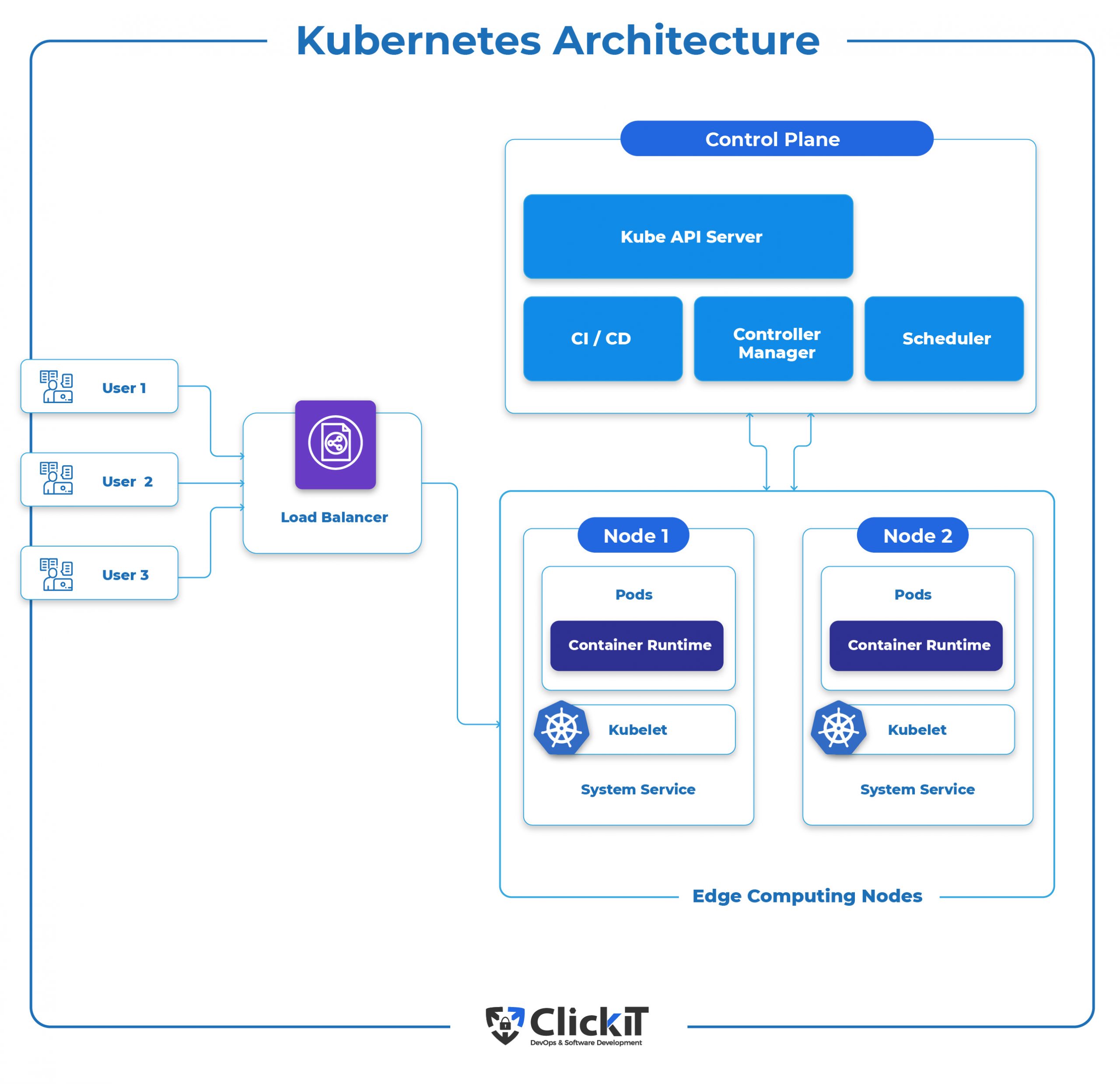

Kubernetes, also known as K8s, is an open-source platform designed to automate deploying, scaling, and managing containerized applications. It groups containers that make up an application into logical units for easy management and discovery. As a container orchestration system, Kubernetes handles the complex tasks of scheduling containers onto physical or virtual machines, networking, and maintaining the desired state of the application.

Container orchestration with Kubernetes offers several benefits, including improved scalability, resource optimization, and deployment automation. By using Kubernetes, teams can manage applications more efficiently, reduce manual intervention, and ensure high availability of their services. The platform’s flexibility and extensive ecosystem make it an ideal choice for organizations looking to modernize their application deployments and streamline their operations.

Getting Started with Kubernetes: Installation and Setup

To begin using Kubernetes, you’ll need to install and set up the platform on your local machine or a preferred cloud platform. Start by checking the prerequisites and system requirements for your chosen environment. Common requirements include a modern Linux distribution, Docker (or another container runtime), and a minimum amount of RAM and CPU resources.

For a local installation, consider using Minikube, a tool that makes it easy to run Kubernetes locally. Follow these steps to get started:

- Install VirtualBox, VMware, or another supported virtualization provider.

- Install Minikube by following the official documentation for your operating system.

- Start Minikube from the command line, e.g., `minikube start`.

- Verify the installation by checking the status of your cluster with `minikube status`.

To set up Kubernetes on a cloud platform, consider using Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), or Azure Kubernetes Service (AKS). These managed services simplify the installation and maintenance of Kubernetes clusters, allowing you to focus on deploying and managing your applications.

Regardless of your chosen environment, remember to periodically update your Kubernetes installation to take advantage of new features and security updates. Following the official documentation and best practices will ensure a smooth installation and setup process, enabling you to start using Kubernetes for container orchestration.

Navigating the Kubernetes Dashboard: A User-Friendly Interface for Cluster Management

The Kubernetes dashboard is a powerful web-based user interface for managing and monitoring Kubernetes clusters. It provides a graphical overview of your cluster resources, making it easier to navigate and interact with your applications compared to command-line tools. The dashboard offers several features, including resource visualization, logs, events, and the ability to perform various actions such as creating, updating, and deleting resources.

To access the Kubernetes dashboard, you can use the following steps:

- Ensure that the Kubernetes API server is exposed and accessible.

- Create a service account and role binding for the dashboard to access your cluster resources.

- Proxy the dashboard from the command line, e.g., `kubectl proxy`.

- Access the dashboard in your web browser, e.g., http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/.
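The proxy URL above follows a fixed pattern, so it can be assembled from its parts. The sketch below is illustrative only: the function name `dashboard_proxy_url` and its parameters are hypothetical, and the defaults simply reproduce the example URL, assuming the dashboard runs as a service named `kubernetes-dashboard` in a namespace of the same name and that `kubectl proxy` listens on its default port 8001.

```python
def dashboard_proxy_url(
    namespace="kubernetes-dashboard",
    service="kubernetes-dashboard",
    scheme="https",
    port_name="",
    proxy_host="localhost",
    proxy_port=8001,
):
    """Build the API-server proxy URL for a service (hypothetical helper).

    The path segment has the form <scheme>:<service>:<port_name>; an empty
    port name leaves the trailing colon, as in the example URL.
    """
    return (
        f"http://{proxy_host}:{proxy_port}/api/v1/namespaces/{namespace}"
        f"/services/{scheme}:{service}:{port_name}/proxy/"
    )


if __name__ == "__main__":
    print(dashboard_proxy_url())
```

If your dashboard is installed under a different namespace or service name, pass those values instead of relying on the defaults.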

Using the dashboard, you can easily manage and monitor your applications, services, and other Kubernetes resources. The dashboard’s visual representation of your cluster resources helps you quickly identify issues and perform actions to resolve them. Additionally, the dashboard offers a more user-friendly experience for those who are new to Kubernetes or who prefer a graphical interface over command-line tools.

Deploying and Managing Applications with Kubernetes

Kubernetes simplifies deploying and managing containerized applications using key concepts such as pods, services, deployments, and replica sets. These abstractions enable you to define the desired state of your applications, and Kubernetes automatically maintains that state, ensuring high availability and reliability.

Pods: The Basic Unit of Deployment

Pods are the smallest and simplest unit in the Kubernetes object model that you create or deploy. A Pod represents a running process on your cluster and can contain one or more containers. Containers in a pod share the same network namespace, enabling them to communicate using localhost.
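As a rough illustration, a single-container pod can be described as a manifest. The sketch below builds one as a Python dictionary and serializes it to JSON. The pod name `web-pod`, the label `app: web`, and the image `nginx:1.25` are placeholders chosen for this example, and `to_json` is a hypothetical helper.

```python
import json

# Placeholder names: "web-pod", the "app: web" label, and the image tag
# are assumptions made for illustration only.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-pod", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
            }
        ]
    },
}


def to_json(manifest):
    """Serialize a manifest dictionary to indented JSON text."""
    return json.dumps(manifest, indent=2)


if __name__ == "__main__":
    print(to_json(pod_manifest))
```

Saving the printed output to a file such as `pod.json` and running `kubectl apply -f pod.json` would ask the cluster to create the pod.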

Services: Providing Stable Endpoints

Services provide stable endpoints for a set of pods, allowing you to define a policy to access them. Services enable load balancing, service discovery, and communication between pods. When cluster DNS is running, as it is in most clusters, each service also gets a DNS name, making it easy to locate and connect to it.
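The following sketch shows what a service definition and its DNS name might look like. It assumes pods labeled `app: web`, a service called `web-svc`, and the common default cluster domain `cluster.local`; all three are placeholders, and both helper functions are hypothetical.

```python
import json

# Placeholder service that forwards port 80 to pods labeled app=web.
service_manifest = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web-svc"},
    "spec": {
        "selector": {"app": "web"},
        "ports": [{"protocol": "TCP", "port": 80, "targetPort": 80}],
    },
}


def selects(selector, pod_labels):
    """Return True if every selector key/value pair appears in the pod labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


def cluster_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Fully qualified DNS name of a service, assuming the given cluster domain."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    print(json.dumps(service_manifest, indent=2))
    print(cluster_dns_name("web-svc"))
```

The `selects` function mirrors how a label selector decides which pods sit behind the service: a pod matches only if it carries every label the selector lists.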

Deployments: Describing the Desired State

Deployments describe the desired state for your application, including the number of replicas, the container images, and the update strategy. Kubernetes automatically creates and updates pods to maintain the desired state, ensuring high availability and reliability.
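A minimal deployment manifest, sketched as a Python dictionary, might look like the following. The name `web`, the label `app: web`, the image, and the replica count of 3 are illustrative assumptions.

```python
import json

# Placeholder deployment: three replicas of a single-container pod.
deployment_manifest = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        # The selector must match the labels in the pod template below.
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [{"name": "web", "image": "nginx:1.25"}]
            },
        },
    },
}

if __name__ == "__main__":
    print(json.dumps(deployment_manifest, indent=2))
```

Changing `replicas` or the container `image` and re-applying the manifest is how the desired state is updated; Kubernetes then works to bring the running pods in line with it.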

Replica Sets: Ensuring Desired Pod Count

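Replica sets ensure that a specified number of pod replicas are running at any given time. A deployment creates and manages replica sets on your behalf: if a pod fails or is deleted, the replica set starts a replacement, and if there are too many pods, it removes the extras. Replica sets do not react to load on their own; changing the replica count is left to you or to an autoscaler.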
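The idea of keeping a fixed pod count can be illustrated with a toy reconciliation step. This is a simplified model, not the actual controller code: the function `reconcile` and the pod names are hypothetical, and the real controller also considers pod health and ownership.

```python
from typing import List, Tuple


def reconcile(desired: int, running: List[str]) -> Tuple[int, List[str]]:
    """Compare the desired pod count with the running pods.

    Returns (number_of_pods_to_create, names_of_pods_to_delete).
    """
    missing = desired - len(running)
    if missing > 0:
        # Too few pods: ask for replacements, delete nothing.
        return missing, []
    # Enough or too many pods: delete any beyond the desired count.
    return 0, running[desired:]


if __name__ == "__main__":
    print(reconcile(3, ["web-a"]))
    print(reconcile(2, ["web-a", "web-b", "web-c"]))
```

Running this step repeatedly against the observed state is the essence of the control loop: each pass moves the actual pod count toward the desired one.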

To create and manage these resources, you can use declarative configuration files written in YAML or JSON format. These files define the desired state of your applications, and Kubernetes ensures that the actual state matches the desired state. By following best practices and utilizing these Kubernetes concepts, you can efficiently deploy and manage applications in a containerized environment.

Scaling and Updating Applications with Kubernetes

Kubernetes offers powerful features for scaling and updating applications, ensuring high availability and seamless deployments. By leveraging strategies such as rolling updates and zero-downtime deployments, you can maintain application uptime while introducing new features and updates.

Horizontal Scaling: Adding or Removing Pods

Horizontal scaling involves adding or removing pods to handle changes in workload. Kubernetes makes it easy to scale applications based on resource utilization, user demand, or other factors. You can use the command line or the Kubernetes dashboard to adjust the number of replicas in a deployment or replica set, enabling you to scale your applications quickly and efficiently.

Rolling Updates: Seamless Deployments

Rolling updates allow you to update your applications without downtime. Kubernetes gradually replaces old pods with new ones, ensuring that a minimum number of pods are available to handle user requests at all times. This strategy minimizes the risk of application outages and enables you to deploy updates confidently.
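To make the gradual replacement concrete, here is a simplified simulation of a rolling update. It assumes new pods become ready immediately, which real pods do not, and it models only the two limits a deployment exposes as `maxSurge` and `maxUnavailable`. The function `rolling_update` is a hypothetical illustration, not Kubernetes code.

```python
from typing import List, Tuple


def rolling_update(replicas: int, max_surge: int = 1,
                   max_unavailable: int = 0) -> List[Tuple[int, int]]:
    """Simulate a rolling update and return (old_pods, new_pods) per step.

    Invariants kept at every step:
      old + new <= replicas + max_surge        (never too many pods)
      old + new >= replicas - max_unavailable  (never too few pods)
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Start as many new pods as the surge limit allows.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Remove old pods without dropping below the availability floor.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    for old, new in rolling_update(3):
        print(f"old={old} new={new}")
```

With three replicas, a surge of one, and no unavailable pods allowed, the simulation swaps one pod per step, so three pods are always present to serve requests.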

Zero-Downtime Deployments: Ensuring Continuous Availability

Zero-downtime deployments involve updating running instances without any disruption to the user experience. Kubernetes supports zero-downtime deployments by combining rolling updates with readiness and liveness probes. Readiness probes keep traffic away from a new pod until it reports that it can serve requests, while liveness probes restart containers that stop responding. Together, these features help maintain application availability throughout the deployment process.
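The fragments below sketch how probes and a rolling update strategy might appear in a deployment spec. The probe path `/`, the port, the timing values, and the image are assumptions for illustration; a real application would expose its own health endpoint.

```python
import json

# Update strategy: add one new pod at a time and never remove an old pod
# before its replacement is ready.
strategy = {
    "type": "RollingUpdate",
    "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
}

# Container with placeholder probe settings.
container = {
    "name": "web",
    "image": "nginx:1.25",
    # Readiness: the pod receives traffic only after this check succeeds.
    "readinessProbe": {
        "httpGet": {"path": "/", "port": 80},
        "initialDelaySeconds": 5,
        "periodSeconds": 10,
    },
    # Liveness: the container is restarted if this check keeps failing.
    "livenessProbe": {
        "httpGet": {"path": "/", "port": 80},
        "initialDelaySeconds": 15,
        "periodSeconds": 20,
    },
}

# Fragment that would sit under "spec" in a deployment manifest.
deployment_spec_fragment = {
    "strategy": strategy,
    "template": {"spec": {"containers": [container]}},
}

if __name__ == "__main__":
    print(json.dumps(deployment_spec_fragment, indent=2))
```

Setting `maxUnavailable` to 0 trades rollout speed for availability: the rollout pauses on any new pod whose readiness probe never succeeds.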

To implement these techniques, follow best practices such as gradual rollouts, canary deployments, and thorough testing. By mastering the art of scaling and updating applications in Kubernetes, you can ensure high availability, seamless deployments, and a positive user experience.

Monitoring and Troubleshooting Kubernetes Clusters

Monitoring and troubleshooting Kubernetes clusters are essential for maintaining application performance, availability, and security. Kubernetes provides built-in monitoring tools and integrates with third-party solutions to help you identify and resolve issues quickly and efficiently.

Built-in Monitoring Tools

Kubernetes' core monitoring components include cAdvisor, which is built into the kubelet, along with Metrics Server and kube-state-metrics, which are typically installed as cluster add-ons. These tools collect and aggregate metrics related to resource utilization, container health, and cluster state. By analyzing this data, you can gain insights into your cluster’s performance and identify potential issues before they impact your applications.

Third-Party Monitoring Solutions

Third-party monitoring solutions, such as Prometheus, Grafana, and Datadog, offer advanced monitoring and visualization capabilities for Kubernetes clusters. These tools integrate with Kubernetes to collect and analyze metrics, logs, and events, providing a comprehensive view of your cluster’s health and performance. By leveraging these solutions, you can quickly identify and resolve issues, optimize resource utilization, and ensure high availability for your applications.

Best Practices for Monitoring and Troubleshooting

To effectively monitor and troubleshoot Kubernetes clusters, follow these best practices:

- Establish monitoring baselines and alerts for critical resources and applications.

- Regularly review logs, metrics, and events to identify trends and anomalies.

- Perform proactive maintenance and updates to ensure your cluster remains secure and up-to-date.

- Leverage automation and self-healing features to minimize manual intervention and human error.

- Document your monitoring and troubleshooting processes to ensure consistency and repeatability.

By following these best practices and utilizing the monitoring tools available in Kubernetes, you can maintain a healthy and performant cluster, ensuring optimal application performance and user experience.

Networking and Security in Kubernetes

Networking and security are critical aspects of managing Kubernetes clusters and containerized applications. By understanding and implementing best practices for networking and security, you can ensure a secure and efficient environment for your applications.

Network Policies: Controlling Pod-to-Pod Communication

Network policies in Kubernetes allow you to control pod-to-pod communication based on labels and selectors. By defining network policies, you can restrict traffic between pods, ensuring that only authorized communication occurs within your cluster. This approach helps maintain a secure environment and prevents unauthorized access to your applications and data.
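As an example, the sketch below defines a policy that lets only pods labeled `app: frontend` reach pods labeled `app: api` on TCP port 8080. The labels, policy name, and port are placeholders. Note that network policies take effect only when the cluster's network plugin enforces them.

```python
import json

# Placeholder policy: only frontend pods may connect to api pods on 8080.
network_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "allow-frontend-to-api", "namespace": "default"},
    "spec": {
        # Pods this policy applies to.
        "podSelector": {"matchLabels": {"app": "api"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                # Allowed traffic sources.
                "from": [
                    {"podSelector": {"matchLabels": {"app": "frontend"}}}
                ],
                "ports": [{"protocol": "TCP", "port": 8080}],
            }
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(network_policy, indent=2))
```

Once a policy selects a pod for ingress, any inbound traffic not explicitly allowed by some policy is dropped, so other pods in the namespace can no longer reach the `api` pods.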

Ingress and Egress Rules: Managing External Access

Ingress and egress rules enable you to manage traffic entering and leaving your cluster and individual applications. Ingress resources define how external HTTP(S) traffic is routed to internal services, while egress rules, set in network policies, control outbound traffic from your pods. By configuring these rules, you can ensure secure and efficient communication between your cluster and external resources.
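A routing rule for inbound traffic might be sketched as follows. The host `example.com`, the service name `web-svc`, and the port are placeholders, and the manifest has an effect only if an ingress controller is running in the cluster.

```python
import json

# Placeholder Ingress: send all HTTP requests for example.com to web-svc.
ingress_manifest = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "web-ingress"},
    "spec": {
        "rules": [
            {
                "host": "example.com",
                "http": {
                    "paths": [
                        {
                            "path": "/",
                            "pathType": "Prefix",
                            "backend": {
                                "service": {
                                    "name": "web-svc",
                                    "port": {"number": 80},
                                }
                            },
                        }
                    ]
                },
            }
        ]
    },
}

if __name__ == "__main__":
    print(json.dumps(ingress_manifest, indent=2))
```

Additional entries under `paths` or `rules` would route other URL prefixes or hostnames to different services.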

Securing Communication Between Pods

Securing communication between pods is essential for maintaining a secure environment. Kubernetes does not encrypt pod-to-pod traffic by default, so clusters typically rely on mechanisms such as Transport Layer Security (TLS), certificate-based authentication, or network-level encryption provided by the network plugin or a service mesh. By implementing these mechanisms, you can ensure that data transmitted between pods is secure and protected from unauthorized access.

Best Practices for Networking and Security

To ensure a secure and efficient Kubernetes environment, follow these best practices:

- Implement network policies and ingress/egress rules to control pod-to-pod communication and external access.

- Use encryption and authentication mechanisms to secure communication between pods.

- Regularly review and update your security policies and configurations.

- Leverage role-based access control (RBAC) and network segmentation to limit access to sensitive resources.

- Monitor your cluster for security vulnerabilities and implement security patches and updates promptly.

By following these best practices and implementing networking and security concepts in Kubernetes, you can maintain a secure and efficient environment for your containerized applications.

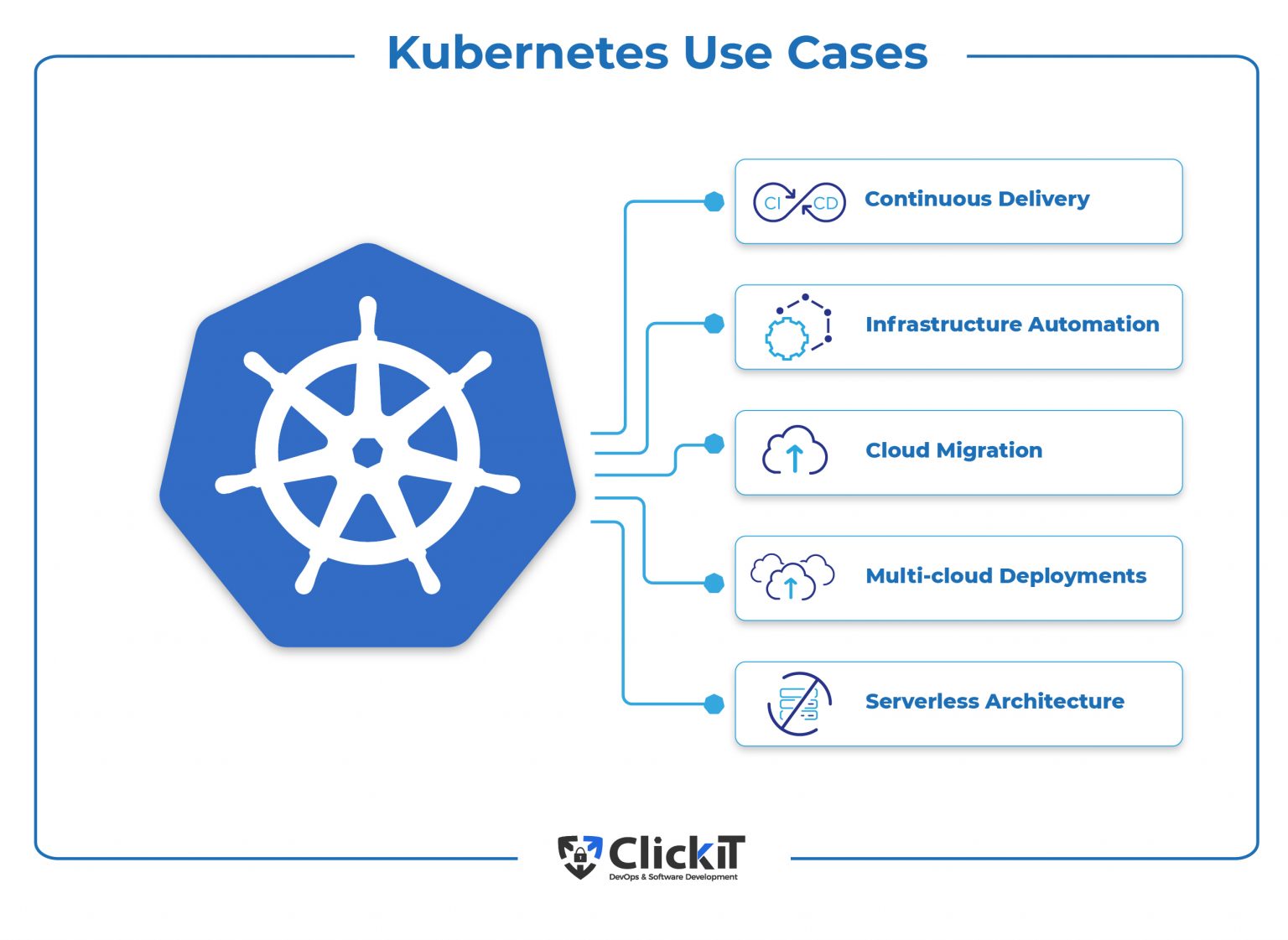

Advanced Kubernetes Features and Use Cases

Kubernetes offers several advanced features and use cases that can help you address complex container orchestration challenges. By understanding and leveraging these features, you can optimize your Kubernetes environment and improve application performance, scalability, and resilience.

Stateful Applications

Stateful applications, such as databases and message queues, require persistent storage and unique network identities. Kubernetes supports stateful applications through features like StatefulSets, which provide stable and unique network identities for each pod and support persistent storage using volumes and volume claims. By using StatefulSets, you can ensure that your stateful applications are deployed and managed consistently and reliably in a Kubernetes environment.
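The sketch below outlines a StatefulSet with one persistent volume claim per pod, plus two small helpers that show the predictable names pods and claims receive. The name `db`, the image, the mount path, and the 1Gi storage size are illustrative assumptions, and a real database would need further configuration such as credentials.

```python
import json

# Placeholder StatefulSet: three database pods, each with its own volume.
statefulset_manifest = {
    "apiVersion": "apps/v1",
    "kind": "StatefulSet",
    "metadata": {"name": "db"},
    "spec": {
        "serviceName": "db",  # governing service for stable DNS names
        "replicas": 3,
        "selector": {"matchLabels": {"app": "db"}},
        "template": {
            "metadata": {"labels": {"app": "db"}},
            "spec": {
                "containers": [
                    {
                        "name": "db",
                        "image": "postgres:16",
                        "volumeMounts": [
                            {"name": "data",
                             "mountPath": "/var/lib/postgresql/data"}
                        ],
                    }
                ]
            },
        },
        # One claim is created per pod from this template.
        "volumeClaimTemplates": [
            {
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": "1Gi"}},
                },
            }
        ],
    },
}


def pod_names(name, replicas):
    """Pods are named <statefulset>-<ordinal>, starting at 0."""
    return [f"{name}-{i}" for i in range(replicas)]


def claim_names(template, name, replicas):
    """Claims are named <template>-<pod name>."""
    return [f"{template}-{pod}" for pod in pod_names(name, replicas)]


if __name__ == "__main__":
    print(json.dumps(statefulset_manifest, indent=2))
    print(pod_names("db", 3))
    print(claim_names("data", "db", 3))
```

Because the names are stable, a pod that is rescheduled comes back with the same identity and reattaches to the same claim.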

Autoscaling

Autoscaling enables you to automatically adjust the number of replicas in a deployment based on resource utilization or other performance metrics. Kubernetes supports horizontal pod autoscaling, which scales the number of pods in a deployment up or down based on customizable thresholds and rules. By implementing autoscaling, you let your applications adapt automatically to changes in workload and resource availability.
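The scaling rule documented for the horizontal pod autoscaler is, in essence, desired = ceil(current replicas × current metric / target metric), skipped when the ratio is close to 1. The function below is a simplified sketch of that rule. The name `desired_replicas`, the bounds, and the 10% tolerance default are assumptions for illustration, and the real autoscaler also handles missing metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified horizontal autoscaling calculation.

    current_metric and target_metric use the same unit, for example
    average CPU utilization in percent.
    """
    ratio = current_metric / target_metric
    # Within the tolerance band, keep the current replica count.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Four pods at 90% average CPU with a 60% target.
    print(desired_replicas(4, 90, 60))
```

For instance, four pods averaging 90% CPU against a 60% target give a ratio of 1.5, so the calculation asks for six pods.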

Custom Resource Definitions

Custom Resource Definitions (CRDs) allow you to extend the Kubernetes API with your own resource types. By using CRDs, you can define your own resources and workflows, tailoring Kubernetes to your specific use case or application requirements. A CRD only defines and stores the new resource type; pairing it with a custom controller, and optionally with validation rules or admission webhooks, provides a powerful and flexible way to extend Kubernetes and automate complex workflows.
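As a rough sketch, the manifest below registers a hypothetical `Backup` resource type in the API group `example.com`. The group, kind, and the single `schedule` field are invented for illustration. One rule worth noting is that the CRD's name must be the plural name followed by the group.

```python
import json

GROUP = "example.com"   # hypothetical API group
PLURAL = "backups"      # hypothetical plural resource name

crd_manifest = {
    "apiVersion": "apiextensions.k8s.io/v1",
    "kind": "CustomResourceDefinition",
    # The name must have the form <plural>.<group>.
    "metadata": {"name": f"{PLURAL}.{GROUP}"},
    "spec": {
        "group": GROUP,
        "scope": "Namespaced",
        "names": {"plural": PLURAL, "singular": "backup", "kind": "Backup"},
        "versions": [
            {
                "name": "v1",
                "served": True,
                "storage": True,
                # Schema used to validate objects of the new type.
                "schema": {
                    "openAPIV3Schema": {
                        "type": "object",
                        "properties": {
                            "spec": {
                                "type": "object",
                                "properties": {
                                    "schedule": {"type": "string"}
                                },
                            }
                        },
                    }
                },
            }
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(crd_manifest, indent=2))
```

After such a definition is applied, the API server accepts `Backup` objects, but nothing acts on them until a controller that watches the new type is deployed.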

Best Practices for Advanced Kubernetes Features

To effectively leverage advanced Kubernetes features and use cases, follow these best practices:

- Understand the specific requirements and constraints of your applications and workloads.

- Choose the appropriate Kubernetes features and tools for your use case.

- Test and validate your configurations and deployments thoroughly before deploying to production.

- Monitor your applications and Kubernetes environment for performance, availability, and security issues.

- Regularly review and update your configurations and deployments to ensure they remain aligned with your business and application requirements.

By following these best practices and leveraging advanced Kubernetes features and use cases, you can optimize your Kubernetes environment and improve application performance, scalability, and resilience.