What is a TF File?

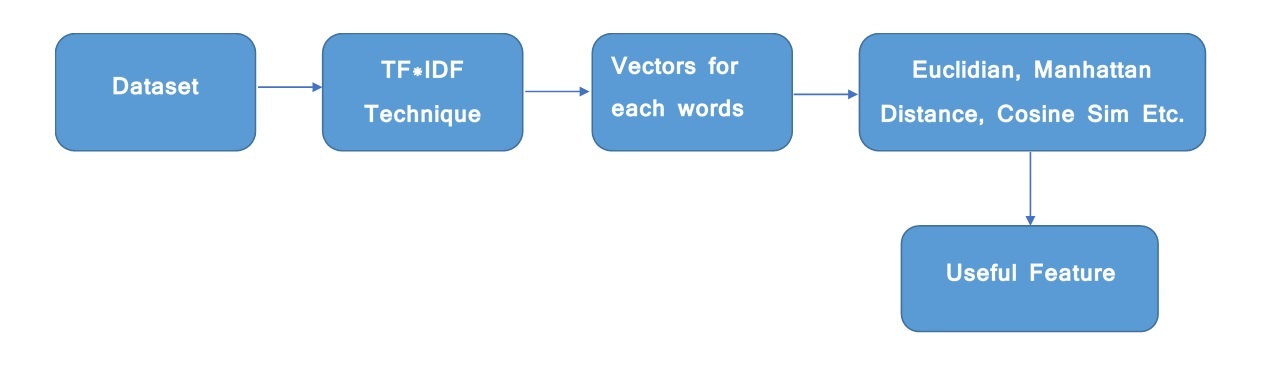

A TF file, as the term is used in this article, is a model saved in the TensorFlow SavedModel format. TensorFlow, a popular open-source platform for machine learning, uses this format to serialize trained models together with their parameters. Strictly speaking, a SavedModel is a directory rather than a single file: it holds a saved_model.pb file describing the computation graph and a variables/ subdirectory containing the trained weights. Because the format is self-contained, models saved this way are easy to share and reuse, which makes it a useful tool for developers and researchers in artificial intelligence (AI).

The Role of TF Files in Machine Learning

TF files play a vital role in machine learning projects by storing trained models and their parameters. These files are essentially serialized representations of machine learning models that have undergone training, allowing them to make predictions based on new data. By saving models as TF files, developers and researchers can easily share and reuse them in various applications, fostering collaboration and accelerating the development of AI solutions.

In a typical machine learning workflow, a model is trained using a large dataset, and its performance is evaluated using various metrics. Once a satisfactory level of performance is achieved, the trained model is saved as a TF file. This file can then be imported into other projects or shared with other developers, enabling them to leverage the knowledge and expertise encapsulated in the model without having to repeat the time-consuming training process.

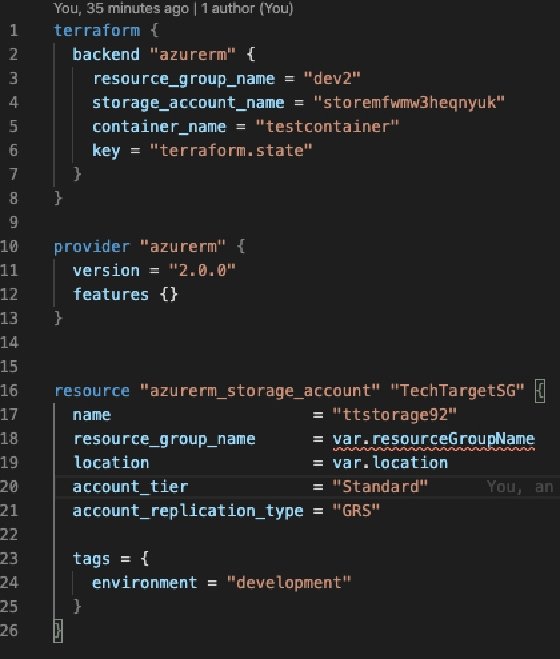

How to Create a TF File

Creating a TF file involves saving a trained TensorFlow model and its parameters in the TensorFlow SavedModel format. Here’s a step-by-step guide to help you create a TF file:

- Start by importing the necessary TensorFlow libraries:

      import tensorflow as tf
      import your_model

- Load your trained model:

      model = your_model.load_model('path/to/your/trained/model')

- Save the model as a TF file using the tf.saved_model.save() function:

      tf.saved_model.save(model, 'path/to/save/tf_file')

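In this example, your_model is a placeholder for the module that defines and loads your trained model; replace it and the paths with your own. After executing these steps, the model is saved at the specified location as a SavedModel directory containing a saved_model.pb file and a variables/ subdirectory.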
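To confirm that a save step produced what you expect, you can check the output directory for the usual SavedModel layout. The following is a minimal sketch using only the standard library; the helper name looks_like_saved_model is hypothetical, and the check covers only the two entries a SavedModel normally contains (saved_model.pb and variables/), not the validity of their contents.

```python
from pathlib import Path
import tempfile


def looks_like_saved_model(export_dir):
    """Return True if export_dir has the basic SavedModel layout.

    Hypothetical helper: it only checks that the expected entries exist,
    not that TensorFlow can actually load them.
    """
    root = Path(export_dir)
    has_graph = (root / "saved_model.pb").is_file()
    has_variables = (root / "variables").is_dir()
    return has_graph and has_variables


# Demo on a stand-in directory (no TensorFlow needed for the check itself).
with tempfile.TemporaryDirectory() as tmp:
    fake_model = Path(tmp) / "my_model"
    (fake_model / "variables").mkdir(parents=True)
    (fake_model / "saved_model.pb").touch()
    demo_ok = looks_like_saved_model(fake_model)
    demo_empty = looks_like_saved_model(tmp)
```

A check like this can run in a build or deployment script before a model directory is shared, so that an incomplete export is caught early.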

Using Pretrained TF Files in Your Projects

Using pretrained TF files in your machine learning projects can save time and resources, as they allow you to leverage the knowledge and expertise encapsulated in existing models. Pretrained models can be fine-tuned for specific tasks, making them an excellent starting point for your projects.

Downloading Pretrained TF Files

You can download pretrained TF files from various sources, such as TensorFlow’s official model repository, the TensorFlow Models repository on GitHub (tensorflow/models), or other trusted repositories. Choose models that match your project’s goals and tasks, and check each model’s license and documented input format before relying on it.

Importing Pretrained TF Files

To import a pretrained TF file into your TensorFlow environment, use the tf.saved_model.load() function:

    import tensorflow as tf

    model = tf.saved_model.load('path/to/pretrained/tf_file')

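Once the model is loaded, you can use it to make predictions or fine-tune it for your specific use case. Note that tf.saved_model.load() returns a generic restored object rather than a Keras model, so methods such as predict() and fit() are not available. Inference is typically run by calling one of the functions in model.signatures, commonly model.signatures['serving_default'].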
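The save and load steps can be tried end to end on a toy model. This is a minimal sketch, assuming TensorFlow 2.x is installed; the Scale class, its factor variable, and the input and output names x and y are hypothetical stand-ins for a real trained model.

```python
import tempfile

import tensorflow as tf


class Scale(tf.Module):
    """Hypothetical toy model: multiplies its input by a stored factor."""

    def __init__(self):
        super().__init__()
        self.factor = tf.Variable(2.0)

    @tf.function(input_signature=[tf.TensorSpec(shape=[None], dtype=tf.float32)])
    def __call__(self, x):
        # Returning a dict gives the exported output a stable name ("y").
        return {"y": self.factor * x}


# Save the model, registering __call__ as the default serving signature.
export_dir = tempfile.mkdtemp()
model = Scale()
tf.saved_model.save(
    model, export_dir, signatures=model.__call__.get_concrete_function()
)

# Load it back and run inference through the named signature.
loaded = tf.saved_model.load(export_dir)
infer = loaded.signatures["serving_default"]
outputs = infer(x=tf.constant([1.0, 2.0, 3.0]))
prediction = outputs["y"].numpy().tolist()
```

Signature functions take keyword arguments named after the original inputs and return a dictionary of output tensors, which is why the result is read from outputs["y"].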

Converting Other Model Formats to TF Files

Converting models from other formats, such as Keras or ONNX, to TF files can be helpful when you want to leverage pretrained models from different frameworks or share your models with a broader audience. TensorFlow provides tools and utilities to facilitate the conversion process.

Converting Keras Models to TF Files

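To convert a Keras model to a TF file, you can use TensorFlow’s tf.keras.models.save_model() function. The save_format='tf' argument shown here applies to TensorFlow 2.x releases that bundle Keras 2; with Keras 3 (the default from TensorFlow 2.16 onward), a SavedModel is exported with keras_model.export('path/to/tf_file') instead.

    import tensorflow as tf
    from tensorflow.keras.models import load_model

    # Load your Keras model
    keras_model = load_model('path/to/keras/model.h5')

    # Save the model as a TF file
    tf.keras.models.save_model(keras_model, 'path/to/tf_file', save_format='tf')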

Converting ONNX Models to TF Files

To convert an ONNX model to a TF file, you can use the onnx and onnx-tf packages. (The tf2onnx package converts in the opposite direction, from TensorFlow to ONNX, and onnxruntime only runs ONNX models.)

    import onnx
    from onnx_tf.backend import prepare

    # Load the ONNX model
    onnx_model = onnx.load('path/to/onnx/model.onnx')

    # Convert the ONNX model to a TensorFlow representation
    tf_rep = prepare(onnx_model)

    # Save the TensorFlow model as a TF file
    tf_rep.export_graph('path/to/tf_file')

These examples demonstrate how to convert Keras and ONNX models to TF files, enabling you to utilize a wider range of pretrained models and share your models with a broader audience.

Best Practices for Managing TF Files

Effective management and organization of TF files in your machine learning projects can help ensure smooth collaboration, efficient version control, and secure data handling. Here are some best practices to follow:

Version Control

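Use version control systems, such as Git, to track changes to your TF files and collaborate with your team. This allows you to revert to previous versions, compare changes, and maintain a history of your models’ development. Because saved models consist largely of big binary files that Git neither diffs nor stores efficiently, consider an extension such as Git LFS for the model artifacts themselves.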

Backup Strategies

Implement regular backups of your TF files and related project assets. This can help protect your work from data loss due to hardware failures, accidental deletions, or other unforeseen circumstances. Consider using cloud storage services, such as Google Drive or AWS S3, for added redundancy and accessibility.
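A backup step can be as simple as archiving the model directory under a timestamped name before copying it to remote storage. The sketch below uses only the standard library; backup_model and the directory names are hypothetical, and uploading the archive to a cloud service is left out.

```python
import shutil
import tarfile
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def backup_model(model_dir, backup_root):
    """Archive model_dir as <name>-<UTC timestamp>.tar.gz under backup_root."""
    model_dir = Path(model_dir)
    backup_root = Path(backup_root)
    backup_root.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    base_name = backup_root / f"{model_dir.name}-{stamp}"
    archive = shutil.make_archive(
        str(base_name),
        "gztar",
        root_dir=str(model_dir.parent),
        base_dir=model_dir.name,
    )
    return Path(archive)


# Demo on a stand-in model directory.
with tempfile.TemporaryDirectory() as tmp:
    model_dir = Path(tmp) / "my_model"
    (model_dir / "variables").mkdir(parents=True)
    (model_dir / "saved_model.pb").write_bytes(b"placeholder graph")
    archive_path = backup_model(model_dir, Path(tmp) / "backups")
    archive_exists = archive_path.is_file()
    with tarfile.open(archive_path) as tar:
        archive_members = tar.getnames()
```

Including the timestamp in the file name keeps older backups from being overwritten, at the cost of needing a retention policy to remove stale archives.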

Collaboration Techniques

When working with a team, establish clear guidelines for managing and sharing TF files. This can include using centralized repositories, setting up access controls, and defining naming conventions to avoid conflicts and confusion.

Organization

Organize your TF files and related assets in a logical and consistent manner. This can help you quickly locate and manage your models, as well as facilitate collaboration and version control. Consider creating separate directories for different stages of the model development process, such as training, validation, and deployment.
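One possible layout keeps each model under a stage directory and gives every export its own numbered subdirectory, which is also the layout TensorFlow Serving expects for model versions. The following sketch picks the next free version number; next_version_dir and the example path deployment/classifier are hypothetical.

```python
from pathlib import Path
import tempfile


def next_version_dir(model_root):
    """Return model_root/<n + 1>, where n is the highest numeric subdirectory."""
    root = Path(model_root)
    root.mkdir(parents=True, exist_ok=True)
    versions = [
        int(p.name) for p in root.iterdir() if p.is_dir() and p.name.isdecimal()
    ]
    return root / str(max(versions, default=0) + 1)


# Demo: two consecutive exports land in .../classifier/1 and .../classifier/2.
with tempfile.TemporaryDirectory() as tmp:
    model_root = Path(tmp) / "deployment" / "classifier"
    first = next_version_dir(model_root)
    first.mkdir()
    second = next_version_dir(model_root)
    first_name, second_name = first.name, second.name
```

The returned path can be passed directly as the export directory when saving, so each export is kept alongside, rather than on top of, the previous one.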

TF File Security and Privacy Considerations

When working with TF files, it’s essential to consider security and privacy concerns to protect sensitive data and models. Here are some recommendations to help you maintain the confidentiality and integrity of your TF files:

Access Control

Implement access controls to restrict who can view, modify, or share your TF files. This can include using file permissions, user authentication, and network security measures to ensure that only authorized individuals can access your models and data.

Data Encryption

Encrypt your TF files and related data when storing or sharing them, especially when transmitting them over unsecured networks. This can help protect your data from unauthorized access, tampering, or interception.
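Encryption protects confidentiality; to detect tampering as well, one complementary option is to record a cryptographic digest of the model directory and compare it after transfer. This is a minimal sketch with the standard library's hashlib; directory_digest is a hypothetical helper, and the digest is only useful if it is communicated over a channel the attacker cannot modify.

```python
import hashlib
import tempfile
from pathlib import Path


def directory_digest(root):
    """Return a SHA-256 hex digest over every file path and content under root."""
    root = Path(root)
    digest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        # Include the relative path so renamed or moved files change the digest.
        digest.update(path.relative_to(root).as_posix().encode("utf-8"))
        digest.update(hashlib.sha256(path.read_bytes()).digest())
    return digest.hexdigest()


# Demo: the digest is stable until a file changes.
with tempfile.TemporaryDirectory() as tmp:
    model_dir = Path(tmp) / "my_model"
    (model_dir / "variables").mkdir(parents=True)
    (model_dir / "saved_model.pb").write_bytes(b"graph")
    (model_dir / "variables" / "variables.index").write_bytes(b"weights")
    digest_before = directory_digest(model_dir)
    digest_repeat = directory_digest(model_dir)
    (model_dir / "saved_model.pb").write_bytes(b"tampered")
    digest_after = directory_digest(model_dir)
```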

Differential Privacy

When working with sensitive data, consider using differential privacy techniques, which add carefully calibrated noise (to the data, to query results, or to gradients during training) so that attackers cannot reliably infer information about individual data points. TensorFlow’s core API does not include a differential privacy module; the separate TensorFlow Privacy library (tensorflow_privacy) provides differentially private optimizers for this purpose.
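In model training, a common way to apply differential privacy is to clip each example's gradient and add Gaussian noise before averaging, the idea behind DP-SGD. The sketch below illustrates only that core step in plain Python; the function names and parameters are hypothetical, it is not the implementation used by any particular library, and it performs no privacy accounting.

```python
import math
import random


def clip_by_l2_norm(grad, clip_norm):
    """Scale grad down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Clip each gradient, sum, add Gaussian noise, then average."""
    clipped = [clip_by_l2_norm(g, clip_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    stddev = noise_multiplier * clip_norm
    noisy = [s + rng.gauss(0.0, stddev) for s in summed]
    return [v / len(clipped) for v in noisy]


grads = [[3.0, 4.0], [0.3, 0.4]]  # toy per-example gradients
no_noise = private_mean_gradient(
    grads, clip_norm=1.0, noise_multiplier=0.0, rng=random.Random(0)
)
with_noise = private_mean_gradient(
    grads, clip_norm=1.0, noise_multiplier=1.1, rng=random.Random(0)
)
```

Clipping bounds how much any single example can influence the update, and the noise scale is tied to that bound; choosing the noise multiplier for a target privacy budget requires a separate accounting step.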

Secure Collaboration

When collaborating with others on TF files, use secure channels, such as encrypted email or SFTP (SSH File Transfer Protocol), to protect your data during transmission. Additionally, consider using hosted version control platforms with built-in access controls and encryption, such as GitHub Enterprise or GitLab, to ensure secure collaboration.

Exploring Advanced Features of TF Files

Beyond simply storing a trained model and its parameters, you can apply several techniques to a model before or during export to improve inference performance and reduce the size of the resulting TF file. Here, we will discuss three such techniques: model pruning, quantization, and optimization.

Model Pruning

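Model pruning, also known as model sparsification, involves removing unnecessary or redundant parts of a model, such as weights or neurons, to reduce its size and, on runtimes that exploit sparsity, improve inference speed. The TensorFlow Model Optimization Toolkit (tensorflow_model_optimization, commonly imported as tfmot) provides tfmot.sparsity.keras.prune_low_magnitude() for magnitude-based pruning of Keras models, and tf.keras.models.clone_model() can be used to apply the pruning wrapper to selected layers only.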
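The idea behind magnitude-based pruning can be shown on a plain list of weights: the smallest weights by absolute value are set to zero until a target sparsity is reached. This is an illustrative sketch, not a library's implementation; prune_by_magnitude and the sample weights are hypothetical.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = prune_by_magnitude(weights, sparsity=0.5)
# pruned == [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

In practice pruning is usually applied gradually during training so the remaining weights can compensate, and the zeros only save space once the model is compressed or stored in a sparse format.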

Quantization

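Quantization is a process that reduces the precision of a model’s weights and activations, typically from 32-bit floating-point to 16-bit floating-point or 8-bit integers. This can significantly reduce the model’s size and improve inference speed, usually with a small impact on accuracy. In TensorFlow, post-training quantization is available through the TensorFlow Lite converter (tf.lite.TFLiteConverter), and quantization-aware training through the Model Optimization Toolkit (tfmot.quantization.keras). The tf.keras.mixed_precision module is a related but separate feature: it speeds up training by computing in 16-bit floating-point and does not quantize the saved model.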
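To make the size and accuracy trade-off concrete, the following sketch maps floating-point values to 8-bit integers with a scale and zero point, then maps them back. It is a simplified illustration of affine quantization in plain Python; the function names and sample values are hypothetical, and real converters choose ranges per tensor or per channel from calibration data.

```python
def quantize_int8(values):
    """Map floats to integers in [-128, 127] using a scale and zero point."""
    # Extend the range to include 0.0 so that zero is represented exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0
    if scale == 0.0:
        scale = 1.0  # all values are zero; any scale works
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate floats from the quantized integers."""
    return [(q - zero_point) * scale for q in quantized]


values = [-1.0, -0.2, 0.0, 0.5, 1.5]
quantized, scale, zero_point = quantize_int8(values)
restored = dequantize(quantized, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(values, restored))
```

Each value now needs one byte instead of four, and the reconstruction error stays within about one quantization step (the scale).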

Optimization

Optimization techniques, such as weight quantization and weight clustering (a form of weight sharing in which groups of weights are replaced by a shared value), can further reduce the size of a TF file while largely preserving model accuracy. TensorFlow’s tf.lite module and the TensorFlow Model Optimization Toolkit (for example, tfmot.clustering.keras.cluster_weights()) provide tools to help you optimize your models for deployment on edge devices or other resource-constrained environments.
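Weight clustering can be illustrated with a small one-dimensional k-means: weights are grouped around a few centroids and each weight is replaced by its centroid, so only the centroids and a small index per weight need to be stored. The sketch below is a plain-Python illustration under that assumption; cluster_weights, the linear centroid initialization, and the sample weights are hypothetical choices, not a library's implementation.

```python
def cluster_weights(weights, n_clusters, n_iters=10):
    """Replace each weight with the centroid of its cluster (1-D k-means)."""
    lo, hi = min(weights), max(weights)
    if n_clusters == 1:
        centroids = [(lo + hi) / 2.0]
    else:
        # Spread the initial centroids evenly across the weight range.
        step = (hi - lo) / (n_clusters - 1)
        centroids = [lo + step * i for i in range(n_clusters)]

    def assign(w):
        # Index of the centroid closest to weight w.
        return min(range(n_clusters), key=lambda c: abs(w - centroids[c]))

    for _ in range(n_iters):
        assignments = [assign(w) for w in weights]
        for c in range(n_clusters):
            members = [w for w, a in zip(weights, assignments) if a == c]
            if members:
                centroids[c] = sum(members) / len(members)

    assignments = [assign(w) for w in weights]
    return [centroids[a] for a in assignments], centroids


weights = [0.11, 0.09, 0.52, 0.48, -0.31, -0.29, 0.10, 0.50]
clustered, centroids = cluster_weights(weights, n_clusters=3)
unique_values = sorted(set(clustered))
```

With three clusters, the eight weights collapse to at most three distinct values, which compresses well even before any quantization is applied.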

By applying these techniques before exporting your models, you can improve inference performance and reduce file size, which matters most when deploying to devices with limited memory or compute.