What is Kubernetes and Why Deploy to It?

Kubernetes, also known as K8s, is an open-source platform that automates deploying, scaling, and managing containerized applications. As a container orchestrator, it is well suited to microservices architectures: it schedules containers across a cluster of machines, restarts them when they fail, and scales them up or down with demand. Modern applications increasingly rely on containers to run consistently across environments, and Kubernetes provides the same deployment model whether the underlying infrastructure is on-premises or in the cloud. Its feature set and large community make it a common choice for managing containerized workloads and services.

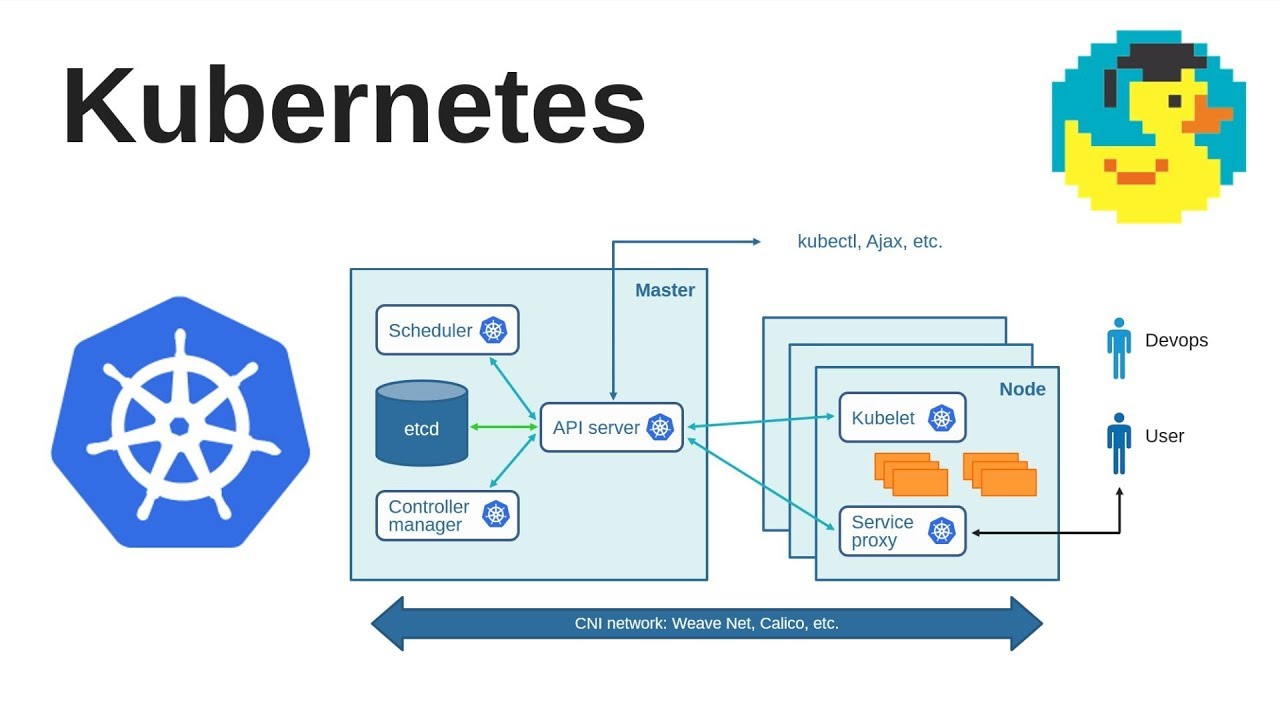

Understanding the Basics of Kubernetes Architecture

Kubernetes architecture consists of several fundamental components that work together to manage containerized applications and services. The ones you will work with most often are nodes, pods, services, and deployments. A node is a worker machine in Kubernetes, which can be a physical or virtual machine. Each node runs the services needed to host pods (the kubelet, a container runtime, and usually kube-proxy) and is managed by the control plane, which older documentation calls the master components.

Pods are the smallest and simplest units in the Kubernetes object model that you create or deploy. A pod represents a running process on your cluster and can contain one or more containers. Pods are ephemeral and are not designed to be durable.
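
To make the "one or more containers" point concrete, here is a minimal sketch of a pod with two containers that share a scratch volume. The pod name, the busybox sidecar, and the log path are illustrative assumptions, and the example assumes the application writes its log to a file rather than to standard output.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-node-app-pod          # hypothetical name
  labels:
    app: my-node-app
spec:
  containers:
    - name: my-node-app          # main application container
      image: my-node-app:1.0.0
      ports:
        - containerPort: 8080
      volumeMounts:
        - name: app-logs
          mountPath: /var/log/app   # assumed log directory
    - name: log-tailer           # hypothetical sidecar that streams the log file
      image: busybox:1.36
      command: ["sh", "-c", "tail -n+1 -F /var/log/app/app.log"]
      volumeMounts:
        - name: app-logs
          mountPath: /var/log/app
  volumes:
    - name: app-logs
      emptyDir: {}               # deleted together with the pod
```

Because the volume is an emptyDir, its contents disappear when the pod does, which is one practical consequence of pods being ephemeral. In practice you would rarely create a bare pod like this; a controller such as a Deployment normally creates pods for you.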

Services in Kubernetes are an abstract way to expose an application running on a set of pods as a network service. With Kubernetes, you don’t need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives every pod its own IP address and gives each service a single, stable DNS name for its set of pods, load-balancing traffic across them.

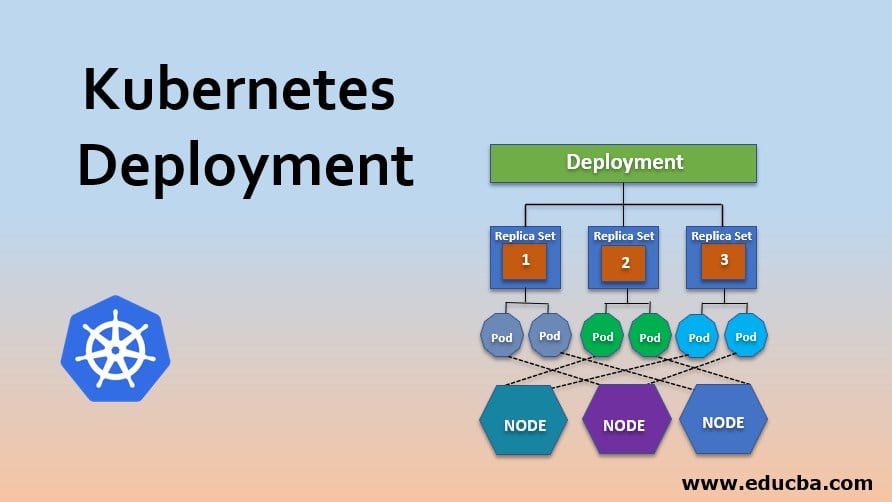

Deployments are a Kubernetes resource that manages a set of replica Pods. They are the primary way to describe stateless applications in Kubernetes. With deployments, you can declare the desired state for your pods, and Kubernetes automatically maintains that state.

By understanding these core Kubernetes components, you can design and deploy applications that take full advantage of the platform’s capabilities, ensuring a robust and efficient application deployment.

Preparing Your Application for Kubernetes Deployment

Before deploying an application to Kubernetes, specific steps are necessary to ensure a smooth and efficient process. These steps include containerization, image creation, and configuration management.

Containerization is the process of packaging an application and its dependencies into a container, which allows the application to run reliably across different computing environments. Docker is the most widely used containerization tool; rkt, an earlier alternative, has been discontinued.

Image creation involves building a container image, which includes the application code, runtime, libraries, and any other necessary dependencies. Container images are typically stored in a container registry, such as Docker Hub or Google Artifact Registry (the successor to Google Container Registry).

Configuration management is the process of organizing and storing configuration files for your application. Configuration files define the application’s behavior and settings. Kubernetes supports various configuration management strategies, including environment variables, config maps, and secrets.
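
As a rough illustration of these strategies, the sketch below defines a ConfigMap and a Secret and injects both into a container as environment variables. All names, keys, and values (LOG_LEVEL, DATABASE_PASSWORD, and so on) are hypothetical placeholders rather than settings the application is known to use.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-node-app-config       # hypothetical name
data:
  LOG_LEVEL: "info"              # non-sensitive settings go in a ConfigMap
---
apiVersion: v1
kind: Secret
metadata:
  name: my-node-app-secret       # hypothetical name
type: Opaque
stringData:
  DATABASE_PASSWORD: "change-me" # placeholder; never commit real credentials
---
apiVersion: v1
kind: Pod
metadata:
  name: my-node-app-config-demo  # hypothetical name
spec:
  containers:
    - name: my-node-app
      image: my-node-app:1.0.0
      envFrom:
        - configMapRef:
            name: my-node-app-config   # every key becomes an environment variable
      env:
        - name: DATABASE_PASSWORD
          valueFrom:
            secretKeyRef:
              name: my-node-app-secret
              key: DATABASE_PASSWORD
```

Keeping configuration in these objects means the same container image can be promoted between environments unchanged, with only the ConfigMap and Secret differing.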

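To streamline the build and deployment process, consider tools such as Docker Compose, Kaniko, or Tekton. Docker Compose runs multi-container applications locally during development, Kaniko builds container images inside a container or Kubernetes cluster without requiring a Docker daemon, and Tekton defines CI/CD pipelines as Kubernetes resources. Automating these steps reduces the risk of errors and inconsistencies.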

Best practices for preparing an application for Kubernetes deployment include:

- Minimizing the number of containers per application.

- Reducing the size of container images.

- Using multi-stage builds to separate build and runtime environments.

- Leveraging Kubernetes-native configuration management strategies.

- Implementing automated testing and validation processes.

By following these best practices, you can ensure a smooth and efficient deployment process when moving your application to Kubernetes.

How to Deploy an Application to Kubernetes: A Step-by-Step Guide

Deploying an application to Kubernetes involves several steps, from creating a deployment manifest to configuring services and exposing the application. Here’s a step-by-step guide to help you through the process.

Step 1: Create a Deployment Manifest

A deployment manifest is a YAML or JSON file that describes the desired state of your application in Kubernetes. It includes details such as the container image, resource requirements, and environment variables.

Here’s an example of a simple deployment manifest for a Node.js application:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-node-app
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: my-node-app
      template:
        metadata:
          labels:
            app: my-node-app
        spec:
          containers:
            - name: my-node-app
              image: my-node-app:1.0.0
              ports:
                - containerPort: 8080

Step 2: Apply the Deployment Manifest

Once you’ve created the deployment manifest, you can apply it to your Kubernetes cluster using the kubectl apply command:

    kubectl apply -f deployment.yaml

Step 3: Expose the Application

To make the application accessible from outside the Kubernetes cluster, you need to expose it as a service. Here’s an example of a service manifest for the Node.js application:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-node-app
    spec:
      selector:
        app: my-node-app
      ports:
        - protocol: TCP
          port: 80
          targetPort: 8080
      type: LoadBalancer

Step 4: Apply the Service Manifest

Similar to the deployment manifest, you can apply the service manifest using the kubectl apply command:

    kubectl apply -f service.yaml

Step 5: Verify the Deployment

To verify that the application is running and accessible, you can use the kubectl get command to view the status of the deployment and service:

    kubectl get deployments
    kubectl get services

By following these steps, you can deploy your application to Kubernetes and make it accessible to users.

Monitoring and Managing Your Deployed Applications

Once you’ve successfully deployed your application to Kubernetes, it’s crucial to monitor and manage its performance to ensure optimal user experience. Kubernetes provides built-in tools and allows integration with third-party solutions for monitoring and management.

Logging is an essential aspect of monitoring and managing applications in Kubernetes. Each container in a pod generates logs, which you can view for a single pod with kubectl logs and collect cluster-wide with a log shipper such as Fluentd or Logstash. The shipped logs are typically stored and indexed in a backend such as Elasticsearch, where you can search, analyze, and visualize them, making it easier to identify and troubleshoot issues.

Alerting is another critical feature for managing applications in Kubernetes. Prometheus collects metrics and evaluates alerting rules, and its Alertmanager component routes the resulting alerts; Grafana is most often used to visualize those metrics on dashboards and can also send alerts. These alerts can notify developers or system administrators via email, Slack, or other communication channels, allowing them to take prompt action.
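
For a sense of what an alert condition looks like, here is a sketch of a Prometheus alerting rule file. It assumes kube-state-metrics is installed (it exports the two kube_deployment_* metrics used below); the group name, alert name, threshold duration, and severity label are illustrative choices.

```yaml
groups:
  - name: my-node-app-alerts               # hypothetical rule group
    rules:
      - alert: MyNodeAppReplicasUnavailable
        # Fires when fewer replicas are available than the Deployment asks for.
        expr: |
          kube_deployment_status_replicas_available{deployment="my-node-app"}
            < kube_deployment_spec_replicas{deployment="my-node-app"}
        for: 5m                            # condition must hold for 5 minutes
        labels:
          severity: warning
        annotations:
          summary: "my-node-app has fewer available replicas than desired"
```

Routing the alert to email or Slack is configured separately in Alertmanager, not in the rule itself.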

Scaling is a significant advantage of deploying to Kubernetes. Kubernetes allows you to scale applications horizontally by adding or removing replicas based on resource utilization or other performance metrics. You can scale manually, for example with kubectl scale, or automatically: the Horizontal Pod Autoscaler (HPA) adjusts the number of pod replicas, while the Cluster Autoscaler adds or removes nodes so that those pods have somewhere to run.
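
A minimal sketch of automated horizontal scaling for the my-node-app deployment might look like the following. It assumes a metrics source such as metrics-server is running in the cluster and that the deployment's containers declare CPU requests (utilization is computed relative to requests); the replica bounds and the 70% target are arbitrary example values.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-node-app
spec:
  scaleTargetRef:                # the workload to scale
    apiVersion: apps/v1
    kind: Deployment
    name: my-node-app
  minReplicas: 3                 # example lower bound
  maxReplicas: 10                # example upper bound
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70 # add replicas when average CPU exceeds 70% of requests
```

Once an autoscaler manages a deployment, it overrides the replica count set in the deployment manifest, so the two should not be tuned independently.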

To effectively monitor and manage applications in Kubernetes, consider the following best practices:

- Implement centralized logging and alerting for all applications and services.

- Configure automated scaling based on resource utilization and performance metrics.

- Monitor application performance and resource utilization regularly.

- Establish a process for responding to alerts and addressing performance issues.

- Regularly review and update monitoring and management strategies as needed.

By following these best practices, you can ensure optimal application performance and minimize downtime, ultimately leading to a better user experience and long-term deployment success.

Troubleshooting Common Issues in Kubernetes Deployments

Deploying applications to Kubernetes can be straightforward, but challenges may arise during the deployment and management process. Here are some common issues and best practices for addressing them:

Resource Quotas and Limitations

Kubernetes lets you cap the total resources a namespace may consume with resource quotas, and set per-container resource requests and limits, to prevent resource starvation and ensure fair usage across the cluster. However, incorrectly configured values can lead to application failures or poor performance: for example, pods that would exceed a quota are rejected at creation, and containers that exceed their memory limit are killed. To avoid this, set quotas and limits based on measured application requirements and performance metrics.
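
As an illustration, the sketch below pairs a namespace-wide quota with default per-container requests and limits. The namespace my-team, the object names, and every number are hypothetical and would need to be sized from real usage data.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-quota               # hypothetical name
  namespace: my-team             # hypothetical namespace
spec:
  hard:
    requests.cpu: "4"            # total CPU all pods in the namespace may request
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    pods: "20"
---
apiVersion: v1
kind: LimitRange
metadata:
  name: container-defaults       # hypothetical name
  namespace: my-team
spec:
  limits:
    - type: Container
      defaultRequest:            # applied when a container sets no requests
        cpu: 100m
        memory: 128Mi
      default:                   # applied when a container sets no limits
        cpu: 500m
        memory: 256Mi
```

The LimitRange matters here because a quota on requests or limits causes pods that omit those values to be rejected; the defaults fill them in automatically.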

Networking and Service Discovery

Kubernetes uses a flat network model, where all pods can communicate with each other using their IP addresses. However, pod IP addresses change whenever pods are recreated, so relying on them directly is fragile, and service discovery becomes harder to manage in large clusters. Services and cluster DNS cover the basic case; for more control, consider a service mesh, such as Istio or Linkerd, which adds finer-grained traffic management and security between services.

Rolling Updates and Rollbacks

Kubernetes supports rolling updates and rollbacks, allowing you to update or revert to a previous version of your application with little or no downtime. However, if a rolling update fails partway, it can leave old and new versions of your application running side by side. To mitigate this risk, ensure that your deployment manifest includes liveness and readiness probes so that Kubernetes only routes traffic to pods that are ready, and consider using canary deployments to test new versions of your application in a controlled manner.
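
The deployment from the step-by-step guide could be extended along these lines to make rolling updates safer. This is a sketch under assumptions: the /healthz endpoint, the 1.0.1 image tag, and all timing values are hypothetical and depend on how the application actually reports its health.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-node-app
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0          # never drop below the desired replica count
      maxSurge: 1                # bring up one extra pod at a time
  selector:
    matchLabels:
      app: my-node-app
  template:
    metadata:
      labels:
        app: my-node-app
    spec:
      containers:
        - name: my-node-app
          image: my-node-app:1.0.1   # hypothetical new version
          ports:
            - containerPort: 8080
          readinessProbe:            # gates traffic until the pod responds
            httpGet:
              path: /healthz         # assumed health endpoint
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10
          livenessProbe:             # restarts the container if it stops responding
            httpGet:
              path: /healthz
              port: 8080
            initialDelaySeconds: 15
            periodSeconds: 20
```

With these settings, a new pod that never becomes ready stalls the rollout instead of replacing healthy pods. You can watch progress with kubectl rollout status deployment/my-node-app and revert with kubectl rollout undo deployment/my-node-app.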

Resource Utilization and Scaling

Effective resource utilization and scaling are critical for ensuring optimal application performance in Kubernetes. However, incorrectly configured scaling policies can lead to resource starvation or wastage. To avoid this, regularly monitor resource utilization and performance metrics, and adjust scaling policies accordingly.

Security and Access Control

Kubernetes provides various security features, such as network policies, role-based access control (RBAC), and secrets management. However, misconfigured security policies can expose your application to security risks. To mitigate this risk, secure your Kubernetes cluster and application using best practices, such as enabling network policies, using RBAC for access control, and encrypting sensitive data. Secrets are only base64-encoded by default, so encryption at rest has to be enabled separately.
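
Two of these features can be sketched briefly: a default-deny ingress network policy and a read-only RBAC role. The namespace my-team, the user jane, and the object names are hypothetical, and network policies only take effect if the cluster's network plugin enforces them.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress     # hypothetical name
  namespace: my-team             # hypothetical namespace
spec:
  podSelector: {}                # empty selector matches every pod in the namespace
  policyTypes:
    - Ingress                    # no ingress rules listed, so all inbound traffic is denied
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: deployment-reader        # hypothetical name
  namespace: my-team
rules:
  - apiGroups: ["apps"]
    resources: ["deployments"]
    verbs: ["get", "list", "watch"]   # read-only access
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-deployments         # hypothetical name
  namespace: my-team
subjects:
  - kind: User
    name: jane                   # hypothetical user
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: deployment-reader
  apiGroup: rbac.authorization.k8s.io
```

A default-deny policy blocks all inbound traffic to the namespace's pods, so you would add further policies that explicitly allow the traffic each application needs.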

By following these best practices, you can minimize common issues in Kubernetes deployments and ensure long-term deployment success.

Popular Tools and Solutions for Deploying to Kubernetes

Deploying applications to Kubernetes can be simplified using various tools and solutions. Here are some popular options and their features:

Helm

Helm is a package manager for Kubernetes that simplifies the deployment of applications and services. Applications are packaged as charts, and public chart repositories offer pre-configured charts for many popular applications and services. Helm also supports custom charts, allowing you to create and share your own. Helm’s features include:

- Easy installation and management of Kubernetes applications.

- Support for custom charts and versioning.

- A large and active community.
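
To give a feel for what a custom chart contains, here is a sketch of the two files most charts start from, shown together for brevity. In a real chart they are separate files (Chart.yaml and values.yaml), and the value keys under values.yaml are hypothetical: they only have meaning if the chart's templates reference them.

```yaml
# --- Chart.yaml: chart metadata ---
apiVersion: v2                   # chart format used by Helm 3
name: my-node-app
description: A sketch of a chart for the example Node.js application
type: application
version: 0.1.0                   # version of the chart itself
appVersion: "1.0.0"              # version of the application it deploys

# --- values.yaml: default settings the templates can reference (separate file) ---
# replicaCount: 3
# image:
#   repository: my-node-app
#   tag: "1.0.0"
# service:
#   type: LoadBalancer
#   port: 80
```

The chart version and appVersion are tracked separately, which is what lets you release a chart fix without changing the application, and vice versa.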

Kustomize

Kustomize is a standalone tool to customize Kubernetes objects through a kustomization file. It simplifies the deployment of applications and services by allowing you to define a set of customizations for a base configuration. Kustomize’s features include:

- Easy customization of Kubernetes objects without modifying the original configuration.

- Support for overlays, patches, and functions.

- Integration with kubectl and other Kubernetes tools.
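
A small overlay shows the idea of customizing a base without editing it. This sketch assumes a hypothetical directory layout in which base/ holds the deployment and service manifests from earlier, and overlays/production/ holds the file below; the replica count and image tag are example values.

```yaml
# overlays/production/kustomization.yaml (hypothetical path)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base                   # the unmodified base configuration
patches:
  - target:
      kind: Deployment
      name: my-node-app
    patch: |-
      # JSON patch: run more replicas in production
      - op: replace
        path: /spec/replicas
        value: 5
images:
  - name: my-node-app
    newTag: 1.0.1                # override the image tag without touching the base
```

Because Kustomize is built into kubectl, an overlay like this can be applied with kubectl apply -k overlays/production.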

Jenkins X

Jenkins X is a CI/CD project that simplifies the process of deploying applications to Kubernetes. Despite its name, recent versions run pipelines on Tekton rather than on a classic Jenkins server. It provides a set of tools and best practices for continuous integration, continuous delivery, and automated testing. Jenkins X’s features include:

- Automated setup and configuration of Jenkins and other tools.

- Support for multiple cloud providers and Kubernetes distributions.

- Integration with popular development tools and workflows.

When choosing a tool or solution for deploying to Kubernetes, consider the following factors:

- Ease of use and installation.

- Integration with existing tools and workflows.

- Community support and activity.

- Scalability and performance.

By selecting the right tool or solution for your project requirements, you can simplify the deployment process and ensure long-term success in deploying and managing applications on Kubernetes.

Best Practices for Long-Term Kubernetes Deployment Success

Deploying applications to Kubernetes can provide numerous benefits, including scalability, flexibility, and resource optimization. However, to ensure long-term success, it’s essential to follow best practices for deploying and managing applications on Kubernetes. Here are some best practices to consider:

Continuous Integration and Testing

Continuous integration and testing are critical for ensuring that changes to your application code don’t introduce new issues or break existing functionality. By integrating your application code with Kubernetes early in the development process, you can catch issues before they become significant problems.

Automated Deployment

Automated deployment can help ensure that your application is deployed consistently and reliably. By automating the deployment process, you can reduce the risk of errors and ensure that your application is deployed quickly and efficiently.

Monitoring and Logging

Monitoring and logging are essential for ensuring optimal application performance and identifying issues before they become significant problems. By monitoring your application’s performance and logs, you can quickly identify and address issues, ensuring that your application remains available and responsive.

Scaling and Resource Optimization

Scaling and resource optimization are critical for ensuring that your application can handle increased traffic and usage. By monitoring your application’s resource usage and adjusting scaling policies accordingly, you can ensure that your application remains performant and responsive, even during periods of high traffic.

Security and Access Control

Security and access control are essential for ensuring that your application remains secure and protected from unauthorized access. By implementing security policies and access controls, you can ensure that only authorized users can access your application and its data.

Continuous Learning and Improvement

Finally, it’s essential to stay up-to-date with the latest Kubernetes features and improvements. By continuously learning and improving your Kubernetes deployment and management skills, you can ensure that your application remains secure, performant, and scalable over the long term.

By prioritizing continuous integration, testing, automated deployment, monitoring, scaling, security, and ongoing learning, you can keep your application available, responsive, and secure on Kubernetes over the long term.