What is Kubernetes HPA and Why is it Important?

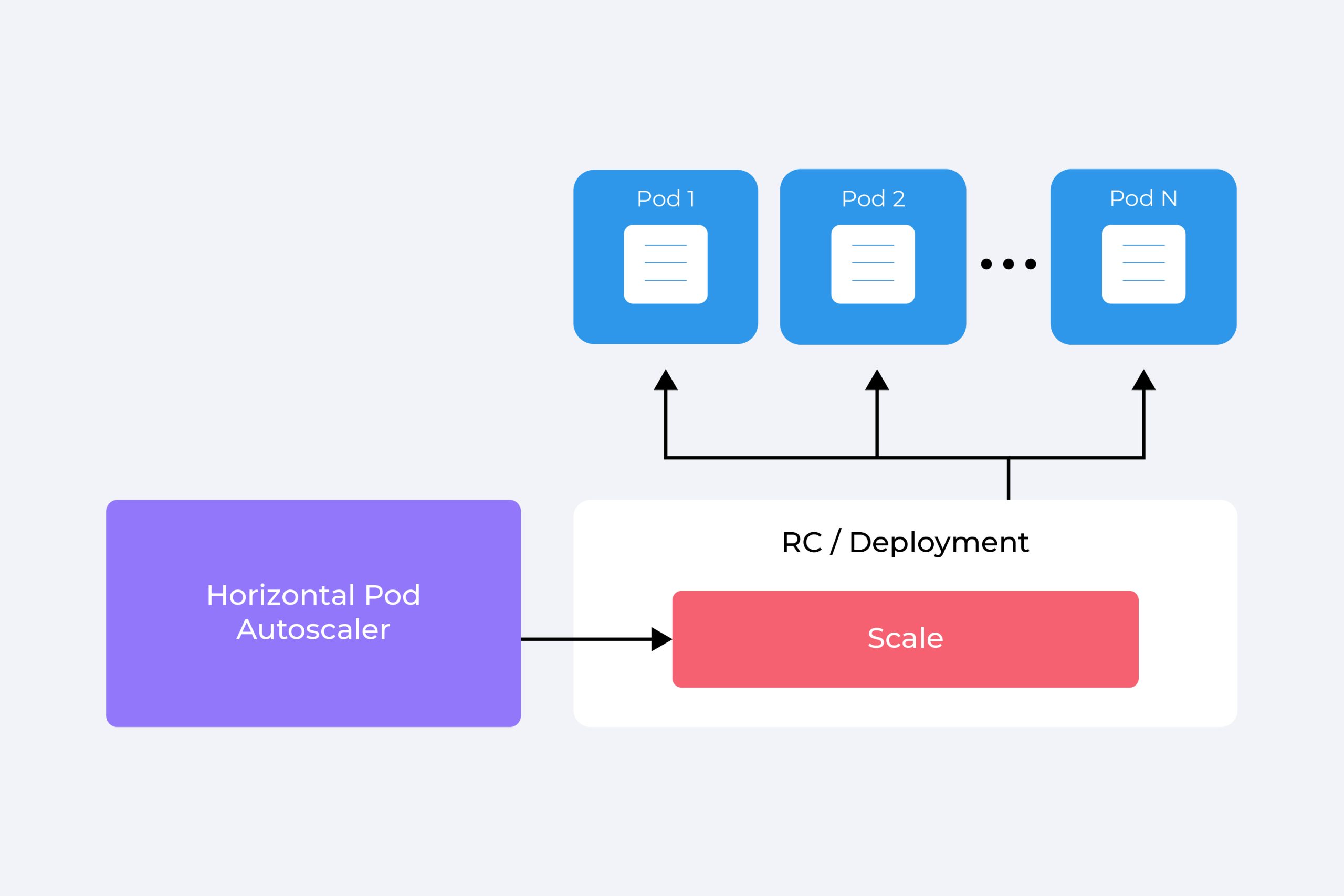

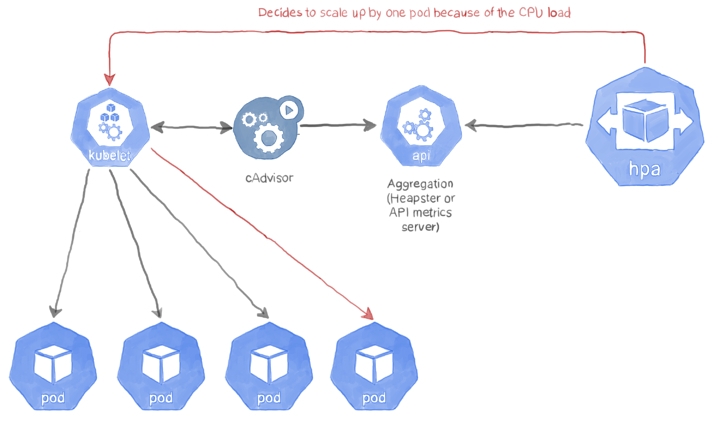

Kubernetes HPA (Horizontal Pod Autoscaler) is a built-in Kubernetes API resource and controller that automatically scales the number of pods in a replication controller, deployment, replica set, or stateful set based on observed CPU utilization (the autoscaling/v2 API can also scale on memory and on custom or external metrics). This scaling mechanism helps to maintain the desired performance level and ensures high availability for containerized applications. The HPA is particularly useful for dynamic and unpredictable workloads where manual scaling may not be efficient or timely.

The HPA in Kubernetes is an essential feature for managing modern applications that require scalability, flexibility, and reliability. By automatically adjusting the number of pods, the HPA ensures that the system can handle sudden spikes in traffic or resource usage. This, in turn, leads to improved application performance, reduced downtime, and lower operational costs.

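The HPA uses a simple yet effective algorithm to determine when to scale up or down. A control loop periodically reads the average CPU utilization of the pods, expressed as a percentage of the CPU they request, and compares it to a predefined target value. The desired replica count is the current count multiplied by the ratio of observed to target utilization, rounded up. If utilization exceeds the target, the ratio is above 1 and the HPA adds pods; if it falls below the target, the HPA removes pods to optimize resource usage. Small deviations from the target (within 10% by default) are ignored so that the replica count does not oscillate.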
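The scaling rule described above can be sketched in a few lines of Python. This is a minimal illustration of the ratio formula documented for the HPA controller, not the controller's actual code. The function name desired_replicas and the sample numbers are made up for illustration, and the 0.1 tolerance is the documented default.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the ratio formula (illustrative helper)."""
    ratio = current_utilization / target_utilization
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Otherwise scale proportionally and round up.
    return math.ceil(current_replicas * ratio)


print(desired_replicas(4, 90, 50))  # well above target: scale out
print(desired_replicas(4, 52, 50))  # within tolerance: no change
print(desired_replicas(4, 20, 50))  # well below target: scale in
```

The result is still subject to the minimum and maximum replica counts configured on the HPA, which this sketch leaves out.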

In summary, Kubernetes HPA is a powerful tool for managing containerized applications. It provides automatic scaling, high availability, and optimal resource usage, making it an essential feature for modern applications that require scalability, flexibility, and reliability.

How to Implement HPA in Kubernetes?

Implementing HPA in Kubernetes involves several steps, including meeting the prerequisites, creating a sample deployment, and configuring the HPA. Here’s a step-by-step guide to help you get started:

- Ensure that the Kubernetes cluster version is 1.23 or later, where the autoscaling/v2 API used in the HPA file below is stable; the older autoscaling/v2beta2 API was removed in 1.26. The cluster also needs a metrics source such as Metrics Server, because the HPA reads CPU usage from the resource metrics API.

- Create a sample deployment with at least one replica. Here’s an example deployment file:

      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: my-app
      spec:
        replicas: 1
        selector:
          matchLabels:
            app: my-app
        template:
          metadata:
            labels:
              app: my-app
          spec:
            containers:
              - name: my-app-container
                image: my-app-image:latest
                resources:
                  requests:
                    cpu: 100m
                  limits:
                    cpu: 200m

- Create a horizontal pod autoscaler (HPA) for the sample deployment. Here’s an example HPA file:

      apiVersion: autoscaling/v2
      kind: HorizontalPodAutoscaler
      metadata:
        name: my-app-hpa
      spec:
        scaleTargetRef:
          apiVersion: apps/v1
          kind: Deployment
          name: my-app
        minReplicas: 1
        maxReplicas: 10
        metrics:
          - type: Resource
            resource:
              name: cpu
              target:
                type: Utilization
                averageUtilization: 50

- Apply the deployment and HPA files using the kubectl command:

      kubectl apply -f deployment.yaml
      kubectl apply -f hpa.yaml

- Verify that the HPA is working as expected by checking the status of the HPA:

      kubectl get hpa

In summary, implementing HPA in Kubernetes involves meeting the prerequisites, creating a sample deployment, and configuring the HPA. By following the step-by-step guide outlined above, you can ensure that your containerized applications are automatically scaled based on observed CPU utilization, leading to improved performance, high availability, and optimal resource usage.
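When reading the HPA status, it helps to remember that CPU utilization is measured against the CPU request of the pods, not against node capacity. The sketch below shows that calculation for the sample deployment's 100m request; the per-pod usage figures and the function name average_utilization are hypothetical.

```python
from typing import Sequence


def average_utilization(usage_millicores: Sequence[float],
                        request_millicores: float) -> float:
    """Total CPU usage as a percentage of total requested CPU."""
    total_request = request_millicores * len(usage_millicores)
    return 100.0 * sum(usage_millicores) / total_request


# Hypothetical usage of three pods that each request 100m of CPU.
pod_usage = [80, 60, 70]
utilization = average_utilization(pod_usage, request_millicores=100)
print(f"{utilization:.0f}% of requested CPU")
```

With a 50% target, a reading like this is above target, so the HPA would be expected to add pods.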

Key Considerations for HPA in Kubernetes

When implementing HPA in Kubernetes, there are several key considerations to keep in mind to ensure optimal performance, cost, and scalability. Here are some of the most important factors to consider:

- Minimum and maximum number of pods: It’s essential to set a minimum and maximum number of pods for the HPA to ensure that the system can handle sudden spikes in traffic or resource usage. The minimum number of pods should be set to ensure high availability, while the maximum number of pods should be set to prevent resource exhaustion and maintain performance.

- CPU utilization target: The CPU utilization target is the average utilization, as a percentage of the CPU requested by the pods, that the HPA tries to maintain; it scales out when the observed average is above the target and scales in when it is below. Because the value is relative to requests, every container in the target pods needs a CPU request. Set the target low enough to leave headroom for sudden spikes in traffic without overprovisioning resources. A good starting point is 50%, but it may vary depending on the application’s requirements.

- Impact of HPA on the overall performance and cost of the system: While HPA can improve the performance and scalability of containerized applications, it can also increase the cost of the system. It’s essential to monitor the overall performance and cost of the system to ensure that the benefits of HPA outweigh the costs. This includes monitoring the CPU usage, memory usage, and network usage of the pods, as well as the cost of running the pods in the cluster.

By considering these key factors, you can ensure that the HPA is implemented effectively and efficiently in Kubernetes. This, in turn, leads to improved performance, high availability, and optimal resource usage for containerized applications.
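One way to sanity-check the minimum, maximum, and target values together is to estimate how many pods an expected peak would need. The sketch below assumes a steady state in which average utilization sits at the target; the peak figure of 1200 millicores and the helper names are hypothetical, while the 100m request, 50% target, and 1 to 10 replica range come from the sample files above.

```python
import math


def replicas_for_load(total_cpu_millicores: float,
                      request_millicores: float,
                      target_utilization: float) -> int:
    """Pods needed so that average utilization sits at the target."""
    per_pod_budget = request_millicores * target_utilization / 100.0
    return max(1, math.ceil(total_cpu_millicores / per_pod_budget))


def clamp(replicas: int, min_replicas: int, max_replicas: int) -> int:
    """Apply the HPA's minimum and maximum replica bounds."""
    return max(min_replicas, min(max_replicas, replicas))


needed = replicas_for_load(1200, request_millicores=100, target_utilization=50)
allowed = clamp(needed, min_replicas=1, max_replicas=10)
print(f"needed={needed}, allowed={allowed}")
if needed > allowed:
    print("maxReplicas is too low for this peak; pods would run above target")
```

If the needed count exceeds the maximum, either raise the maximum, raise the per-pod request, or accept that the pods will run hotter than the target during the peak.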

Real-World Examples of HPA in Kubernetes

HPA is a powerful feature in Kubernetes that has been widely adopted in production environments. Here are some real-world examples of HPA in Kubernetes, highlighting the benefits and challenges of using HPA in production environments:

- Example 1: A leading e-commerce company used HPA to automatically scale the number of pods in a deployment based on observed CPU utilization. By implementing HPA, the company was able to handle sudden spikes in traffic and ensure high availability for their customers. However, the company faced challenges in setting the appropriate CPU utilization target and monitoring the overall performance and cost of the system.

- Example 2: A software development company used HPA to automatically scale the number of pods in a stateful set based on observed CPU utilization. By implementing HPA, the company was able to ensure that their database cluster could handle sudden spikes in traffic and maintain performance. However, the company faced challenges in setting the appropriate minimum and maximum number of pods and monitoring the overall cost of the system.

- Example 3: A gaming company used HPA to automatically scale the number of pods in a replication controller based on observed CPU utilization. By implementing HPA, the company was able to ensure that their gaming platform could handle sudden spikes in traffic and maintain performance. However, the company faced challenges in setting the appropriate CPU utilization target and monitoring the overall cost of the system.

In all these examples, HPA proved to be a valuable feature in managing containerized applications. However, it’s essential to consider the key considerations and best practices for HPA in Kubernetes to ensure optimal performance, cost, and scalability. By doing so, you can leverage the benefits of HPA and ensure that your containerized applications are highly available, performant, and cost-effective.

Best Practices for HPA in Kubernetes

Implementing HPA in Kubernetes can be a complex process, and it’s essential to follow best practices to ensure optimal performance, cost, and scalability. Here are some best practices for HPA in Kubernetes:

- Monitoring and alerting: It’s crucial to monitor the CPU usage, memory usage, and network usage of the pods, as well as the cost of running the pods in the cluster. Setting up monitoring and alerting can help you detect issues early and take corrective action. You can use tools like Prometheus, Grafana, and Elasticsearch to monitor and alert on Kubernetes resources.

- Testing and validation: Before implementing HPA in a production environment, it’s essential to test and validate the configuration in a staging environment. This can help you identify issues and optimize the configuration for your specific use case. Kubernetes does not ship a dedicated stress-testing tool, so use a load generator such as k6, Locust, or hey, or a simple request loop run from a temporary pod, to simulate workloads and watch how the HPA responds.

- Continuous integration and delivery: Keeping the HPA manifest in version control and applying it through a continuous integration and delivery (CI/CD) pipeline makes scaling settings reviewable and consistent across environments. You can use tools like Jenkins, GitLab, and CircleCI to automate the HPA configuration and deployment process. When an HPA manages a deployment, consider leaving the replicas field out of the deployment manifest so that each pipeline run does not reset the replica count the HPA has chosen.

By following these best practices, you can ensure that the HPA is implemented effectively and efficiently in Kubernetes. This, in turn, leads to improved performance, high availability, and optimal resource usage for containerized applications.
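Before running a load test against a staging cluster, it can be useful to reason about how a configuration should behave. The following Python sketch simulates a simplified HPA loop over a made-up series of CPU demand values. It is a rough model under stated assumptions, not the controller's implementation: usage is spread evenly over pods and capped at the 200m limit from the sample deployment, and scale-down is delayed by a window counted in loop steps, whereas the real controller uses a time-based stabilization window (300 seconds by default for scale-down). The function name simulate and all demand figures are hypothetical.

```python
import math
from collections import deque


def simulate(demand_millicores, request=100, limit=200, target=50,
             min_replicas=1, max_replicas=10, tolerance=0.1,
             stabilization_steps=3):
    """Return the replica count after each step of a simplified HPA loop."""
    replicas = min_replicas
    recent = deque(maxlen=stabilization_steps)  # recent recommendations
    history = []
    for demand in demand_millicores:
        # Usage per pod cannot exceed the container's CPU limit.
        per_pod = min(demand / replicas, limit)
        ratio = (100.0 * per_pod / request) / target
        if abs(ratio - 1.0) <= tolerance:
            recommended = replicas
        else:
            recommended = math.ceil(replicas * ratio)
        recommended = max(min_replicas, min(max_replicas, recommended))
        recent.append(recommended)
        # Scale up at once; scale down only to the highest recent recommendation.
        replicas = max(recent)
        history.append(replicas)
    return history


# Hypothetical total CPU demand per step: idle, a spike, then a drop.
print(simulate([40, 400, 400, 400, 100, 100, 100, 100, 100]))
```

In this model the replica count climbs over a few steps during the spike and holds for the length of the window after demand drops, which is the behavior to look for when watching a real HPA under a load test.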

Alternatives to HPA in Kubernetes

While HPA is a powerful feature in Kubernetes, it’s not the only option for scaling containerized applications. Here are some alternatives to HPA in Kubernetes, along with their advantages and disadvantages:

- Manual scaling: Manual scaling involves manually adjusting the number of pods in a replication controller, deployment, replica set, or stateful set. This approach is simple and easy to implement but lacks the automation and flexibility of HPA. Manual scaling is best suited for applications with predictable and stable workloads.

- Vertical scaling: Vertical scaling involves increasing the resources (CPU or memory) of a single pod rather than adding pods; in Kubernetes this can be automated with the Vertical Pod Autoscaler, a separate add-on. This approach is useful for applications that cannot easily run as multiple replicas, but it is limited by the size of a single node. Applying new resource values has traditionally required restarting the pod, which can impact availability and performance.

- Cluster autoscaling: Cluster autoscaling involves automatically scaling the number of nodes in a Kubernetes cluster. The Cluster Autoscaler adds nodes when pods cannot be scheduled for lack of resources and removes nodes that stay underused. It complements HPA rather than replacing it: HPA adds pods, and cluster autoscaling makes sure there are nodes to run them. However, cluster autoscaling can be more complex to implement and manage than HPA.

When choosing between HPA and these alternatives, it’s essential to consider the specific requirements and constraints of your application. HPA is best suited for applications with variable or unpredictable workloads that can run as multiple replicas, manual scaling for predictable and stable workloads, vertical scaling for applications that cannot easily scale out, and cluster autoscaling for making node capacity follow pod demand. By understanding the advantages and disadvantages of each approach, you can make an informed decision and ensure that your containerized applications are highly available, performant, and cost-effective.

Future Trends and Developments in HPA for Kubernetes

HPA is a powerful feature in Kubernetes, and its importance and benefits are clear. However, the field of container orchestration is constantly evolving, and HPA is no exception. Here are some future trends and developments in HPA for Kubernetes that you should be aware of:

- Integration with other cloud-native tools: HPA is just one of many features in the Kubernetes ecosystem. In the future, we can expect to see more integration between HPA and other cloud-native tools, such as service meshes, ingress controllers, and serverless functions. This integration can help optimize the performance, cost, and scalability of containerized applications even further.

- Machine learning-based scaling: Traditional HPA is reactive: it relies on predefined metrics, such as CPU utilization, and scales only after they change. Machine learning-based scaling can instead use historical data and real-time analytics to predict demand and scale before a spike arrives. This approach can help optimize resource usage and cost even further and reduce the risk of downtime and performance degradation.

- Multi-dimensional scaling: The original autoscaling/v1 API scales the number of pods based on a single metric, CPU utilization. However, modern applications often have complex workloads that require scaling based on multiple metrics, such as memory usage, network traffic, and storage usage. The autoscaling/v2 API already accepts several metrics in one HPA and scales to the largest replica count that any of them calls for. Further work on multi-dimensional scaling can help optimize resource usage and cost based on multiple metrics and ensure that containerized applications are highly available, performant, and cost-effective.

By staying up-to-date with these future trends and developments in HPA for Kubernetes, you can ensure that your containerized applications are always optimized for performance, cost, and scalability. Whether you’re a developer, DevOps engineer, or IT manager, understanding and implementing HPA in Kubernetes is essential for modern applications in a cloud-native world.
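Scaling on several metrics at once can be illustrated with a short Python sketch. With the autoscaling/v2 API, an HPA that lists multiple metrics computes a desired replica count for each one and uses the largest. The code below mirrors that rule in simplified form; the metric names, values, and function names are hypothetical, and this is not the controller's implementation.

```python
import math


def desired_for_metric(current_replicas, current_value, target_value,
                       tolerance=0.1):
    """Replica count a single metric asks for, using the ratio formula."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


def desired_for_all(current_replicas, metrics):
    """metrics maps a metric name to a (current_value, target_value) pair."""
    return max(
        desired_for_metric(current_replicas, current, target)
        for current, target in metrics.values()
    )


# Hypothetical readings: CPU is below target, memory is well above it.
metrics = {
    "cpu_utilization": (40, 50),
    "memory_utilization": (90, 60),
}
print(desired_for_all(3, metrics))
```

Taking the maximum means the most constrained resource drives the replica count, so a pod set is never scaled in while any one metric is still above its target.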

Conclusion: The Value of HPA in Kubernetes for Modern Applications

In this comprehensive guide, we have explored Kubernetes HPA, its role in managing containerized applications, and how it helps to automatically scale the number of pods based on observed CPU utilization. We have also discussed the key considerations for HPA in Kubernetes, real-world examples, best practices, and alternatives to HPA. Furthermore, we have highlighted the future trends and developments in HPA for Kubernetes.

HPA is an essential feature in Kubernetes that provides numerous benefits for modern applications, including improved performance, cost optimization, and scalability. By automatically scaling the number of pods based on observed CPU utilization, HPA helps ensure that containerized applications are always available, performant, and cost-effective.

However, implementing HPA in Kubernetes requires careful consideration of various factors, such as the minimum and maximum number of pods, CPU utilization target, and the impact of HPA on the overall performance and cost of the system. It is essential to follow best practices, such as monitoring and alerting, testing and validation, and continuous integration and delivery, to ensure that HPA is implemented effectively and efficiently.

While HPA is a powerful feature in Kubernetes, it is not the only option for scaling containerized applications. Manual scaling, vertical scaling, and cluster autoscaling are alternatives to HPA, each with its advantages and disadvantages. Understanding the differences between these scaling options is essential for making informed decisions about the best approach for your specific use case.

Finally, the future trends and developments in HPA for Kubernetes, such as integration with other cloud-native tools, machine learning-based scaling, and multi-dimensional scaling, hold great promise for optimizing the performance, cost, and scalability of containerized applications even further. By staying up-to-date with these trends and developments, you can ensure that your containerized applications are always optimized for modern cloud-native environments.