Introduction: AWS CLI and Its Versatile ‘ls’ Command

The AWS Command Line Interface (CLI) is a powerful tool that enables users to manage various AWS services directly from the command line. By utilizing the AWS CLI, developers, IT professionals, and enthusiasts can streamline their workflows, automate tasks, and efficiently control their AWS resources. Among the numerous commands available, the ‘ls’ command (run as ‘aws s3 ls’) lists the buckets, prefixes (the S3 counterpart of directories), and objects stored in Amazon S3 (Simple Storage Service).

Getting Started: Installing and Configuring AWS CLI

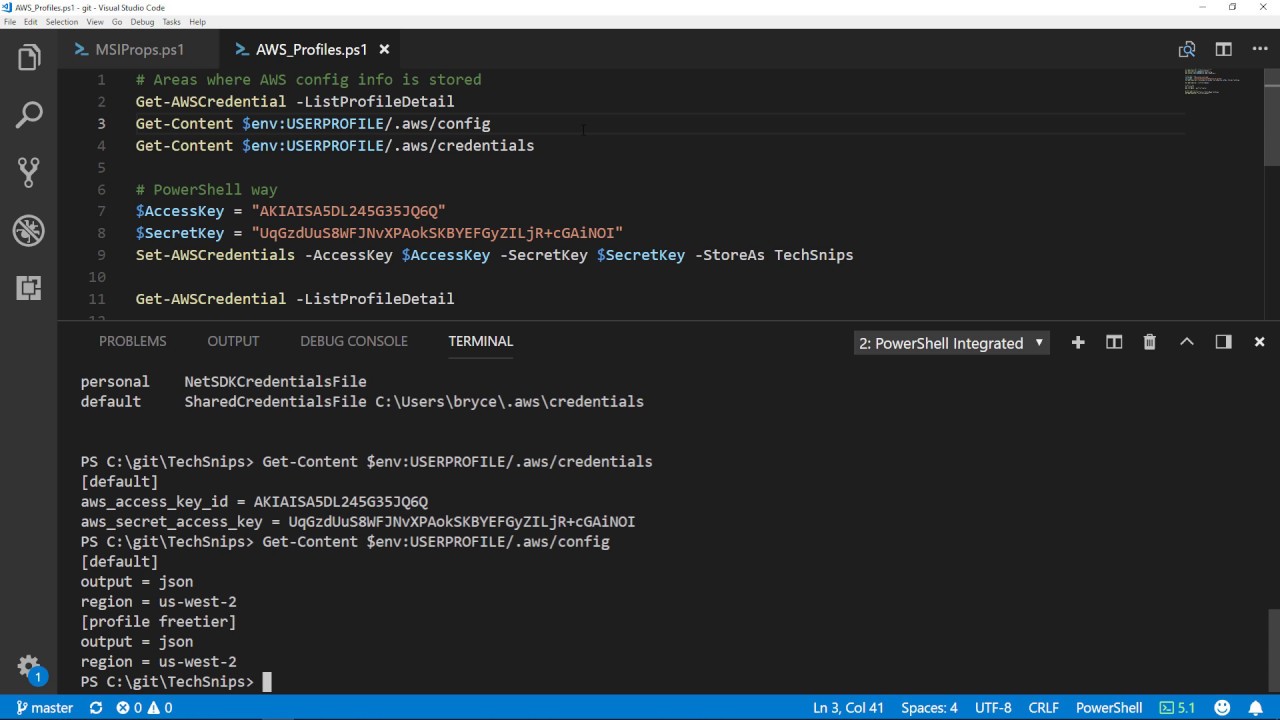

Before utilizing the AWS CLI ‘ls’ command, you must first install and configure the AWS CLI on your local machine. This process involves meeting specific prerequisites and following a step-by-step guide to ensure successful installation and configuration.

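First, ensure that your system meets the necessary prerequisites. AWS CLI version 2, the current major version, is distributed as a self-contained installer for Windows, macOS, and Linux and does not need a separate Python installation. Only the older version 1, which is installed with pip, requires Python on your system; check the AWS CLI documentation for the Python versions it currently supports.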

Next, follow these steps to install and configure the AWS CLI:

- Download the AWS CLI installer for your operating system from the official AWS CLI download page.

- Run the installer and follow the on-screen instructions to complete the installation.

- After installation, open a new terminal or command prompt window to ensure the system recognizes the AWS CLI.

- To configure the AWS CLI, run the command ‘aws configure’ and enter the following information when prompted:

  - Access Key ID: Your AWS access key.

  - Secret Access Key: Your AWS secret access key.

  - Default region name: The AWS region you want to use (e.g., ‘us-west-2’).

  - Default output format: The output format for AWS CLI commands (e.g., ‘json’, ‘text’, or ‘table’).

Once you have completed these steps, the AWS CLI ‘ls’ command will be accessible and ready for use.
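A quick way to confirm that the installation and configuration worked is to ask the CLI for its version and for the account behind the configured credentials. The following is a minimal sketch, not an official procedure; the function name ‘check_aws_cli’ is made up for illustration, while ‘aws --version’ and ‘aws sts get-caller-identity’ are standard CLI commands.

```shell
# Verify that the AWS CLI is installed and that credentials resolve.
# check_aws_cli is a hypothetical helper name used only in this sketch.
check_aws_cli() {
    # `command -v` succeeds only if something named `aws` can be run.
    if ! command -v aws >/dev/null 2>&1; then
        echo "aws: command not found; check the installation and your PATH" >&2
        return 1
    fi
    # Print the installed CLI version.
    aws --version || return 1
    # Ask STS which account the configured credentials belong to;
    # this fails if no valid credentials are available.
    aws sts get-caller-identity --query Account --output text
}
```

Calling ‘check_aws_cli’ in a new terminal should print the CLI version followed by a 12-digit account ID; a non-zero exit status suggests the installation or the credentials need another look.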

Basic Usage: AWS CLI ‘ls’ Command Syntax and Options

The AWS CLI ‘ls’ command is used to list files and directories within AWS S3 buckets. To use the ‘ls’ command effectively, it is essential to understand its basic syntax and available options. The primary syntax for the ‘ls’ command is as follows:

    aws s3 ls [<options>] [<bucket>/[<prefix>]]

In this syntax, ‘bucket’ refers to the name of the S3 bucket, and ‘prefix’ is an optional parameter that filters the results to only include objects whose keys begin with the specified prefix.

Some of the most common options for the AWS CLI ‘ls’ command include:

- --human-readable: Displays file sizes in a human-readable format (e.g., ‘10.0 KiB’, ‘5.0 MiB’).

- --recursive: Lists all objects in the specified bucket or prefix, including all subdirectories.

- --summarize: Displays a summary of the objects in the specified bucket or prefix, including the total number of objects and the total size of the objects.

To demonstrate the use of the ‘ls’ command, consider the following example:

    aws s3 ls s3://my-bucket/ --human-readable --recursive

In this example, the ‘ls’ command lists all objects in the ‘my-bucket’ bucket, including all subdirectories, in a human-readable format. This command can help you quickly and easily view the contents of your S3 buckets and manage your files and directories within AWS CLI.
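The ‘--summarize’ option is also convenient in scripts, because the summary lines have a fixed shape that is easy to parse. The sketch below assumes the summary is printed as a ‘Total Objects: N’ line, which is how current CLI versions format it; the helper name ‘count_objects’ is hypothetical.

```shell
# Print the number of objects under an S3 URI, e.g. s3://my-bucket/logs/
# count_objects is a hypothetical helper name used only in this sketch.
count_objects() {
    # --summarize appends "Total Objects: N" and "Total Size: M" lines;
    # awk keeps only the count from the first of them.
    aws s3 ls "$1" --recursive --summarize |
        awk '$1 == "Total" && $2 == "Objects:" { print $3 }'
}
```

For example, ‘count_objects s3://my-bucket/’ prints a single number that a script can compare against an expected value.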

Advanced Techniques: Filtering and Sorting File Lists

When managing large file lists within AWS S3, using the AWS CLI ‘ls’ command with various options can help you efficiently filter and sort your files. This section discusses advanced techniques for managing file lists using the ‘ls’ command.

Filtering Files

The ‘ls’ command has no filtering options of its own beyond the prefix in the S3 path. The ‘--include’ and ‘--exclude’ options belong to ‘aws s3 cp’, ‘mv’, ‘rm’, and ‘sync’; ‘aws s3 ls’ rejects them as unknown options. To filter a listing, narrow it with a prefix where possible and pipe the output through a text tool such as ‘grep’ or ‘awk’. For example, the following command lists all objects in the ‘my-bucket’ bucket that have the word ‘report’ in their name:

    aws s3 ls s3://my-bucket/ --recursive | grep "report"

To exclude specific files or directories, invert the match. For instance, the following command lists all objects in the ‘my-bucket’ bucket, excluding the ‘temp/’ directory (the key is the fourth column of the output):

    aws s3 ls s3://my-bucket/ --recursive | awk '$4 !~ /^temp\//'

Sorting Files

The ‘ls’ command has no sorting options either; objects are returned in key order. To sort by another attribute, such as the object’s size or last modified time, pipe the output through ‘sort’. For example, the following command lists all objects in the ‘my-bucket’ bucket, sorted by size in descending order:

    aws s3 ls s3://my-bucket/ --recursive | sort -k3,3nr

Leave out ‘--human-readable’ when sorting by size so that the third column stays a plain byte count. Similarly, the following command lists all objects in the ‘my-bucket’ bucket, sorted by last modified time in ascending order:

    aws s3 ls s3://my-bucket/ --recursive | sort -k1,1 -k2,2

By mastering these advanced techniques for filtering and sorting file lists, you can significantly improve your productivity and efficiency when managing AWS S3 resources using the AWS CLI ‘ls’ command.
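One portable way to filter and sort a listing is to post-process the text that ‘aws s3 ls --recursive’ prints, using standard tools. The helpers below are a minimal sketch under that assumption: their names are hypothetical, they read the listing on standard input, and they expect sizes in bytes (no ‘--human-readable’).

```shell
# Each helper reads `aws s3 ls --recursive` output on stdin. Lines look like:
#   2024-01-02 11:00:00       2048 reports/q1.csv
# All function names here are hypothetical, chosen for this sketch.

# Keep lines matching an extended regular expression (matched against the whole line).
filter_listing() { grep -E -- "$1"; }

# Drop lines matching an extended regular expression.
exclude_listing() { grep -Ev -- "$1"; }

# Largest objects first: numeric, reversed sort on the third column (size in bytes).
sort_by_size_desc() { sort -k3,3nr; }

# Oldest first: ISO-style dates and times sort chronologically as plain text.
sort_by_modified() { sort -k1,1 -k2,2; }
```

The helpers compose in a pipeline, for example ‘aws s3 ls s3://my-bucket/ --recursive | exclude_listing ' temp/' | sort_by_size_desc’. For very large buckets, a server-side prefix in the S3 path reduces the amount of output before any client-side filtering happens.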

Integration: Utilizing AWS CLI ‘ls’ Command in Scripts and Workflows

The AWS CLI ‘ls’ command can be integrated into scripts and workflows for automation purposes, allowing you to manage AWS S3 resources more efficiently. This section discusses examples and best practices for seamless integration.

Bash Script Example

Consider the following bash script, which lists all objects in an S3 bucket and archives them to an Amazon S3 Glacier vault:

    #!/bin/bash

    # Credentials are read from the profile created by 'aws configure'
    # (or from the environment); avoid hardcoding keys in scripts.

    # Set AWS S3 bucket name and Glacier vault name
    BUCKET_NAME=my-bucket
    VAULT_NAME=my-vault

    # List all objects in the S3 bucket (sizes in bytes keep the columns fixed)
    aws s3 ls "s3://$BUCKET_NAME" --recursive > object_list.txt

    # Archive objects to the Glacier vault
    while read -r _date _time _size key; do
        tmp_file=$(mktemp)
        # Download the object, then upload it to the vault as an archive
        aws s3 cp "s3://$BUCKET_NAME/$key" "$tmp_file" < /dev/null &&
            aws glacier upload-archive --account-id - --vault-name "$VAULT_NAME" \
                --archive-description "$key" --body "$tmp_file" < /dev/null
        rm -f "$tmp_file"
    done < object_list.txt

In this example, the 'aws s3 ls' command is used to list all objects in the specified S3 bucket and save the output to a text file. The script then reads the text file line by line, takes everything after the date, time, and size columns as the object key, downloads that object to a temporary file, and uploads it to the Amazon S3 Glacier vault using the 'aws glacier upload-archive' command. The '--account-id -' argument tells the CLI to use the account that owns the current credentials.

Best Practices

- Error Handling: Ensure that your scripts handle errors gracefully. Use the '--debug' option to troubleshoot issues and add error-handling logic to your scripts.

- Automation Tools: Consider using automation tools such as AWS CloudFormation or AWS Systems Manager Automation to manage your scripts and workflows.

- Version Control: Use version control systems like Git to track changes to your scripts and collaborate with your team.
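As an illustration of the error-handling advice, a script can check the exit status of 'aws s3 ls' before it processes the output. This is a minimal sketch; the function name 'list_or_fail' and the message wording are assumptions made for the example, not part of the AWS CLI.

```shell
# List an S3 URI into a file and stop with a clear message on failure.
# list_or_fail is a hypothetical helper name used only in this sketch.
list_or_fail() {
    uri=$1
    out_file=$2
    # The AWS CLI exits with a non-zero status when the call fails.
    if ! aws s3 ls "$uri" --recursive > "$out_file"; then
        echo "error: could not list $uri (see messages above)" >&2
        return 1
    fi
    echo "wrote $(wc -l < "$out_file" | tr -d ' ') entries to $out_file"
}
```

A caller might write 'list_or_fail s3://my-bucket/ object_list.txt || exit 1'. Be aware that 'aws s3 ls' may also return a non-zero status when a prefix matches no objects, so an empty result can look like a failure; rerunning the command with '--debug' helps tell the two cases apart.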

By integrating the AWS CLI 'ls' command into scripts and workflows, you can automate repetitive tasks, improve your productivity, and efficiently manage AWS S3 resources at scale.

Troubleshooting: Common Issues and Solutions

When using the AWS CLI 'ls' command, you may encounter various issues and errors. This section addresses common problems and provides troubleshooting tips and solutions to help you overcome these challenges.

Error: "An error occurred (403) when calling the HeadObject operation: Forbidden"

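This error occurs when the credentials used by the AWS CLI do not have the necessary permissions to access the specified S3 bucket or object. A HeadObject failure is typical of commands such as 'aws s3 cp'; with 'aws s3 ls' the same problem usually appears as an AccessDenied error on the ListObjectsV2 operation. To resolve this issue, ensure that the IAM user or role behind your AWS CLI credentials is allowed the 's3:ListBucket' action on the bucket (and 's3:GetObject' on its objects if you also download them), and that no bucket policy explicitly denies the request.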
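When a permission error is suspected, a small check against the bucket itself can narrow the problem down before you inspect individual objects. The sketch below uses 'aws s3api head-bucket', which succeeds only if the bucket exists and the current credentials may access it; the function name 'check_bucket_access' is hypothetical.

```shell
# Report whether the current credentials can reach a bucket at all.
# check_bucket_access is a hypothetical helper name used only in this sketch.
check_bucket_access() {
    # head-bucket prints nothing on success and fails on 403/404 responses.
    if aws s3api head-bucket --bucket "$1" >/dev/null 2>&1; then
        echo "ok: $1 is reachable with the current credentials"
    else
        echo "failed: $1 is missing, or access is denied" >&2
        return 1
    fi
}
```

If 'check_bucket_access my-bucket' fails, running 'aws s3api head-bucket --bucket my-bucket' directly shows the underlying status code, which distinguishes a missing bucket from a denied request.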

Error: "Unknown options: [option]"

This error occurs when you use an invalid or unsupported option with the 'ls' command. To resolve this issue, review the available options for the 'ls' command and ensure that you are using a valid option.

Error: "A client error (InvalidBucketName) occurred when calling the HeadBucket operation: The specified bucket is not valid."

This error occurs when the specified bucket name is invalid or does not exist. To resolve this issue, double-check the bucket name and ensure that it is spelled correctly and

Security Best Practices: Protecting Your AWS Resources

When using the AWS CLI 'ls' command to manage AWS S3 resources, it is crucial to follow security best practices to protect your resources and ensure secure access and management.

Access Control

To control access to your S3 buckets and objects, use AWS Identity and Access Management (IAM) policies, bucket policies, and Access Control Lists (ACLs). These tools allow you to specify who can access your resources and what actions they can perform.

Encryption

To protect your data in transit and at rest, use encryption when transferring data to and from S3 and when storing data in S3. AWS S3 supports server-side encryption (SSE) and client-side encryption (CSE), allowing you to choose the encryption method that best meets your needs.
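To see which server-side encryption a bucket applies by default, you can query its encryption configuration. This is a minimal sketch that assumes the bucket has a default encryption rule and that your credentials are allowed to read it; the helper name 'bucket_encryption' is hypothetical, while 'aws s3api get-bucket-encryption' is a standard CLI command.

```shell
# Print the default server-side encryption algorithm of a bucket,
# typically "AES256" (SSE-S3) or "aws:kms" (SSE-KMS).
# bucket_encryption is a hypothetical helper name used only in this sketch.
bucket_encryption() {
    aws s3api get-bucket-encryption --bucket "$1" \
        --query 'ServerSideEncryptionConfiguration.Rules[0].ApplyServerSideEncryptionByDefault.SSEAlgorithm' \
        --output text
}
```

For individual uploads, the '--sse' option of 'aws s3 cp' (for example '--sse AES256') requests server-side encryption explicitly.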

Secure Credential Management

To manage your AWS CLI credentials securely, use AWS Security Token Service (STS) to generate temporary security credentials, or use AWS Systems Manager Parameter Store to securely store and manage your credentials.
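As a sketch of the temporary-credentials approach, the function below requests a session token from STS and exports it through the standard AWS environment variables. It assumes the CLI is already configured with long-term IAM user credentials that are allowed to call 'aws sts get-session-token'; the function name 'use_temp_credentials' and the one-hour default are illustrative choices.

```shell
# Export short-lived credentials for the current shell session.
# use_temp_credentials is a hypothetical helper name used only in this sketch.
use_temp_credentials() {
    # Request credentials valid for the given number of seconds (default: 1 hour).
    creds=$(aws sts get-session-token --duration-seconds "${1:-3600}" \
        --query 'Credentials.[AccessKeyId,SecretAccessKey,SessionToken]' \
        --output text) || return 1
    # Text output is whitespace-separated: key id, secret key, session token.
    set -- $creds
    [ $# -eq 3 ] || return 1
    export AWS_ACCESS_KEY_ID="$1" AWS_SECRET_ACCESS_KEY="$2" AWS_SESSION_TOKEN="$3"
}
```

After 'use_temp_credentials 900', later commands in the same shell, such as 'aws s3 ls', run with credentials that expire after 15 minutes.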

Least Privilege Principle

Follow the principle of least privilege when granting access to your S3 resources. Only grant the minimum permissions necessary for users to perform their tasks, and regularly review and update your IAM policies and bucket policies to ensure they are up-to-date and reflect the current security requirements.

Multi-Factor Authentication (MFA)

Enable MFA Delete on your versioned S3 buckets to prevent unauthorized deletion of your data. MFA Delete requires a second form of authentication before anyone can permanently delete an object version or change the bucket's versioning state, adding an extra layer of security to your S3 resources.

By following these security best practices, you can ensure that your AWS S3 resources are protected and secure, and that your data is accessible only to authorized users and applications.

Conclusion: Enhancing AWS CLI Productivity with 'ls' Command

The AWS CLI 'ls' command is a powerful tool for managing AWS S3 resources, providing users with the ability to list files and directories efficiently and effectively. By mastering the basic syntax and available options, as well as advanced techniques for filtering and sorting file lists, you can significantly improve your productivity and efficiency in managing AWS services.

Integrating the 'ls' command into scripts and workflows for automation purposes can further enhance your productivity, allowing you to manage large file lists and perform repetitive tasks with ease. By following security best practices, such as access control, encryption, and secure credential management, you can ensure that your AWS S3 resources are protected and secure.

In conclusion, the AWS CLI 'ls' command is an essential tool for any AWS user, providing a versatile and efficient way to manage AWS S3 resources. By exploring additional AWS CLI commands and resources, you can maximize your productivity and efficiency in managing AWS services, and take full advantage of the power and flexibility of the AWS platform.