What Are Containers and Why Are They Important?

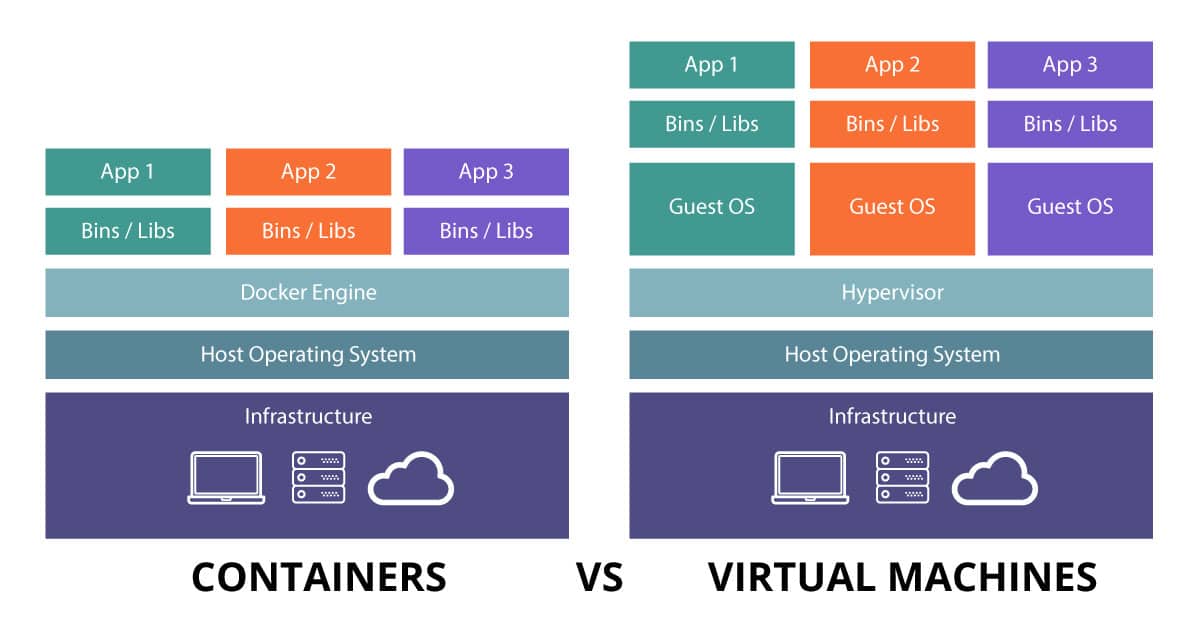

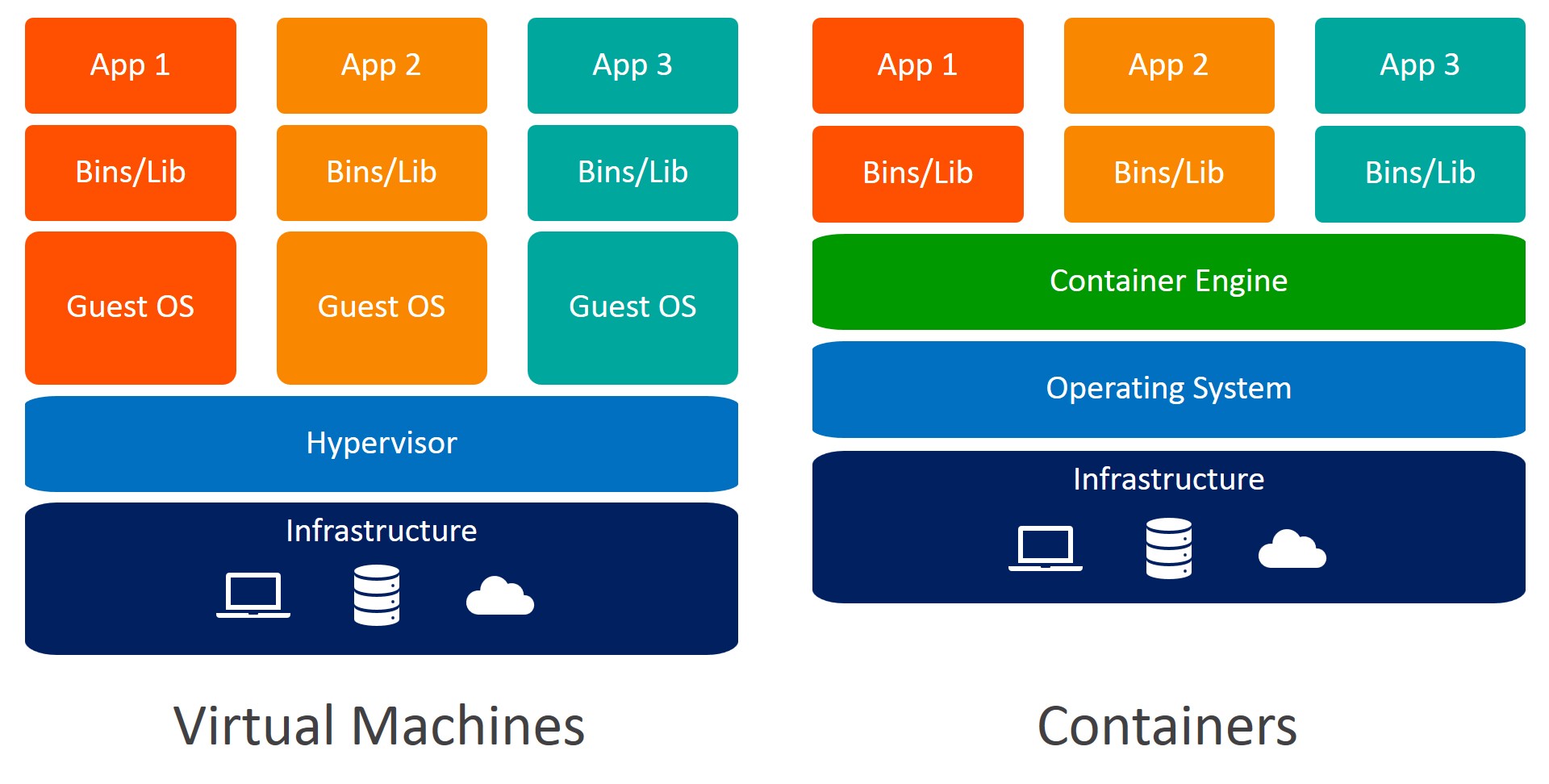

Containers and Kubernetes have become essential in modern software development due to their convenience and versatility. Containers are a form of lightweight virtualization that package an application and its dependencies into a single, self-contained unit. This packaging makes it easier to deploy and run the application in different environments, as the container includes everything it needs to operate independently.

The importance of containers lies in their ability to simplify the deployment and management of applications. By encapsulating the application and its dependencies, containers ensure consistency across various environments, such as development, testing, and production. This consistency reduces the risk of compatibility issues and makes it easier to maintain and update applications.

Containers also offer improved resource utilization and faster deployment times compared to traditional virtualization methods. Because containers share the host's operating system kernel and include only the necessary application components, they require fewer resources than traditional virtual machines, each of which runs a full guest operating system. Containers can also be started and stopped much more quickly, allowing for faster deployment and scaling of applications.

In summary, containers and Kubernetes have become indispensable tools for organizations and developers due to their ability to simplify application deployment, improve resource utilization, and increase deployment speed. By leveraging these technologies, businesses can reduce costs, improve efficiency, and accelerate their software development life cycle.

Kubernetes: A Container Orchestration Platform

Kubernetes is an open-source platform for managing and orchestrating containers. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes provides a powerful and flexible solution for automating the deployment, scaling, and management of containerized applications, making it an indispensable tool for organizations and developers working with containers.

Together, containers and Kubernetes offer several benefits, including improved resource utilization, faster deployment times, and consistent application behavior across different environments. By managing the lifecycle of containers, Kubernetes simplifies deploying and scaling applications, allowing developers to focus on writing code instead of managing infrastructure.

Kubernetes achieves this by providing a set of key components that work together to form a robust container orchestration system. These components include Pods, Services, Deployments, and Volumes, which can be combined and configured to build complex containerized applications. Pods are the smallest deployable units in Kubernetes, representing one or more containers that share the same network namespace. Services provide a stable IP address and DNS name for a set of Pods, so that other Pods (and, with the appropriate Service type, clients outside the cluster) can reach them reliably. Deployments manage the rollout and scaling of Pods, ensuring that the desired number of replicas is always running. Volumes give the containers in a Pod access to storage; persistent volumes, in particular, keep their data even when Pods are deleted or recreated.

In summary, Kubernetes is a powerful container orchestration platform that simplifies the deployment, scaling, and management of containerized applications. By providing a set of key components, such as Pods, Services, Deployments, and Volumes, Kubernetes enables developers to focus on writing code and delivering value to their users, while the platform takes care of the underlying infrastructure.

Key Components of Kubernetes

Kubernetes is a powerful container orchestration platform that relies on several key components to manage and orchestrate containerized applications. These components include Pods, Services, Deployments, and Volumes, which work together to provide a robust and flexible container management system.

Pods

Pods are the smallest deployable units in Kubernetes, representing one or more containers that share the same network namespace. Containers within a Pod can communicate with each other over localhost, because they share the same IP address and port space. A Pod also defines the lifecycle of its containers: they are scheduled onto the same node, started, and terminated together as a single unit, and scaling an application means adding or removing whole Pods.
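As a minimal sketch, the following Python builds a two-container Pod manifest as a plain dictionary and prints it as JSON, a format Kubernetes accepts alongside YAML. The Pod name, container names, and image tags (`nginx:1.25`, `busybox:1.36`) are illustrative assumptions. The second container reaches the first over `localhost`, which works only because both share the Pod's network namespace.

```python
import json


def two_container_pod(name: str = "web") -> dict:
    """Build a Pod manifest with an app container and a helper container.

    Names and image tags are hypothetical placeholders.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                },
                {
                    "name": "poller",
                    "image": "busybox:1.36",
                    # Both containers share one network namespace, so the
                    # app is reachable on localhost without a Service.
                    "command": [
                        "sh",
                        "-c",
                        "while true; do wget -q -O- http://localhost:80 >/dev/null; sleep 10; done",
                    ],
                },
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(two_container_pod(), indent=2))
```

Saving the printed JSON to a file and running `kubectl apply -f` on it would create the Pod.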

Services

Services provide a stable IP address and DNS name for a set of Pods, typically chosen by a label selector, so that other applications can reach those Pods without tracking their individual addresses. Services enable load balancing, traffic routing, and service discovery, which helps make containerized applications highly available and scalable. Because a Service abstracts the underlying Pods, clients keep working even when Pods are added, removed, or updated.
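The way a Service finds its Pods can be modeled in a few lines. This is a simplified sketch, not Kubernetes code: it assumes a Service with a label selector and treats a Pod as a backend only if the Pod carries every selector label and is ready. In a real cluster the control plane records the matching addresses in EndpointSlice objects; the Pod names and IPs below are made up.

```python
from dataclasses import dataclass, field


@dataclass
class PodInfo:
    """A hypothetical, minimal view of a Pod: name, IP, labels, readiness."""
    name: str
    ip: str
    labels: dict = field(default_factory=dict)
    ready: bool = True


def select_endpoints(selector: dict, pods: list) -> list:
    """Return IPs of ready Pods whose labels contain every selector pair."""
    return [
        pod.ip
        for pod in pods
        if pod.ready and all(pod.labels.get(k) == v for k, v in selector.items())
    ]


if __name__ == "__main__":
    pods = [
        PodInfo("web-1", "10.0.0.5", {"app": "web"}),
        PodInfo("web-2", "10.0.0.6", {"app": "web"}, ready=False),
        PodInfo("db-1", "10.0.0.7", {"app": "db"}),
    ]
    print(select_endpoints({"app": "web"}, pods))  # ['10.0.0.5']
```

When `web-2` becomes ready, or a new Pod labeled `app: web` starts, it joins the result, while clients keep using the Service's single address throughout.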

Deployments

Deployments manage the rollout and scaling of Pods, ensuring that the desired number of replicas is always running. Deployments provide a way to describe the desired state of a containerized application, including the number of replicas, container images, and resource requirements. Kubernetes automatically manages the underlying Pods to ensure that the desired state is maintained, even in the face of failures or changes.
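The "desired state" idea can be illustrated with a toy reconciliation function. This is a simplified sketch, not the real Deployment controller (which works through ReplicaSets and the API server): it compares the desired replica count with the Pods it believes are running, then creates or deletes Pods to close the gap. The Pod names are hypothetical.

```python
from itertools import count


def reconcile(desired: int, running: list, new_name) -> tuple:
    """One reconciliation pass: return (new_state, created, deleted)."""
    state = list(running)  # copy so the caller's list is not mutated
    created, deleted = [], []
    while len(state) < desired:  # too few replicas: start new Pods
        name = new_name()
        state.append(name)
        created.append(name)
    while len(state) > desired:  # too many replicas: remove surplus Pods
        deleted.append(state.pop())
    return state, created, deleted


if __name__ == "__main__":
    ids = count(1)

    def next_name() -> str:
        return f"web-{next(ids)}"

    state, _, _ = reconcile(3, [], next_name)
    print(state)  # ['web-1', 'web-2', 'web-3']
    state.remove("web-2")  # simulate a Pod failure
    state, created, _ = reconcile(3, state, next_name)
    print(created)  # ['web-4']
```

Running the same pass repeatedly is what lets the system recover from failures: each pass only needs to know the desired count and the current state.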

Volumes

Volumes give the containers in a Pod access to storage, such as application data, configuration files, or other files that need to be shared between containers. How long the data lives depends on the volume type. Kubernetes supports several types, including emptyDir, whose contents are deleted when the Pod is removed; hostPath, which mounts a directory from the node; and PersistentVolumes, requested through PersistentVolumeClaims, which keep their data even when Pods are deleted or recreated.
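To make the differences concrete, here is a sketch of one Pod that mounts all three volume types, built as a Python dictionary. The claim name `app-data`, the mount paths, and the image are illustrative assumptions; the claim would have to exist before the Pod could start.

```python
import json


def pod_with_volumes() -> dict:
    """Pod spec mounting emptyDir, hostPath, and PVC volumes (names hypothetical)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "volume-demo"},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c", "sleep 3600"],
                    "volumeMounts": [
                        {"name": "scratch", "mountPath": "/scratch"},
                        {"name": "host-logs", "mountPath": "/host-logs", "readOnly": True},
                        {"name": "data", "mountPath": "/data"},
                    ],
                }
            ],
            "volumes": [
                # emptyDir: created with the Pod, deleted when the Pod is removed.
                {"name": "scratch", "emptyDir": {}},
                # hostPath: a directory on the node, so data stays on that node.
                {"name": "host-logs", "hostPath": {"path": "/var/log", "type": "Directory"}},
                # persistentVolumeClaim: storage that outlives the Pod.
                {"name": "data", "persistentVolumeClaim": {"claimName": "app-data"}},
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(pod_with_volumes(), indent=2))
```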

In summary, Kubernetes relies on several key components, including Pods, Services, Deployments, and Volumes, to provide a robust and flexible container management system. By combining these components, Kubernetes enables organizations and developers to deploy, scale, and manage containerized applications with ease, providing a powerful tool for modern software development.

How to Deploy Containers with Kubernetes

Kubernetes provides a powerful and flexible platform for deploying and managing containerized applications. By following a few simple steps, organizations and developers can quickly and easily deploy their applications using Kubernetes.

Step 1: Create a Pod

The first step in deploying a containerized application with Kubernetes is to create a Pod. A Pod represents a single running instance of your application, usually one container, and provides a way to manage that container's lifecycle. To create a Pod, you can define a Pod manifest in YAML or JSON format, which specifies the container image, resource requirements, and other settings. In practice, Pods are usually created indirectly by a Deployment (Step 3) rather than one at a time.
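A minimal sketch of such a manifest, generated with Python's standard `json` module: the function name `make_pod`, the image, and the default CPU and memory values are assumptions for illustration. Setting requests equal to limits for both CPU and memory puts the Pod in Kubernetes' Guaranteed quality-of-service class.

```python
import json


def make_pod(name: str, image: str, cpu: str = "250m", memory: str = "128Mi") -> dict:
    """Return a single-container Pod manifest (values are illustrative)."""
    resources = {"cpu": cpu, "memory": memory}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Requests guide scheduling; limits cap usage at runtime.
                    "resources": {"requests": dict(resources), "limits": dict(resources)},
                }
            ]
        },
    }


if __name__ == "__main__":
    # Redirect this output to pod.json, then run: kubectl apply -f pod.json
    print(json.dumps(make_pod("hello", "nginx:1.25"), indent=2))
```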

Step 2: Expose the Pod as a Service

Once you have created a Pod, you can expose it as a Service, which provides a stable IP address and DNS name for the Pod. By exposing the Pod as a Service, you can enable other Pods and applications to communicate with the Pod using a consistent address, even if the Pod is rescheduled or restarted.
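The Service itself is just another manifest. The sketch below assumes the Pod carries the label `app: hello`; the Service name, ports, and the `default` namespace are illustrative. The helper shows the DNS name other Pods would use, assuming the common default cluster domain `cluster.local`, which administrators can change. The `kubectl expose` command can also generate a similar object from an existing Pod.

```python
import json


def make_service(name: str, app_label: str, port: int = 80,
                 target_port: int = 80, namespace: str = "default") -> dict:
    """Return a ClusterIP Service that routes to Pods labeled app=<app_label>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": app_label},  # must match the Pod's labels
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


def service_dns_name(name: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Fully qualified in-cluster DNS name of a Service."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    print(json.dumps(make_service("hello", "hello"), indent=2))
    print(service_dns_name("hello"))  # hello.default.svc.cluster.local
```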

Step 3: Create a Deployment

To ensure that your containerized application is highly available and scalable, you can create a Deployment, which manages the rollout and scaling of Pods. A Deployment provides a way to describe the desired state of your application, including the number of replicas, container images, and resource requirements. Kubernetes automatically manages the underlying Pods to ensure that the desired state is maintained, even in the face of failures or changes.
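A minimal Deployment sketch, built as a Python dictionary and printed as JSON; the name, image, replica count, and resource requests are assumptions. The structural rule worth noticing is that `spec.selector.matchLabels` must match the labels in the Pod template, otherwise the API server rejects the Deployment.

```python
import json


def make_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Return a Deployment manifest (name, image, and sizes are illustrative)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # desired number of Pods
            "selector": {"matchLabels": labels},  # which Pods this Deployment owns
            "template": {  # blueprint for every Pod it creates
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {"requests": {"cpu": "250m", "memory": "128Mi"}},
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_deployment("hello", "nginx:1.25"), indent=2))
```

Changing `replicas` or the image and re-applying the manifest is how the application is scaled or updated; Kubernetes then moves the running Pods toward the new spec.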

Step 4: Create a Volume

To persist data beyond the lifetime of individual Pods, you can request storage with a PersistentVolumeClaim and mount it into your Pods as a volume. Not every Volume outlives its Pod: an emptyDir volume, for example, is deleted along with the Pod, while a PersistentVolume keeps its data when Pods are deleted or recreated. Kubernetes supports several types of Volumes, including emptyDir, hostPath, and PersistentVolumes, each suited to different needs.
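A PersistentVolumeClaim manifest can be sketched as a Python dictionary. The claim name, size, and access mode below are assumptions; leaving out `storageClassName` asks the cluster to use its default StorageClass, if one is configured.

```python
import json
from typing import Optional


def make_pvc(name: str, size: str = "1Gi", storage_class: Optional[str] = None) -> dict:
    """Return a PersistentVolumeClaim requesting `size` of storage."""
    spec = {
        "accessModes": ["ReadWriteOnce"],  # read-write by a single node at a time
        "resources": {"requests": {"storage": size}},
    }
    if storage_class is not None:
        spec["storageClassName"] = storage_class
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": spec,
    }


if __name__ == "__main__":
    print(json.dumps(make_pvc("app-data"), indent=2))
```

A Pod, or a Deployment's Pod template, then mounts the claim through a `volumes` entry of the form `{"persistentVolumeClaim": {"claimName": "app-data"}}`, and the data survives when those Pods are replaced.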

Step 5: Manage and Scale Your Application

Once you have created a Pod, exposed it as a Service, created a Deployment, and created a Volume, you can manage and scale your containerized application using Kubernetes. Kubernetes provides several tools and features for managing and scaling applications, including the ability to roll out updates, scale applications up or down, and monitor application health and performance.
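Rolling updates are governed by two Deployment settings, `maxSurge` and `maxUnavailable`, which both default to 25%. Per the Kubernetes documentation, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down. The sketch below reproduces only that arithmetic, showing the most Pods that may exist and the fewest that must stay available during an update; it does not model the controller itself. The function names are hypothetical.

```python
def resolve(value, replicas: int, round_up: bool) -> int:
    """Turn an int or a percentage string like "25%" into a Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])
        # Integer arithmetic avoids floating-point rounding surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rollout_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> tuple:
    """Return (max total Pods, min available Pods) during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rollout_bounds(10))  # (13, 8)
    print(rollout_bounds(3, max_surge=1, max_unavailable=0))  # (4, 3)
```

With 10 replicas and the defaults, an update may briefly run 13 Pods but never drops below 8 available ones.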

In summary, deploying containerized applications with Kubernetes is a straightforward process that involves creating Pods, exposing them as Services, creating Deployments, creating Volumes, and managing and scaling the application. By following these steps, organizations and developers can take advantage of the powerful and flexible container management capabilities provided by Kubernetes.

Real-World Applications of Kubernetes and Containers

Containers and Kubernetes have become essential tools for modern software development, enabling organizations and developers to build, deploy, and manage applications with greater speed, efficiency, and flexibility. Here are some real-world examples of organizations and projects that have successfully implemented and leveraged containers and Kubernetes to achieve their goals.

Netflix

Netflix is one of the best-known large-scale users of containers. The company has migrated the majority of its infrastructure to the cloud and runs its microservices-based architecture on containers and container orchestration. By using containers, Netflix has been able to achieve greater agility, scalability, and reliability, enabling the company to deliver high-quality streaming services to millions of users worldwide.

Google

Google is another major user of containers and Kubernetes. The company has been using containers for over a decade to manage its massive and complex infrastructure. Google developed Kubernetes as an open-source project to manage and orchestrate containers, and the platform has since become one of the most popular and widely used container orchestration tools in the industry. By using containers and Kubernetes, Google has been able to achieve greater efficiency, scalability, and reliability, enabling the company to deliver high-quality services to billions of users worldwide.

The Pokémon Company

The Pokémon Company is a leading developer and publisher of video games and entertainment properties. The company has successfully implemented and leveraged containers and Kubernetes to manage and orchestrate its applications and services. By using containers and Kubernetes, The Pokémon Company has been able to achieve greater agility, scalability, and reliability, enabling the company to deliver high-quality gaming experiences to millions of users worldwide.

Challenges and Best Practices

While containers and Kubernetes offer many benefits, they also bring challenges that organizations and developers should be aware of, along with established best practices for addressing them. The challenges include managing and securing containers, ensuring compatibility and interoperability, and optimizing performance and scalability. The best practices include implementing monitoring, logging, and backup strategies, using automation and orchestration tools, and following security and compliance guidelines.

In summary, containers and Kubernetes have become essential tools for modern software development, enabling organizations and developers to build, deploy, and manage applications with greater speed, efficiency, and flexibility. By implementing and leveraging containers and Kubernetes, organizations and developers can achieve greater agility, scalability, and reliability, enabling them to deliver high-quality services and experiences to their users.

Best Practices for Managing Containers with Kubernetes

Kubernetes has become the go-to platform for managing and orchestrating containers, offering a wide range of benefits for organizations and developers. However, managing and maintaining containerized applications with Kubernetes can also present some challenges, particularly when it comes to monitoring, logging, security, and backup strategies. Here are some best practices for managing and maintaining containerized applications with Kubernetes.

Monitoring and Logging

Monitoring and logging are critical components of any containerized application, enabling organizations and developers to identify and troubleshoot issues, optimize performance, and ensure reliability. Kubernetes itself provides basic building blocks: container logs that can be read with `kubectl logs`, resource metrics through the Metrics API (commonly served by the metrics-server add-on), and liveness and readiness probes for health checking. These are not a complete solution, so it is essential to implement a comprehensive monitoring and logging strategy that includes real-time monitoring, alerting, and reporting.
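Health checks are one monitoring building block built into Kubernetes. The sketch below, a container spec written as a Python dictionary, assumes the application serves hypothetical `/ready` and `/healthz` endpoints on port 8080, and the image name is a placeholder. If the readiness probe fails, the Pod is taken out of Service load balancing; if the liveness probe fails repeatedly, the kubelet restarts the container.

```python
import json


def container_with_probes(name: str, image: str, port: int = 8080) -> dict:
    """Container spec with HTTP readiness and liveness probes.

    The /ready and /healthz paths are hypothetical; the app must serve them.
    """
    return {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Failing readiness: Pod is removed from Service endpoints, not restarted.
        "readinessProbe": {
            "httpGet": {"path": "/ready", "port": port},
            "periodSeconds": 5,
        },
        # Failing liveness (3 times in a row here): the kubelet restarts the container.
        "livenessProbe": {
            "httpGet": {"path": "/healthz", "port": port},
            "initialDelaySeconds": 10,
            "periodSeconds": 10,
            "failureThreshold": 3,
        },
    }


if __name__ == "__main__":
    print(json.dumps(container_with_probes("api", "example.com/api:1.0"), indent=2))
```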

Security

Security is a top concern for organizations and developers implementing containerized applications, particularly when it comes to protecting sensitive data and preventing unauthorized access. Kubernetes provides several security features, including network policies, Secrets management, and role-based access control (RBAC). These features have limits: Secrets are only base64-encoded by default unless encryption at rest is configured, and network policies take effect only if the cluster's network plugin enforces them. It is therefore essential to implement a comprehensive security strategy that includes network segmentation, encryption, and regular security audits.
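Two of these features can be sketched as manifests. The Role and RoleBinding grant a hypothetical user `jane` read-only access to Pods and their logs in one namespace; the NetworkPolicy is a default-deny ingress policy, a common starting point for network segmentation, which blocks incoming traffic to every Pod in its namespace until other policies allow it (assuming the network plugin enforces policies). The namespace `team-a` and all object names are illustrative.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str) -> dict:
    """Role allowing read-only access to Pods and Pod logs in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group
                "resources": ["pods", "pods/log"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


def bind_role(role_name: str, user: str, namespace: str) -> dict:
    """RoleBinding that grants `role_name` to a single user."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy selecting every Pod and allowing no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


if __name__ == "__main__":
    ns = "team-a"  # hypothetical namespace
    for obj in (pod_reader_role(ns), bind_role("pod-reader", "jane", ns),
                default_deny_ingress(ns)):
        print(json.dumps(obj, indent=2))
```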

Backup and Disaster Recovery

Backup and disaster recovery are critical components of any containerized application, enabling organizations and developers to recover from data loss, hardware failure, or other disasters. Kubernetes provides building blocks rather than a complete solution: the cluster state stored in etcd can be saved and restored with etcd snapshots, and persistent volumes can be captured with volume snapshots when the storage driver supports them. Third-party backup tools build on these primitives. Either way, it is essential to implement a comprehensive backup and disaster recovery strategy that includes regular backups, testing, and validation.
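For persistent data, a snapshot can be requested declaratively with a VolumeSnapshot object. This sketch assumes the cluster has the CSI snapshot controller and its CRDs installed, plus a VolumeSnapshotClass hypothetically named `csi-snapclass`; the claim name `app-data` is also illustrative. A timestamp in the name lets a scheduled job create one snapshot per run. Cluster state in etcd is backed up separately, for example with `etcdctl snapshot save`.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def volume_snapshot(pvc_name: str, snapshot_class: str,
                    now: Optional[datetime] = None) -> dict:
    """VolumeSnapshot of an existing PVC, named with a UTC timestamp."""
    now = now or datetime.now(timezone.utc)
    stamp = now.strftime("%Y%m%d-%H%M%S")  # digits and '-' keep the name DNS-safe
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": f"{pvc_name}-{stamp}"},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


if __name__ == "__main__":
    print(json.dumps(volume_snapshot("app-data", "csi-snapclass"), indent=2))
```

Restoring is the half that regular testing should exercise: a new PersistentVolumeClaim can name the snapshot as its data source.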

Optimizing Performance and Scalability

Optimizing performance and scalability is critical for containerized applications, particularly when it comes to managing large-scale, distributed systems. Kubernetes provides several tools and features for optimizing performance and scalability, including resource quotas, horizontal pod autoscaling, and cluster autoscaling. However, it is essential to implement a comprehensive optimization strategy that includes load testing, performance monitoring, and regular tuning and optimization.
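The horizontal pod autoscaler's core rule is documented as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The sketch below implements only that rule plus clamping to hypothetical `min_replicas` and `max_replicas` bounds; the real controller also applies stabilization windows and special handling for missing metrics and unready Pods.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling formula (simplified)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 Pods averaging 90% CPU against a 60% target: scale to 6.
    print(hpa_desired_replicas(4, 90, 60))  # 6
    # 62% against 60% is within the 10% tolerance: stay at 4.
    print(hpa_desired_replicas(4, 62, 60))  # 4
```

Rounding up errs on the side of capacity, and the clamp keeps a metric spike from scaling past the configured maximum.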

In summary, managing and maintaining containerized applications with Kubernetes presents challenges in monitoring, logging, security, backup, and performance. By applying the best practices described above in each of these areas, organizations and developers can ensure the reliability, security, and performance of their containerized applications and take full advantage of containers and Kubernetes to build, deploy, and manage applications with greater speed, efficiency, and flexibility.

Alternatives to Kubernetes for Container Orchestration

While Kubernetes has become the de facto standard for container orchestration, it is not the only option available. There are several alternatives to Kubernetes, each with its own features, benefits, and drawbacks. Here are some of the most popular alternatives to Kubernetes for container orchestration.

Docker Swarm

Docker Swarm is a container orchestration platform developed by Docker, the company behind the popular Docker container runtime. Docker Swarm is tightly integrated with Docker and provides a simple and lightweight solution for container orchestration. However, Docker Swarm has limited scalability and functionality compared to Kubernetes, making it more suitable for small-scale and experimental projects.

Apache Mesos

Apache Mesos is an open-source cluster manager that can be used for container orchestration. Apache Mesos provides a powerful and flexible solution for managing large-scale and distributed systems, enabling organizations and developers to run a wide range of workloads, including containerized applications. However, Apache Mesos has a steep learning curve and requires significant expertise and resources to set up and maintain.

Amazon ECS

Amazon ECS (Elastic Container Service) is a fully managed container orchestration platform provided by Amazon Web Services (AWS). Amazon ECS provides a simple and scalable solution for container orchestration, enabling organizations and developers to run and manage containerized applications in the cloud. However, Amazon ECS offers fewer customization and extension options than Kubernetes and ties workloads to AWS, making it best suited to teams that are already committed to AWS and prefer a simpler, managed service.

When to Use Alternatives to Kubernetes

While Kubernetes is a powerful and flexible solution for container orchestration, it may not be the best option for every organization and project. Here are some scenarios where it might be appropriate to use alternatives to Kubernetes:

- Small-scale and experimental projects: For small-scale and experimental projects, Docker Swarm and other lightweight container orchestration platforms might be more suitable due to their simplicity and ease of use.

- Large-scale and distributed systems: For large-scale and distributed systems, Apache Mesos and other cluster managers might be more suitable due to their ability to manage a wide range of workloads and resources.

- Cloud-native and managed services: For cloud-native and managed services, Amazon ECS and other cloud-based container orchestration platforms might be more suitable due to their integration with cloud services and infrastructure.

In summary, while Kubernetes is the most popular and widely used container orchestration platform, it is not the only option available. There are several alternatives to Kubernetes, each with its own features, benefits, and drawbacks. By understanding the strengths and weaknesses of each platform, organizations and developers can choose the best option for their specific needs and requirements. By selecting the right container orchestration platform, organizations and developers can ensure the reliability, security, and performance of their containerized applications, enabling them to build, deploy, and manage applications with greater speed, efficiency, and flexibility.

The Future of Containers and Kubernetes

Containers and Kubernetes have revolutionized the way organizations and developers build, deploy, and manage applications. As we look to the future, it is clear that containers and Kubernetes will continue to play a critical role in modern software development. Here are some emerging trends and developments that are likely to shape the future of containers and Kubernetes.

Serverless Computing

Serverless computing is an emerging technology that enables organizations and developers to build and run applications without managing servers or infrastructure. Serverless computing is often used in conjunction with containers and Kubernetes, enabling organizations and developers to build highly scalable and flexible applications. As serverless computing continues to mature, we can expect to see closer integration between containers, Kubernetes, and serverless computing platforms.

Edge Computing

Edge computing is an emerging technology that involves processing data and running applications on distributed devices and networks, rather than centralized data centers. Edge computing is often used in conjunction with containers and Kubernetes, enabling organizations and developers to build highly scalable and distributed applications. As edge computing continues to mature, we can expect to see closer integration between containers, Kubernetes, and edge computing platforms.

Artificial Intelligence and Machine Learning

Artificial intelligence (AI) and machine learning (ML) are emerging technologies that enable organizations and developers to build intelligent and automated applications. Containers and Kubernetes are often used in conjunction with AI and ML, enabling organizations and developers to build highly scalable and flexible applications. As AI and ML continue to mature, we can expect to see closer integration between containers, Kubernetes, and AI/ML platforms.

Security and Compliance

Security and compliance are critical concerns for organizations and developers implementing containers and Kubernetes. As containers and Kubernetes continue to mature, we can expect to see closer integration between containers, Kubernetes, and security and compliance platforms. This integration will enable organizations and developers to build more secure and compliant applications, while also simplifying the management and maintenance of containerized applications.

Conclusion

Containers and Kubernetes have become essential tools for modern software development, enabling organizations and developers to build, deploy, and manage applications with greater speed, efficiency, and flexibility. As we look to the future, it is clear that containers and Kubernetes will continue to play a critical role in modern software development, shaping the way we build, deploy, and manage applications. By understanding the emerging trends and developments in containers and Kubernetes, organizations and developers can ensure they are well-positioned to take advantage of the benefits of these powerful technologies, while also addressing the challenges and risks associated with containerized applications.