An In-depth Exploration of Kubernetes Strategy Types

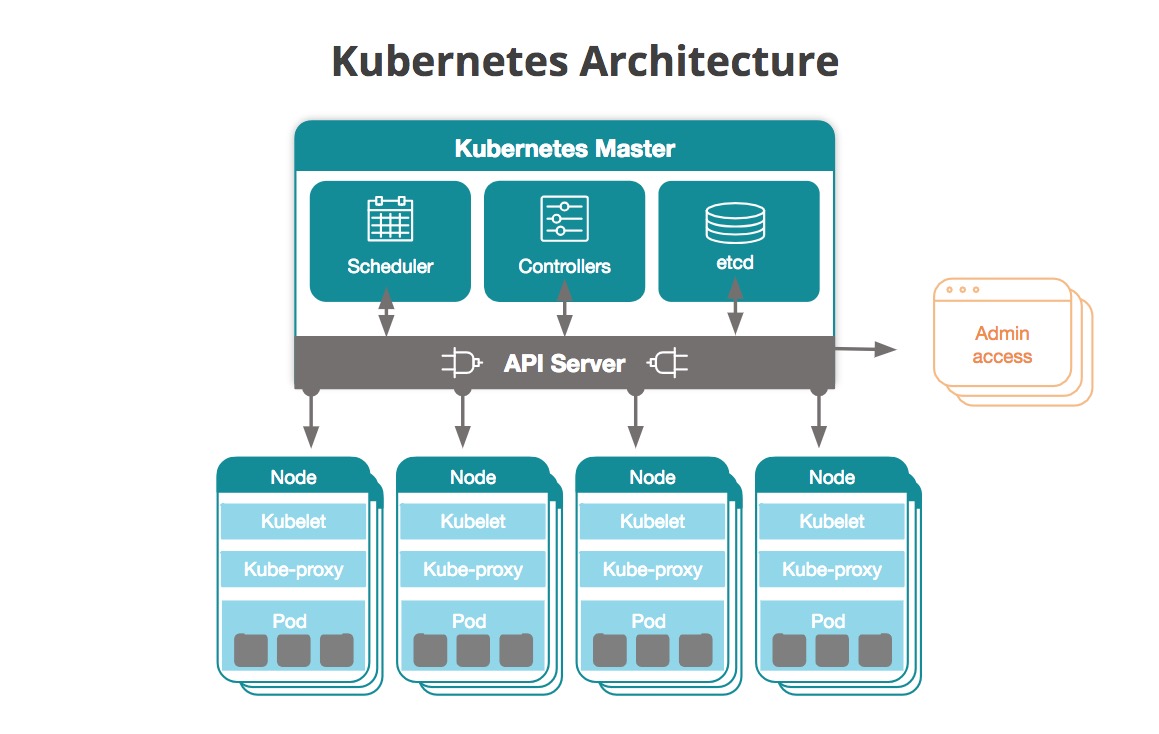

Kubernetes strategies play a crucial role in managing containerized applications, enabling efficient orchestration and optimizing resource utilization. As organizations increasingly adopt containerization, understanding Kubernetes strategy types becomes essential for successful deployment and maintenance. This article covers strategies for implementation planning, auto scaling, deployment, traffic routing, storage, observability, and security, so that you can make informed decisions about each.

Proactive Approach: Devising a Robust Kubernetes Implementation Strategy

A well-thought-out Kubernetes implementation strategy is the cornerstone of successful containerized application management. By planning proactively, organizations can streamline deployment, optimize resource utilization, and minimize operational costs. The sections that follow describe the Kubernetes strategy types that make up a robust implementation strategy.

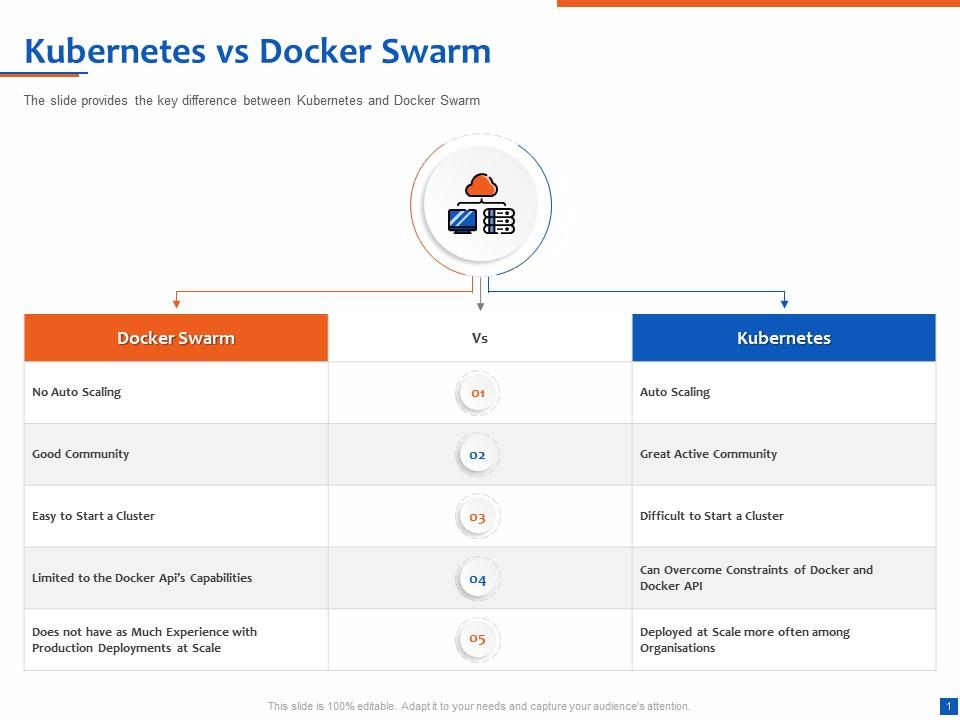

Scalability Techniques: Harnessing the Power of Kubernetes Auto Scaling

Scalability is a critical aspect of managing containerized applications, and Kubernetes offers powerful auto scaling capabilities to handle dynamic workloads. By implementing appropriate Kubernetes strategy types, organizations can ensure seamless resource allocation and efficient load distribution. This section explores various auto scaling strategies and their benefits.

Kubernetes auto scaling revolves around three main components: the Horizontal Pod Autoscaler (HPA), the Vertical Pod Autoscaler (VPA), and the Cluster Autoscaler. The HPA adjusts the number of pod replicas in a workload such as a Deployment or StatefulSet so that an observed metric, for example average CPU utilization, stays close to a configured target. The VPA, an add-on that is installed separately, tunes the CPU and memory requests (and, proportionally, the limits) of the containers in a pod. The Cluster Autoscaler, on the other hand, adds or removes nodes so that pending pods can be scheduled and underused nodes can be reclaimed.
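The HPA's core calculation is documented as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no scaling when the ratio is within a tolerance of 1.0 (0.1 by default). The following Python sketch reproduces only that arithmetic. The function and parameter names are illustrative, and it leaves out the minReplicas/maxReplicas clamping, pod readiness handling, and stabilization windows that the real controller applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count suggested by the basic HPA scaling rule (simplified)."""
    ratio = current_metric / target_metric
    # When the ratio is close enough to 1.0, no scaling action is taken.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 replicas at 90% average CPU against a 60% target: scale out.
scale_out = desired_replicas(4, 90.0, 60.0)
# 4 replicas at 62% against a 60% target: inside the tolerance band.
unchanged = desired_replicas(4, 62.0, 60.0)
# 4 replicas at 20% against a 60% target: scale in.
scale_in = desired_replicas(4, 20.0, 60.0)

print(scale_out, unchanged, scale_in)  # prints: 6 4 2
```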

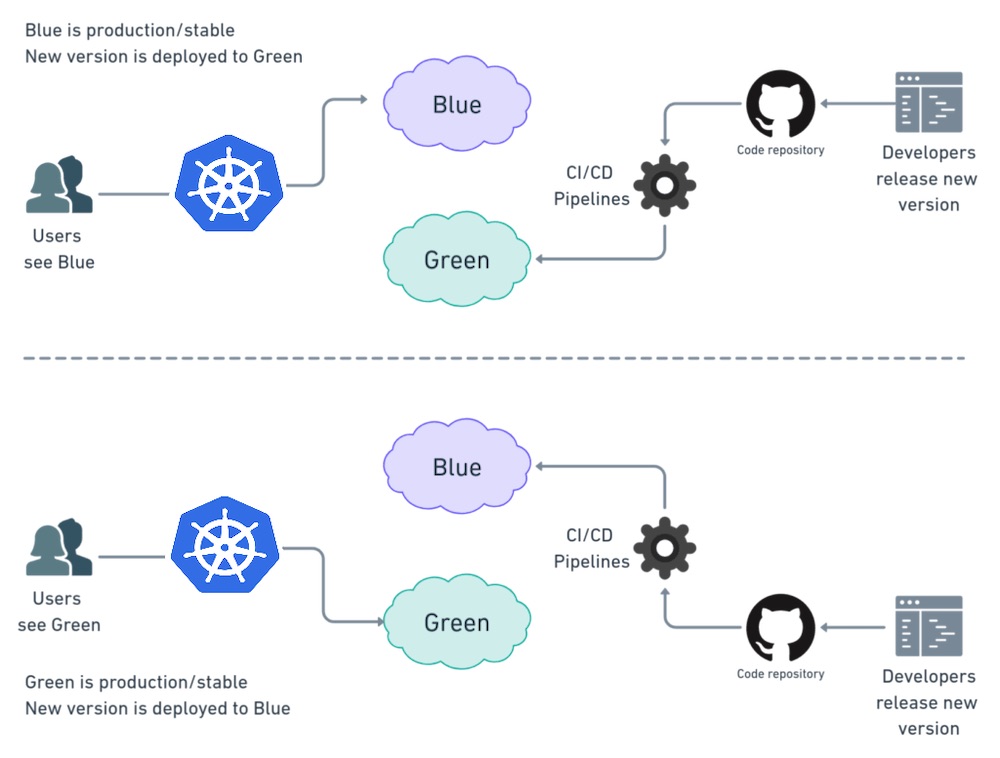

Rolling Updates and Rollbacks: Smooth Deployment Strategies

Kubernetes deployment strategies play a crucial role in minimizing downtime and ensuring application availability during updates and maintenance. Among the various deployment strategies, rolling updates and rollbacks are particularly noteworthy. These techniques allow for seamless deployment of new application versions while maintaining high levels of reliability and fault tolerance.

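Rolling updates incrementally replace the running pods of a Deployment with pods built from the new version. The pace is governed by two settings, maxSurge and maxUnavailable, both of which default to 25%: maxSurge caps how many extra pods may exist above the desired replica count, and maxUnavailable caps how many pods may be unavailable at any moment. With enough replicas and working readiness probes, part of the application keeps serving traffic throughout the update, which reduces the risk of downtime and helps organizations meet service level agreements (SLAs) even during maintenance windows.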
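To make the effect of maxSurge and maxUnavailable concrete, the sketch below computes the pod-count bounds that hold during a rolling update. It assumes the documented rounding rules (a percentage maxSurge rounds up, a percentage maxUnavailable rounds down); the function name and the returned keys are illustrative and not part of any Kubernetes API.

```python
import math


def rolling_update_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Pod-count bounds during a rolling update (simplified arithmetic)."""

    def resolve(value, round_up):
        # Values are either absolute integers or percentage strings.
        if isinstance(value, str) and value.endswith("%"):
            exact = int(value[:-1]) * replicas / 100
            return math.ceil(exact) if round_up else math.floor(exact)
        return int(value)

    surge = resolve(max_surge, round_up=True)
    unavailable = resolve(max_unavailable, round_up=False)
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


ten = rolling_update_bounds(10)           # defaults: 25% and 25%
three = rolling_update_bounds(3)          # small Deployment, same defaults
one_by_one = rolling_update_bounds(4, max_surge=1, max_unavailable=0)

print(ten)         # {'max_total_pods': 13, 'min_available_pods': 8}
print(three)       # {'max_total_pods': 4, 'min_available_pods': 3}
print(one_by_one)  # {'max_total_pods': 5, 'min_available_pods': 4}
```

Setting maxSurge to 1 and maxUnavailable to 0, as in the last call, gives the "one pod at a time, never below full capacity" behavior that is often assumed to be the default.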

In addition to rolling updates, Kubernetes also supports rollbacks, allowing organizations to revert to a previous version of an application if issues arise during an update. A Deployment keeps its revision history as old ReplicaSets (10 by default, controlled by revisionHistoryLimit), so administrators can return to a stable version with kubectl rollout undo, minimizing the impact of a faulty release and ensuring application continuity.
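The bookkeeping behind a rollback can be pictured with a toy model. The class below is not how the Deployment controller is implemented; it only mimics two observable behaviors under simplifying assumptions: old revisions are pruned beyond a history limit, and rolling back re-records the chosen revision under a new, higher revision number. All names and image tags are hypothetical.

```python
class DeploymentHistory:
    """Toy model of a Deployment's revision history (illustration only)."""

    def __init__(self, history_limit=10):
        self.history_limit = history_limit  # plays the role of revisionHistoryLimit
        self.revisions = {}                 # revision number -> container image
        self.latest = 0

    def _record(self, image):
        self.latest += 1
        self.revisions[self.latest] = image
        # Keep the current revision plus at most history_limit old ones.
        while len(self.revisions) - 1 > self.history_limit:
            del self.revisions[min(self.revisions)]

    def rollout(self, image):
        self._record(image)

    def undo(self, to_revision=None):
        if len(self.revisions) < 2:
            raise ValueError("no earlier revision to roll back to")
        if to_revision is None:
            # Default to the revision just before the current one.
            to_revision = sorted(self.revisions)[-2]
        image = self.revisions.pop(to_revision)
        self._record(image)  # the restored template becomes the newest revision
        return image

    @property
    def current_image(self):
        return self.revisions[self.latest]


history = DeploymentHistory()
for tag in ("app:v1", "app:v2", "app:v3"):
    history.rollout(tag)
history.undo()

print(history.current_image)      # app:v2
print(sorted(history.revisions))  # [1, 3, 4]
```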

Ingress Management: Effective Traffic Routing Strategies

Kubernetes Ingress is an API resource that manages external HTTP and HTTPS access to applications running on a Kubernetes cluster. Its rules only take effect when an Ingress controller is running in the cluster. By implementing effective Ingress management strategies, organizations can efficiently route traffic, optimize resource utilization, and enhance application availability. This section explores path-based routing, host-based routing, and the use of DNS canonical name (CNAME) records to point several hostnames at one service.

Path-based routing is a popular Ingress management strategy that allows organizations to direct traffic to different services based on the URL path. By configuring Ingress rules, administrators can route incoming requests to the appropriate service, ensuring seamless traffic distribution and efficient resource allocation.
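As a rough illustration of path-based routing, the sketch below mimics how an Ingress path of type Prefix is matched: the comparison is made path element by path element, and the longest matching path wins. The rule table and service names are hypothetical, and real controllers handle additional cases (Exact and ImplementationSpecific path types, host rules, default backends).

```python
def prefix_matches(rule_path, request_path):
    """Element-wise prefix match, in the spirit of pathType: Prefix."""
    rule_parts = [part for part in rule_path.split("/") if part]
    request_parts = [part for part in request_path.split("/") if part]
    return request_parts[:len(rule_parts)] == rule_parts


def route(rules, request_path):
    """Return the backend of the longest matching rule, or None."""
    matches = [(path, service) for path, service in rules.items()
               if prefix_matches(path, request_path)]
    if not matches:
        return None
    return max(matches, key=lambda match: len(match[0]))[1]


# Hypothetical rule table: URL path prefix -> backend service name.
rules = {
    "/": "web",
    "/api": "api-service",
    "/api/admin": "admin-service",
}

print(route(rules, "/api/users"))      # api-service
print(route(rules, "/api/admin/log"))  # admin-service
print(route(rules, "/apiary"))         # web
```

The last call shows why matching is done per path element: /apiary shares a string prefix with /api but is a different path segment, so it falls through to the catch-all rule.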

Host-based routing is another Ingress management strategy that enables organizations to direct traffic to different services based on the hostname. This approach is particularly useful when managing multiple applications or services with distinct hostnames on a single Kubernetes cluster.
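Host rules can be exact names or wildcards. The sketch below follows the documented wildcard behavior, in which `*.` stands for exactly one DNS label; the hostnames are placeholders and the function is an illustration rather than controller code.

```python
def host_matches(rule_host, request_host):
    """Match a request's Host header against an Ingress-style host rule."""
    if rule_host.startswith("*."):
        suffix = rule_host[1:]  # for example ".example.com"
        if not request_host.endswith(suffix):
            return False
        first_label = request_host[:-len(suffix)]
        # The wildcard must cover exactly one non-empty DNS label.
        return first_label != "" and "." not in first_label
    return rule_host == request_host


print(host_matches("shop.example.com", "shop.example.com"))  # True
print(host_matches("*.example.com", "blog.example.com"))     # True
print(host_matches("*.example.com", "a.b.example.com"))      # False
print(host_matches("*.example.com", "example.com"))          # False
```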

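Canonical name (CNAME) records are a DNS mechanism rather than an Ingress rule type, but they are often combined with Ingress to map multiple hostnames to a single service. Each alias is a CNAME record that points at the hostname of the Ingress controller's load balancer. Because a CNAME does not change the Host header the client sends, the Ingress still needs a host rule for every alias, or a rule with no host, which matches any hostname. Used this way, CNAME records let administrators add or retire hostnames without touching the service itself, keeping traffic routing consistent.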

Storage Orchestration: Kubernetes Volume Management Strategies

Kubernetes volume management is a critical aspect of managing persistent storage for containerized applications. By implementing effective volume management strategies, organizations can ensure data consistency, improve resource utilization, and simplify application deployment. This section explores various volume management strategies, including dynamic provisioning, manual provisioning, and storage classes.

Dynamic provisioning is a Kubernetes volume management strategy in which storage is provisioned automatically when a user requests it. The request takes the form of a PersistentVolumeClaim that names a storage class; the provisioner behind that class, typically a CSI driver for a cloud or on-premises storage system, then creates a matching PersistentVolume on demand and binds it to the claim.
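A claim that triggers dynamic provisioning might look like the following. The manifest is built as a Python dictionary and printed as JSON, which kubectl accepts alongside YAML. The claim name, class name, and size are assumptions chosen for illustration; the class must already exist in the cluster for provisioning to happen.

```python
import json

# Hypothetical claim: the names and the requested size are placeholders.
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "app-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        # Naming a storage class is what requests dynamic provisioning.
        "storageClassName": "fast-ssd",
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

manifest = json.dumps(pvc, indent=2)
print(manifest)
```

The output can be saved to a file and applied with kubectl apply -f.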

Manual provisioning, on the other hand, involves a cluster administrator creating PersistentVolumes ahead of time, which claims then bind to. While this approach offers more control over storage configuration, it can be time-consuming and error-prone, particularly in large-scale environments. Nevertheless, manual provisioning remains a viable option for organizations with specific storage requirements or constraints.

Storage classes are a Kubernetes abstraction that lets administrators define storage provisioning rules. Each StorageClass names a provisioner and can set provisioner-specific parameters, a reclaim policy, and a volume binding mode. By offering a small set of classes with well-defined properties, administrators can ensure consistent storage allocation and management, simplifying application deployment and maintenance.
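As an illustration, a StorageClass might be defined as below, again as a Python dictionary printed as JSON. The class name is a placeholder, and the provisioner and its parameter assume a cluster that runs the AWS EBS CSI driver; other storage systems use different provisioner names and parameters.

```python
import json

# Hypothetical class; the provisioner and parameters assume the AWS EBS CSI driver.
storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "fast-ssd"},
    "provisioner": "ebs.csi.aws.com",
    "parameters": {"type": "gp3"},
    # Delete removes the backing volume once its claim is deleted.
    "reclaimPolicy": "Delete",
    # Delay volume creation until a pod that uses the claim is scheduled.
    "volumeBindingMode": "WaitForFirstConsumer",
    "allowVolumeExpansion": True,
}

manifest = json.dumps(storage_class, indent=2)
print(manifest)
```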

Monitoring and Logging: Essential Kubernetes Strategy Components

Monitoring and logging are crucial aspects of any Kubernetes strategy, providing valuable insights into system performance, resource utilization, and application behavior. By implementing effective monitoring and logging techniques, organizations can ensure high availability, streamline troubleshooting, and optimize resource allocation. This section explores various monitoring and logging tools and techniques, such as Prometheus, Grafana, and Fluentd.

Prometheus is an open-source monitoring system that enables organizations to collect and analyze time-series data from Kubernetes clusters. It works on a pull model, periodically scraping metrics that applications and cluster components expose over HTTP. By integrating Prometheus with Kubernetes, administrators can monitor resource utilization, application performance, and system health.
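Applications usually expose their metrics in the Prometheus text exposition format. The sketch below renders one metric family in that format using only the standard library; in practice an official client library would normally do this, and the metric name and labels here are made up for the example.

```python
def escape_label_value(value):
    """Escape backslash, double quote, and newline in a label value."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_metric(name, help_text, metric_type, samples):
    """Render one metric family as Prometheus exposition text."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        if labels:
            label_text = ",".join(
                f'{key}="{escape_label_value(val)}"'
                for key, val in sorted(labels.items())
            )
            lines.append(f"{name}{{{label_text}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical counter with two labels.
text = render_metric(
    "http_requests_total",
    "Total HTTP requests.",
    "counter",
    [({"method": "get", "code": "200"}, 1027)],
)
print(text, end="")
```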

Grafana is a popular visualization tool that complements Prometheus by providing rich, interactive dashboards for monitoring Kubernetes clusters. By using Grafana, organizations can create custom visualizations, set up alerts, and collaborate on monitoring and troubleshooting efforts, enhancing overall situational awareness.

Fluentd is a powerful open-source data collector that simplifies logging and monitoring in Kubernetes environments. By integrating Fluentd with Kubernetes, organizations can collect, process, and forward logs to various destinations, such as Elasticsearch, Splunk, or AWS CloudWatch, ensuring comprehensive logging and monitoring coverage.

Security Best Practices: Implementing Kubernetes Security Strategies

Security is a critical aspect of any Kubernetes strategy, ensuring the protection of sensitive data, maintaining system integrity, and preventing unauthorized access. By implementing robust security measures, organizations can safeguard their Kubernetes environments and minimize the risk of security breaches. This section explores various security best practices and strategies, including network policies, secrets management, and role-based access control (RBAC).

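Network policies are a Kubernetes resource that enables organizations to define and enforce network traffic rules between pods. By default, pods accept traffic from any source; once a policy selects a pod, only the traffic that some policy explicitly allows can reach it. Policies are enforced by the cluster's network plugin, so they have no effect unless the installed plugin supports them. By implementing network policies, administrators can restrict communication between pods to authorized traffic, reducing the attack surface and enhancing overall security.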
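The following sketch shows what such a rule can look like, together with a deliberately simplified check of whether a connection would be admitted. The labels, names, and port are hypothetical, and the evaluation function covers only same-namespace podSelector peers with matchLabels; it is an aid for reading the manifest, not a model of a real network plugin.

```python
# Hypothetical policy: only pods labelled app=frontend may reach
# pods labelled app=api, and only on TCP port 8080.
policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "api-allow-frontend", "namespace": "default"},
    "spec": {
        "podSelector": {"matchLabels": {"app": "api"}},
        "policyTypes": ["Ingress"],
        "ingress": [{
            "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
            "ports": [{"protocol": "TCP", "port": 8080}],
        }],
    },
}


def labels_match(selector, labels):
    """True when every matchLabels entry is present on the pod."""
    wanted = selector.get("matchLabels", {})
    return all(labels.get(key) == value for key, value in wanted.items())


def ingress_allowed(policy, source_labels, port):
    """Simplified check: does any ingress rule admit this source and port?"""
    for rule in policy["spec"]["ingress"]:
        from_ok = any(labels_match(peer["podSelector"], source_labels)
                      for peer in rule["from"])
        port_ok = any(entry["port"] == port for entry in rule["ports"])
        if from_ok and port_ok:
            return True
    return False


print(ingress_allowed(policy, {"app": "frontend"}, 8080))  # True
print(ingress_allowed(policy, {"app": "batch"}, 8080))     # False
print(ingress_allowed(policy, {"app": "frontend"}, 5432))  # False
```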

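Secrets management is another essential aspect of Kubernetes security, involving the storage, retrieval, and distribution of sensitive information, such as passwords, API keys, and certificates. Values in a Kubernetes Secret are only base64-encoded and, by default, stored unencrypted in etcd, so enabling encryption at rest and restricting access to Secrets through RBAC are advisable. By using Kubernetes Secrets with these safeguards, or third-party solutions like HashiCorp Vault or AWS Secrets Manager, organizations can securely manage sensitive data and minimize the risk of unauthorized access.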
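A short sketch makes the difference between encoding and encryption visible. It builds a Secret manifest with base64-encoded values and then decodes one of them without any key. The secret name and value are placeholders.

```python
import base64


def make_secret(name, values):
    """Build an Opaque Secret manifest with base64-encoded data values."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


secret = make_secret("db-credentials", {"password": "s3cr3t"})
encoded = secret["data"]["password"]
# Anyone who can read the Secret can reverse the encoding.
decoded = base64.b64decode(encoded).decode("utf-8")

print(encoded)  # czNjcjN0
print(decoded)  # s3cr3t
```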

Role-based access control (RBAC) is a Kubernetes feature that allows organizations to control access to cluster resources based on roles. Permissions are declared in Roles (scoped to a namespace) or ClusterRoles (cluster-wide) and granted to users, groups, or service accounts through RoleBindings or ClusterRoleBindings. By defining roles that carry only the permissions each task requires, administrators can prevent unauthorized access and maintain a secure Kubernetes environment.
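As a minimal sketch, the manifests below grant a hypothetical user read-only access to pods in one namespace. The small helper only checks a Role's rules against a requested verb and resource; the real authorizer also resolves bindings, ClusterRoles, and resource names, so treat it as a reading aid. The user, role, and binding names are placeholders.

```python
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"namespace": "default", "name": "pod-reader"},
    "rules": [{
        "apiGroups": [""],  # "" is the core API group
        "resources": ["pods"],
        "verbs": ["get", "watch", "list"],
    }],
}

role_binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"namespace": "default", "name": "read-pods"},
    "subjects": [{
        "kind": "User",
        "name": "jane",  # hypothetical user
        "apiGroup": "rbac.authorization.k8s.io",
    }],
    "roleRef": {
        "kind": "Role",
        "name": "pod-reader",
        "apiGroup": "rbac.authorization.k8s.io",
    },
}


def is_allowed(role, verb, resource, api_group=""):
    """Simplified check of a single Role's rules (illustration only)."""
    return any(
        api_group in rule["apiGroups"]
        and resource in rule["resources"]
        and verb in rule["verbs"]
        for rule in role["rules"]
    )


print(is_allowed(role, "list", "pods"))     # True
print(is_allowed(role, "delete", "pods"))   # False
print(is_allowed(role, "get", "secrets"))   # False
```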