The Role of Workload Balancing and Auto-Scaling in Cloud Computing

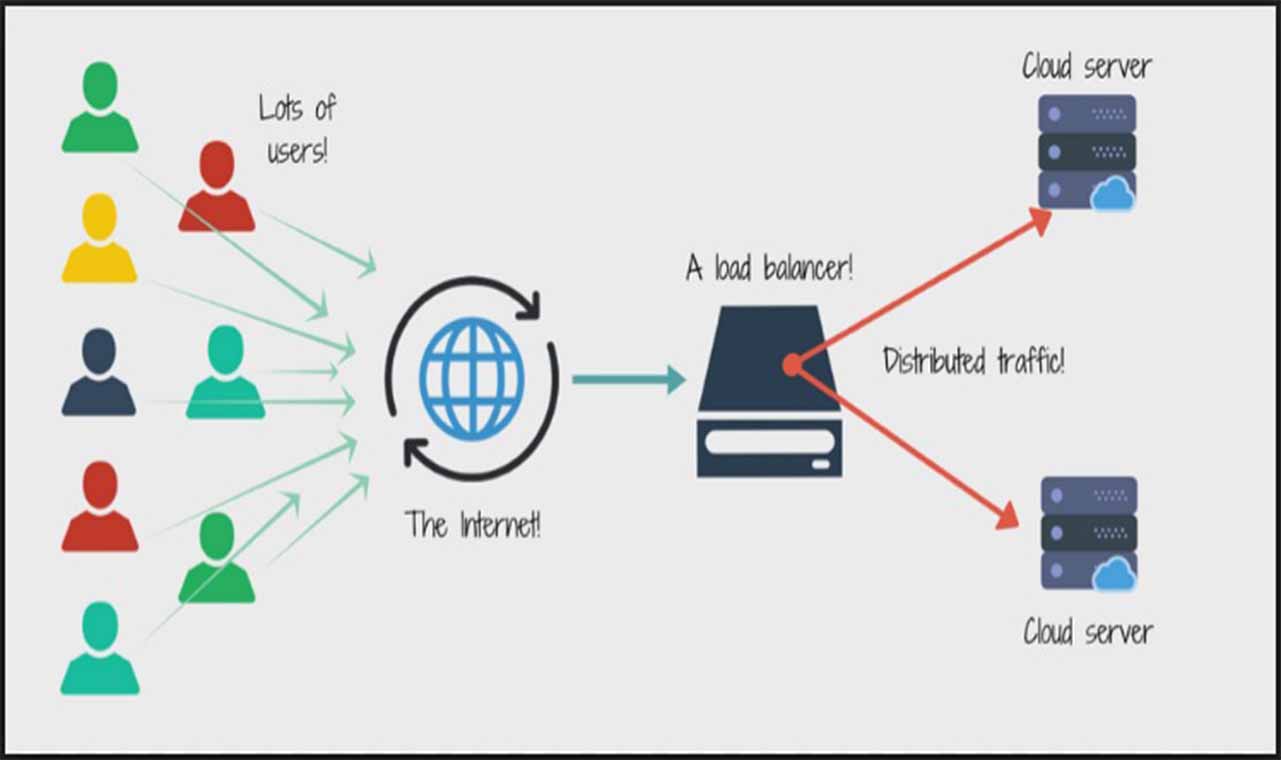

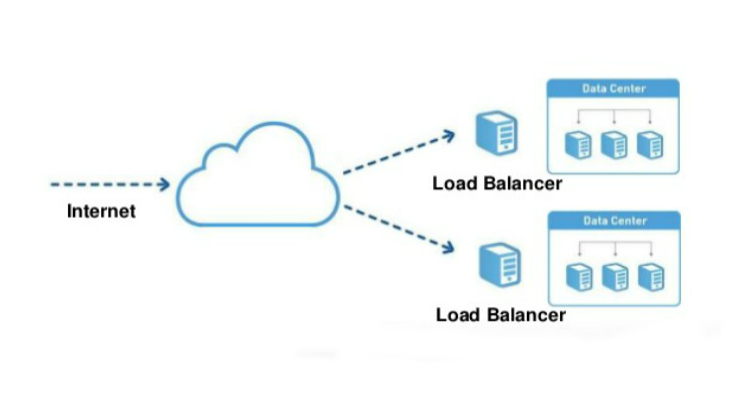

Workload balancing and auto-scaling are essential components of modern cloud computing, designed to optimize resource allocation, reduce costs, and enhance system performance. Workload balancing, also known as load balancing, refers to the distribution of workloads across multiple computing resources, ensuring that no single resource is overwhelmed. Auto-scaling, on the other hand, automatically adjusts the number of resources based on the current workload, allowing for seamless adaptation to fluctuating demands.

Understanding Workload Balancing Techniques

Load balancing is a critical aspect of cloud computing that ensures optimal resource utilization and high availability. By spreading workloads across multiple computing resources, it prevents any single resource from becoming a bottleneck, which improves system performance and makes better use of capacity that is already paid for. Here are some common workload balancing techniques:

Load Distribution

Load distribution spreads incoming requests across a pool of resources so that no single resource is overloaded, which improves system performance and reduces the risk of downtime. Common algorithms include round-robin (each server takes the next request in turn), least connections (the server with the fewest active connections takes the next request), and IP hash (a hash of the client's IP address selects the server, so a given client consistently reaches the same server).
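The three algorithms named above can be sketched in a few lines of Python. This is a minimal illustration under simple assumptions (an in-memory list of server names, no health checks); the names RoundRobin, LeastConnections, and ip_hash are hypothetical, not the API of any load balancer.

```python
import hashlib
from itertools import cycle


class RoundRobin:
    """Hands out servers in a fixed rotation."""

    def __init__(self, servers):
        self._rotation = cycle(servers)

    def pick(self):
        return next(self._rotation)


class LeastConnections:
    """Sends each new connection to the server with the fewest active ones."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self):
        # min() returns the first server in list order when counts are tied
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection closes so the count stays accurate
        self.active[server] -= 1


def ip_hash(client_ip, servers):
    """Maps a client IP to a server; the same IP always gets the same server."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["app-1", "app-2", "app-3"]

rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

lc = LeastConnections(servers)
first = lc.pick()   # app-1
second = lc.pick()  # app-2
lc.release(first)
print(lc.pick())    # app-1 (tied with app-3 at zero, listed first)

print(ip_hash("203.0.113.7", servers) == ip_hash("203.0.113.7", servers))  # True
```

A stable digest such as SHA-256 is used instead of Python's built-in hash(), which is randomized per process for strings and would map the same client differently after a restart. Note that plain modulo hashing remaps most clients whenever a server is added or removed; consistent hashing is the usual way to reduce that churn.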

Load Sharing

Load sharing distributes workloads dynamically, based on the current load and the availability of each resource, so that capacity is used efficiently even when resources differ in size. Deployments are commonly arranged as active-active, where every node serves traffic at the same time, or active-passive, where a standby node takes over only when the active node fails.
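A sketch of load-aware selection that covers both arrangements follows. The Node fields (capacity, load, healthy, standby) are hypothetical bookkeeping that a real balancer would fill in from health checks and metrics. Each request goes to the healthy active node with the lowest load relative to its capacity, which is what lets nodes of different sizes share work fairly; standby nodes are used only when no active node is healthy.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    capacity: int          # concurrent requests the node can handle (assumed known)
    load: int = 0          # requests currently in flight
    healthy: bool = True   # result of the latest health check
    standby: bool = False  # True for the passive node in an active-passive pair


def pick_node(nodes):
    """Return the healthy node with the lowest load-to-capacity ratio.

    Active nodes are always preferred; standby nodes are considered only when
    no active node is healthy (failover).
    """
    active = [n for n in nodes if n.healthy and not n.standby]
    pool = active or [n for n in nodes if n.healthy and n.standby]
    if not pool:
        raise RuntimeError("no healthy nodes available")
    return min(pool, key=lambda n: n.load / n.capacity)


nodes = [
    Node("small", capacity=10, load=5),    # 50% busy
    Node("large", capacity=40, load=10),   # 25% busy
    Node("standby", capacity=40, standby=True),
]
print(pick_node(nodes).name)  # large

nodes[0].healthy = False
nodes[1].healthy = False
print(pick_node(nodes).name)  # standby
```

pick_node does not change any node's load; in this sketch the caller is expected to increment load when it dispatches a request and decrement it when the request completes.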

Load Scheduling

Load scheduling decides when, and on which resources, workloads run. This gives finer control over execution and can improve performance and reduce costs, for example by running batch jobs during off-peak hours. Common approaches are time-based scheduling, where jobs run in defined time windows, and resource-based scheduling, where jobs are placed on resources that have the CPU or memory they require.
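Time-based and resource-based scheduling can be combined in one small placement loop. In this sketch (the Job and Worker types and the schedule function are hypothetical), a job is held back until its earliest start hour and is then placed on the first worker with enough free CPUs. Larger jobs are placed first, a first-fit-decreasing heuristic, so that they are less likely to be crowded out by small ones.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    cpus: int
    not_before: int  # earliest hour (0-23) the job may start: the time-based constraint


@dataclass
class Worker:
    name: str
    free_cpus: int
    jobs: list = field(default_factory=list)


def schedule(jobs, workers, hour):
    """Place jobs that are due at `hour` on the first worker with enough free CPUs.

    Returns the jobs left waiting (not yet due, or no worker has room).
    """
    waiting = []
    for job in sorted(jobs, key=lambda j: j.cpus, reverse=True):  # largest first
        if job.not_before > hour:
            waiting.append(job)  # time-based: too early to run
            continue
        worker = next((w for w in workers if w.free_cpus >= job.cpus), None)
        if worker is None:
            waiting.append(job)  # resource-based: no worker has enough free CPUs
            continue
        worker.free_cpus -= job.cpus
        worker.jobs.append(job.name)
    return waiting


workers = [Worker("w1", free_cpus=4), Worker("w2", free_cpus=8)]
jobs = [
    Job("nightly-report", cpus=6, not_before=2),
    Job("thumbnail", cpus=2, not_before=0),
    Job("backup", cpus=4, not_before=1),
]
left = schedule(jobs, workers, hour=1)
print([j.name for j in left])            # ['nightly-report']
print(workers[0].jobs, workers[1].jobs)  # ['backup'] ['thumbnail']
```

Calling schedule again with the returned jobs at a later hour retries them, which is a simple way to model a queue that is drained as time windows open and capacity frees up.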

Each workload balancing technique has its own benefits and use cases. Load distribution works well when requests are similar in cost and the resources are homogeneous. Load sharing suits dynamic workloads and heterogeneous resources, because it takes each resource's current load and capacity into account. Load scheduling is useful when workloads have specific requirements, such as an execution window or a minimum amount of CPU or memory.

Implementing Auto-Scaling in Cloud Infrastructures

Auto-scaling is a key feature of cloud computing that enables the dynamic allocation of resources based on workload demands. By automatically scaling resources up or down, auto-scaling helps ensure optimal resource utilization, reduces costs, and improves system performance. Here are the principles of auto-scaling and how it can be implemented in popular cloud platforms:

Scaling Up and Scaling Down

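Scaling up means adding capacity to handle increased demand, while scaling down means removing capacity when demand decreases. Strictly speaking, scaling up and down (vertical scaling) changes the size of an individual resource, such as moving to a larger instance type, whereas scaling out and in (horizontal scaling) adds or removes instances; the cloud auto-scaling services described below mainly scale out and in. Auto-scaling policies define the conditions that trigger each action. For example, a policy may add capacity when average CPU utilization exceeds 70% and remove capacity when it falls below 50%; the gap between the two thresholds keeps the system from oscillating when utilization hovers around a single value.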
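The 70%/50% policy can be expressed as a small decision function. This is an illustrative sketch, not any provider's implementation: ThresholdPolicy and its parameters are hypothetical, the 300-second cooldown is an assumed value, and each decision changes the size by one instance.

```python
class ThresholdPolicy:
    """Add an instance above `high` % CPU, remove one below `low` %.

    The gap between the thresholds and the cooldown after each change keep the
    group from flapping between sizes when CPU hovers near one threshold.
    """

    def __init__(self, low=50.0, high=70.0, min_size=1, max_size=10, cooldown_s=300):
        self.low, self.high = low, high
        self.min_size, self.max_size = min_size, max_size
        self.cooldown_s = cooldown_s
        self._last_action_at = None  # time of the last scaling action, in seconds

    def decide(self, cpu_percent, current_size, now_s):
        """Return the new desired instance count."""
        if self._last_action_at is not None and now_s - self._last_action_at < self.cooldown_s:
            return current_size  # still cooling down after the previous change
        if cpu_percent > self.high and current_size < self.max_size:
            desired = current_size + 1
        elif cpu_percent < self.low and current_size > self.min_size:
            desired = current_size - 1
        else:
            return current_size  # between thresholds, or already at a bound
        self._last_action_at = now_s
        return desired


policy = ThresholdPolicy()
print(policy.decide(85, current_size=2, now_s=0))    # 3 (above 70%)
print(policy.decide(90, current_size=3, now_s=60))   # 3 (inside the cooldown)
print(policy.decide(60, current_size=3, now_s=600))  # 3 (between thresholds)
print(policy.decide(40, current_size=3, now_s=900))  # 2 (below 50%)
```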

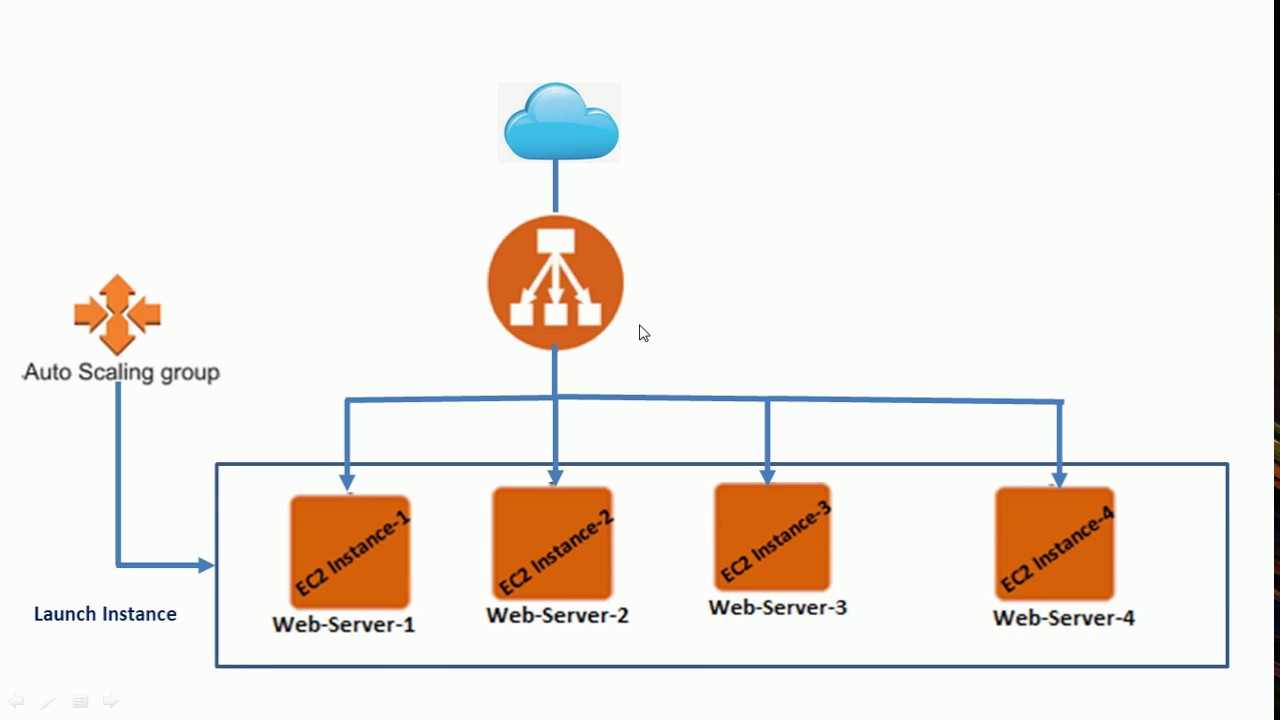

Implementing Auto-Scaling in AWS

Amazon Web Services (AWS) provides Amazon EC2 Auto Scaling, which adjusts the number of EC2 instances in response to demand. To use it, you create a launch template that defines the instance type, Amazon Machine Image (AMI), and security groups for new instances; launch templates replace the older launch configurations, which AWS is phasing out. You then create an Auto Scaling group that references the launch template, sets the minimum and maximum group size, and has scaling policies attached to it.
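A sketch of the AWS setup with the boto3 SDK follows. It assumes a launch template already exists (launch templates are the successor to launch configurations) and that the autoscaling argument is a client created with boto3.client("autoscaling"); the group, template, and subnet names are placeholders. Instead of separate 70%/50% thresholds, it attaches a target tracking policy, which asks EC2 Auto Scaling to keep average CPU near a single value and creates the CloudWatch alarms it needs on its own.

```python
def configure_asg(autoscaling, group_name, template_name, subnet_ids,
                  min_size=2, max_size=10, target_cpu=50.0):
    """Create an Auto Scaling group from a launch template and attach a
    target tracking policy that keeps average CPU near target_cpu percent.

    autoscaling: a boto3 Auto Scaling client, e.g. boto3.client("autoscaling").
    """
    autoscaling.create_auto_scaling_group(
        AutoScalingGroupName=group_name,
        LaunchTemplate={"LaunchTemplateName": template_name, "Version": "$Latest"},
        MinSize=min_size,
        MaxSize=max_size,
        VPCZoneIdentifier=",".join(subnet_ids),  # subnets as one comma-separated string
    )
    autoscaling.put_scaling_policy(
        AutoScalingGroupName=group_name,
        PolicyName=f"{group_name}-cpu-target",
        PolicyType="TargetTrackingScaling",
        TargetTrackingConfiguration={
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization",
            },
            "TargetValue": target_cpu,
        },
    )
```

With AWS credentials configured, a call might look like configure_asg(boto3.client("autoscaling"), "web-asg", "web-template", ["subnet-aaaa", "subnet-bbbb"]). Passing the client in, rather than creating it inside the function, also makes the function easy to exercise against a stub in tests.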

Implementing Auto-Scaling in Azure

Microsoft Azure provides Virtual Machine Scale Sets, which manage a group of identical virtual machines whose instance count can be adjusted automatically. To implement auto-scaling in Azure, you create a scale set from a virtual machine configuration and then define autoscale rules for it; autoscale settings are managed through Azure Monitor. Azure Load Balancer can distribute incoming traffic across the virtual machines in the scale set.
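The Azure steps map onto two Azure CLI commands, az monitor autoscale create and az monitor autoscale rule create. The Python sketch below only builds the argument lists, so it runs anywhere, for a scale set that already exists; the resource group and scale set names are placeholders, and the 70%/50% CPU rules over a 5-minute average are example values. Each list can be executed with subprocess.run(cmd, check=True) once the Azure CLI is installed and signed in.

```python
import shlex


def azure_autoscale_commands(resource_group, scale_set, min_count=2, max_count=10):
    """Build Azure CLI commands that attach autoscale rules to an existing scale set."""
    autoscale_name = f"{scale_set}-autoscale"
    create = [
        "az", "monitor", "autoscale", "create",
        "--resource-group", resource_group,
        "--resource", scale_set,
        "--resource-type", "Microsoft.Compute/virtualMachineScaleSets",
        "--name", autoscale_name,
        "--min-count", str(min_count),
        "--max-count", str(max_count),
        "--count", str(min_count),  # initial instance count
    ]
    # (condition, direction): add one VM above 70% average CPU, remove one below 50%
    rules = [("Percentage CPU > 70 avg 5m", "out"), ("Percentage CPU < 50 avg 5m", "in")]
    rule_commands = [
        ["az", "monitor", "autoscale", "rule", "create",
         "--resource-group", resource_group,
         "--autoscale-name", autoscale_name,
         "--condition", condition,
         "--scale", direction, "1"]
        for condition, direction in rules
    ]
    return [create] + rule_commands


for cmd in azure_autoscale_commands("my-resource-group", "my-scale-set"):
    print(shlex.join(cmd))
```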

Implementing Auto-Scaling in Google Cloud

Google Cloud provides managed instance groups (MIGs), which run identical virtual machine instances created from an instance template. To implement auto-scaling in Google Cloud, you create a managed instance group from the instance template and enable autoscaling on it with a policy, such as a target CPU utilization and minimum and maximum instance counts. Google Cloud also provides a load balancer that distributes incoming traffic across the instances in the managed instance group.
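On Google Cloud, autoscaling for an existing managed instance group can be enabled with the gcloud compute instance-groups managed set-autoscaling command. As with the Azure example, this sketch only assembles the argument list; the group name, zone, and values are placeholders, and a regional group would take --region instead of --zone.

```python
import shlex


def gce_autoscale_command(group, zone, min_replicas=2, max_replicas=10,
                          target_cpu=0.6, cool_down_s=90):
    """Build the gcloud command that enables autoscaling on a managed instance group."""
    return [
        "gcloud", "compute", "instance-groups", "managed", "set-autoscaling", group,
        "--zone", zone,
        "--min-num-replicas", str(min_replicas),
        "--max-num-replicas", str(max_replicas),
        "--target-cpu-utilization", str(target_cpu),  # a fraction: 0.6 means 60%
        "--cool-down-period", str(cool_down_s),       # seconds a new VM needs to initialise
    ]


print(shlex.join(gce_autoscale_command("web-mig", "us-central1-a")))
```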

Auto-scaling is an essential feature of cloud computing that can help ensure optimal resource utilization, reduce costs, and improve system performance. By implementing auto-scaling in popular cloud platforms like AWS, Azure, and Google Cloud, you can ensure that your applications have the resources they need to handle workload demands and provide a high-quality user experience.

How to Effectively Balance Workloads and Implement Auto-Scaling

Workload balancing and auto-scaling are critical components of cloud computing that help manage resources, reduce costs, and improve system performance. To effectively balance workloads and implement auto-scaling, it is essential to follow best practices and employ monitoring, alerting, and automation strategies. Here are some practical tips and best practices for balancing workloads and implementing auto-scaling:

Monitoring

Monitoring is a crucial aspect of workload balancing and auto-scaling. By monitoring resource utilization, you can identify trends, detect anomalies, and make informed decisions about scaling resources. Popular monitoring tools for cloud computing include CloudWatch, Azure Monitor, and Google Cloud Monitoring. These tools provide real-time metrics, logs, and alerts that can help you optimize resource utilization and prevent performance issues.
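Scaling decisions are usually made on metrics aggregated over a window rather than on single samples, and the same window can be used to spot anomalies. The following is a minimal sketch, assuming CPU samples arrive at a fixed interval; MetricWindow is a hypothetical helper, and the three-standard-deviation rule is just one simple anomaly heuristic among many.

```python
from collections import deque
from statistics import mean, pstdev


class MetricWindow:
    """Keeps the most recent samples of one metric (e.g. CPU %) for aggregation."""

    def __init__(self, size=5):
        self.samples = deque(maxlen=size)  # the oldest sample drops out automatically

    def add(self, value):
        self.samples.append(value)

    def average(self):
        return mean(self.samples)

    def is_anomaly(self, value, k=3.0):
        """True if value is more than k population standard deviations from the window mean.

        With fewer than two samples, or a perfectly flat window, there is no spread
        to compare against, so nothing is flagged.
        """
        if len(self.samples) < 2:
            return False
        spread = pstdev(self.samples)
        return spread > 0 and abs(value - mean(self.samples)) > k * spread


window = MetricWindow(size=5)
for cpu in [40, 42, 41, 43, 44]:
    window.add(cpu)
print(window.average())       # window mean, used for the scaling decision
print(window.is_anomaly(95))  # True
print(window.is_anomaly(45))  # False
```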

Alerting

Alerting is another essential aspect of workload balancing and auto-scaling. Alerts notify you when resource utilization crosses a threshold or when performance issues occur, and they can also trigger scaling actions, such as scaling up or scaling down resources. Examples include Amazon CloudWatch alarms, Azure Monitor alerts, and alerting policies in Google Cloud Monitoring.
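Alerts typically require a threshold to be breached over several evaluation periods before they fire, which filters out brief spikes; CloudWatch alarms, for example, can be configured to fire when M of the last N datapoints breach. The sketch below reproduces that idea in plain Python. ThresholdAlarm is illustrative rather than a provider API, and it omits the "insufficient data" state that real alarms also track.

```python
from collections import deque


class ThresholdAlarm:
    """Fires when at least m of the last n datapoints exceed the threshold."""

    def __init__(self, threshold, m=3, n=5):
        self.threshold = threshold
        self.m = m
        self.breaches = deque(maxlen=n)  # True/False for each recent datapoint
        self.state = "OK"

    def observe(self, value):
        """Record one datapoint and return the alarm state after evaluating it."""
        self.breaches.append(value > self.threshold)
        self.state = "ALARM" if sum(self.breaches) >= self.m else "OK"
        return self.state


alarm = ThresholdAlarm(threshold=70, m=3, n=5)
print([alarm.observe(cpu) for cpu in [75, 60, 80, 72, 50, 40, 30]])
# ['OK', 'OK', 'OK', 'ALARM', 'ALARM', 'OK', 'OK']
```

The single reading of 60 does not reset the evaluation, so the alarm still fires on the fourth datapoint; likewise it clears only once enough breaching datapoints have aged out of the window.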

Automation

Automation is key to effective workload balancing and auto-scaling. By automating scaling actions, you ensure that resources are allocated dynamically as demand changes, without waiting for an operator to react. The built-in autoscalers cover the common cases; for custom actions, automation can be scripted against provider APIs or run in serverless functions such as AWS Lambda, Azure Functions, and Google Cloud Functions, typically triggered by an alert.
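Here is a sketch of alert-driven automation in the shape of a serverless function handler(event, context), the signature AWS Lambda's Python runtime uses. The event format {"action": "scale_out" | "scale_in"} and the get_capacity / set_capacity callables are hypothetical stand-ins for your alerting payload and provider API. The handler clamps capacity to fixed bounds, so repeated alerts cannot push it past the limits.

```python
def make_scaling_handler(get_capacity, set_capacity, min_size=1, max_size=10, step=1):
    """Return a handler(event, context) that adjusts capacity in response to an alert.

    get_capacity / set_capacity are hypothetical callables wrapping a provider API.
    """
    deltas = {"scale_out": step, "scale_in": -step}

    def handler(event, context=None):
        delta = deltas.get(event.get("action"))
        if delta is None:
            return {"changed": False, "reason": "unknown action"}
        current = get_capacity()
        desired = max(min_size, min(max_size, current + delta))  # clamp to bounds
        if desired == current:
            return {"changed": False, "capacity": current}  # already at a limit
        set_capacity(desired)
        return {"changed": True, "capacity": desired}

    return handler


state = {"capacity": 9}  # in-memory stand-in for a real scaling group
handler = make_scaling_handler(lambda: state["capacity"],
                               lambda n: state.update(capacity=n))
print(handler({"action": "scale_out"}))  # {'changed': True, 'capacity': 10}
print(handler({"action": "scale_out"}))  # {'changed': False, 'capacity': 10}
```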

Best Practices

Here are some best practices for balancing workloads and implementing auto-scaling:

- Define clear scaling policies that specify when to scale up or scale down resources.

- Monitor resource utilization regularly and adjust scaling policies as needed.

- Set up alerts to notify you of performance issues or resource utilization anomalies.

- Use automation to allocate resources dynamically based on workload demands.

- Test scaling policies thoroughly before deploying them in production.

- Regularly review and optimize scaling policies to ensure they are still relevant and effective.
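One way to test a scaling policy before production is to replay a synthetic or recorded demand trace through it offline and check the outcome. In this sketch, demand is measured in "instances' worth" of work (so CPU% = demand / size × 100); simulate and step_policy are hypothetical, and the trace values are made up for illustration.

```python
def simulate(policy, demand_trace, start_size, min_size, max_size):
    """Replay a demand trace through a scaling policy and summarise what happened.

    policy(cpu_percent, size) must return the next desired size.
    """
    size = start_size
    history = [start_size]
    peak_cpu = 0.0
    for demand in demand_trace:
        cpu = min(100.0, demand / size * 100)  # utilisation the current fleet would see
        peak_cpu = max(peak_cpu, cpu)
        size = policy(cpu, size)
        if not min_size <= size <= max_size:
            raise ValueError(f"policy chose {size}, outside [{min_size}, {max_size}]")
        history.append(size)
    changes = sum(1 for a, b in zip(history, history[1:]) if a != b)
    return {"sizes": history[1:], "peak_cpu": round(peak_cpu, 1), "changes": changes}


def step_policy(cpu, size, low=50, high=70, min_size=1, max_size=5):
    """Example policy under test: +1 instance above 70% CPU, -1 below 50%."""
    if cpu > high:
        return min(size + 1, max_size)
    if cpu < low:
        return max(size - 1, min_size)
    return size


report = simulate(step_policy, [1.2, 1.6, 2.4, 2.4, 1.2, 0.6],
                  start_size=2, min_size=1, max_size=5)
print(report)  # {'sizes': [2, 3, 4, 4, 3, 2], 'peak_cpu': 80.0, 'changes': 4}
```

A test suite built this way can assert that peak_cpu stays below an agreed ceiling and that changes stays low on a steady trace, which catches policies that flap before they reach production.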

Workload balancing and auto-scaling are essential components of cloud computing that can help manage resources, reduce costs, and improve system performance. By following best practices and employing monitoring, alerting, and automation strategies, you can effectively balance workloads and implement auto-scaling in your cloud infrastructure.

Choosing the Right Tools for Workload Balancing and Auto-Scaling

When it comes to workload balancing and auto-scaling in the cloud, choosing the right tools and services is crucial to ensuring optimal resource utilization, reducing costs, and improving system performance. AWS, Azure, and Google Cloud each offer a range of tools and services for this purpose, each with its own features, strengths, and weaknesses. The following are among the most widely used, along with the use cases each suits best:

AWS Elastic Load Balancing (ELB)

AWS Elastic Load Balancing (ELB) is a fully managed load balancing service that automatically distributes incoming application traffic across multiple targets, such as Amazon EC2 instances, containers, and IP addresses. ELB offers Application Load Balancers, Network Load Balancers, and Gateway Load Balancers, and supports SSL/TLS termination, sticky sessions, and advanced request routing. ELB is a great choice for applications that require high availability, scalability, and security.

Azure Load Balancer

Azure Load Balancer is a fully managed layer 4 load balancing service that distributes incoming TCP and UDP traffic across multiple virtual machines, cloud services, and applications, with high availability and scalability. Azure Load Balancer is a great choice for applications that require low latency, high throughput, and simple load balancing rules. For layer 7 (HTTP/HTTPS) routing, Azure offers separate services such as Application Gateway and Azure Front Door.

Google Cloud Load Balancing

Google Cloud Load Balancing is a fully managed load balancing service that distributes incoming traffic across multiple regions, zones, and instances. Google Cloud Load Balancing offers layer 3, 4, and 7 load balancing, global load balancing, and HTTP/2 and QUIC protocol support. Google Cloud Load Balancing is a great choice for applications that require low latency, high availability, and advanced traffic management features.

Kubernetes Horizontal Pod Autoscaler

Kubernetes Horizontal Pod Autoscaler is a built-in Kubernetes component that automatically scales the number of replica pods based on observed CPU utilization or other application-provided metrics. Horizontal Pod Autoscaler is a great choice for containerized applications that require dynamic scaling, high availability, and resource optimization.
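The Kubernetes documentation gives the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when that ratio is within a tolerance of 1.0 (0.1 by default). The function below is a sketch that reproduces just that arithmetic; the real controller also applies stabilization windows, handles missing metrics, and accounts for pods that are not yet ready.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         tolerance=0.1, min_replicas=1, max_replicas=10):
    """Desired pod count using the HPA's core formula, clamped to min/max replicas."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no scaling action
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target: ratio 1.5, so 6 pods
print(hpa_desired_replicas(4, current_metric=90, target_metric=60))  # 6
# 63% against 60% is a ratio of 1.05, within the 0.1 tolerance: unchanged
print(hpa_desired_replicas(4, current_metric=63, target_metric=60))  # 4
```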

Terraform

Terraform is a popular infrastructure as code (IaC) tool that lets teams define auto-scaling groups and policies declaratively, alongside the rest of their cloud resources. Through its providers it supports AWS, Azure, and Google Cloud, for example with the aws_autoscaling_group and aws_autoscaling_policy, azurerm_monitor_autoscale_setting, and google_compute_autoscaler resources, which expose settings such as scaling triggers, cooldown periods, and minimum and maximum sizes. Terraform is a great choice for infrastructure teams that require automation, version control, and collaboration.

When choosing the right tools for workload balancing and auto-scaling, it is essential to consider factors such as cost, complexity, and security. It is also important to align the tools and services with the specific use cases and requirements of the application or system. By choosing the right tools and services, organizations can optimize resource utilization, reduce costs, and improve system performance, leading to better user experiences and business outcomes.

Optimizing Cloud-Based Workload Balancing and Auto-Scaling: A Comprehensive Guide


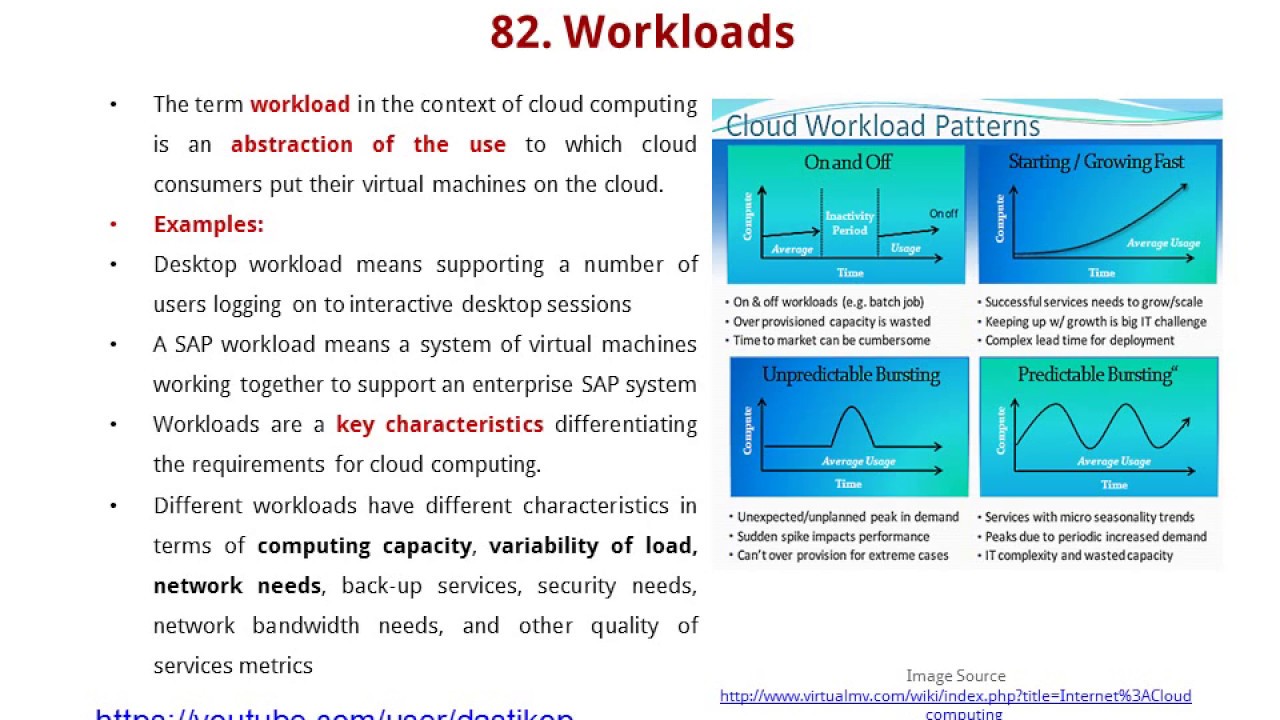

Workload balancing and auto-scaling let organizations distribute workloads across multiple resources and scale those resources up or down with demand, so that applications and services stay available, responsive, and efficient while costs stay under control. The key considerations are:

- Workload balancing techniques: load distribution, load sharing, and load scheduling each have distinct benefits and use cases, and understanding them helps organizations choose the right approach for their requirements.

- Auto-scaling in cloud infrastructures: the principles of scaling up and scaling down, and how they are implemented on AWS, Azure, and Google Cloud, determine how closely capacity follows demand.

- Effective balancing and auto-scaling: careful planning, monitoring, alerting, and automation keep the system tuned to its specific needs.

- Choosing the right tools: AWS, Azure, and Google Cloud offer load balancing and auto-scaling services with different features, strengths, and weaknesses, so the choice should follow the use case.

- Potential challenges and limitations: these systems bring problems of their own, such as the delay before newly launched instances can serve traffic, policies that oscillate between adding and removing capacity, and session state that ties users to a single instance. Organizations should plan strategies to mitigate them.

- Case studies: real-world implementations offer practices and lessons that other organizations can apply to their own systems.

- The future of workload balancing and auto-scaling: emerging trends such as AI-driven automation, serverless computing, and edge computing create new opportunities to optimize cloud resources.

Case Studies: Successful Workload Balancing and Auto-Scaling Implementations

Workload balancing and auto-scaling in the cloud have become essential strategies for businesses seeking to optimize their cloud computing resources, reduce costs, and improve system performance. Numerous organizations have successfully implemented these techniques, achieving significant benefits and outcomes. This section showcases a few real-world examples of such successful implementations.

1. Netflix: A Leader in Cloud Computing

Netflix, a global streaming service, has been a pioneer in cloud computing, relying heavily on Amazon Web Services (AWS) for its infrastructure. By implementing workload balancing and auto-scaling, Netflix has been able to handle millions of concurrent streams and maintain high-quality service levels. The company uses AWS Elastic Load Balancing and Auto Scaling groups to distribute traffic and automatically adjust the number of instances based on demand. This approach has allowed Netflix to scale seamlessly during peak usage periods, such as weekends and prime-time hours, while minimizing costs during off-peak times.

2. Airbnb: Scaling for Global Growth

Airbnb, a popular online marketplace for home-sharing and travel experiences, has experienced rapid growth since its inception. To manage this growth and ensure a seamless user experience, Airbnb runs its infrastructure largely on Amazon Web Services (AWS), using Amazon EC2 and Elastic Load Balancing to distribute traffic across its instances and scale its resources with demand. This has resulted in improved system performance, reduced costs, and enhanced user satisfaction.

3. Slack: Handling Real-Time Communication at Scale

Slack, a leading communication platform for teams, relies on the cloud to handle real-time messaging and collaboration for millions of users worldwide. To manage this demanding workload, Slack has implemented workload balancing and auto-scaling using AWS Elastic Load Balancing and Auto Scaling groups. By automatically adjusting the number of instances based on traffic patterns, Slack has been able to maintain high availability, reliability, and performance, even during peak usage times.

These case studies demonstrate the potential benefits and outcomes of implementing workload balancing and auto-scaling in cloud computing. By optimizing resources, reducing costs, and improving system performance, businesses can better meet the needs of their customers and stay competitive in today’s fast-paced digital landscape.

The Future of Workload Balancing and Auto-Scaling in Cloud Computing

As cloud computing continues to evolve, workload balancing and auto-scaling techniques are also advancing, offering new opportunities for businesses to optimize their cloud resources. This section explores emerging trends and innovations in workload balancing and auto-scaling, and their potential impact on cloud computing.

1. AI-Driven Automation

Artificial intelligence (AI) and machine learning (ML) are increasingly being integrated into cloud computing platforms to automate workload balancing and auto-scaling decisions. By analyzing historical data and real-time performance metrics, AI-driven automation can predict demand patterns and optimize resource allocation accordingly. This can lead to improved system performance, reduced costs, and enhanced user experience.
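Managed offerings such as predictive scaling in Amazon EC2 Auto Scaling and predictive autoscaling in Compute Engine learn demand patterns from historical data. As a much simpler stand-in for such a model, the sketch below fits a straight-line trend to recent load and provisions capacity for the next interval before the load arrives. The functions forecast_next and instances_needed, the 25% headroom, and the sample history are all illustrative assumptions.

```python
import math


def forecast_next(history):
    """Fit a least-squares straight line to past samples and extrapolate one step.

    history must contain at least one sample.
    """
    n = len(history)
    if n < 2:
        return float(history[-1])  # not enough points for a trend
    x_mean = (n - 1) / 2
    y_mean = sum(history) / n
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in enumerate(history))
             / sum((x - x_mean) ** 2 for x in range(n)))
    return y_mean + slope * (n - x_mean)  # value of the fitted line at x = n


def instances_needed(history, capacity_per_instance, headroom=0.25, min_instances=1):
    """Instances to provision ahead of the forecast load, with a safety margin."""
    predicted = max(0.0, forecast_next(history))
    return max(min_instances, math.ceil(predicted * (1 + headroom) / capacity_per_instance))


requests_per_s = [100, 200, 300, 400]         # illustrative history
print(forecast_next(requests_per_s))          # 500.0
print(instances_needed(requests_per_s, 100))  # 7 (500 * 1.25 / 100 = 6.25, rounded up)
```

A linear trend cannot capture daily or weekly cycles, which is where trained models earn their keep; the point of the sketch is only the overall shape: forecast demand, add headroom, and scale before the demand arrives rather than after.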

2. Serverless Computing

Serverless computing is an emerging paradigm in cloud computing that enables developers to build and deploy applications without worrying about infrastructure management. By automatically provisioning and scaling resources based on demand, serverless architectures can simplify workload balancing and auto-scaling, allowing businesses to focus on application development and innovation.

3. Edge Computing

Edge computing is a distributed computing model that brings computation and data storage closer to the edge of the network, near the source of data generation. By implementing workload balancing and auto-scaling at the edge, businesses can reduce latency, improve performance, and optimize resource utilization. This can be particularly beneficial for applications that require real-time processing, such as IoT devices and autonomous vehicles.

4. Hybrid and Multi-Cloud Strategies

As businesses increasingly adopt hybrid and multi-cloud strategies, workload balancing and auto-scaling techniques must also evolve to support these complex environments. By implementing consistent policies and tools across multiple cloud platforms, businesses can ensure seamless workload distribution, efficient resource utilization, and cost optimization.

5. Kubernetes and Container Orchestration

Kubernetes and container orchestration platforms are becoming increasingly popular for managing cloud-native applications. By automating workload balancing and auto-scaling at the container level, businesses can achieve fine-grained resource allocation, improved application resilience, and enhanced performance.

In conclusion, workload balancing and auto-scaling in the cloud are critical strategies for managing resources, reducing costs, and improving system performance. As cloud computing continues to evolve, businesses must stay up-to-date with emerging trends and innovations to optimize their cloud resources and stay competitive in today’s fast-paced digital landscape. By implementing AI-driven automation, serverless computing, edge computing, hybrid and multi-cloud strategies, and container orchestration platforms, businesses can achieve greater efficiency, agility, and innovation in their cloud computing initiatives.