What is Data Factory in Azure?

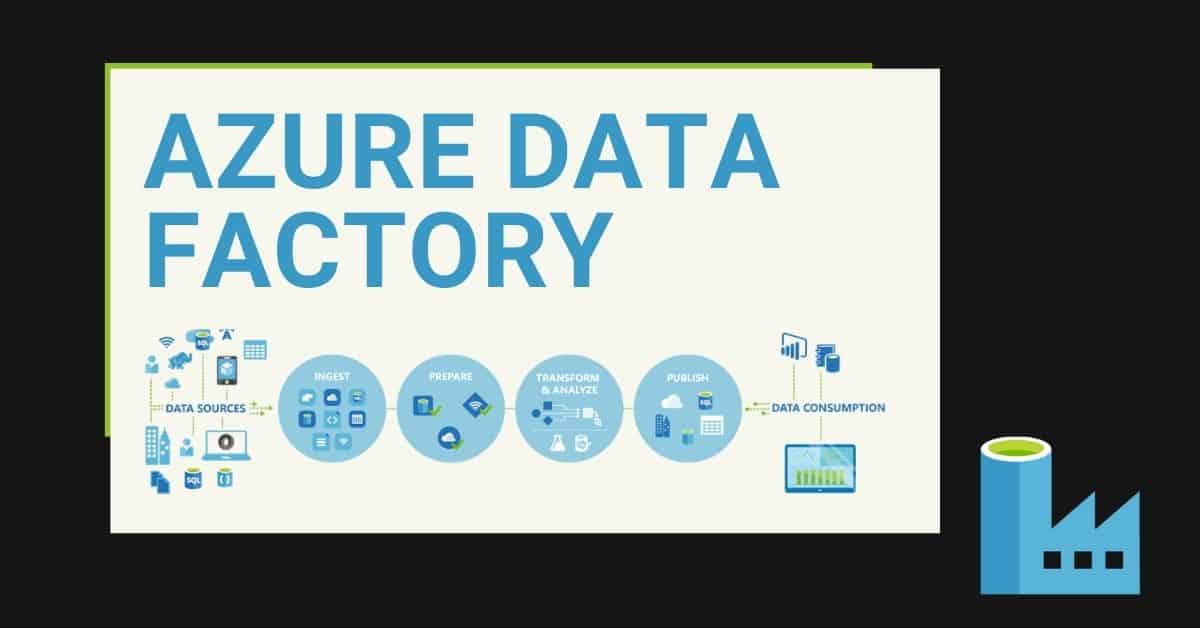

Azure Data Factory is a fully managed, cloud-based data integration service for creating, scheduling, and managing data workflows. It moves and transforms data between data stores, letting enterprises orchestrate and automate integration tasks at scale. With their data consolidated and prepared this way, organizations can streamline data-driven processes and make better-informed decisions.

Key Features and Capabilities of Azure Data Factory

Azure Data Factory is a robust and versatile data integration service offering numerous features and capabilities. Among its core strengths are:

- Data Orchestration: Azure Data Factory simplifies the process of creating, scheduling, and managing data workflows, allowing organizations to efficiently move and transform data between various sources and destinations.

- ETL/ELT Processes: Azure Data Factory supports both ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) patterns. With ETL, data is cleansed, enriched, and standardized before it is loaded into the target system; with ELT, raw data is loaded first and transformed inside the target store.

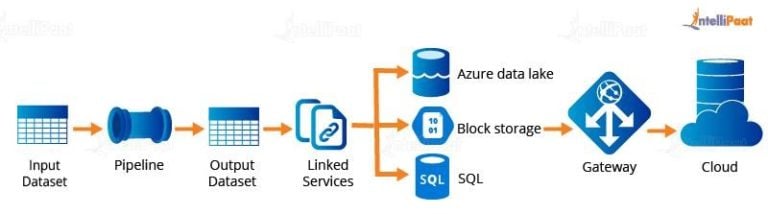

- Support for Various Data Stores: Azure Data Factory supports a wide range of data stores, including relational databases, NoSQL databases, file systems, and big data stores, ensuring seamless integration with your existing data infrastructure.

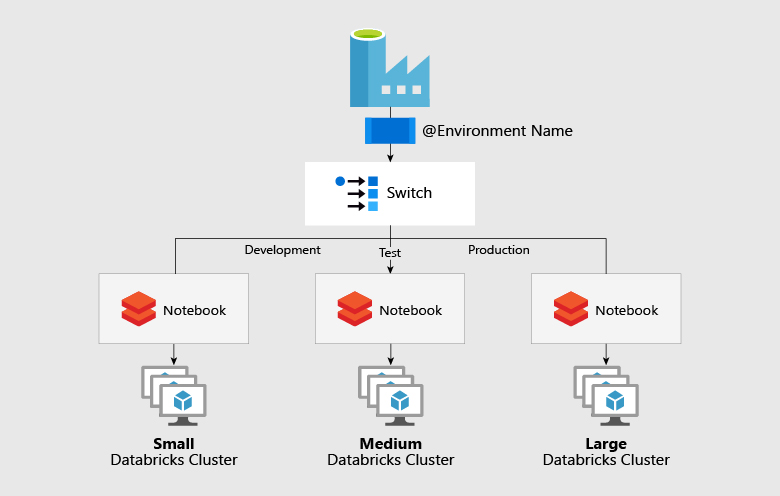

- Integration with Azure Services: Azure Data Factory integrates seamlessly with other Azure services, such as Azure Data Lake Storage, Azure Synapse Analytics, and Azure Databricks, allowing organizations to build end-to-end data analytics solutions on the Azure platform.

By leveraging these features, organizations can create scalable, secure, and efficient data integration pipelines using Azure Data Factory, ultimately unlocking valuable insights and driving informed decision-making.

How to Create and Manage Data Workflows with Azure Data Factory

Creating, configuring, and managing data workflows in Azure Data Factory is a straightforward process, thanks to its user-friendly interface. Here’s a step-by-step guide:

- Create a Pipeline: A pipeline is a logical grouping of activities that together perform a task. To create one, open Azure Data Factory Studio (reached through the “Author & Monitor” tile in older versions of the Azure portal), go to the Author hub, and click on “+ New pipeline” to start defining your data workflow.

- Add Activities: Activities are the building blocks of pipelines. You can add various activities, such as copying data, executing a stored procedure, or invoking a web service. To add an activity, drag and drop it from the “Activities” pane onto the pipeline canvas.

- Configure Activities: After adding an activity, you need to configure it by specifying the necessary properties. For example, if you’re copying data, you’ll need to specify the source and sink data stores, as well as any column mappings between them.

- Create Triggers: Triggers define when a pipeline runs. Azure Data Factory supports schedule triggers, tumbling window triggers, and event-based triggers (for example, a file arriving in blob storage); a pipeline can also be run manually on demand. To create a trigger, navigate to the “Triggers” tab and click on “+ New” to define your trigger’s properties.

- Monitor Pipelines: Azure Data Factory provides a built-in monitoring dashboard that allows you to track the status and performance of your pipeline, activity, and trigger runs. To access it, open the Monitor hub in Azure Data Factory Studio.

By following these steps, you can create, manage, and monitor data workflows using Azure Data Factory, ensuring seamless data movement and transformation for your organization.
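Behind the visual designer, pipelines and triggers are stored as JSON definitions. The sketch below assembles such definitions as plain Python dictionaries to show how the pieces from the steps above fit together: a pipeline containing one Copy activity, plus a daily schedule trigger that references it. The dataset names, pipeline name, trigger name, and start time are hypothetical, and the source and sink types are assumptions chosen for illustration; check the property names against the current Azure Data Factory JSON reference before using anything like this.

```python
import json


def copy_activity(name, source_dataset, sink_dataset, source_type, sink_type):
    """Build a Copy activity definition that reads one dataset and writes another."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": source_type},
            "sink": {"type": sink_type},
        },
    }


def pipeline(name, activities):
    """Group activities into a pipeline definition."""
    return {"name": name, "properties": {"activities": activities}}


def daily_trigger(name, pipeline_name, start_time):
    """Build a schedule trigger that runs the named pipeline once a day."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


# Hypothetical names: replace with datasets that exist in your own factory.
copy_step = copy_activity(
    "CopySalesData",
    "SalesBlobDataset",
    "SalesSqlDataset",
    source_type="DelimitedTextSource",  # assumed source type
    sink_type="AzureSqlSink",           # assumed sink type
)
sales_pipeline = pipeline("SalesIngestPipeline", [copy_step])
trigger = daily_trigger("DailySalesTrigger", "SalesIngestPipeline", "2024-01-01T00:00:00Z")

print(json.dumps(sales_pipeline, indent=2))
print(json.dumps(trigger, indent=2))
```

Keeping definitions as data like this makes them easy to review and store in source control, whichever deployment route (the Studio UI, templates, or an SDK) is used afterwards.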

Benefits and Use Cases of Azure Data Factory

Azure Data Factory offers numerous benefits to organizations, including:

- Improved Productivity: By automating data integration tasks, Azure Data Factory enables teams to focus on higher-value activities, such as data analysis and business strategy development.

- Cost Savings: Azure Data Factory reduces the need for on-premises infrastructure and maintenance, leading to lower total cost of ownership and more efficient resource utilization.

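- Enhanced Data Governance: Azure Data Factory supports auditing through pipeline and activity run logs, versioning of pipeline definitions through Git integration, and data lineage through integration with Microsoft Purview, helping teams keep data traceable, secure, and compliant throughout the integration process.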

Real-world use cases demonstrate the value of Azure Data Factory in various industries:

- Healthcare: A healthcare organization can use Azure Data Factory to integrate patient data from various sources, such as electronic health records, clinical trials, and wearable devices, ensuring a comprehensive and up-to-date view of patient health.

- Finance: A financial institution can leverage Azure Data Factory to consolidate data from multiple sources, such as trading platforms, risk management systems, and customer relationship management tools, enabling accurate and timely financial reporting and analysis.

- Retail: A retailer can utilize Azure Data Factory to integrate data from point-of-sale systems, e-commerce platforms, and customer loyalty programs, gaining valuable insights into customer behavior, preferences, and trends.

By harnessing the power of Azure Data Factory, organizations across industries can streamline data integration, unlock valuable insights, and make informed decisions that drive business growth.

Comparing Azure Data Factory with Alternative Solutions

When evaluating data integration tools, organizations often compare Azure Data Factory with other solutions, such as Talend, Informatica, and Matillion. Here’s a brief overview of each platform and how they stack up against Azure Data Factory:

- Talend: Talend is a data integration platform with open-source roots that supports various data integration tasks, including ETL, data quality, and data governance. While Talend offers flexibility in where and how it is deployed, Azure Data Factory provides seamless integration with Azure services and a fully managed, serverless infrastructure, reducing the need for manual maintenance and scaling.

- Informatica: Informatica is a comprehensive data integration platform that supports various data integration scenarios, including ETL, ELT, and data replication. Informatica offers advanced features, such as machine learning and AI-driven data transformation. Azure Data Factory does not build these in directly but integrates with Azure services, such as Azure Machine Learning and Azure Cognitive Services (now part of Azure AI services), to cover similar ground on serverless infrastructure, which may lower total cost of ownership for teams already on Azure.

- Matillion: Matillion is a cloud-based data integration platform designed for data warehousing and business intelligence scenarios. Matillion offers a user-friendly interface and supports various data stores, including Amazon Redshift, Snowflake, and Google BigQuery. Azure Data Factory, on the other hand, provides seamless integration with Azure services, such as Azure Synapse Analytics and Azure Databricks, and offers more than 90 built-in connectors, which can make it the more natural fit for organizations whose data estate is centered on Azure.

By understanding the unique features and benefits of each platform, organizations can make informed decisions about which data integration tool best meets their needs and objectives.

Best Practices for Implementing Azure Data Factory

To maximize the benefits of Azure Data Factory, consider the following best practices for designing, deploying, and optimizing your solutions:

- Data Modeling: Design a robust data model that aligns with your organization’s data governance policies and objectives. Implement data modeling best practices, such as normalization, denormalization, and data partitioning, to ensure optimal performance and scalability.

- Error Handling: Implement comprehensive error handling mechanisms to minimize disruptions and ensure data integrity. Utilize Azure Data Factory’s built-in error handling features, such as activity retry policies and dependency conditions (Succeeded, Failed, Skipped, Completed), to retry transient failures and route unrecoverable ones to cleanup or alerting steps with meaningful error messages.

- Performance Tuning: Optimize the performance of your Azure Data Factory solutions by monitoring and analyzing resource utilization, identifying bottlenecks, and implementing performance tuning best practices, such as parallel processing, data compression, and caching.

- Monitoring and Auditing: Leverage Azure Data Factory’s monitoring and auditing capabilities to track the performance, health, and usage of your data integration workflows. Utilize Azure Monitor, Azure Log Analytics, and Azure Application Insights to gain insights into your Azure Data Factory solutions and proactively address potential issues.

- Security and Compliance: Ensure the security and compliance of your Azure Data Factory solutions by implementing best practices, such as data encryption, access control, and regulatory compliance. Utilize Microsoft Defender for Cloud (formerly Azure Security Center), Azure Policy, and Microsoft Entra ID (formerly Azure Active Directory) to enforce security and compliance policies and protect your organization’s data.

By following these best practices, organizations can design, deploy, and optimize Azure Data Factory solutions that meet their unique data integration needs and objectives.
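As an illustration of the error handling practice above, the following sketch attaches a retry policy to an activity definition and wires a failure handler that runs only when the upstream activity fails. The definitions are plain Python dictionaries mirroring the pipeline JSON; the activity names and the alert URL are hypothetical, and the property names (`policy`, `dependsOn`, `dependencyConditions`) should be treated as assumptions to verify against the current Azure Data Factory documentation.

```python
import json


def with_retry(activity, retries=3, interval_seconds=60):
    """Return a copy of the activity with a retry policy attached."""
    updated = dict(activity)
    updated["policy"] = {
        "retry": retries,
        "retryIntervalInSeconds": interval_seconds,
    }
    return updated


def on_failure(handler, upstream_name):
    """Return a copy of the handler that runs only if the upstream activity fails."""
    updated = dict(handler)
    updated["dependsOn"] = [
        {"activity": upstream_name, "dependencyConditions": ["Failed"]}
    ]
    return updated


# Hypothetical activities: a copy step and a web call that raises an alert.
copy_step = {"name": "CopySalesData", "type": "Copy", "typeProperties": {}}
alert_step = {
    "name": "NotifyOnFailure",
    "type": "WebActivity",
    "typeProperties": {
        "url": "https://example.com/alerts",  # placeholder endpoint
        "method": "POST",
        "body": {"message": "CopySalesData failed"},
    },
}

activities = [
    with_retry(copy_step, retries=3, interval_seconds=30),
    on_failure(alert_step, "CopySalesData"),
]

print(json.dumps(activities, indent=2))
```

Retries suit transient problems such as a brief network outage, while the failure branch covers errors that retrying cannot fix.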

Security and Compliance Considerations for Azure Data Factory

When implementing Azure Data Factory, organizations must consider security and compliance aspects to ensure their data remains safe and compliant. Here are some key considerations:

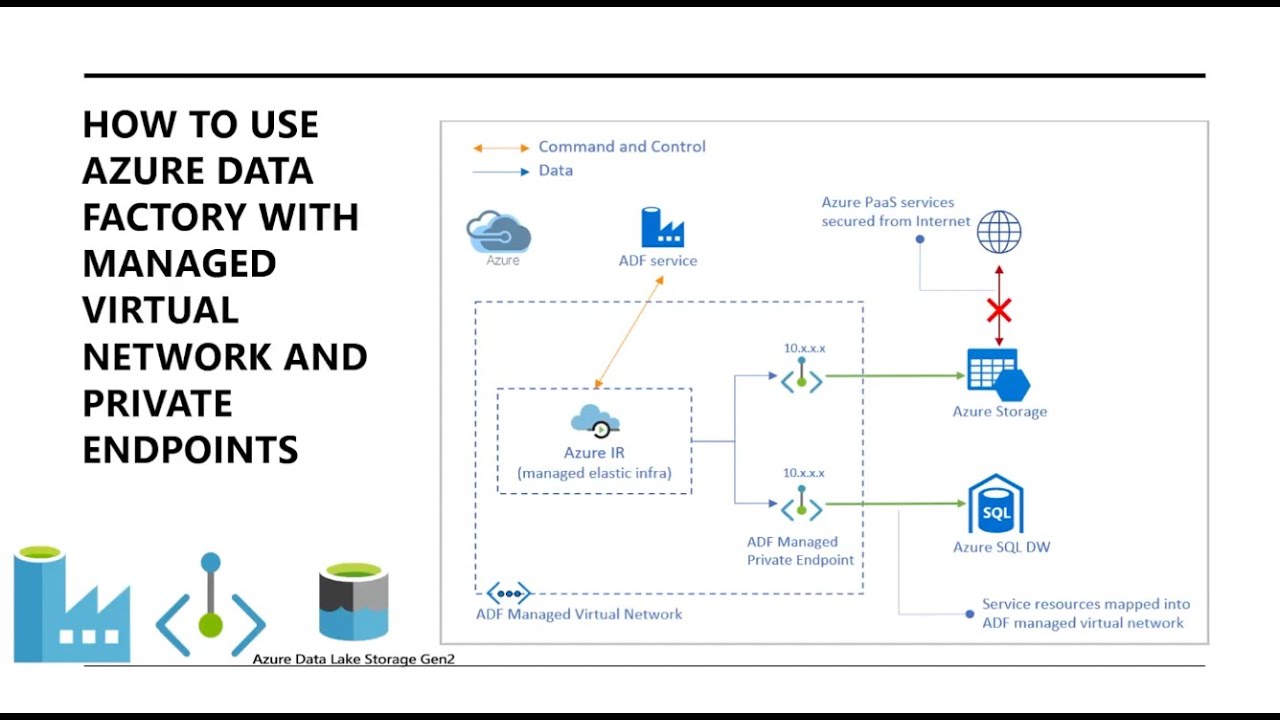

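- Data Encryption: Azure Data Factory supports data encryption at rest and in transit. Data moving between the service and data stores is protected with TLS where the store supports it, factory definitions are encrypted at rest with Microsoft-managed or customer-managed keys, and connection credentials can be kept in Azure Key Vault rather than in pipeline definitions.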

- Access Control: Implement robust access control policies to ensure that only authorized users and applications can access your Azure Data Factory resources. Utilize Microsoft Entra ID, role-based access control (RBAC), and managed identities to enforce security policies and protect your data.

- Regulatory Compliance: Ensure that your Azure Data Factory solutions comply with relevant industry regulations, such as GDPR, HIPAA, and PCI DSS. Utilize Azure Policy and Microsoft Defender for Cloud to enforce compliance policies and monitor compliance posture.

- Data Privacy: Protect the privacy of your data by implementing data masking, redaction, and other privacy-preserving techniques. Dynamic content and data flow expressions are general-purpose features rather than privacy controls, but they can be used to hash, mask, or drop sensitive columns while data is being transformed.

- Auditing and Monitoring: Leverage Azure Data Factory’s auditing and monitoring capabilities to track the usage and access of your data integration workflows. Utilize Azure Monitor, Azure Log Analytics, and Azure Application Insights to gain insights into your Azure Data Factory solutions and proactively address potential security and compliance issues.

By considering these security and compliance aspects, organizations can ensure the safe and compliant use of Azure Data Factory for their data integration needs.
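To make the masking and redaction idea concrete, here is a small sketch in plain Python. It is not an Azure Data Factory feature or API; it only illustrates the kind of logic that could be applied to sensitive columns during a transformation step. The record fields, the salt value, and the function names are all hypothetical.

```python
import hashlib


def mask_email(email):
    """Keep the first character and the domain; replace the rest with asterisks."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        # Not a recognizable address: hide everything.
        return "*" * len(email)
    return local[0] + "*" * (len(local) - 1) + "@" + domain


def pseudonymize(value, salt):
    """Replace an identifier with a salted SHA-256 digest (stable, not reversible by lookup alone)."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()


def redact_record(record, salt):
    """Return a copy of the record with sensitive fields masked or hashed."""
    cleaned = dict(record)
    cleaned["patient_id"] = pseudonymize(record["patient_id"], salt)
    cleaned["email"] = mask_email(record["email"])
    return cleaned


# Hypothetical sample record and salt, for illustration only.
sample = {"patient_id": "P-1001", "email": "jane.doe@example.com", "visit_count": 4}
print(redact_record(sample, salt="demo-salt"))
```

Hashing keeps identifiers joinable across datasets without exposing the raw value, while masking keeps a field human-readable enough for support or debugging.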

Expanding Your Data Capabilities with Azure Data Factory

Azure Data Factory offers advanced features and capabilities that enable organizations to unlock the full potential of their data. Here are some key features to explore:

- Machine Learning Integration: Azure Data Factory integrates with Azure Machine Learning, so a pipeline can run an Azure Machine Learning pipeline (for example, to retrain or batch-score a model) as one step in a data integration workflow. Models are built and trained in Azure Machine Learning itself; Data Factory handles the orchestration and the data movement around them.

- Mapping Data Flows: Azure Data Factory’s Mapping Data Flows feature enables organizations to design data transformations in a visual interface without writing code. The resulting data flows run as pipeline activities on Spark clusters that the service manages for you.

- Power Query: Azure Data Factory integrates with Power Query, enabling organizations to explore and prepare data interactively using the same editor found in Excel and Power BI. The prepared steps can then be run at scale as an activity in a pipeline.

- Data Flow Expressions: Azure Data Factory supports data flow expressions, a formula-style language used inside Mapping Data Flows for tasks such as deriving columns, filtering rows, and defining conditions.

- Integration with Azure Services: Azure Data Factory integrates with various Azure services, such as Azure Data Lake Storage, Azure Synapse Analytics, and Azure Databricks, enabling organizations to build end-to-end data analytics solutions on the Azure platform, with Data Factory orchestrating the steps that each service performs.

By exploring these advanced features and capabilities, organizations can unlock the full potential of their data and drive business outcomes using Azure Data Factory.