An Overview of Kubernetes and Red Hat

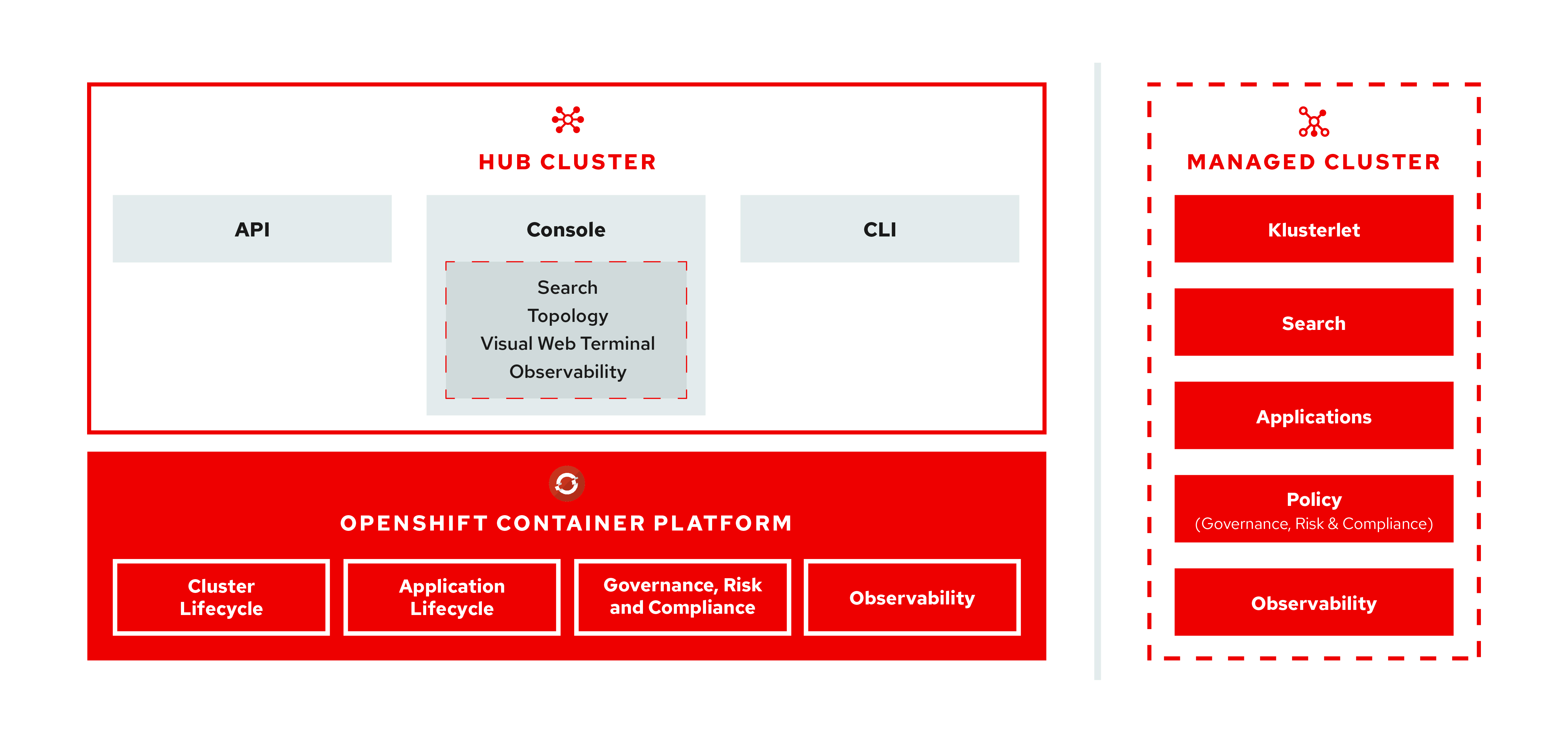

Kubernetes and Red Hat's platforms combine into a robust container orchestration stack. Kubernetes, an open-source container orchestrator, automates the deployment, scaling, and management of containerized applications. Red Hat, a leading provider of open-source solutions, offers Red Hat Enterprise Linux (RHEL) and Red Hat OpenShift, a container application platform built on Kubernetes. Together, they let organizations build, deploy, and manage applications with stronger security, scalability, and automation.

Key Features of Kubernetes and Red Hat

Kubernetes and Red Hat's products each bring features suited to running containers in production. Kubernetes automates container deployment, scaling, and load balancing, which supports high availability and efficient resource utilization. It also supports rolling updates and rollbacks, so you can upgrade an application gradually and revert to an earlier version if a release misbehaves. Red Hat, for its part, provides a stable and secure operating system in Red Hat Enterprise Linux (RHEL), which is widely adopted in the enterprise.

Red Hat OpenShift, a container application platform built on Kubernetes, combines the two. OpenShift simplifies the deployment and management of applications with developer-centric tools and built-in DevOps practices. It also provides security features such as image signing, automatic security updates, and network policies, which help secure both application development and deployment.

Together, Kubernetes and Red Hat offer scalability, security, and automation across hybrid and multi-cloud environments, so organizations can build, deploy, and manage containerized applications consistently wherever they run.

Getting Started with Red Hat for Kubernetes

To get started with Red Hat for Kubernetes, follow these steps:

- Check the system requirements: Ensure your system meets the minimum requirements for Red Hat Enterprise Linux (RHEL) and Kubernetes. RHEL 8.2 or later is recommended for Kubernetes 1.21 or later.

- Install Red Hat Enterprise Linux: Follow the official Red Hat documentation to install RHEL on your system. You can choose between the minimal installation or the Server with GUI option, depending on your preferences.

- Register your system: Register your RHEL system with the Red Hat Customer Portal to gain access to the latest updates, errata, and technical support.

- Install a container runtime: Kubernetes needs a container runtime on every node. Docker used to fill this role, but Kubernetes 1.24 removed its built-in Docker support (dockershim), so newer clusters use a CRI-compatible runtime such as containerd or CRI-O. To install Docker on your RHEL system, use the following command. Note that RHEL 8 does not ship Docker Engine, and the docker package name there typically resolves to podman-docker, a compatibility layer over Podman:

      sudo dnf install docker

- Enable the AppStream repository: This is a standard RHEL 8 repository (not an OpenShift Container Platform repository). Enable it using the following command:

      sudo subscription-manager repos --enable=rhel-8-for-x86_64-appstream-rpms

  The kubelet, kubeadm, and kubectl packages are not part of RHEL's own repositories. They are published in the Kubernetes project's package repository, which you need to configure separately by following the upstream Kubernetes installation documentation.

- Install the Kubernetes packages: Once a repository that provides them is configured, use the following command to install the Kubernetes packages:

      sudo dnf install containerd kubelet kubeadm kubectl

- Initialize your Kubernetes cluster: Run the sudo kubeadm init command on the control-plane node to initialize your Kubernetes cluster. This command writes an admin kubeconfig file to /etc/kubernetes/admin.conf and prints a kubeadm join command containing a bootstrap token, which you can use to join worker nodes to the cluster.

- Configure kubectl: As root, run the following command to start using your kubeconfig file:

      export KUBECONFIG=/etc/kubernetes/admin.conf

- Join worker nodes to the cluster: On each worker node, run the kubeadm join command printed during initialization, which includes the generated token.

Now that you have successfully set up Red Hat for Kubernetes, you can proceed with deploying applications and managing your cluster using the powerful features provided by both platforms.
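The join step above is easy to get wrong because the command carries two secrets-like values. As a minimal sketch, the following Python helper assembles a `kubeadm join` command line and checks the shape of its inputs. The function name `build_join_command`, the endpoint, the token, and the hash are all placeholders introduced here for illustration; the real values are the ones printed by `kubeadm init`.

```python
import re
import shlex

# Bootstrap tokens have the form "<6 chars>.<16 chars>", lowercase letters and digits.
TOKEN_RE = r"[a-z0-9]{6}\.[a-z0-9]{16}"
# The CA certificate hash is "sha256:" followed by 64 hexadecimal digits.
HASH_RE = r"sha256:[0-9a-f]{64}"


def build_join_command(endpoint: str, token: str, ca_cert_hash: str) -> str:
    """Return the `kubeadm join` command line for a worker node (hypothetical helper)."""
    if not re.fullmatch(TOKEN_RE, token):
        raise ValueError("token must look like abcdef.0123456789abcdef")
    if not re.fullmatch(HASH_RE, ca_cert_hash):
        raise ValueError("hash must be 'sha256:' followed by 64 hex digits")
    args = [
        "kubeadm", "join", endpoint,
        "--token", token,
        "--discovery-token-ca-cert-hash", ca_cert_hash,
    ]
    # Quote each argument so the result is safe to paste into a shell.
    return " ".join(shlex.quote(arg) for arg in args)


# Placeholder values for illustration only; they do not belong to any real cluster.
EXAMPLE = build_join_command(
    "192.0.2.10:6443",
    "abcdef.0123456789abcdef",
    "sha256:" + "0" * 64,
)
print("sudo " + EXAMPLE)
```

Validating the token and hash format before running the command catches copy-and-paste truncation early, which is a common cause of failed joins.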

How to Deploy Applications using Kubernetes and Red Hat

Deploying applications using Kubernetes and Red Hat involves managing pods, services, and deployments. Here’s a step-by-step guide on how to deploy applications:

- Create a pod: A pod is the smallest deployable unit in Kubernetes. It represents a single instance of a running process in a cluster. To create a pod, you can use a pod manifest file, which is a YAML or JSON file that defines the pod’s configuration. Here’s an example of a simple Nginx pod manifest:

      {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": { "name": "nginx-pod" },
        "spec": {
          "containers": [
            {
              "name": "nginx",
              "image": "nginx:1.14.2",
              "ports": [ { "containerPort": 80 } ]
            }
          ]
        }
      }

- Create a deployment: A deployment is a Kubernetes object that manages a set of replica pods. It ensures that the desired number of pod replicas are running at any given time. To create a deployment, you can use a deployment manifest file, which is similar to a pod manifest. Here’s an example of a simple Nginx deployment manifest:

      {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": { "name": "nginx-deployment" },
        "spec": {
          "replicas": 3,
          "selector": { "matchLabels": { "app": "nginx" } },
          "template": {
            "metadata": { "labels": { "app": "nginx" } },
            "spec": {
              "containers": [
                {
                  "name": "nginx",
                  "image": "nginx:1.14.2",
                  "ports": [ { "containerPort": 80 } ]
                }
              ]
            }
          }
        }
      }

- Create a service: A service is an abstract way to expose an application running on a set of pods as a network service. With Kubernetes, you can expose your service internally or externally. Here’s an example of a simple Nginx service manifest that exposes the service on port 80:

      {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": { "name": "nginx-service" },
        "spec": {
          "selector": { "app": "nginx" },
          "ports": [ { "protocol": "TCP", "port": 80, "targetPort": 80 } ],
          "type": "ClusterIP"
        }
      }

These are the basic steps for deploying applications using Kubernetes and Red Hat. Save each manifest to a file and apply it with kubectl apply -f followed by the file name. Once you are comfortable with pods, services, and deployments, you can manage and scale your applications in a containerized environment.
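A Service only routes traffic to pods whose labels match its selector, so a mismatch between the two manifests above is a frequent source of "service has no endpoints" problems. The sketch below rebuilds the same Nginx Deployment and Service as Python dictionaries and checks that the selector matches the pod template labels. The helper names (`nginx_deployment`, `nginx_service`, `service_selects`) are made up for this example, and the label check is a simplification of what Kubernetes does.

```python
import json


def nginx_deployment(replicas: int = 3) -> dict:
    """Deployment manifest equivalent to the JSON example in the text."""
    labels = {"app": "nginx"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "nginx-deployment"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": "nginx",
                            "image": "nginx:1.14.2",
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


def nginx_service() -> dict:
    """ClusterIP Service manifest equivalent to the JSON example in the text."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "nginx-service"},
        "spec": {
            "selector": {"app": "nginx"},
            "ports": [{"protocol": "TCP", "port": 80, "targetPort": 80}],
            "type": "ClusterIP",
        },
    }


def service_selects(service: dict, deployment: dict) -> bool:
    """True if every selector label of the Service is present on the Deployment's pods."""
    selector = service["spec"]["selector"]
    pod_labels = deployment["spec"]["template"]["metadata"]["labels"]
    return bool(selector) and all(pod_labels.get(k) == v for k, v in selector.items())


deployment = nginx_deployment()
service = nginx_service()
print("selector matches pods:", service_selects(deployment=deployment, service=service))
# The JSON printed here can be saved to a file and passed to `kubectl apply -f`.
print(json.dumps(service, indent=2))
```

Generating manifests from code like this is optional; the point of the sketch is the label check, which applies equally to hand-written YAML or JSON.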

Securing Kubernetes Clusters with Red Hat

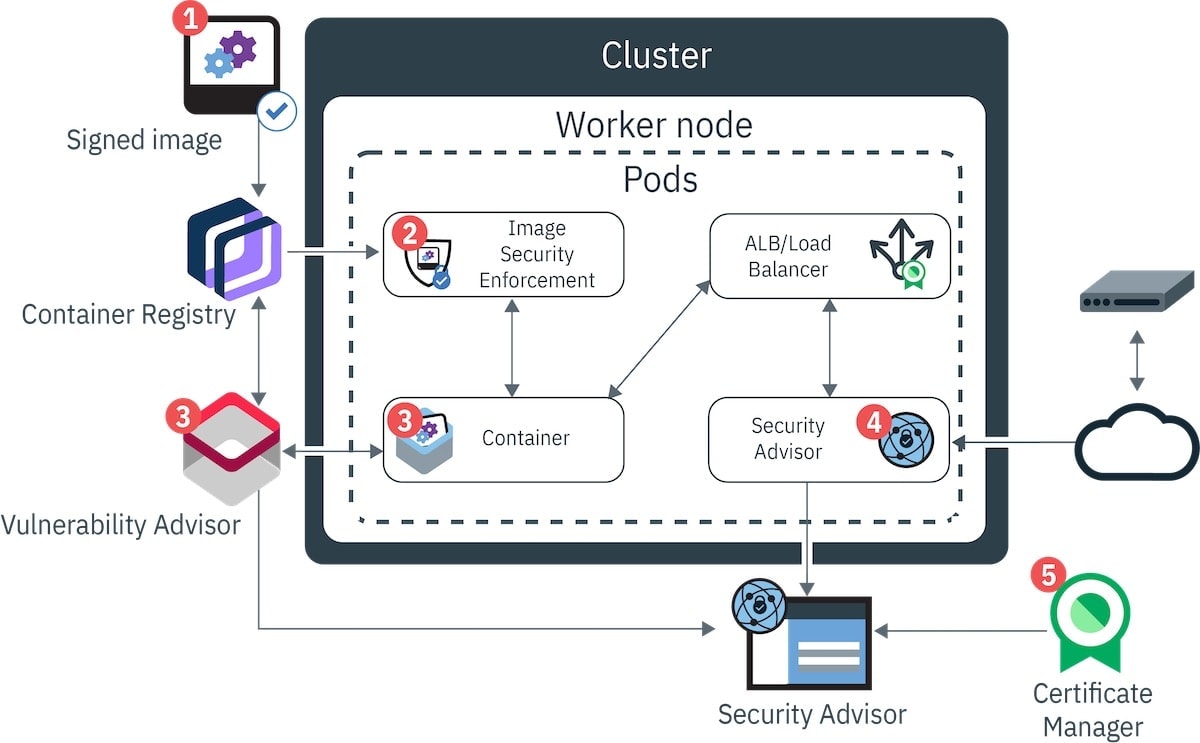

Securing Kubernetes clusters is crucial for protecting sensitive data and maintaining the integrity of your applications. Red Hat provides several best practices for securing Kubernetes clusters, including network policies, secrets management, and role-based access control.

Network Policies

Network policies in Kubernetes allow you to control the flow of traffic between pods and services. By defining network policies, you can restrict access to specific pods and services, so that only authorized traffic is allowed. By default, Kubernetes allows all traffic to and from pods, and policies are enforced only when the cluster's network plugin supports them. A common practice is therefore to create a default policy in each namespace that denies all ingress traffic, and then explicitly define the policies that allow traffic to flow.
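As a rough illustration of the deny-by-default pattern, the following sketch builds two NetworkPolicy manifests as Python dictionaries: one that blocks all ingress in a namespace and one that re-opens a single port between two sets of pods. The namespace `demo`, the policy names, and the `role: frontend` label are assumptions made for this example; only the `app: nginx` label comes from the manifests earlier in the article.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy that selects every pod in the namespace and allows no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},           # empty selector: all pods in the namespace
            "policyTypes": ["Ingress"],  # no ingress rules are listed, so none is allowed
        },
    }


def allow_ingress(namespace: str, name: str, target: dict, source: dict, port: int) -> dict:
    """NetworkPolicy that lets pods labelled `source` reach pods labelled `target` on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": source}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


deny = default_deny_ingress("demo")
allow = allow_ingress("demo", "allow-frontend-to-nginx",
                      target={"app": "nginx"}, source={"role": "frontend"}, port=80)
print(json.dumps([deny, allow], indent=2))
```

Network policies are additive: once the deny policy isolates the pods, each allow policy opens only the traffic it names.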

Secrets Management

Secrets management is the process of storing, managing, and distributing sensitive data, such as passwords, API keys, and certificates. Kubernetes handles such data through Secret objects in the Kubernetes Secrets API, which OpenShift builds on as well. Keep in mind that Secret values are base64-encoded rather than encrypted by default, so protecting them from unauthorized access also means restricting who can read Secrets and enabling encryption at rest.
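To make the shape of a Secret concrete, here is a small sketch that builds an Opaque Secret manifest in Python and then decodes it again. The secret name `db-credentials` and the value are invented for the example, and `opaque_secret` is a hypothetical helper, not part of any Kubernetes client library.

```python
import base64
import json


def opaque_secret(name: str, values: dict) -> dict:
    """Build a Secret manifest; the `data` field holds base64-encoded values."""
    data = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": data,
    }


secret = opaque_secret("db-credentials", {"password": "s3cr3t"})
print(json.dumps(secret, indent=2))

# Anyone who can read the manifest can reverse the encoding:
decoded = base64.b64decode(secret["data"]["password"]).decode("utf-8")
print("decoded password:", decoded)
```

The round trip shows why base64 should be treated as an encoding only: access control and encryption at rest are what actually protect the value.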

Role-Based Access Control

Role-based access control (RBAC) is a security feature that allows you to control who can access your Kubernetes resources. RBAC is built into Kubernetes and is used by OpenShift as well. You define a Role (or a cluster-wide ClusterRole) that lists the permitted verbs on specific resources, and then create a RoleBinding (or ClusterRoleBinding) that grants it to users, groups, or service accounts, so that only authorized subjects have access to your resources.
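The relationship between a role and its binding can be sketched as follows. The example builds a read-only Role for pods and a RoleBinding for one user as Python dictionaries. The names `pod-reader`, `read-pods`, the user `jane`, and the namespace `demo` are hypothetical, and `role_allows` is a deliberately simplified check that ignores API groups, wildcards, and resource names.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def pod_reader_role(namespace: str) -> dict:
    """Role that permits read-only access to pods in one namespace."""
    return {
        "apiVersion": RBAC_GROUP + "/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
        ],
    }


def bind_role_to_user(namespace: str, role_name: str, user: str) -> dict:
    """RoleBinding that grants an existing Role to a single user."""
    return {
        "apiVersion": RBAC_GROUP + "/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "read-pods", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }


def role_allows(role: dict, resource: str, verb: str) -> bool:
    """Simplified check: does any rule list both the resource and the verb?"""
    return any(
        resource in rule["resources"] and verb in rule["verbs"]
        for rule in role["rules"]
    )


role = pod_reader_role("demo")
binding = bind_role_to_user("demo", role["metadata"]["name"], "jane")
print(json.dumps([role, binding], indent=2))
print("can list pods:  ", role_allows(role, "pods", "list"))
print("can delete pods:", role_allows(role, "pods", "delete"))
```

On a live cluster, `kubectl auth can-i` answers the same kind of question using the real authorization rules.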

By combining network policies, careful secrets management, and role-based access control, you can protect your Kubernetes clusters from unauthorized access.

Optimizing Performance in Kubernetes with Red Hat

Optimizing performance in Kubernetes with Red Hat is essential for ensuring that your applications run smoothly and efficiently. Red Hat provides several techniques for optimizing performance, including resource quotas, horizontal pod autoscaling, and container image optimization.

Resource Quotas

Resource quotas in Kubernetes allow you to limit the amount of compute resources that a namespace can consume. By defining resource quotas, you can ensure that your applications have access to the resources they need while preventing other applications from consuming excessive resources. Quotas are defined with the ResourceQuota object, which Kubernetes enforces when pods are created, and the same mechanism is available on Red Hat platforms.
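The following sketch shows what a ResourceQuota manifest can look like and how a CPU request is compared against it. The quota name `compute-quota`, the namespace, and all limits are illustrative values chosen for this example, and the helper functions are hypothetical; the arithmetic only mirrors the idea of the admission check, not its full implementation.

```python
def compute_quota(namespace: str) -> dict:
    """ResourceQuota manifest capping total requests and limits in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "compute-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": "4",
                "requests.memory": "8Gi",
                "limits.cpu": "8",
                "limits.memory": "16Gi",
                "pods": "20",
            }
        },
    }


def parse_cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '500m' or '2' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def cpu_request_fits(quota: dict, used: str, requested: str) -> bool:
    """True if the new request keeps total CPU requests within the quota."""
    hard = parse_cpu_millicores(quota["spec"]["hard"]["requests.cpu"])
    return parse_cpu_millicores(used) + parse_cpu_millicores(requested) <= hard


quota = compute_quota("demo")
print(cpu_request_fits(quota, used="3500m", requested="500m"))  # exactly reaches 4 CPUs
print(cpu_request_fits(quota, used="3500m", requested="1"))     # would exceed 4 CPUs
```

Working in integer millicores avoids floating-point rounding when requests add up to exactly the quota.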

Horizontal Pod Autoscaling

Horizontal pod autoscaling is a feature in Kubernetes that allows you to automatically scale the number of pods in a deployment based on CPU utilization or other metrics. By using horizontal pod autoscaling, you can ensure that your applications have enough replicas to handle increased traffic or workloads, and that replicas are removed again when load drops. The feature is part of Kubernetes itself, is available on Red Hat platforms, and relies on a metrics source in the cluster to report current utilization.
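The scaling decision follows a simple ratio documented by Kubernetes: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below implements that formula with a tolerance band and replica bounds. The default bounds and the function name are assumptions for this example, and the real autoscaler applies further rules (such as stabilization windows) that are not modelled here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Apply desired = ceil(current * currentMetric / targetMetric), then clamp."""
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is close enough to 1.0 to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 3 replicas at 90% CPU against a 50% target: scale out.
print(desired_replicas(3, current_metric=90, target_metric=50))
# 4 replicas at 25% CPU against a 50% target: scale in.
print(desired_replicas(4, current_metric=25, target_metric=50))
# 3 replicas at 52% CPU against a 50% target: within tolerance, no change.
print(desired_replicas(3, current_metric=52, target_metric=50))
```

Rounding up rather than to the nearest integer biases the autoscaler toward having slightly too much capacity instead of too little.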

Container Image Optimization

Optimizing container images helps your applications start quickly and consume fewer resources. Red Hat provides tools that support this, including the Red Hat Container Development Kit and the Red Hat Universal Base Image (UBI), which is available in standard, minimal, and micro variants. Starting from a small base image and including only what the application needs produces images that are smaller, faster to pull, and expose a smaller attack surface.

Used together, resource quotas, horizontal pod autoscaling, and optimized container images keep your Kubernetes clusters running efficiently and your applications performing well.

Monitoring and Troubleshooting Kubernetes Clusters with Red Hat

Monitoring and troubleshooting Kubernetes clusters with Red Hat is essential for ensuring that your applications are running smoothly and that any issues are identified and resolved quickly. Red Hat provides several built-in monitoring tools and third-party integrations for monitoring and troubleshooting Kubernetes clusters.

Built-in Monitoring Tools

Several monitoring tools are commonly used with Kubernetes clusters on Red Hat platforms, including the Kubernetes Dashboard, Prometheus, and Grafana. The Kubernetes Dashboard provides a user-friendly web interface for monitoring and managing Kubernetes resources, while Prometheus collects metrics and Grafana visualizes them. OpenShift additionally includes its own web console and a preconfigured Prometheus-based monitoring stack. By using these tools, you can monitor the health and performance of your Kubernetes clusters and identify any issues quickly.
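Prometheus exposes its data over an HTTP API, so health checks can be scripted. The sketch below builds the URL for an instant query against the `/api/v1/query` endpoint and parses a response of the "vector" result type. The server address `http://prometheus.example:9090`, the `demo` namespace, and the sample response are made up for illustration; no network request is made here.

```python
import json
from urllib.parse import urlencode


def instant_query_url(base_url: str, promql: str) -> str:
    """Build the URL for a Prometheus instant query."""
    return base_url.rstrip("/") + "/api/v1/query?" + urlencode({"query": promql})


def parse_vector(response_text: str, label: str = "pod") -> dict:
    """Map the chosen label of each series to its sample value."""
    payload = json.loads(response_text)
    if payload.get("status") != "success":
        raise RuntimeError("query failed: " + str(payload.get("error")))
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError("expected an instant vector, got " + data["resultType"])
    # Each sample value is a [timestamp, "value-as-string"] pair.
    return {s["metric"].get(label, ""): float(s["value"][1]) for s in data["result"]}


# Per-pod CPU usage rate over the last five minutes in a hypothetical namespace.
PROMQL = 'sum by (pod) (rate(container_cpu_usage_seconds_total{namespace="demo"}[5m]))'
print(instant_query_url("http://prometheus.example:9090", PROMQL))

# Hand-written sample response in the documented format, for illustration only.
SAMPLE = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"pod": "nginx-a"}, "value": [1700000000, "0.25"]},
            {"metric": {"pod": "nginx-b"}, "value": [1700000000, "0.5"]},
        ],
    },
})
usage = parse_vector(SAMPLE)
print(usage)
```

Keeping URL construction and response parsing separate from the HTTP call makes both easy to test without a running Prometheus server.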

Third-Party Integrations

Red Hat also supports several third-party integrations for monitoring and troubleshooting Kubernetes clusters, including the Elasticsearch, Fluentd, and Kibana (EFK) stack for log aggregation and Jaeger for distributed tracing. These tools let you search logs across pods and follow individual requests through multiple services, which gives you deeper insight into your clusters and applications and helps you diagnose issues quickly.

Combining built-in monitoring with these third-party integrations makes it easier to spot problems in your Kubernetes clusters and applications early and resolve them quickly.

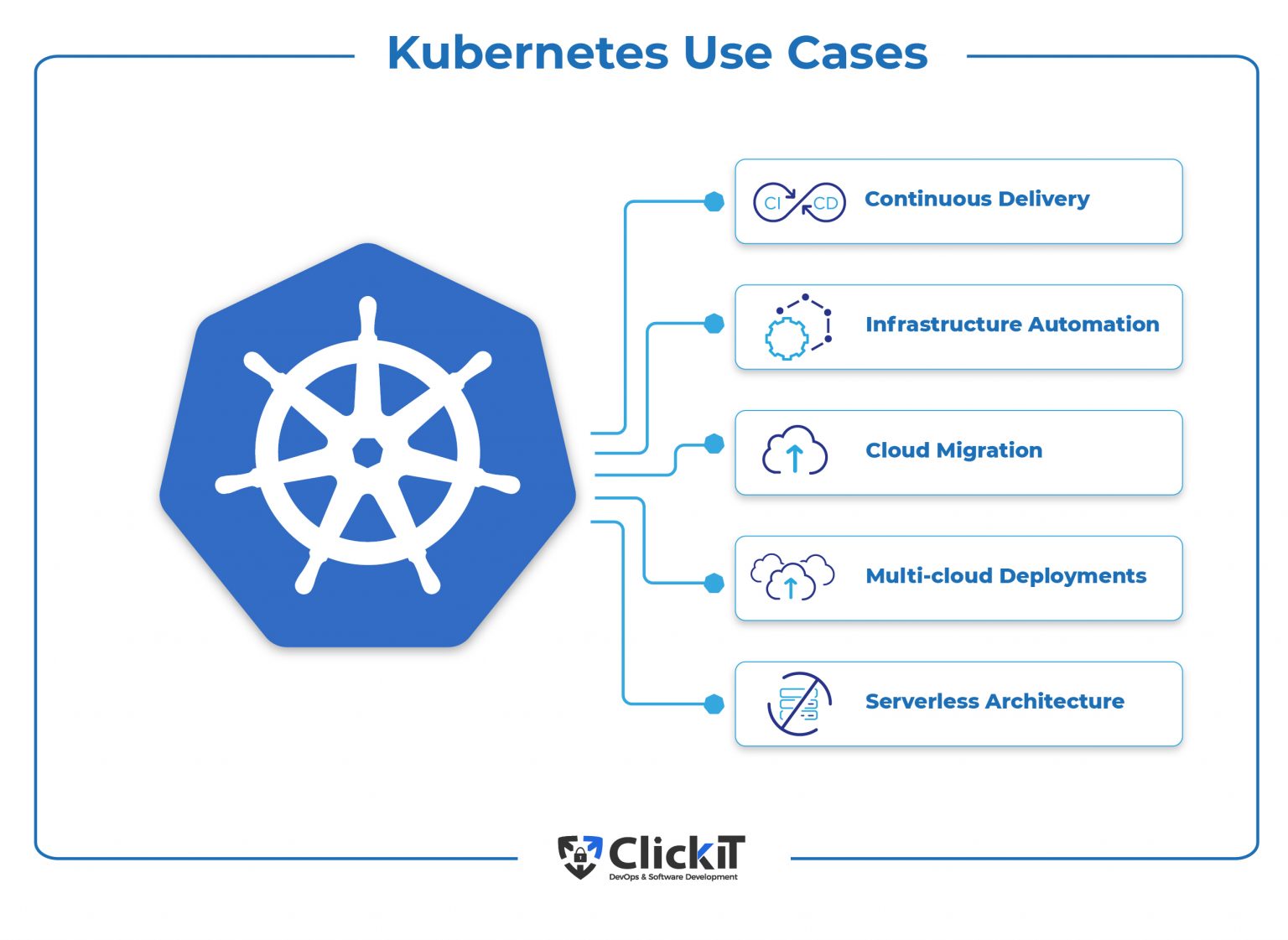

Real-World Use Cases of Kubernetes and Red Hat

Kubernetes and Red Hat are being used in various industries to containerize and orchestrate applications, providing scalability, security, and automation capabilities. Here are some real-world use cases of Kubernetes and Red Hat:

Financial Services

Financial institutions are using Kubernetes and Red Hat to build and deploy applications that require high availability, scalability, and security. By using Kubernetes and Red Hat, financial institutions can ensure that their applications are running smoothly, even during peak times, and that sensitive data is protected.

Healthcare

Healthcare organizations are using Kubernetes and Red Hat to build and deploy applications that require real-time data processing and analysis. By using Kubernetes and Red Hat, healthcare organizations can ensure that their applications are running smoothly, even during high traffic times, and that patient data is protected.

Retail

Retail companies are using Kubernetes and Red Hat to build and deploy applications that require high availability, scalability, and automation. By using Kubernetes and Red Hat, retail companies can ensure that their applications are running smoothly, even during peak shopping seasons, and that their supply chain is optimized.

These are just a few examples of how Kubernetes and Red Hat are being used in various industries. In each case, organizations use the combination to keep their applications running smoothly, securely, and efficiently for their customers and stakeholders.